AMD MI300X posts fastest ever Geekbench 6 OpenCL score — 19% faster than RTX 4090, and only eight times as expensive

Someone had fun testing AMD's latest and greatest AI GPU in a synthetic OpenCL benchmark.

AMD's fire-breathing MI300X GPU has made its official debut on Geekbench 6 OpenCL, outpacing previous chart-toppers such as the RTX 4090. However, despite being one of the fastest GPUs on the Geekbench 6 charts, the AMD GPU's score does not reflect its real performance, and it shows why it's a terrible idea to benchmark data center AI GPUs using consumer-grade OpenCL applications (which is what Geekbench 6 is).

Let's get the benchmark numbers out of the way, though. The MI300X boasts a score of 379,660 points in Geekbench 6.3.0's GPU-focused OpenCL benchmark, making it the fastest GPU on the Geekbench browser to date. (Note that it's not listed on the official OpenCL results page yet.) That gives it the pole position, ahead of the second highest score that goes to, ironically, another enterprise GPU, the Nvidia L40S. The L40S managed 352,507, which in turn beats the RTX 4090's 319,583 result by 10%.

So, the MI300X beats all contenders right now, outpacing the RTX 4090 (the fastest consumer GPU on the list) by 60,077 points or 18.8%. Clearly, other factors are holding back some of these GPUs, as Nvidia's H100 PCIe also shows up on the list with a meager score of only 281,868. Don't use Geekbench 6 OpenCL as a measuring stick for enterprise-grade hardware, in other words. It's like driving a Formula One car in a school zone to check the acceleration and handling of the vehicle.
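The percentages quoted above follow directly from the raw scores. As a quick check, here is a minimal Python sketch that recomputes each GPU's lead over the RTX 4090. The dictionary and the helper name `lead_percent` are illustrative only and have nothing to do with Geekbench itself.

```python
# Geekbench 6 OpenCL scores as quoted in the article.
scores = {
    "AMD Instinct MI300X": 379_660,
    "Nvidia L40S": 352_507,
    "RTX 4090": 319_583,
    "Nvidia H100 PCIe": 281_868,
}


def lead_percent(gpu: str, baseline: str) -> float:
    """Percentage by which gpu's score exceeds (or trails) baseline's score."""
    return (scores[gpu] / scores[baseline] - 1.0) * 100.0


baseline = "RTX 4090"
for gpu in scores:
    # Positive values mean the GPU is ahead of the RTX 4090, negative behind.
    print(f"{gpu:>20}: {lead_percent(gpu, baseline):+6.1f}% vs {baseline}")
```

With these numbers the MI300X comes out about 18.8% ahead of the RTX 4090, the L40S about 10.3% ahead, and the H100 PCIe about 11.8% behind.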

We should also discuss price. RTX 4090 is easy enough to pin down, with a $1,599 MSRP and a current lowest price of $1,739.99 online. AMD MI300X is a different story, as you generally buy those with servers and support contracts. However, a quick search gives a suggested price of anywhere from $10,000 to $20,000 per GPU — we can't say for certain how accurate that data is, as the companies actually buying and selling the hardware generally don't reveal such information, but obviously the MI300X plays in a completely different league than consumer hardware. You also can't just run out and buy an MI300X to slot into your standard desktop PC; you'll need a server with OCP accelerator module support.
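To put the headline's price multiple in context, the sketch below divides the rough MI300X price range by the two RTX 4090 prices given above. The MI300X figures are the unverified estimates quoted in the text, and the function name `price_multiple` is hypothetical.

```python
# RTX 4090 prices quoted in the article (USD).
rtx_4090_prices = {"MSRP": 1_599.00, "lowest online": 1_739.99}

# Rough, unverified MI300X per-GPU price range quoted in the article (USD).
mi300x_estimates = (10_000, 20_000)


def price_multiple(mi300x_price: float, rtx_price: float) -> float:
    """How many times more expensive the MI300X is at the given prices."""
    return mi300x_price / rtx_price


for label, rtx_price in rtx_4090_prices.items():
    low, high = (price_multiple(p, rtx_price) for p in mi300x_estimates)
    print(f"vs RTX 4090 {label} (${rtx_price:,.2f}): {low:.1f}x to {high:.1f}x")
```

Under these assumptions the multiple lands anywhere from roughly 5.7x to 12.5x, so any single figure, including the headline's "eight times," is best read as a ballpark.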

| GPU | Geekbench 6 OpenCL score |
|---|---|
| AMD Instinct MI300X | 379,660 |
| Nvidia L40S | 352,507 |
| RTX 4090 | 319,583 |
| Nvidia H100 PCIe | 281,868 |

A quick look at the rankings suggests that all may not be right with this benchmark. The RTX 4090 outpaces the RTX 4080 Super by 28%, which is not an implausible result, but those two GPUs use the same architecture. The RTX 4080 Super, meanwhile, beats AMD's top consumer GPU, the RX 7900 XTX, by 21%. If this were a ray tracing or AI performance test, that wouldn't be out of the question, but in general FP32 compute performance the 7900 XTX tends to be far closer than these results would suggest. And again, that's not even looking at the often terrible results from data center GPUs like the MI300X and H100.

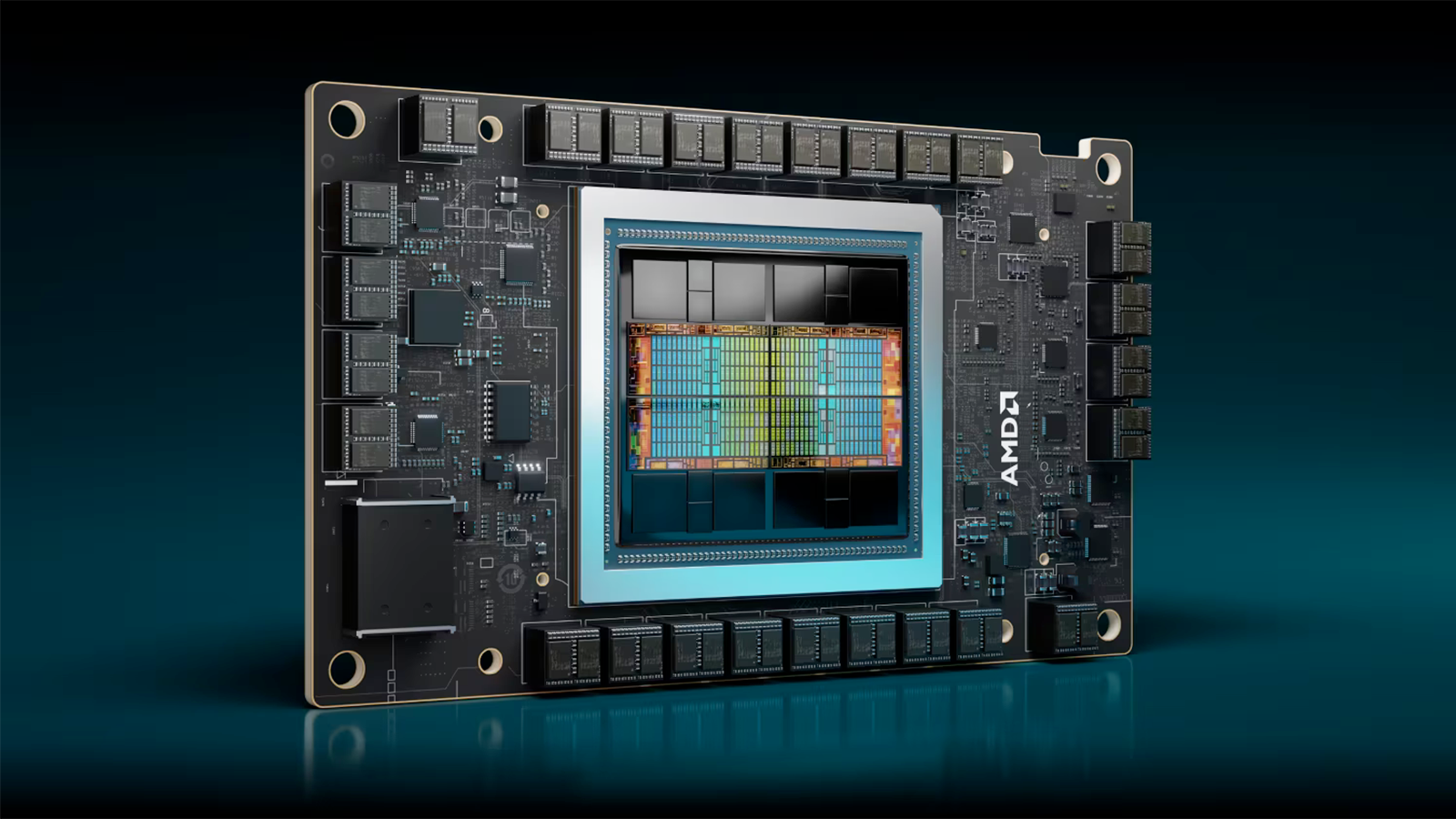

Spec-wise, the AMD MI300X GPU should be in a league of its own. It has 192GB of HBM3 memory with 5.3 TB/s of bandwidth, paired with 304 CDNA3 Compute Units (CUs) and 163.4 TFLOPS of FP32 performance. And that's not even its strong suit. As an AI GPU, it also boasts 2.6 petaflops of FP16 throughput, as well as 2,600 TOPS of inference performance — sort of puts the 40 TOPS requirement of Copilot+ to shame. The MI300X also comes with severe power requirements to match, with a peak 750W power rating.

The MI300X is AMD's latest enterprise GPU, designed to compete with the likes of Nvidia's H100 and H200 AI GPUs. The GPU takes advantage of AMD's CDNA 3 graphics architecture and heavily utilizes 3D-stacking technologies. In fact, the GPU itself is so large that it does not come in a traditional PCIe graphics card form factor. In proper AI-based benchmarks, the MI300X is purportedly up to 60% faster than Nvidia's H100, let alone the RTX 4090.

By contrast, the RTX 4090 is barely half as powerful as the AMD chip in FP32 performance. It features 24GB of GDDR6X and 1 TB/s of memory bandwidth, 128 SMs with 82.6 TFLOPS of FP32 compute, and 1,321 TOPS of AI performance. Power consumption is also substantially lower at 450W.
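One way to see the mismatch is to set the paper specifications quoted above against the Geekbench result. The sketch below is only illustrative: it uses the peak figures from the article rather than measured throughput, and the variable names are arbitrary.

```python
# Peak specifications and Geekbench 6 OpenCL scores as quoted in the article.
mi300x = {
    "FP32 TFLOPS": 163.4,
    "memory bandwidth (TB/s)": 5.3,
    "board power (W)": 750,
    "Geekbench 6 OpenCL": 379_660,
}
rtx_4090 = {
    "FP32 TFLOPS": 82.6,
    "memory bandwidth (TB/s)": 1.0,
    "board power (W)": 450,
    "Geekbench 6 OpenCL": 319_583,
}

# Ratio of MI300X to RTX 4090 for every metric the two dictionaries share.
ratios = {metric: mi300x[metric] / rtx_4090[metric] for metric in mi300x}

for metric, ratio in ratios.items():
    print(f"{metric:>26}: {ratio:.2f}x")
```

On paper the MI300X has roughly twice the FP32 throughput and more than five times the memory bandwidth, yet its Geekbench 6 OpenCL score is only about 1.19x that of the RTX 4090.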

The MI300X's Geekbench 6 debut reveals just how poor such a test is for measuring higher performance GPUs. Sure, sometimes the results aren't terribly out of whack, but OpenCL driver optimizations alone likely account for a large amount of the potential performance. The test can run on a wide range of hardware — Qualcomm's Snapdragon X Elite as an example posts a score of 23,493 — but it's clearly not tuned for all potential workloads. Like most synthetic benchmarks, it only looks at a very narrow slice of the potential performance on tap.

And that's fine, just as long as people looking at the benchmarks know what they mean. We're pretty certain the MI300X result is more just someone with access to AMD's MI300X having some fun seeing what would happen on Geekbench 6, rather than a serious effort to evaluate the GPU. We can't wait to see how 1.2 million GPUs in a supercomputer cluster rate in the same test.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

Krieger-San

You mention in the title that the GPU is 12x more expensive, yet there's no factual information anywhere in the article to state its price in comparison to 4090.

Out of curiosity, what pricing information are you referring to?

-

JarredWaltonGPU

Krieger-San said: You mention in the title that the GPU is 12x more expensive, yet there's no factual information anywhere in the article to state its price in comparison to 4090. Out of curiosity, what pricing information are you referring to?

A quick Google search suggests MI300X costs around $20,000. 🤷‍♂️

-

KnightShadey

Krieger-San said: You mention in the title that the GPU is 12x more expensive, yet there's no factual information anywhere in the article to state its price in comparison to 4090. Out of curiosity, what pricing information are you referring to?

Yeah, it's a mediocre article written a couple of days after others commented on the price difference, and realistically it gets the price difference wrong. 🙄

Even using THG's own numbers from this article:

https://www.tomshardware.com/tech-industry/artificial-intelligence/nvidias-h100-ai-gpus-cost-up-to-four-times-more-than-amds-competing-mi300x-amds-chips-cost-dollar10-to-dollar15k-apiece-nvidias-h100-has-peaked-beyond-dollar40000

MI300X usual base price is $15K, but M$ is getting them for $10K.

Now for the RTX 4090 on Amazon: a quick look finds a PNY card as the cheapest I can see, at $1,739.

https://www.amazon.com/geforce-rtx-4090/s?k=geforce+rtx+4090

Which makes the price difference $15K/$1.7K = 8.8x, not the 10x of the original articles nor the 12x of this one. 🧐

-

KnightShadey

JarredWaltonGPU said: A quick Google search suggests MI300X costs around $20,000. 🤷‍♂️

Really? Unlikely.

Seems like THG should've stuck with their own article source rather than the Reddit post on Google of $20K, especially given the 2+ day lag from the original breaking of this result in other articles that do quote a price and don't ask the reader to Google it:

https://www.techradar.com/pro/amd-pulverizes-nvidias-rtx-4090-in-popular-geekbench-opencl-benchmark-but-you-will-need-a-small-mortgage-to-buy-amds-fastest-gpu-ever-produced

BTW, Google also quotes the $10K number in THG's article for the M$ price, which would make the ratio even smaller at 5.75x compared to the current cheapest RTX 4090 on Amazon.

Considering that there is a legacy GPU buyer's guide with pricing (a descendant of the early one Cleeve, Pauldh, and I started in the forum), seems like at least the latest 4090 pricing should've been readily on hand. 🤔

-

atmapuri

Aren't all RTX cards from Nvidia LHR? Low Hash Rate, meaning that OpenCL performance is reduced by 10x? We always use AMD because of that. I don't understand what Geekbench is measuring on the 4090.

-

JarredWaltonGPU

KnightShadey said: Really? Unlikely. Seems like THG should've stuck with their own article source rather than the reddit post on google of $20K [...]

You're rather missing the point here, which isn't the price of the MI300X compared to RTX 4090 or anything else, but instead is the fact that running Geekbench 6 OpenCL benchmarks on a single MI300X is silly. I've updated the "twelve times" to "eight times" though, which obviously completely changes... nothing at all as far as the rest of the text is concerned.

-

JarredWaltonGPU

atmapuri said: Aren't all RTX cards from Nvidia LHR? Low Hash Rate, meaning that OpenCL performance is reduced by 10x? We always use AMD because of that. I don't understand what Geekbench is measuring on the 4090.

Nvidia LHR specifically detected Ethereum hashing and attempted to limit performance. It did not impact (AFAIK) any other hashing algorithms and was not at all universal to OpenCL. Later software implementations were able to work around the hashrate limiter, but then Ethereum ended proof of work mining and moved to proof of stake, so none of it matters. Ada Lovelace GPUs (RTX 40-series) do not have any LHR stuff in the drivers or firmware, because by the time they came out, GPU mining was horribly inefficient and thus no one was buying a 4090 or similar to do crypto. Or at least, no one smart.

-

jeremyj_83

JarredWaltonGPU said: Geekbench 6 OpenCL benchmarks on a single MI300X is silly.

This is akin to using Geekbench to measure the performance of server CPUs. I remember seeing Geekbench leaks for the 128c/256t Epyc 9754. The numbers are completely useless, as Geekbench isn't for server products.

-

DS426

It would have been enough to say that the MI300X is "several times as expensive" as the 4090, as there's simply no arguing with that when no specific multiple is asserted. Providing a specific multiple implies to me that the author wants to communicate that the MI300X offers lower value, which clearly isn't the case, since consumer vs. enterprise hardware is being compared and, as the article admittedly points out, the test doesn't account for AI performance or basically any other particular performance metric.

That said, going on the pricing history of the MI250 and what I can tell is realistic pricing for buyers that aren't hyperscalers like M$ and such, $15K is probably pretty common, even if there are several cases of contracts coming in closer to $12K or so.

-

"However, despite being one of the fastest GPUs on the Geekbench 6 charts, the AMD GPU's score does not reflect its real performance and shows why it's a terrible idea to benchmark datacenter AI GPUs using consumer grade OpenCL applications (which is what Geekbench 6 is)."

"Don't use Geekbench 6 OpenCL as a measuring stick for enterprise-grade hardware, in other words."

First of all, no one said GB OpenCL is the perfect benchmark for testing server grade hardware.

And, obviously, the score won't reflect the card's real-world performance, and treating it that way would be a terrible idea, but that was NOT the point of this benchmark run, which was done by an independent testing group. We all know this.

They just wanted to test the card's performance in Geekbench's OpenCL benchmark. Nobody in their right mind would use this benchmark as a measuring stick to judge the AMD MI300X accelerator's performance, and that was not the motive for this test either.

No, this was just a test done to check the OpenCL score of this accelerator. That's all.

Also, comparing the MI300X with the RTX 4090 is obviously silly and unfair, and makes no sense since they both are totally different products, differ vastly in hardware/compute specs, and target entirely different markets as well.

FWIW, the benchmark already has a few Nvidia Quadro and other server cards in the ranked listing. This isn't the first time a server-level GPU has been spotted.

"19% faster than RTX 4090, and only twelve times as expensive"

Funny how you use the word "only" as if the MI300X is some cheap and affordable consumer product. We are talking about the 10-15K USD ballpark here. Poor choice of words for the article heading, btw.

And as I mentioned before, comparing the MI300X with the RTX 4090 is just downright silly.