AMD Radeon RX 9070 XT and RX 9070 Power, Clocks, Temps, and Noise

All our gaming tests are conducted using an Nvidia PCAT v2 device, which allows us to capture total graphics card power, GPU clocks, GPU temperatures, and some other data as we run each gaming benchmark. We have separate 1080p, 1440p, and 4K results for each area, which we'll order from highest to lowest resolution for these tests.
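PCAT-style logging produces a time series of samples for every benchmark run, and the charts below report per-run averages. Here is a minimal sketch of how such a log could be reduced to those averages. It assumes a hypothetical CSV export with `power_w`, `clock_mhz`, and `temp_c` columns, and the real PCAT log format may differ. The sample values are made up for illustration.

```python
import csv
import io
from statistics import mean


def summarize_run(csv_text: str) -> dict[str, float]:
    """Average each logged metric over one benchmark run.

    Assumes a hypothetical CSV layout with power_w, clock_mhz, and temp_c
    columns; this is an illustration, not the actual PCAT export format.
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return {
        column: mean(float(row[column]) for row in rows)
        for column in ("power_w", "clock_mhz", "temp_c")
    }


# Made-up samples, not measured data.
sample_log = """power_w,clock_mhz,temp_c
290.0,2950,60
300.0,2970,62
310.0,2990,64
"""
print(summarize_run(sample_log))
```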

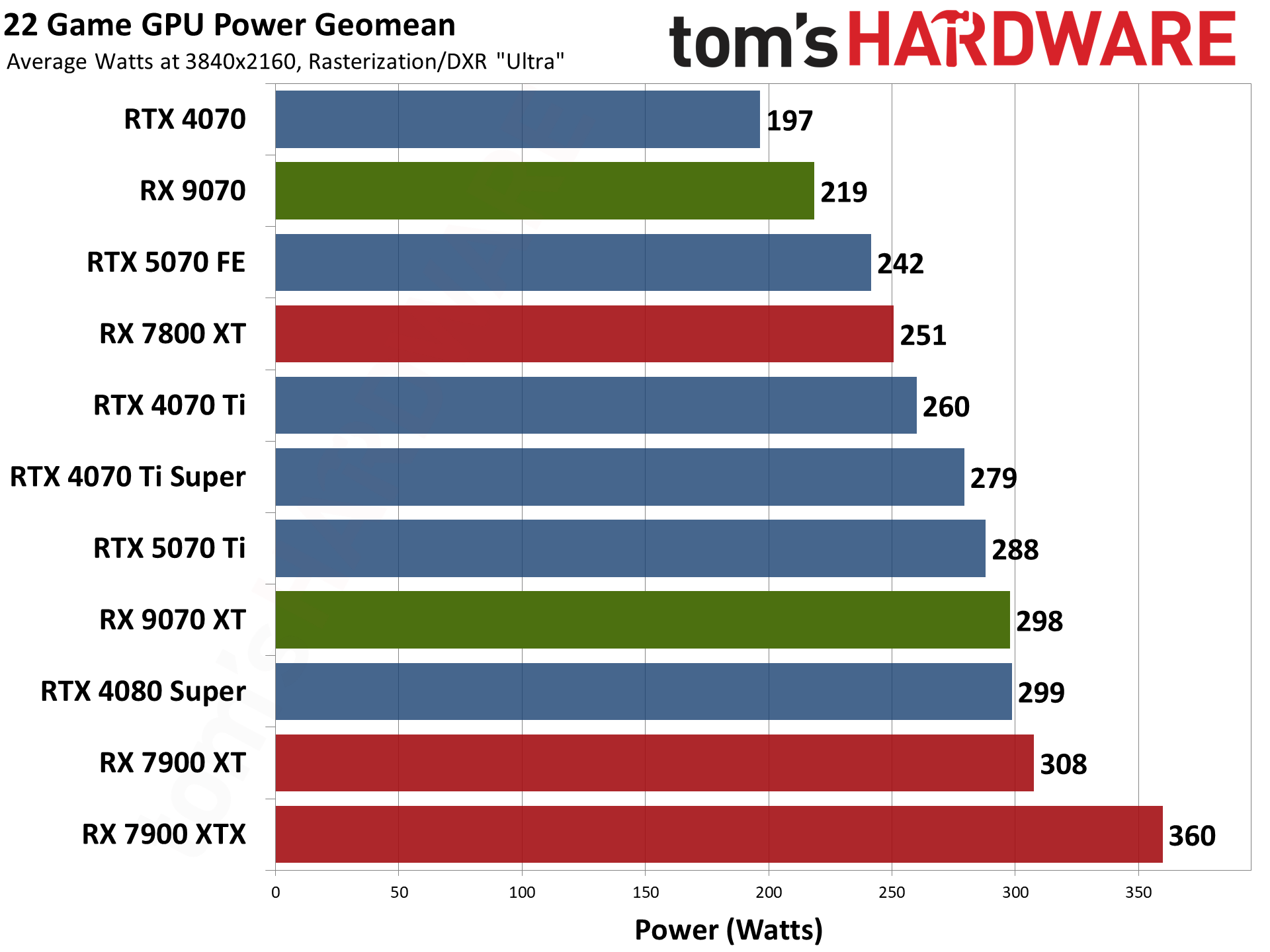

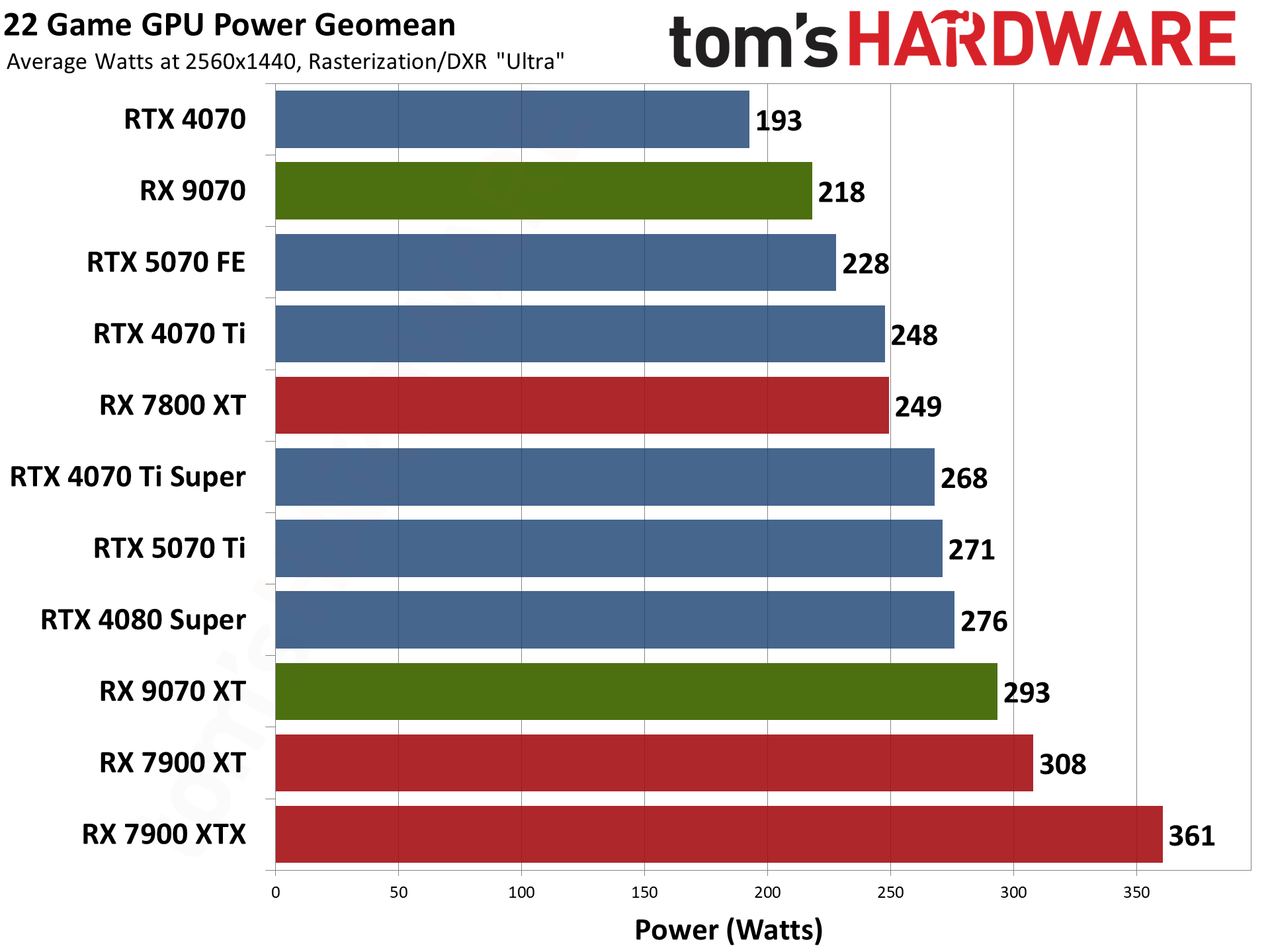

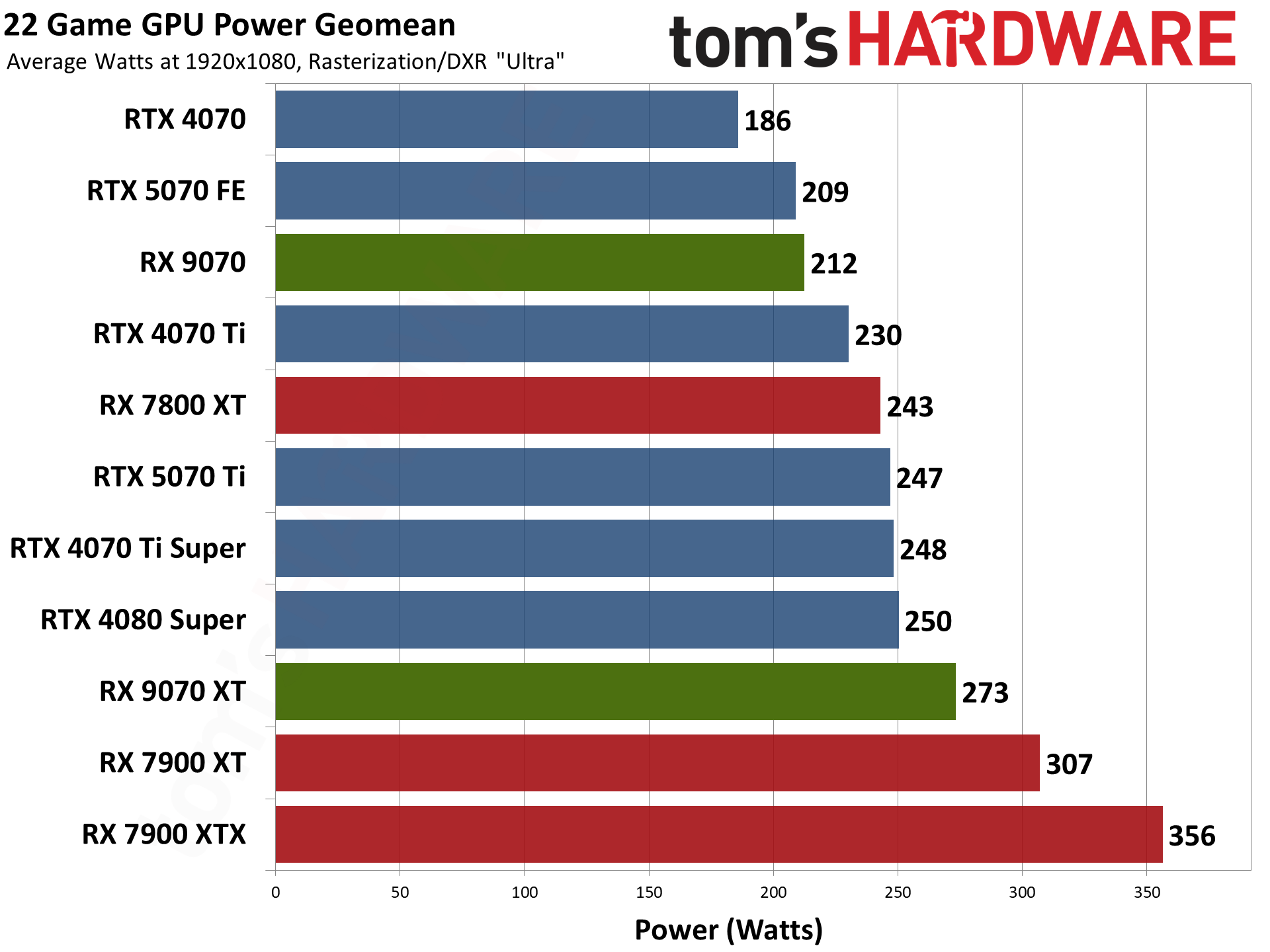

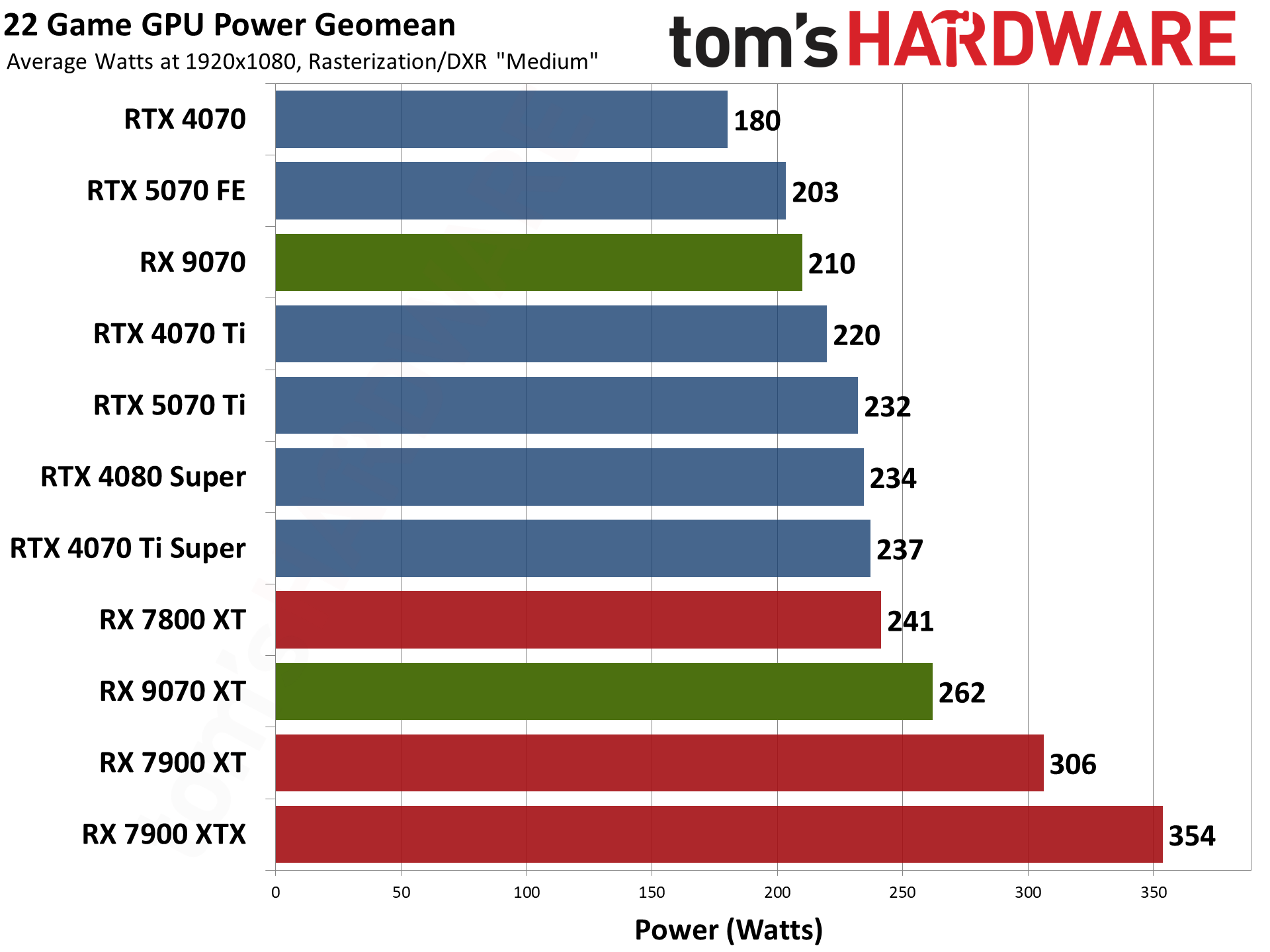

AMD's power requirements were a lot higher than Nvidia's with the prior generation, but with RDNA 4 and Blackwell the two companies are more or less on the same process node: N4P for AMD and 4N for Nvidia. The 9070 XT has a 304W TBP (total board power) and comes in slightly below that mark at 4K, while the 9070 has a 220W TBP and is basically right on target.
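"Slightly below" and "right on target" amount to comparing measured average power against the rated TBP. A minimal sketch of that comparison follows. The TBP figures come from the paragraph above. The `percent_of_tbp` helper is hypothetical, and the measured value in the usage line is a placeholder.

```python
# Rated total board power (TBP) figures quoted above, in watts.
RATED_TBP_W = {"RX 9070 XT": 304.0, "RX 9070": 220.0}


def percent_of_tbp(card: str, measured_avg_w: float) -> float:
    """Return a measured average board power as a percentage of rated TBP."""
    return 100.0 * measured_avg_w / RATED_TBP_W[card]


# Placeholder measurement for illustration only.
print(f"{percent_of_tbp('RX 9070', 220.0):.1f}% of TBP")
```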

Dropping down to lower resolutions and settings reduces power draw on all the cards, and the net result is that the 9070 generally uses less power than the 5070: they're basically tied at 1080p, while AMD comes out ahead at 1440p and 4K by drawing less power.

The 9070 XT, meanwhile, uses more power across the test suite than the 5070 Ti. That's an interesting reversal: the 5070 uses more power than the 9070, yet the 5070 Ti delivers more performance than the 9070 XT while drawing less power.
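Efficiency comparisons like these come down to normalizing performance by power. Below is a minimal FPS-per-watt sketch. The `Result` type and the card figures are hypothetical placeholders, not our measured results.

```python
from dataclasses import dataclass


@dataclass
class Result:
    card: str
    avg_fps: float
    avg_power_w: float

    @property
    def fps_per_watt(self) -> float:
        """Frames per second delivered per watt of board power."""
        return self.avg_fps / self.avg_power_w


def more_efficient(a: Result, b: Result) -> str:
    """Return the name of the card that delivers more frames per watt."""
    return a.card if a.fps_per_watt >= b.fps_per_watt else b.card


# Placeholder numbers for illustration only, not measured data.
card_a = Result("Card A", avg_fps=100.0, avg_power_w=250.0)
card_b = Result("Card B", avg_fps=110.0, avg_power_w=300.0)
print(more_efficient(card_a, card_b))
```

In this made-up case the faster card loses on efficiency because its power draw rises more than its frame rate. A higher FPS result alone says nothing about which card is more efficient.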

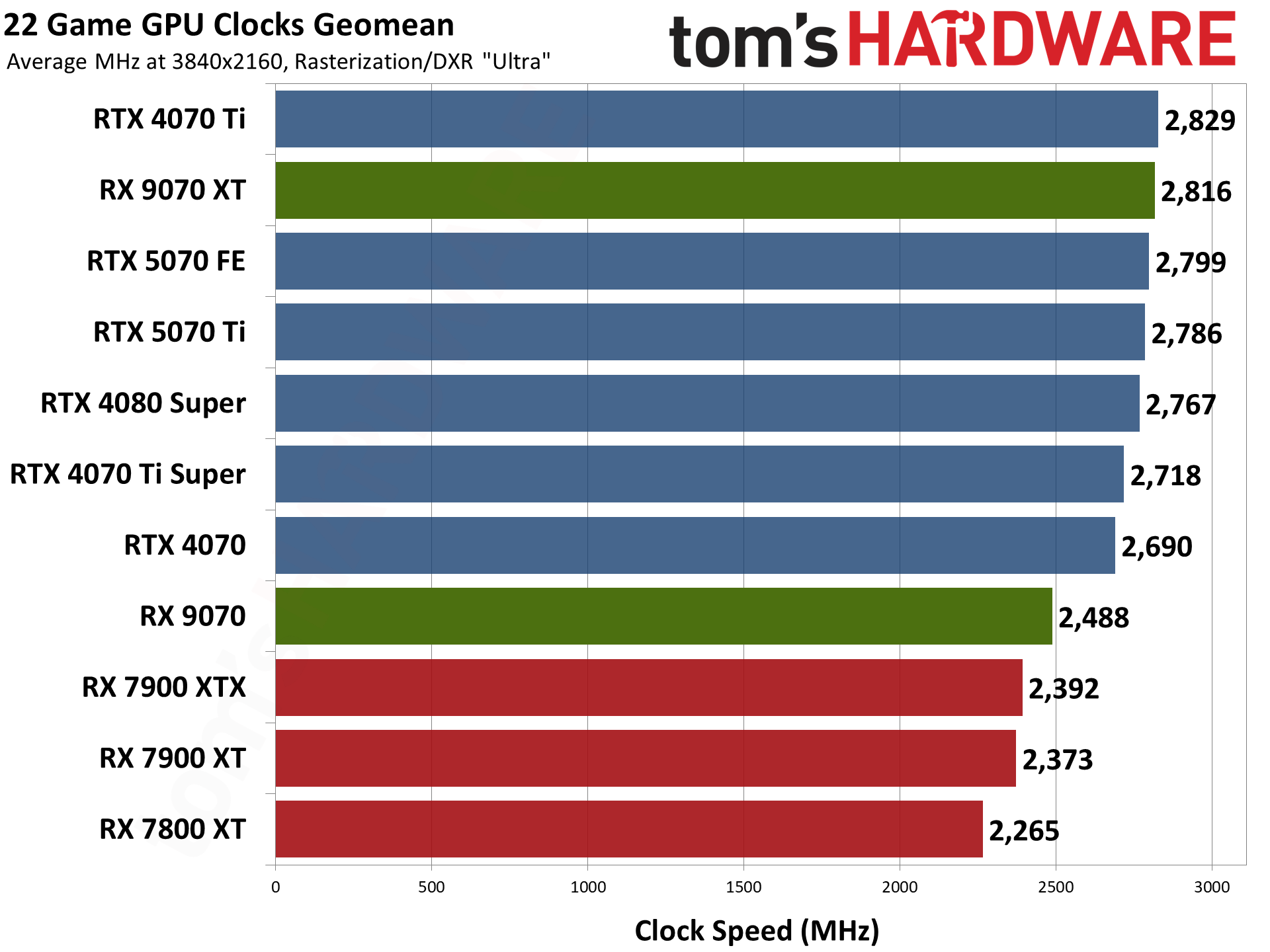

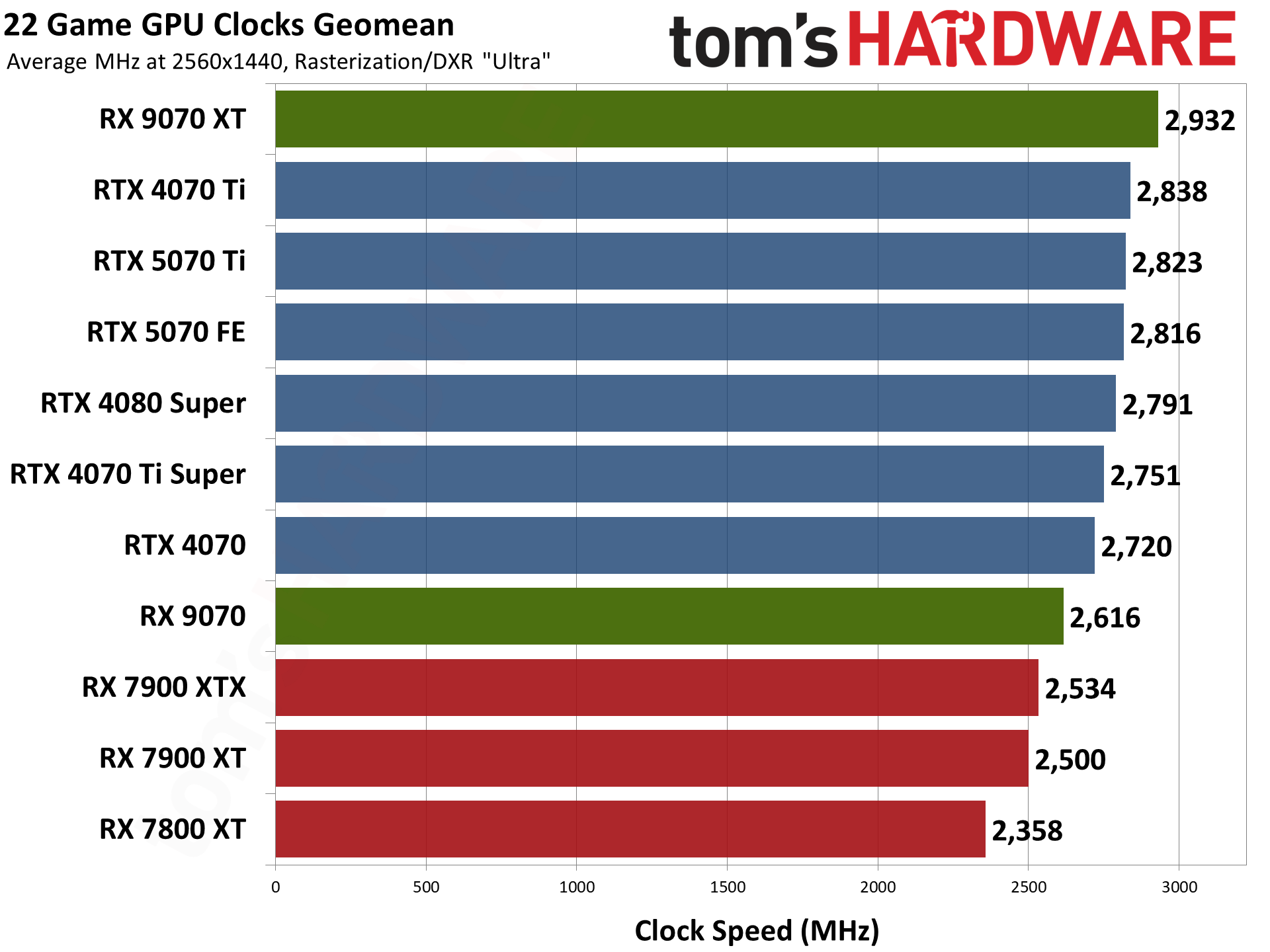

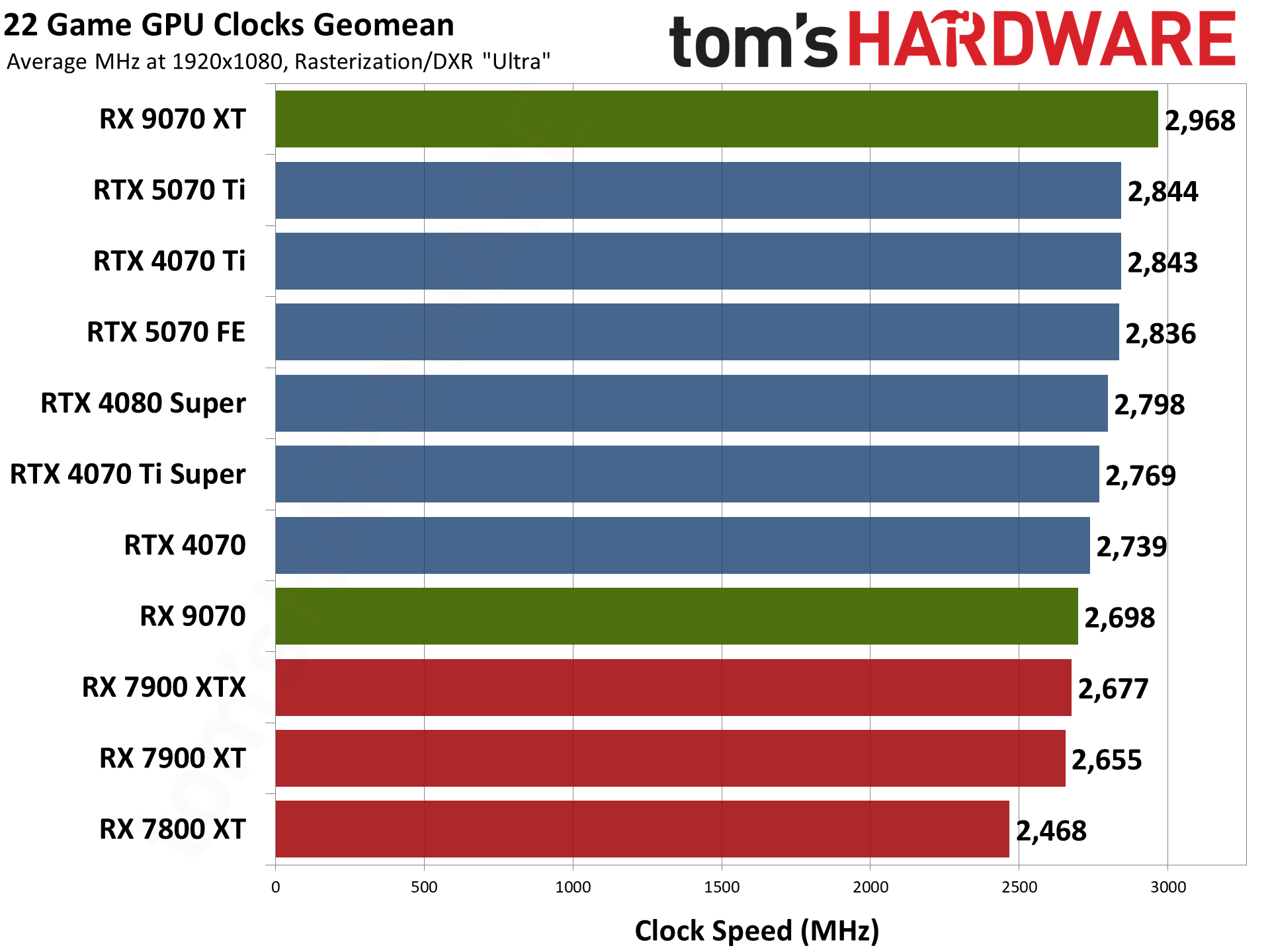

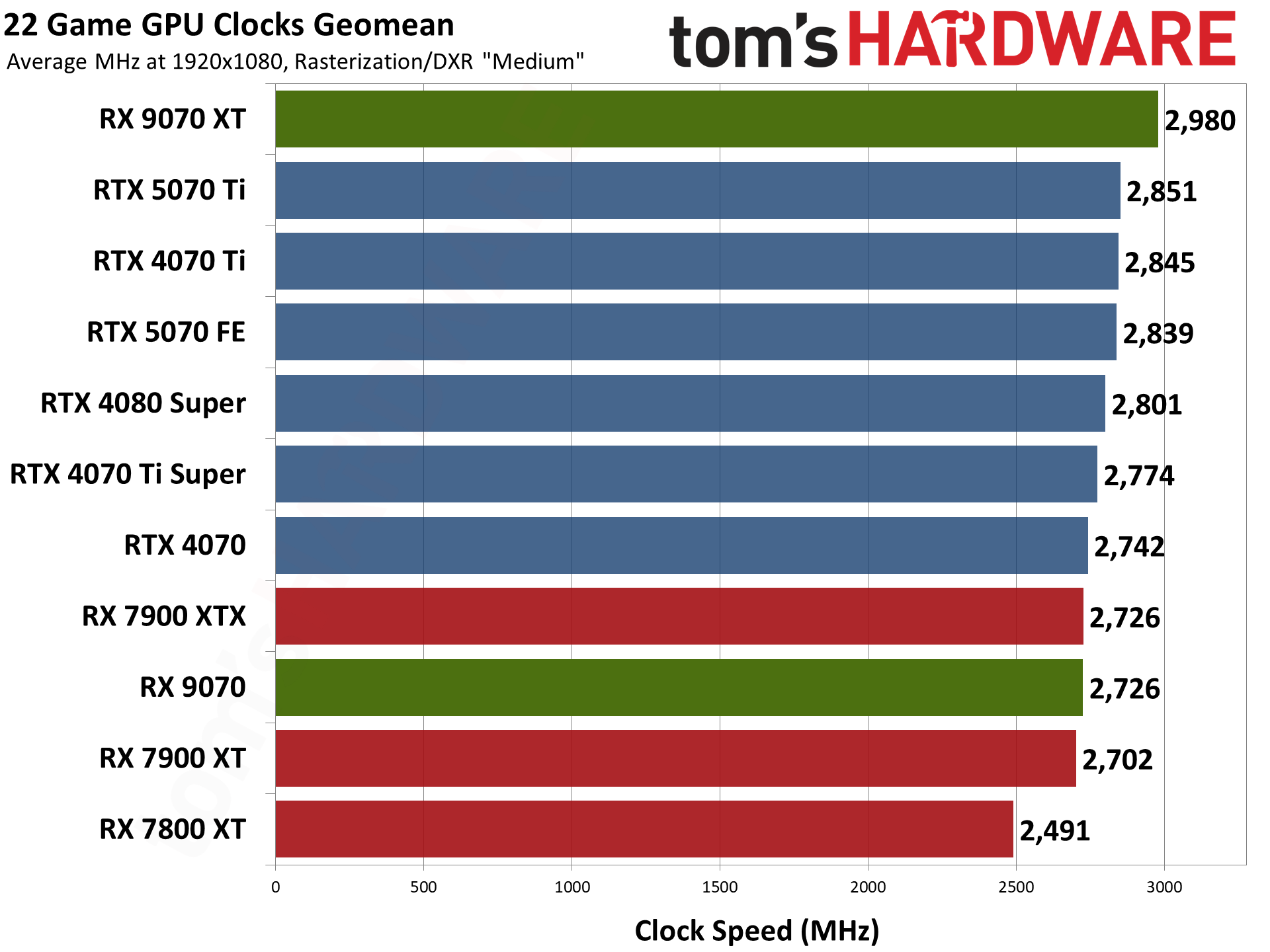

Clock speeds among the different GPUs and architectures aren't super important, but it's interesting to see where things land. AMD has increased clock speeds on average compared to RDNA 3, with the 9070 XT at times breaking the 3.0 GHz barrier even at stock settings. It does fall off the pace a bit at 4K, where it's basically tied with the 5070 Ti, but it stays above 2.9 GHz at both lower resolutions.

The RX 9070 exceeds its rated boost clock at 1440p and 1080p but falls below 2.5 GHz at 4K. Its power limit appears to be a significant constraint on 4K performance, so manual overclocking could prove quite beneficial.

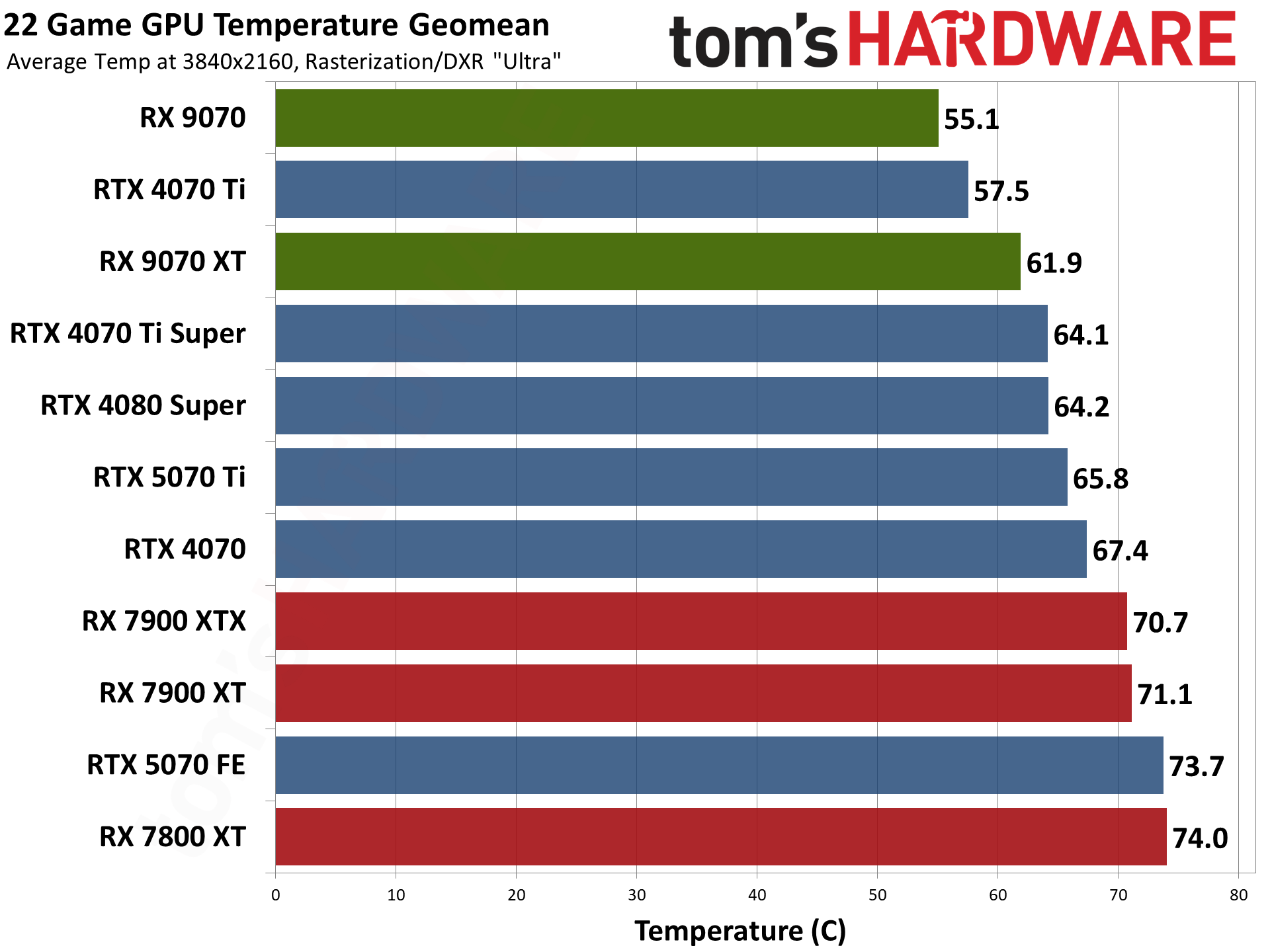

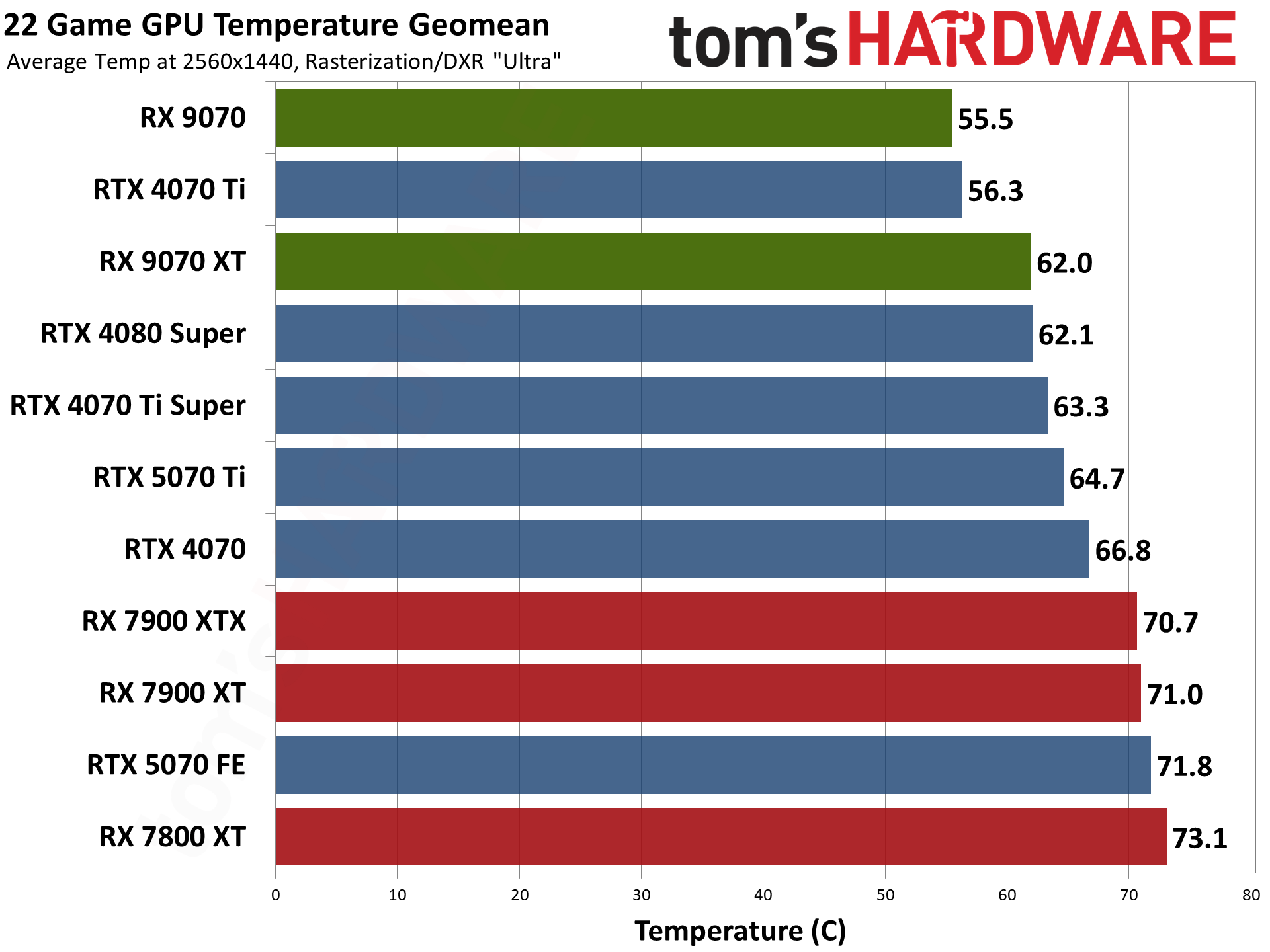

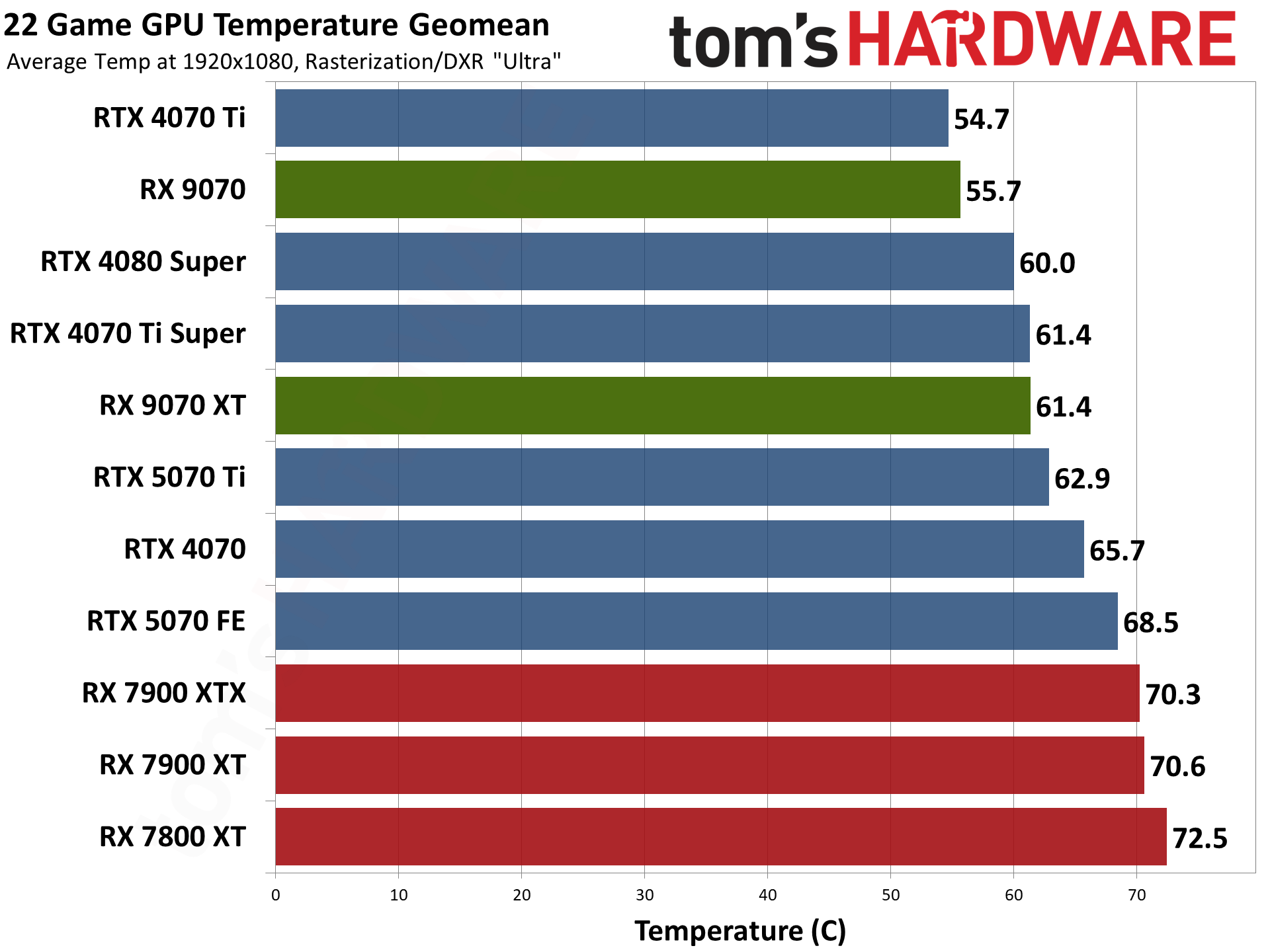

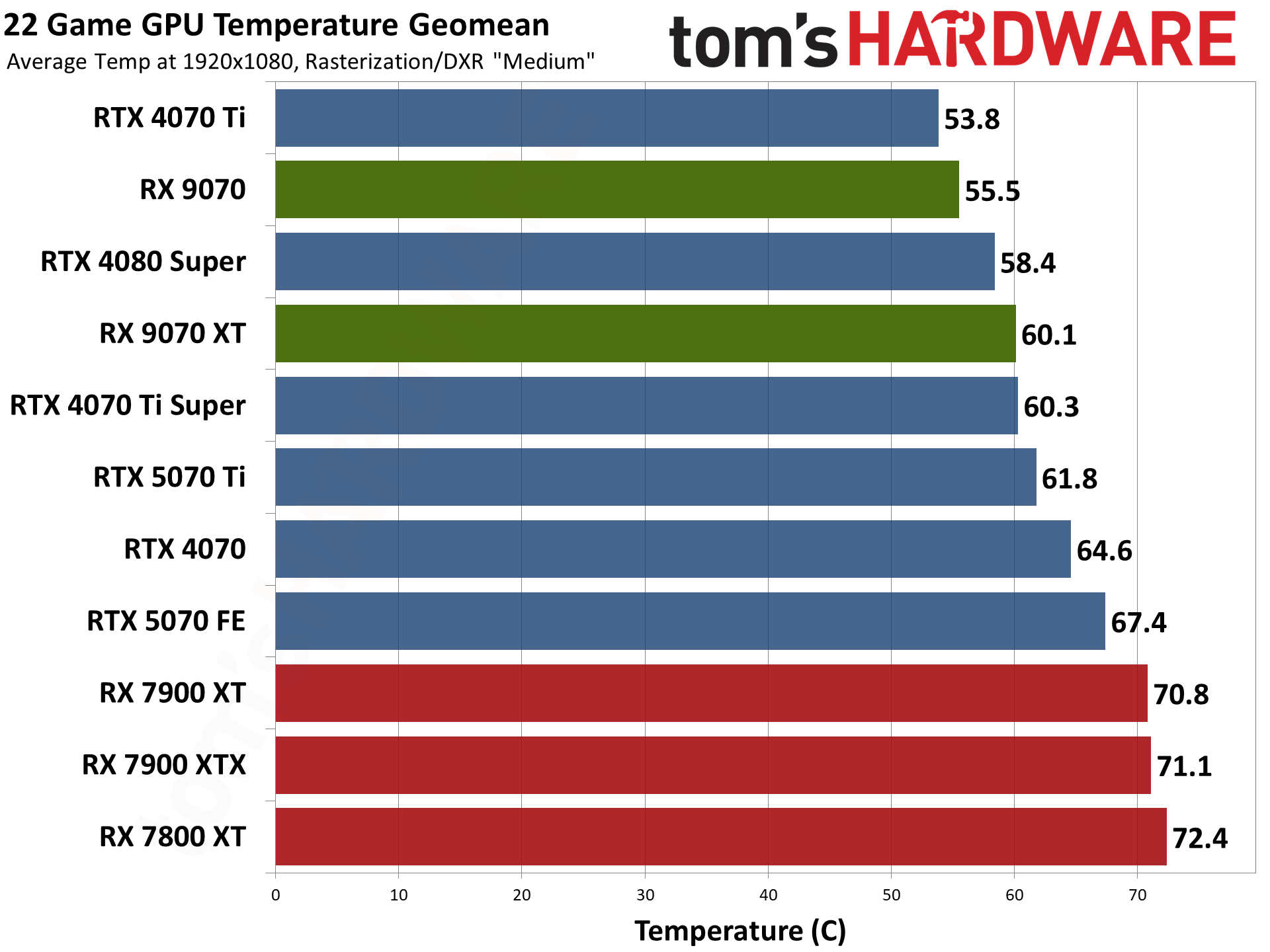

Like the clock speeds, comparing GPU temperatures without considering other aspects of the cards doesn't make much sense. One card might run its fans at higher RPMs, generating more noise while being "cooler." So these graphs should be used alongside the noise and performance results.

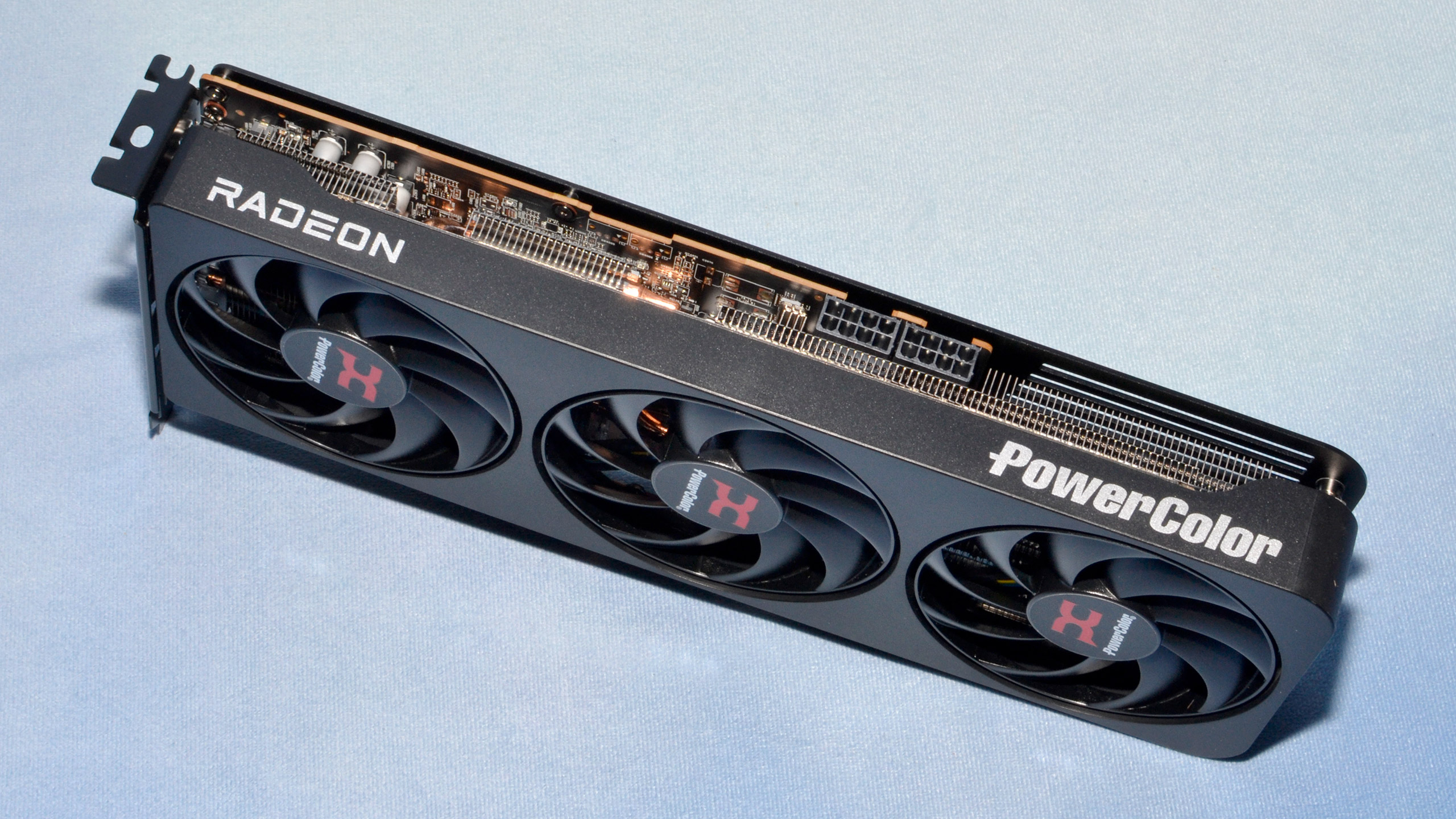

AMD doesn't make reference 9070 cards, so these results partly reflect the GPUs themselves, but they're really more an indication of how the PowerColor Reaper cards run. And those cards run much cooler than the 5070 Founders Edition, which is one of the hotter-running cards in the group.

But we also need to look at noise levels...

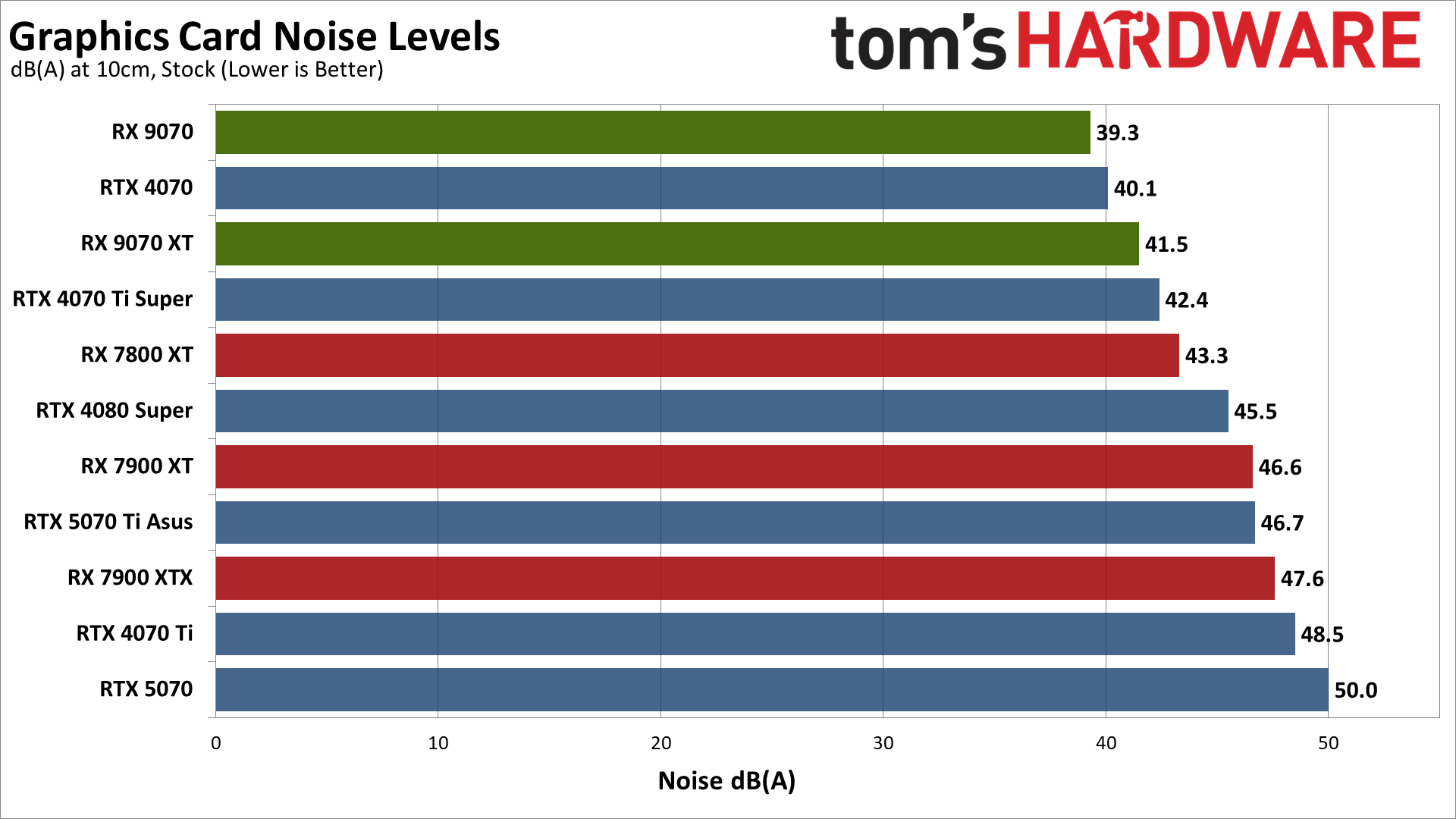

We check noise levels using an SPL (sound pressure level) meter placed 10cm from the card, with the mic aimed right at the center of one fan: the center fan if there are three fans, or the right fan for two fans. This helps minimize the impact of other noise sources, like the fans on the CPU cooler. The new noise floor of our test environment and equipment is around 34 dB(A), due to the noise from the CPU cooling pump.
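Decibel levels add logarithmically, so a reading close to the 34 dB(A) floor overstates how much noise the card itself makes. One common acoustics correction removes the background energetically: each level is converted to relative intensity, the background is subtracted, and the result is converted back. We have not said this correction is applied to our charts. The sketch below only illustrates the math, and `subtract_noise_floor` is a hypothetical helper.

```python
import math


def subtract_noise_floor(measured_dba: float, floor_dba: float = 34.0) -> float:
    """Estimate the source-only level by removing background noise energetically.

    Levels are converted to relative intensities (10^(L/10)), subtracted,
    and converted back to decibels. Readings at or below the floor cannot
    be separated from it.
    """
    if measured_dba <= floor_dba:
        raise ValueError("measurement is not above the noise floor")
    source_intensity = 10 ** (measured_dba / 10) - 10 ** (floor_dba / 10)
    return 10 * math.log10(source_intensity)


print(round(subtract_noise_floor(37.0), 1))
```

A reading of 37 dB(A) against a 34 dB(A) floor works out to a source level of only about 34 dB(A). Small differences between the quietest cards, which sit near the floor, should therefore be read with some caution.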

Even more impressive than the thermals on the PowerColor cards are their noise levels. Only the RTX 4070 ended up being quieter than the 9070 XT, but it also ran quite a bit warmer. Not that any of these cards are really running hot, but it does show that traditional cooler designs with triple fans are still very capable.

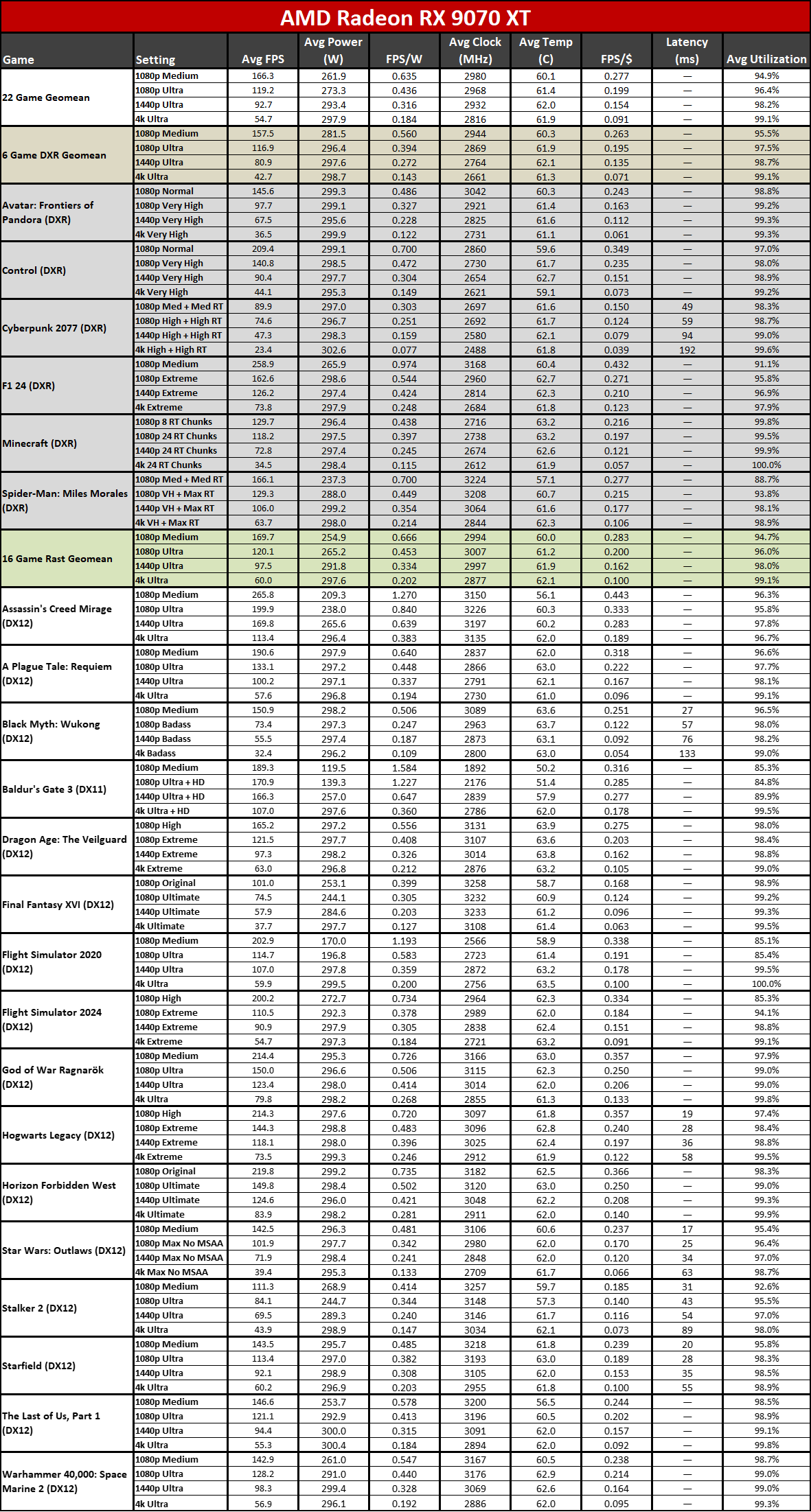

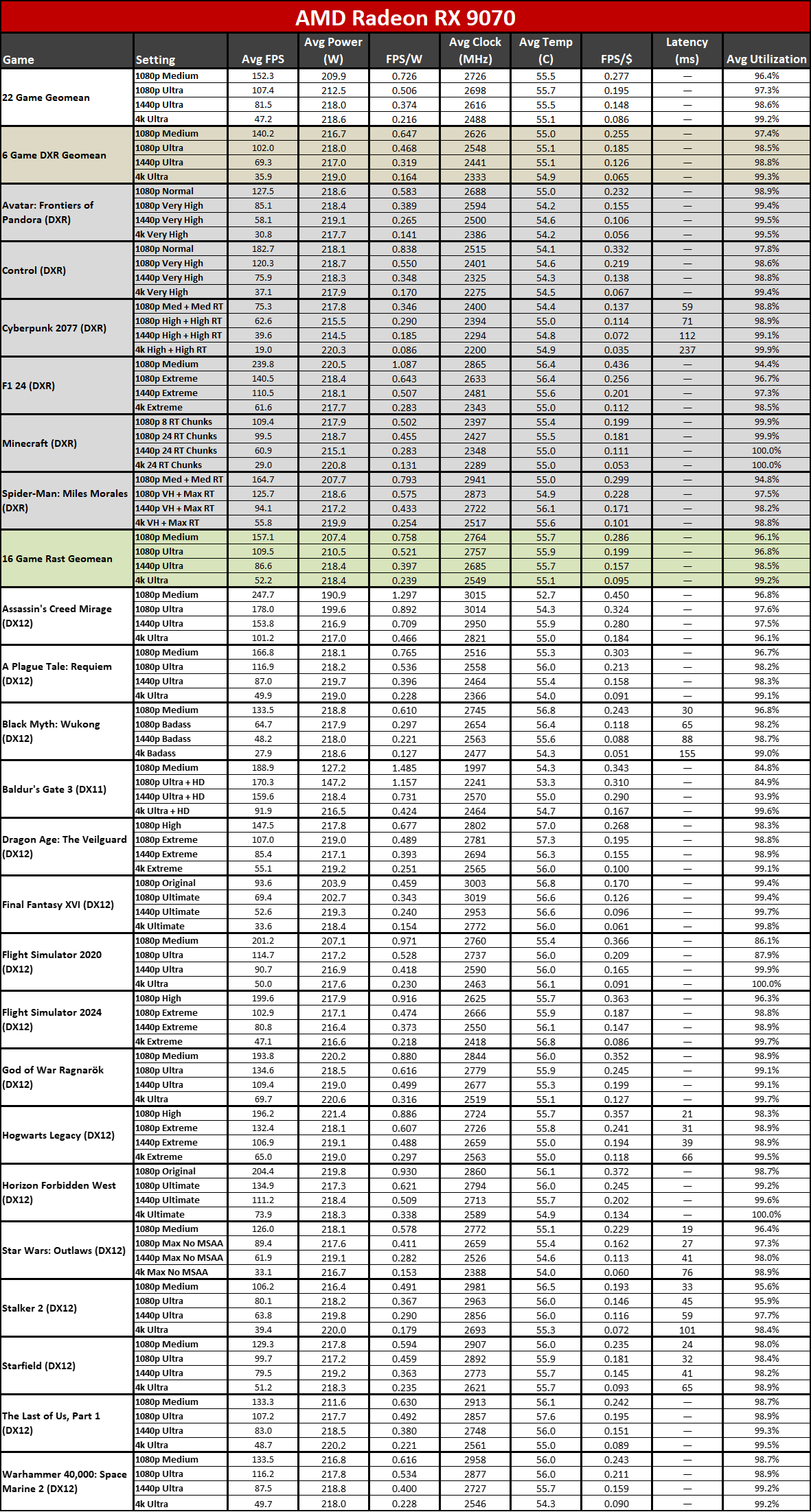

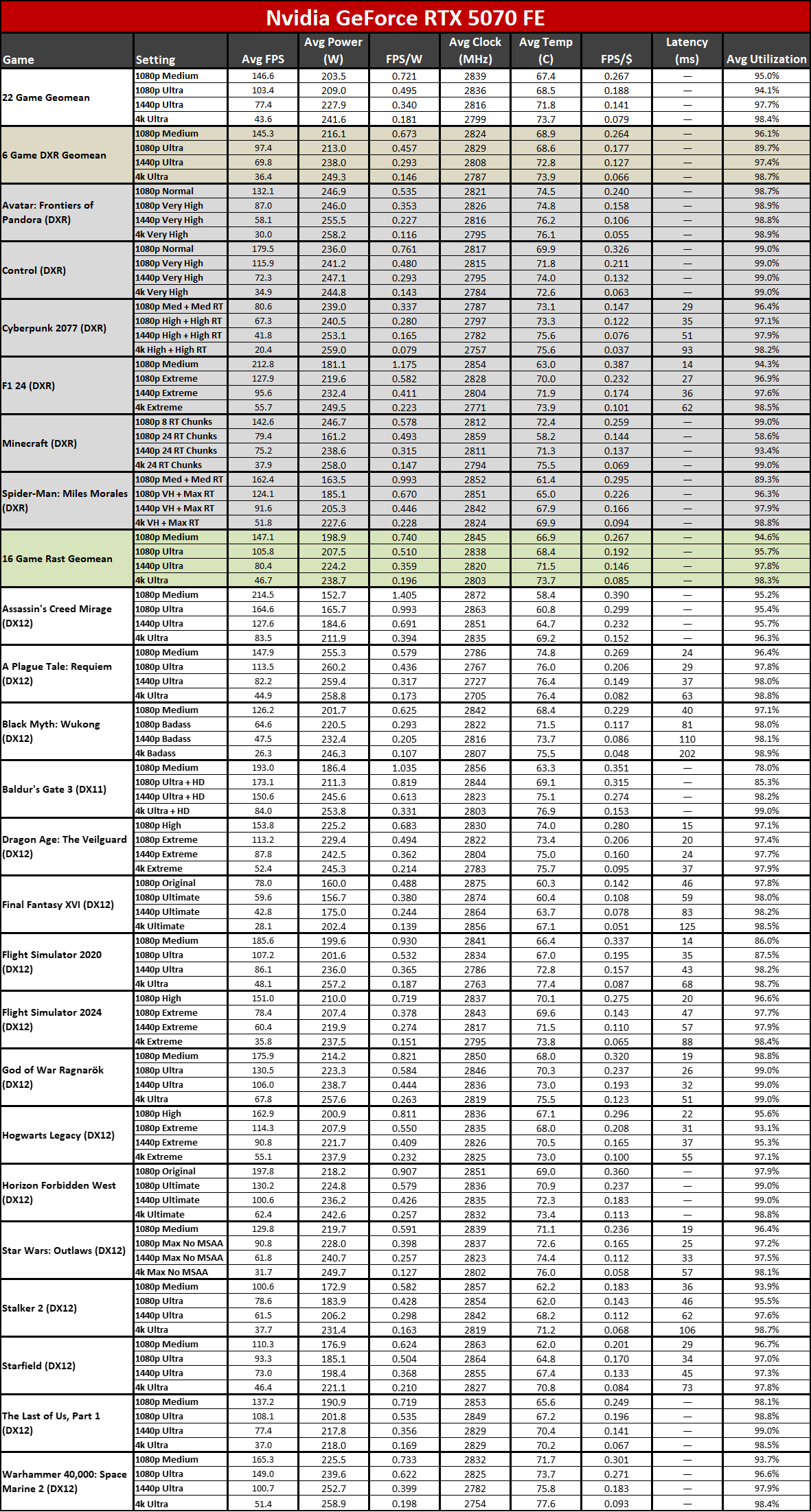

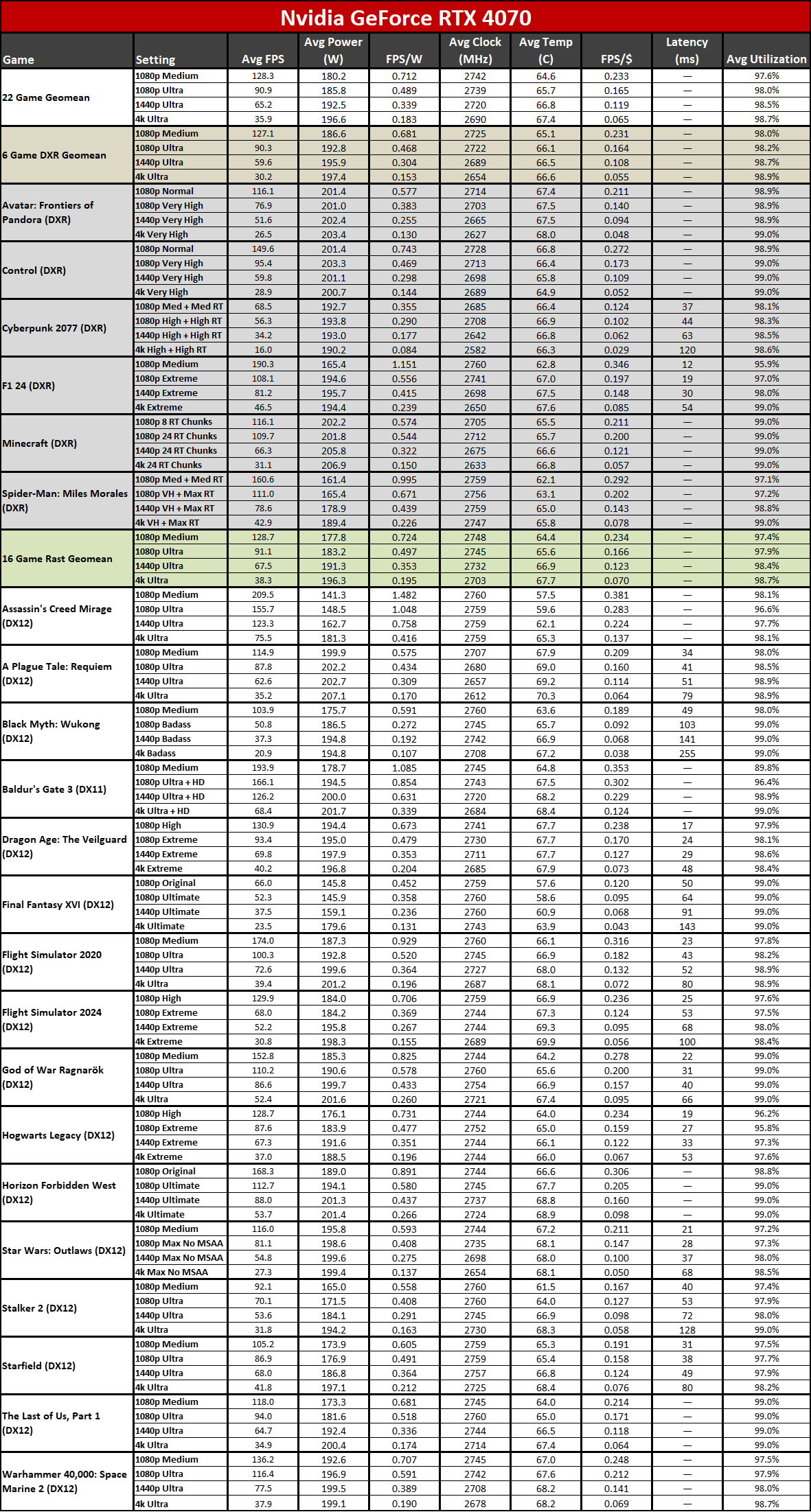

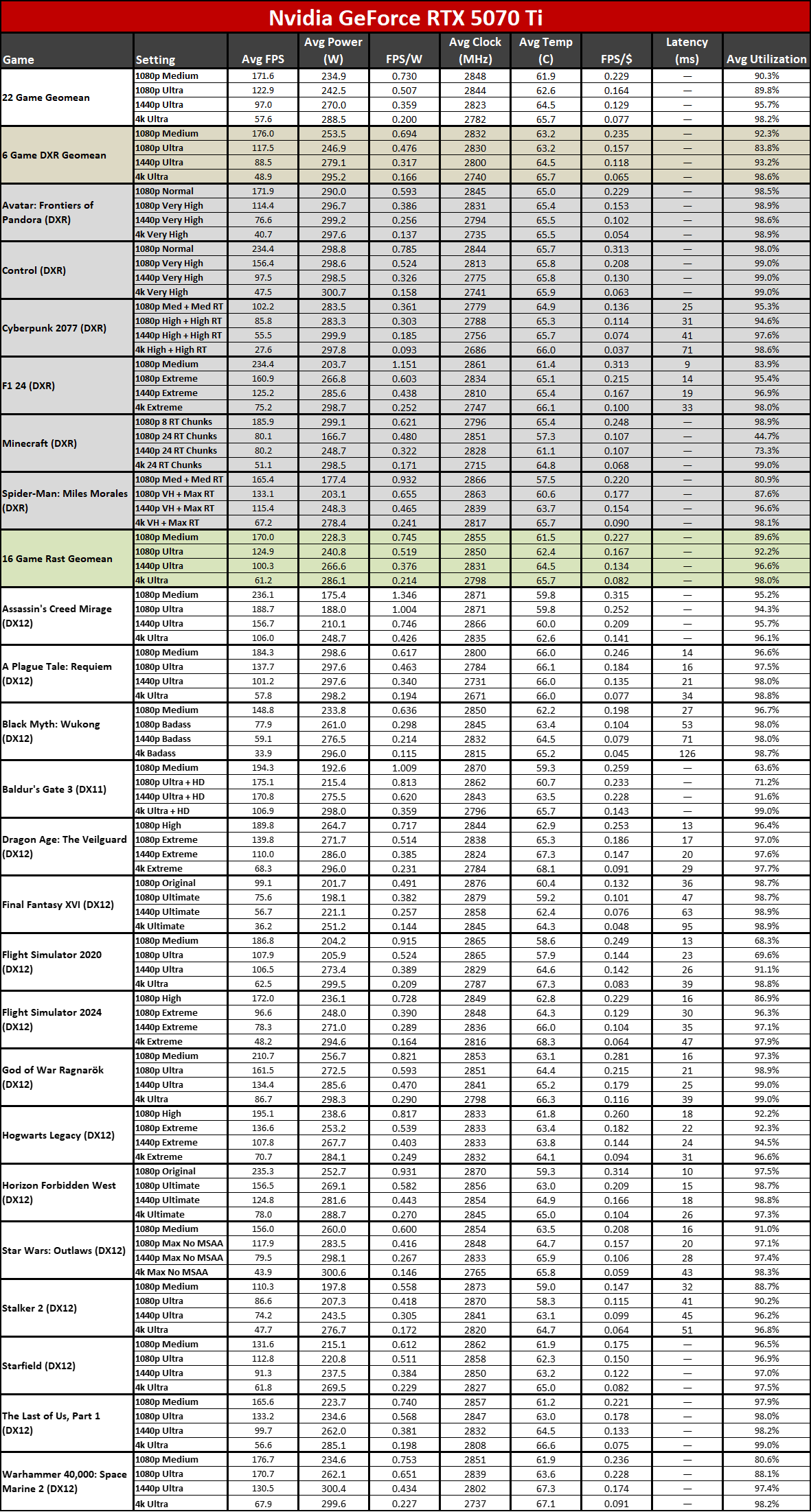

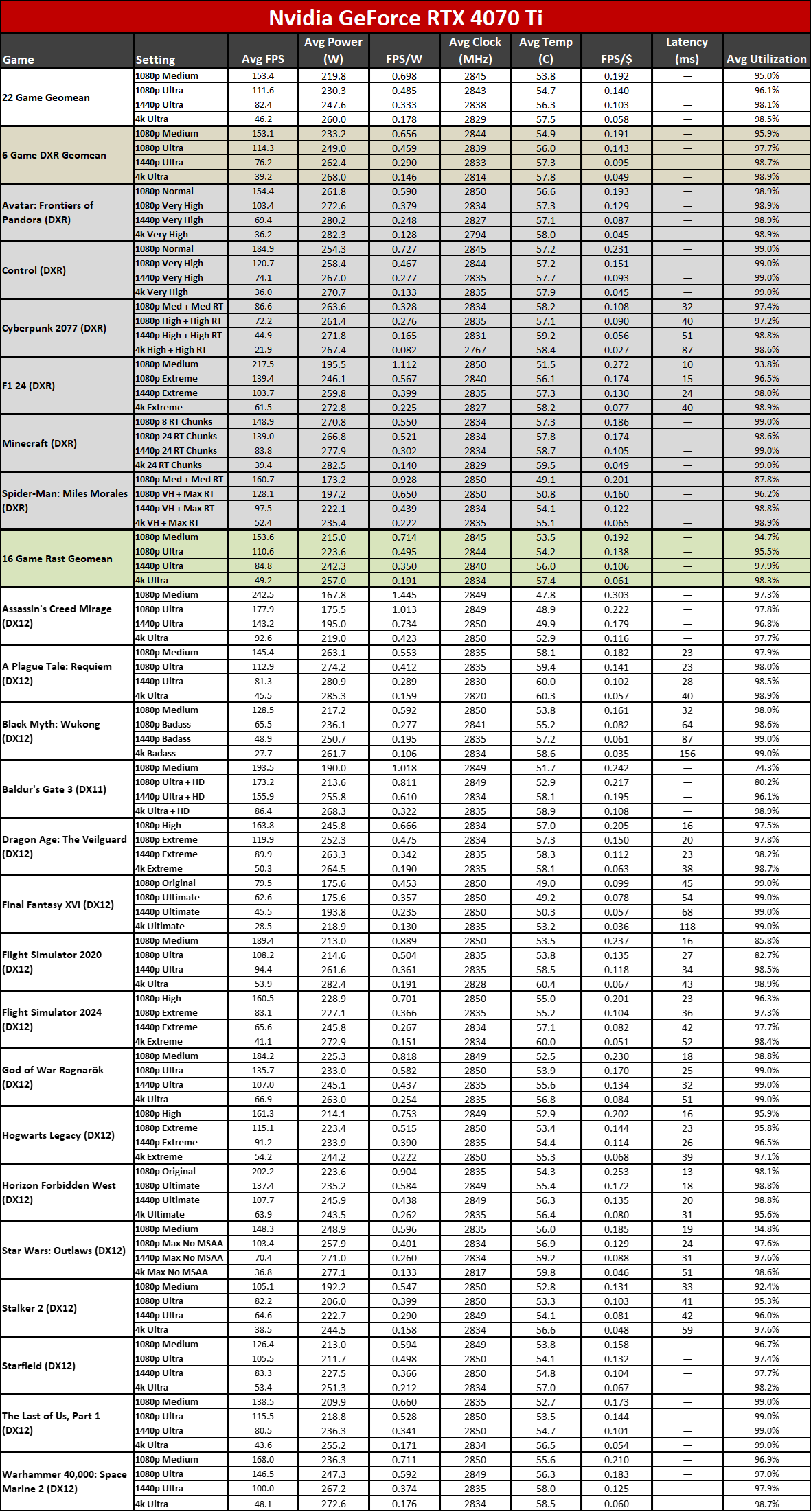

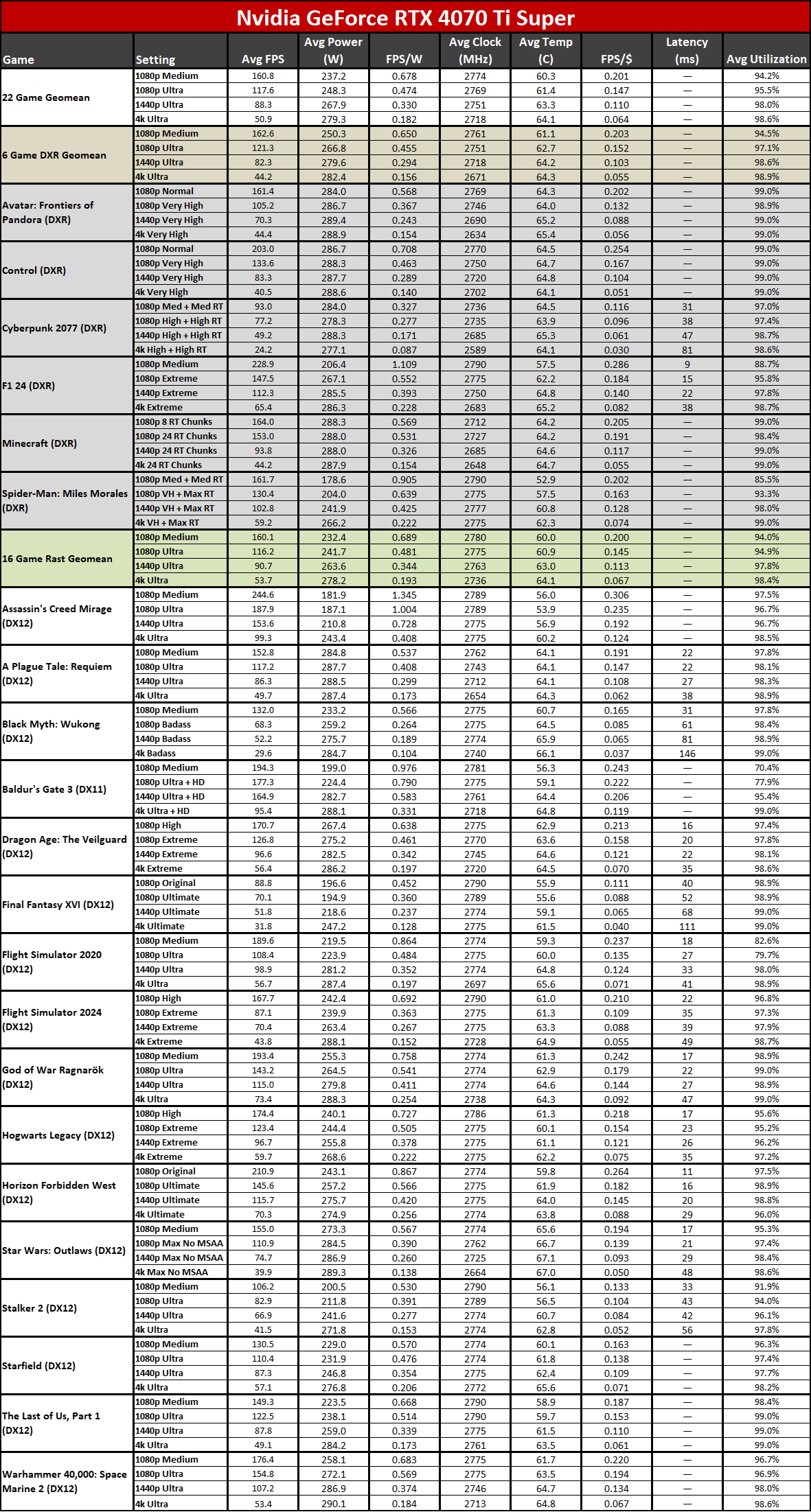

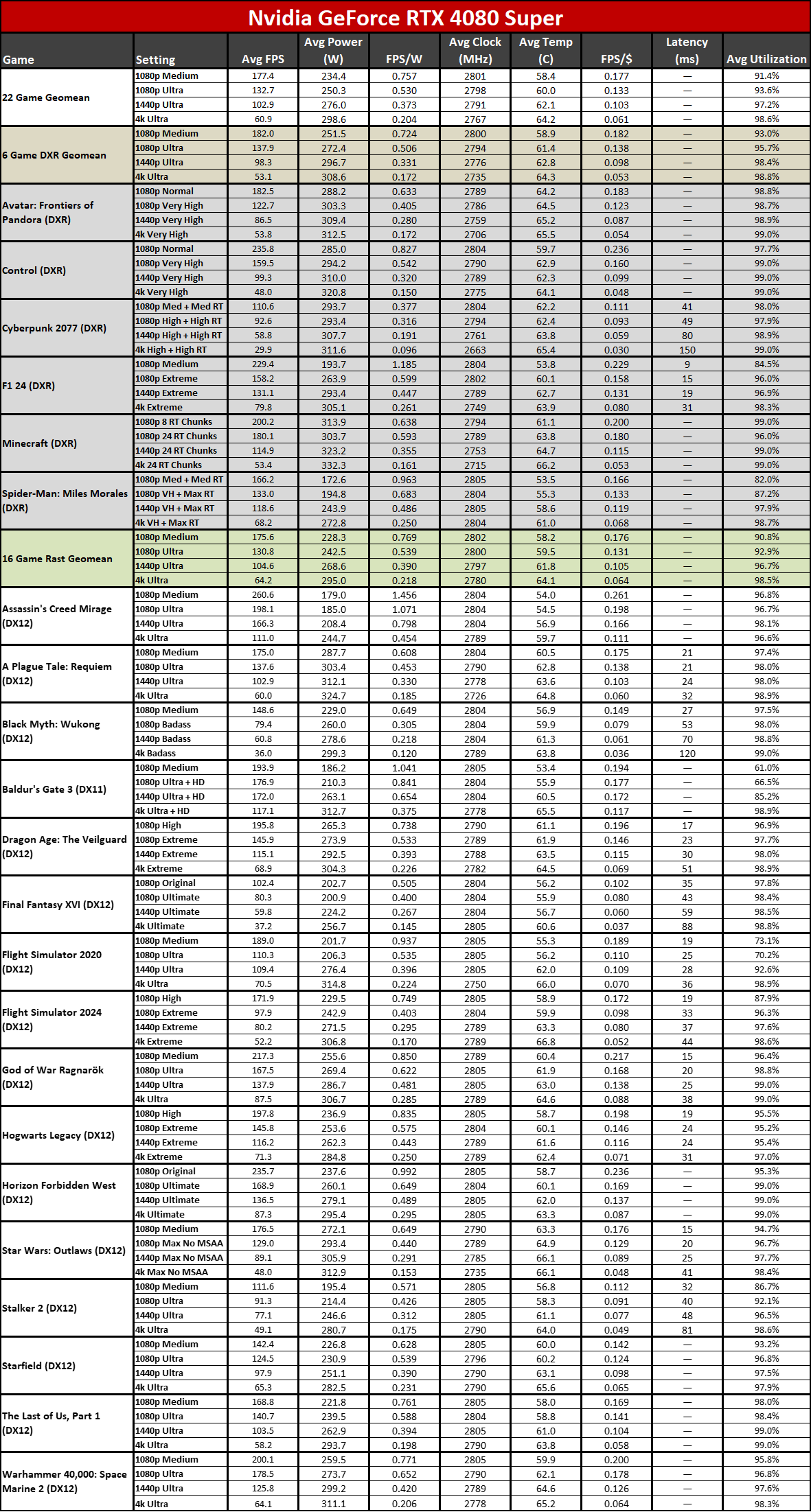

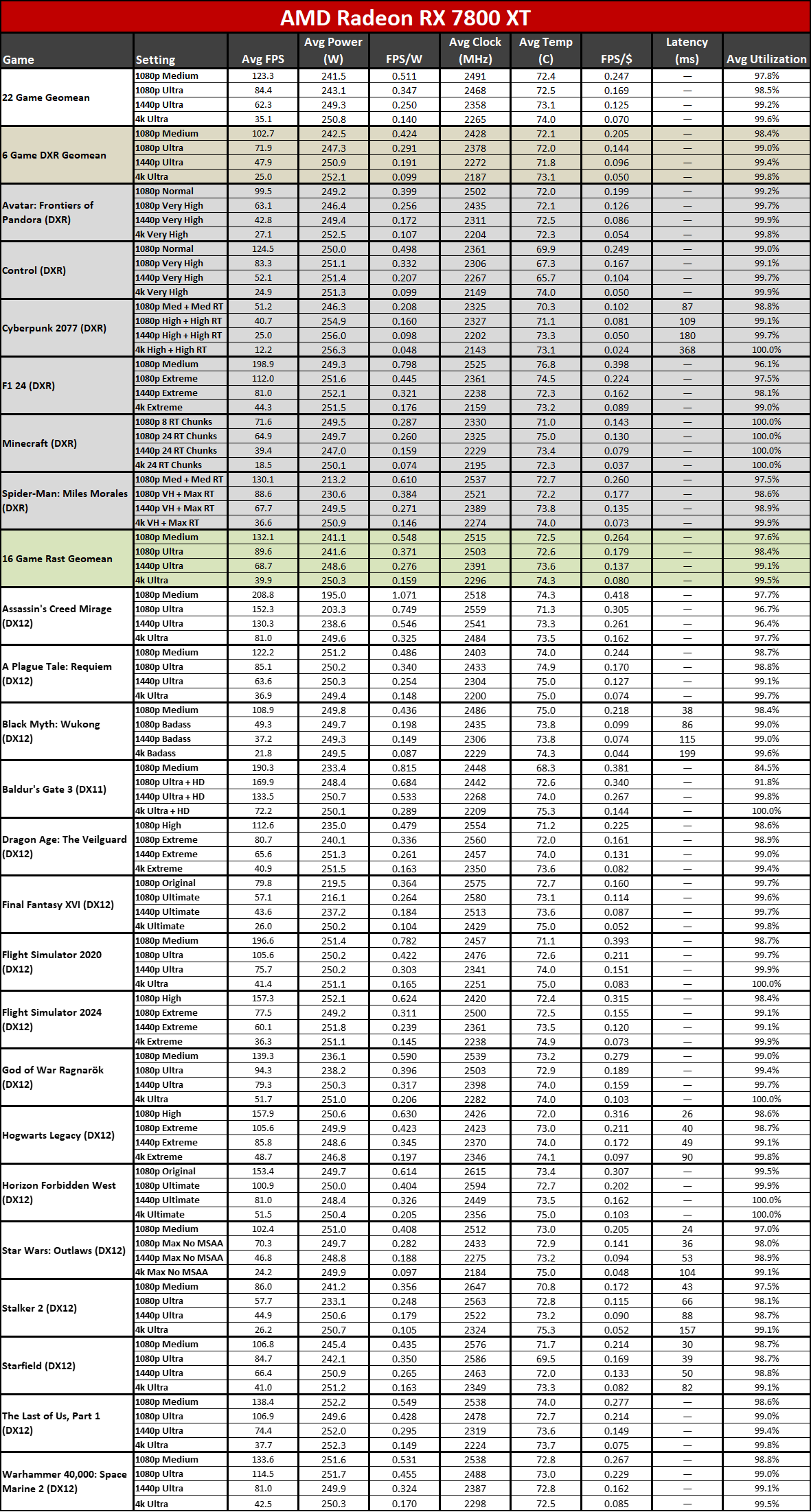

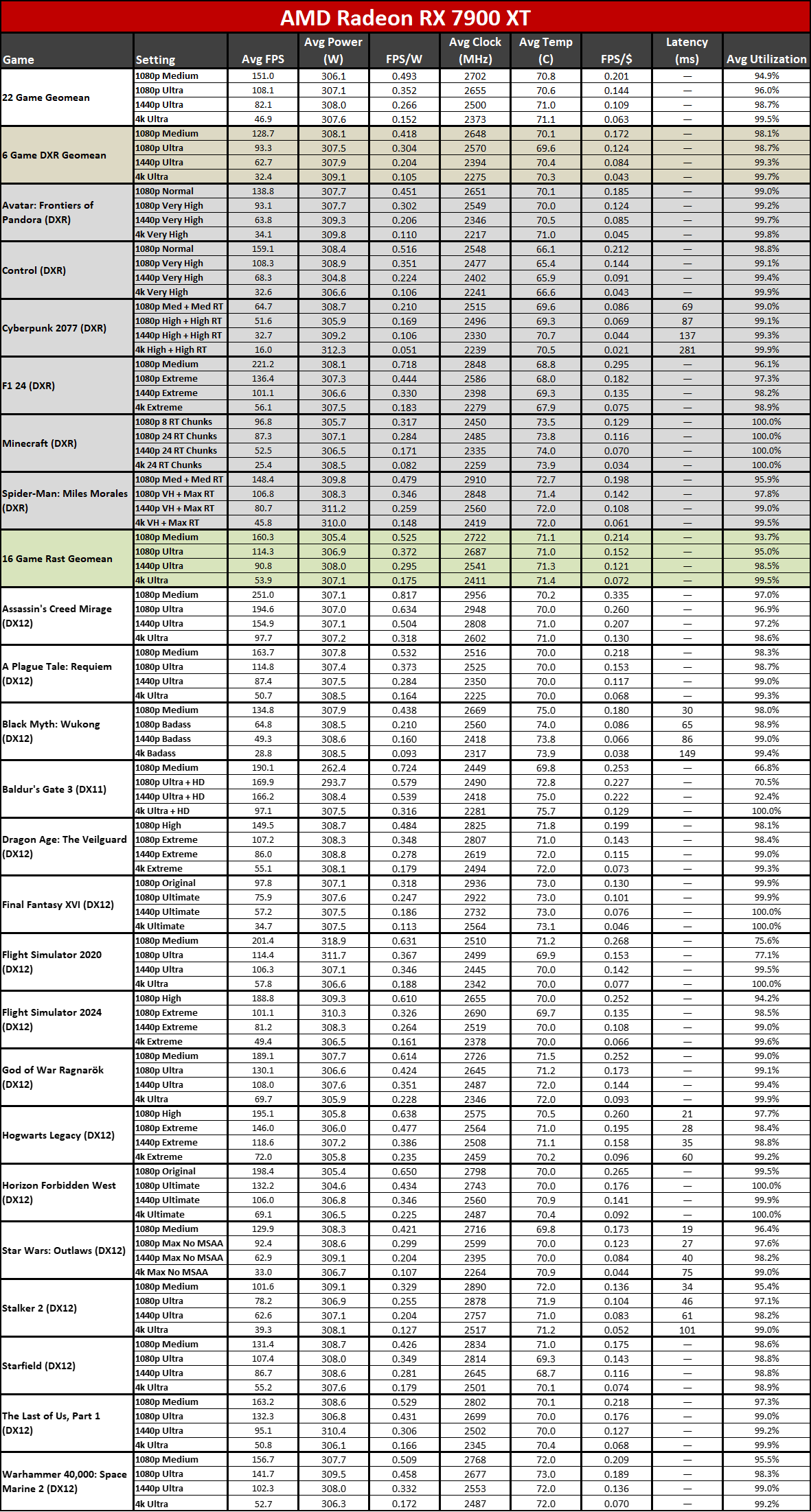

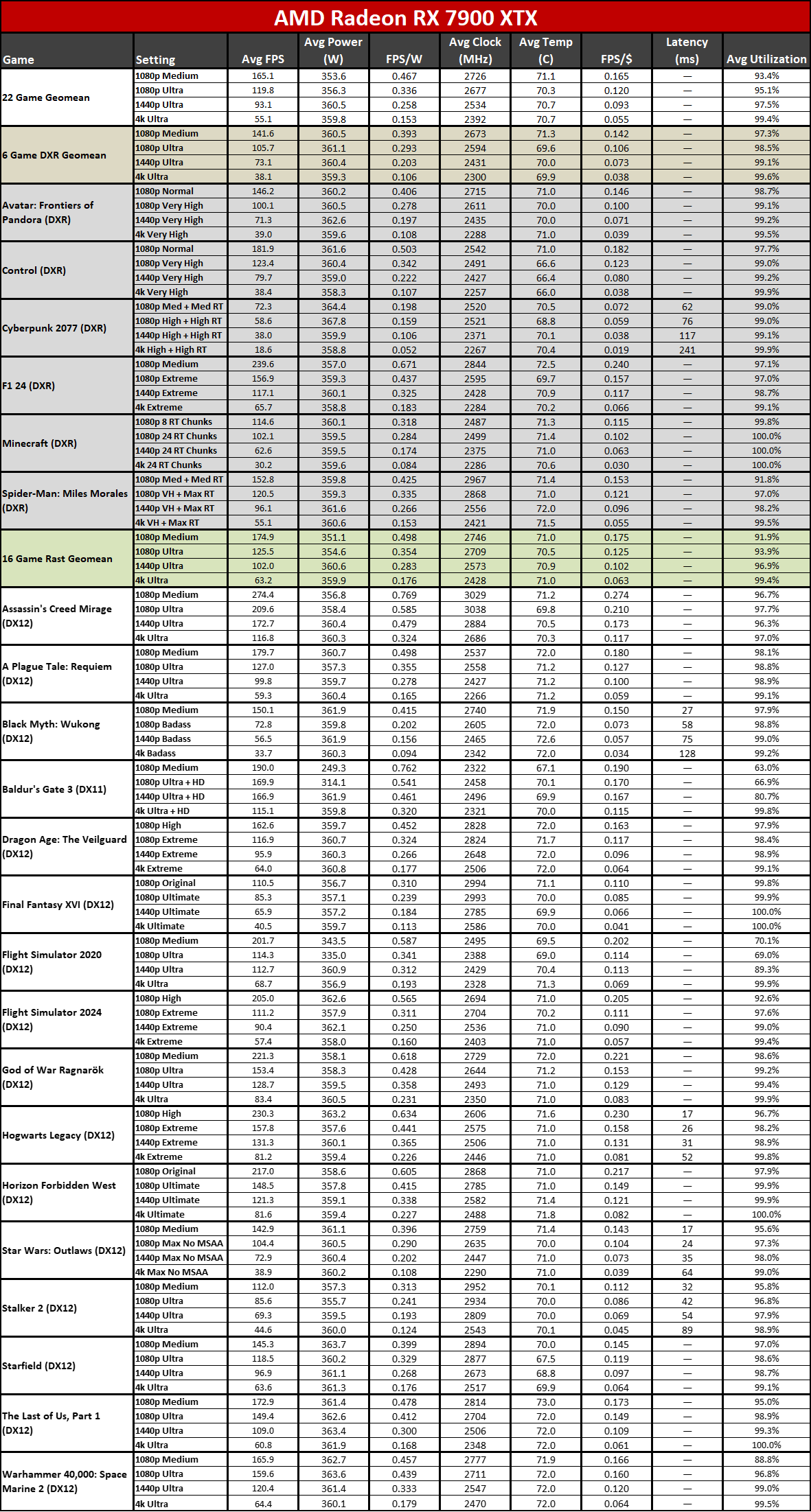

Here's the full table of testing results, with FPS/$ calculated using the various launch MSRPs for the cards. That's because current retail prices are all wildly inflated, and many of the previous generation GPUs are now discontinued. We can only hope prices on the latest generation cards actually manage to reach MSRPs at some point. (Wishful thinking, perhaps.) Latency results are included for some of the games as well, and you can see the game-by-game power figures.
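FPS/$ is simply average frame rate divided by launch price. Below is a minimal sketch of that calculation and a value ranking. The MSRPs are the US launch prices as we understand them ($599 RX 9070 XT, $549 RX 9070, $749 RTX 5070 Ti, $549 RTX 5070). The helper names are hypothetical, and the frame rates in the usage lines are placeholders, not our benchmark results.

```python
# US launch MSRPs in dollars (assumed announced launch pricing).
LAUNCH_MSRP_USD = {
    "RX 9070 XT": 599.0,
    "RX 9070": 549.0,
    "RTX 5070 Ti": 749.0,
    "RTX 5070": 549.0,
}


def fps_per_dollar(card: str, avg_fps: float) -> float:
    """Average frames per second divided by the card's launch MSRP."""
    return avg_fps / LAUNCH_MSRP_USD[card]


def rank_by_value(avg_fps_by_card: dict[str, float]) -> list[tuple[str, float]]:
    """Sort cards from best to worst FPS/$ (highest value first)."""
    scores = [(card, fps_per_dollar(card, fps)) for card, fps in avg_fps_by_card.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Placeholder frame rates for illustration only, not measured results.
for card, value in rank_by_value({"RX 9070 XT": 60.0, "RTX 5070 Ti": 70.0}):
    print(f"{card}: {value * 100:.2f} FPS per $100")
```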

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Crazyy8: I don't have much money, but I might have just enough for the 9070 XT. I might finally be able to say I have high-end hardware! :smile:

Looking forward to the release; hope it isn't scalped!

cknobman: I'll be online super early to try and snag a $599 9070 XT.

Count me in on the market share shift over to AMD.

palladin9479: Now it's all down to availability and how much product AMD can pump into the channel. Anyone trying to buy a dGPU is left with almost no real options other than paying ridiculous markups for scant supply. If AMD can pump enough product into the channel, then prices will go down, because that's how supply and demand works.

Elusive Ruse: Thank you for the detailed review and analysis as always, @JarredWaltonGPU. I'm glad that we finally have a proper offering at the midrange. AMD dealt a powerful blow to Nvidia, maybe a bit late, but better late than never.

The ball is now in Nvidia's court. Let's see how much they care about the gaming market. I also believe this is a matter of brand image; losing to AMD, even if it's not at the high end, still hurts.

JarredWaltonGPU:

> Gururu said: Very nice review although the setting feels like a time warp to Dec 2022.

Seriously! Well, more like late 2020 or early 2021. Everything is basically sold out right now (though the 5070 has a few $599 to $699 models actually in stock right this second at Newegg).

Colif: They are nice cards, but I will follow my own advice, skip this generation, and see what the next one brings. The XT beats mine in some games but not all, and since I don't play RT games anyway, I don't think it's worth it.

I am saving for a new PC, so it wouldn't make sense to buy one now anyway. Maybe next gen I'll get one that's the same color as the new PC will be...

SonoraTechnical: Saw this about the PowerColor Reaper in the article:

> PowerColor takes the traditional approach of including three DisplayPort 2.1a ports and a single HDMI 2.1b port. However, the specifications note that only two simultaneous DP2.1 connections can be active at the same time. Also, these are UHBR13.5 (54 Gbps) ports, rather than the full 80 Gbps maximum that DisplayPort 2.1a allows for.

So, three monitors using DisplayPort for FS2024 is a non-starter? Do I have to mix technologies (DP and HDMI)? Is this going to be true for most RX 9070 XT cards?

JarredWaltonGPU:

> baboma said: I watched the HUB review, since it came out first. The short of it: 9070XT = 7900XT (not XTX), both in perf and power consumption. So if you think Nvidia (Huang) lied about 5070's perf, then AMD also lied.

Not by my numbers. AMD said the 9070 XT would be about 10% slower than the 7900 XTX in rasterization and 10% faster in ray tracing. Even if that were accurate, it would still make the 9070 XT faster than the 7900 XT.

Colif: The low stock and things being OOS the same day they released reminds me of the 7900 release day. I was too slow to get an XTX, but I was happy with an XT.