
AMD Radeon RX 9070 XT and RX 9070 Rasterization Gaming Performance

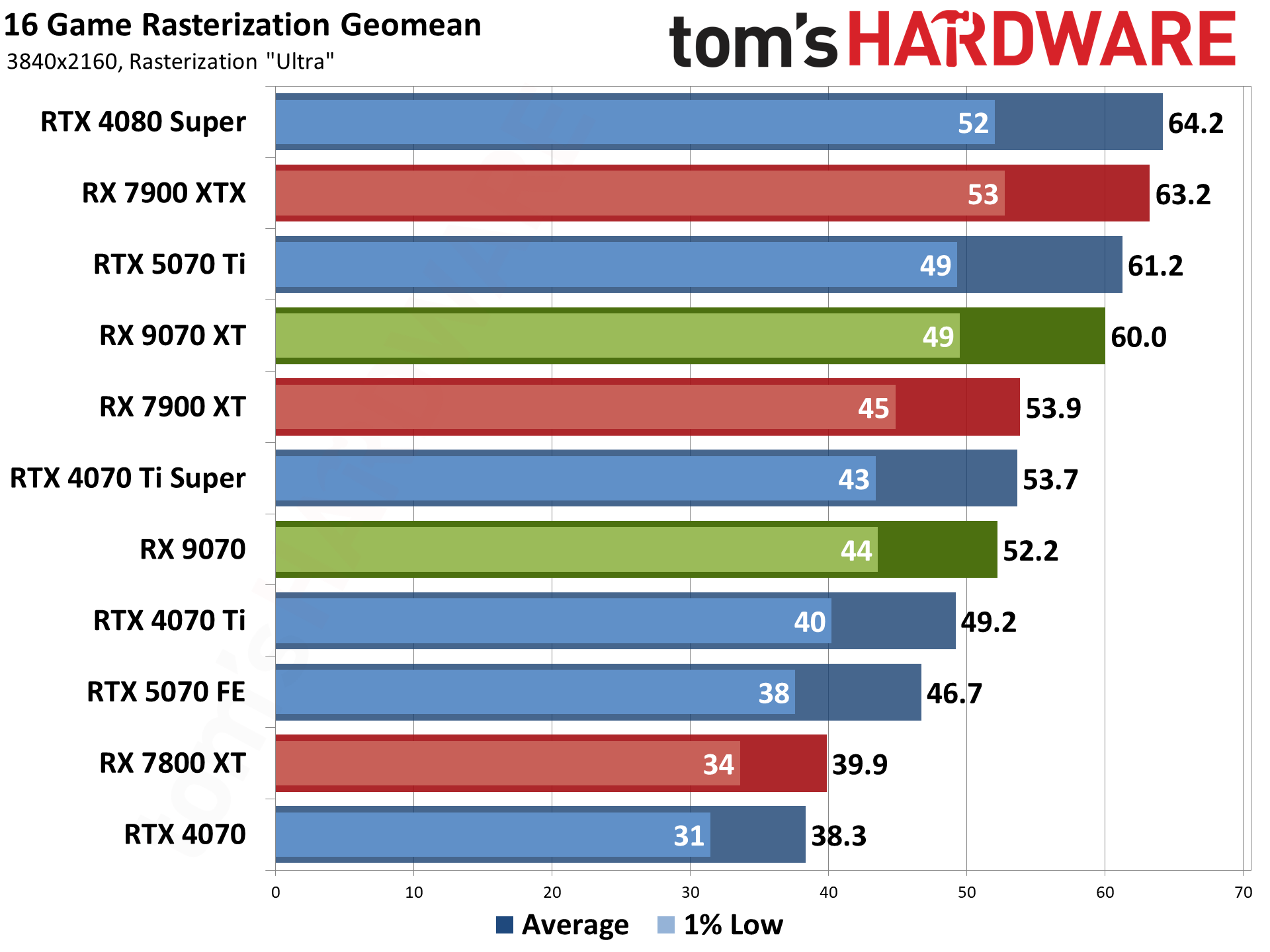

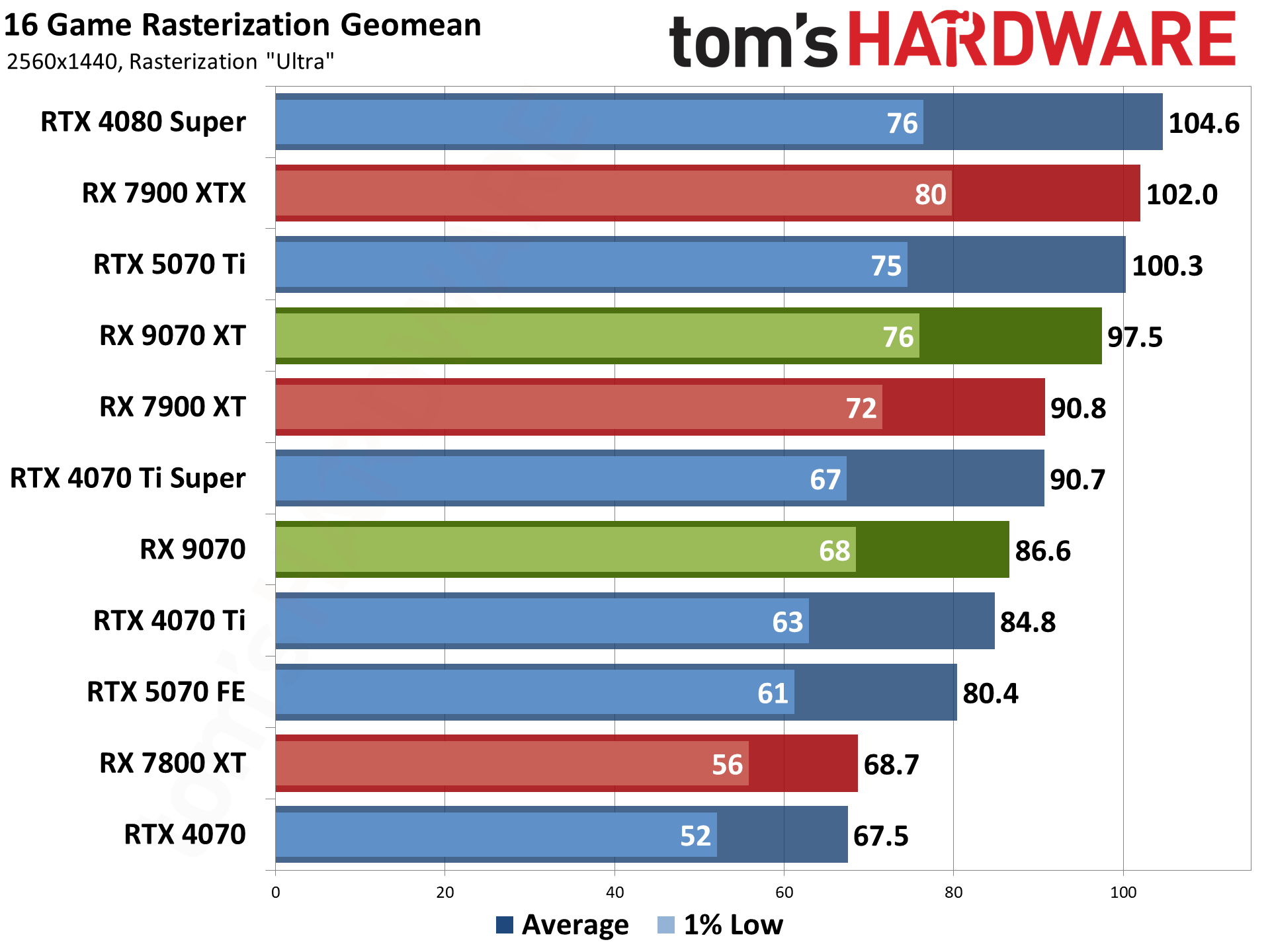

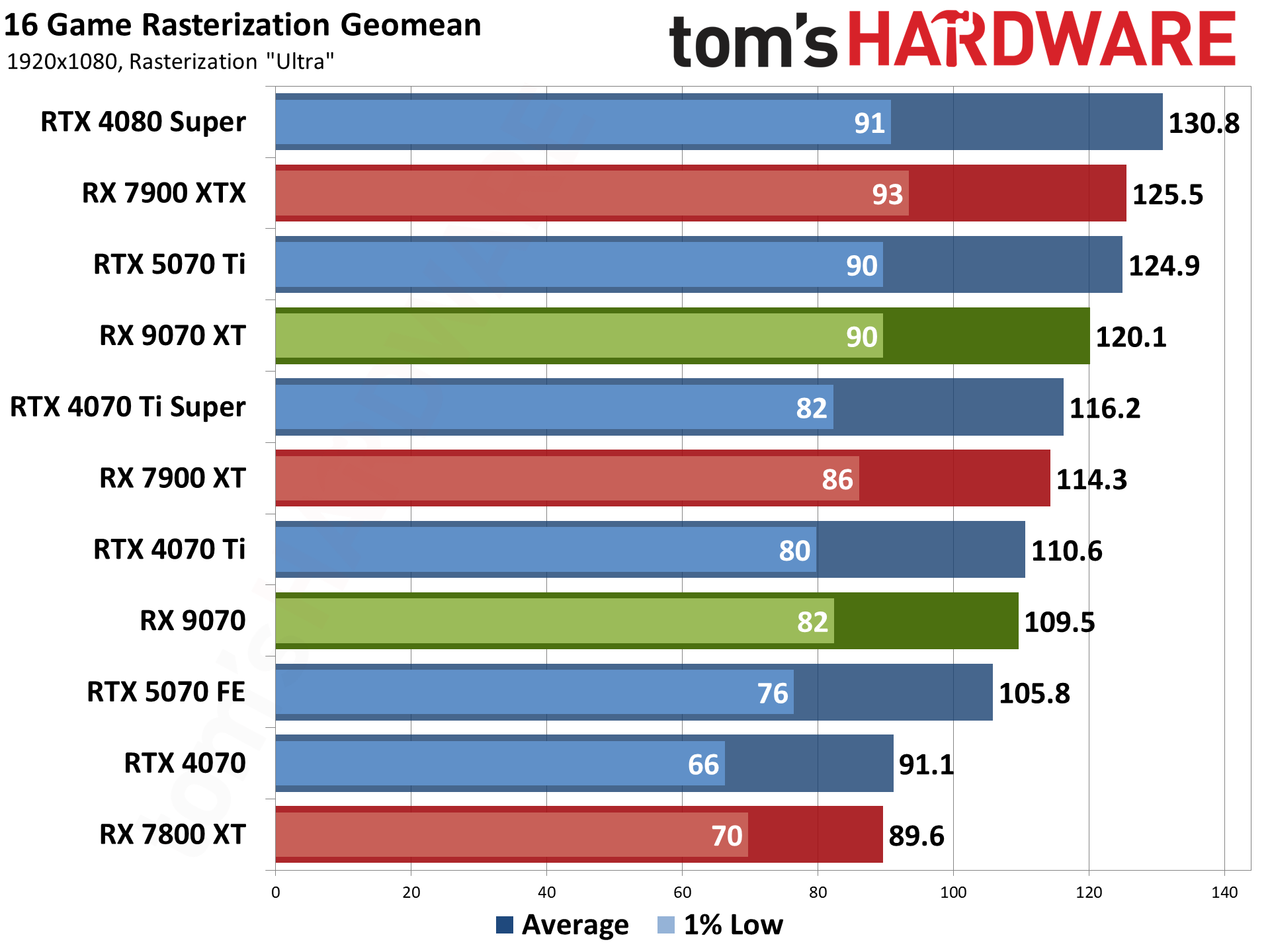

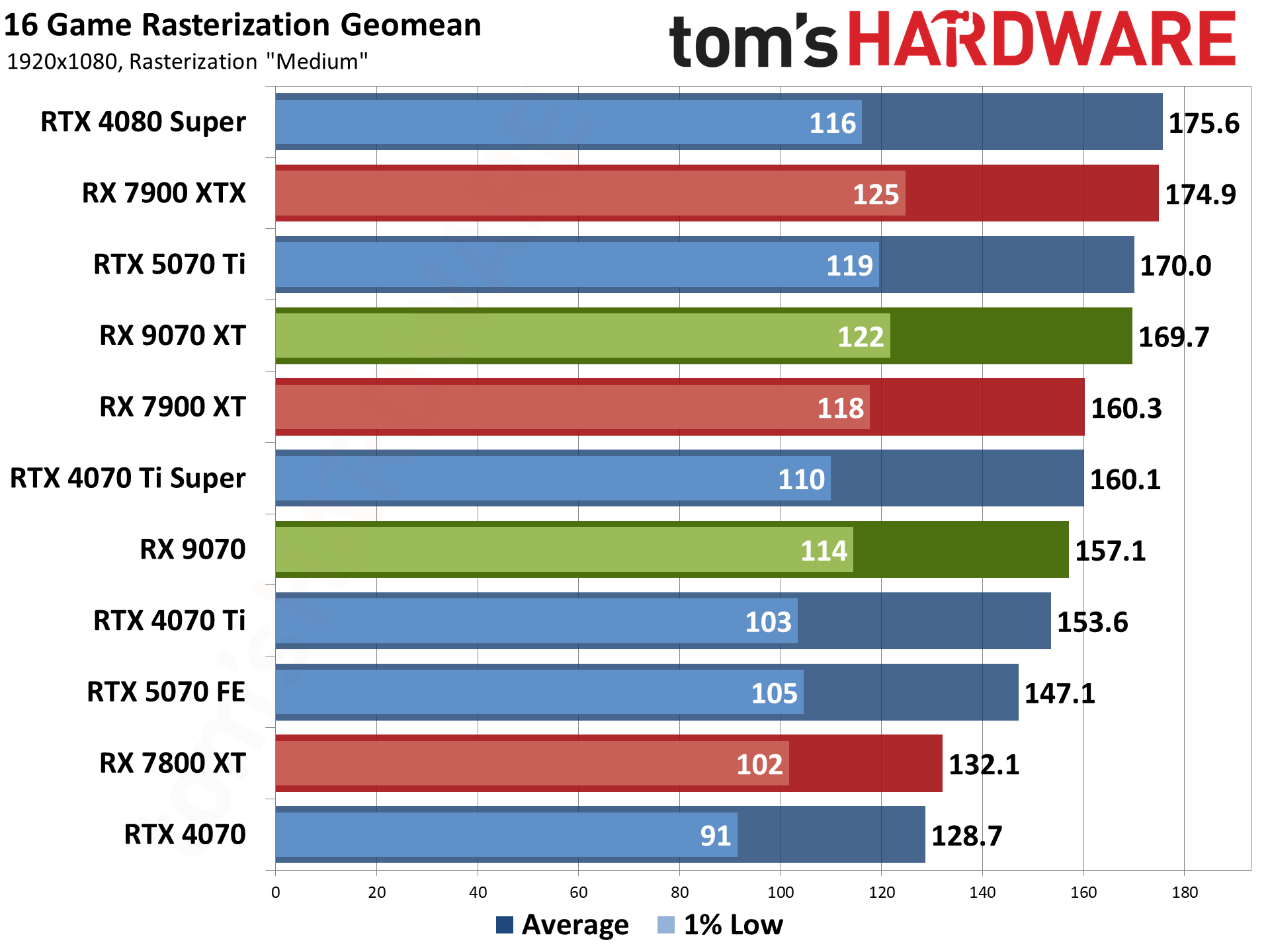

We divide gaming performance into two categories: traditional rasterization games and ray-tracing games. We benchmark each game using four different test settings: 1080p medium, 1080p ultra, 1440p ultra, and 4K ultra.

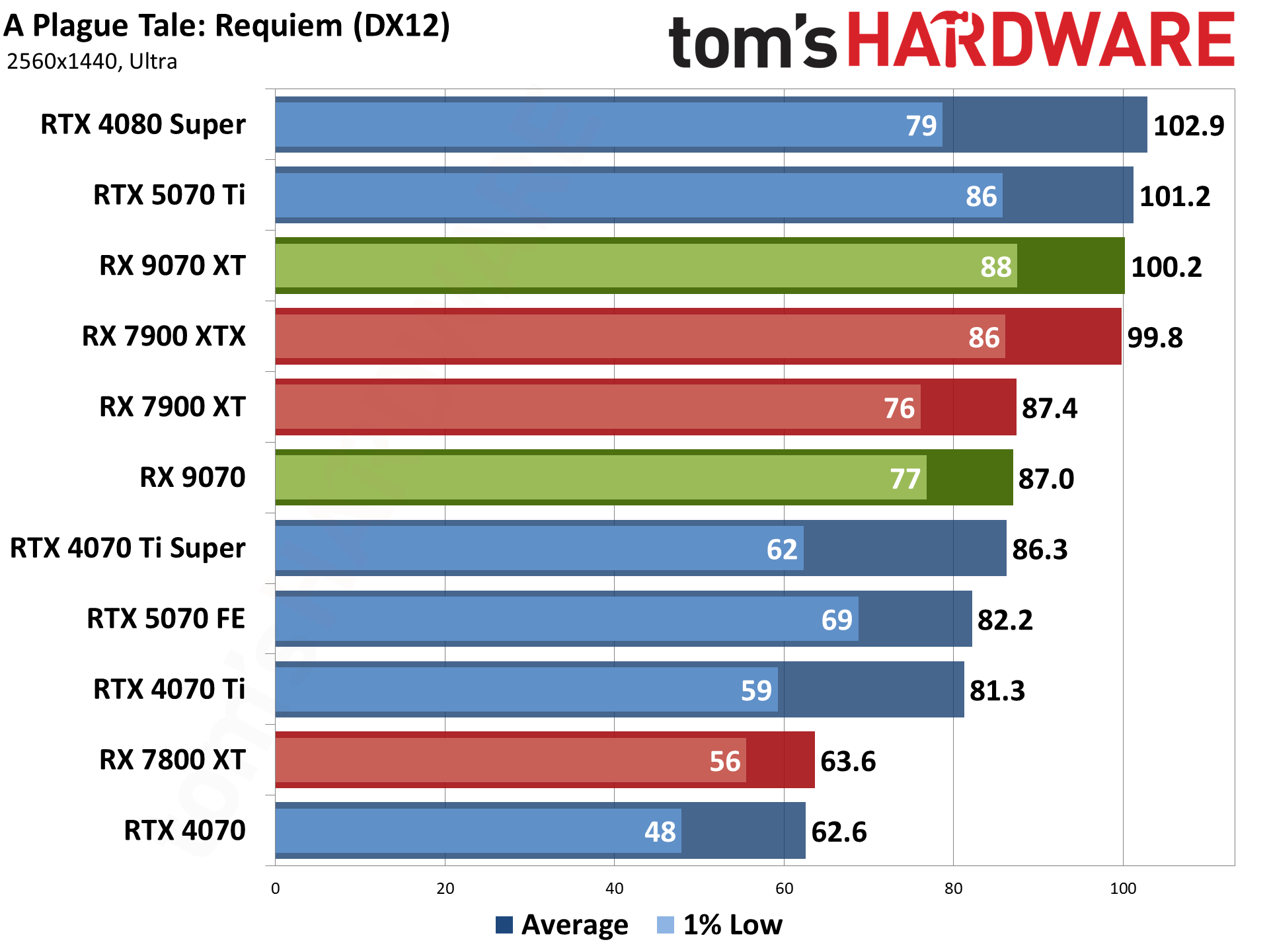

As with the RTX 5070, we consider the 1440p ultra results the most important here, though the 9070 XT can arguably also target 4K. We've therefore sorted each group of charts from highest to lowest resolution and setting. Note that 1440p performance roughly corresponds to 4K with Quality mode upscaling, and 1080p to 4K with Performance mode upscaling, although the upscaling algorithms add some overhead.
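The correspondence between native test resolutions and upscaled 4K comes down to simple arithmetic. The sketch below assumes the common per-axis scale factors of 1.5x for Quality mode and 2.0x for Performance mode; those factors are not stated in this review and are taken from the usual FSR and DLSS presets.

```python
# Per-axis scale factors for common upscaler presets.
# Assumption: these match the usual FSR 2/3 and DLSS Quality and Performance modes.
UPSCALE_FACTORS = {"quality": 1.5, "performance": 2.0}

def render_resolution(output_w: int, output_h: int, mode: str) -> tuple[int, int]:
    """Internal render resolution for a given output resolution and upscaling mode."""
    factor = UPSCALE_FACTORS[mode]
    return round(output_w / factor), round(output_h / factor)

for mode in ("quality", "performance"):
    w, h = render_resolution(3840, 2160, mode)
    print(f"4K output, {mode} mode: renders internally at {w}x{h}")
```

This only models the render resolution. It ignores the upscaler's own processing cost, which is why upscaled 4K typically runs somewhat slower than native 1440p or 1080p.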

The interesting thing here will be seeing how the two 9070-series cards compete with each other, as well as with Nvidia's RTX 5070 and RTX 5070 Ti. The latter has a much higher $749 MSRP and is currently selling for $1,149 and up. Even if the 9070 XT can't quite catch the 5070 Ti, coming close while also selling nearer its $599 MSRP would represent a serious coup.

We'll start with the rasterization suite of 16 games, as that's arguably still the most useful measurement of gaming performance. Plenty of games that have ray tracing support end up running so poorly that it's more of a feature checkbox than something useful.

We'll provide limited to no commentary on most of the individual game charts, letting the numbers speak for themselves. The Geomean charts will be the main focus, since those provide the big-picture overview of where the RX 9070 XT and RX 9070 land relative to the other GPUs.
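A geometric mean keeps one very high-framerate game from dominating the summary, since a 2x difference counts the same in every game. The following is a minimal sketch, assuming each game is weighted equally; the FPS values are hypothetical and are not our measured results.

```python
import math

def geomean(values: list[float]) -> float:
    """Geometric mean of positive values, computed via logarithms."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geomean needs a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))

def arithmetic_mean(values: list[float]) -> float:
    """Plain average, shown for comparison."""
    return sum(values) / len(values)

# Hypothetical per-game FPS for two cards across a two-game "suite".
card_a = [200.0, 50.0]   # very fast in one game, slow in the other
card_b = [100.0, 100.0]  # even performance in both games

for name, fps in (("Card A", card_a), ("Card B", card_b)):
    print(f"{name}: arithmetic {arithmetic_mean(fps):.1f}, geomean {geomean(fps):.1f}")
```

Here the arithmetic mean makes Card A look 25% faster (125 vs. 100 FPS), while the geomean rates both cards at 100 FPS, because Card A's 2x win in one game is exactly cancelled by its 2x loss in the other.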

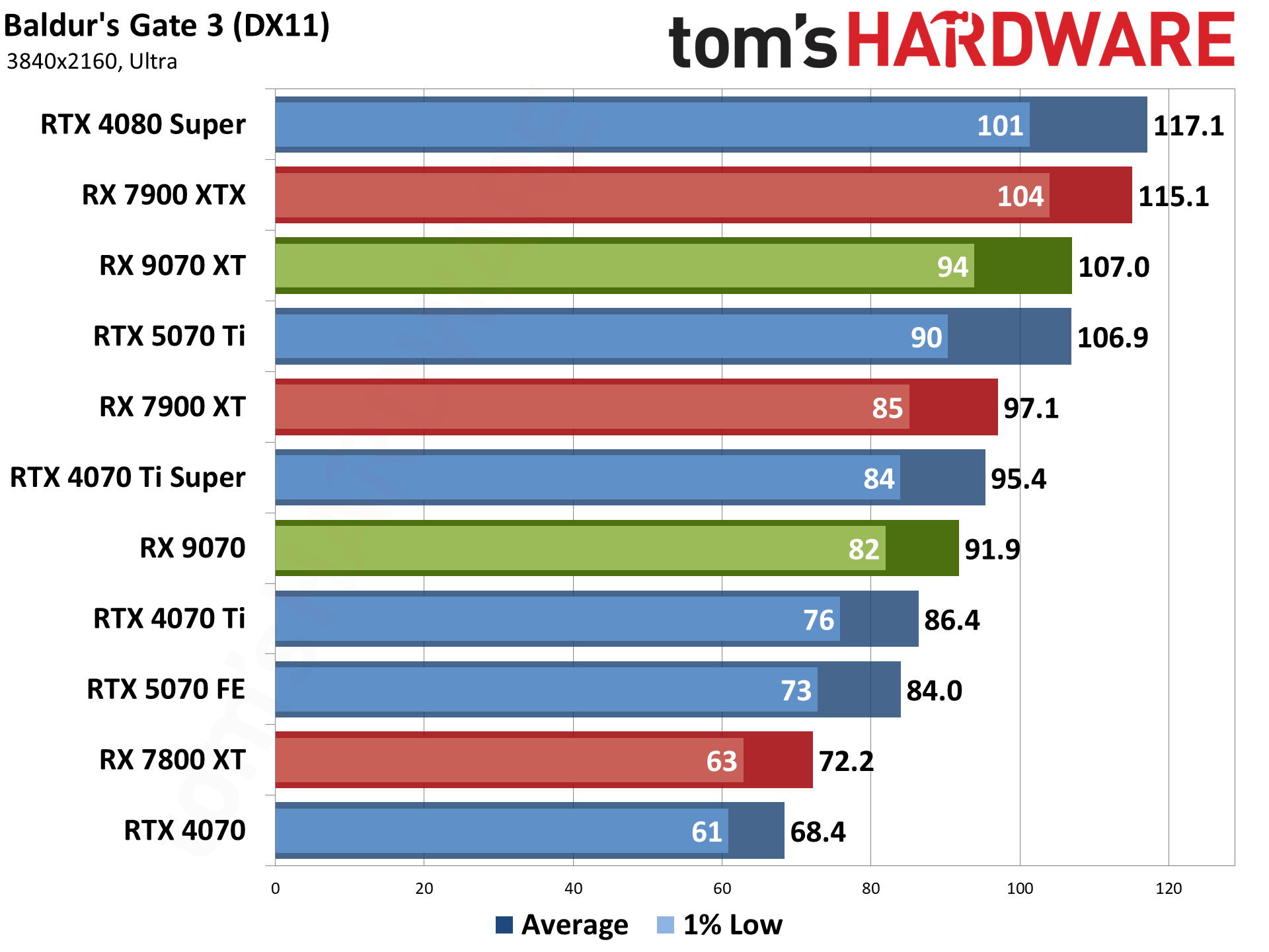

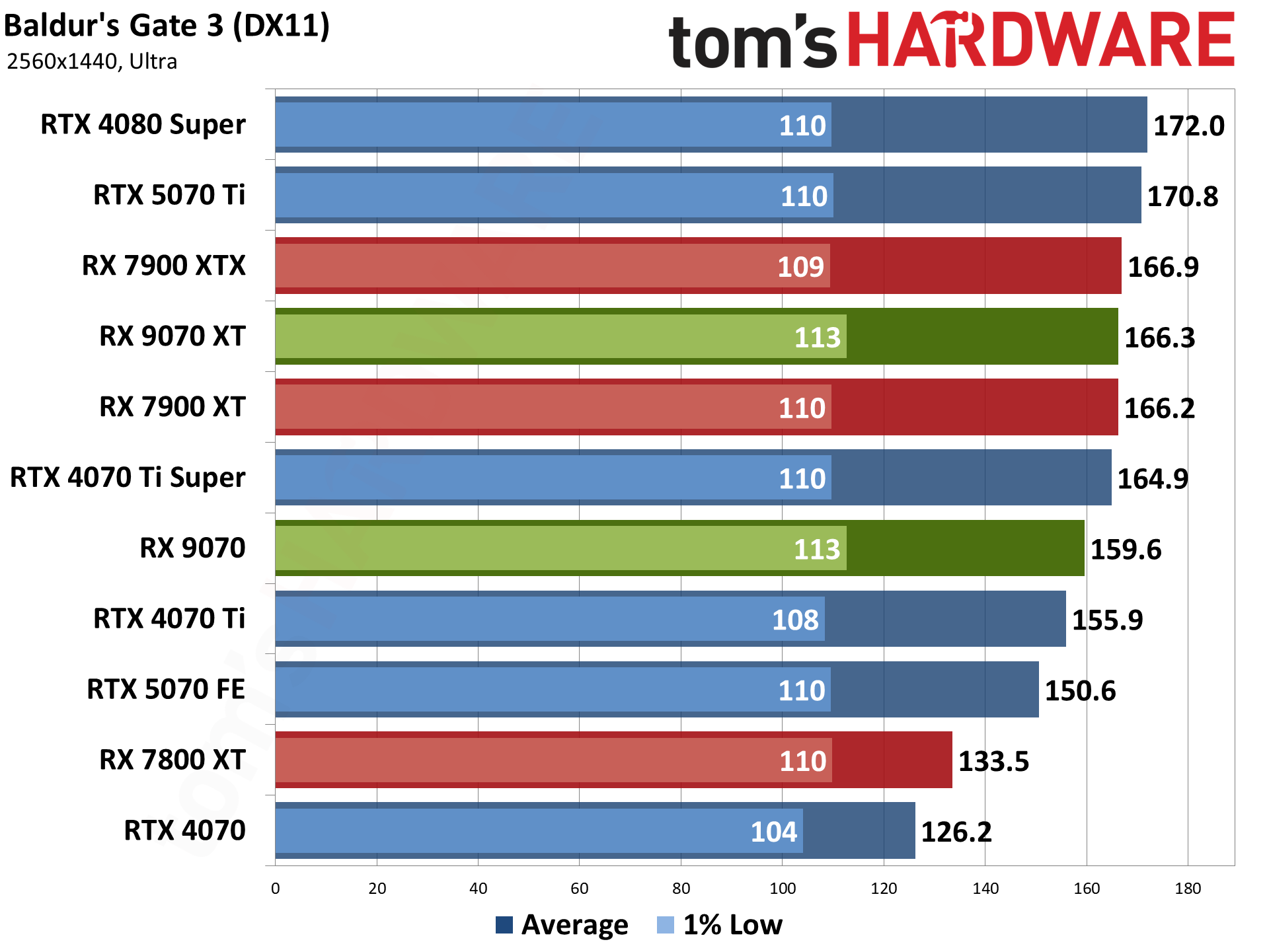

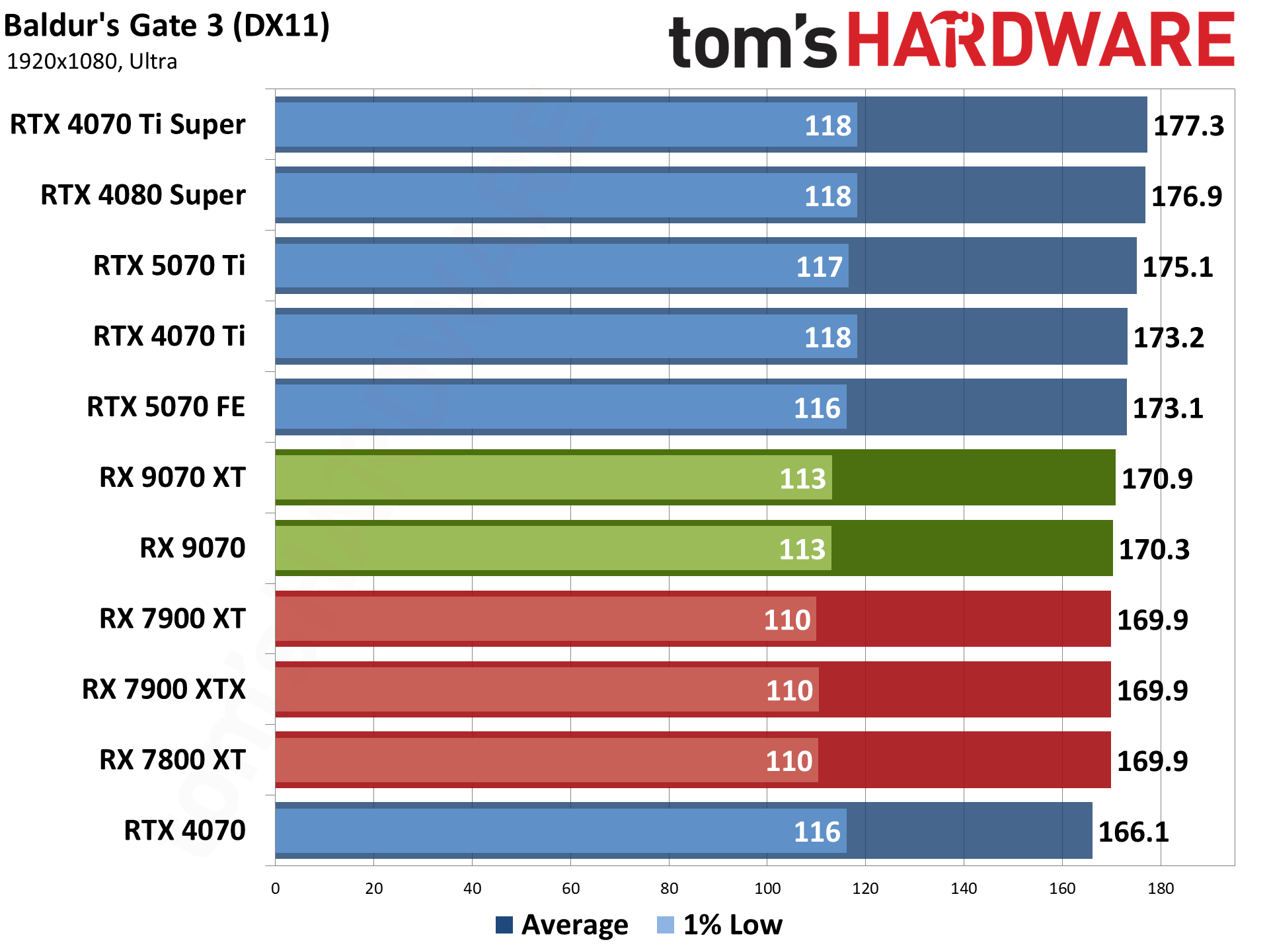

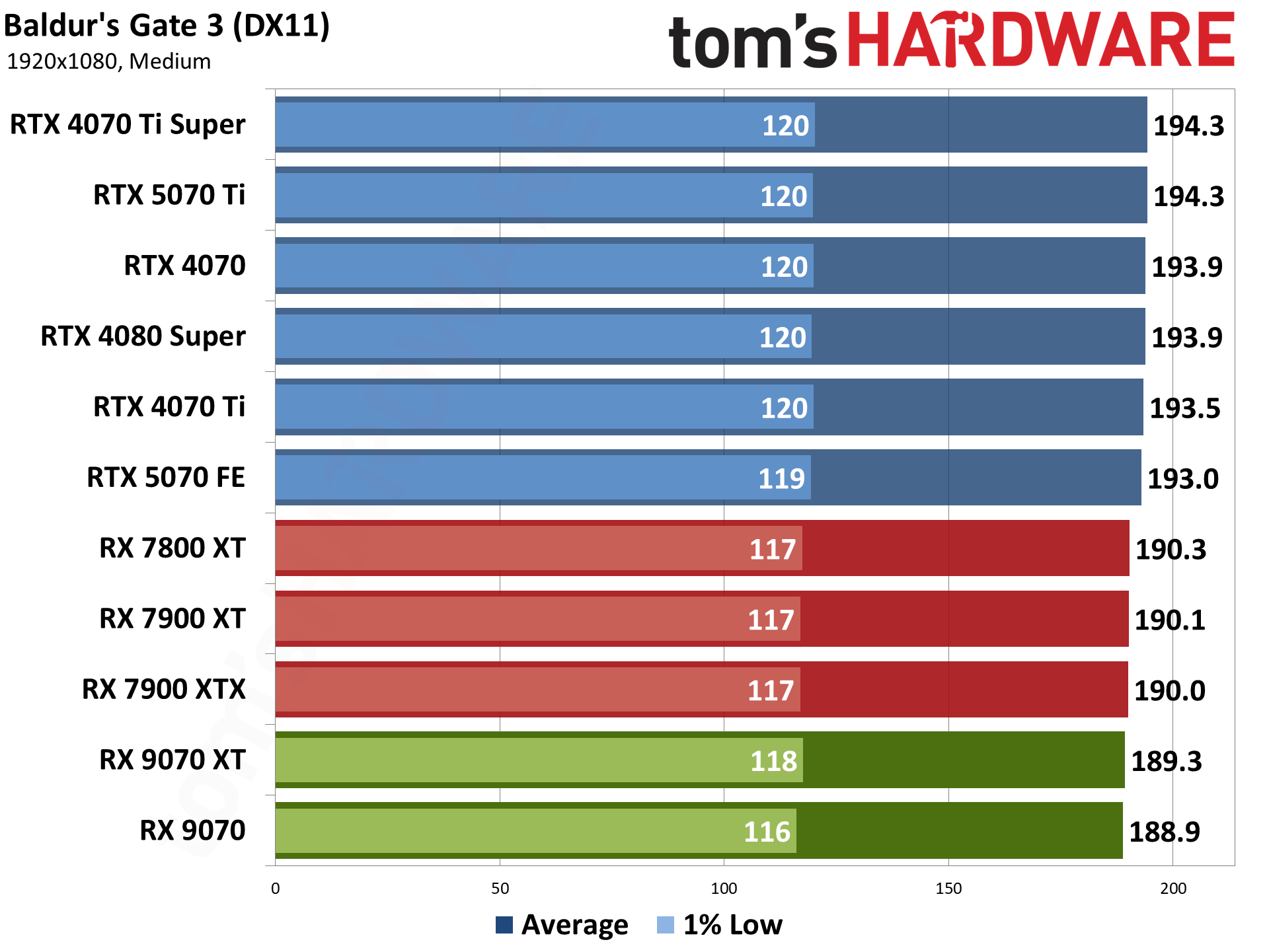

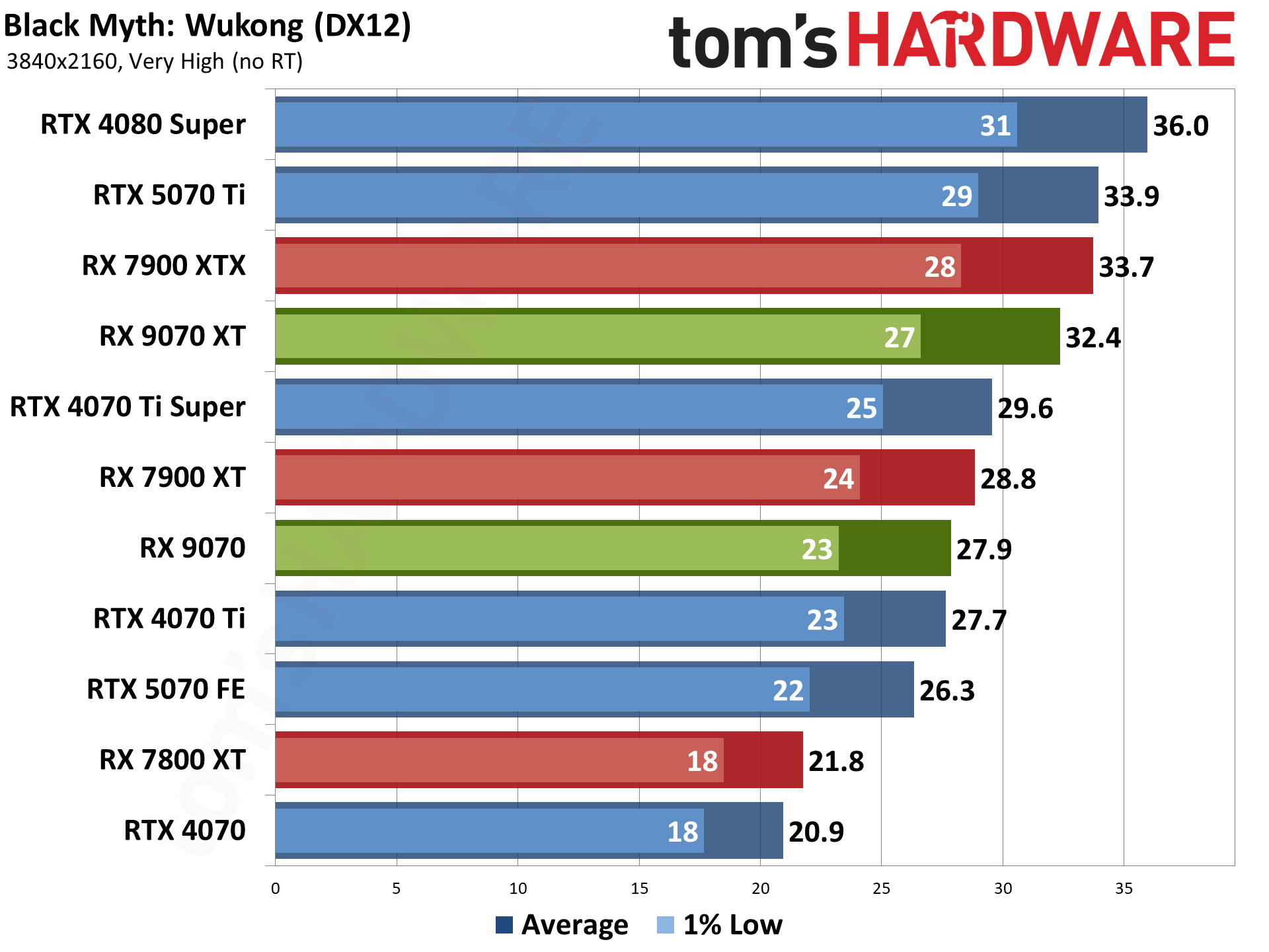

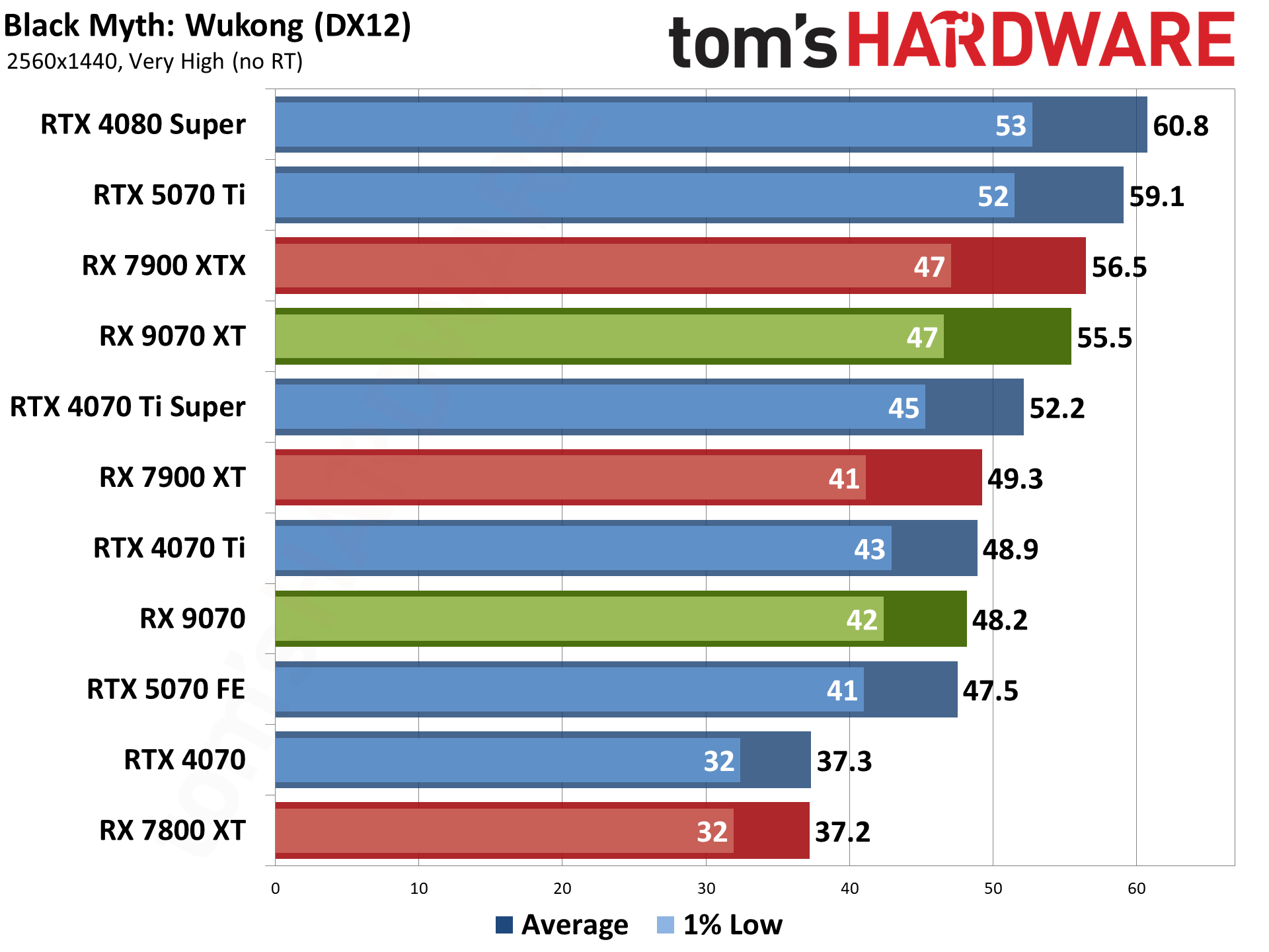

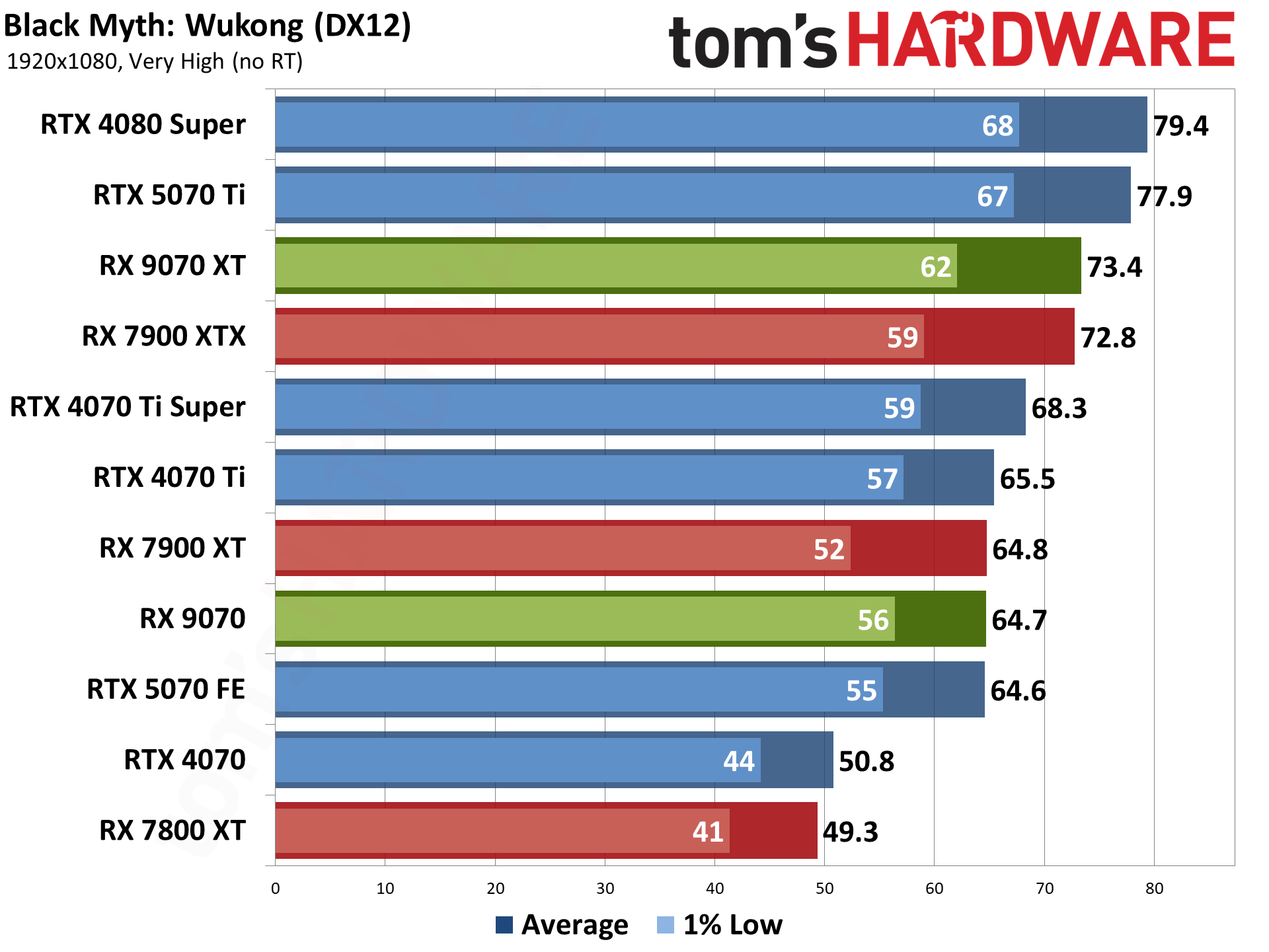

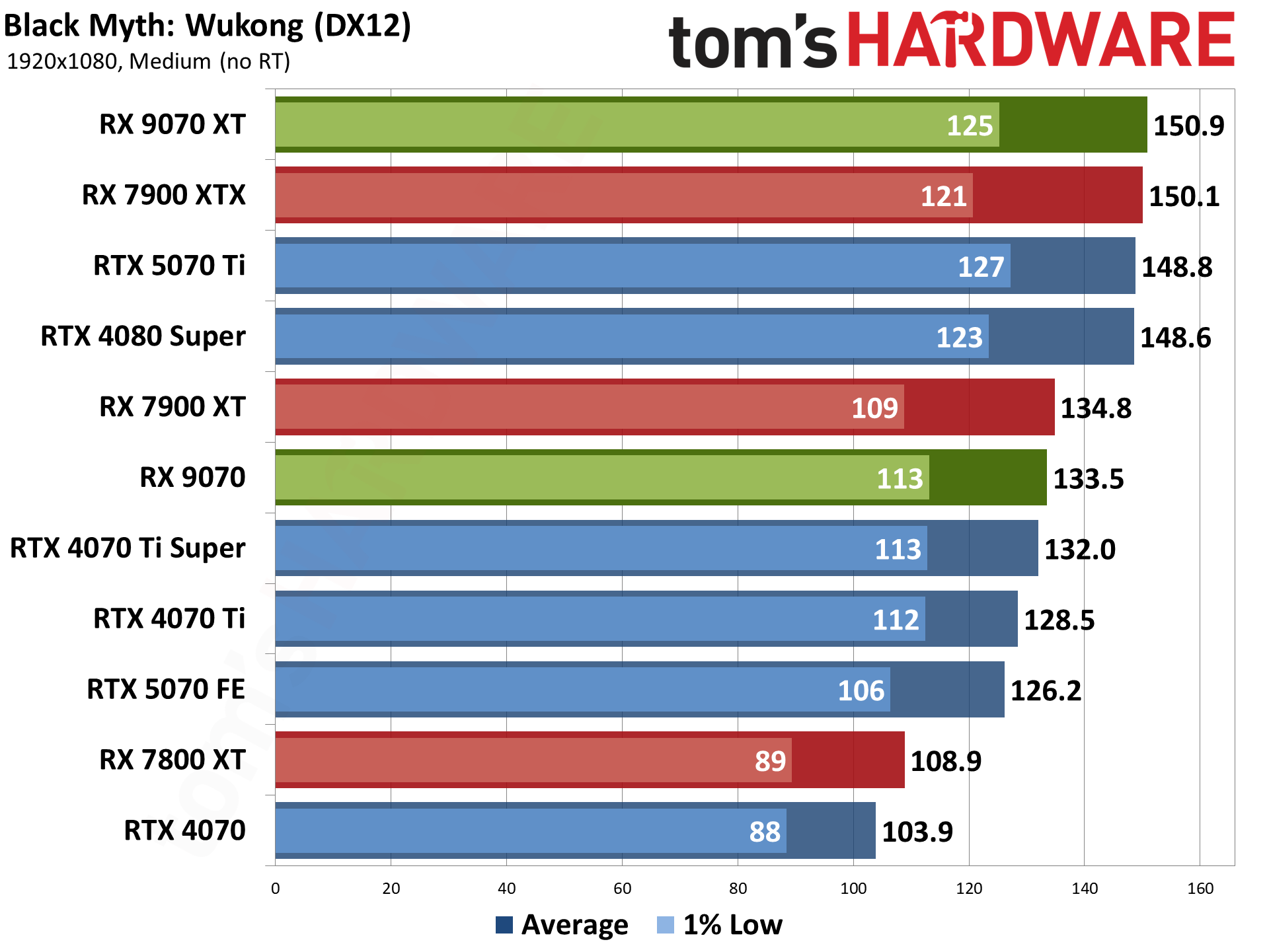

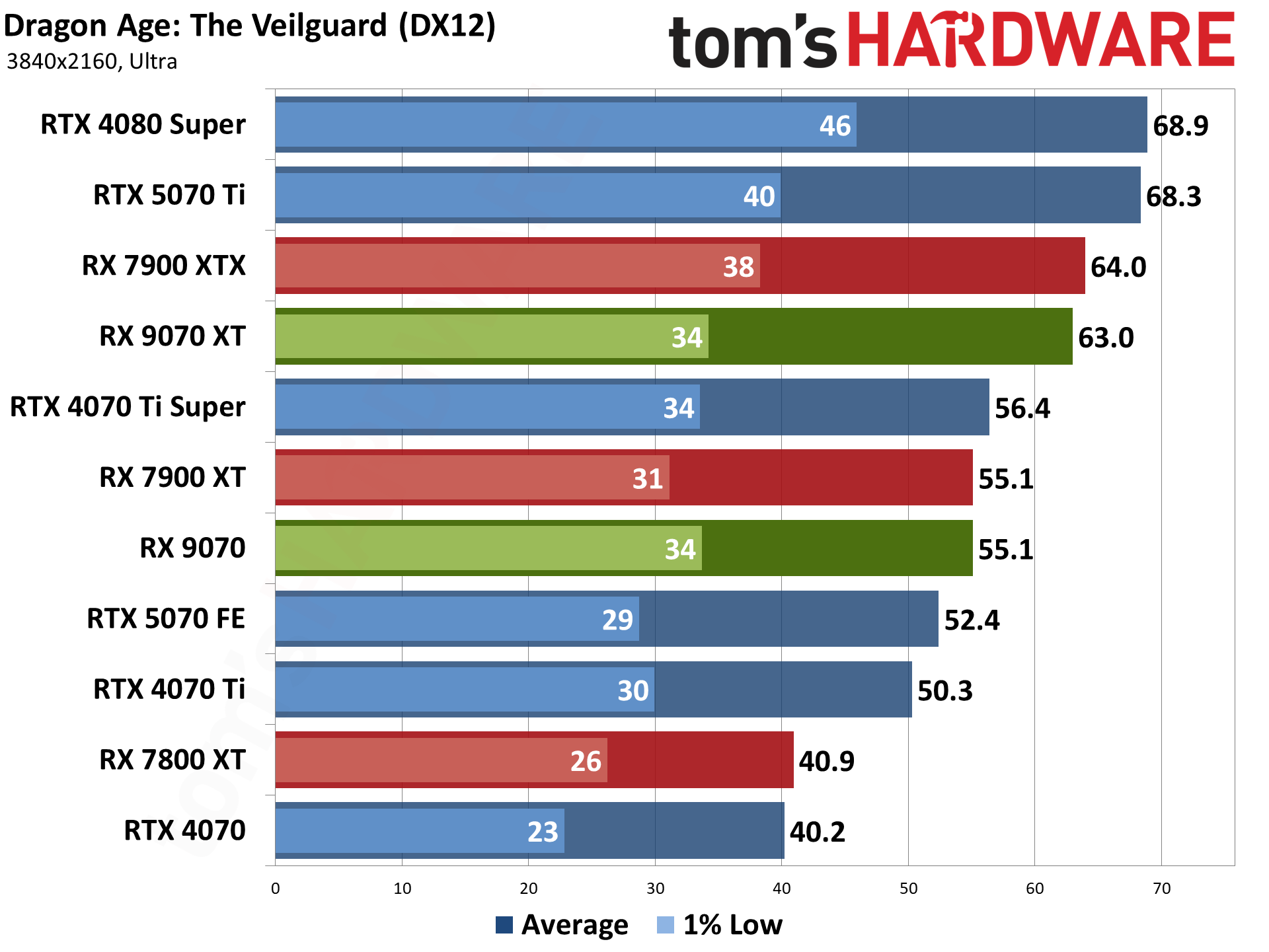

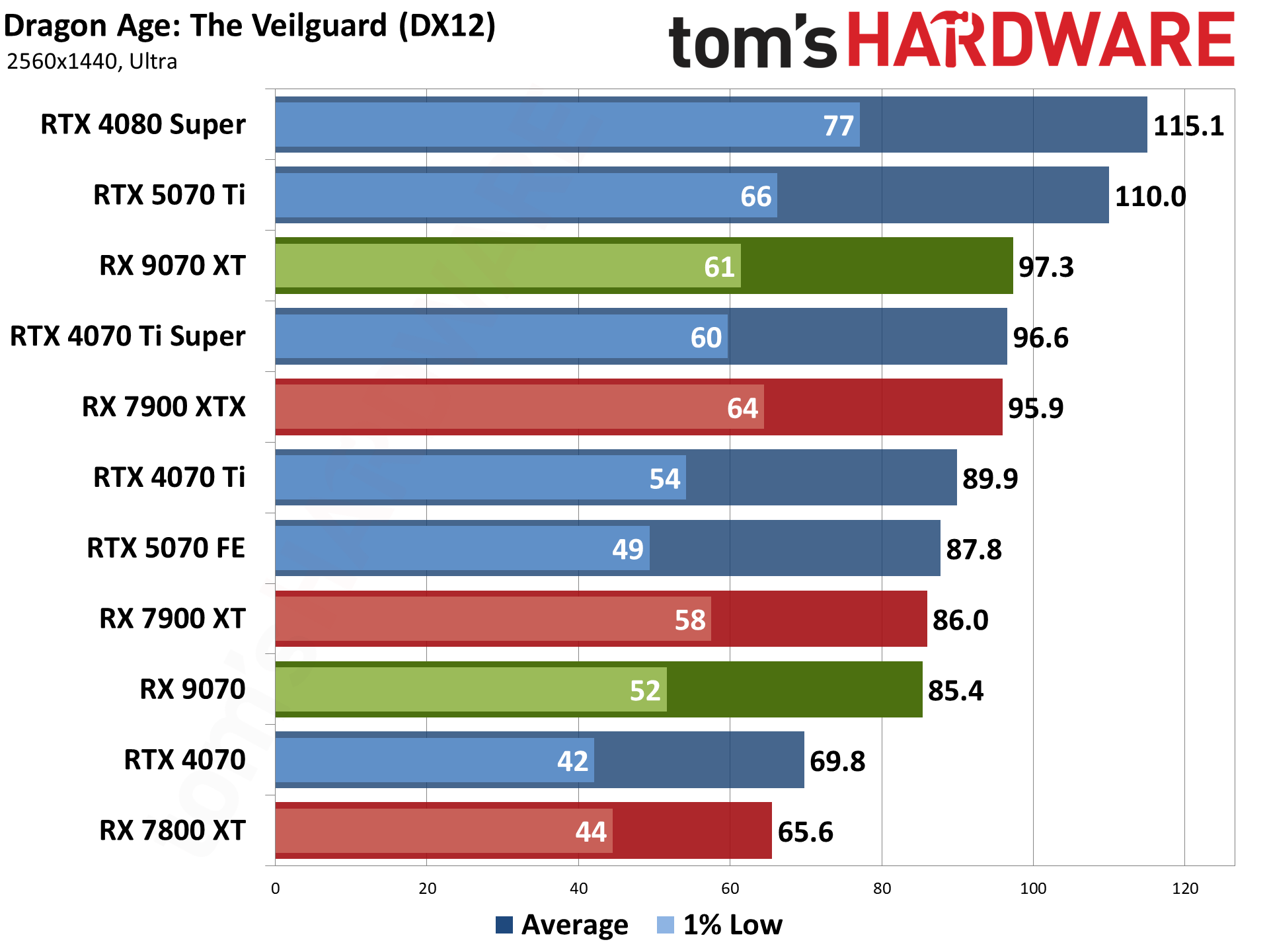

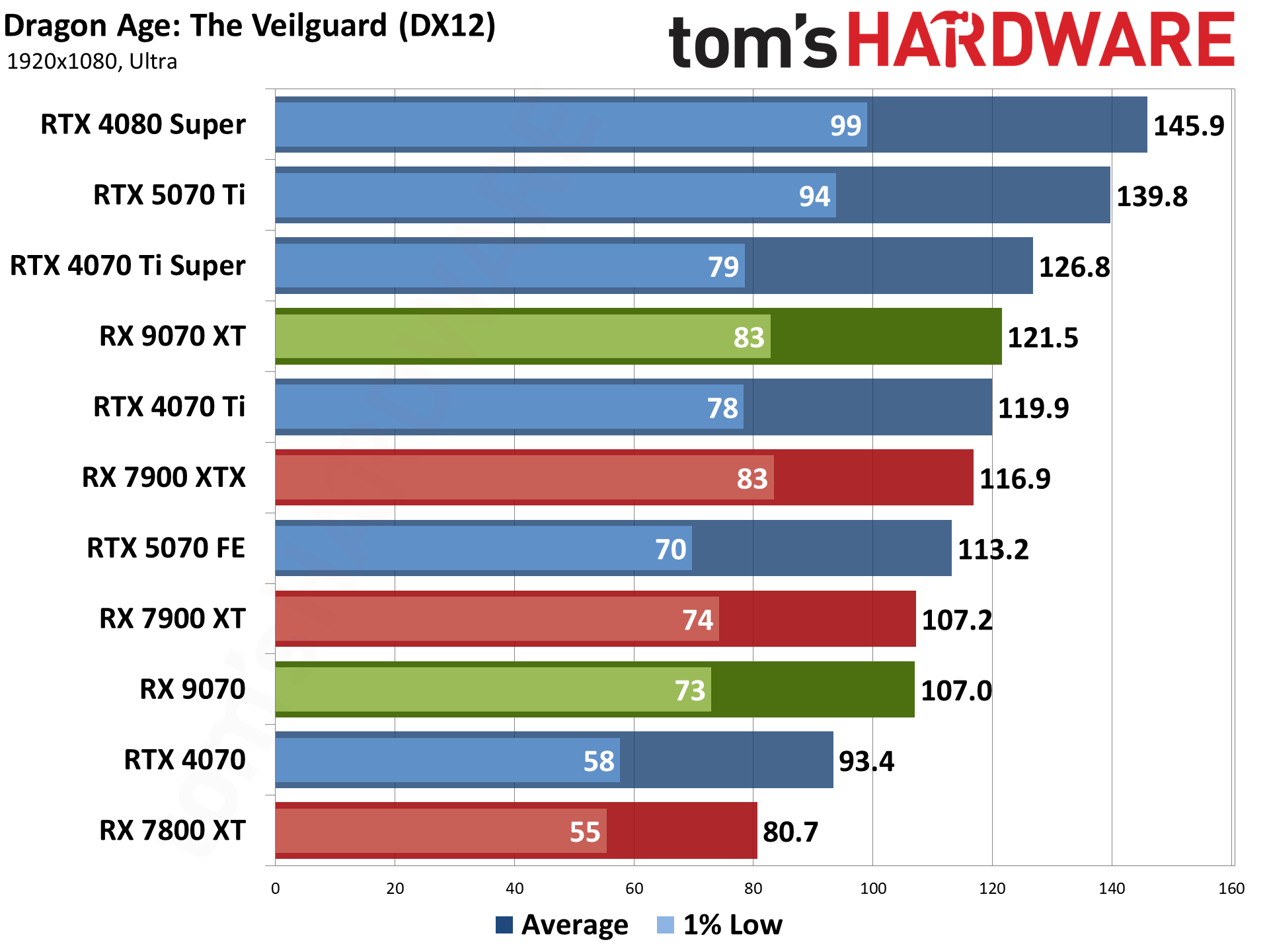

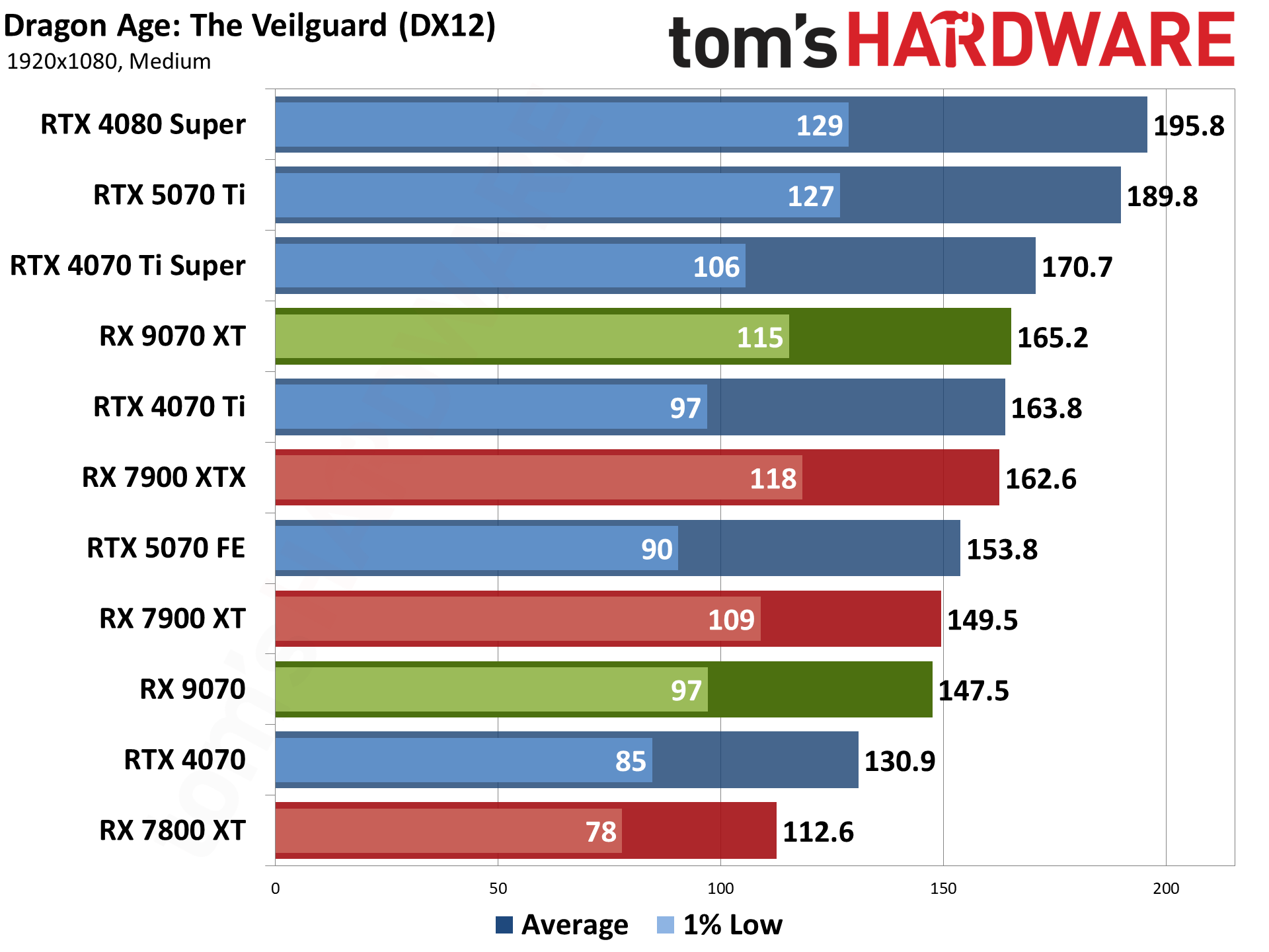

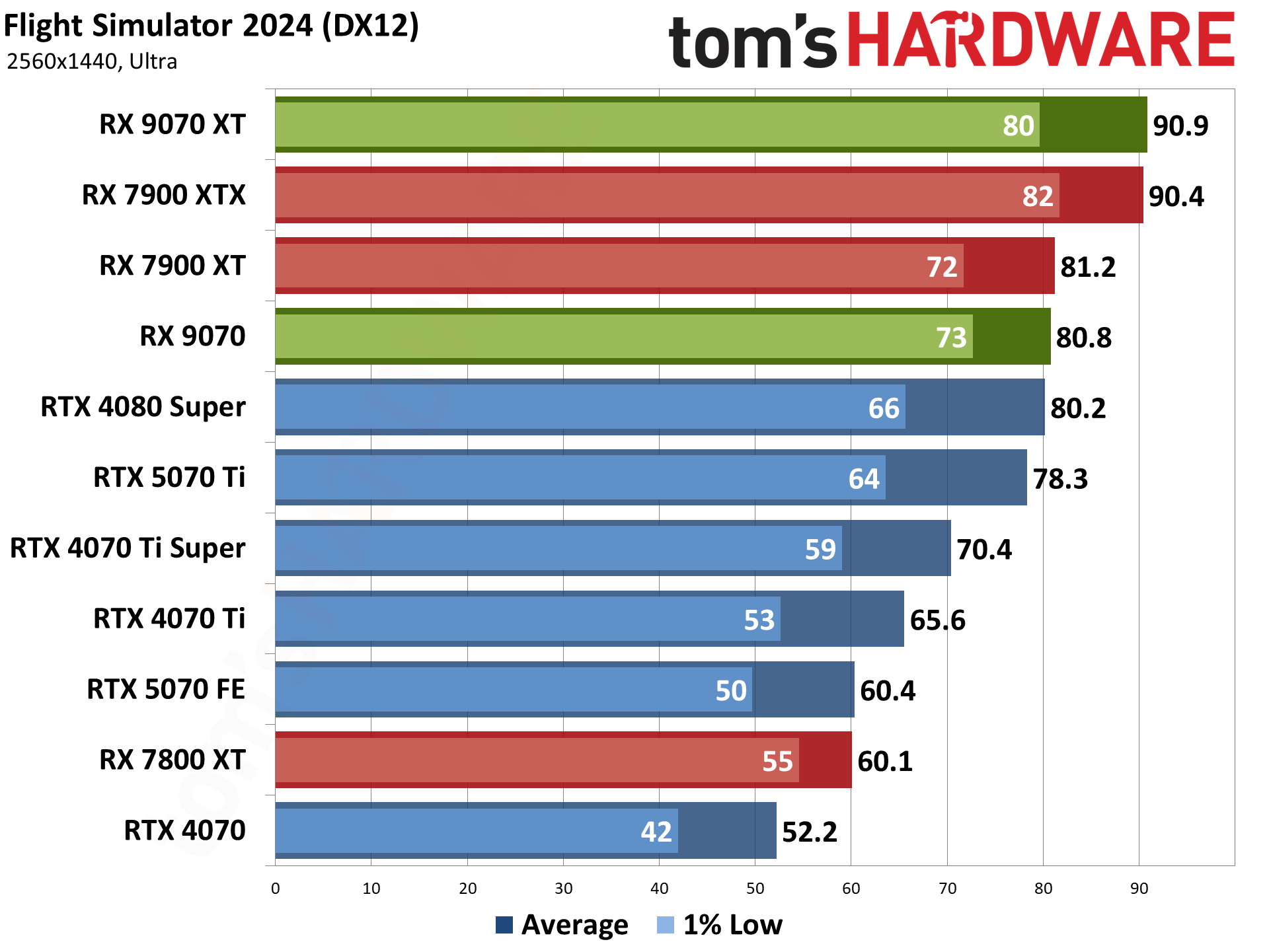

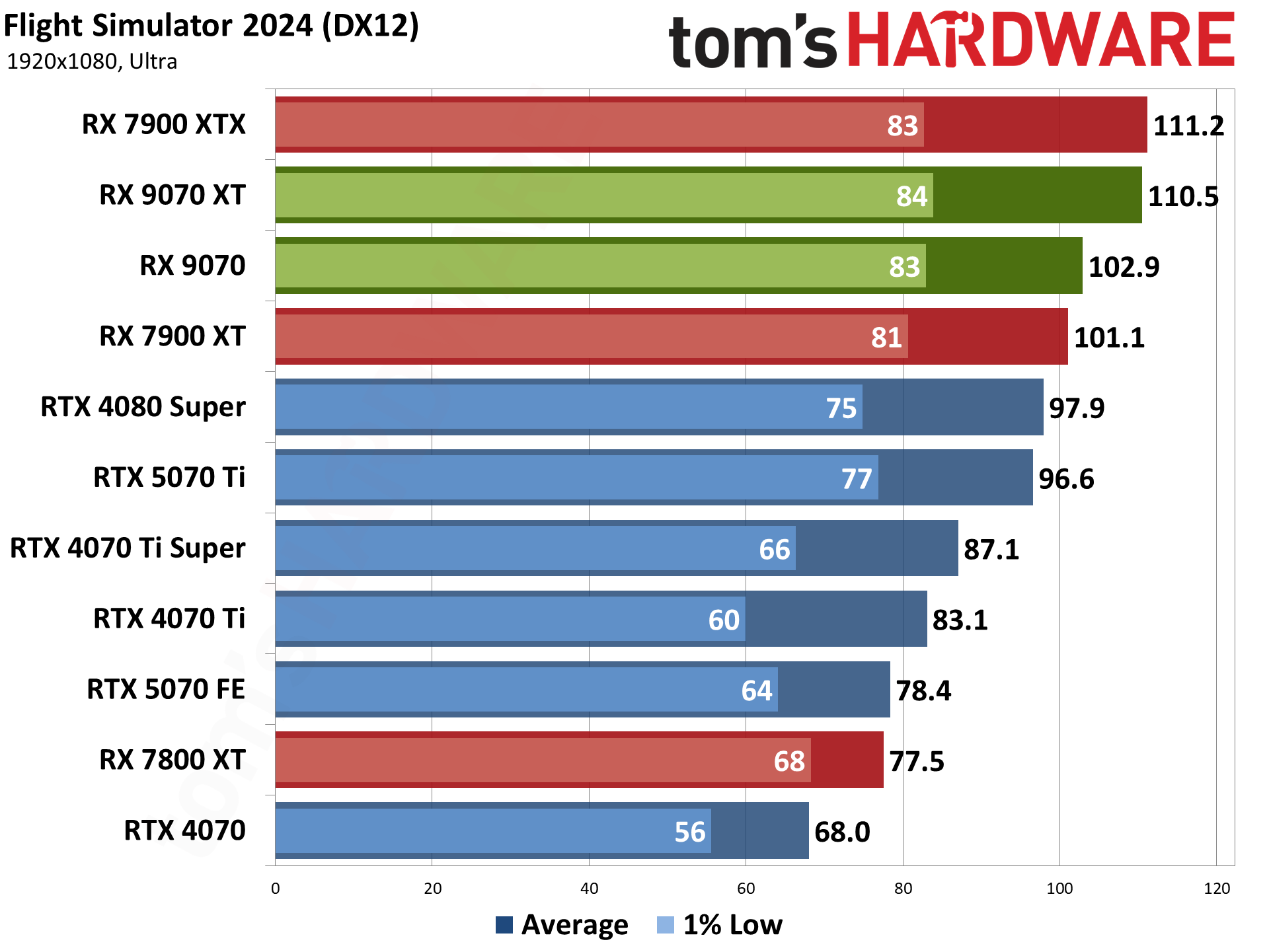

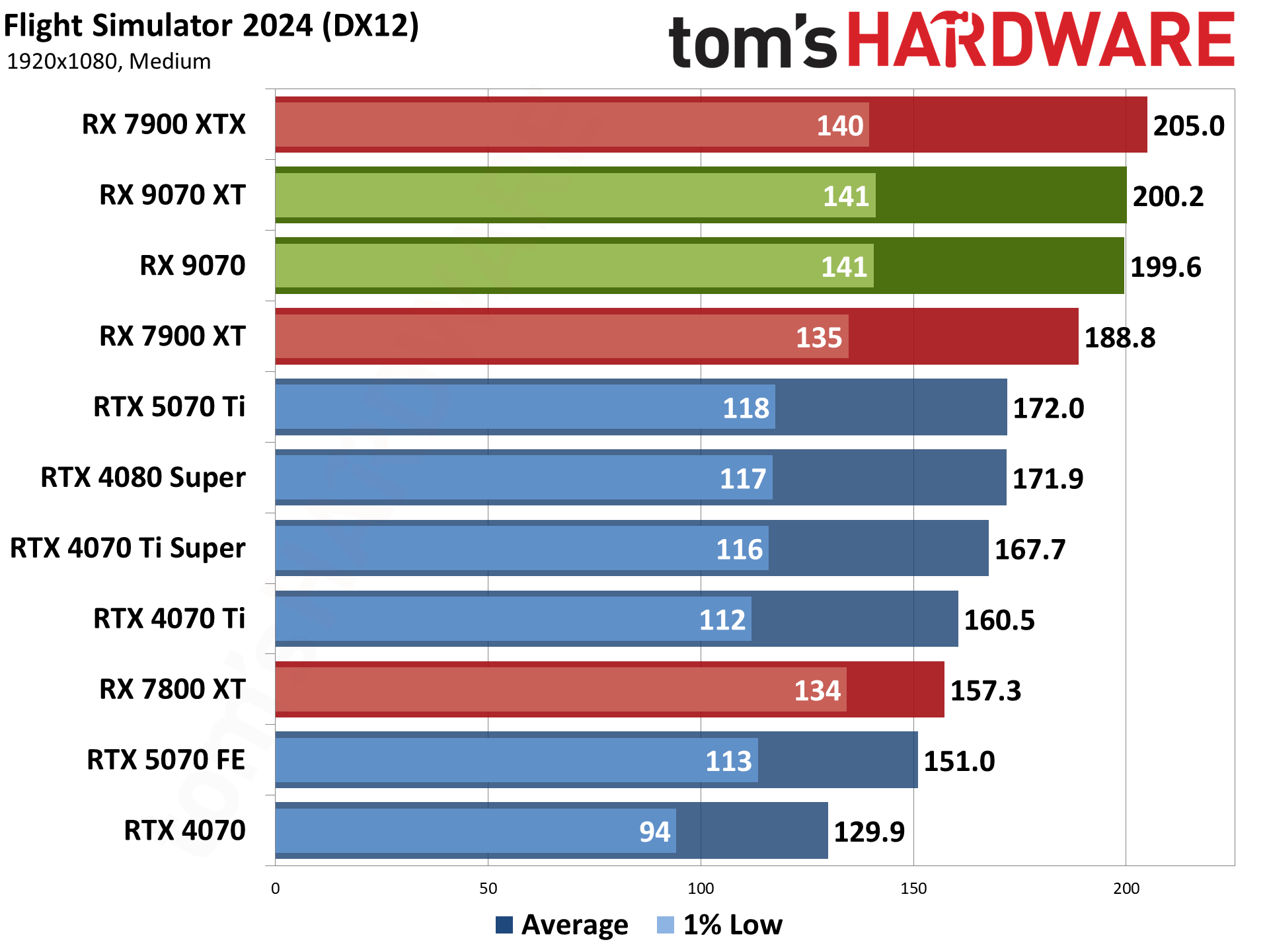

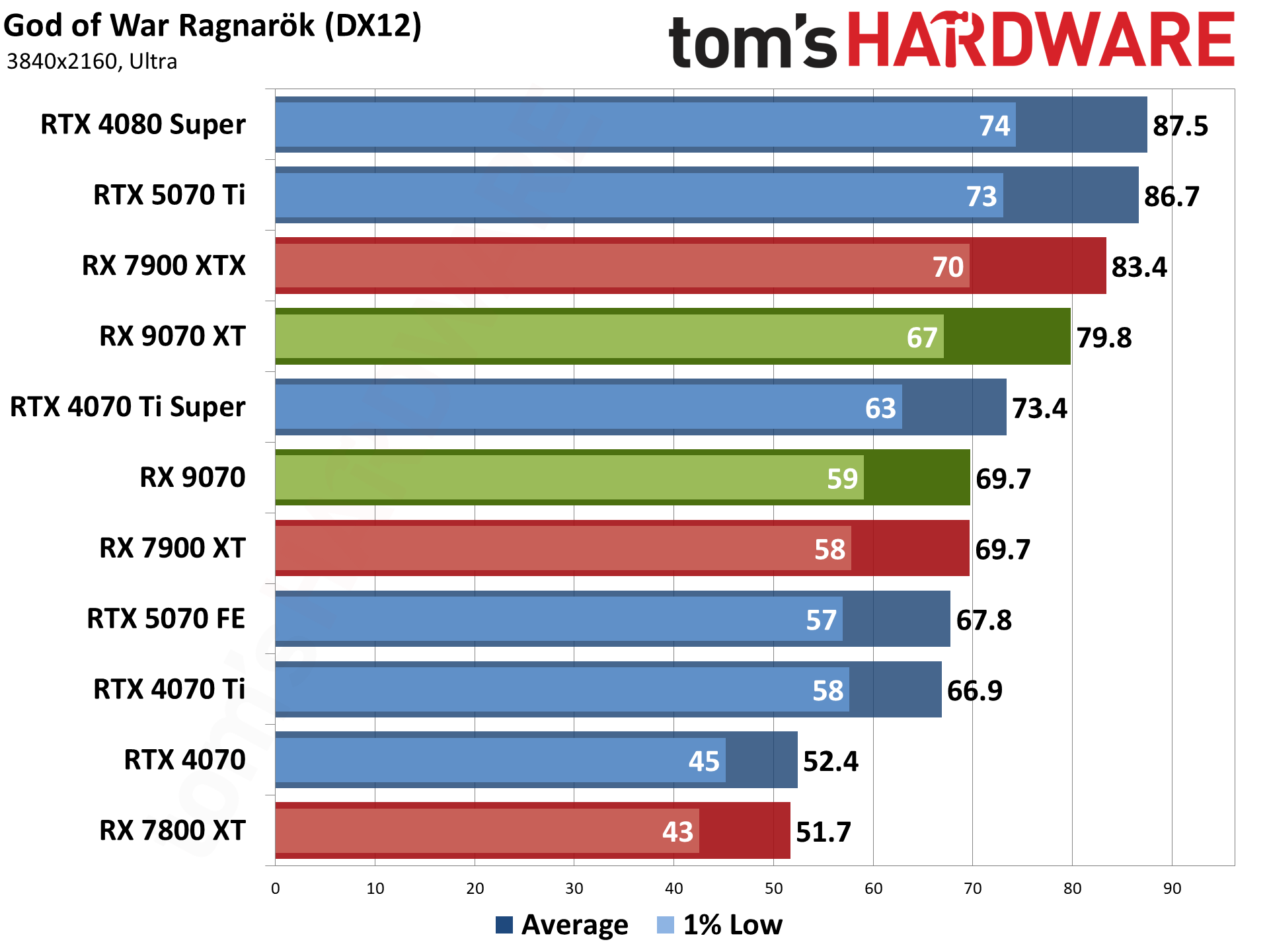

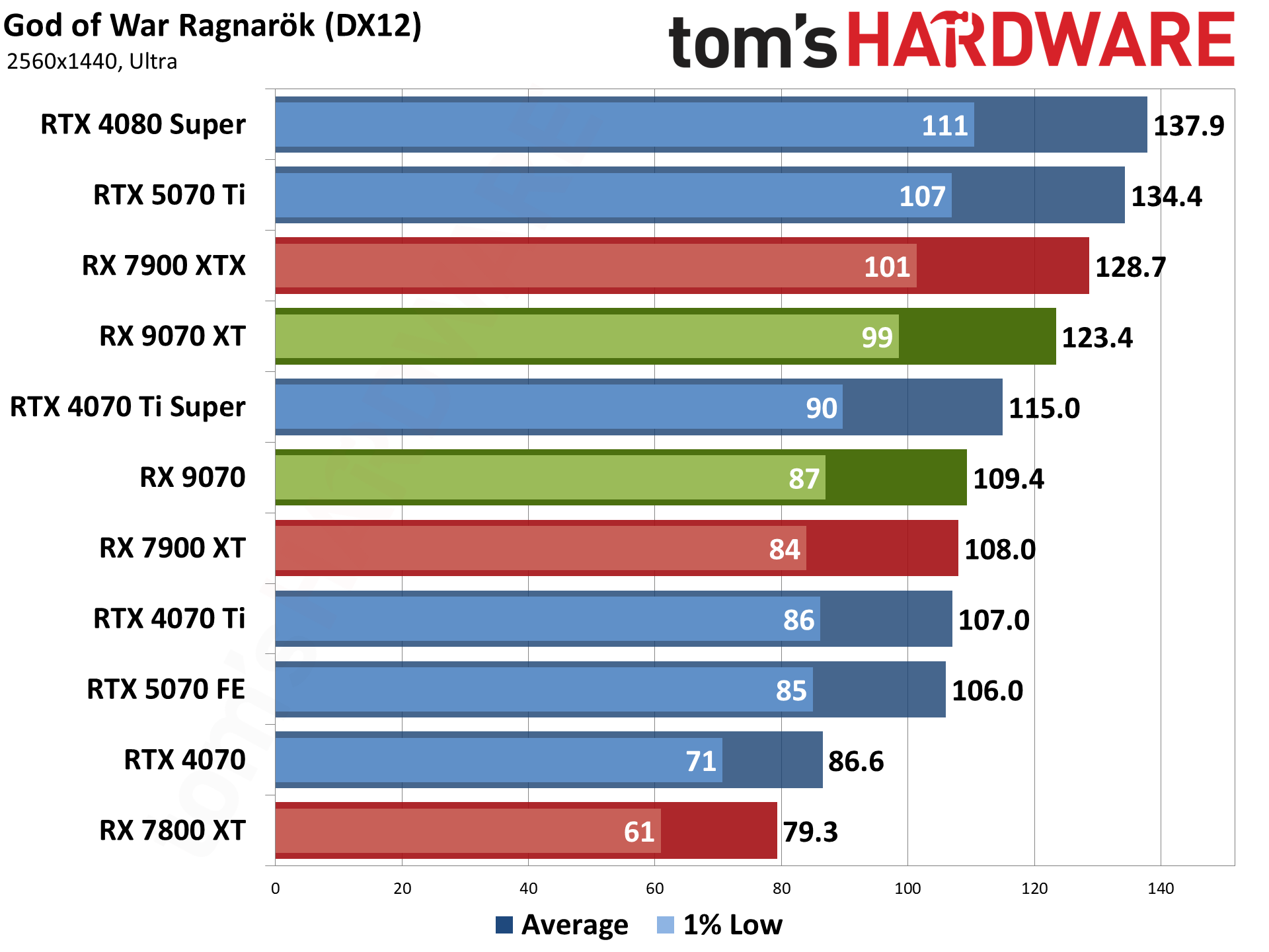

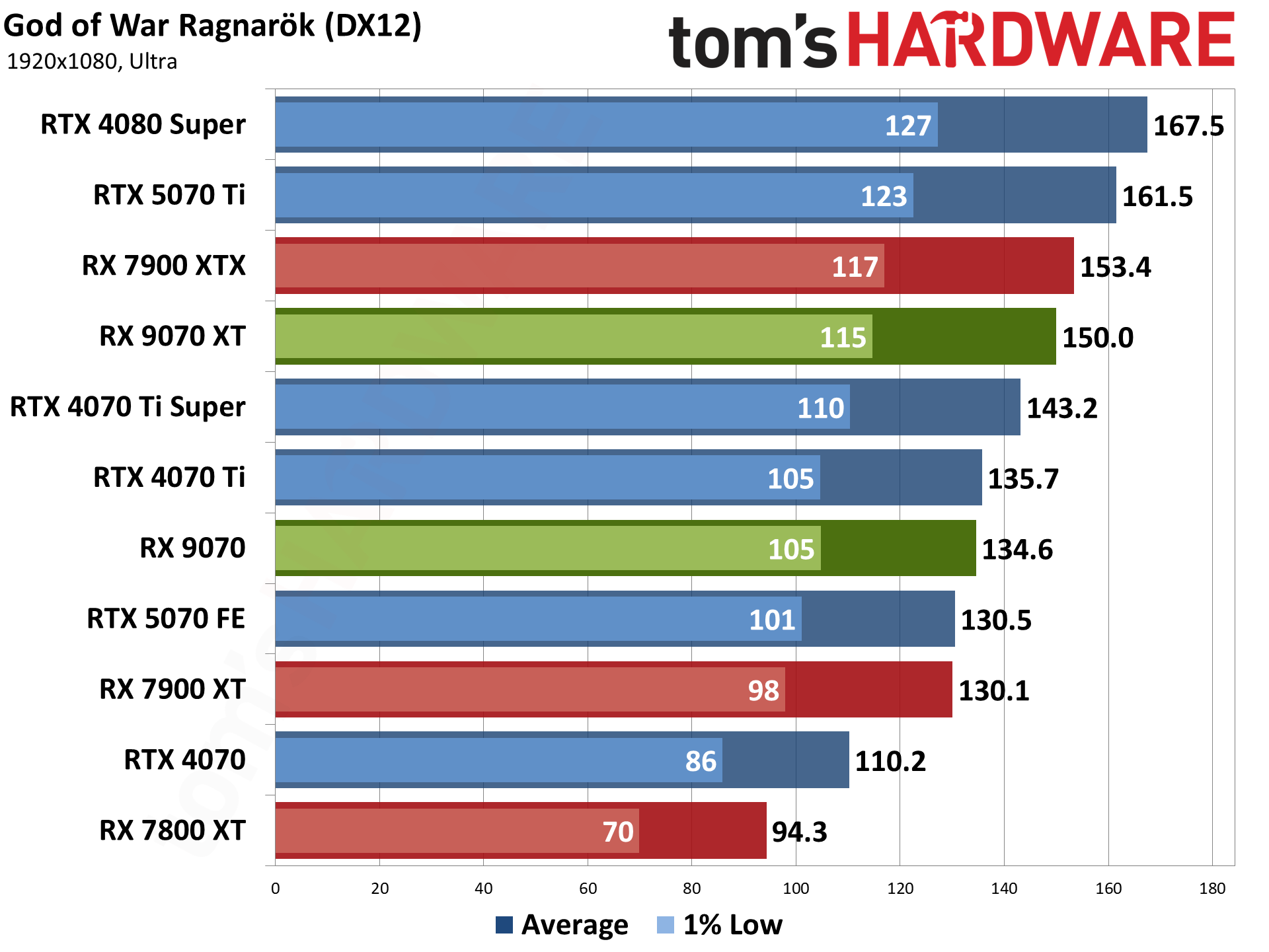

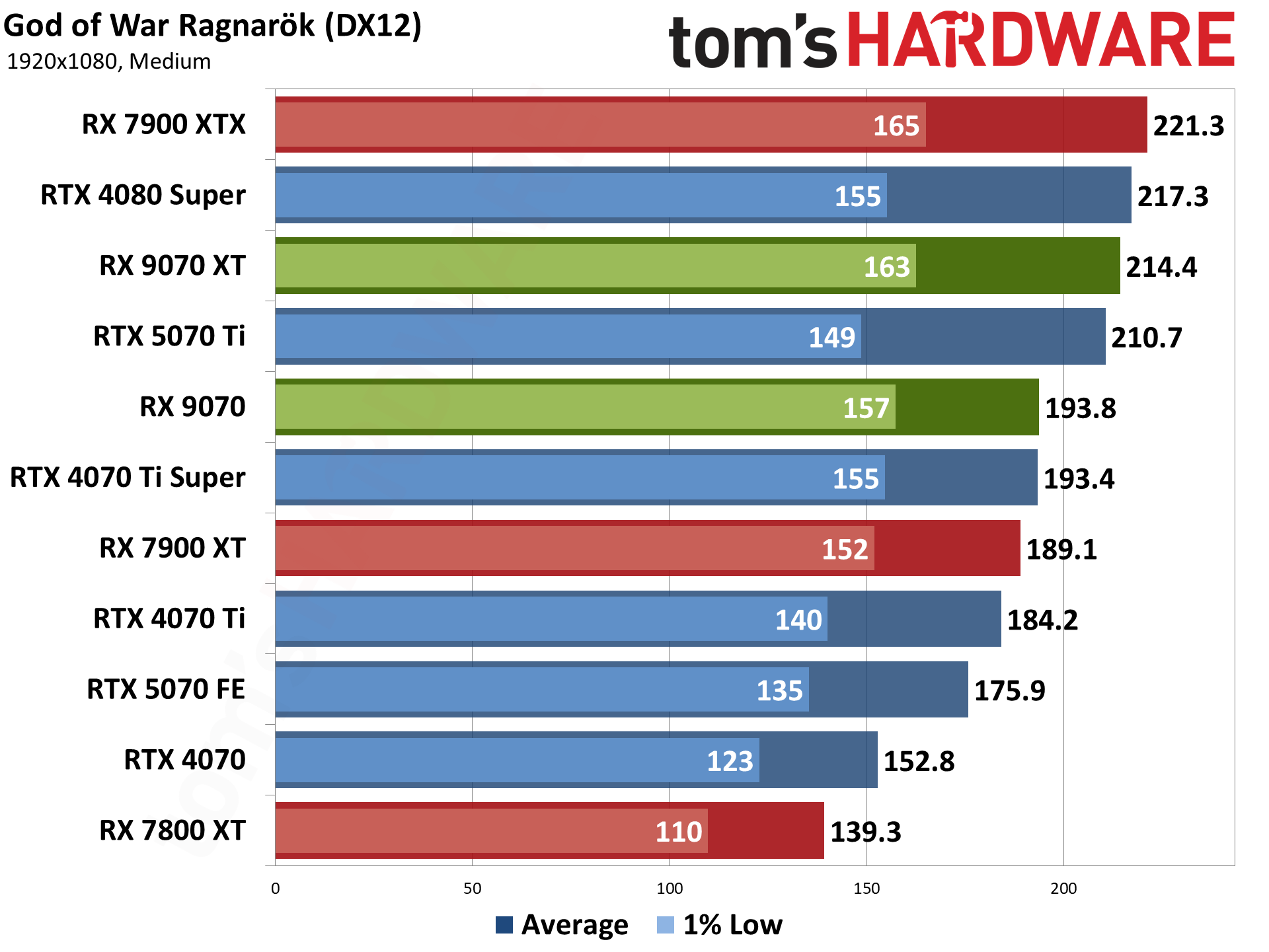

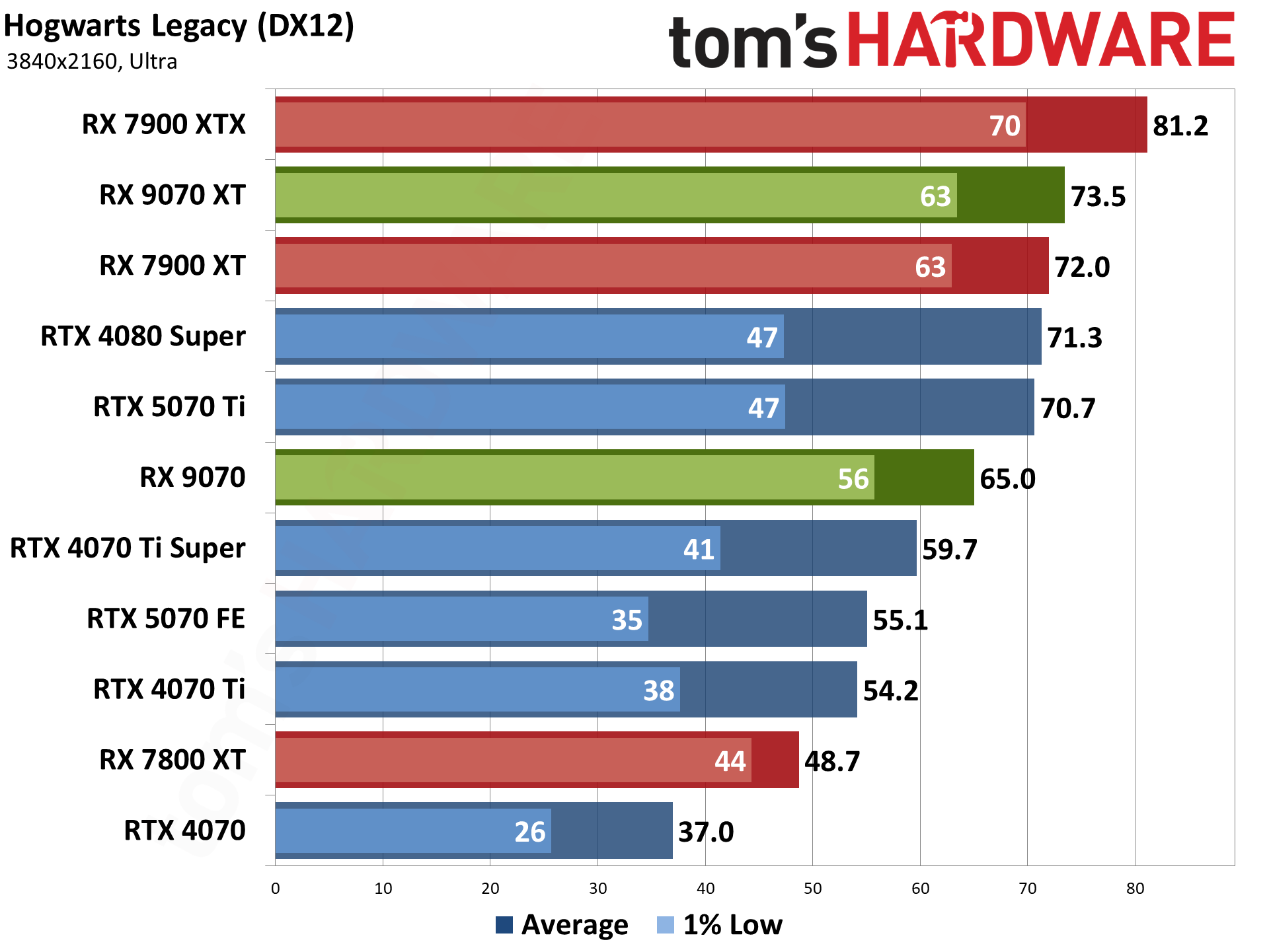

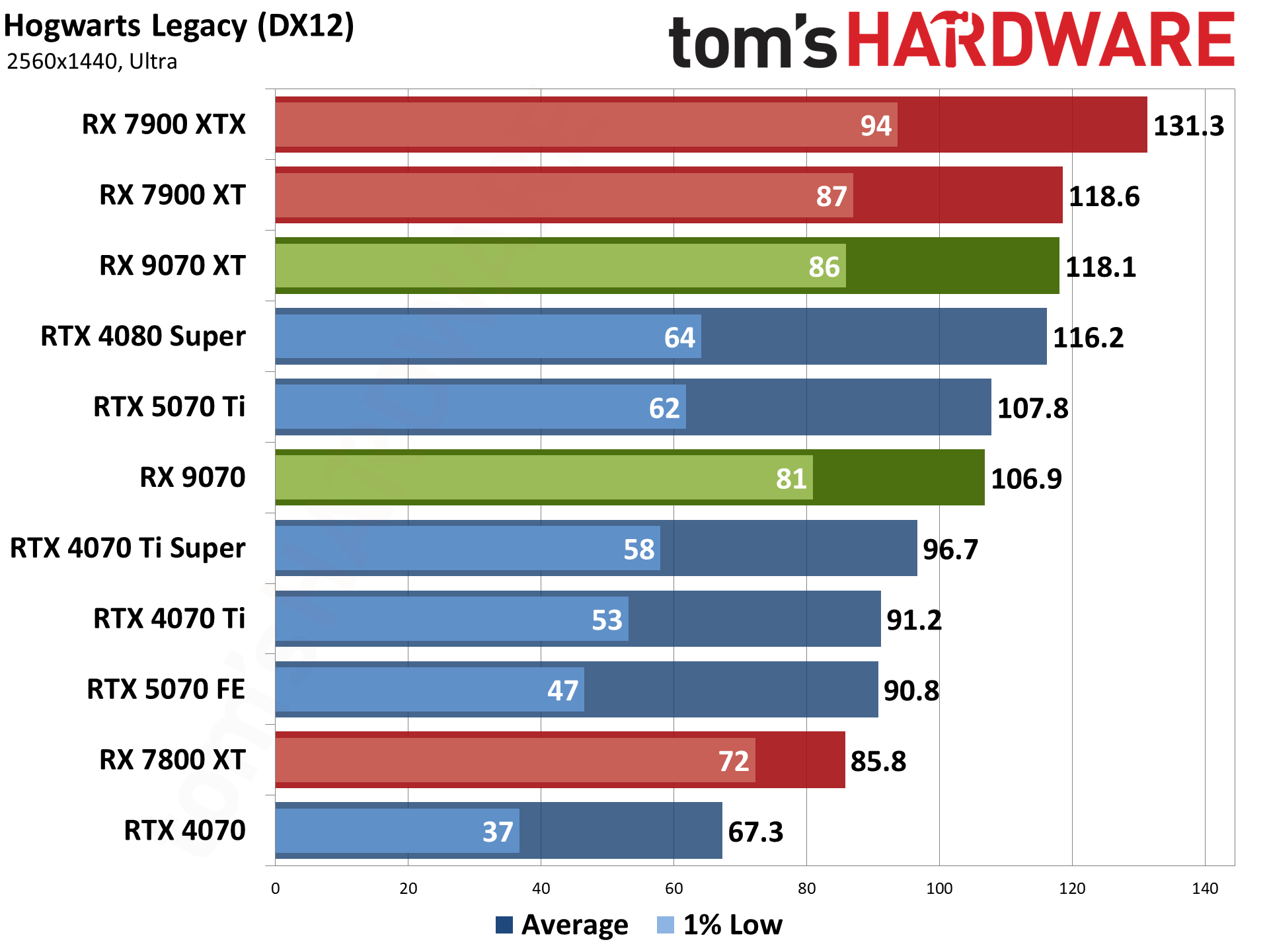

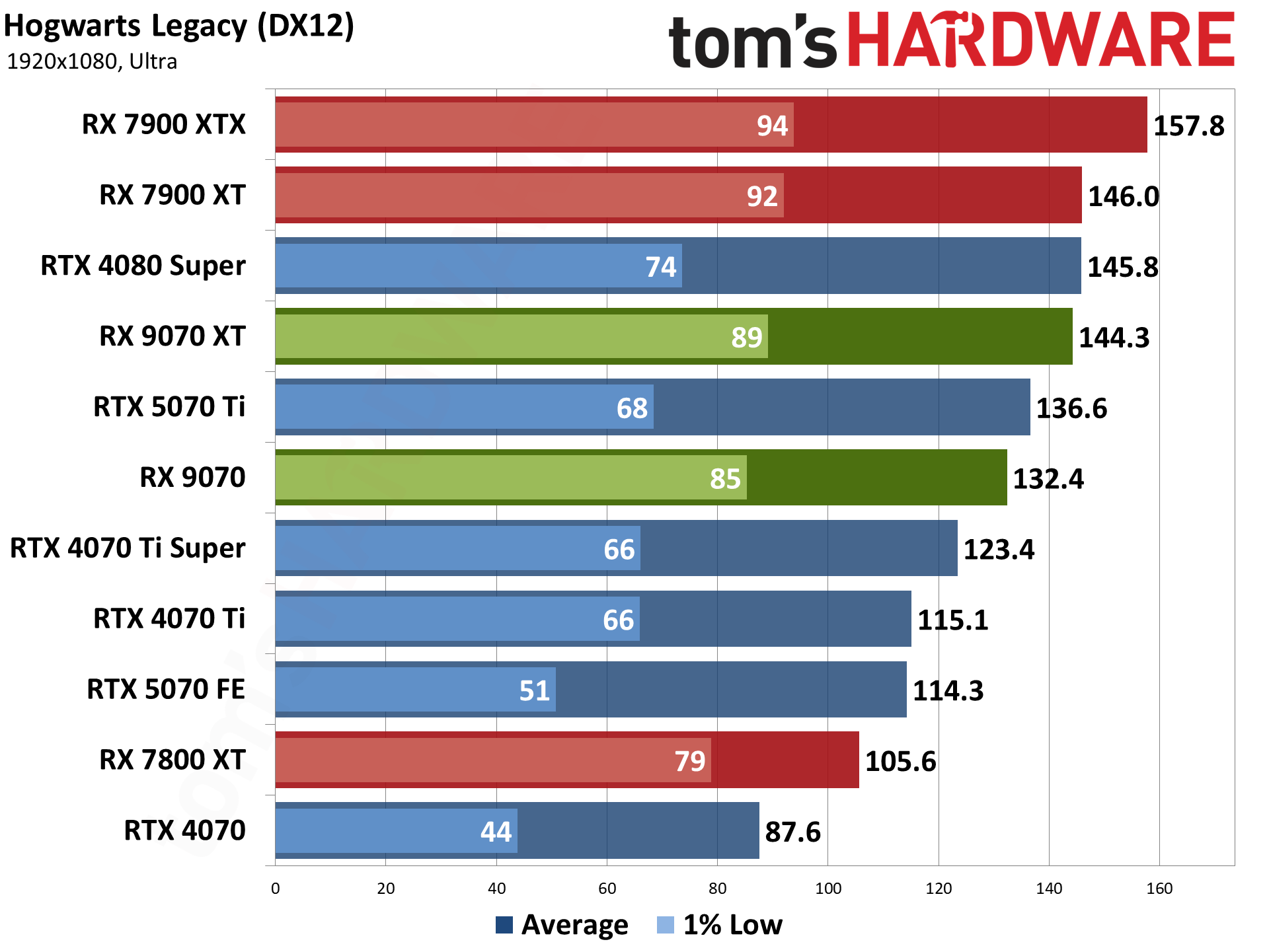

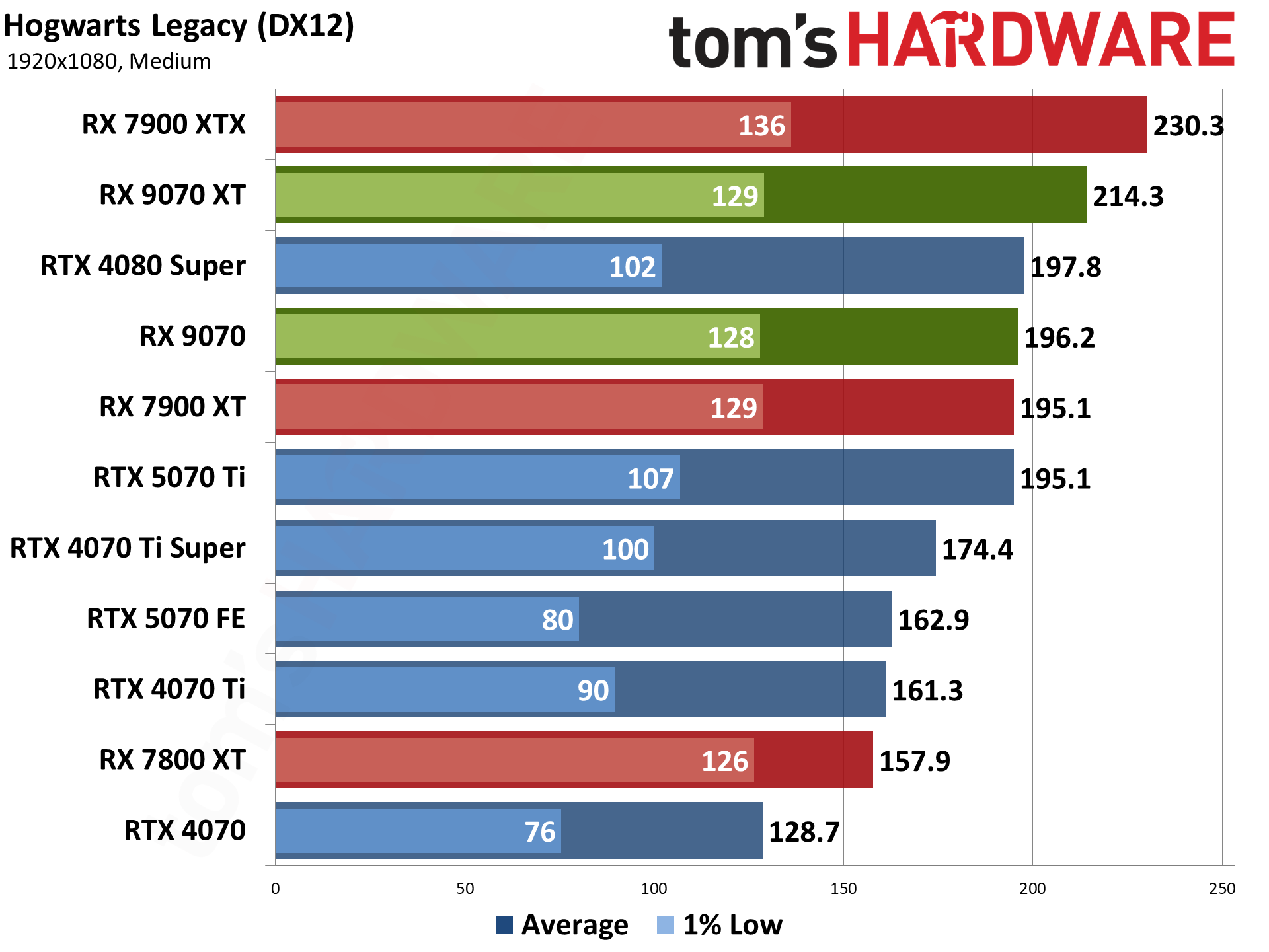

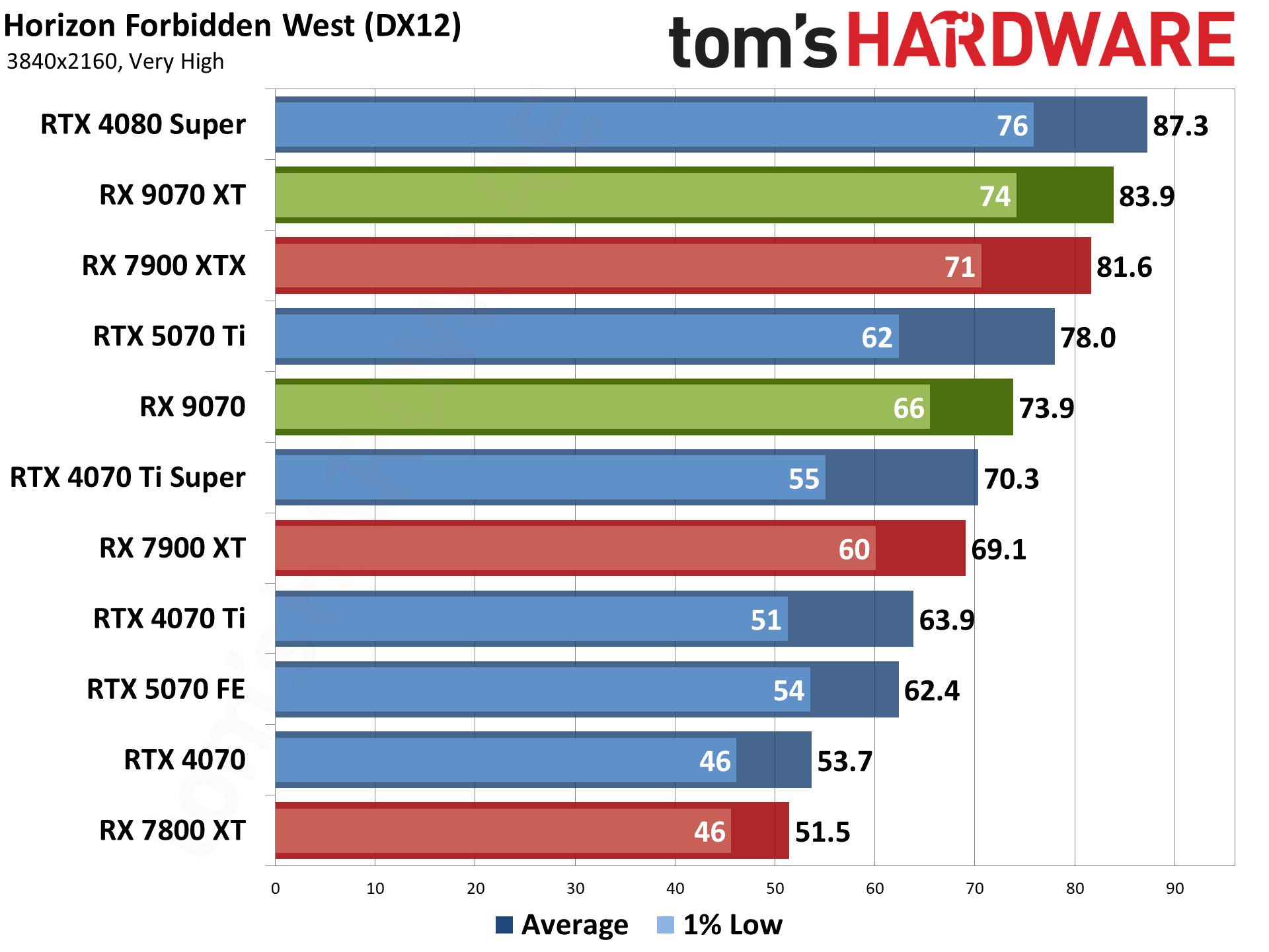

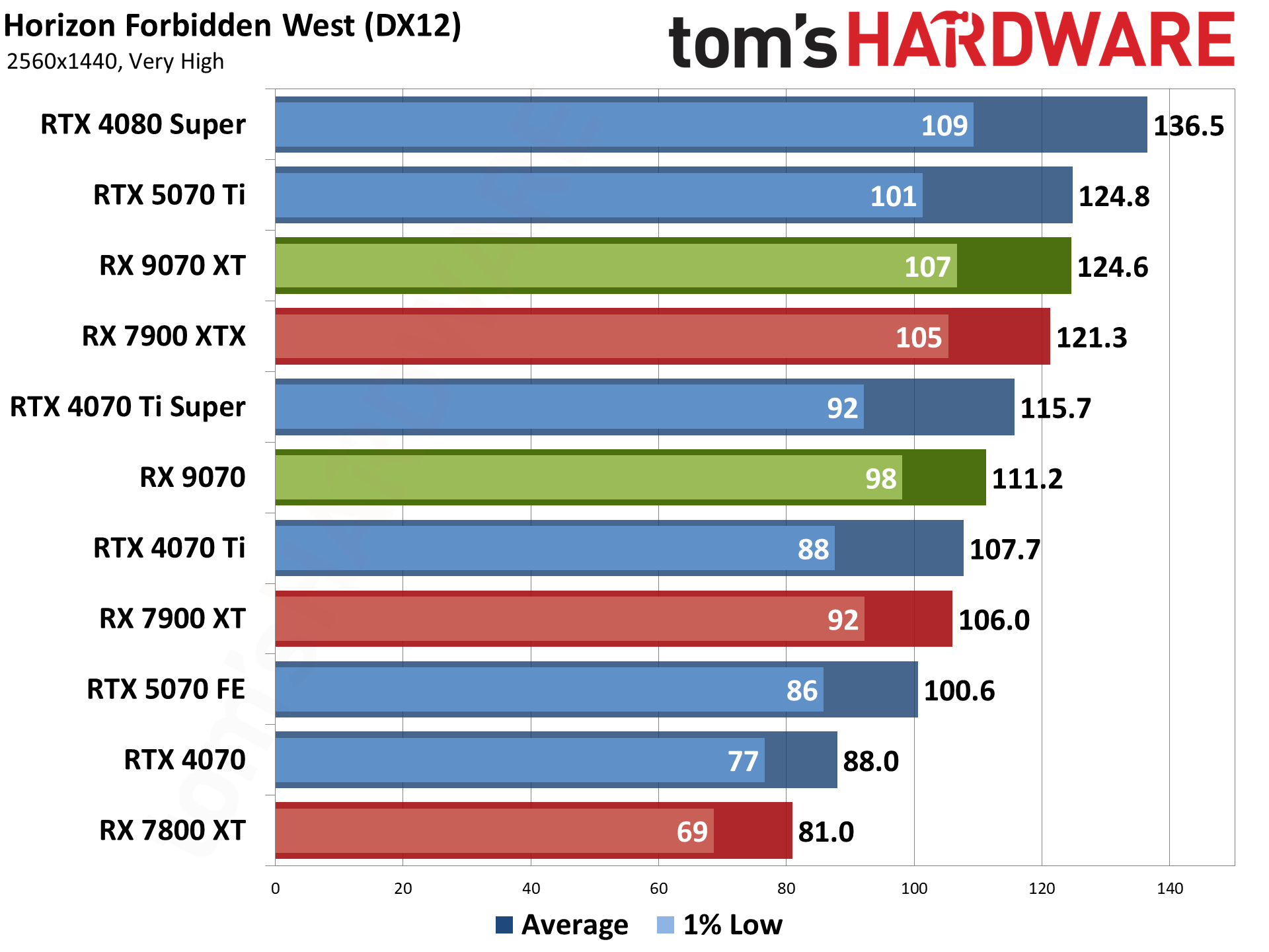

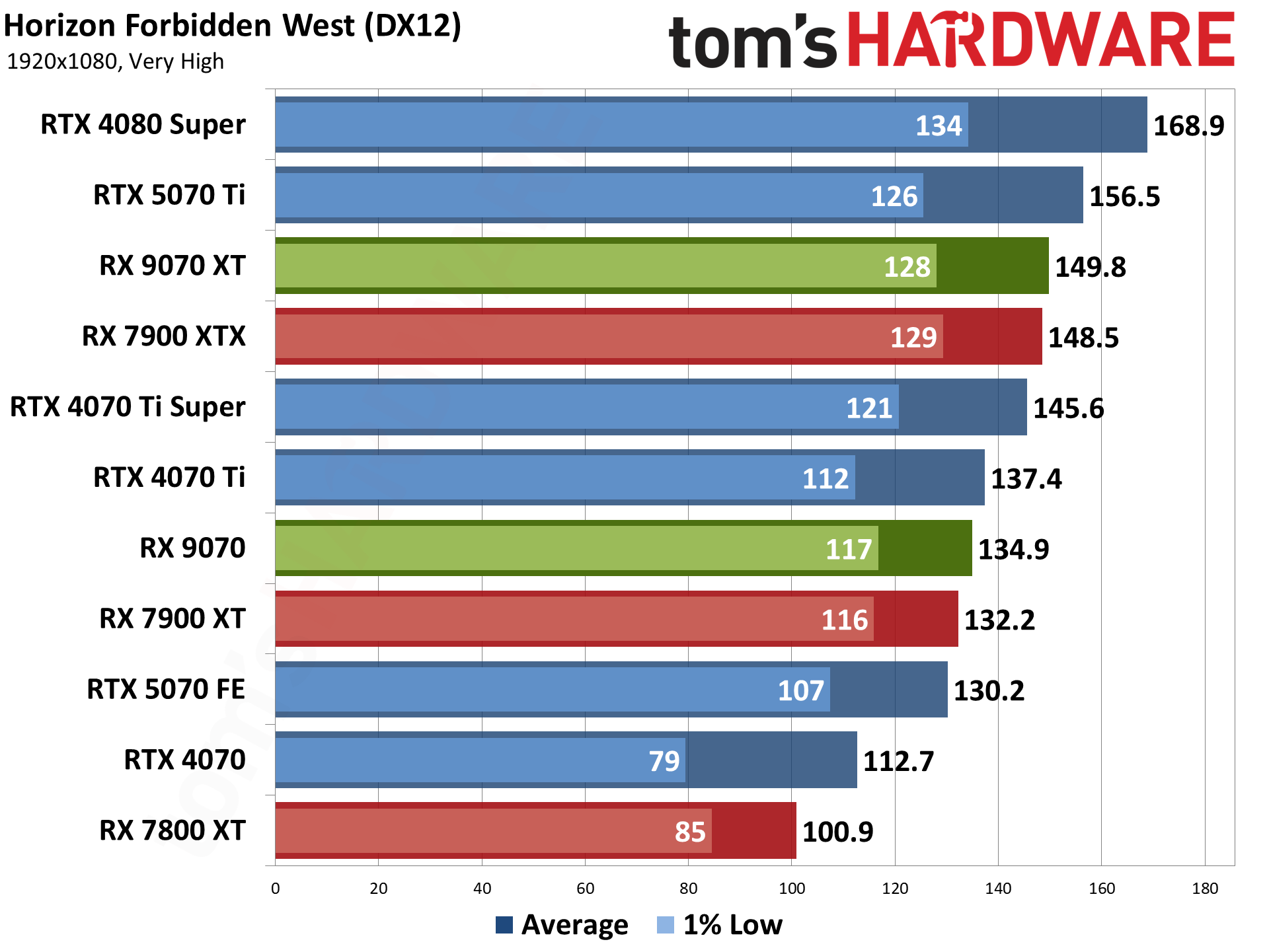

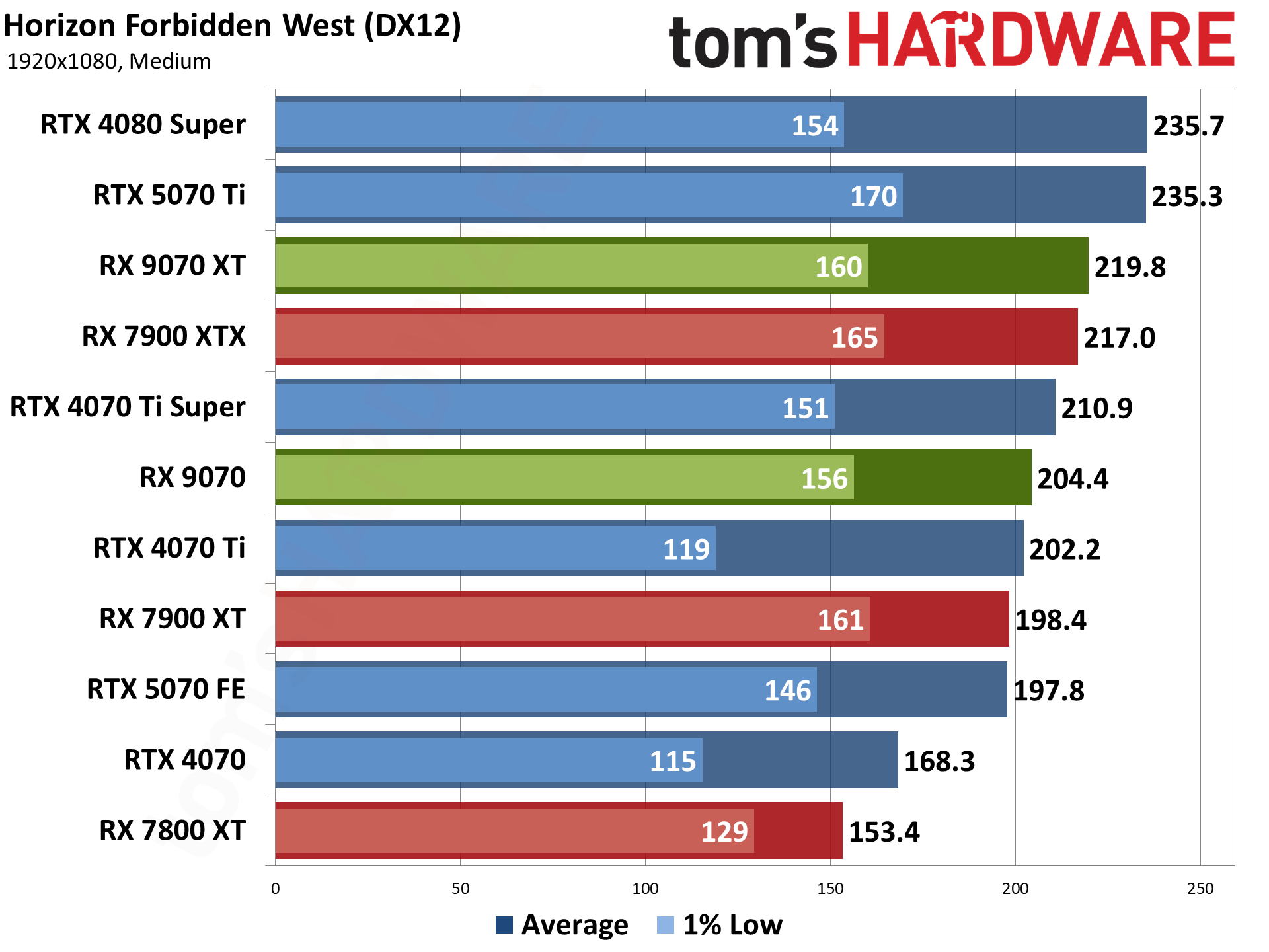

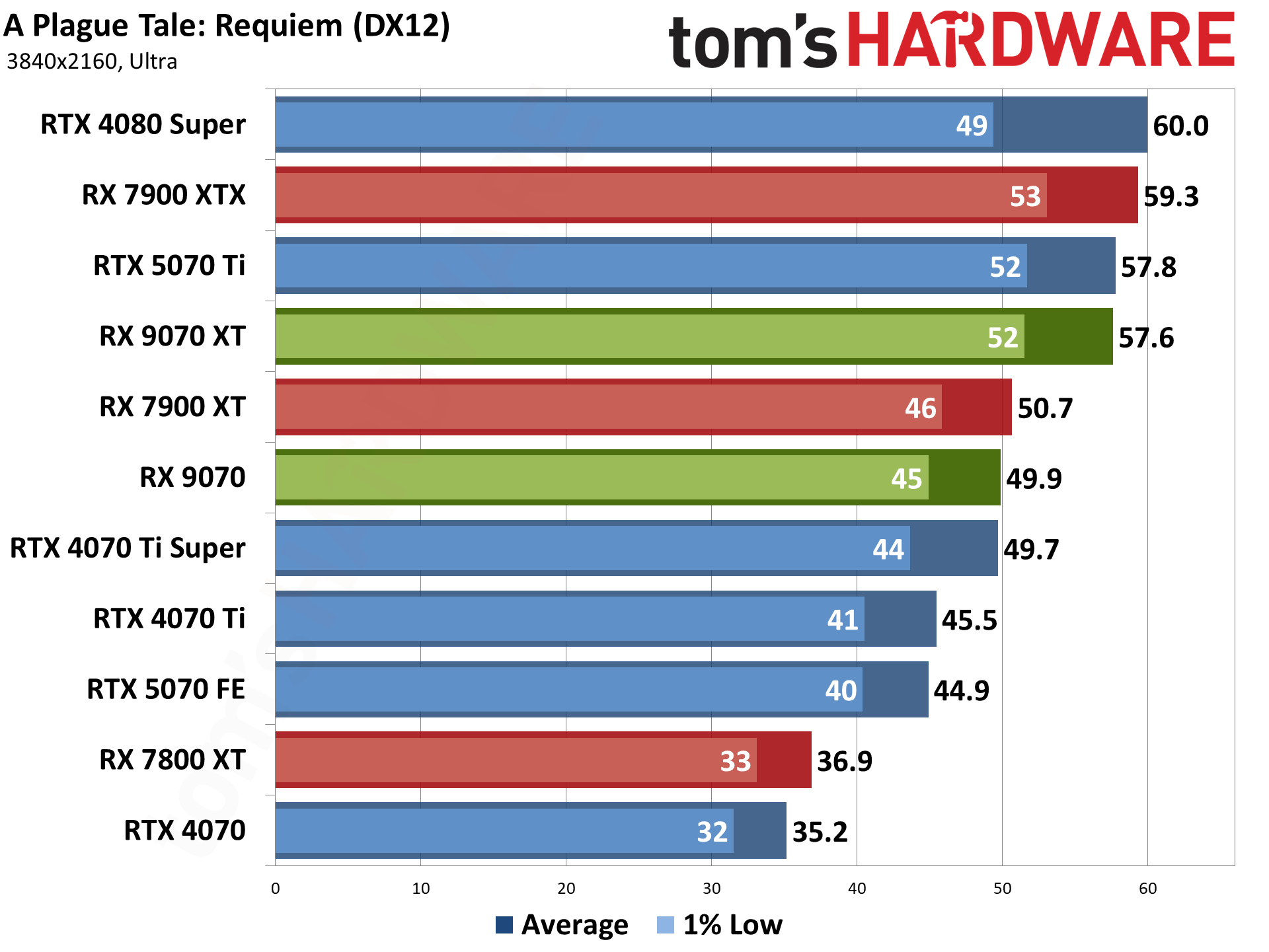

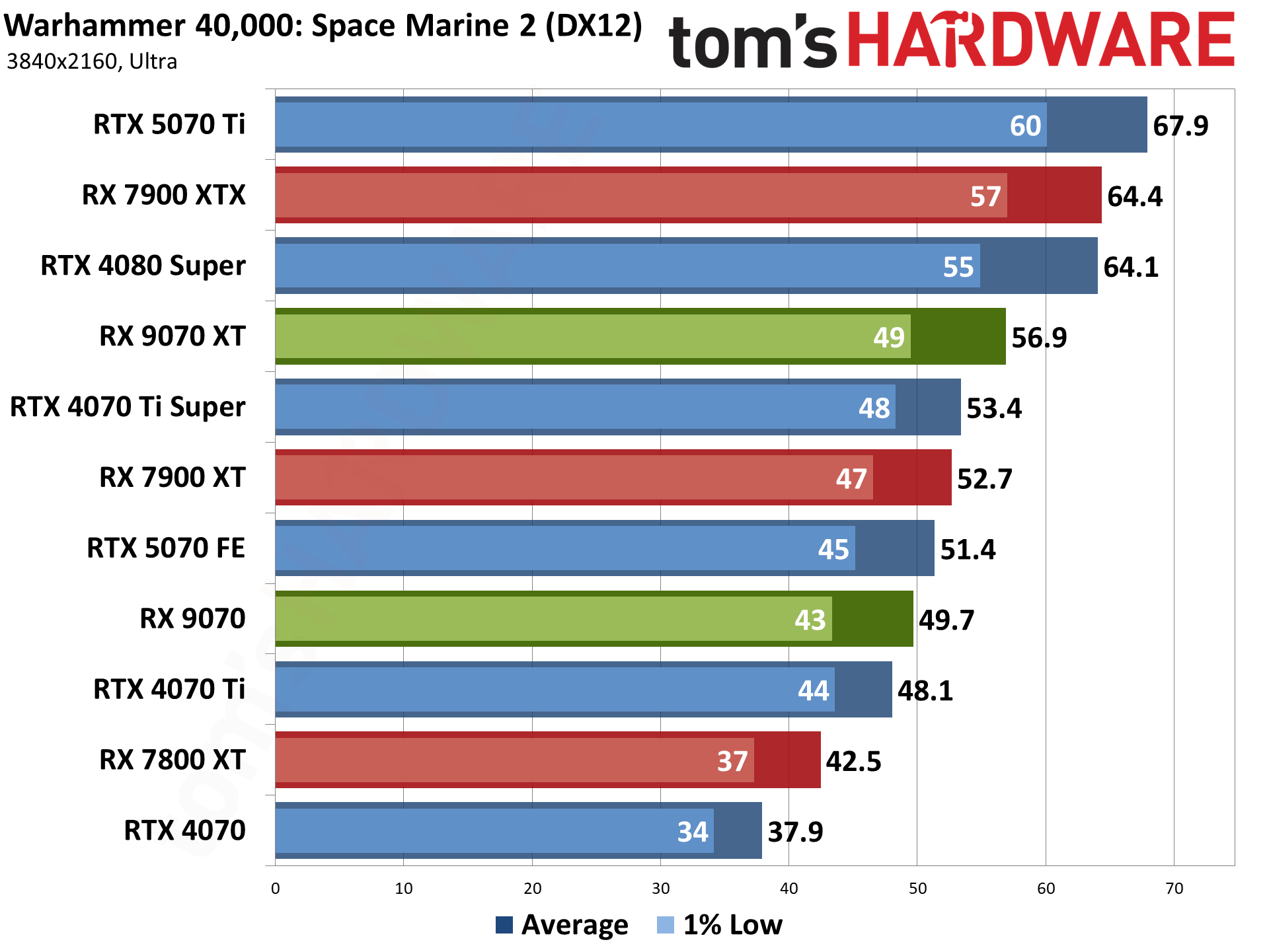

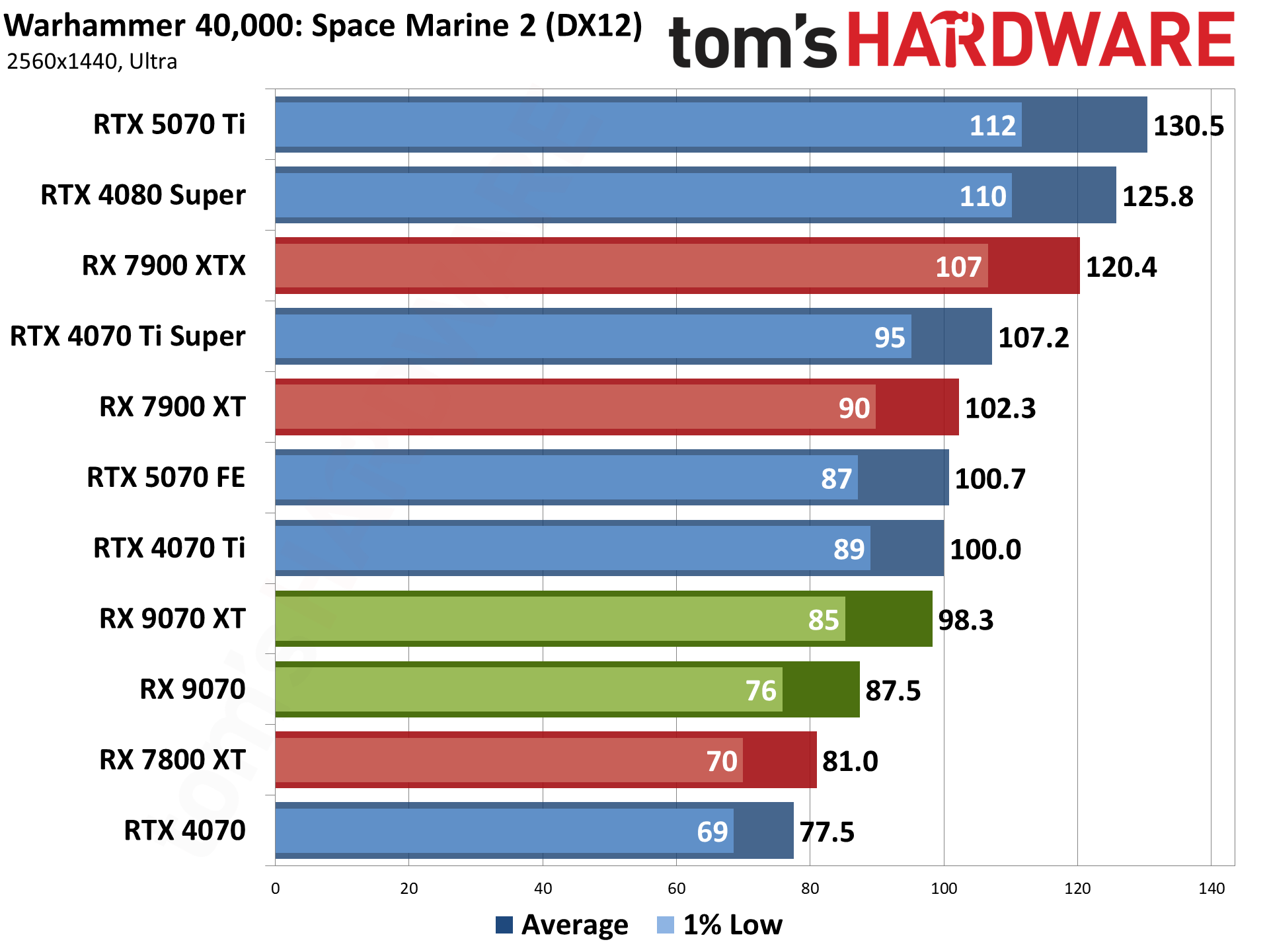

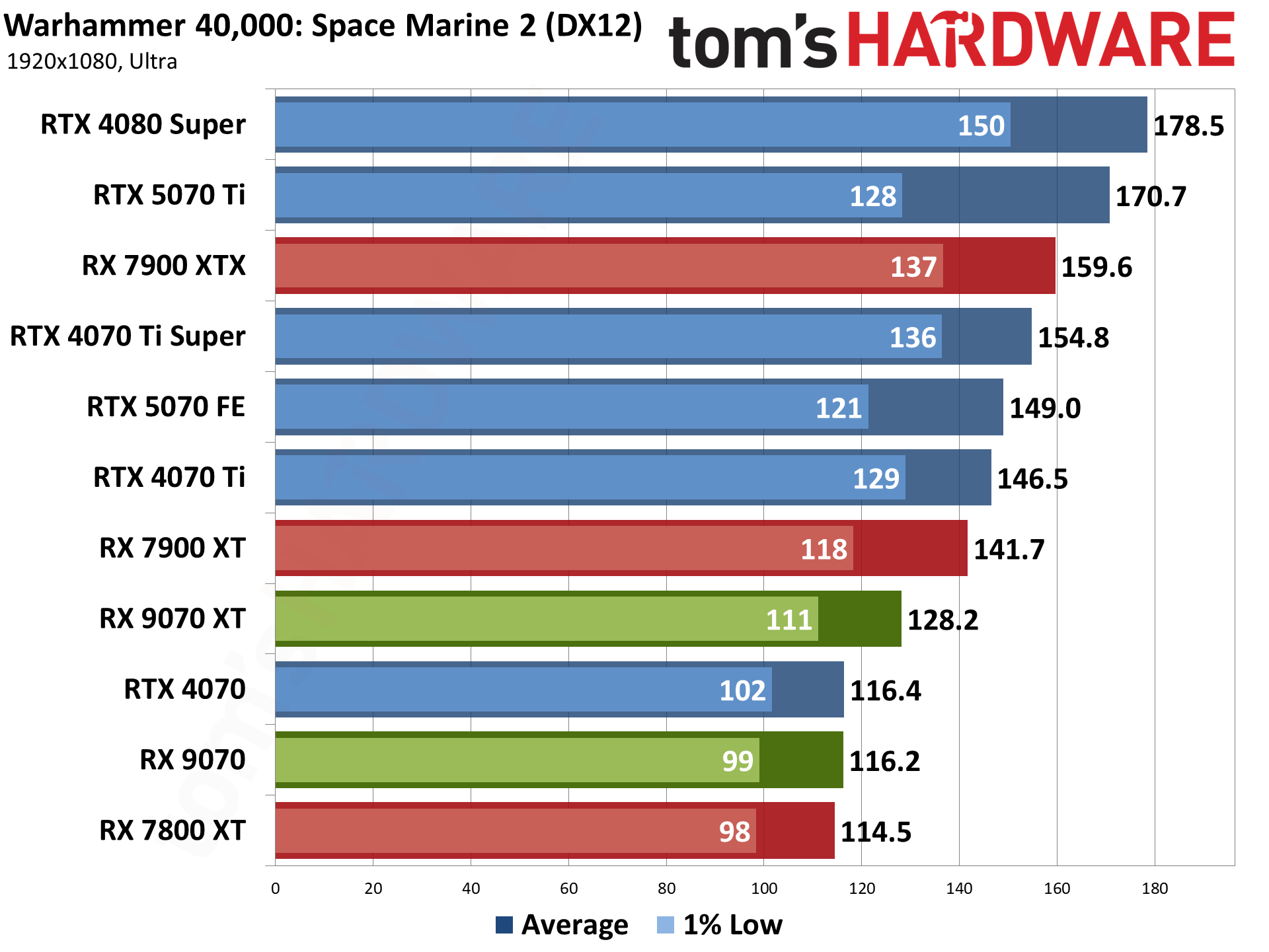

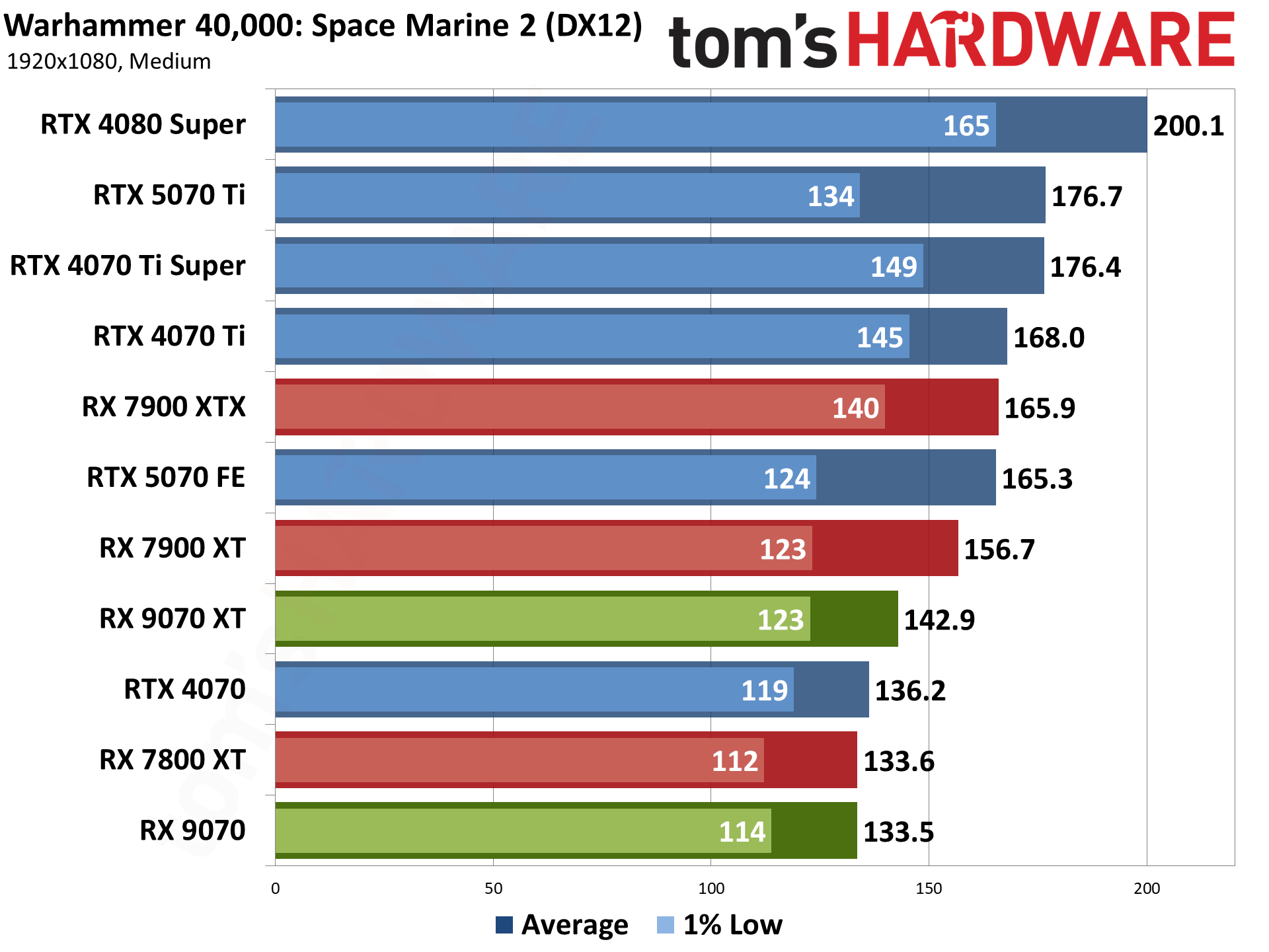

There are several important comparisons we want to look at. First is how the fastest RDNA 4 GPU, the 9070 XT, fares against the 7900 XTX. The answer: it's probably closer than you would expect based on the raw specs. The 7900 XTX wins by just 5% overall at 4K, and also by 5% at 1440p and 1080p ultra, with a slightly smaller 3% lead at 1080p medium, where CPU bottlenecks become a bigger factor. The 9070 XT is also consistently 5 to 10 percent faster than the RX 7900 XT, so it delivers more performance than the prior generation's nominally $750 part at a price of $600.
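The "X% faster" figures are ratios of geomean framerates. Here is a quick sketch using hypothetical geomean values chosen only to produce a 5% gap; it shows how the lead is computed and why it isn't symmetric.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """Percent by which card A's geomean FPS exceeds card B's (negative if A is slower)."""
    return (fps_a / fps_b - 1.0) * 100.0

# Hypothetical geomean framerates, chosen only to illustrate a 5% gap.
fps_7900_xtx = 63.0
fps_9070_xt = 60.0

print(f"7900 XTX vs 9070 XT: {percent_faster(fps_7900_xtx, fps_9070_xt):+.1f}%")
print(f"9070 XT vs 7900 XTX: {percent_faster(fps_9070_xt, fps_7900_xtx):+.1f}%")
```

A 5% lead for the 7900 XTX means the 9070 XT is about 4.8% slower, not 5%. The difference is small at these margins but grows with larger gaps.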

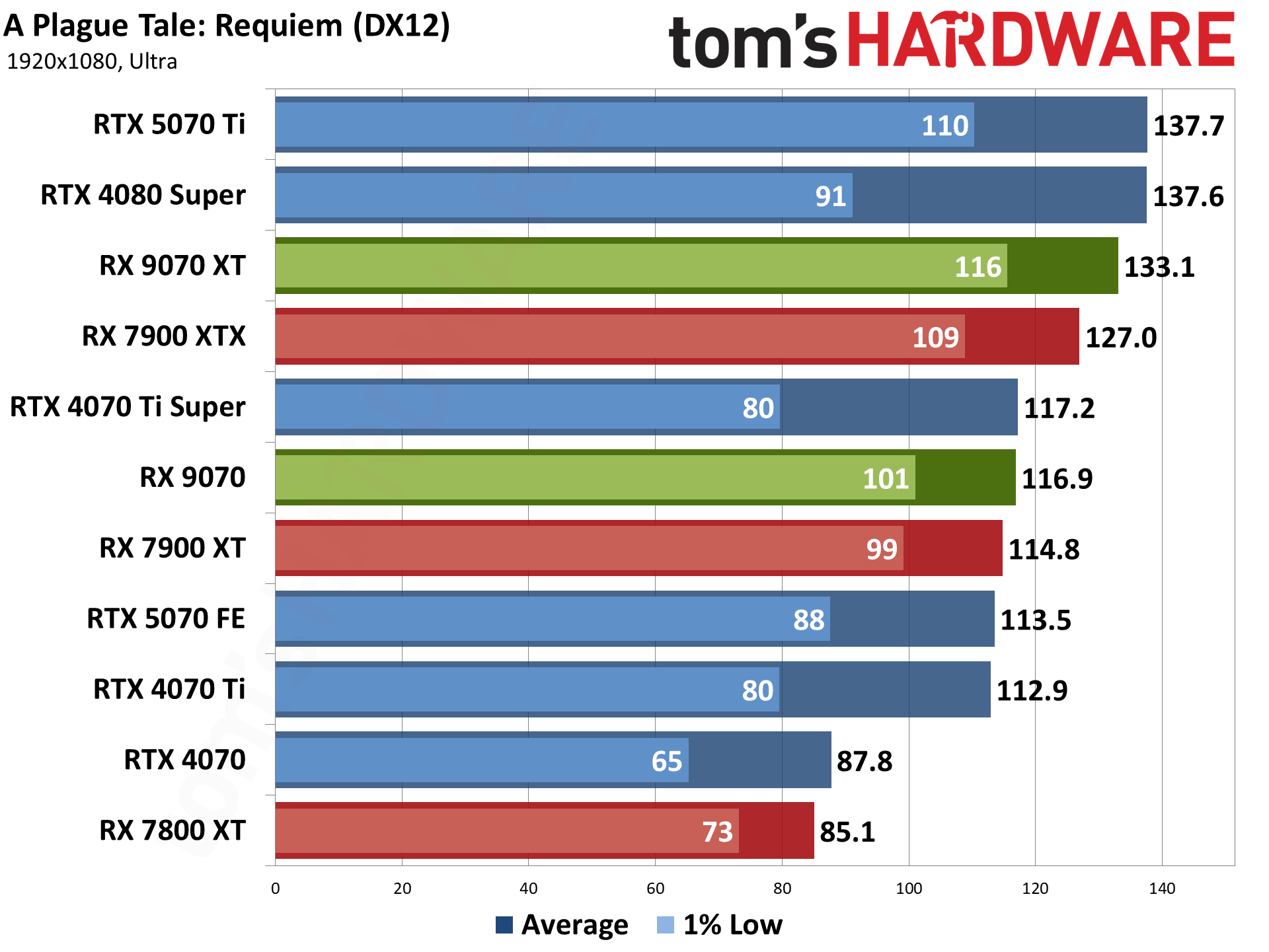

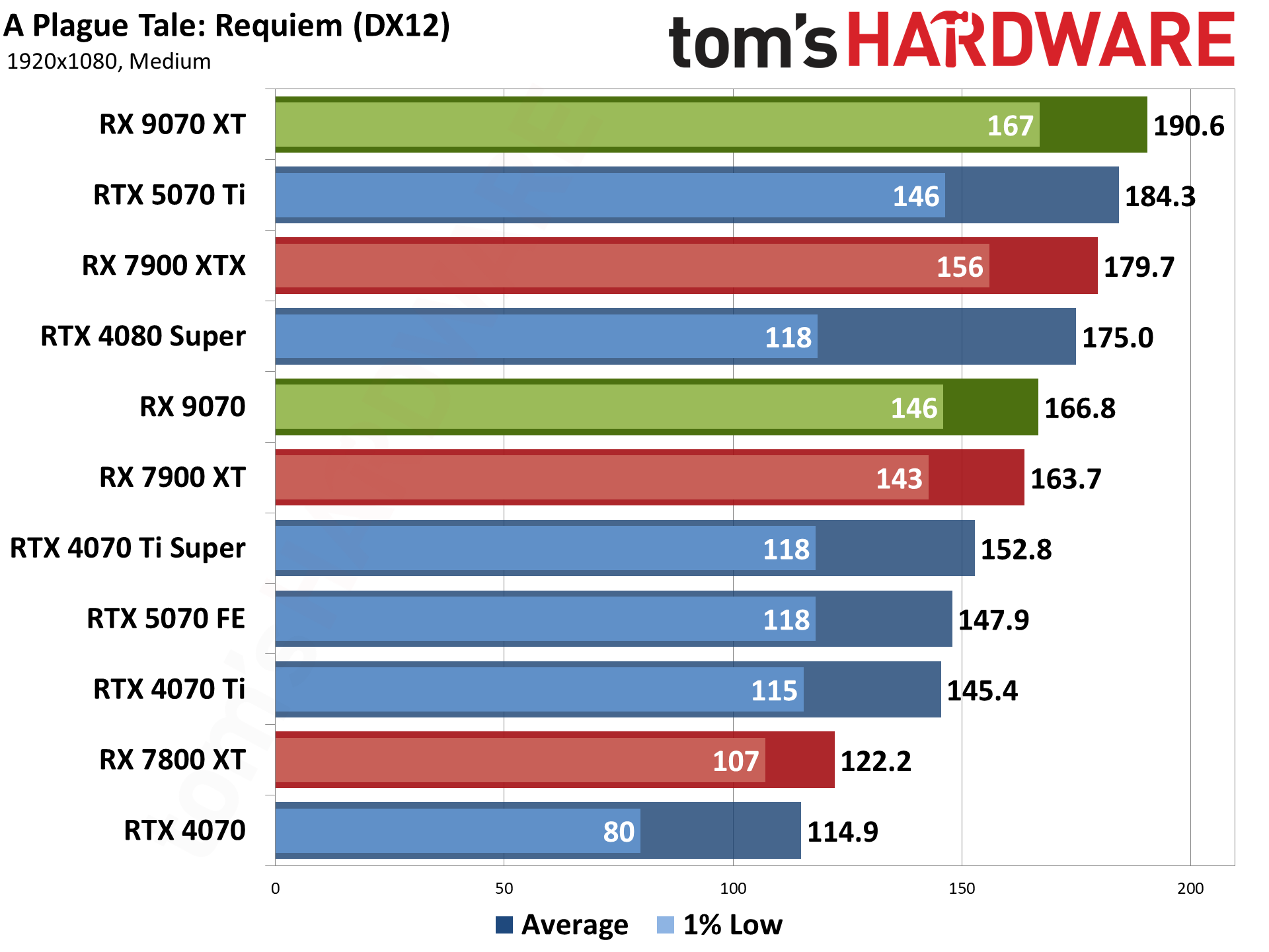

Next up, let's look at the 9070 XT versus the 9070. The XT costs just $50 more, a 9% price increase, and has a theoretical 35% advantage in raw compute. However, that raw compute figure assumes the GPUs are running at their boost clocks, which isn't always the case. The vanilla 9070 tends to exceed its boost clock in many of our tests, particularly at lower resolutions... but the same goes for the 9070 XT. Overall, the XT leads by 15% at 4K, 13% at 1440p, and 10%/8% at 1080p ultra/medium. That means that, as many surmised before today's review embargo lifted, the RX 9070 XT is the better value.
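The theoretical compute and price figures can be reproduced from the cards' specifications. This sketch assumes spec-sheet values that aren't listed in this section (64 CUs at a 2970 MHz boost for the 9070 XT, 56 CUs at 2520 MHz for the 9070) and treats raw compute as CU count times boost clock.

```python
# Assumed spec-sheet values (not listed in this section): compute units,
# boost clocks in MHz, and launch MSRPs in US dollars.
CARDS = {
    "RX 9070 XT": {"cus": 64, "boost_mhz": 2970, "msrp": 599},
    "RX 9070": {"cus": 56, "boost_mhz": 2520, "msrp": 549},
}

def raw_compute(card: dict) -> int:
    """Relative raw compute: CU count times boost clock.

    Assumes identical per-CU, per-clock throughput, a reasonable
    approximation for two cards built on the same RDNA 4 architecture.
    """
    return card["cus"] * card["boost_mhz"]

xt, vanilla = CARDS["RX 9070 XT"], CARDS["RX 9070"]
compute_gain = raw_compute(xt) / raw_compute(vanilla) - 1
price_gain = xt["msrp"] / vanilla["msrp"] - 1
print(f"Theoretical compute advantage: {compute_gain:.1%}")
print(f"Price increase: {price_gain:.1%}")
```

The result works out to roughly 35% more compute for about 9% more money. Because real clocks vary with load, the measured 8% to 15% gaps are the better guide to actual performance.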

But what a lot of people really want to know is how the AMD versus Nvidia matchup shakes out. Based on MSRPs, the RTX 5070 Ti should be the fastest of the new cards, and it is. However, its margin of victory is quite small considering the $150 price difference. We're talking low single-digit percentages in our rasterization tests: 0 to 4 percent across our suite, with the biggest lead of 4% coming at 1080p ultra. That's pretty surprising, considering the 5070 Ti has 40% more memory bandwidth thanks to GDDR7.
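The 40% bandwidth gap follows from bus width and memory data rate. This sketch assumes spec-sheet values that aren't stated in this section: a 256-bit bus on both cards, 28 Gbps GDDR7 on the RTX 5070 Ti, and 20 Gbps GDDR6 on the RX 9070 XT.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin data rate (Gbps) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8

# Assumed spec-sheet values: 256-bit buses on both cards.
rtx_5070_ti = memory_bandwidth_gbs(256, 28.0)  # 28 Gbps GDDR7
rx_9070_xt = memory_bandwidth_gbs(256, 20.0)   # 20 Gbps GDDR6

print(f"RTX 5070 Ti: {rtx_5070_ti:.0f} GB/s, RX 9070 XT: {rx_9070_xt:.0f} GB/s")
print(f"5070 Ti bandwidth advantage: {rtx_5070_ti / rx_9070_xt - 1:.0%}")
```

Peak bandwidth ignores on-die caches and how each architecture uses its memory, so it is only a rough guide to gaming performance.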

That, of course, means the matchup between the RX 9070 XT and the RTX 5070 ends up being a relative blowout. For $50 more (on paper), the RX 9070 XT beats the RTX 5070 by 29% at 4K, 21% at 1440p, and 14 to 15 percent at 1080p. It's not even close. Only one game in our rasterization suite, Warhammer 40K: Space Marine 2, gives the 5070 a decent win at 1080p. That game seems to lack AMD driver optimizations, and even there the 9070 XT is still 11% faster at 4K ultra.
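Combining those leads with the two MSRPs gives a rough performance-per-dollar comparison. This is a minimal sketch that uses MSRPs only; street prices can change the answer considerably. The 1080p entry takes the lower end of the 14 to 15 percent range.

```python
# Relative performance of the RX 9070 XT vs. the RTX 5070 (from the results above).
# The 1080p entry uses the lower end of the 14-15% range.
LEADS = {"4K ultra": 1.29, "1440p ultra": 1.21, "1080p": 1.14}
PRICE_9070_XT = 599.0   # MSRP, US dollars
PRICE_RTX_5070 = 549.0  # MSRP, US dollars

def perf_per_dollar_ratio(rel_perf: float, price_a: float, price_b: float) -> float:
    """Card A's performance per dollar divided by card B's.

    rel_perf is A's performance divided by B's (1.29 means A is 29% faster).
    """
    return rel_perf * price_b / price_a

for setting, lead in LEADS.items():
    ratio = perf_per_dollar_ratio(lead, PRICE_9070_XT, PRICE_RTX_5070)
    print(f"{setting}: {ratio:.2f}x the RTX 5070's performance per dollar")
```

At MSRP, the RX 9070 XT offers roughly 18% more performance per dollar at 4K, about 11% at 1440p, and around 4% at 1080p.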

And finally, what about the RX 9070 versus the RTX 5070, both nominally priced at $549? If you're at all good at math and were paying attention above, you'll already know that the 9070 comes out ahead. It's 12% faster at 4K, 8% faster at 1440p, and 4%/7% faster at 1080p. There are five games where the 5070 manages any lead at all, with Space Marine 2 being the biggest margin of victory and the only one where the 5070 leads at 4K. In general, though, the RX 9070 is clearly better for rasterization performance at native resolution.

Below are the 16 rasterization game results, in alphabetical order, with short notes on the testing where something worth pointing out is present.

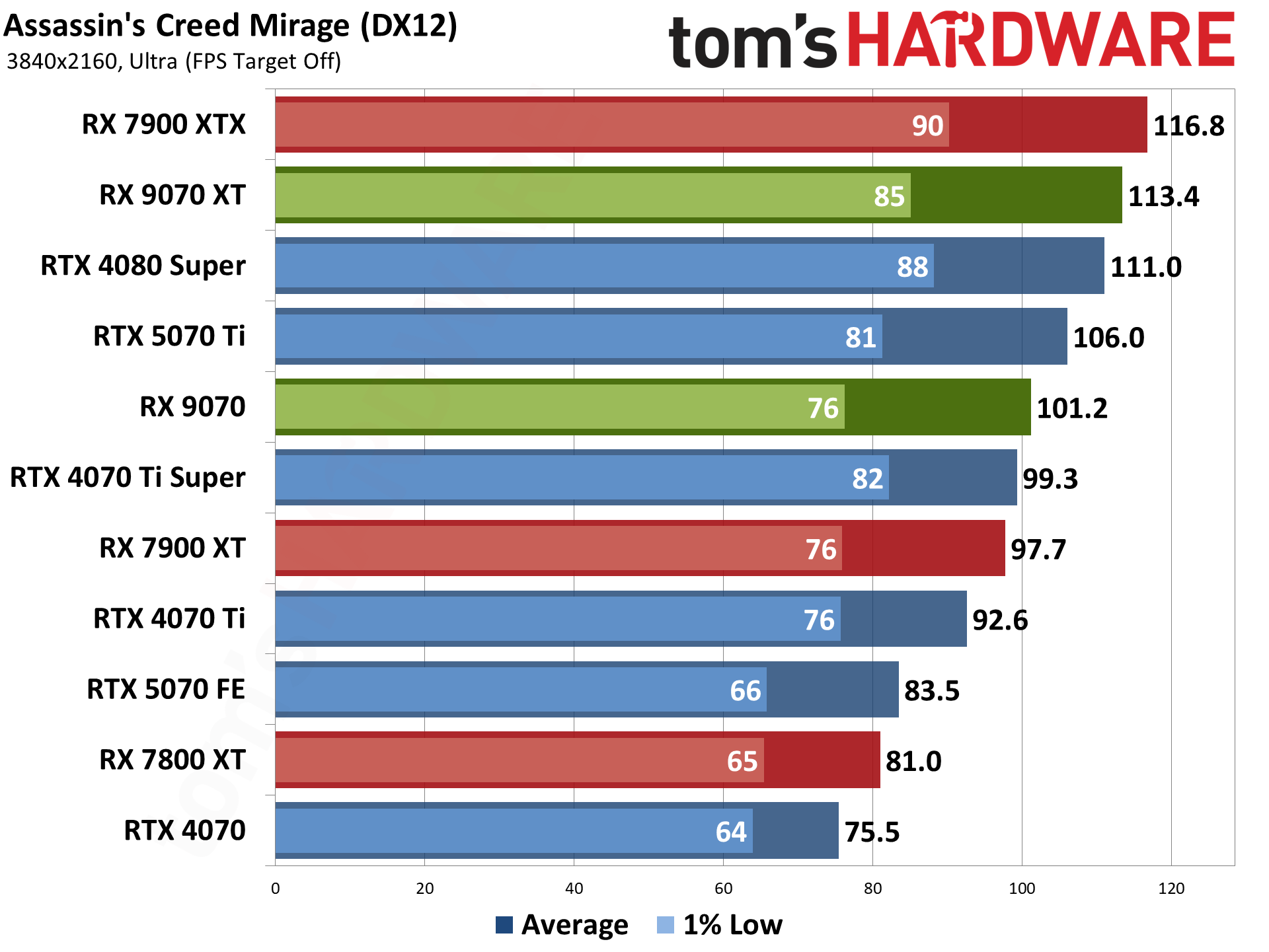

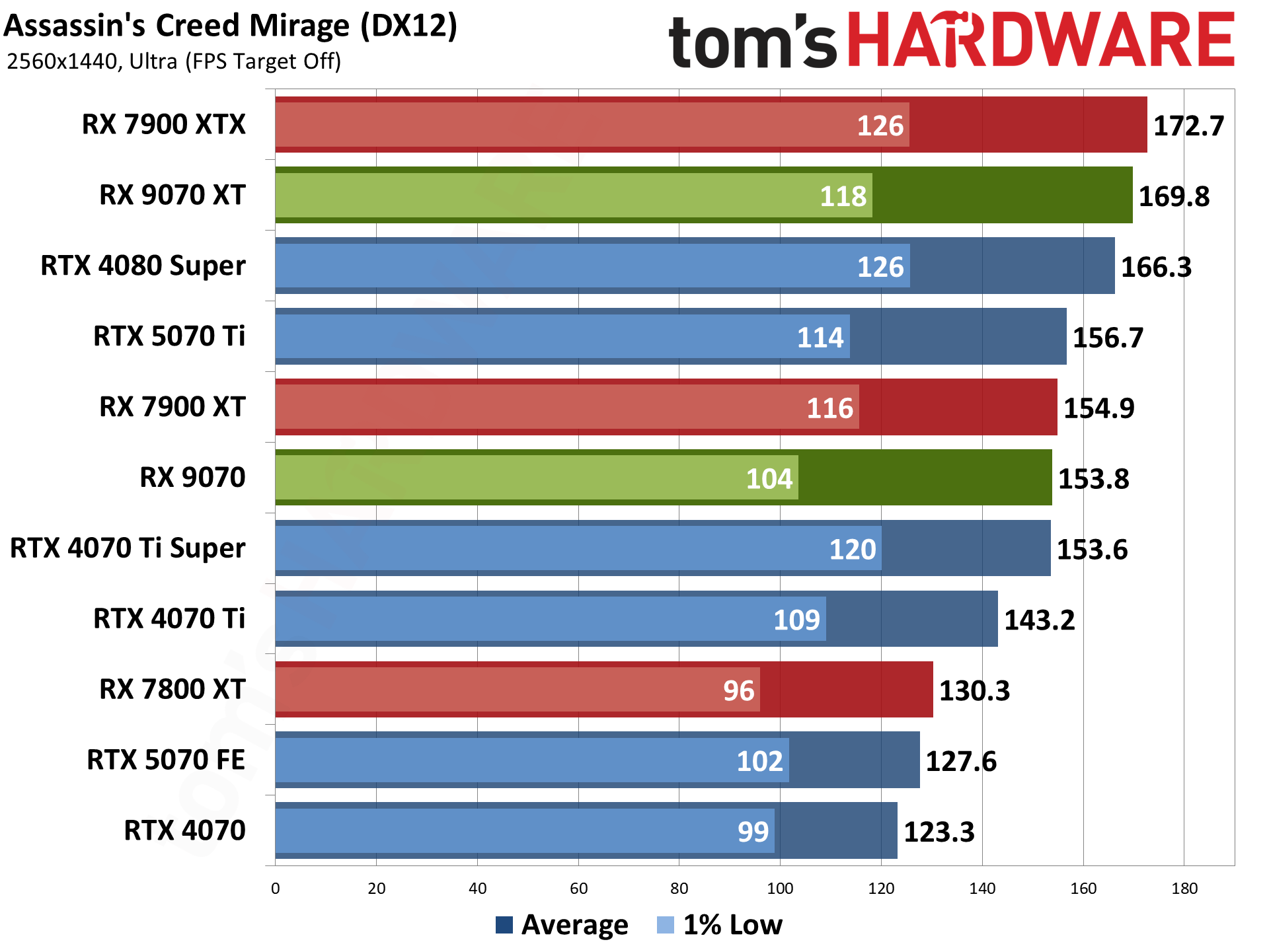

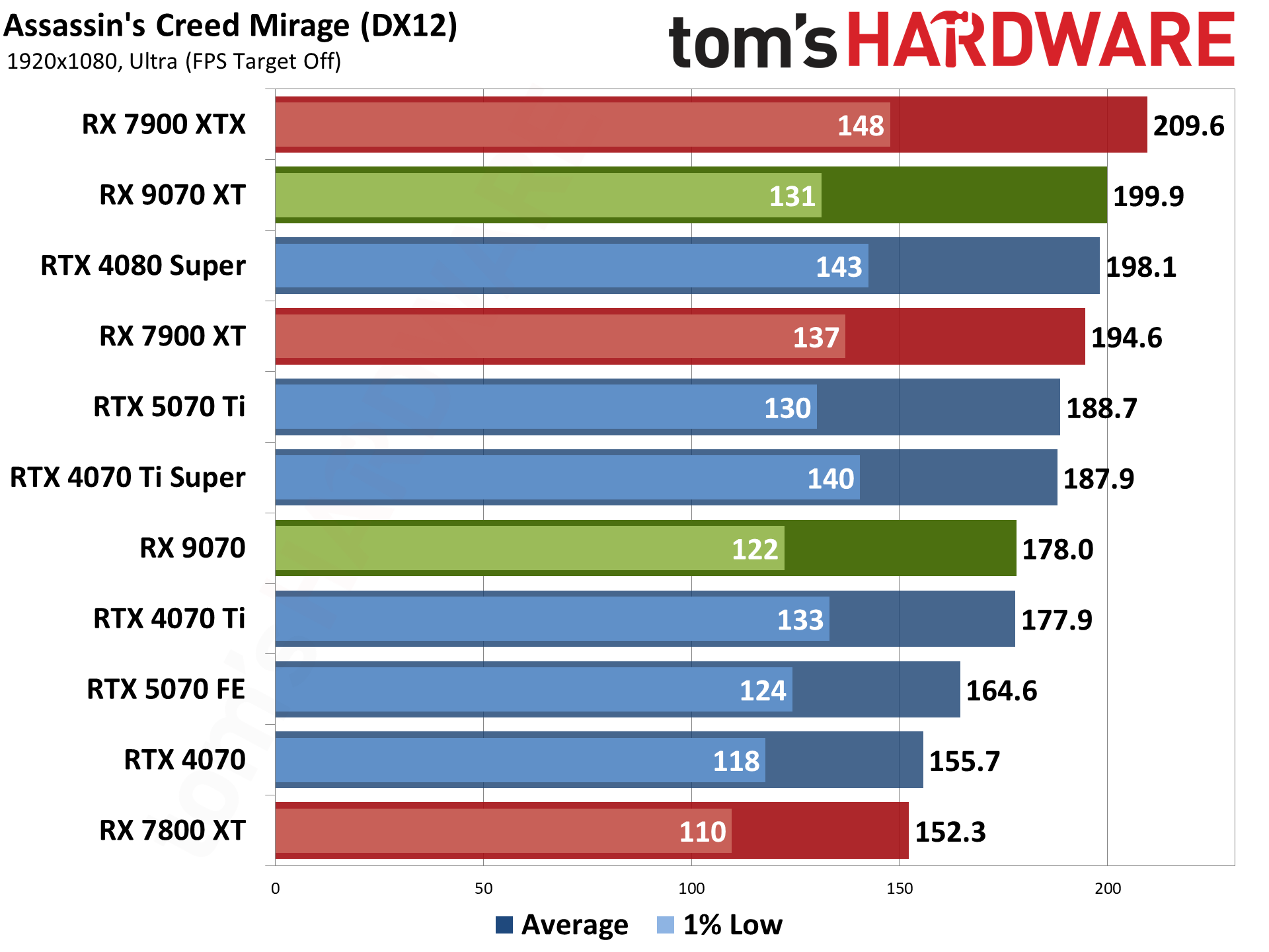

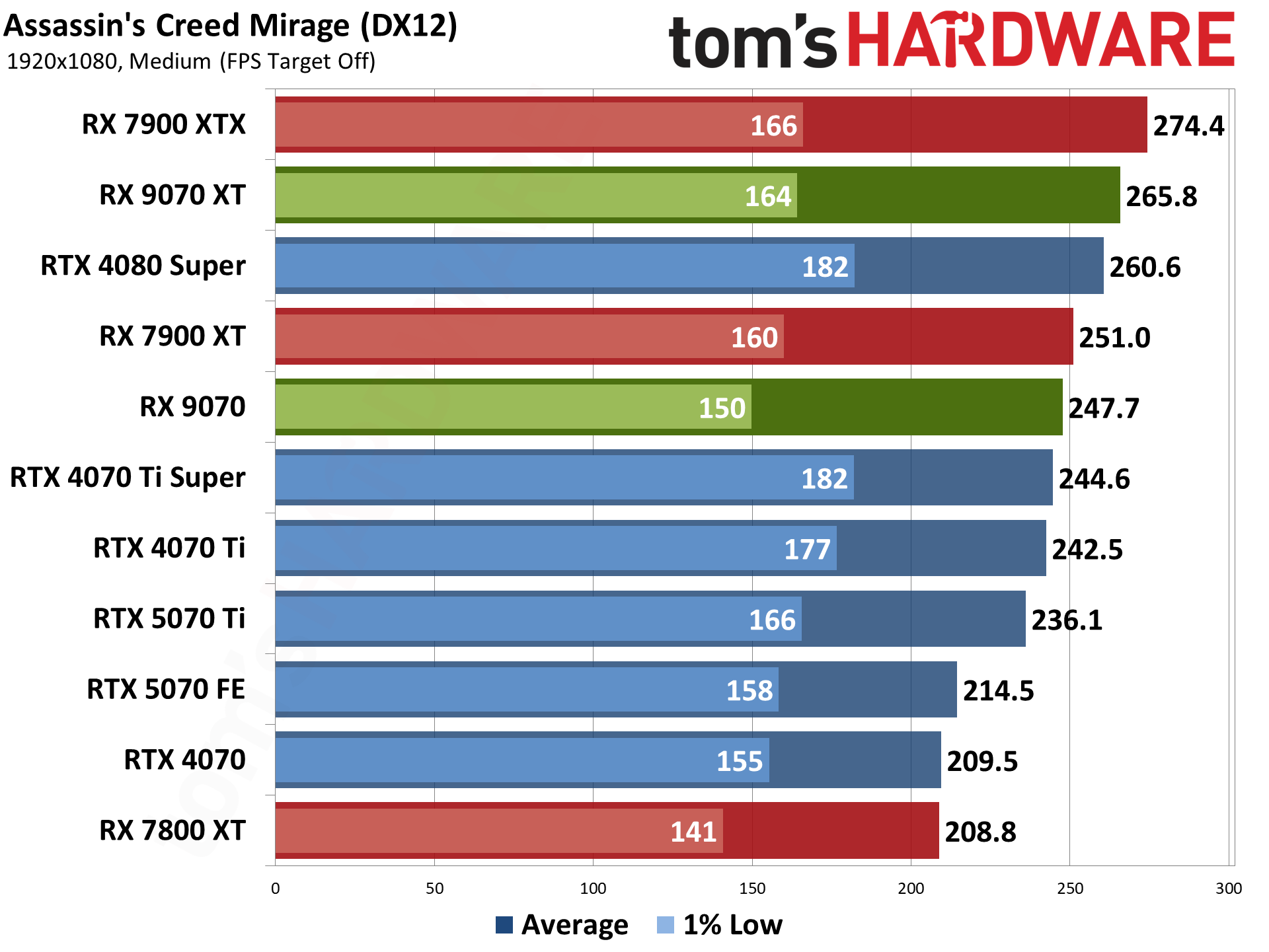

Assassin's Creed Mirage uses Ubisoft's Anvil engine and DirectX 12. It's also an AMD-promoted game, though these days that doesn't necessarily mean it runs better on AMD GPUs. The promotion could mean CPU optimizations for Ryzen, but more often it just means the game supports FSR2 or FSR3 (FSR2 in this case). It also supports DLSS and XeSS upscaling.

Baldur's Gate 3 is our sole DirectX 11 holdout. It also supports Vulkan, but that performed worse on the GPUs we checked, so we stuck with DX11. Built on Larian Studios' Divinity Engine, it's a top-down perspective game, which is a nice change of pace from the many first-person games in our test suite. The faster GPUs hit CPU bottlenecks in this game.

Black Myth: Wukong is one of the newer games in our test suite. It's built on Unreal Engine 5 and supports full ray tracing as a high-end option, but we opted to test using pure rasterization mode. Full RT may look a bit nicer, but the performance hit is quite severe. (Check our linked article for our initial launch benchmarks if you want to see how it runs with full RT enabled. We've got supplemental testing coming as well.)

Dragon Age: The Veilguard uses the Frostbite engine and runs via the DX12 API. It's one of the newest games in my test suite, having launched this past Halloween. It has been received quite well, and in terms of visuals, I'd put it right up there with Unreal Engine 5 games, without some of the LOD pop-in that happens so frequently with UE5.

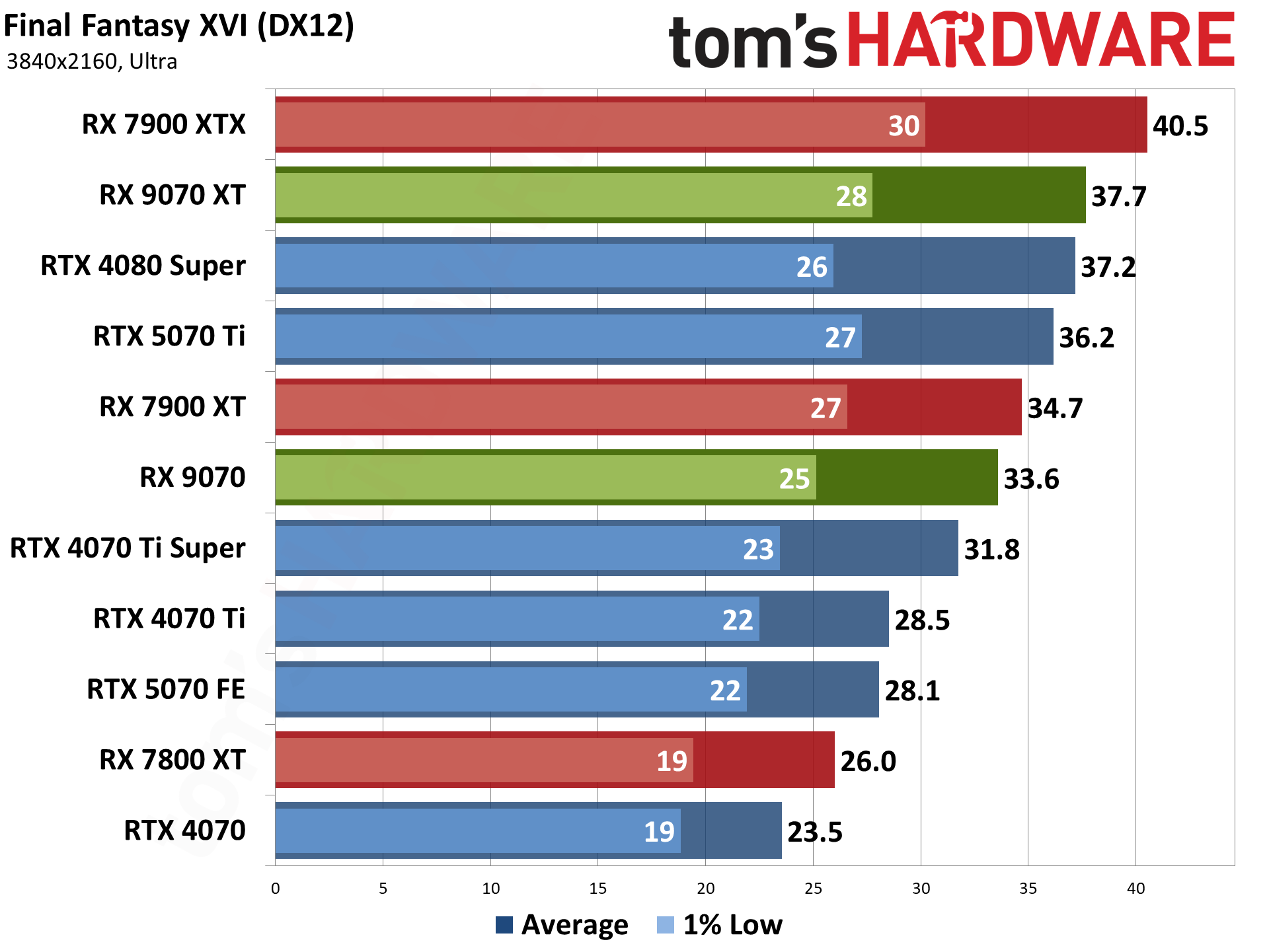

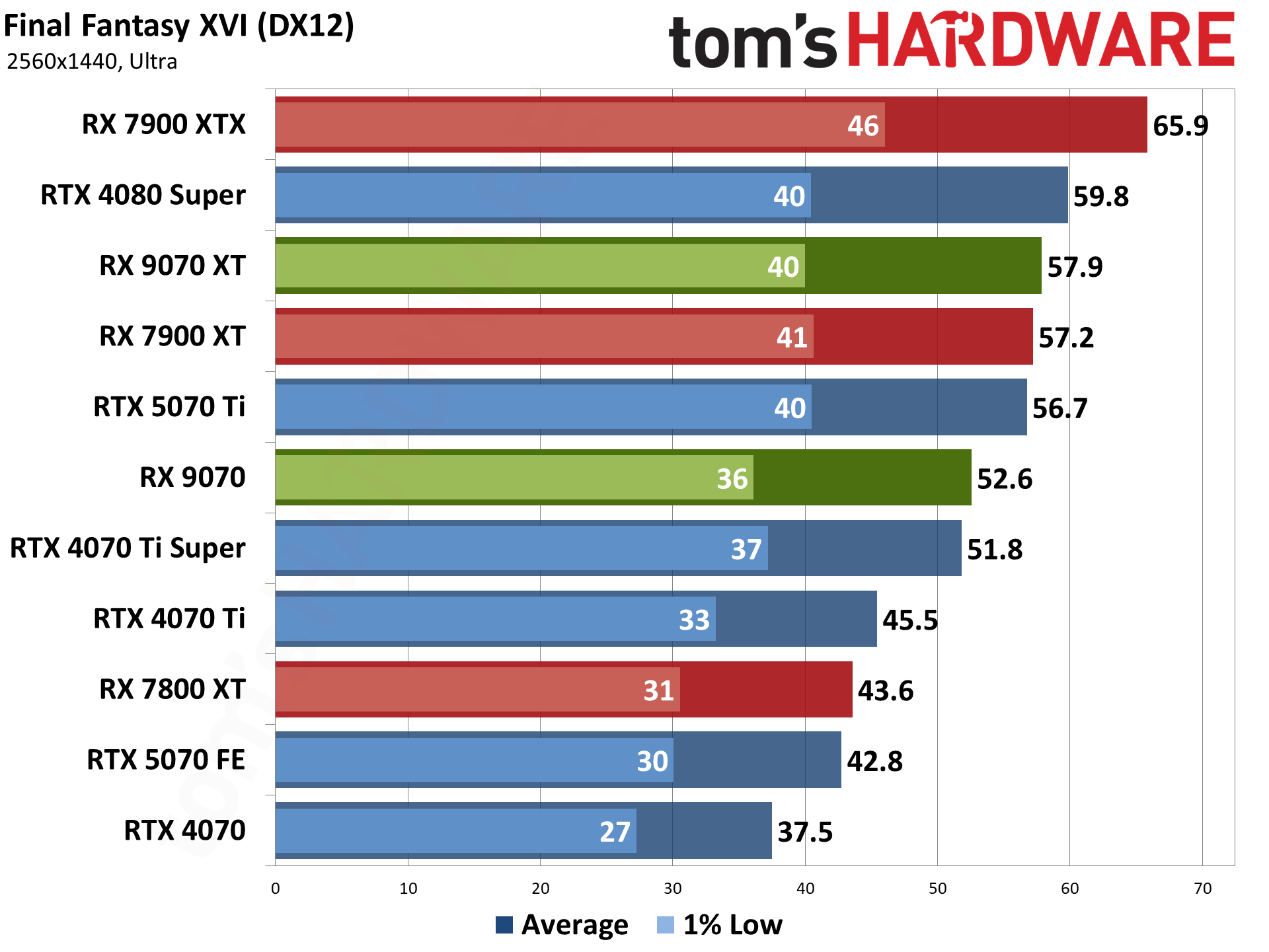

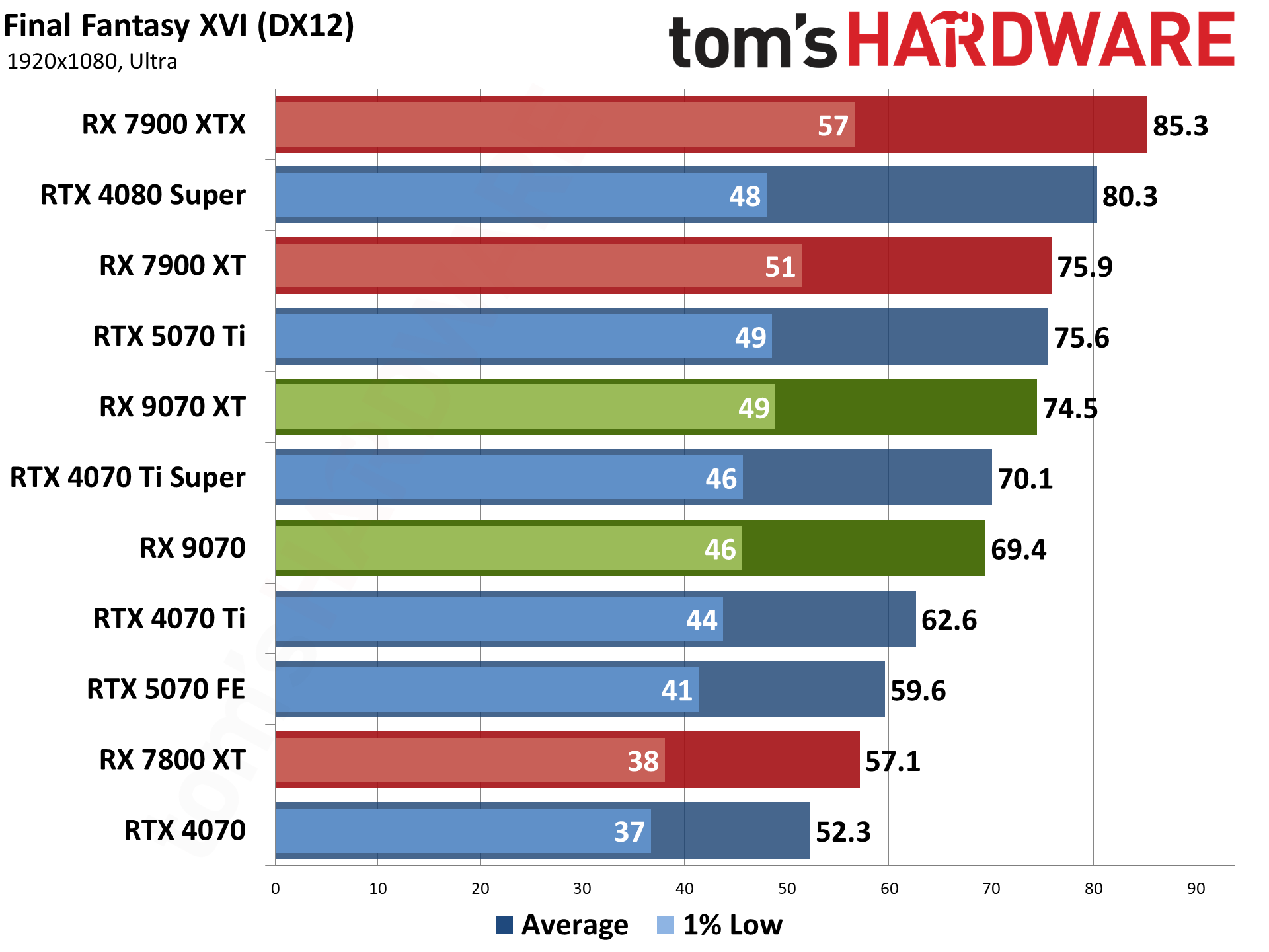

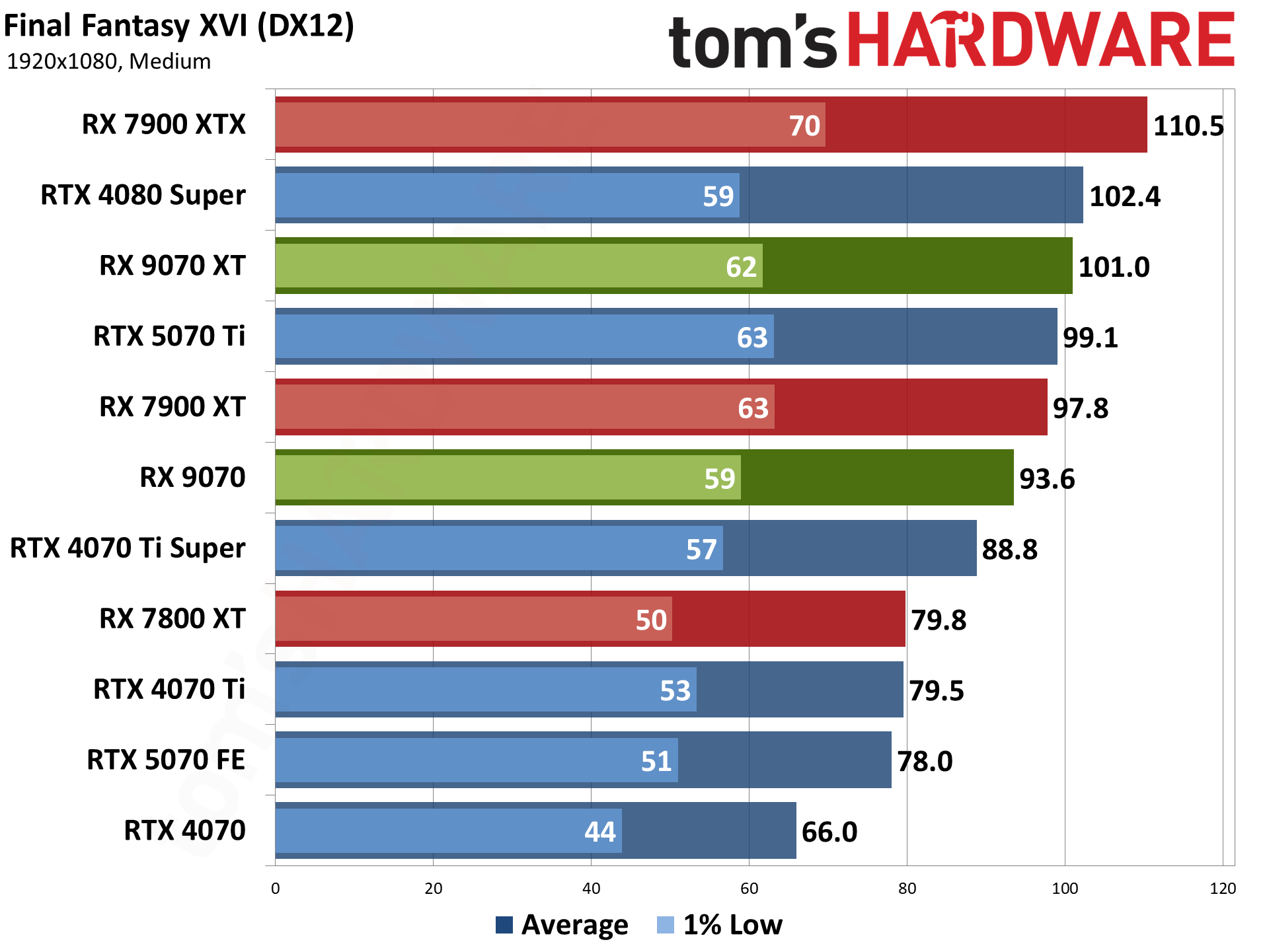

Final Fantasy XVI came out for the PS5 last year, but it only recently saw a Windows release. It's also either incredibly demanding or quite poorly optimized (or both), but it does tend to be very GPU limited. Our test sequence consists of running a set path around the town of Lost Wing.


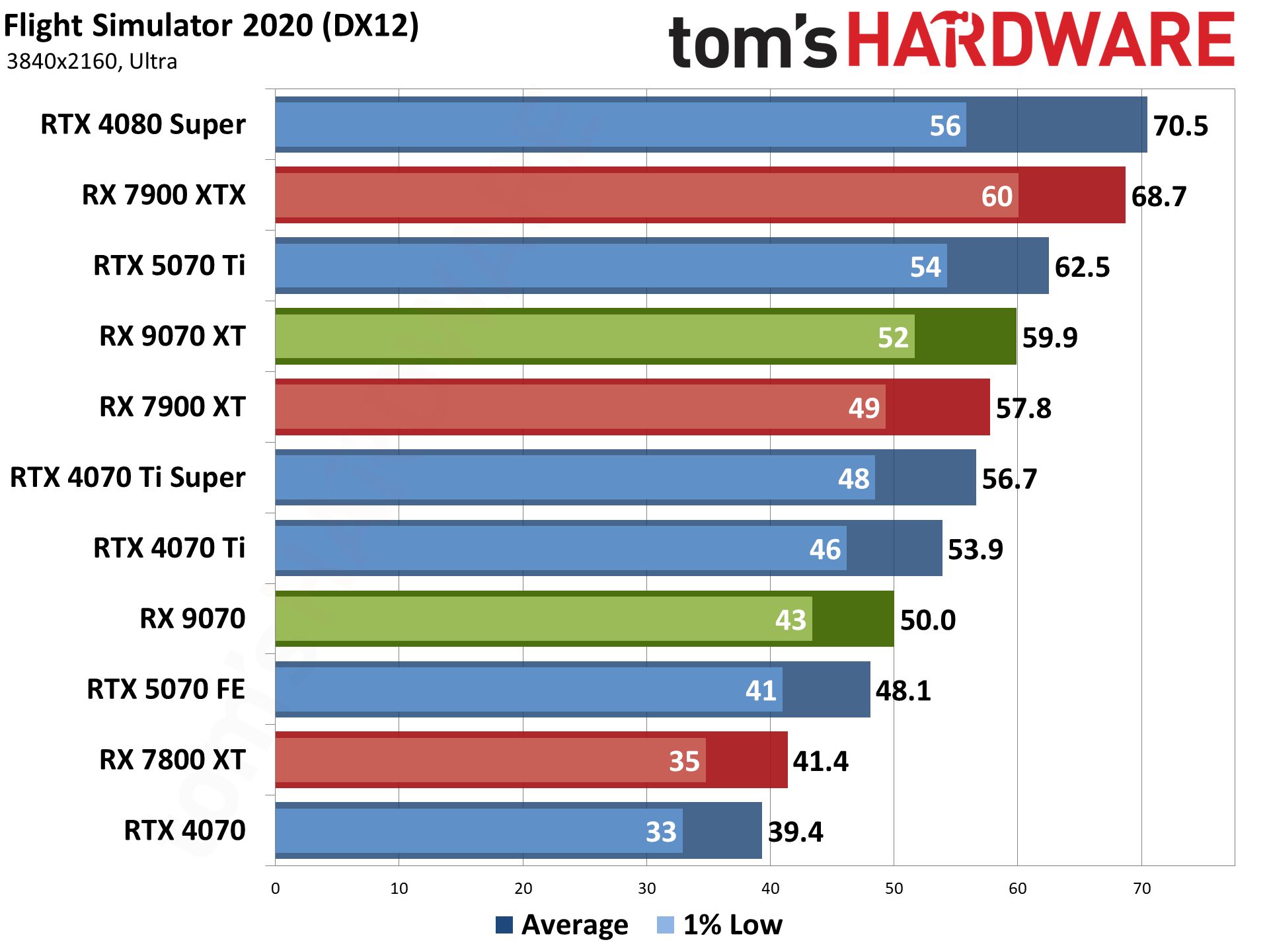

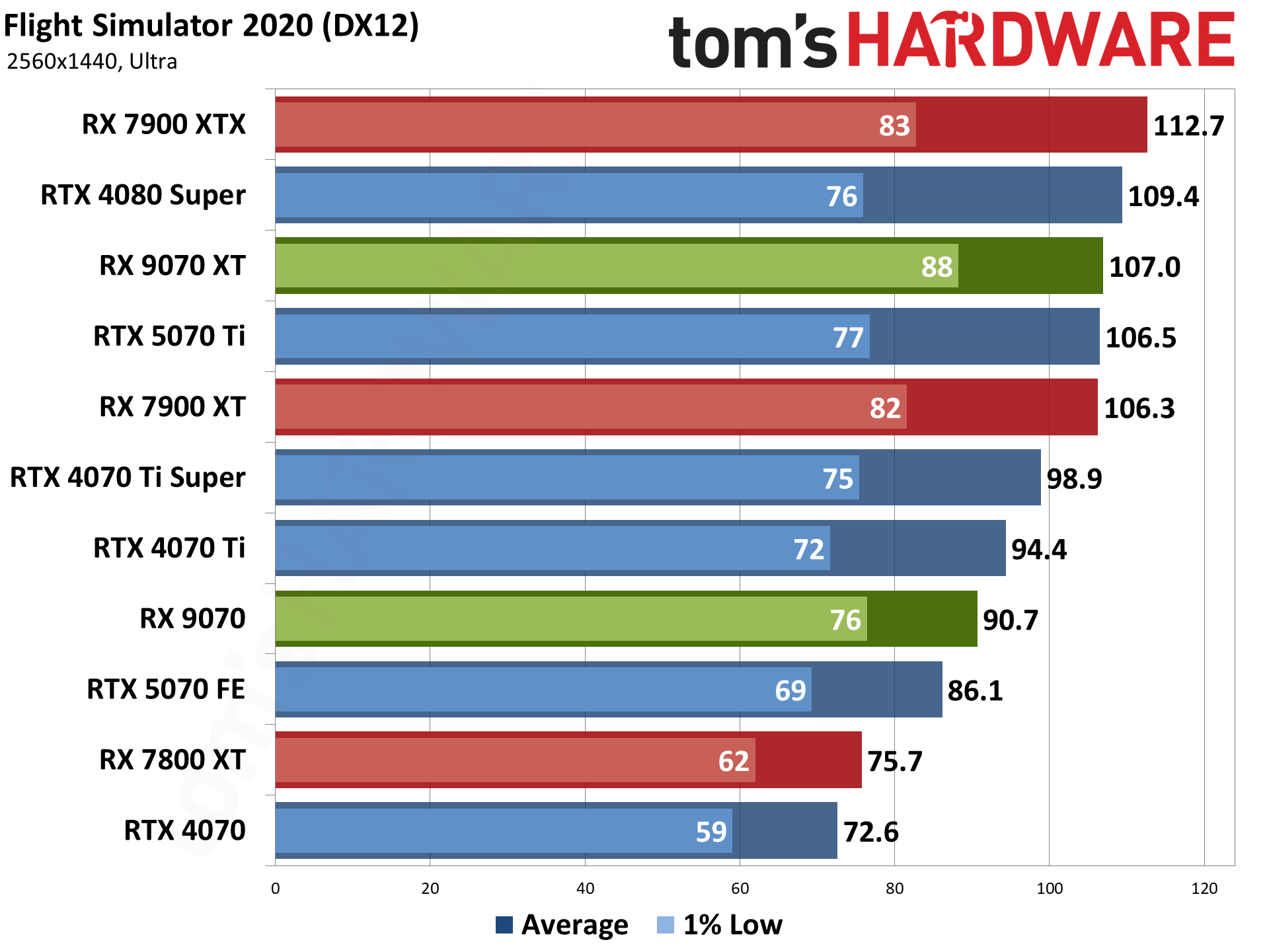

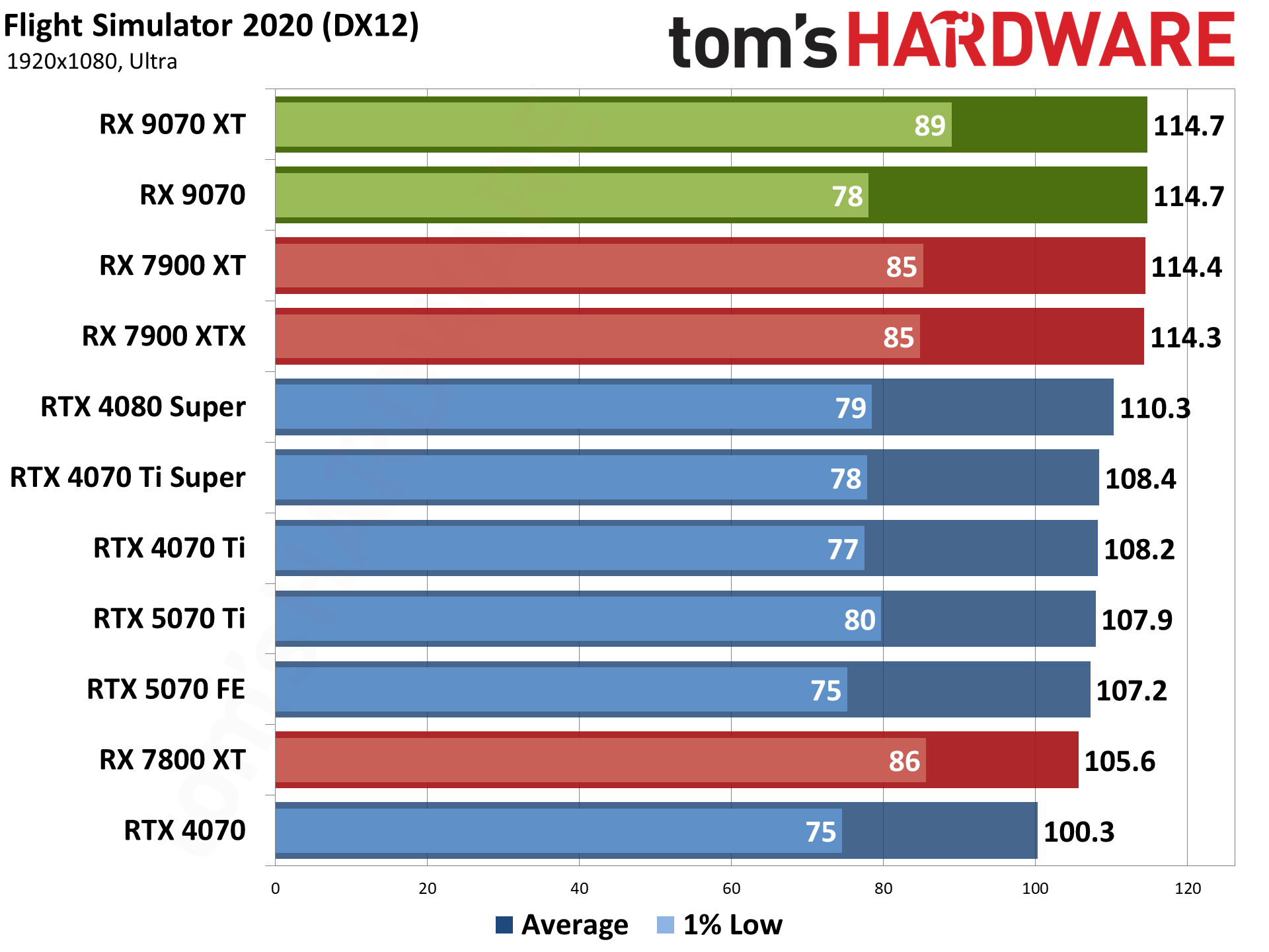

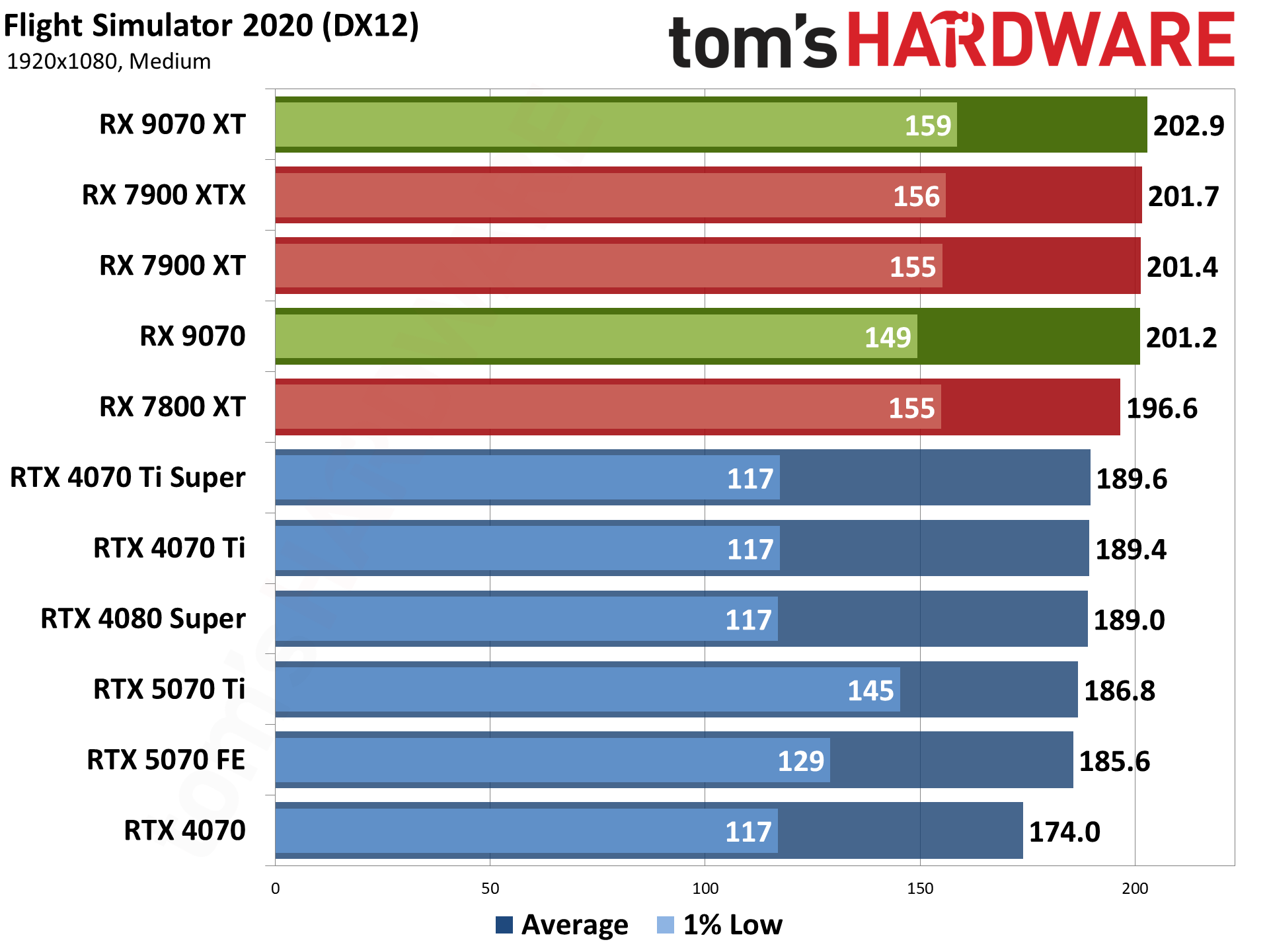

We've been using Flight Simulator 2020 for several years, and the new 2024 release follows below. But the new version is so recent that we wanted to keep the original around a bit longer as a point of reference. We've switched to the 'beta' (eternal beta) DX12 path for our testing, as it's required for DLSS frame generation, even if it runs a bit slower on Nvidia GPUs.

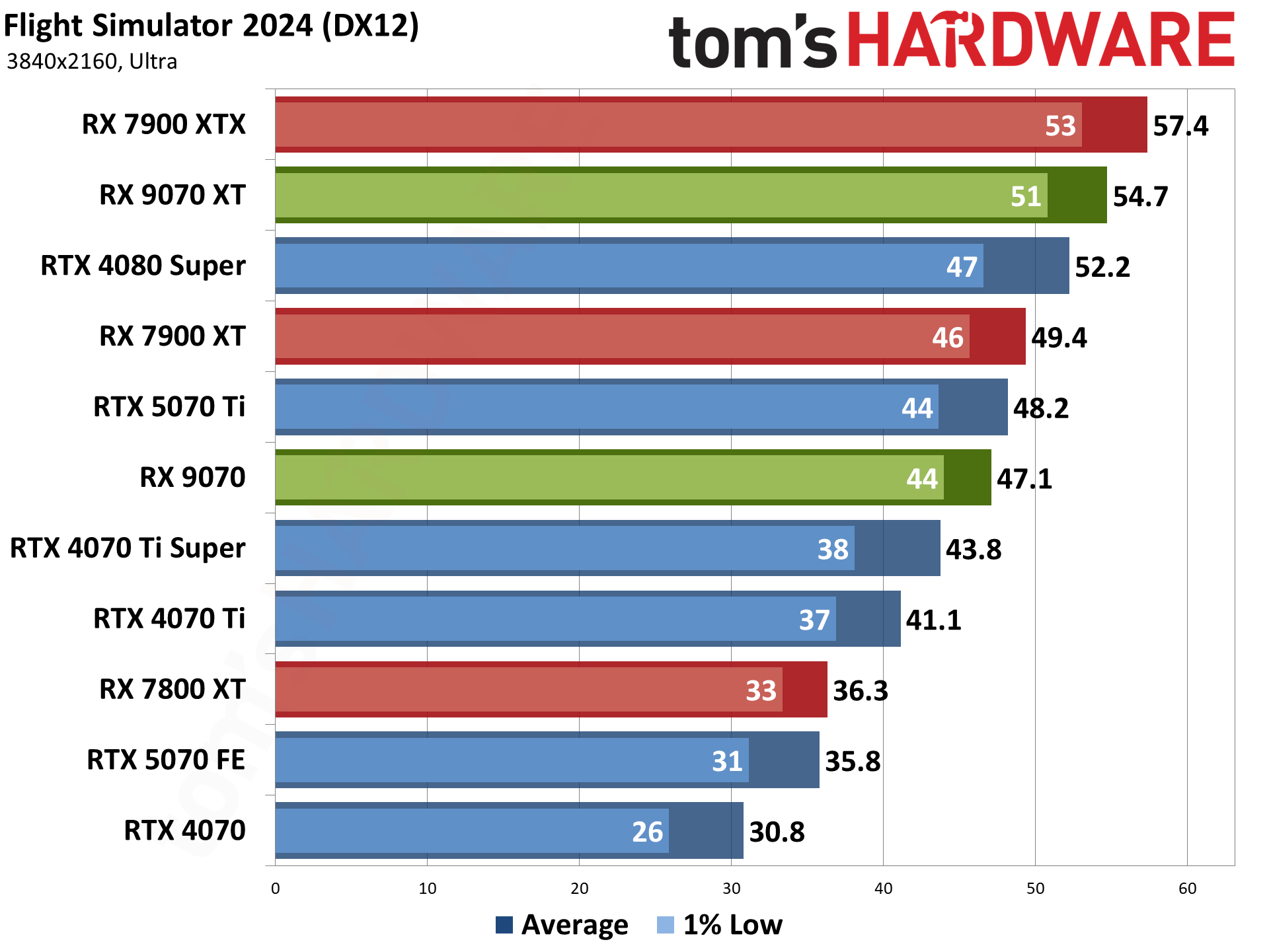

Flight Simulator 2024 is the latest release of the storied franchise, and it's even more demanding than the 2020 release covered above, with some differences in what sort of hardware it seems to like best. Where the 2020 version really appreciated AMD's X3D processors, the 2024 release tends to be more forgiving of Intel CPUs, thanks to improved DirectX 12 code (DX11 is no longer supported).

God of War Ragnarök released for the PlayStation two years ago and only recently saw a Windows version. It's AMD promoted, but it also supports DLSS and XeSS alongside FSR3. We run around the village of Svartalfheim, which is one of the most demanding areas in the game that we've encountered.

Hogwarts Legacy came out in early 2023 and uses Unreal Engine 4. Like so many Unreal Engine games, it can look quite nice but also has some performance issues with certain settings. Ray tracing in particular can bloat memory use, tank framerates, and cause hitching, so we've opted to test without ray tracing. (At maximum RT settings, the 9800X3D CPU ends up getting only around 60 FPS, even at 1080p with upscaling!) We may replace this one in the coming days.

Horizon Forbidden West is another two-year-old PlayStation port, using the Decima engine. The graphics are good, though I've heard from at least a few people who think it looks worse than its predecessor, with excessive blurriness being a key complaint. But after we'd used Horizon Zero Dawn for a few years, it felt like a good time to replace it.

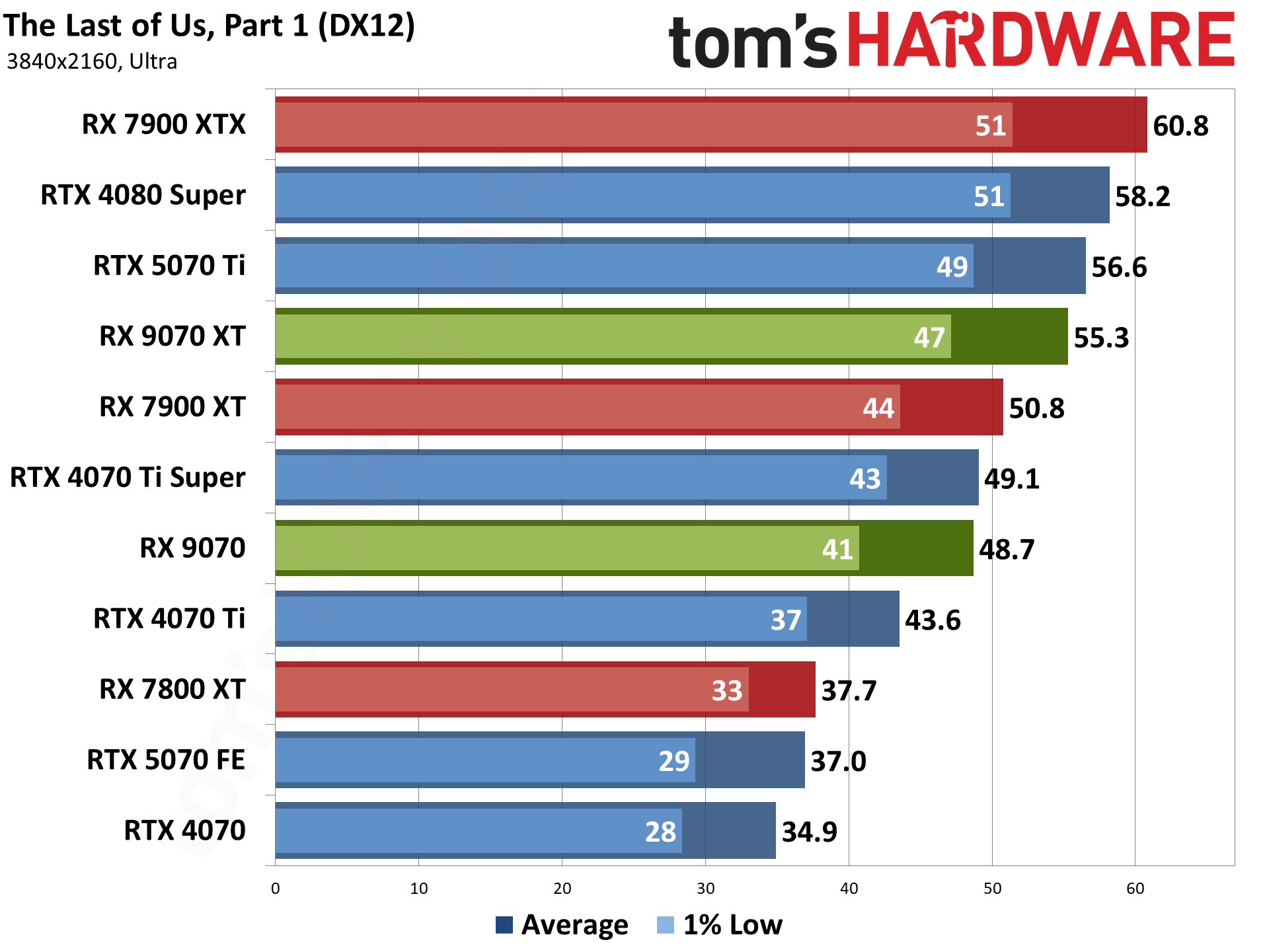

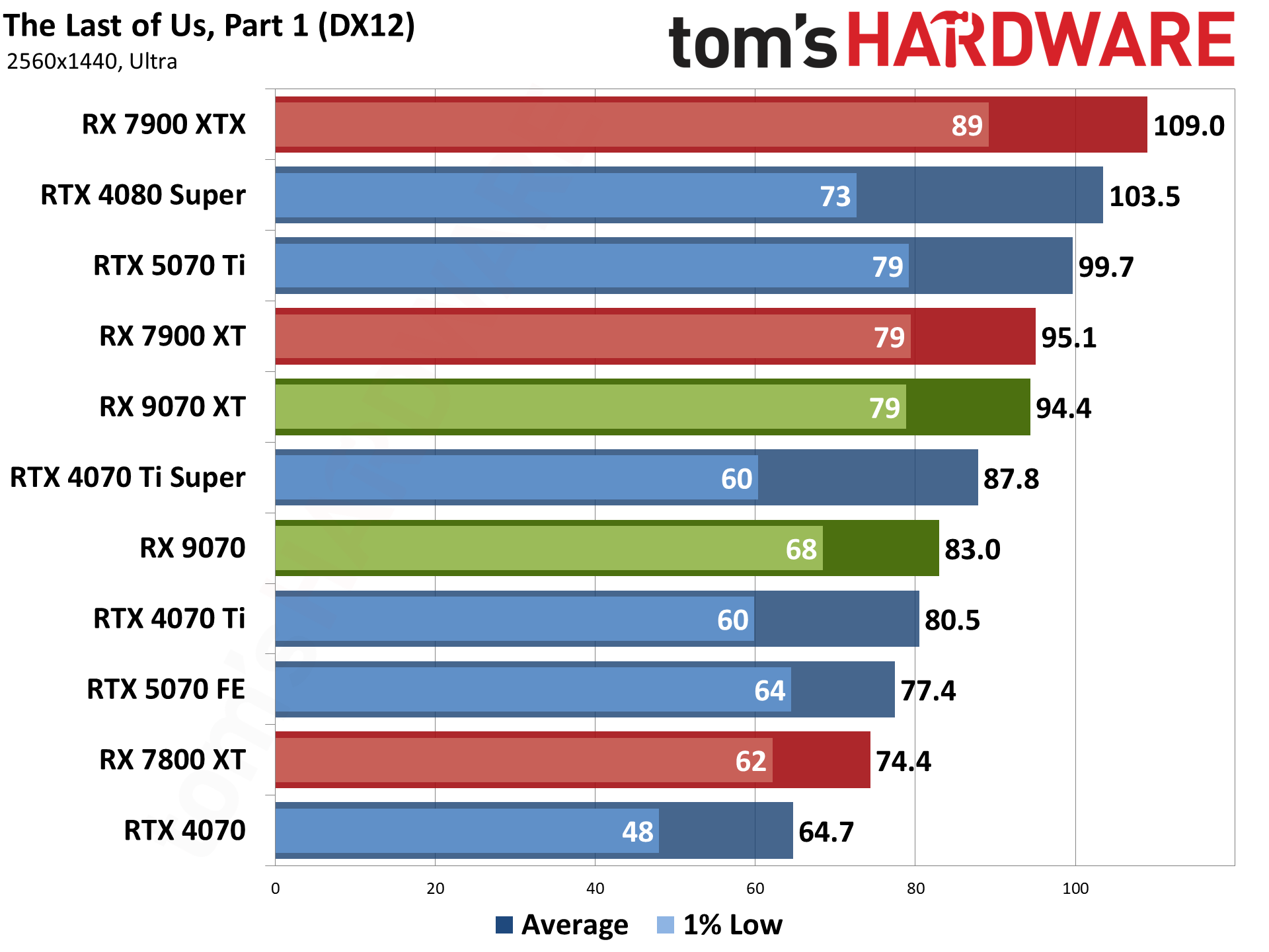

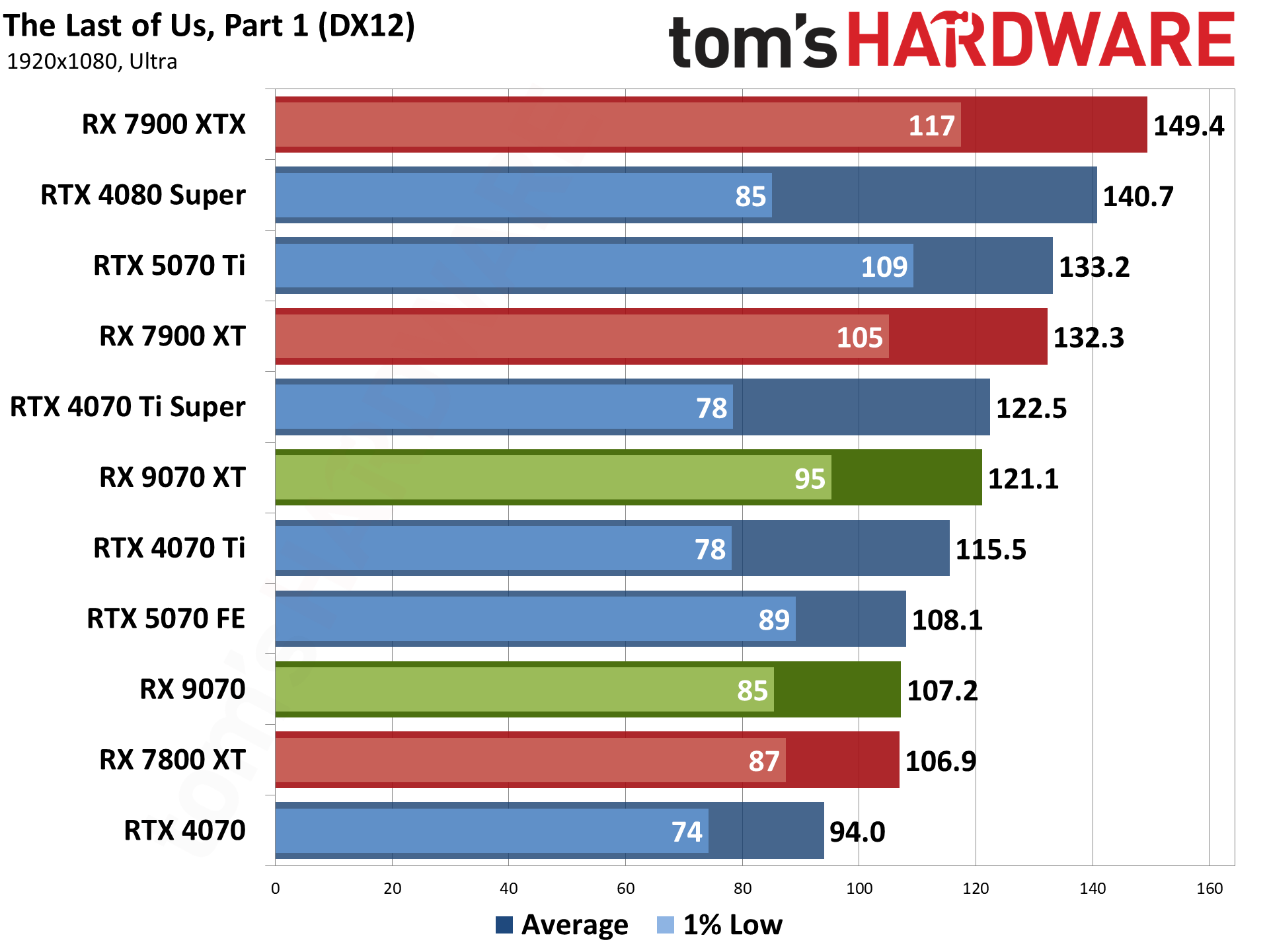

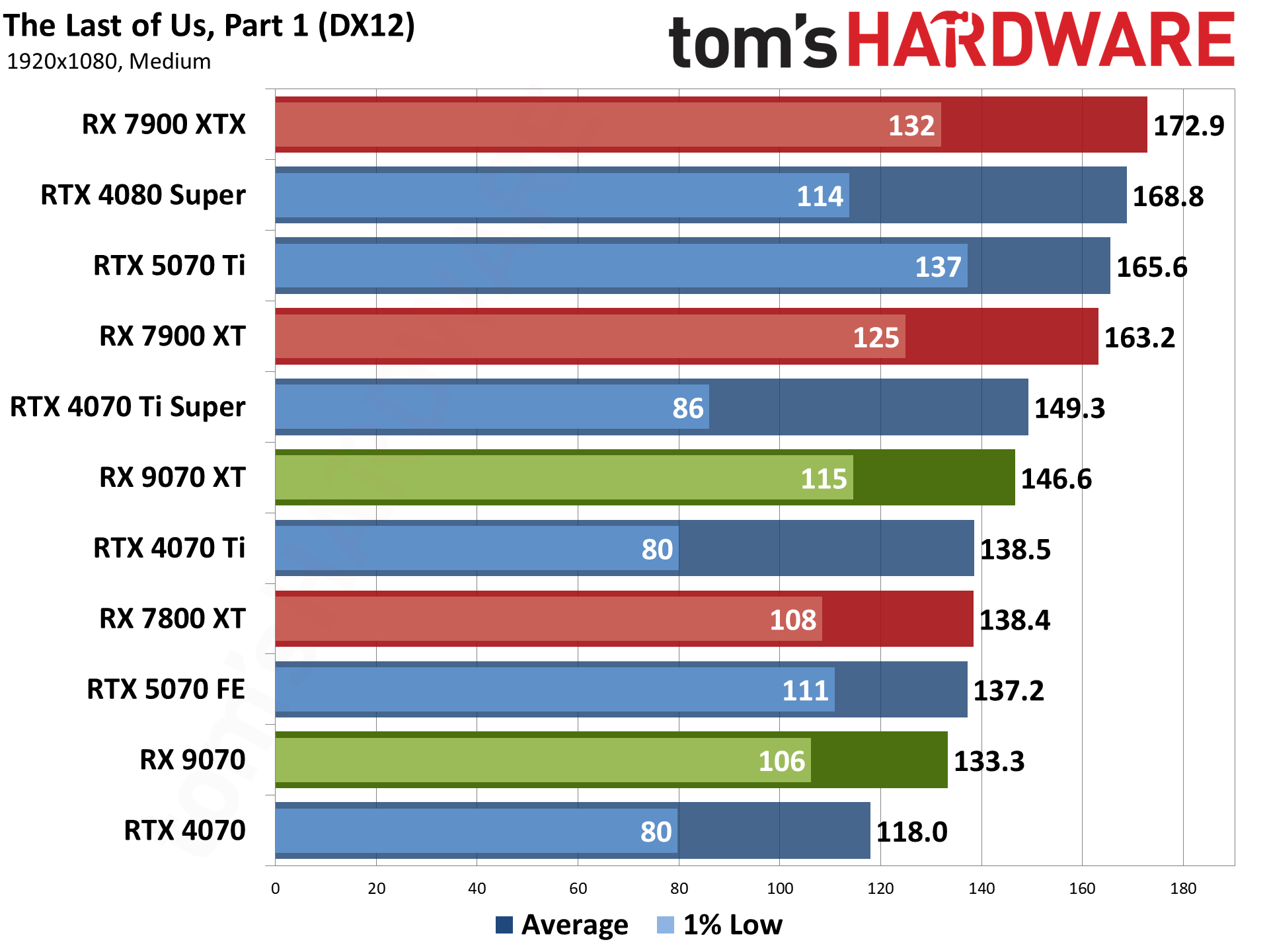

The Last of Us, Part 1 is another PlayStation port, though it's been out on PC for about 20 months now. It's also an AMD-promoted game and really hits the VRAM hard at higher-quality settings. Cards with 12GB or more memory usually do fine, and the RTX 5070 lands about where expected.

A Plague Tale: Requiem uses the Zouna engine and runs on the DirectX 12 API. It's an Nvidia-promoted game that supports DLSS 3, but neither FSR nor XeSS. (It was also one of the first DLSS 3-enabled games.) It has RT effects, but only for shadows, which don't really improve the look of the game but do tank performance.

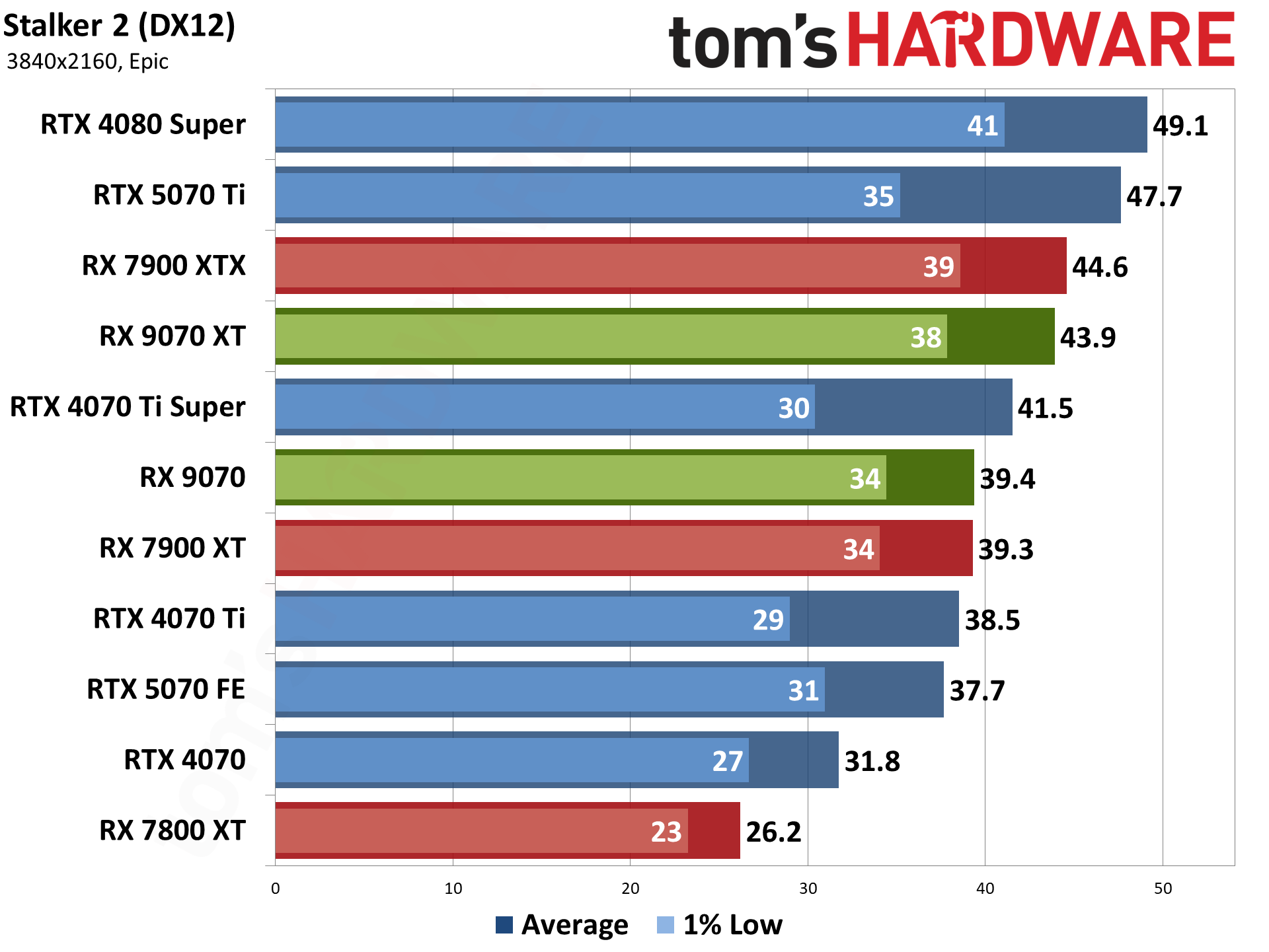

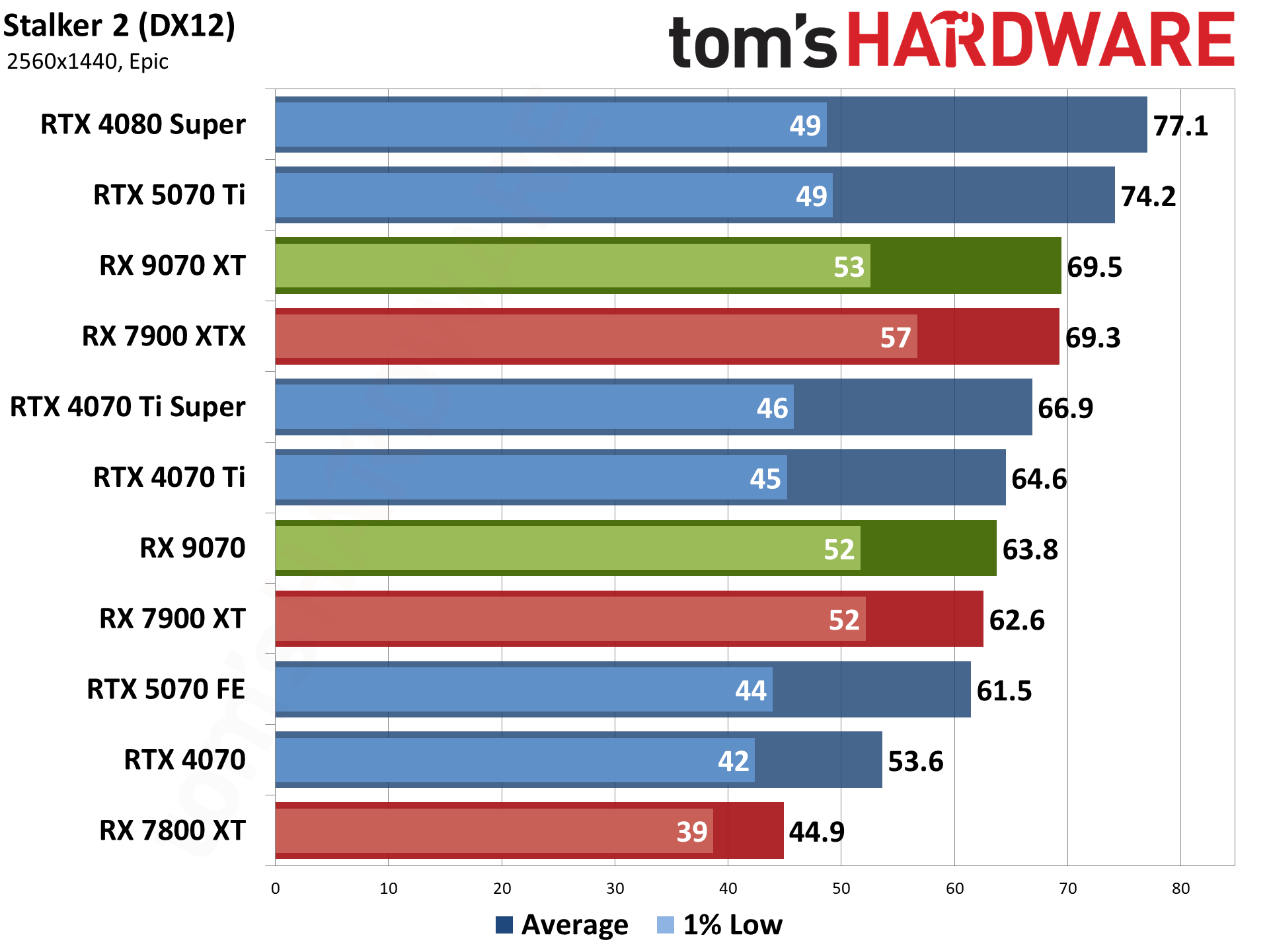

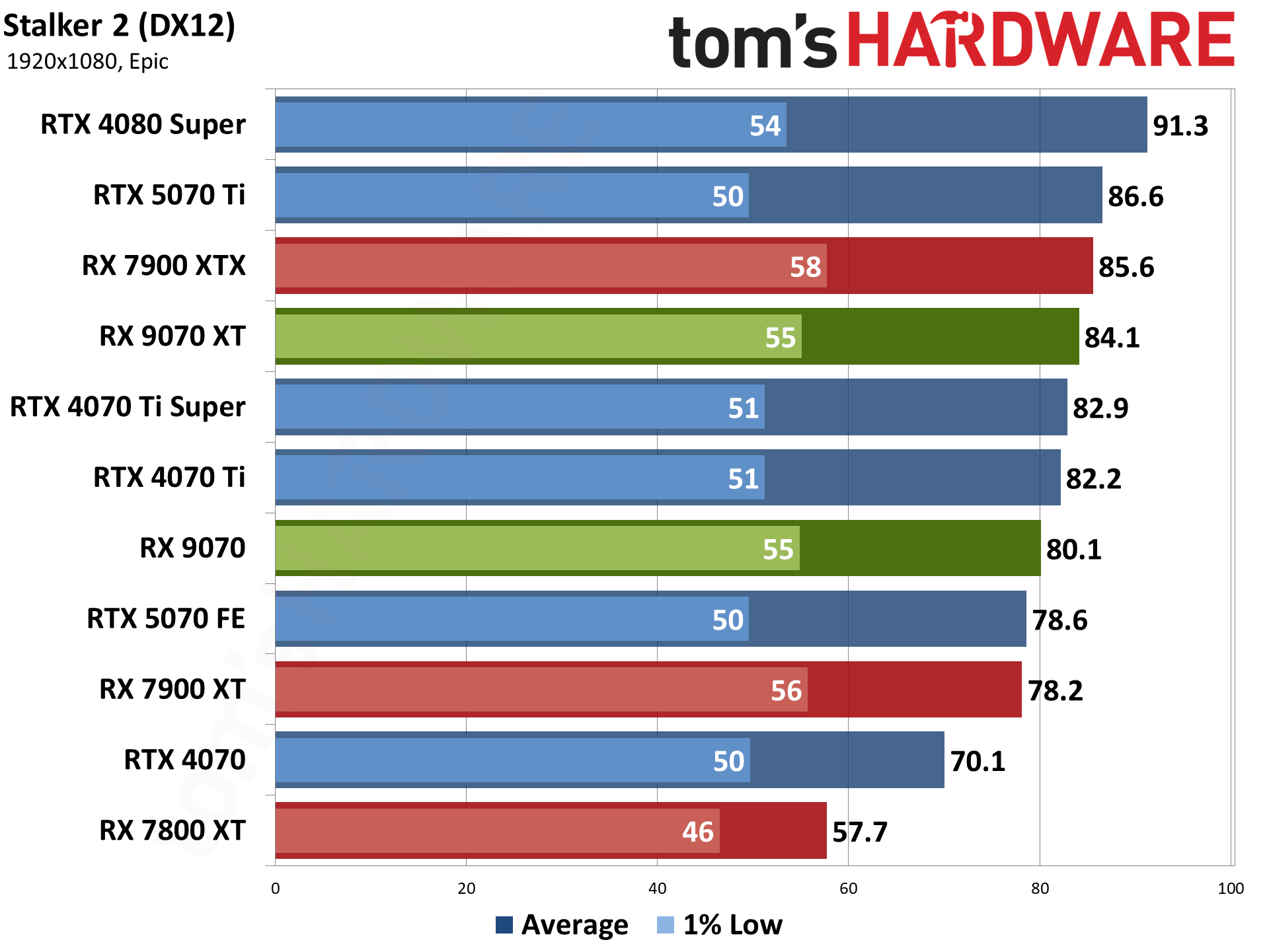

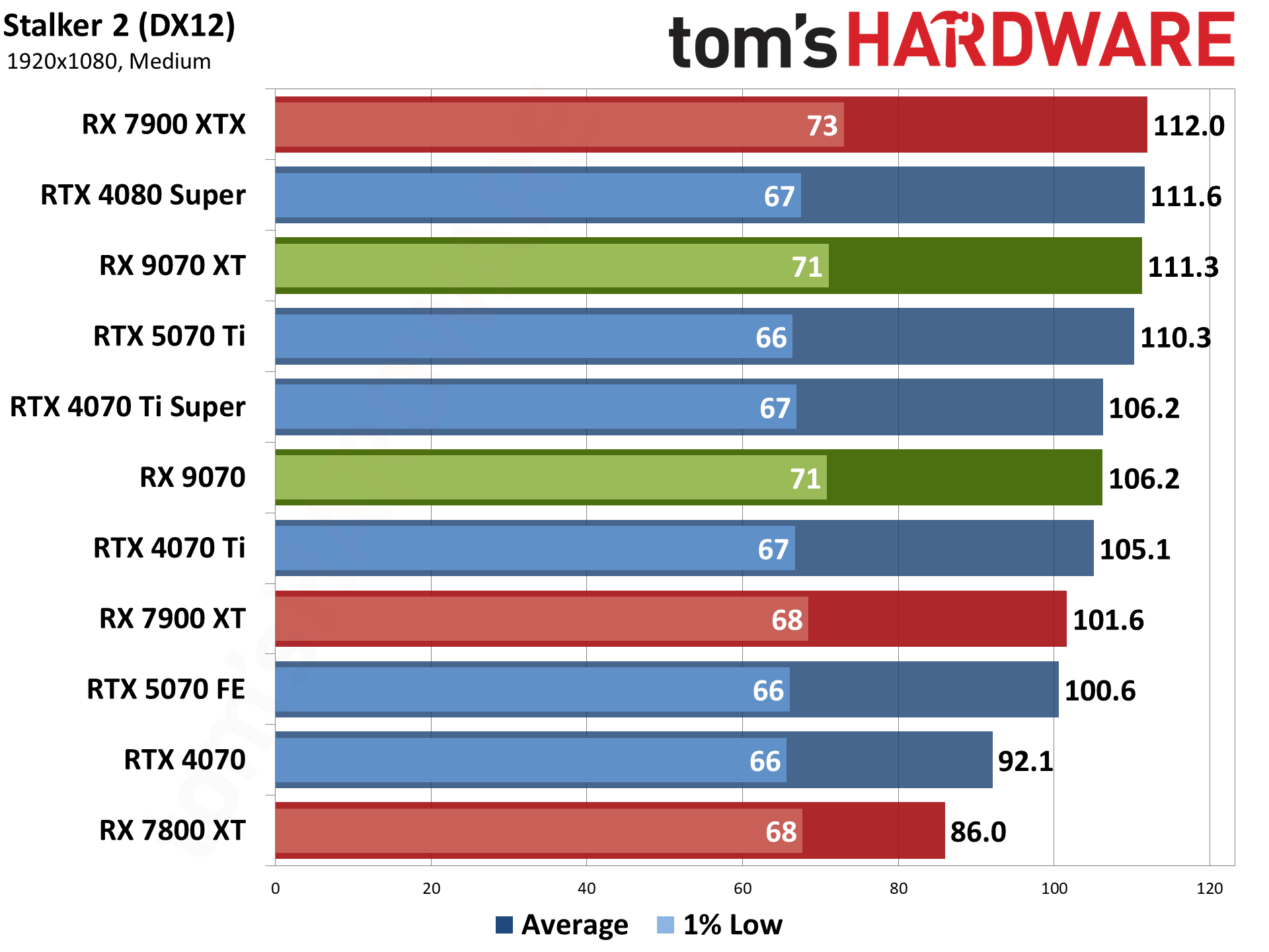

Stalker 2 is another Unreal Engine 5 game, but without any hardware ray tracing support — the Lumen engine also does "software RT" that's basically just fancy rasterization as far as the visuals are concerned, though it's still quite taxing. VRAM can also be a serious problem when trying to run the epic preset, with 8GB cards struggling at most resolutions. There's also quite a bit of microstuttering in Stalker 2, and it tends to be more CPU limited than other recent games.

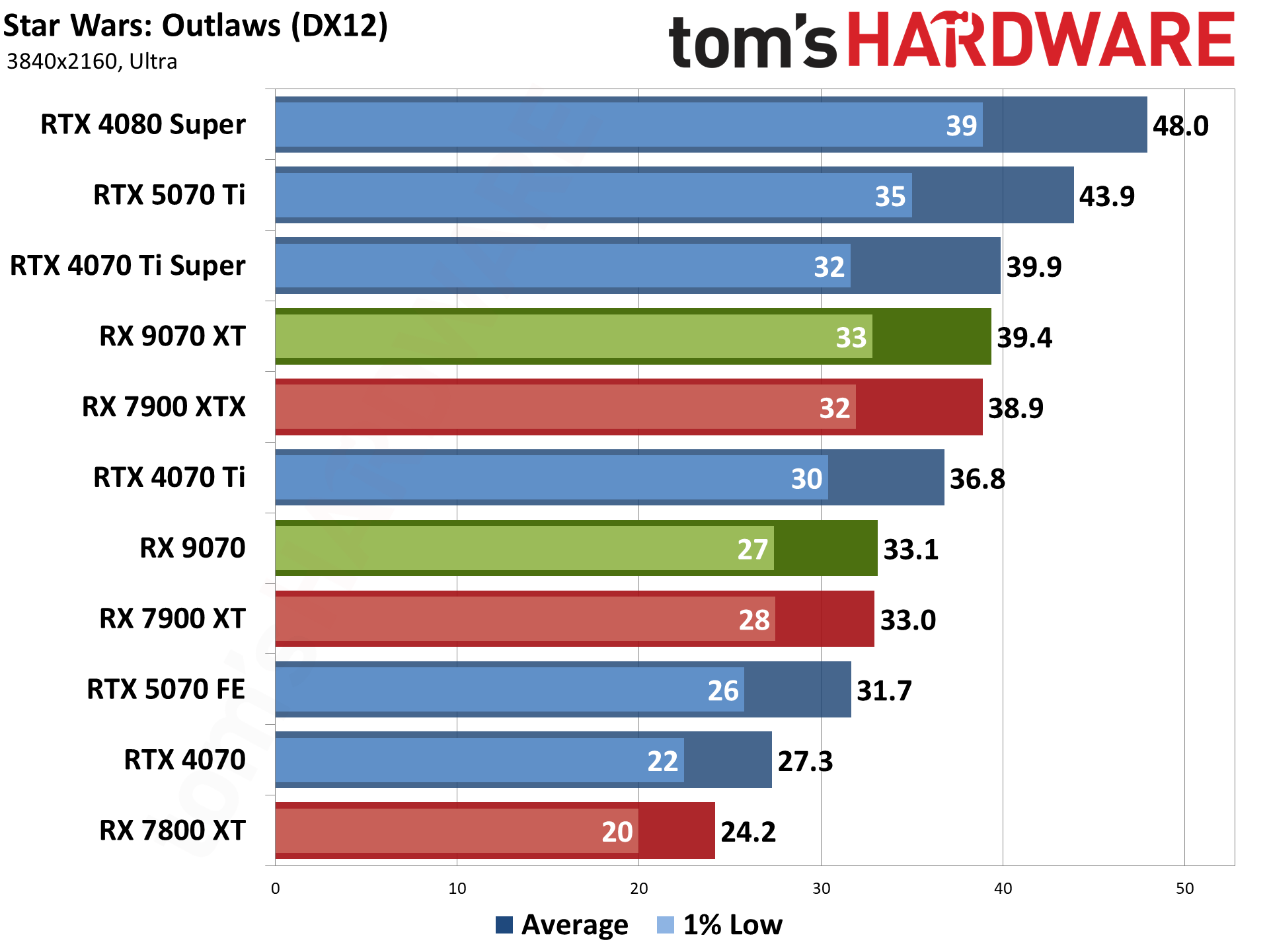

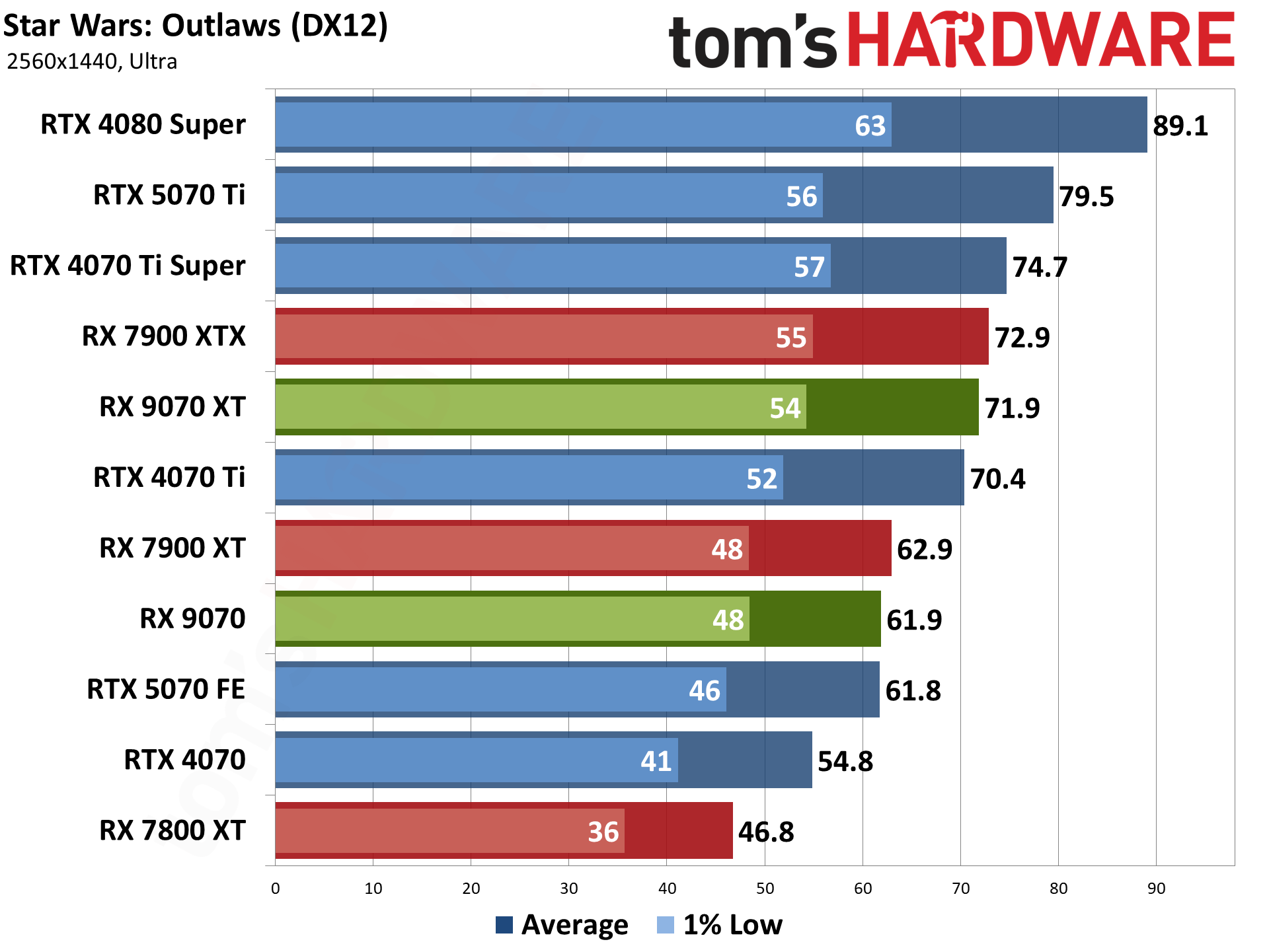

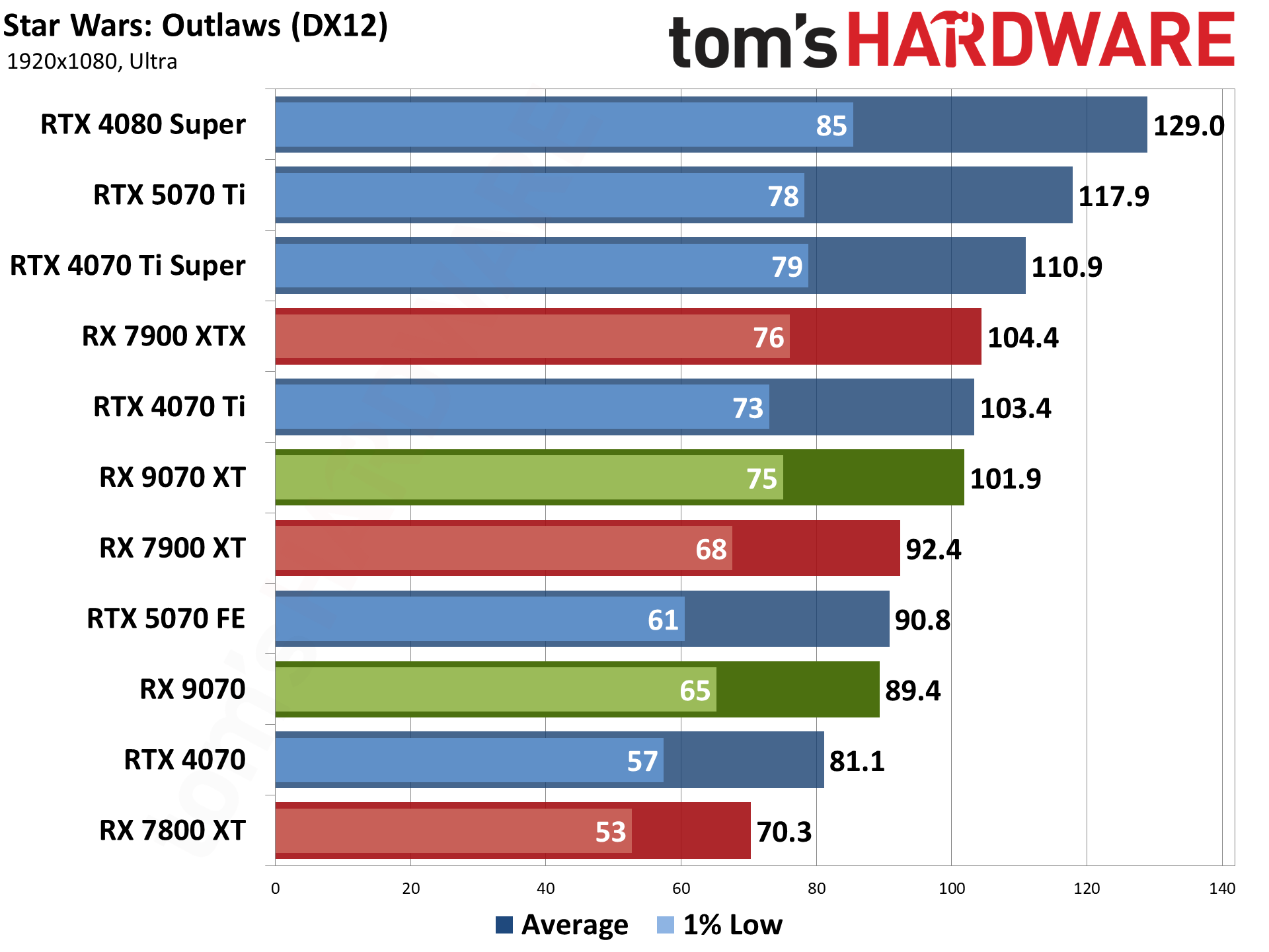

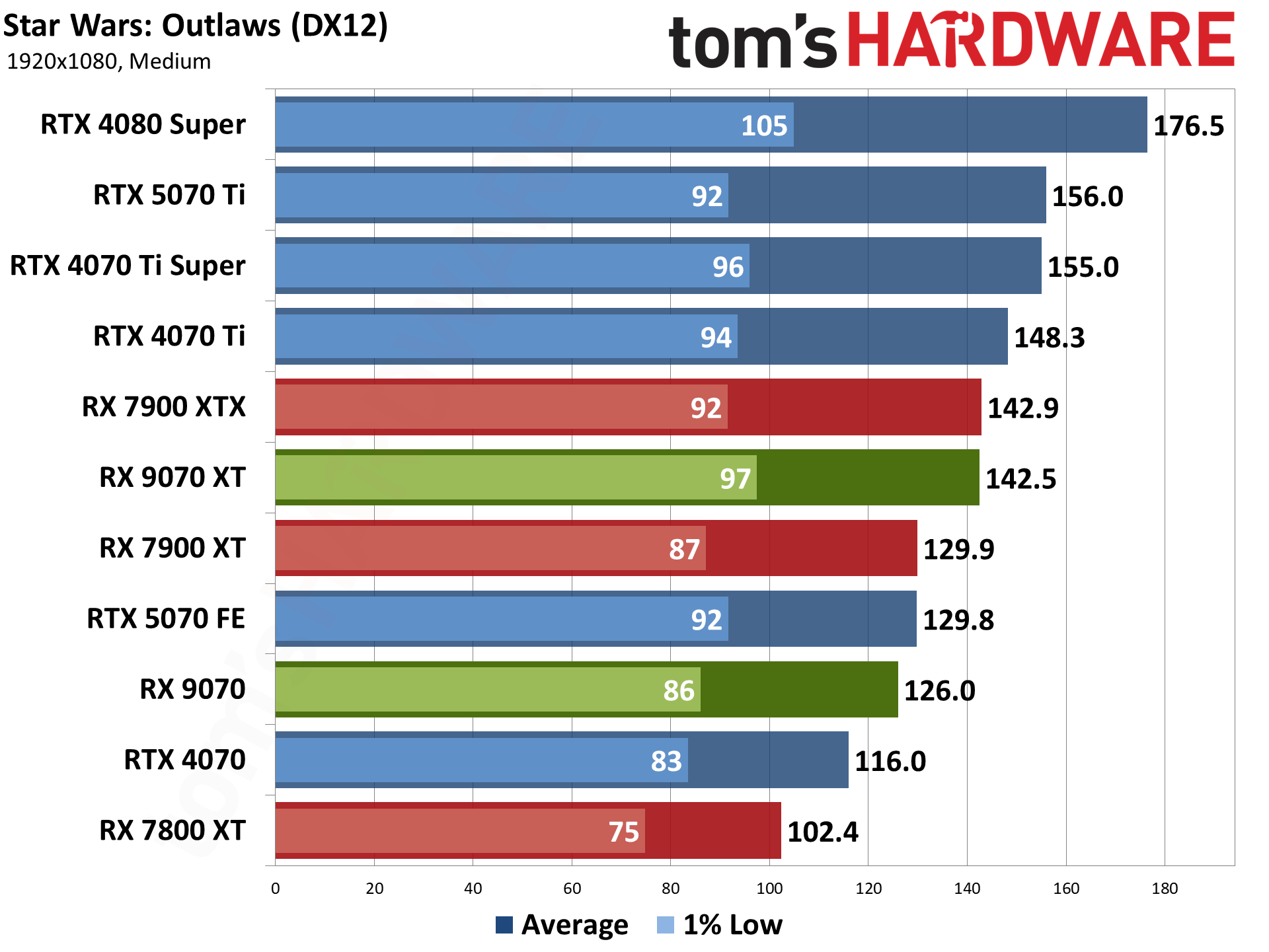

Star Wars Outlaws uses the Snowdrop engine, and we wanted to include a mix of options. It also has a bunch of RT options that we leave off for our tests. As with several other games, turning on maximum RT settings in Outlaws tends to result in a less-than-ideal gaming experience, with a lot of stuttering and hitching even on the fastest cards.

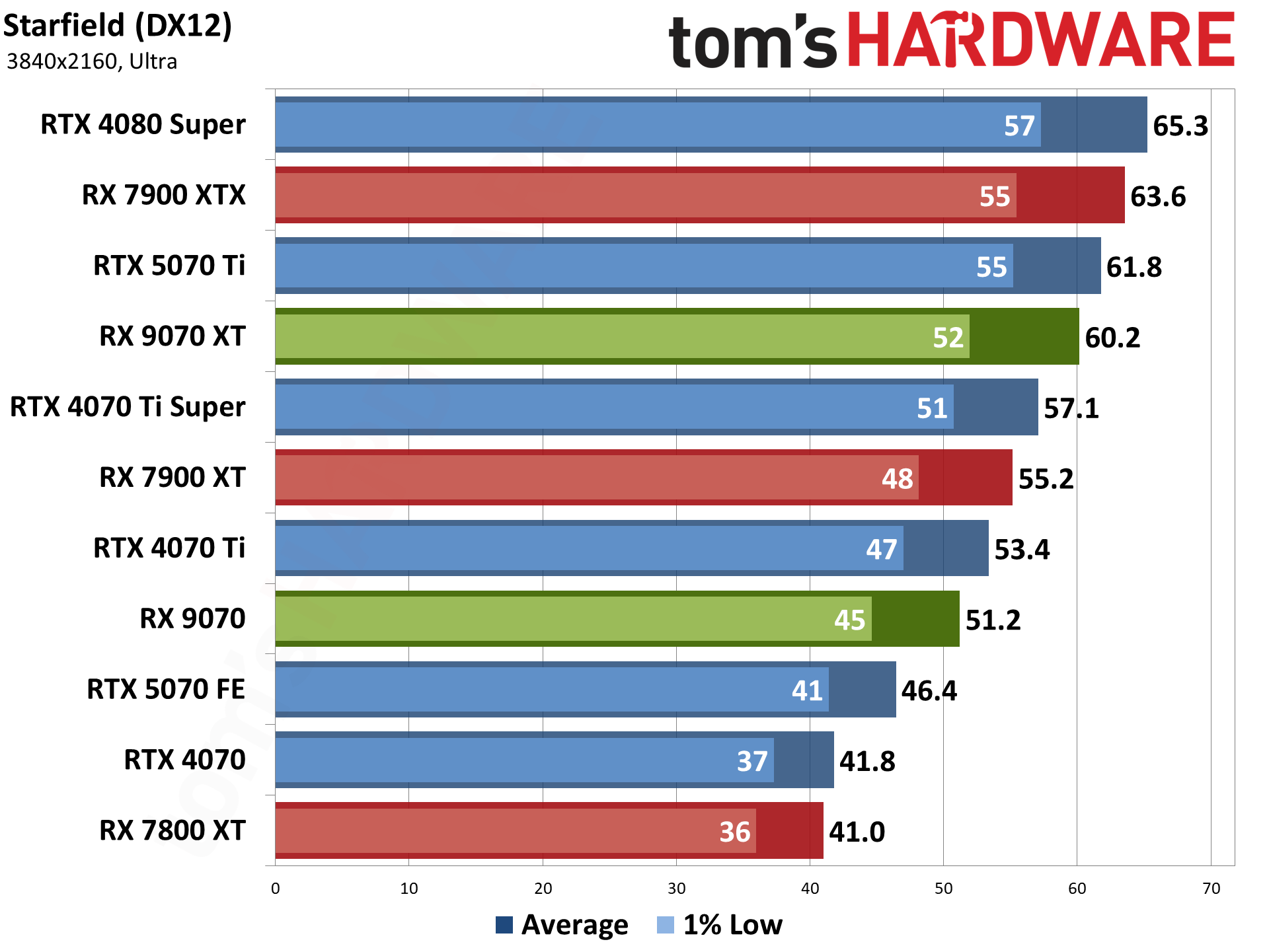

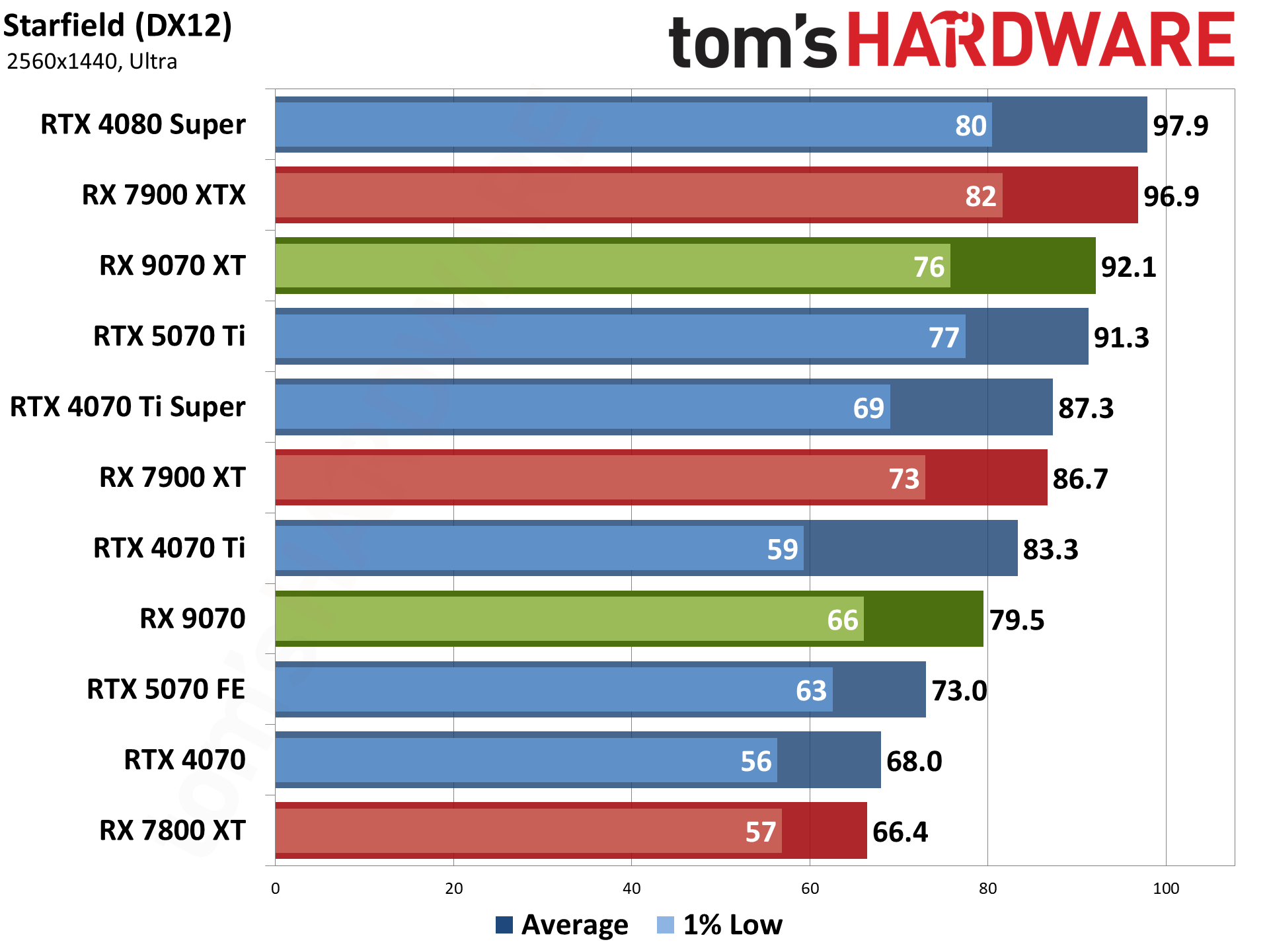

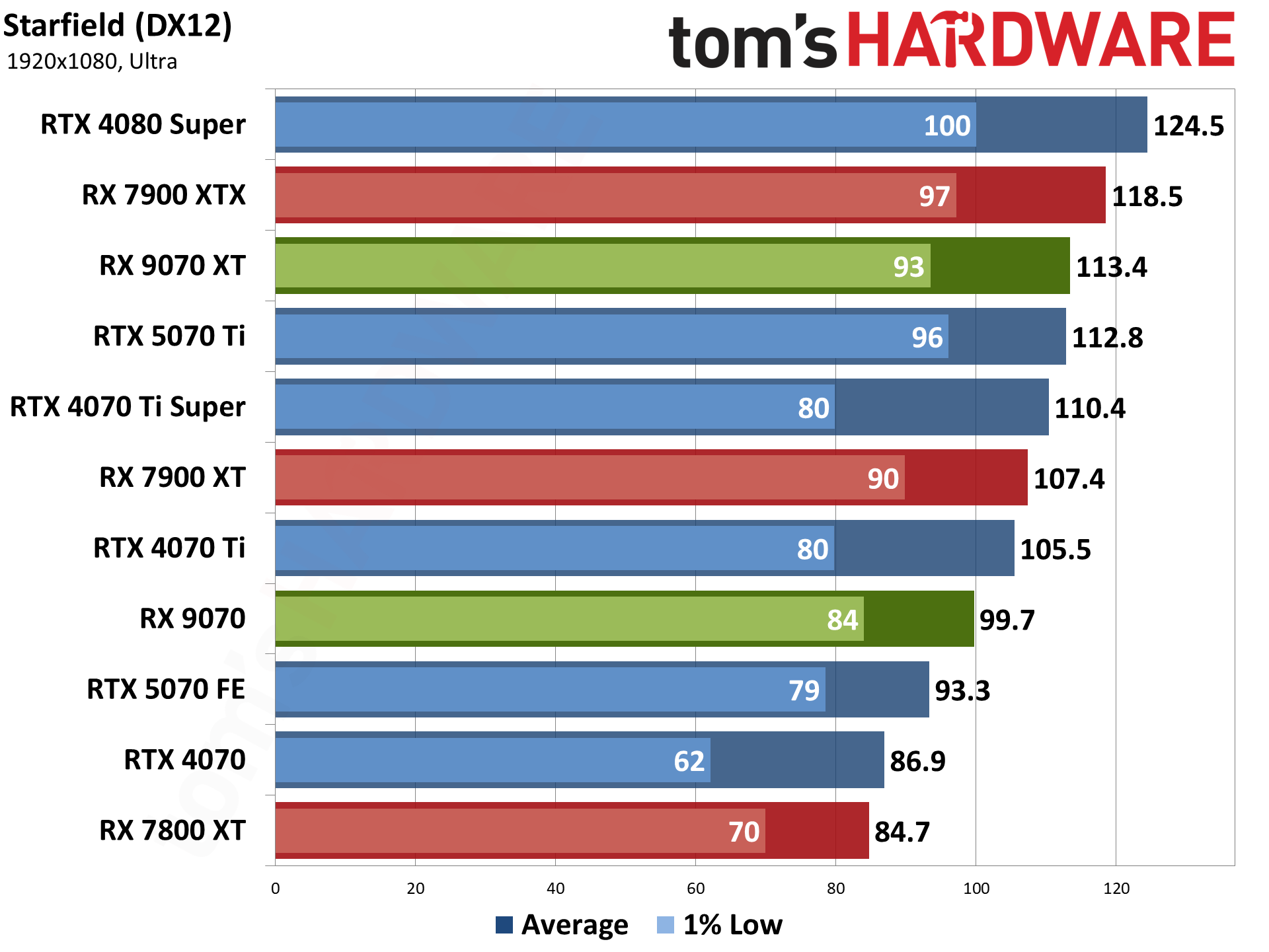

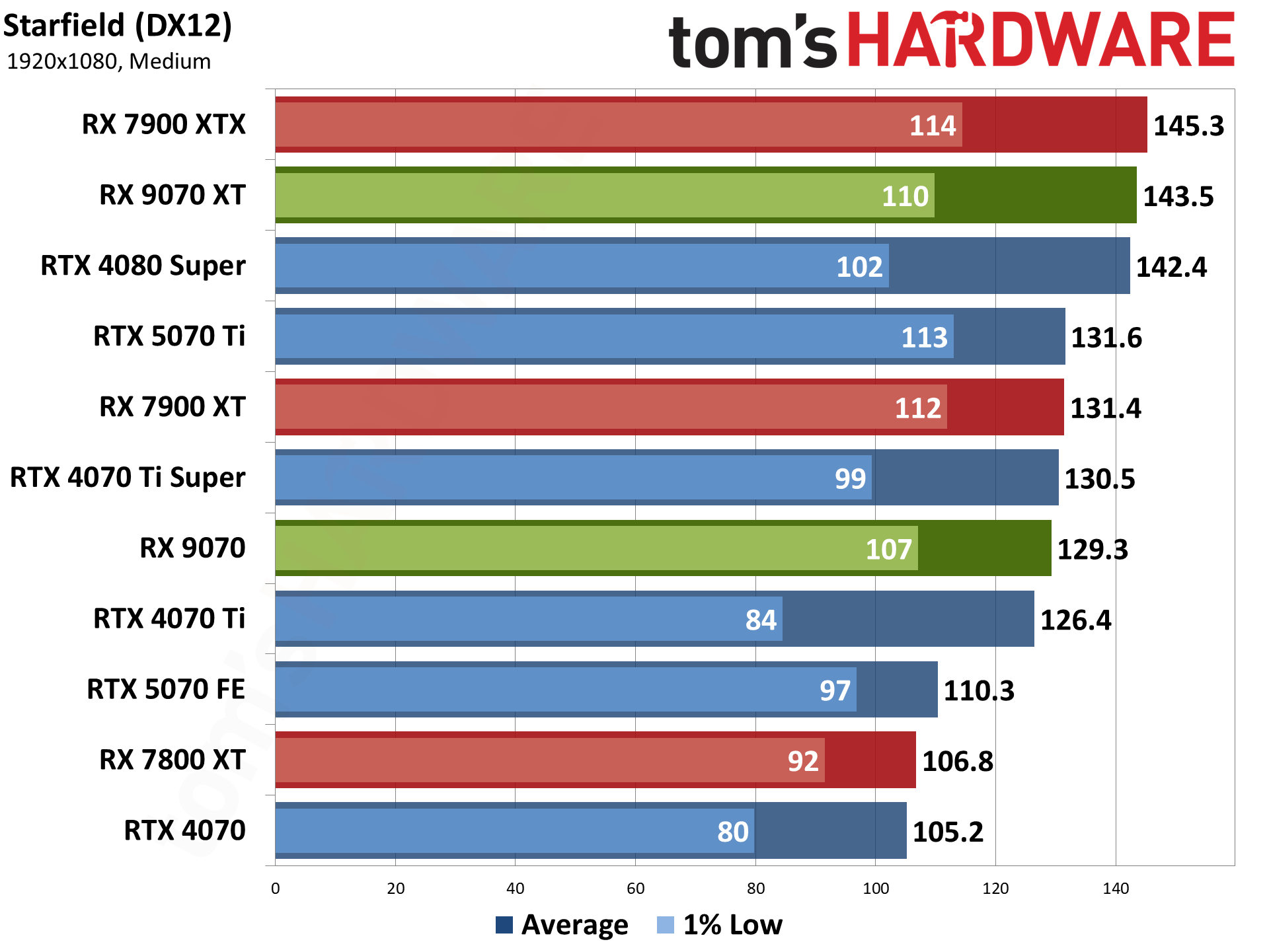

Starfield uses Creation Engine 2, an updated version of the Bethesda engine whose previous release powered the Fallout and Elder Scrolls games. It's another fairly demanding game, and we run around the city of Akila, one of the more taxing locations in the game. It's also a bit more CPU limited, particularly at lower resolutions.

Wrapping things up, Warhammer 40,000: Space Marine 2 is yet another AMD-promoted game. It runs on the Swarm engine and uses DirectX 12, without any support for ray tracing hardware. We use a sequence from the introduction, which is generally less demanding than the various missions you get to later in the game but has the advantage of being repeatable and not having enemies everywhere. Curiously, the RTX 40-series cards are able to hit much higher performance at 1080p than the 50-series and AMD cards.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
