Tom's Hardware Verdict

The HighPoint Rocket 1608A AIC is an excellent storage solution if you have the right platform and drives to spare. It’s impressive, but certainly not for everyone.

Pros

- High peak and sustained performance
- Up to 8 drives with no mobo bifurcation req’d
- Cooling and some nice additional features
- PCIe 5.0 in both directions

Cons

- A little pricey
- Not as full-featured as the 7608A
- Requires a dedicated x16 slot, preferably PCIe 5.0
- Performance issues on Intel platforms


If one high-end SSD isn’t fast enough for you, then how about eight? The HighPoint Rocket 1608A AIC (add-in card) allows you to assemble up to eight PCIe 5.0 SSDs with sixteen lanes of upstream bandwidth. If the Crucial T705’s 14 GB/s just isn’t getting the job done, you can have four or more of them work together for faster transfers and insanely high IOPS. If you can deliver the right workload, that is.

Our last review of an AIC like this from HighPoint was almost seven years ago, but we’ve had our eye on the Rocket 1608A for some months. With more PCIe 5.0 SSDs and platforms coming to the market as time goes on, there’s a natural enthusiast desire to push for more bandwidth, and this AIC delivers. You don't need to use PCIe 5.0 SSDs either — today, we’re using eight PCIe 4.0 Samsung 990 Pros. You could even use a non-PCIe 5.0 slot for that matter, as you can still benefit from steady state performance improvements, higher IOPS, and some management features of the hardware. But for maximum burst performance, you'll want to have a PCIe 5.0 x16 slot available.

The Rocket 1608A is an all-in-one solution, as it provides cooling, connectivity, and an on-board PCIe switch so you don’t have to rely on motherboard bifurcation. The card and switch feature everything from indicator LEDs to deeper features like synthetic mode. It’s quite possible to get 56 GB/s or more with the right hardware, and although the price seems steep, it’s not unreasonable when you consider that you don’t need expensive 8TB drives to reach your capacity goals.

This solution is not for everyone, though, as the Rocket 1608A’s full potential is best realized in an HEDT or enterprise environment where high levels of performance are possible and at times necessary. Ideally, it gets a full x16 PCIe 5.0 slot and a fast CPU that can keep up. Still, it’s worth a look as an interesting product that shows how far solid state storage has come, even on the consumer end of things. If it happens to fit your budget, then it’s worth fully exploring the product and what it can do.

HighPoint Rocket Specifications

| Feature | Description |
|---|---|
| Bus Interface | PCIe 5.0 x16 |
| Port Count | 8x NVMe Ports (eight devices) |
| Connector Type | M.2 (tool-less) |
| Device Form Factors | 2242, 2260, 2280 |
| Cooling | Full-Length Aluminum Heatsink w/Cooling Fan |
| Ext. Power Connector | Yes (PCIe 6-pin connector) |
| AIC Form Factor | Full-Height, Single-Width (284mm x 110mm) |
| Operating System Support | Compatible with all OSes that have native NVMe driver support |
| FRU | Yes (stores VPD data) |
| Hardware Secure Boot | Yes |
| Synthetic Hierarchy | Yes |
| Downstream Port Containment | Yes |
| Read Tracking | Yes |
| OOB Management | BMC and MCTP over PCIe |
| LED Indication | Intelligent, Self-Diagnostic LEDs |
| Working Temp. | 0°C to +55°C |
| Max Power | 82.64W |
| MTBF | 920,585 Hours |
| Price | $1,499 |

The HighPoint Rocket 1608A is a high-end, PCIe 5.0 add-in card (AIC) designed as a multi-drive storage solution for appropriate server and workstation platforms. Its main upstream interface is 16 lanes in width, or x16, with support for up to a PCIe 5.0 link speed. That yields a theoretical maximum bandwidth of 64 GB/s in each direction, though real-world performance will tend to be slightly lower.
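As a rough check on that 64 GB/s figure, the sketch below computes raw per-direction link bandwidth from the per-lane transfer rate, the lane count, and the 128b/130b line encoding that PCIe 3.0 through 5.0 use. It ignores packet and flow-control overhead, so treat it as an upper bound rather than a prediction; the function name is purely illustrative.

```python
# Raw, one-direction PCIe link bandwidth. Only line encoding is modeled;
# TLP headers, flow control, and other protocol overhead are ignored,
# so real-world throughput will be somewhat lower.

GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}  # giga-transfers/s per lane
ENCODING = 128 / 130                      # 128b/130b for PCIe 3.0-5.0


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Per-direction bandwidth in GB/s (decimal, 10^9 bytes)."""
    bits_per_s = GT_PER_LANE[gen] * 1e9 * ENCODING * lanes
    return bits_per_s / 8 / 1e9


if __name__ == "__main__":
    for gen, lanes in [(5, 16), (5, 8), (4, 16), (4, 4)]:
        print(f"PCIe {gen}.0 x{lanes}: {link_gb_per_s(gen, lanes):.1f} GB/s")
```

This prints about 63.0 GB/s for PCIe 5.0 x16; the familiar 64 GB/s is the same calculation without the encoding term. PCIe 4.0 x16 and PCIe 5.0 x8 both work out to roughly 31.5 GB/s.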

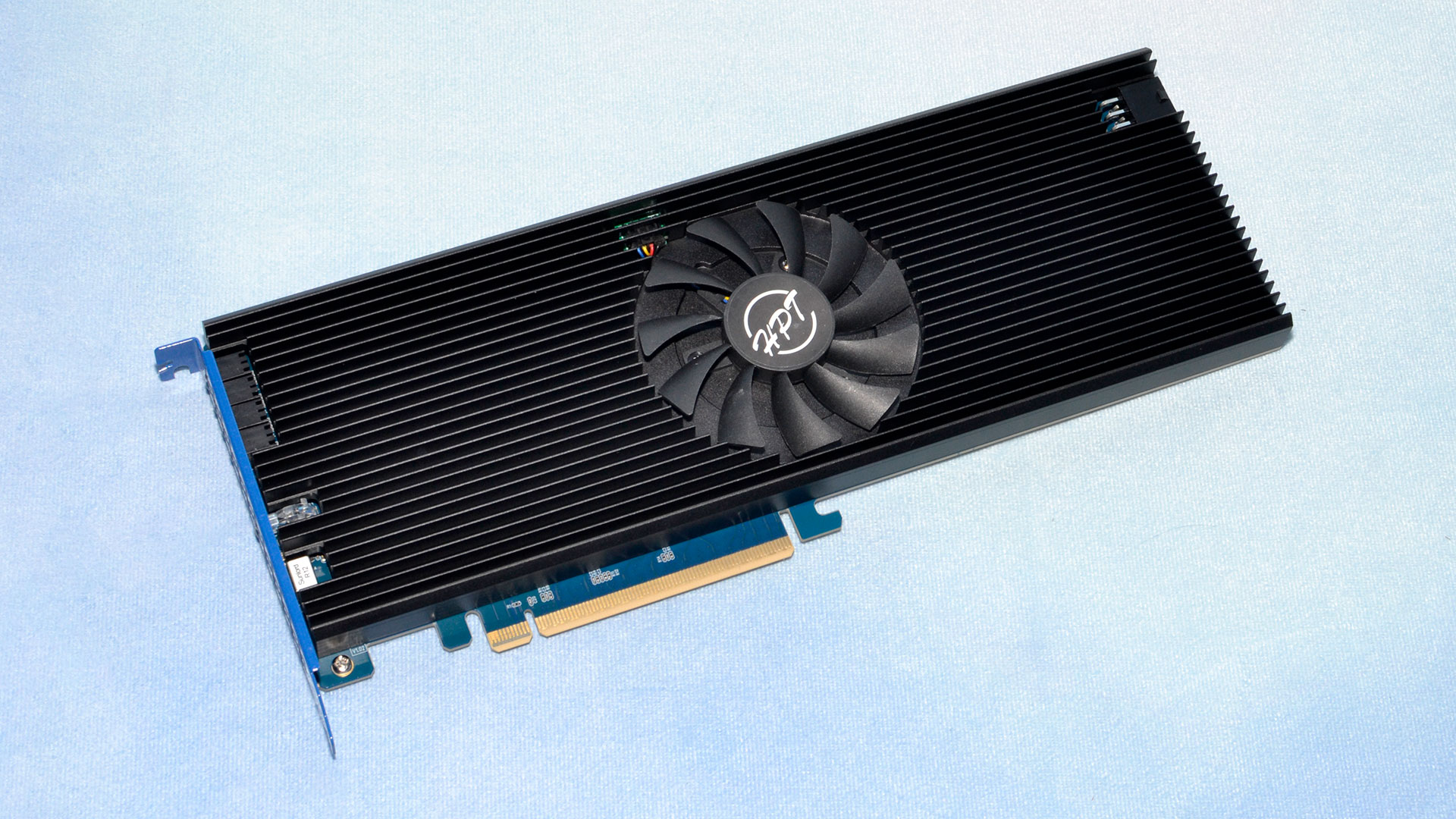

The Rocket 1608A uses a standard full-height form factor, clad with a heatsink, fan, and eight M.2 slots for NVMe SSDs. To help power up to eight SSDs, each of which can potentially draw around 12W at peak, there's a 6-pin PEG power connector at the back of the card, since the 75W from the x16 slot would otherwise be insufficient. The card with a full drive load can pull up to 82.64W.

The AIC also has per-drive LEDs, as well as other features that we'll get into later. The AIC alone will cost you $1,499, $500 less than the 7608A version that has more features and full software support.

HighPoint Rocket Software and Accessories

Nothing special is required to install and run the HighPoint Rocket 1608A, but HighPoint does offer a number of resources and, for certain SKUs, software packages for its products. The Rocket 1608A comes with a quick install guide (QIG) but also has four other user guides available for download from HighPoint’s site, in addition to a compatibility list. These guides include a datasheet, an overall user guide, a port guide, and a guide for heatsink thermal pad replacement. The compatibility list shows tested compatible motherboards and NVMe SSDs — other hardware can work but may not perform optimally.

The Rocket 7608A, a version of the 1608A with HighPoint RAID technology support, can take advantage of HighPoint’s RAID management suites — the Storage Health and Analysis Suite and the RAID OS Driver Suite. This includes GUI, webGUI, and command line interface (CLI) management to set up the RAID features. It also allows for monitoring of the storage drives via the Storage Health Inspector (SHI), which has features such as SSD-specific temperature management, manual fan speed adjustment, sensor and alert controls, notifications, LED information, and more. The 7608A is also the SKU to get if you want OPAL encryption support, although some management features such as BMC and MCTP over PCIe may be supported by the 1608A.

For the Rocket 1608A, software RAID can be set up in the host operating system; on Windows, that means Disk Management, Storage Spaces, or similar. The host OS does require NVMe driver support for this functionality. As with any multi-drive setup, different RAID configurations are possible, including a custom stripe size if desired. HighPoint’s software for the 7608A defaults to 512KiB stripes, but you may want to change this for the 1608A depending on expected file sizes and workload types. Certain drives can also be formatted as 4Kn instead of the default 512e, the former being better suited to the anticipated use of this AIC with multiple high-end SSDs.
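To illustrate why stripe size matters, here is a minimal sketch of generic RAID 0 address mapping, assuming simple round-robin striping across equal-size members. It is not how Storage Spaces or HighPoint's software lays out data internally, and the function names are hypothetical. It shows which member drive holds a given byte and how many drives a single request keeps busy.

```python
# Generic RAID 0 (striping) address mapping across equal-size member drives.
# Stripe units are assigned round-robin: unit 0 -> drive 0, unit 1 -> drive 1, ...

KiB = 1024


def locate(offset: int, stripe: int, drives: int) -> tuple[int, int]:
    """Map an array byte offset to (drive index, byte offset on that drive)."""
    unit = offset // stripe   # which stripe unit the byte falls in
    drive = unit % drives     # round-robin member selection
    row = unit // drives      # full stripes preceding this unit
    return drive, row * stripe + offset % stripe


def drives_touched(offset: int, length: int, stripe: int, drives: int) -> set[int]:
    """Member drives involved in one request of `length` bytes."""
    first = offset // stripe
    last = (offset + length - 1) // stripe
    # A request spanning `drives` or more stripe units touches every member,
    # so there is no need to walk past that many units.
    last = min(last, first + drives - 1)
    return {unit % drives for unit in range(first, last + 1)}


if __name__ == "__main__":
    n = 8
    print(drives_touched(0, 4 * KiB, 512 * KiB, n))          # {0}
    print(len(drives_touched(0, 1024 * KiB, 512 * KiB, n)))  # 2
    print(len(drives_touched(0, 1024 * KiB, 128 * KiB, n)))  # 8
```

With 512KiB stripes, a 1MiB sequential request keeps only two of the eight drives busy, while 128KiB stripes spread it across all eight; a small 4KiB random read lands on a single drive either way. Queue depth and request size change the picture, so this is only intuition for picking a stripe size, not a tuning rule.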

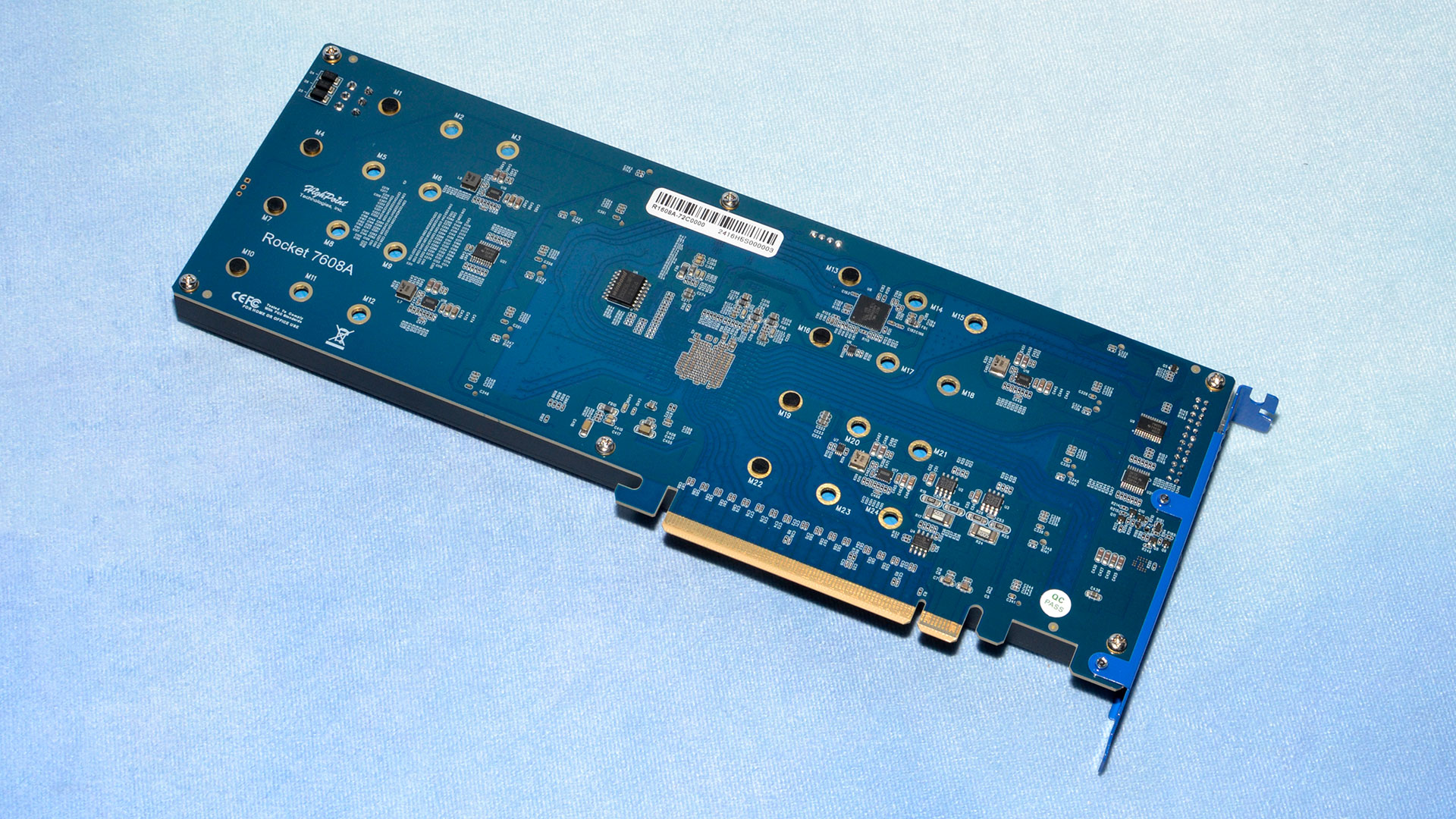

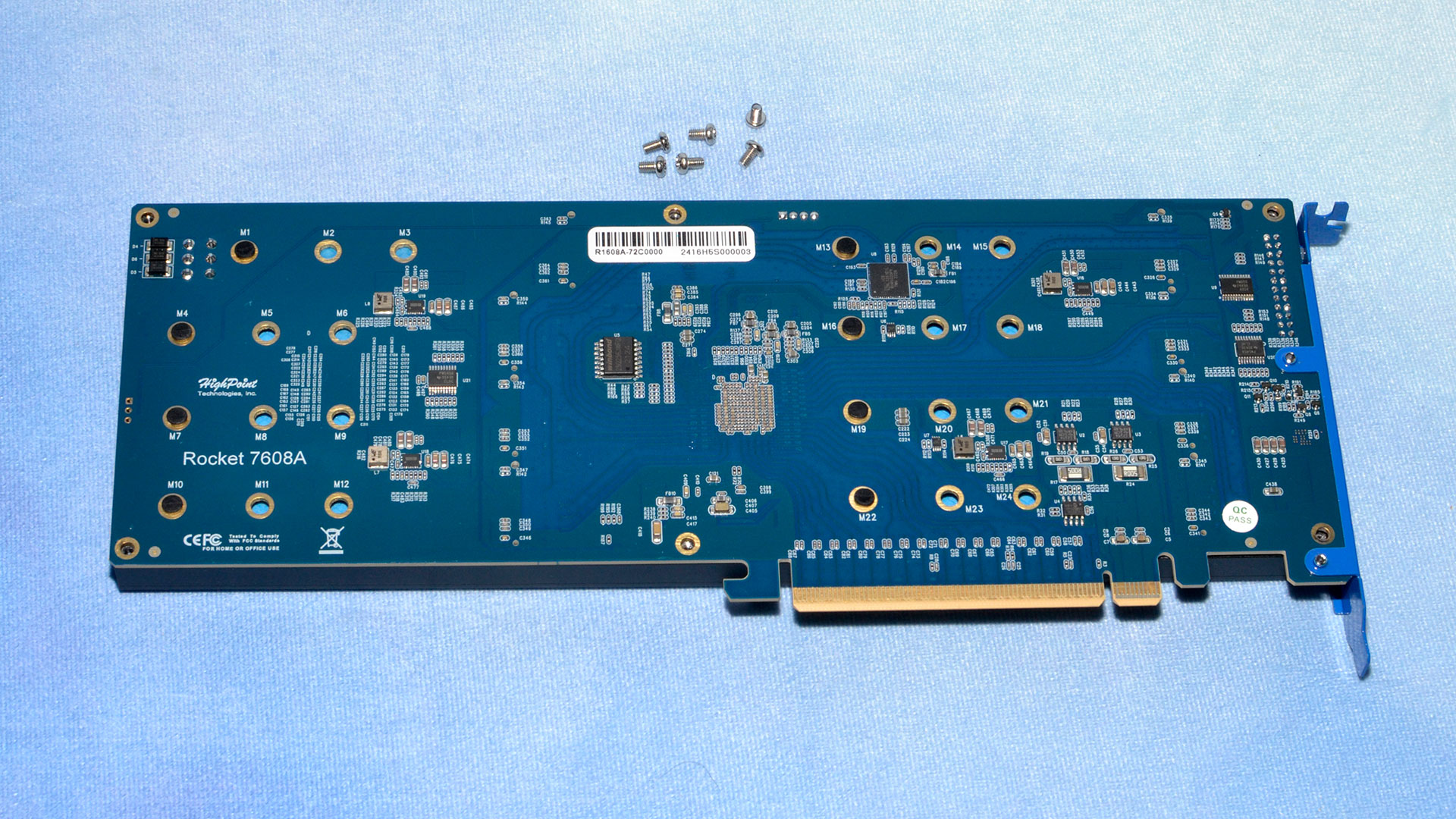

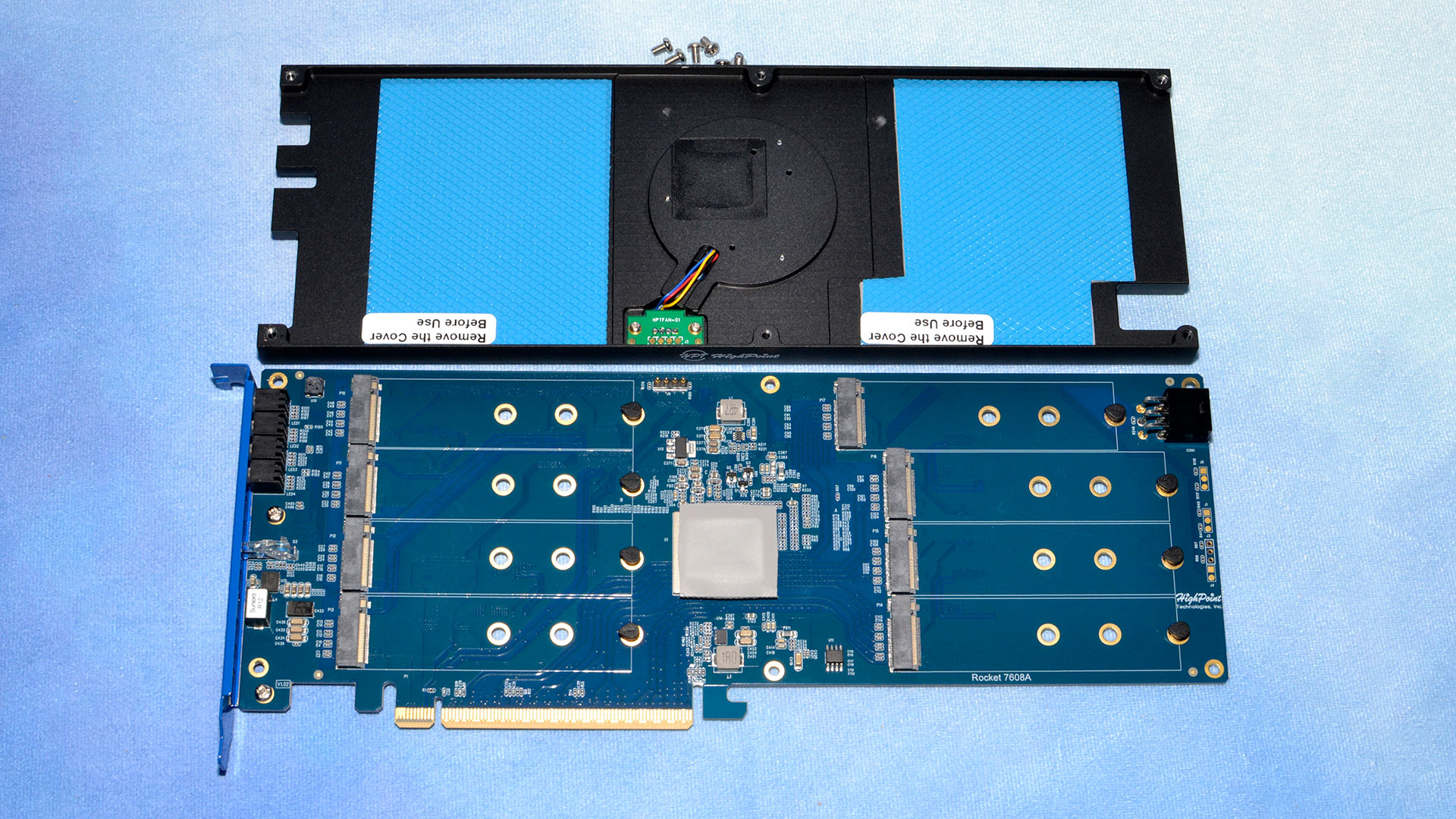

HighPoint Rocket: A Closer Look

The Rocket 1608A is a full-height, single-width AIC (284mm x 110mm) with eight M.2 slots for M.2 2242, 2260, and 2280 SSDs. M.2 2230 SSDs could also be used with an extender. The AIC is actually shorter than many bifurcation AICs, like the ASUS Hyper M.2 x16 Gen5 AIC, which are taller than standard; the Rocket 1608A is not, which can improve compatibility in some cases. The Rocket 1608A has an on-board PCIe switch and does not require motherboard PCIe bifurcation support. It also holds twice as many SSDs as the standard x4/x4/x4/x4 PCIe bifurcation layout allows.

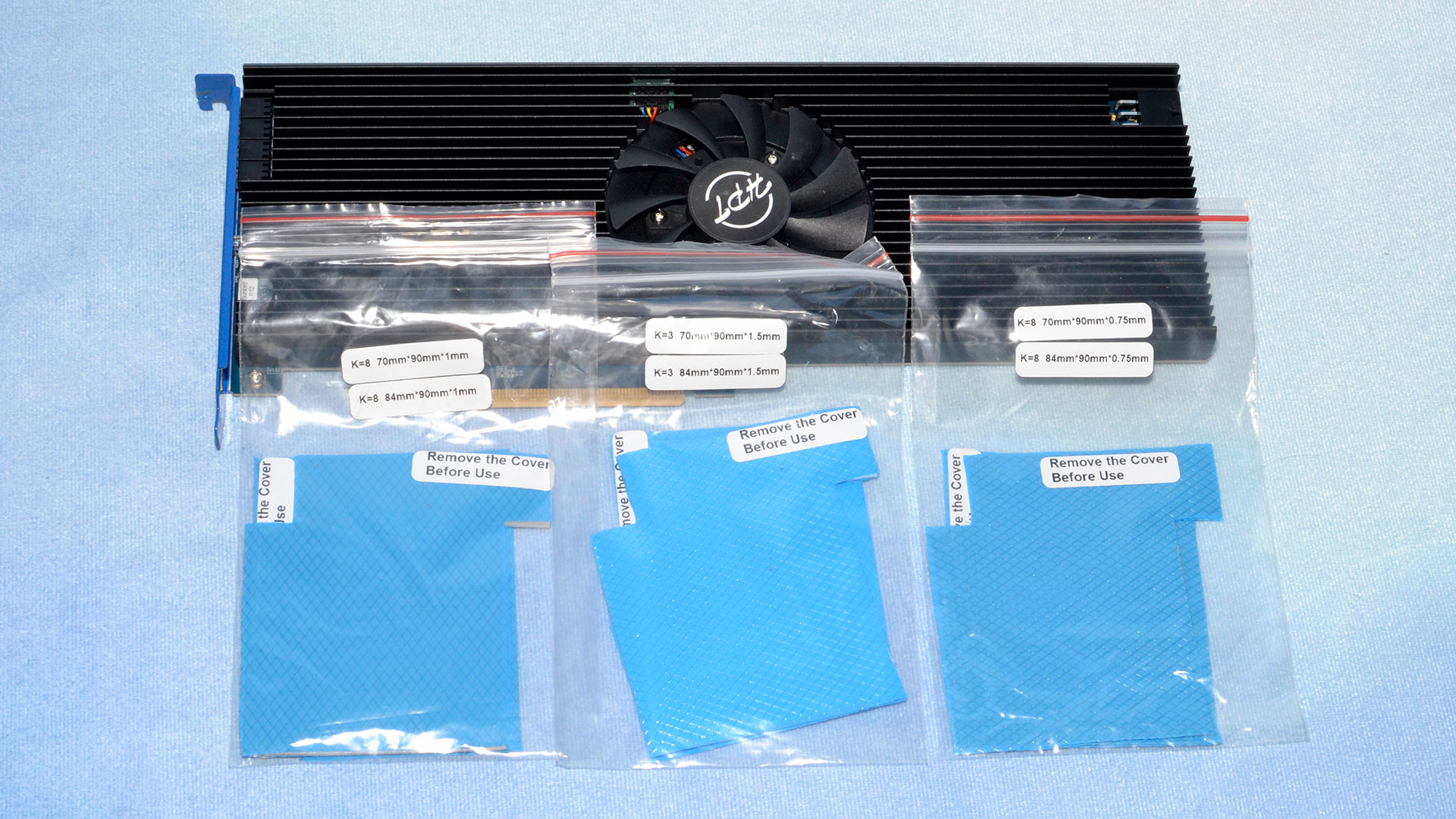

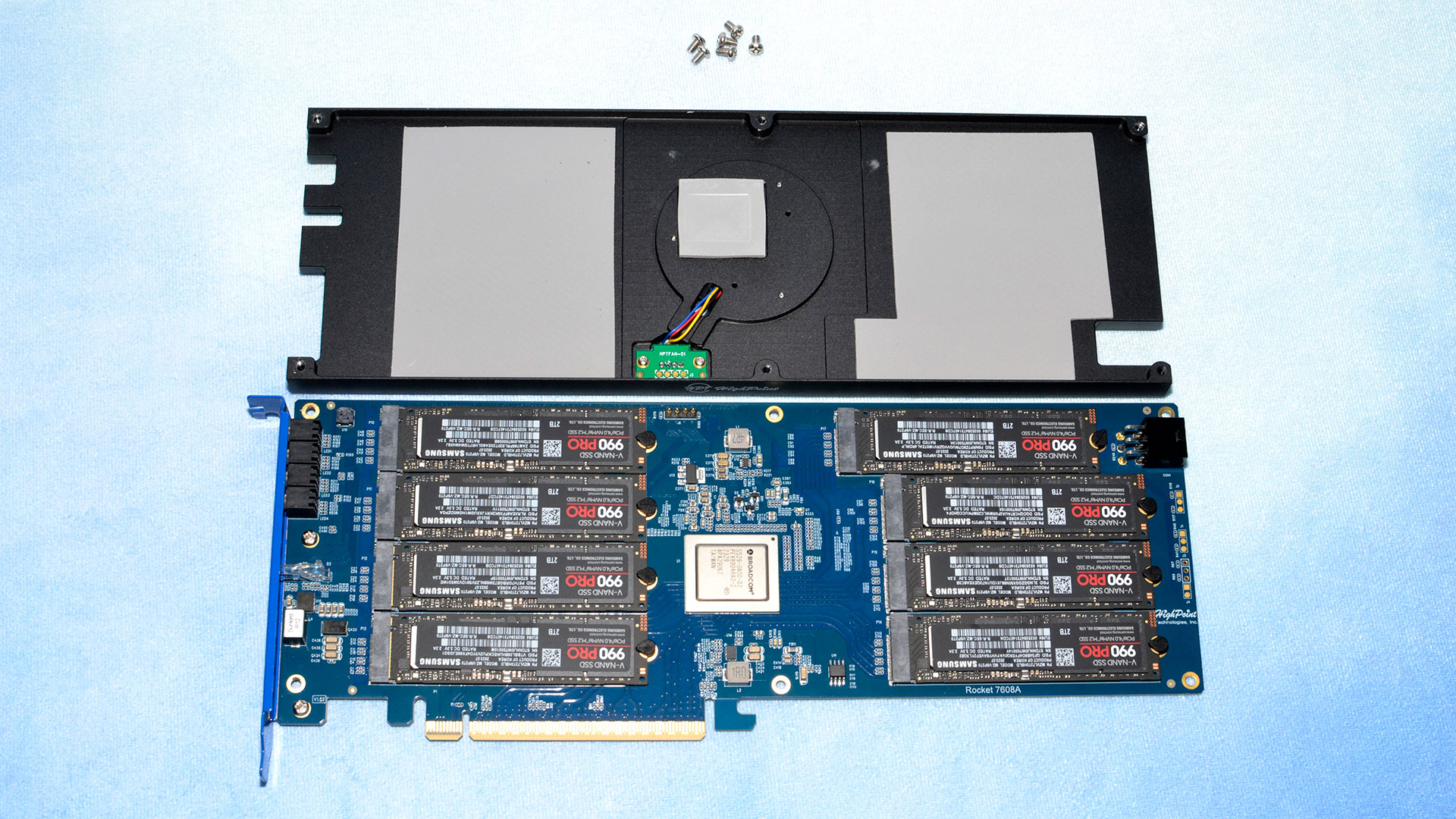

The Rocket 1608A comes with an aluminum heatsink that houses a cooling fan, plus a 6-pin PCIe connector for extra external power, which is an important addition given the possible power draw. The heatsink also comes with replaceable thermal padding for the SSDs, another important consideration since it’s possible to push four or eight SSDs to their limits simultaneously. The thermal padding can accommodate both single-sided (K=3) and double-sided (K=8) solid state drives, with different pad dimensions depending on whether the drives sit on the left or right side of the PCB. For proper operation with the included heatsink, the installed drives should be bare.

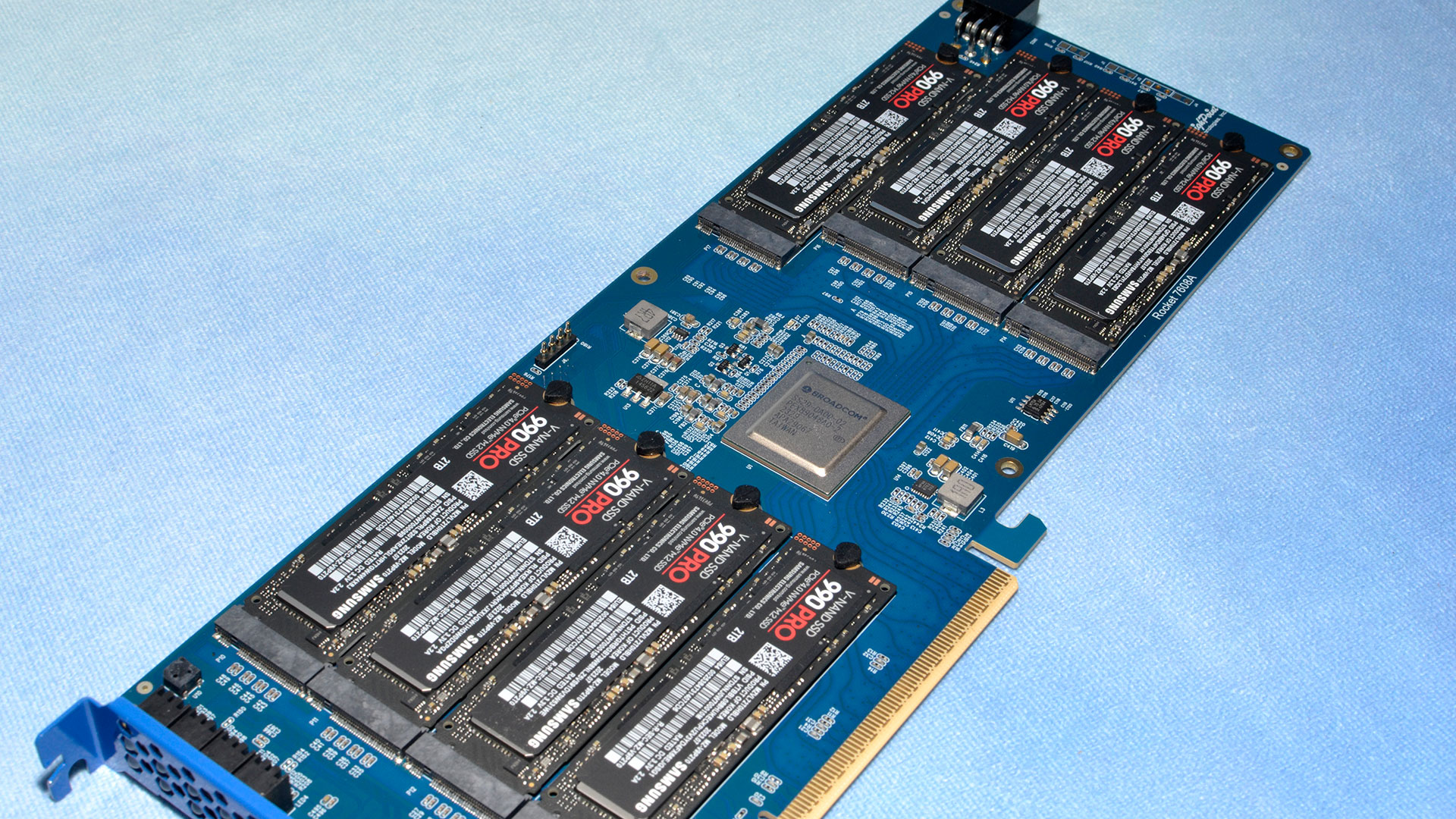

For our purposes, HighPoint sent us eight Samsung 990 Pro 2TB drives to use in testing. While it's possible to get even higher performance with PCIe 5.0 drives, even eight PCIe 4.0 drives can saturate the x16 interface when bursting data. The on-board switch provides a full 32 lanes of downstream PCIe connectivity, but there are still only 16 lanes to the host system. Eight PCIe 4.0 x4 links thus add up to the same theoretical 64 GB/s as the PCIe 5.0 x16 link.

The SSDs are arranged starting with port 1 at the upper left, while facing the front side of the card, then going in a counter-clockwise direction. The M.2 slots are toolless, using rubber retention clasps for installation. Drive status is tracked by eight LEDs on the externally exposed part of the AIC. There are also status and fault LEDs on this part of the card. The LEDs can be solid or may flash in different colors to communicate various drive and card condition information. An optional low-profile bracket is also included, according to the quick install guide.

The main brain of the Rocket 1608A is the Broadcom PEX89048 PCIe switch, part of the PCIe 5.0 PEX89000 series. The “48” denotes the total number of lanes: 16 are upstream lanes, from the card to the host system, and the other 32 are downstream. With those 32 downstream lanes split across eight M.2 slots, each slot gets four lanes at up to PCIe 5.0 speeds.

As noted above, this means it’s possible to reach the maximum amount of bandwidth with either four PCIe 5.0 SSDs, like the Crucial T700 or Crucial T705, or eight PCIe 4.0 SSDs, like the Samsung 990 Pro. HighPoint provided us with the latter configuration. It's possible to use different counts and types of drives, but mixing and matching different drive types could lead to less than optimal results.
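The bandwidth reasoning behind the four-or-eight-drive statement can be sketched as taking the smaller of the upstream link and the sum of the drives' x4 links. This is a back-of-the-envelope model with stated assumptions: raw link rates after 128b/130b encoding, no switch or RAID overhead, and drives fast enough to fill their own links. The function names are illustrative only.

```python
# Back-of-the-envelope ceiling for sequential throughput through a PCIe switch:
# the host can never see more than the upstream link or the sum of the drive links.


def per_lane_gb_per_s(gen: int) -> float:
    """Raw per-lane, per-direction GB/s after 128b/130b encoding (PCIe 3.0-5.0)."""
    gt_per_s = {3: 8, 4: 16, 5: 32}[gen]
    return gt_per_s * (128 / 130) / 8


def switch_ceiling(up_gen: int, up_lanes: int, drive_gen: int,
                   drive_count: int, lanes_per_drive: int = 4) -> float:
    """Smaller of upstream link bandwidth and aggregate downstream bandwidth."""
    upstream = per_lane_gb_per_s(up_gen) * up_lanes
    downstream = per_lane_gb_per_s(drive_gen) * lanes_per_drive * drive_count
    return min(upstream, downstream)


if __name__ == "__main__":
    configs = {
        "4x PCIe 5.0 SSDs, Gen5 x16 slot": (5, 16, 5, 4),
        "8x PCIe 4.0 SSDs, Gen5 x16 slot": (5, 16, 4, 8),
        "4x PCIe 4.0 SSDs, Gen5 x16 slot": (5, 16, 4, 4),
        "8x PCIe 4.0 SSDs, Gen5 x8 link": (5, 8, 4, 8),
    }
    for name, args in configs.items():
        print(f"{name}: ~{switch_ceiling(*args):.1f} GB/s")
```

Four PCIe 5.0 drives and eight PCIe 4.0 drives both reach the same ~63 GB/s x16 ceiling, while only four PCIe 4.0 drives would leave half of it unused, as would running the card on an x8 PCIe 5.0 link.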

Unlike older HighPoint solutions that used the ARM Cortex-R4, an MCU that has also been used on SSDs, the PEX89048 uses a dual-core ARM Cortex-A15 processor. This is much more powerful, and that extra horsepower is needed for the full feature set from Broadcom, the switch's manufacturer. The switch itself pulls a typical 23.7W, and once you add, say, eight Samsung 990 Pro SSDs, it’s clear how HighPoint came up with a number north of 80W. The fan is part of this draw and has its own 4-pin power connection on the PCB.
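The power figures can be sanity-checked with simple arithmetic. The sketch below uses the 82.64W rating from the spec table, the 23.7W typical switch draw quoted above, and the PCIe CEM limits of 75W for an x16 slot and 75W for a 6-pin auxiliary connector. Splitting the remainder evenly across eight drives is an assumption made for illustration, not a HighPoint figure.

```python
# Rough power budget for the Rocket 1608A using figures from this review and
# the 75 W limits for a PCIe x16 slot and a 6-pin auxiliary connector.

SLOT_W = 75.0            # max from a standard x16 slot
AUX_6PIN_W = 75.0        # max from one 6-pin PEG connector
CARD_RATED_W = 82.64     # HighPoint's rating: card plus full drive load
SWITCH_TYPICAL_W = 23.7  # typical PEX89048 draw
DRIVES = 8


def needs_aux_power(rated_w: float = CARD_RATED_W) -> bool:
    """True if the rated draw exceeds what the slot alone can deliver."""
    return rated_w > SLOT_W


def per_drive_share(rated_w: float = CARD_RATED_W,
                    switch_w: float = SWITCH_TYPICAL_W,
                    drives: int = DRIVES) -> float:
    """Watts left per drive (fan included) if the remainder is split evenly."""
    return (rated_w - switch_w) / drives


if __name__ == "__main__":
    print(f"Slot + 6-pin available: {SLOT_W + AUX_6PIN_W:.0f} W")
    print(f"Needs aux power: {needs_aux_power()}")
    print(f"Average share per drive: {per_drive_share():.2f} W")
```

The even split comes to roughly 7.4W per drive with the fan folded in, below the ~12W peak a single SSD can hit, so the rating presumably does not assume every drive peaking at once. Even that worst case (23.7W + 8 x 12W, about 120W) fits within the 150W the slot and 6-pin connector can supply together.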

PCIe switches, which are also used on motherboards for downstream ports, do add some latency that can impact 4KB performance to a small degree. Usually the latency addition is in the 100-150ns range, with the PEX89048 listed at 115ns, which compared to the relatively long flash access latencies — from tens to hundreds of microseconds — is pretty tiny. The platform being used will also influence performance results, with Intel generally having lower latency for 4KB and AMD providing more throughput for bigger I/O. That said, an HEDT configuration based on AMD hardware would be a good baseline for prosumer use.
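To put the 115ns switch latency in perspective, the sketch below expresses it as a percentage of a flash access time. The flash latencies in the loop are illustrative values within the tens-to-hundreds-of-microseconds range mentioned above, and the `traversals` parameter is an assumption: a real NVMe command crosses the switch more than once (doorbell write, command fetch, data transfer, completion), so the single-pass figure understates the overhead somewhat.

```python
# Switch latency relative to the flash access latency of one I/O.

SWITCH_NS = 115.0  # PEX89048 figure quoted in this review


def switch_overhead_pct(flash_us: float, switch_ns: float = SWITCH_NS,
                        traversals: int = 1) -> float:
    """Added switch delay as a percentage of the flash access latency.

    `traversals` is an assumed count of serialized trips through the switch.
    """
    return 100.0 * traversals * switch_ns / (flash_us * 1000.0)


if __name__ == "__main__":
    for flash_us in (10, 50, 100, 500):  # illustrative flash latencies in us
        one = switch_overhead_pct(flash_us)
        four = switch_overhead_pct(flash_us, traversals=4)
        print(f"{flash_us:>4} us flash: +{one:.2f}% (1 pass), +{four:.2f}% (4 passes)")
```

Even at an aggressive 10µs with four serialized passes, the switch adds under 5%, and at 50µs or slower it stays below 1%, consistent with the small 4KB impact described above.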

HighPoint’s Rocket 1608A compatibility guide lists the ASUS ProArt X670E-Creator WIFI as one consumer option, but you would have to forgo a discrete GPU for maximum performance. Systems with only previous-generation PCIe slots will give up some total bandwidth. In either case, a fast CPU may be required to reach maximum performance and IOPS.

This hardware allows Broadcom to offer some extremely useful features, although these are largely optional and are of most benefit in an enterprise environment. One example that HighPoint lists is the field-replaceable unit (FRU) that contains the vital product data (VPD). Upon hardware failure, the FRU can be replaced with minimal downtime and a low risk of data loss, making it useful for mission-critical storage. HighPoint also touts the synthetic mode or synthetic hierarchy, which is when the switch acts like a host for I/O and resource allocation, allowing for more flexibility in storage topology and reducing server CPU overhead.

The PEX89000 series of switches has many other features that can be selectively exposed in products like HighPoint’s various AICs. Some of these are rather powerful, such as the built-in PCIe analyzer for interface diagnostics. Switching and port designation also allow for a lot of flexibility in how storage is accessed. These more complex features make the hardware better suited to very large storage systems, such as those used for AI applications, than to a home rig. Check HighPoint’s and Broadcom’s sites for more information.


Shane Downing is a Freelance Reviewer for Tom’s Hardware US, covering consumer storage hardware.


Amdlova

60 seconds of speed, after that slow as f.

For normal user it's only burn money...

For enterprise user? Nope.

For people with RED cameras, epic win!

thestryker

Would love to know what the issue with the Intel platforms is, as I can't think of any logical reason for it.

Broadcom is the reason why this card is so expensive, as they massively inflated costs on PCIe switches after buying PLX Technology. There haven't been any reasonably priced switches at PCIe 3.0+ since.

I have a pair of PCIe 3.0 x4 dual M.2 cards that I imported from China because the ones available in the US were the same generic cards but twice as much money, and they were still ~$50 per. Dual-slot cards with PCIe switches from western brands are typically $150+ (most of these are x8, with the exception of QNAP, which has some x4).

Amdlova (replying to thestryker)

Can you share the model?

thestryker (replying to Amdlova)

This is the version sold in NA, which has come down in price ~$20 since I got mine (uses a PCIe 3.0 switch):

https://www.newegg.com/p/17Z-00SW-00037?Item=9SIAMKHK4H6020

You should be able to find versions of it on AliExpress for $50-60, and there are also x8 cards in the same price range; just make sure they have the right switch.

The PCIe 2.0 one I got (at the time this was the best pricing; you can find both versions for less elsewhere now): https://www.aliexpress.us/item/3256803722315447.html

I didn't need PCIe 3.0 x4 worth of bandwidth because the drives I'm using are x2, which allowed me to save some more money. ASM2812 is the PCIe 3.0 switch and ASM1812 is the PCIe 2.0 switch.

razor512 (replying to Amdlova)

Sadly, the issue is that many SSD makers stopped offering MLC NAND. Consider the massive drop in write speeds after the pSLC cache runs out. Consider even back in the Samsung 970 Pro days.

As long as the SOC was kept cool, the 1TB 970 Pro (a drive from 2018) could maintain its 2500-2600MB/s (2300MB/s for the 512GB drive) write speeds from 0% to 100% fill.

So far we have not really seen a consumer M.2 NVMe SSD hit even 1.8GB/s steady-state write speeds until they started releasing PCIe 5.0 SSDs, and even then, you only get steady-state write speeds exceeding those of old MLC drives at 2TB capacity, effectively requiring twice the capacity to achieve those speeds.

The Samsung 990 Pro 2TB drops to 1.4GB/s writes when the pSLC cache runs out.

If anything, I would have liked to see SSD makers continue to produce some MLC drives. Prices have come down compared to many years ago: a modern 2TB SSD is still cheaper than the 1TB MLC drives from those days. They could literally make a 1TB drive using faster MLC, charge the price of the 2TB TLC drives, and offer significantly higher steady-state write performance for write-intensive workloads.

JarredWaltonGPU (replying to Amdlova)

This is largely contingent on the SSDs being used. The Samsung 990 Pro 2TB drives (which HighPoint provided) write at up to 6.2 GB/s or so for 25 seconds in Iometer Write Saturation testing, until the pSLC cache is full. Then they drop to ~1.4 GB/s, but with no "folding state" of lower performance. So, best case, eight drives would be able to do 49.6 GB/s burst and then 11.2 GB/s sustained.

The R1608A doesn't quite hit those speeds, but having software RAID0 and a bit of other overhead is acceptable. I'm a bit bummed that we weren't provided eight Crucial T705 drives, or eight Sabrent Rocket 5 drives, because I suspect either one would have sustained ~30 GB/s in our write saturation test.

(Replying to razor512)

You need to look at our many other SSD reviews, where there are tons of drives that sustain way more than 1.4 GB/s. The Samsung 990 Pro simply doesn't compete well with newer drives. Samsung used to be the king of SSDs, and now it's generally just okay. The 980/980 Pro were a bit of a fumble, and the 990 Pro/Evo didn't really recover.

There are Maxio and Phison-based drives that clearly outperform the 990 Pro in a lot of metrics. Granted, most of the drives that sustain 3 GB/s or more are Phison E26 using the same basic hardware, and most of the others are Phison E18 drives. Here's a chart showing one E26 drive (Rocket 5), plus other E18 drives that broke 3 GB/s sustained, with one 4TB Maxio MAP1602 that did 2.6 GB/s:


abufrejoval

It's a real shame that now that we have PCIe switches again, which are capable of 48 lanes at PCIe v5 speeds and can actually be bought, the mainboards that would allow easy 2x8 bifurcation are suddenly gone... they were still the norm on AM4 boards.

Apart from the price of the AIC, I'd just be perfectly happy to sacrifice 8 lanes of PCIe to storage, in fact that's how I've operated many of my workstations for ages using 8 lanes for smart RAID adapters and 8 for the dGPU.

One thing I still keep wondering about and for which I haven't been able to find an answer: do these PCIe switches effectively switch packets or just lanes? And having a look at the maximum size of the packet buffers along the path may also explain the bandwidth limits between AMD and Intel.

Here is what I mean:

If you put a full complement of PCIe v4 NVMe drives on the AIC, you'd only need 8 lanes of PCIe v5 to manage the bandwidth. But it would require fully buffering the packets that are being switched and negotiating PCIe bandwidths upstream and downstream independently.

And from what I've been reading in the official PCIe specs, full buffering of the relatively small packets is actually the default operational mode in PCIe, so packets could arrive at one speed on its input and leave at another on its output.

Yet what I'm afraid is happening is that lanes and PCIe versions/speeds seem to be negotiated both statically and based on the lowest common denominator. So if you have a v3 NVMe drive, it will only ever have its data delivered at the PCIe v5 slot at v3 speeds, even if within the switch four bundles of four v3 lanes could have been aggregated via packet switching to one bundle of four v5 lanes and thus deliver the data from four v3 drives in the same time slot on a v5 upstream bus.

It's the crucial difference between a lane switch and a packet switch, and my impression is that fundamentally packet-switch-capable hardware is reduced to lane-switch performance by conservative bandwidth negotiations, which are made end-point-to-end-point instead of point-to-point.

But I could have gotten it all wrong...

JarredWaltonGPU (replying to thestryker)

I think it's just something to do with not properly providing the full x16 PCIe 5.0 bandwidth to non-GPU devices? Performance was basically half of what I got from the AMD systems. And as noted, this was using non-HEDT hardware in both instances. Threadripper Pro or a newer Xeon (with PCIe 5.0 support) would probably do better.

(Replying to abufrejoval: "If you put a full complement of PCIe v4 NVMe drives on the AIC, you'd only need 8 lanes of PCIe v5 to manage the bandwidth.")

Yeah, that's wrong. There are eight M.2 sockets, each with a 4-lane connection. So you need 32 lanes of PCIe 4.0 bandwidth for full performance... or 16 lanes of PCIe 5.0 offer the same total bandwidth. If you use eight PCIe 5.0 or 4.0 drives, you should be able to hit max burst throughput of ~56 GB/s (assuming 10% overhead for RAID and Broadcom and such). If you only have an x8 PCIe 5.0 link to the AIC, maximum throughput drops to 32 GB/s, and with overhead it would be more like ~28 GB/s.

If you had eight PCIe 3.0 devices, then you could do an x8 5.0 connection and have sufficient bandwidth for the drives. :)

abufrejoval (replying to JarredWaltonGPU)

Well, wrong about forgetting that it's actually 8 slots instead of the usual 4 I get on other devices: happy to live with that mistake!

But right, the aggregation potential would be very nice!

So perhaps the performance difference can be explained by Intel and AMD using different bandwidth negotiation strategies? Intel doing end-to-end lowest common denominator and AMD something better?

HWiNFO can usually tell you what exactly is being negotiated and how big the buffers are at each step.

But I guess it doesn't help with identifying things when bandwidths are actually constantly being renegotiated for power management...