We haven't tested a RAID adapter in quite some time, certainly not with our current SSD test rig. What's more, the HighPoint Rocket 1608A doesn't function ideally in our regular platform, and HighPoint recommends using AMD systems (or at least not the Z790 board that we have). As such, it doesn't make sense to compare performance of the 1608A with regular SSDs.

We did run our normal test suite, just for kicks, but most of the results are unremarkable — DiskBench, PCMark 10, and 3DMark don't hit the higher queue depths necessary to let the hardware shine. In fact, in some cases the use of software RAID0 can even reduce performance for the lighter storage workloads that we normally run.

To that end, we've elected to run a more limited set of tests that can push the hardware to its limits. If you just want an adapter that allows the use of eight M.2 SSDs, whether with RAID or not, the 1608A can also provide that functionality, but it's a rather expensive AIC for such purposes.

Synthetic Testing — CrystalDiskMark

CrystalDiskMark (CDM) is a free and easy-to-use storage benchmarking tool that SSD vendors commonly use to assign performance specifications to their products. It gives us insight into how each device handles different file sizes and at different queue depths.

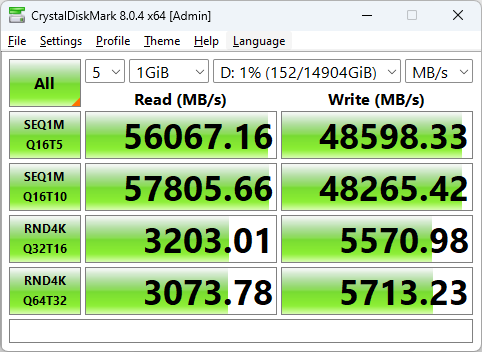

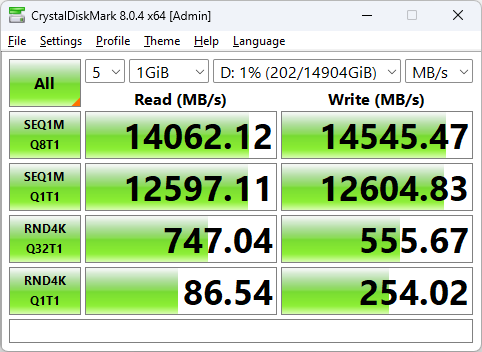

We were able to hit HighPoint's rated speed of 56 GB/s for reads at the recommended queue depth of 80, using a sequential benchmark at Q16T5. This seems to be a relative limit of the hardware (we're using PCIe 4.0 SSDs), as even a fuller array achieved a similar performance level. Sequential writes topped out lower, as expected, at just over 48 GB/s. You may be able to get more performance out of the hardware with faster SSDs than the 990 Pro.

Running an even higher queue depth may also help and, in general, a high queue depth is required to fully make use of the Rocket 1608A. Newer, faster PCIe 5.0 drives, especially with a count in excess of four, should be able to achieve better performance than we're showing here.

This goes for smaller I/O like the coveted 4KB, as well. With a queue depth of 512 — Q32T16 — the array achieved up to 3.2 GB/s for random 4KB reads and, with queue depth of 2048 — Q64T32 — random 4KB writes reached almost 5.7 GB/s. Performance at QD1 sees no real improvement, as expected. Performance here could be improved with a smaller stripe size and 4Kn formatting, but a high queue depth will be needed with your workload to make it worthwhile. On the other hand, if you’re going for pure bandwidth then a larger I/O size is the way to go, but you will never quite hit the theoretical maximum of the interface for reads or writes.
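The Q and T figures quoted in this section multiply to give the total number of outstanding I/Os, and the 4KB throughput numbers can be restated as IOPS. The following is a minimal Python sketch of that arithmetic. The helper names are hypothetical, and the conversion assumes decimal gigabytes (1e9 bytes) and 4,096-byte I/Os, which may not match exactly how CrystalDiskMark reports its figures.

```python
def total_queue_depth(queues: int, threads: int) -> int:
    """Outstanding I/Os for a QxTy setting: per-thread queue depth times thread count."""
    return queues * threads


def iops_from_throughput(gb_per_s: float, io_bytes: int = 4096) -> int:
    """Convert throughput to IOPS, assuming decimal GB (1e9 bytes) and a fixed I/O size."""
    return round(gb_per_s * 1e9 / io_bytes)


# Settings and 4KB results quoted in the text above.
for label, q, t in [("Q16T5", 16, 5), ("Q32T16", 32, 16), ("Q64T32", 64, 32)]:
    print(f"{label}: {total_queue_depth(q, t)} outstanding I/Os")

print(f"3.2 GB/s of 4KB reads  ~ {iops_from_throughput(3.2):,} IOPS")
print(f"5.7 GB/s of 4KB writes ~ {iops_from_throughput(5.7):,} IOPS")
```

Seen this way, the random results correspond to several hundred thousand to over a million I/Os per second, which helps explain why only deeply queued workloads can reach them.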

The number of drives in use can also be relevant for performance and the desired workload. Evenly balancing the workload across n drives is a good goal, and a workload whose queue depth is a multiple of n may help achieve it. With PCIe 4.0 drives, all eight will be pushed to the maximum to make up for the lower individual drive bandwidth. Four PCIe 5.0 drives may not necessarily have the same IOPS horsepower as that configuration, but using more than that many drives results in severely diminishing returns. Uneven drives (different models or capacities) may also throw off performance results.
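To illustrate why a queue depth that is a multiple of the drive count can balance better, here is a simplified Python sketch. It assumes outstanding requests are spread round-robin across the array, one stripe chunk per request, which is an idealized model and not a description of HighPoint's actual RAID0 driver. The function name is hypothetical.

```python
from collections import Counter


def outstanding_per_drive(queue_depth: int, num_drives: int) -> list[int]:
    """Count how many outstanding requests land on each drive under round-robin striping."""
    counts = Counter(i % num_drives for i in range(queue_depth))
    return [counts[d] for d in range(num_drives)]


# Queue depth 80 on eight drives divides evenly; queue depth 12 does not.
print(outstanding_per_drive(80, 8))
print(outstanding_per_drive(12, 8))
```

In this model, a queue depth of 80 gives each of eight drives the same number of requests, while a queue depth of 12 leaves half the drives with twice the work of the others.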

Sustained Write Performance and Cache Recovery

Official write specifications are only part of the performance picture. Most SSDs implement a write cache, which is a fast area of (usually) pseudo-SLC programmed flash that absorbs incoming data. Sustained write speeds can suffer tremendously once the workload spills outside of the cache and into the "native" TLC or QLC flash.

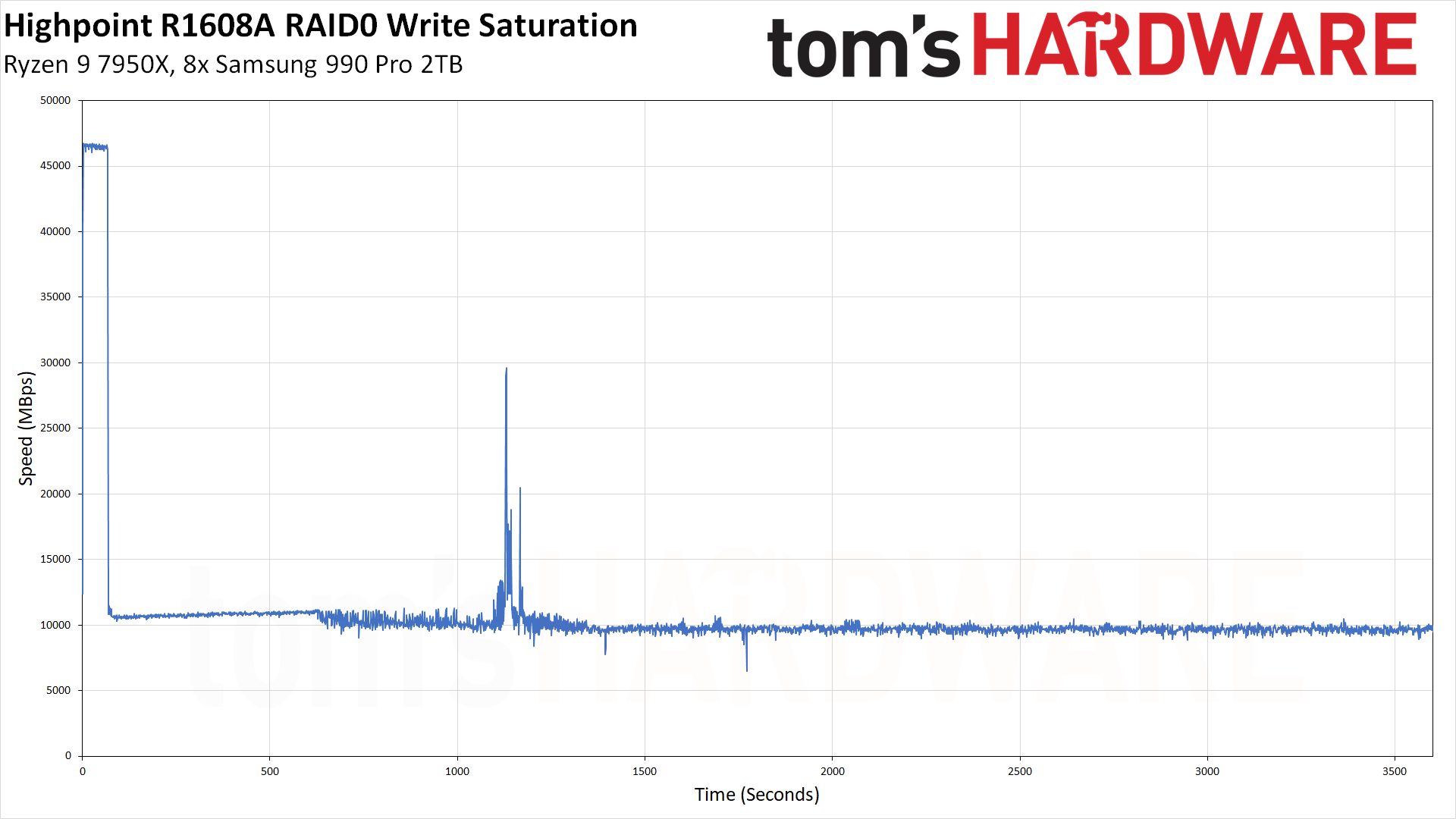

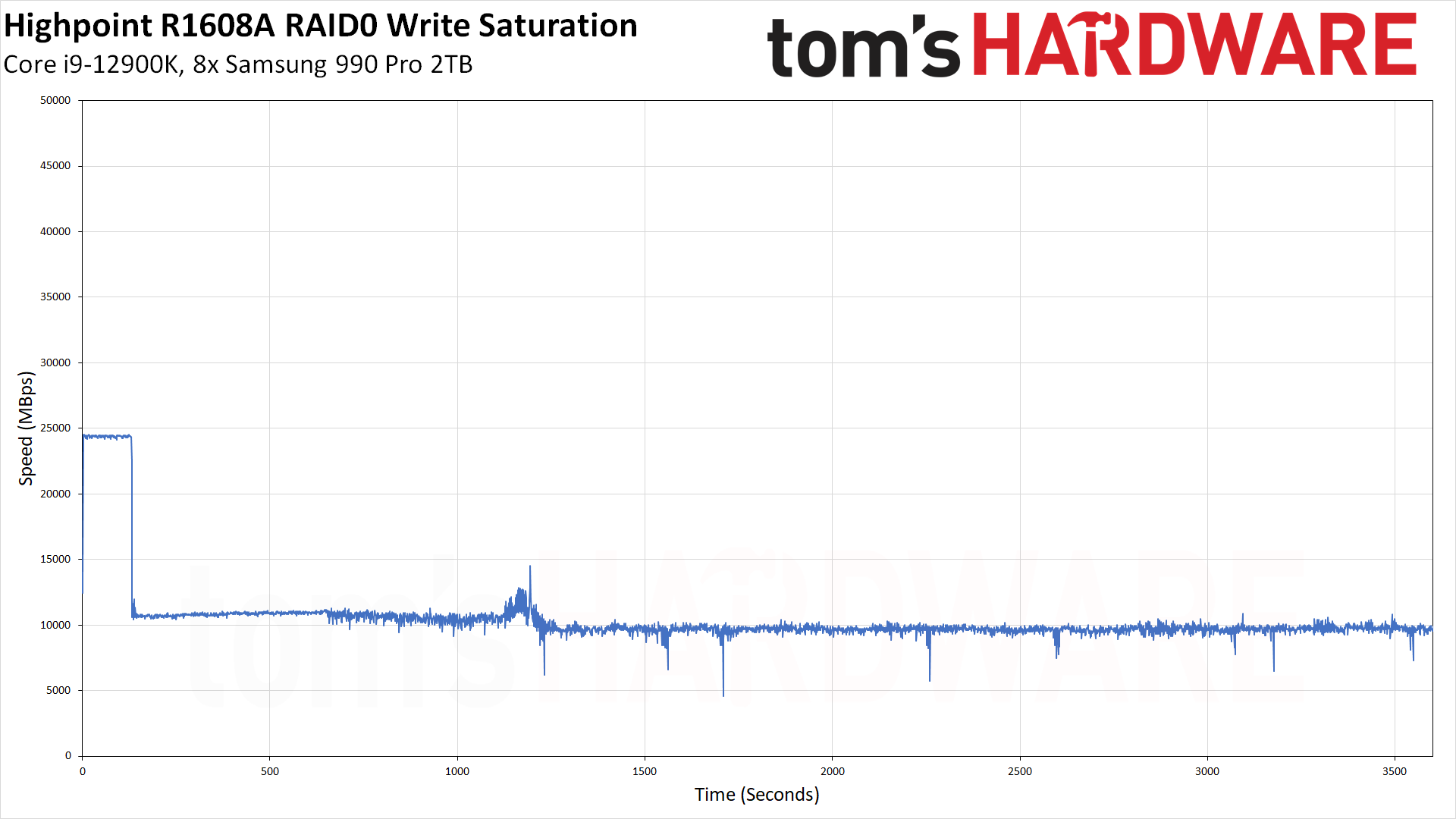

We used Iometer to hammer the HighPoint R1608A with sequential writes for two hours, which shows the size of the write cache and performance after the cache is saturated.

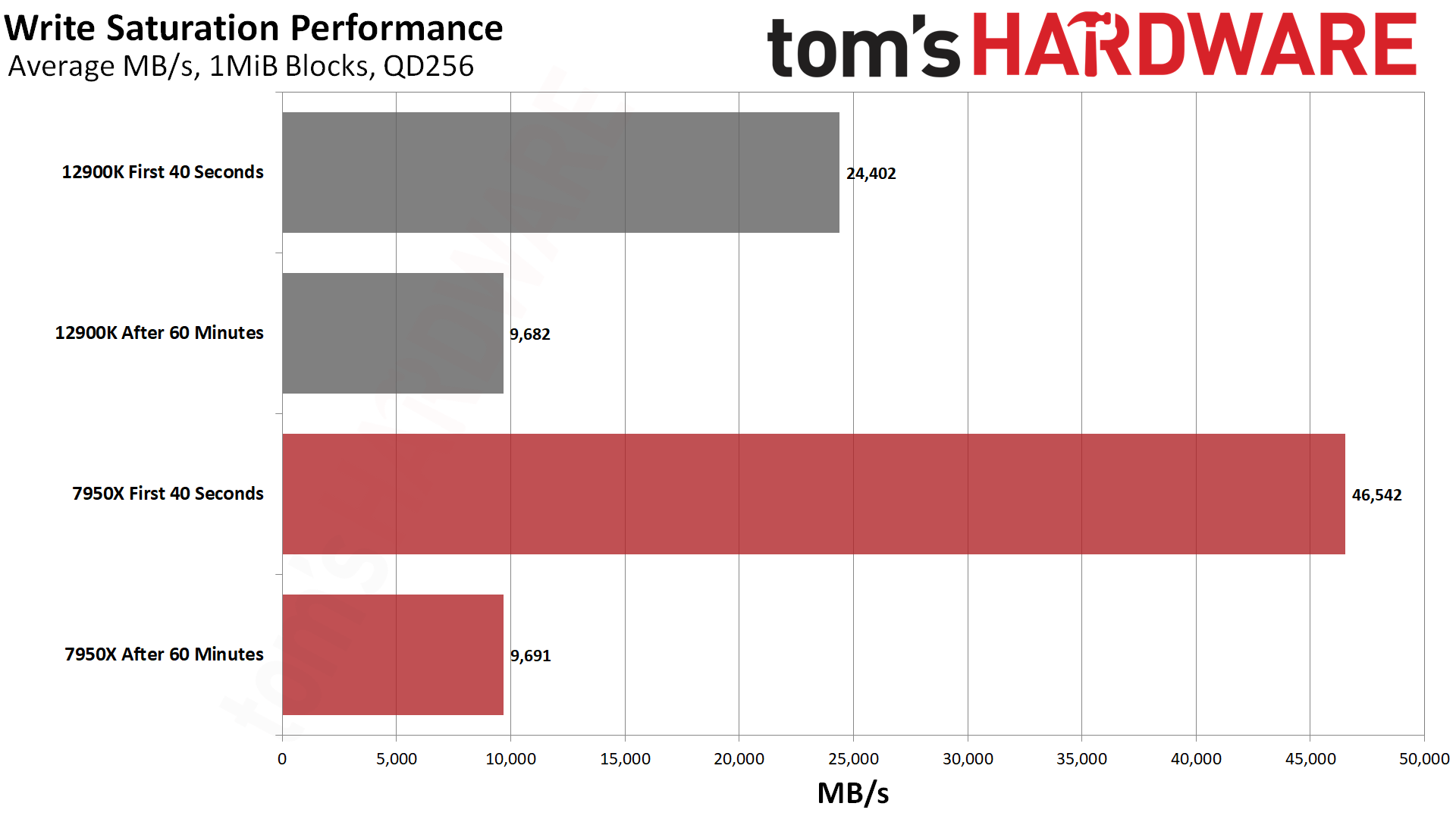

One reason you might want such an expensive array is if you really want insanely high sustained performance. These are consumer SSDs, so we’re still dealing with pSLC caching. With our eight PCIe 4.0 990 Pros this does mean it’s possible to outrun the cache as normal, with sustained pSLC writes up to 45 GB/s or so with TLC dropping to maybe a quarter of this speed. That’s not super amazing but it’s certainly possible to get more.

When using eight PCIe 5.0 drives, especially ones like the Sabrent Rocket 5 with high TLC speeds, sustained performance will be much higher. Additionally, you get the combined cache size of the drives but write speeds are capped after four or so of these drives so the pSLC mode will effectively last longer. As a result, sustained write performance could be quite high in relative terms. That said, performance of this sort requires specific workloads, with high queue depth still being beneficial, so plan accordingly.

We know from our Samsung 990 Pro 2TB review that each SSD can provide up to ~6.2 GB/s of write performance for around 40 seconds, after which the drive drops down to a steady state write throughput of around 1.4 GB/s. Multiply that by eight and we get a theoretical 49.6 GB/s of burst performance followed by a theoretical 11.2 GB/s of steady state throughput, so the Rocket 1608A doesn't quite hit that maximum throughput.
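The scaling estimate here is simple multiplication, and a short Python sketch makes the numbers easy to re-run for other drive counts. It assumes ideal linear RAID0 scaling with no overhead, and the function name is hypothetical. The measured figure is the 46.5 GB/s burst result from the AMD X670E system in this review.

```python
def raid0_theoretical_gbps(per_drive_gbps: float, num_drives: int) -> float:
    """Ideal RAID0 throughput: per-drive throughput times drive count, no overhead."""
    return per_drive_gbps * num_drives


burst = raid0_theoretical_gbps(6.2, 8)    # pSLC burst per 990 Pro 2TB
steady = raid0_theoretical_gbps(1.4, 8)   # post-cache steady state per drive
measured_burst = 46.5                     # GB/s, AMD X670E result in this review

print(f"Theoretical burst:  {burst:.1f} GB/s")
print(f"Theoretical steady: {steady:.1f} GB/s")
print(f"Measured burst as share of theoretical: {measured_burst / burst:.0%}")
```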

This is where using newer, faster drives could potentially help. The Crucial T705 as an example has initial burst performance of 12 GB/s for writes, and most of the Phison E26-based SSDs should be able to max out the x16 link and reach around 56 GB/s. Then performance drops to around 4 GB/s, plus there's a folding state of around 1.25 GB/s that lasted for four minutes in our testing before returning to 4 GB/s. We don't have eight identical PCIe 5.0 drives for testing, but in theory such SSDs could offer steady state performance closer to 25–30 GB/s rather than the 10 GB/s we're seeing with the Samsung 990 Pro.
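With faster drives, the upstream link becomes the limit before the drives do. The Python sketch below models that as a simple cap, using the roughly 56 GB/s ceiling observed in this review as an assumed link limit and the Crucial T705 figures quoted above. It ignores RAID and switch overhead, so real sustained numbers would likely land below the ideal values it prints. The function names are hypothetical.

```python
import math

LINK_CAP_GBPS = 56.0  # practical x16 ceiling seen in this review; an assumption elsewhere


def array_write_gbps(per_drive_gbps: float, num_drives: int,
                     link_cap_gbps: float = LINK_CAP_GBPS) -> float:
    """Ideal array throughput, limited by the upstream link."""
    return min(per_drive_gbps * num_drives, link_cap_gbps)


def drives_to_saturate(per_drive_gbps: float,
                       link_cap_gbps: float = LINK_CAP_GBPS) -> int:
    """Smallest drive count whose combined throughput reaches the link cap."""
    return math.ceil(link_cap_gbps / per_drive_gbps)


# Per-drive write phases quoted for the Crucial T705 (GB/s).
phases = {"burst": 12.0, "steady": 4.0, "folding": 1.25}
for name, per_drive in phases.items():
    print(f"{name}: {array_write_gbps(per_drive, 8):.1f} GB/s with eight drives")

print(f"Drives needed to reach the cap at burst speed: {drives_to_saturate(12.0)}")
```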

Note also how peak throughput plummeted when we tested the HighPoint Rocket 1608A in our regular Intel Z790 test PC. Where the AMD X670E board allowed 46.5 GB/s of burst performance, the Z790 board peaked at just 24.4 GB/s. This is a known issue with certain Intel motherboards, so if you're after maximum throughput you'll again want to look at AMD offerings or Intel Xeon platforms.

Test Bench and Testing Notes

| Component | Details |
|---|---|
| CPU | Ryzen 9 7950X |
| Motherboard | ASRock X670E Taichi |
| RAM | 2x16GB G.Skill Trident Z5 Neo DDR5-6000 CL30 |
| GPU | Integrated Radeon |
| OS SSD | Crucial T700 4TB |
| PSU | be quiet! Dark Power Pro 13 1600W |
| Case | Cooler Master TD500 Mesh V2 |

While our SSD testing normally uses an Alder Lake platform, for these tests we switched to an AMD Zen 4 system. We disable most background applications and services in the OS, such as indexing, Windows updates, and anti-virus, to reduce run-to-run variability.

Most users building a high-end PC that might benefit from an AIC like the HighPoint Rocket 1608A will also want to have a graphics card, and that presents a problem on mainstream PCs. Only the primary x16 slot provides a full 16 lanes of PCIe 5.0 connectivity, and if you populate a secondary x16 slot, you'll either get PCIe 4.0 speeds or the main slot will get bifurcated into x8/x8 or x8/x4/x4.

That's why HEDT platforms that have more than 24 lanes of PCIe 5.0 connectivity are recommended for cards like the Rocket 1608A. You can still potentially get up to PCIe 5.0 x8 speeds of 32 GB/s, much faster than any single M.2 slot, but in that case you may not even want to bother with a more expensive PCIe 5.0 compatible AIC.
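The link speeds mentioned here follow from the per-lane transfer rate of each PCIe generation. The Python sketch below reproduces the nominal figures used in this review (32 GB/s for x8 and 64 GB/s for x16 at PCIe 5.0) and also shows the slightly lower value after 128b/130b line encoding. It does not account for packet and protocol overhead, which reduces real-world throughput further. The function names are hypothetical.

```python
# Per-lane transfer rates in GT/s for the generations relevant to this card.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding used by PCIe 3.0 and later


def nominal_gbps(gen: int, lanes: int) -> float:
    """Nominal one-direction bandwidth in GB/s: GT/s per lane times lanes, 8 bits per byte."""
    return GT_PER_S[gen] * lanes / 8


def encoded_gbps(gen: int, lanes: int) -> float:
    """Bandwidth after line encoding, before packet and protocol overhead."""
    return nominal_gbps(gen, lanes) * ENCODING_EFFICIENCY


for gen, lanes in [(5, 16), (5, 8), (4, 16), (4, 4)]:
    print(f"PCIe {gen}.0 x{lanes}: nominal {nominal_gbps(gen, lanes):.0f} GB/s, "
          f"after encoding {encoded_gbps(gen, lanes):.1f} GB/s")
```

The same arithmetic shows why eight PCIe 4.0 x4 drives (32 lanes downstream) line up with a single PCIe 5.0 x16 upstream link: both work out to the same nominal bandwidth.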

HighPoint Rocket Bottom Line

The HighPoint Rocket 1608A is an amazingly fast storage solution when configured with the right drives and the right platform. It’s flexible enough to be used less efficiently, depending on your hardware, but requires a significant investment to reach its full potential. Many of the Rocket 1608A’s features will be overkill for home use and only make sense in an enterprise setting. The power draw alone means planning ahead. That said, it’s impossible to deny the high performance levels that are possible, if your workload has sufficient queue depth.

In CrystalDiskMark, we reached over 56 GB/s. That sounds like a lot, but we’ve seen this 56 GB/s number achieved before with the Apex X16 Rocket 5 Destroyer, which is based on a different switch and can use up to 16 downstream SSDs. In that case, the switch is a Switchtec PM50084 from Microchip, which can address more lanes but fundamentally is still limited to 16 PCIe 5.0 upstream lanes in this type of AIC configuration. That’s certainly overkill and, while the PM50084 is even more featureful than the Rocket 1608A’s Broadcom-based solution, the latter makes more sense for HEDT use.

The price of $1,499 sounds like a lot until you look back and price high-end 8TB consumer NVMe SSDs: the Rocket 1608A can be paired with multiple smaller, less expensive drives and achieve outstanding performance, without the drawbacks of QLC NAND or higher cost 8TB drives. The eight Samsung 990 Pro 2TB SSDs we tested combined only cost around $1,360, less than the AIC itself. But we were only testing the card; we suspect most people and companies planning to use such an adapter will be more interested in populating the card with eight 4TB drives.

Adding just one drive to a system with an adapter is no big deal. Adding more can bring frustration, as many of the AICs on the market require motherboard PCIe bifurcation support. This, among other things, usually precludes the use of a discrete GPU. It’s possible to get a slower PCIe switch with up to four drives, but if you need PCIe 4.0 or 5.0 performance from the drives and want up to eight drives — plus the option of up to 64 GB/s bandwidth upstream — then the Rocket 1608A makes a lot of sense. At least, if you’re looking at M.2 SSDs and do not require the full suite of management features and software. HighPoint has different SKUs for more serious projects.

The Rocket 1608A isn’t for everyone but its presence in HighPoint’s lineup means that PCIe 5.0 storage is starting to mature. More efficient drives that will work in laptops, based on Phison’s E31T controller, were shown off at Computex, as were drives based on non-Phison controllers that can push PCIe 5.0 bandwidth. Even without them, fast 4.0 drives can be assembled to get the full upstream bandwidth out of the Rocket 1608A. For enthusiasts who want to add more M.2 drives or enjoy high-performance storage on their servers, more options are coming to the market, and even the relatively limited Rocket 1608A is a powerful addition in its own right — especially with faster platforms becoming the norm.


Shane Downing is a Freelance Reviewer for Tom’s Hardware US, covering consumer storage hardware.

Amdlova:

60 seconds of speed after that slow as f.

For normal user it's only burn money...

For enterprise user? Nops

For people with red cameras epic win!

thestryker:

Would love to know what the issue with the Intel platforms is as I can't think of any logical reason for it.

Broadcom is the reason why this card is so expensive as they massively inflated costs on PCIe switches after buying PLX Technology. There haven't been any reasonable priced switches at PCIe 3.0+ since.

I have a pair of PCIe 3.0 x4 dual M.2 cards that I imported from China because the ones available in the US were the same generic cards but twice as much money and they were still ~$50 per. Dual slot cards with PCIe switches from western brands are typically $150+ (most of these are x8 with the exception of QNAP who has some x4).

Amdlova:

thestryker said: "Would love to know what the issue with the Intel platforms is as I can't think of any logical reason for it. Broadcom is the reason why this card is so expensive as they massively inflated costs on PCIe switches after buying PLX Technology. There haven't been any reasonable priced switches at PCIe 3.0+ since. I have a pair of PCIe 3.0 x4 dual M.2 cards that I imported from China because the ones available in the US were the same generic cards but twice as much money and they were still ~$50 per. Dual slot cards with PCIe switches from western brands are typically $150+ (most of these are x8 with the exception of QNAP who has some x4)."

Can you share the model?

thestryker:

Amdlova said: "Can you share the model?"

This is the version sold in NA which has come down in price ~$20 since I got mine (uses PCIe 3.0 switch):

https://www.newegg.com/p/17Z-00SW-00037?Item=9SIAMKHK4H6020

You should be able to find versions of it on AliExpress for $50-60 and there are also x8 cards in the same price range, just make sure they have the right switch.

The PCIe 2.0 one I got at the time this was the best pricing (you can find both versions for less elsewhere now): https://www.aliexpress.us/item/3256803722315447.html

I didn't need PCIe 3.0 x4 worth of bandwidth because the drives I'm using are x2 which allowed me to save some more money. ASM2812 is the PCIe 3.0 switch and the ASM1812 is the PCIe 2.0 switch.

razor512:

Amdlova said: "60 seconds of speed after that slow as f. For normal user it's only burn money... For enterprise user? Nops. For people with red cameras epic win!"

Sadly the issue is many SSD makers stopped offering MLC NAND. Consider the massive drop in write speeds after the pSLC cache runs out. Consider even back in the Samsung 970 Pro days. As long as the SOC was kept cool, the 1TB 970 Pro (a drive from 2018) could maintain its 2500-2600MB/s (2300MB/s for the 512GB drive) write speeds from 0% to 100% fill.

So far we have not really seen a consumer m.2 NVMe SSD have steady state write speeds hitting even 1.8GB/s steady state until they started releasing PCIe 5.0 SSDs, and even then, you are only getting steady state write speeds exceeding that of old MLC drives at 2TB capacity; effectively requiring twice the capacity to achieve those speeds.

The Samsung 990 Pro 2TB drops to 1.4GB/s writes when the pSLC cache runs out.

If anything, I would have liked to see SSD makers continue to produce some MLC drives, since prices have come down, compared to many years ago, a modern 2TB SSD is still cheaper than 1TB drives MLC drives from those days. They could literally make a 1TB drive using faster MLC and charge the price of the 2TB TLC drives, and offer significantly higher steady state write performance for write intensive workloads.

JarredWaltonGPU:

Amdlova said: "60 seconds of speed after that slow as f. For normal user it's only burn money... For enterprise user? Nops. For people with red cameras epic win!"

This is largely contingent on the SSDs being used. Samsung 990 Pro 2TB (which is what HighPoint provided) write at up to 6.2 GB/s or so for 25 seconds in Iometer Write Saturation testing, until the pSLC is full. Then they drop to ~1.4 GB/s, but with no "folding state" of lower performance. So best-case eight drives would be able to do 49.6 GB/s burst, and then 11.2 GB/s sustained.

The R1608A doesn't quite hit those speeds, but having software RAID0 and a bit of other overhead is acceptable. I'm a bit bummed that we weren't provided eight Crucial T705 drives, or eight Sabrent Rocket 5 drives, because I suspect either one would have sustained ~30 GB/s in our write saturation test.

razor512 said: "Sadly the issue is many SSD makers stopped offering MLC NAND. Consider the massive drop in write speeds after the pSLC cache runs out. Consider even back in the Samsung 970 Pro days. As long as the SOC was kept cool, the 1TB 970 Pro (a drive from 2018) could maintain its 2500-2600MB/s (2300MB/s for the 512GB drive) write speeds from 0% to 100% fill. So far we have not really seen a consumer m.2 NVMe SSD have steady state write speeds hitting even 1.8GB/s steady state until they started releasing PCIe 5.0 SSDs, and even then, you are only getting steady state write speeds exceeding that of old MLC drives at 2TB capacity; effectively requiring twice the capacity to achieve those speeds. The Samsung 990 Pro 2TB drops to 1.4GB/s writes when the pSLC cache runs out. If anything, I would have liked to see SSD makers continue to produce some MLC drives, since prices have come down, compared to many years ago, a modern 2TB SSD is still cheaper than 1TB drives MLC drives from those days. They could literally make a 1TB drive using faster MLC and charge the price of the 2TB TLC drives, and offer significantly higher steady state write performance for write intensive workloads."

You need to look at our many other SSD reviews, where there are tons of drives that sustain way more than 1.4 GB/s. The Samsung 990 Pro simply doesn't compete well with newer drives. Samsung used to be the king of SSDs, and now it's generally just okay. 980/980 Pro were a bit of a fumble, and 990 Pro/Evo didn't really recover.

There are Maxio and Phison-based drives that clearly outperform the 990 Pro in a lot of metrics. Granted, most of the drives that sustain 3 GB/s or more are Phison E26 using the same basic hardware, and most of the others are Phison E18 drives. Here's a chart showing one E26 drive (Rocket 5), plus other E18 drives that broke 3 GB/s sustained, with one 4TB Maxio MAP1602 that did 2.6 GB/s:

[Chart attachment]

abufrejoval:

It's a real shame that now that we have PCIe switches again, which are capable of 48 PCIe v5 speeds and can actually be bought, the mainboards which would allow easy 2x8 bifurcation are suddenly gone... they were still the norm on AM4 boards.

Apart from the price of the AIC, I'd just be perfectly happy to sacrifice 8 lanes of PCIe to storage, in fact that's how I've operated many of my workstations for ages using 8 lanes for smart RAID adapters and 8 for the dGPU.

One thing I still keep wondering about and for which I haven't been able to find an answer: do these PCIe switches effectively switch packets or just lanes? And having a look at the maximum size of the packet buffers along the path may also explain the bandwidth limits between AMD and Intel.

Here is what I mean:

If you put a full complement of PCIe v4 NVMe drives on the AIC, you'd only need 8 lanes of PCIe v5 to manage the bandwidth. But it would require fully buffering the packets that are being switched and negotiating PCIe bandwidths upstream and downstream independently.

And from what I've been reading in the official PCIe specs, full buffering of the relatively small packets is actually the default operational mode in PCIe, so packets could arrive at one speed on its input and leave at another on its output.

Yet what I'm afraid is happening is that lanes and PCIe versions/speeds seem to be negotiated both statically and based on the lowest common denominator. So if you have a v3 NVMe drive, it will only ever have its data delivered at the PCIe v5 slot at v3 speeds, even if within the switch four bundles of four v3 lanes could have been aggregated via packet switching to one bundle of four v5 lanes and thus deliver the data from four v3 drives in the same time slot on a v5 upstream bus.

It's the crucial difference between a lane switch and a packet switch and my impression is that a fundamentally packet switch capable hardware is reduced to lane switch performance by conservative bandwidth negotiations, which are made end-point-to-end-point instead of point-to-point.

But I could have gotten it all wrong...

JarredWaltonGPU:

thestryker said: "Would love to know what the issue with the Intel platforms is as I can't think of any logical reason for it."

I think it's just something to do with not properly providing the full x16 PCIe 5.0 bandwidth to non-GPU devices? Performance was basically half of what I got from the AMD systems. And as noted, using non-HEDT hardware in both instances. Threadripper Pro or a newer Xeon (with PCIe 5.0 support) would probably do better.

abufrejoval said: "Here is what I mean: If you put a full complement of PCIe v4 NVMe drives on the AIC, you'd only need 8 lanes of PCIe v5 to manage the bandwidth. But it would require fully buffering the packets that are being switched and negotiating PCIe bandwidths upstream and downstream independently."

Yeah, that's wrong. There are eight M.2 sockets, each with a 4-lane connection. So you need 32 lanes of PCIe 4.0 bandwidth for full performance... or 16 lanes of PCIe 5.0 offer the same total bandwidth. If you use eight PCIe 5.0 or 4.0 drives, you should be able to hit max burst throughput of ~56 GB/s (assuming 10% overhead for RAID and Broadcom and such). If you only have an x8 PCIe 5.0 link to the AIC, maximum throughput drops to 32 GB/s, and with overhead it would be more like ~28 GB/s.

If you had eight PCIe 3.0 devices, then you could do an x8 5.0 connection and have sufficient bandwidth for the drives. :)

abufrejoval:

JarredWaltonGPU said: "Yeah, that's wrong. There are eight M.2 sockets, each with a 4-lane connection. So you need 32 lanes of PCIe 4.0 bandwidth for full performance... or 16 lanes of PCIe 5.0 offer the same total bandwidth. If you use eight PCIe 5.0 or 4.0 drives, you should be able to hit max burst throughput of ~56 GB/s (assuming 10% overhead for RAID and Broadcom and such). If you only have an x8 PCIe 5.0 link to the AIC, maximum throughput drops to 32 GB/s, and with overhead it would be more like ~28 GB/s. If you had eight PCIe 3.0 devices, then you could do an x8 5.0 connection and have sufficient bandwidth for the drives. :)"

Well wrong about forgetting that it's actually 8 slots instead of the usual 4 I get on other devices: happy to live with that mistake!

But right, in terms of the aggregation potential would be very nice!

So perhaps the performance difference can be explained by Intel and AMD using different bandwidth negotiation strategies? Intel doing end-to-end lowest common denominator and AMD something better?

HWinfo can usually tell you what exactly is being negotiated and how big the buffers are at each step.

But I guess it doesn't help identifying things when bandwidths are actually constantly being renegotiated for power management...