Display Testing Explained: How We Test PC Monitors

Our display benchmarks help you decide what monitor to put on your desktop.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.


HDR Testing

To test HDR-capable monitors, we follow a similar procedure to our benchmarks for color, grayscale and gamma.

Calman has a special workflow designed for HDR10 displays that measures grayscale in 5% brightness increments, the EOTF curve and color saturation tracking for the DCI-P3 and BT.2020 color gamuts.

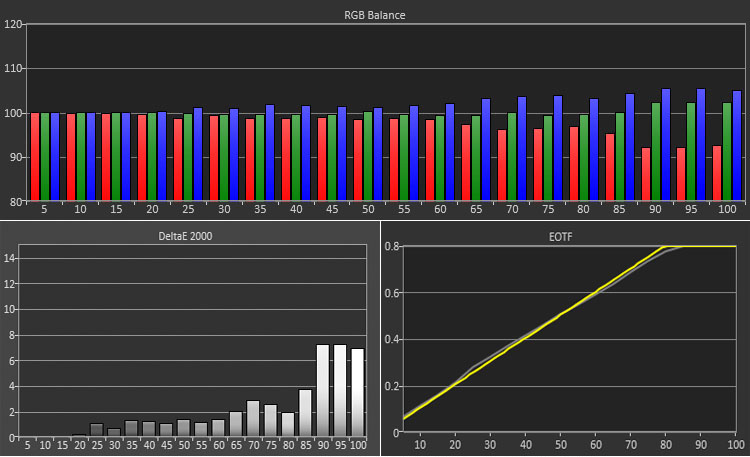

Grayscale accuracy for HDR and SDR is measured the same way. The standard white point for both signal types is D65, roughly 6500 Kelvin, and our test determines how close a monitor comes to that color temperature at all brightness levels. Above, you can see the errors in both the RGB chart and the Delta E graph. Any brightness level with an error above 3dE is visibly off.
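To make the two checks concrete, here is a minimal Python sketch. It is not part of the Calman workflow: it assumes you have a CIE 1931 xy reading for each gray step and uses McCamy's approximation to estimate color temperature, then flags any step whose Delta E exceeds the 3dE threshold mentioned above. The function names and the sample readings are hypothetical.

```python
def cct_mccamy(x: float, y: float) -> float:
    """Approximate correlated color temperature (Kelvin) from CIE 1931 xy.

    Uses McCamy's cubic approximation, which is reasonable near the
    daylight white points a monitor is calibrated to.
    """
    n = (x - 0.3320) / (y - 0.1858)
    return -449.0 * n**3 + 3525.0 * n**2 - 6823.3 * n + 5520.33


def visible_errors(delta_e_by_level: dict, threshold: float = 3.0) -> list:
    """Return the brightness levels (percent) whose Delta E exceeds the threshold."""
    return sorted(level for level, de in delta_e_by_level.items() if de > threshold)


# D65 chromaticity should come out close to 6500 K.
d65_kelvin = cct_mccamy(0.3127, 0.3290)

# Hypothetical Delta E readings keyed by brightness percent (illustrative only).
sample = {5: 1.2, 50: 3.4, 100: 0.8}
flagged = visible_errors(sample)  # levels with a visible grayscale error
```

A per-level Delta E list is the more useful of the two, since a monitor can average 6500K and still show a visible tint at individual steps.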

EOTF (electro-optical transfer function) is the HDR version of gamma, and like that metric, it measures the amount of light at each brightness level and plots it on a curve. In our chart, the yellow line represents the standard, and the white trace is our measurement. You can see that the line takes a sharp bend at around the 75% mark. This is the point where the monitor takes over tone mapping from the HDR signal’s metadata. That data tells the monitor what luminance level to assign to each brightness step. Ideally, the white and yellow lines should overlap. The example above is a nearly perfect measurement run. If the white line falls below the yellow, the monitor is darker than spec at that level.
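For readers who want to see what the reference curve looks like numerically, the sketch below assumes the standard in question is the SMPTE ST 2084 perceptual quantizer (PQ), which is the transfer function HDR10 signals use. The article does not spell this out, so treat it as an assumption. The helper `eotf_error_percent` is hypothetical and simply reports how far a measured luminance sits from the reference; it does not model the tone-mapping roll-off described above.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """Map a normalized PQ signal (0.0 to 1.0) to absolute luminance in nits."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    return 10000.0 * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


def eotf_error_percent(signal: float, measured_nits: float) -> float:
    """Signed deviation from the PQ reference; negative means darker than spec."""
    target = pq_eotf(signal)
    if target == 0.0:
        raise ValueError("reference luminance is zero at this signal level")
    return 100.0 * (measured_nits - target) / target


# Reference luminance at each 5% step, matching the grayscale increments.
reference = {pct: pq_eotf(pct / 100.0) for pct in range(5, 105, 5)}
```

On this curve a signal level of about 75% corresponds to roughly 1,000 nits, which is in the range where many HDR monitors run out of headroom and start tone mapping.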

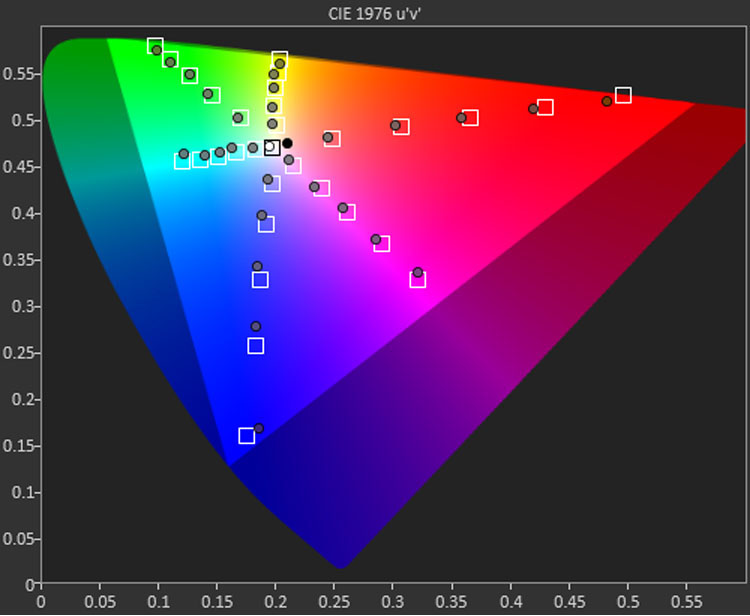

In the gamut chart, we measure color saturation and hue for all primary and secondary colors at the 20%, 40%, 60%, 80% and 100% saturation levels. The goal is simply to put each dot inside its target box. HDR monitors should cover the DCI-P3 spec, so we measure against that standard.
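The dot-in-box idea can be sketched in a few lines of Python. This is a simplification under stated assumptions: targets are placed by linear interpolation in CIE 1931 xy between the D65 white point and each DCI-P3 primary, and the box half-width of 0.01 is a made-up tolerance. Calibration software may compute its saturation targets and tolerances differently, and secondary colors are left out here for brevity.

```python
D65 = (0.3127, 0.3290)

# DCI-P3 primary chromaticities in CIE 1931 xy
DCI_P3 = {
    "red": (0.680, 0.320),
    "green": (0.265, 0.690),
    "blue": (0.150, 0.060),
}


def saturation_targets(primary, white=D65, levels=(20, 40, 60, 80, 100)):
    """Place one target per saturation level on the line from white to the primary."""
    return {
        lvl: (
            white[0] + (primary[0] - white[0]) * lvl / 100.0,
            white[1] + (primary[1] - white[1]) * lvl / 100.0,
        )
        for lvl in levels
    }


def inside_box(measured, target, half_width=0.01):
    """True if the measured xy point lands within the (hypothetical) target box."""
    return (
        abs(measured[0] - target[0]) <= half_width
        and abs(measured[1] - target[1]) <= half_width
    )


red_targets = saturation_targets(DCI_P3["red"])
on_target = inside_box((0.675, 0.322), red_targets[100])  # illustrative reading
```

A point that misses along the white-to-primary line is under- or over-saturated, while a miss to either side of that line is a hue error.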

Final Thoughts

We hope this gives you a clear understanding of our testing methods and why the results are important.

Which tests matter most depends on your particular application. For gamers, contrast and panel speed are likely to be the deciding factors in a purchase decision rather than color accuracy. If you’re a photographer, color accuracy and gamut volume will matter more than input lag or viewing angles. For more help making your decision, read our PC monitor buying guide.


Christian Eberle is a Contributing Editor for Tom's Hardware US. He's a veteran reviewer of A/V equipment, specializing in monitors. Christian began his obsession with tech when he built his first PC in 1991, a 286 running DOS 3.0 at a blazing 12MHz. In 2006, he undertook training from the Imaging Science Foundation in video calibration and testing and thus started a passion for precise imaging that persists to this day. He is also a professional musician with a degree from the New England Conservatory as a classical bassoonist which he used to good effect as a performer with the West Point Army Band from 1987 to 2013. He enjoys watching movies and listening to high-end audio in his custom-built home theater and can be seen riding trails near his home on a race-ready ICE VTX recumbent trike. Christian enjoys the endless summer in Florida where he lives with his wife and Chihuahua and plays with orchestras around the state.


dputtick: Hi! For the input lag tests for monitors with refresh rates greater than 60Hz, do you test multiple times and take the average (to rule out variability coming from the USB driver, buffering in the GPU, etc)? Have you investigated how much latency all of that adds? Would be nice to have an apples-to-apples comparison with the tests done via the pattern generator. Also curious if you've looked into getting a pattern generator that pushes more than 60Hz, or if such a thing exists. Thanks so much for doing all of these tests!