LG unveils world's first 540 Hz OLED monitor with 720p dual mode that can boost up to 720 Hz — Features a 27-inch 4th Gen Tandem OLED panel with QHD resolution and up to 1,500 nits of peak brightness

The "world's fastest gaming OLED."

OLED monitors are all the rage these days, and for good reason. Their inherent benefits, such as near-instantaneous response times and an effectively infinite contrast ratio, offer unparalleled (at least until Micro LED goes mainstream) image quality that has only gotten cheaper over time. Monitor manufacturers treat OLED as their frontier for innovation, with each generation either driving down costs or taking the tech a step forward. LG Display has now announced what it's touting as the "World's Fastest Gaming OLED": a 27-inch 540 Hz monitor with QHD resolution. LG itself confirmed the existence of such a panel in June, and now we've arrived at the unveiling.

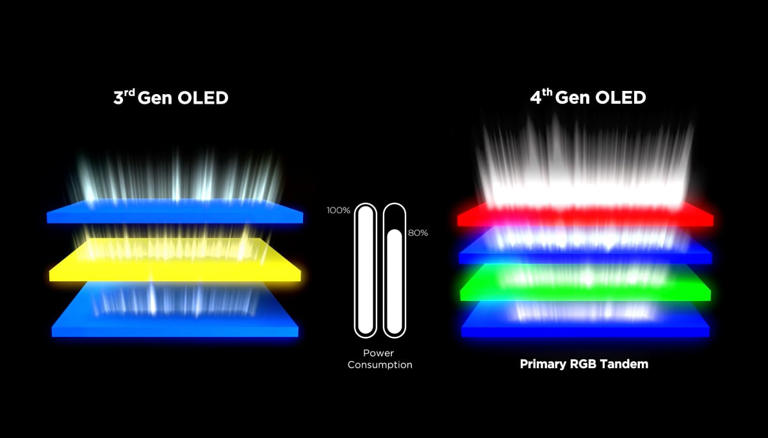

The new monitor, shown recently at the K-Display trade show in South Korea, features LG's 4th Gen Tandem OLED with Primary RGB Tandem technology. This design stacks red, green, and blue light-emitting layers as independent sources, unlike traditional OLEDs that combine emitters into a single layer. The result is significantly higher peak brightness—up to 4,000 nits—and improved color luminance (more brightness for colors), something that QD-OLED is more known for. This differs from the dual-stack Tandem OLED used in iPads, which sandwiches two similar OLED layers to boost efficiency and lifespan, but does not use separate RGB layers.

Anyhow, LG's 540 Hz OLED monitor does indeed snatch the crown for the fastest OLED monitor in the world from Samsung, who previously held the world record for their 500 Hz panels, but those panels didn't have this other trick up their sleeves. While it's a 1440p panel, the LG monitor features dual mode functionality that will allow you to boost its 540 Hz native refresh rate to 720 Hz at HD resolution (read: 720p). It's hard to imagine anyone playing at 720p anymore, even esports professionals, but it's still nice to have the ability to do so in your back pocket.

Moreover, it boasts a highly impressive 99.5% coverage of the DCI-P3 color space, along with a peak brightness of 1,500 nits. It's important to note that only LG's Tandem OLED TVs can get as bright as 4,000 nits, and even then only in small 2% windows, while the monitor is "stuck" at 1,500 nits, which is still plenty bright for that HDR effect. There are no pricing or availability details for this monitor yet, but we did see LG's other Tandem OLED monitor launch a few days ago in China for the equivalent of $510. That was only a 1440p 280 Hz panel, so expect this dual-mode 540 Hz beast to cost a lot more.

Also on display (no pun intended) at the show was LG's new 45" ultrawide monitor with a 5K2K resolution. The company is calling this the sharpest OLED monitor to date because its 125 PPI pixel density is unusually high for a non-Apple monitor. It uses LG's 3rd Gen MLA+ panel because it's not a new product launch; you can already find it on sale on Amazon for the low, low price of $1,799. Regardless, it features a 165 Hz native refresh rate that can double to 330 Hz at half the resolution, as part of its dual mode functionality.
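As a quick sanity check on the pixel-density claim, the sketch below computes pixels per inch from a resolution and a diagonal. It assumes "5K2K" means 5120 × 2160 and takes the nominal 45-inch and 27-inch diagonals at face value; neither the exact pixel counts nor the exact panel diagonals are stated here, and the function name `pixels_per_inch` is purely illustrative.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# Assumed resolutions: 5K2K taken as 5120x2160, QHD as 2560x1440.
ultrawide_ppi = pixels_per_inch(5120, 2160, 45.0)
qhd_ppi = pixels_per_inch(2560, 1440, 27.0)

print(f"45-inch 5K2K: {ultrawide_ppi:.1f} PPI")
print(f"27-inch QHD:  {qhd_ppi:.1f} PPI")
```

Under these assumptions the ultrawide lands a little under the quoted 125 PPI, which would be consistent with the real panel diagonal being slightly smaller than the nominal 45 inches, while the 27-inch QHD panel comes out noticeably lower.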

Lastly, the company also showed off an 83-inch OLED TV with the same Primary RGB Tandem tech discussed earlier. This marks the debut of Tandem OLED on a large-format display, and it and the 540 Hz monitor are the only two displays with LG's cutting-edge 4th Gen Tandem OLED panel. This is LG Display's latest, bold answer to Samsung, its main competitor in this field. Both frequently try to one-up each other with different approaches to the same underlying OLED tech. You can read our WOLED vs QD-OLED feature to learn more, but the main takeaway is that both are ultimately great.


Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.


A Stoner

My guess is that there are <200 humans on the planet that can benefit from >300 hz images and 0 who benefit from going from 480 to anything higher. At this point it is starting to get stupid.

helper800

> A Stoner said: My guess is that there are <200 humans on the planet that can benefit from >300 hz images and 0 who benefit from going from 480 to anything higher. At this point it is starting to get stupid.

Can't wait for the future 1 kHz 4K OLEDs.

edzieba

> A Stoner said: My guess is that there are <200 humans on the planet that can benefit from >300 hz images and 0 who benefit from going from 480 to anything higher. At this point it is starting to get stupid.

Only if you stick to sample-and-hold full-panel refreshing. Increasing refresh rates allows you to trend back towards what CRTs were capable of, with line-by-line impulse display, and the ability to 'chase the beam' with rendering (producing the image for each line just before that line is scanned out to the display).

Even if frame rate remains at a 'pedestrian' 120 Hz, higher refresh rates allow these sorts of latency-reducing and persistence-reducing driving modes. And the great part about this being the native panel rate is that you can do this in software rather than needing an expensive panel-side processor (e.g. the original G-Sync FPGA module).

Alex/AT

I'm more concerned about the color curves of this. Yes, FPS freaks would freak out even if they cannot see the extra frames, but who needs it if the color representation is far from normal, let alone good?

UnforcedERROR

> A Stoner said: My guess is that there are <200 humans on the planet that can benefit from >300 hz images and 0 who benefit from going from 480 to anything higher. At this point it is starting to get stupid.

It's not really about what you can see but also about things like input delay. Not that sub 10ms is something to fight about, but reducing latency to 0 is something that people will strive for.

Alex/AT

> UnforcedERROR said: It's not really about what you can see but also about things like input delay. Not that sub 10ms is something to fight about, but reducing latency to 0 is something that people will strive for.

1/60 delay is 0.0167 s. 1/120 delay is 0.0083 s. 1/540 delay is 0.0019 s.

These numbers are just marketing and self-convincing. And making money from that.

The human body does not work that fast; typical eye-to-movement reaction time is on the scale of 0.05 s to 1 s.

Basically, you won't notice the difference *unless* you imagine yourself doing it (placebo effect).
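For reference, the frame intervals quoted at the top of this post are simply the reciprocal of the refresh rate. A minimal sketch, with a made-up helper name `frame_interval_ms` and with 720 Hz added from the article's dual mode figure:

```python
def frame_interval_ms(refresh_hz: float) -> float:
    """Time between consecutive refreshes, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


# 60, 120 and 540 Hz come from the post; 720 Hz is the monitor's dual mode rate.
for hz in (60, 120, 540, 720):
    print(f"{hz:>3} Hz -> {frame_interval_ms(hz):.2f} ms per refresh")
```

This only describes how often the panel can show a new image; it says nothing by itself about what a viewer can perceive or react to.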

---

P.S.

The bottom line is, our eye-brain channel and reception/recognition have a finite and pretty much measurable 'speed'. That's why we are able to have a 'stroboscope effect' under disco lamps. That's why quickly rotating car wheels suddenly start 'rotating in the other direction' visually. That's why quickly rotating fans look like shady circles. That's why we perceive CRTs, LED displays and lamps (especially LED) not as a blinking mess but as a stable moving picture or light source. That's why fast (especially bright/contrast) moving objects become lines in our sight. That's why we can watch anime without puking even if it's mostly 5-15 FPS. Etc. Etc.

It's non-linear. It all depends on overall light levels, scene contrast, distance & active eye focus, concentration on the object, its position and speed of movement, our condition, age and level of fatigue, etc. Hell, even the position in the field of vision. The center is 'faster' than the 'edges'. Eye sensors need to recharge as well, although they do that in alternating patterns.

But overall, it's measured at 30-40 FPS on average. The problem with e.g. 30-40 Hz lighting being 'blinky' is that our vision is also 'framed', and so if the lighting-up does not match our 'frame', we see it only partially.

And that's it. Almost any complex motion over 60 FPS is bound not to be different. Except if it's a medium sized object (let's say apple sized) exactly in the center of vision at medium (3-5m) distances. Yes, you can perceive such objects faster. Yes, you can perceive full scene light up and down faster. And there were 'tests' and 'articles' on that. But you can't do this constantly in a minute or more time frame. Or on more than full scene toggling light up and down or a single object moving. Eyes have their limits. Brain has its limits.

Then how are 30 to 60 FPS that much smoother, and 120 FPS a bit smoother still?

Interpolation, sir.

Eye sensors are 'charging' (or should I say 'depleting') with light hitting them and so they accumulate the effect, much like the photo cameras (yes, exposure time, exactly) do. Thus, changes happening at a speed the eye can't perceive are averaged together into a single 'frame' in each of the sensitive elements of our eye.

Let's talk MSAA, on a good resolution display and appropriate distance.

No MSAA to 2x MSAA is usually hugely different and better, yes? That's our '30 FPS' (on the average of vision speed). Yes, this 30->60 FPS step is that 'MSAA 2x' for vision speed: it makes moving things much smoother, like MSAA 2x does for subpixel detail that cannot be rendered.

Now take 60->120 FPS. That's MSAA 2x->4x. Yes, some will notice the difference. But many will tell it's not worth the hassle and extra resources or it gives nothing.

Now take MSAA 4x->8x... You know... No difference. Almost. And that's what happens with FPS bloat as well. The more we bloat, the less differences there are.
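The same diminishing-returns pattern shows up in plain frame-time arithmetic, independent of any claim about perception. The sketch below prints how many milliseconds each step shaves off the interval between frames; the function name `ms_saved` is hypothetical, and the 540 to 720 step is taken from the article's dual mode rather than from this post.

```python
def ms_saved(from_fps: float, to_fps: float) -> float:
    """Reduction in per-frame interval (ms) when moving from one rate to another."""
    return 1000.0 / from_fps - 1000.0 / to_fps


# Successive doublings, plus the monitor's 540 -> 720 Hz dual mode step.
steps = [(30, 60), (60, 120), (120, 240), (240, 480), (540, 720)]
for low, high in steps:
    print(f"{low:>3} -> {high:>3} FPS: {ms_saved(low, high):.2f} ms less per frame")
```

Each doubling saves half as much time as the previous one, so the absolute gain shrinks quickly even though the ratio stays the same.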

---

P.P.S.

Personally, I'd appreciate 8K more than anything above 120 FPS on commonly available displays. And yes, better color calibration. That's because photos look awry on poorly calibrated displays. And because 4K dots are still distinctly perceivable, so not even close to the eye limit even on 32". While 120 FPS is already several times over the top.

---

P.P.P.S.

What I wrote is a very simplistic aggregation of the principles. It's not exactly correct if we go into detail; it's just the layman basics. If one wants, one can study more on the matter and find different proofs and some divergences from that. Or one can even just believe 240-1000 FPS will magically improve one's reaction time. What's interesting here is that if this belief is strong and self-convincing enough, it will. :) Just 'cause the brain likes stimulus. So yeah, it may still work for some, even if it works in a somewhat different way.

A Stoner

> UnforcedERROR said: It's not really about what you can see but also about things like input delay. Not that sub 10ms is something to fight about, but reducing latency to 0 is something that people will strive for.

True about input delay, or likely output delay, and speed from reality to viewable that you can respond to. That I can appreciate as a benefit, but again, I think the number of people who can take advantage of going from an output lag of 10 ms to 3 ms is small, and the number of people who can appreciate the change from 3 ms to 0 ms is likely absolutely 0, or at the very least unmeasurable.

edzieba

> Alex/AT said: But overall, it's measured at 30-40 FPS on average. The problem with e.g. 30-40 Hz lighting being 'blinky' is that our vision is also 'framed', and so if the lighting-up does not match our 'frame', we see it only partially.
>
> And that's it. Almost any complex motion over 60 FPS is bound not to be different.

If I had a penny every time somebody equated the flicker-fusion threshold to the limits of motion perception I'd have enough to buy a 5090. It remains a myth, as the flicker-fusion threshold only applies to illuminants, not images. Actual motion perception is far more complex.

> Alex/AT said: Eye sensors are 'charging' (or should I say 'depleting') with light hitting them and so they accumulate the effect, much like the photo cameras (yes, exposure time, exactly) do.

That is not remotely how rod and cone cells work, let alone how the visual cortex processes action potentials.

Alex/AT

> edzieba said: If I had a penny every time somebody equated the flicker-fusion threshold to the limits of motion perception I'd have enough to buy a 5090. It remains a myth, as the flicker-fusion threshold only applies to illuminants, not images. Actual motion perception is far more complex.
>
> That is not remotely how rod and cone cells work, let alone how the visual cortex processes action potentials.

That is how they work. Rods especially have very high delays: their reaction time is almost immediate but their recharge time is slow. Cones are several times faster, but still need to recharge.

And no. It's not about flicker fusion or level change speed, still less about equating the two. I specifically distinguished between the two in my long post above (flicker Hz and motion FPS). But the thing is, while the available speed margins of these effects are different, the underlying mechanisms that cause both flicker fusion and motion averaging (interpolation) are the same in the eye.

An immediate WtB or BtW transition is fast, but then WtBtWtBtW... or BtWtBtWtB... is very slow on the second and consecutive stages (although, as mentioned, the first WtB/BtW transition is extremely fast). And it also averages! GtG is kinda speedy :) but has its own quirks like reduced contrast and pulse sensitivity. And it is also averaging, just at a higher rate.

Also remember we are talking chemistry and analog levels, so the reaction is not a distinct pulse, just a change of pulsation level and/or intervals. With reception level adaptivity in the brain to make it more puzzling (it's not only the eyes that adapt to light/darkness, it's also the brain adjusting contrast levels...).

Just google for photoactivation mechanics studies, not myths or myth busters. It's something to read and take note of. If you google further, you can even find reaction time studies. There is no concrete answer to 'how exactly', but overall, sensor signal (photon) accumulation and the need to recharge are nothing new. When e.g. a rod receives a photon, it reacts, but that does not mean the reaction stops if it receives more photons. Levels still change, but slower, averaging the effect.

https://pubmed.ncbi.nlm.nih.gov/2355261/

https://www.sciencedirect.com/science/article/abs/pii/S1095643308007022

https://pmc.ncbi.nlm.nih.gov/articles/PMC3398183/

etc.

Brain motion perception is adaptive and not only vision-related (yeah, sound does play a role, and even a kind of reverse channel is present: https://www.illusionsindex.org/i/skipping-pylon ), thus extremely complex. I think no concrete studies explain it deeply enough at the moment.

King_V

This might start to approach the needs of that one guy, can't recall if it was here or another forum, who claimed that he could see flicker/lack of smoothness at anything less than 1000Hz refresh.

This product was clearly made to show that particular user that their goals shall soon be within reach. Today, 720Hz. Tomorrow, surely 4-digit refresh rates!

![[K-Display 2025] 27](https://img.youtube.com/vi/h7lwIRL9MYc/maxresdefault.jpg)