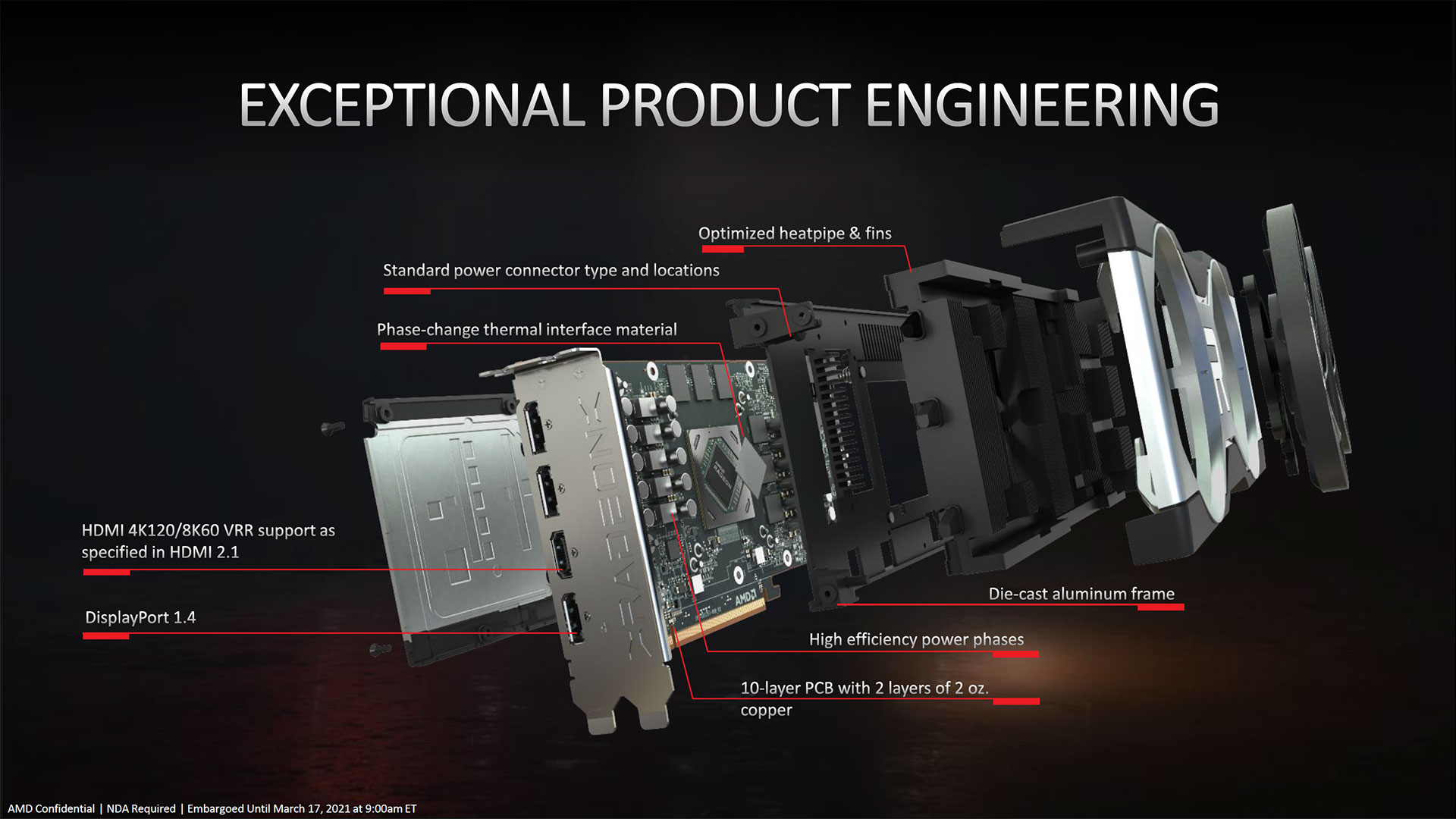

Today, we have two RX 6700 XT cards. AMD's reference card comes with the base level of performance and a design that echoes the other RX 6000 reference cards. Physically, the 6700 XT matches the RX 6800's length and thickness, but it's 10mm shorter in height and significantly lighter. It measures 267x110x38mm and weighs 883g, while the RX 6800 measures 267x120x38mm and weighs 1384g. The RX 6700 XT also uses a dual-fan configuration, but it has custom 88mm fans, whereas the 6800 has custom 78mm fans. Considering the similar power ratings, we'd expect the RX 6700 XT to run a bit hotter than the other RDNA2 cards, but we'll check the details below.

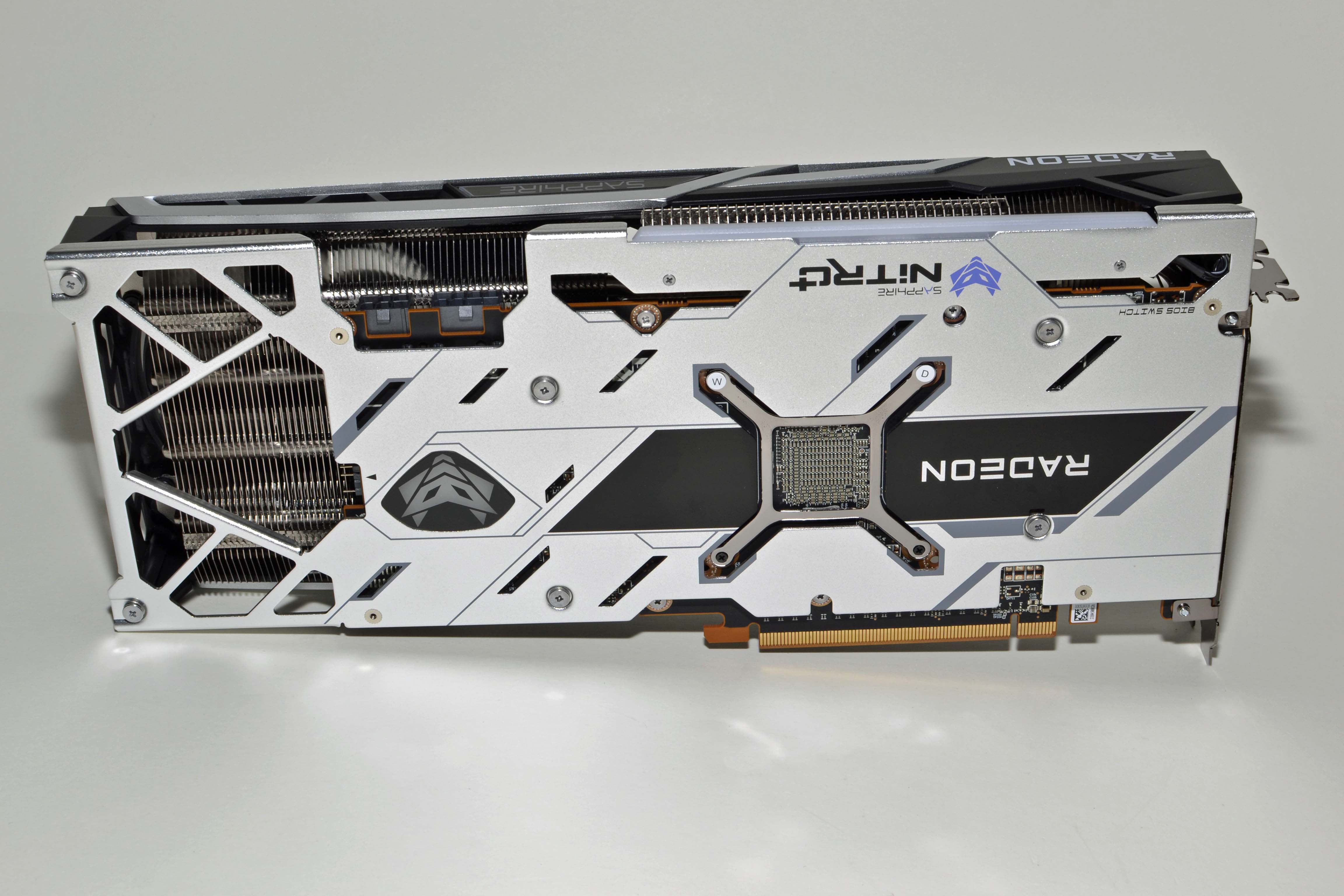

The other card we have comes from Sapphire, the RX 6700 XT Nitro+. Besides a modest factory overclock, the Sapphire card uses a much larger heatsink with triple fans. It measures 313x131x48mm, making it a 2.5-slot card. Despite the large size, it only weighs 1020g, which is more than the reference card but quite a bit less than the reference 6800 card. The two outer fans on the Nitro+ are custom 94mm models, while the center fan is a slightly smaller 83mm model (all measurements are mine, and could be off by about 1-2mm).
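As a rough cross-check of the size and weight comparisons above, the short Python sketch below works through the arithmetic using only the measurements quoted in the text. The 20.32mm slot pitch is an assumption (the common 0.8-inch expansion slot spacing), and the helper names are hypothetical.

```python
import math

# Assumption: expansion slots are spaced 0.8 inch (20.32mm) apart.
SLOT_PITCH_MM = 20.32

# Length x height x thickness in mm, and weight in grams, as quoted in the text.
CARDS = {
    "RX 6700 XT (reference)": {"dims_mm": (267, 110, 38), "weight_g": 883},
    "RX 6800 (reference)": {"dims_mm": (267, 120, 38), "weight_g": 1384},
    "Sapphire RX 6700 XT Nitro+": {"dims_mm": (313, 131, 48), "weight_g": 1020},
}


def slots_spanned(thickness_mm: float) -> float:
    """Card thickness expressed as a fraction of slot pitches."""
    return thickness_mm / SLOT_PITCH_MM


def slots_blocked(thickness_mm: float) -> int:
    """Whole slots the card makes unusable, rounding any overhang up."""
    return math.ceil(slots_spanned(thickness_mm))


def weight_delta_pct(weight_g: float, baseline_g: float) -> float:
    """Percentage weight difference relative to a baseline card."""
    return (weight_g - baseline_g) / baseline_g * 100.0


if __name__ == "__main__":
    baseline = CARDS["RX 6700 XT (reference)"]["weight_g"]
    for name, card in CARDS.items():
        thickness = card["dims_mm"][2]
        print(
            f"{name}: {slots_spanned(thickness):.2f} slot pitches thick, "
            f"blocks {slots_blocked(thickness)} slots, "
            f"{weight_delta_pct(card['weight_g'], baseline):+.1f}% weight vs. reference 6700 XT"
        )
```

Under that slot-pitch assumption, the 48mm Nitro+ overhangs a second slot and therefore blocks a third, which is consistent with the 2.5-slot description, while both 38mm reference cards fit within two slots.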

We'll have a separate write-up of the Sapphire card soon, and we'll include its performance results here as a point of reference for the various factory overclocked models that will likely be far more common than the reference design. We ran out of time during testing to do a full overclocking investigation, so the Sapphire card also stands in for those results. We'll also include performance results from a Gigabyte RTX 3060 Ti card in the charts to show how custom Nvidia cards stack up. The Gigabyte card was tested with the latest 461.72 drivers, which mostly appear to have affected performance in Horizon Zero Dawn.

Software: FidelityFX, Radeon Boost, and More

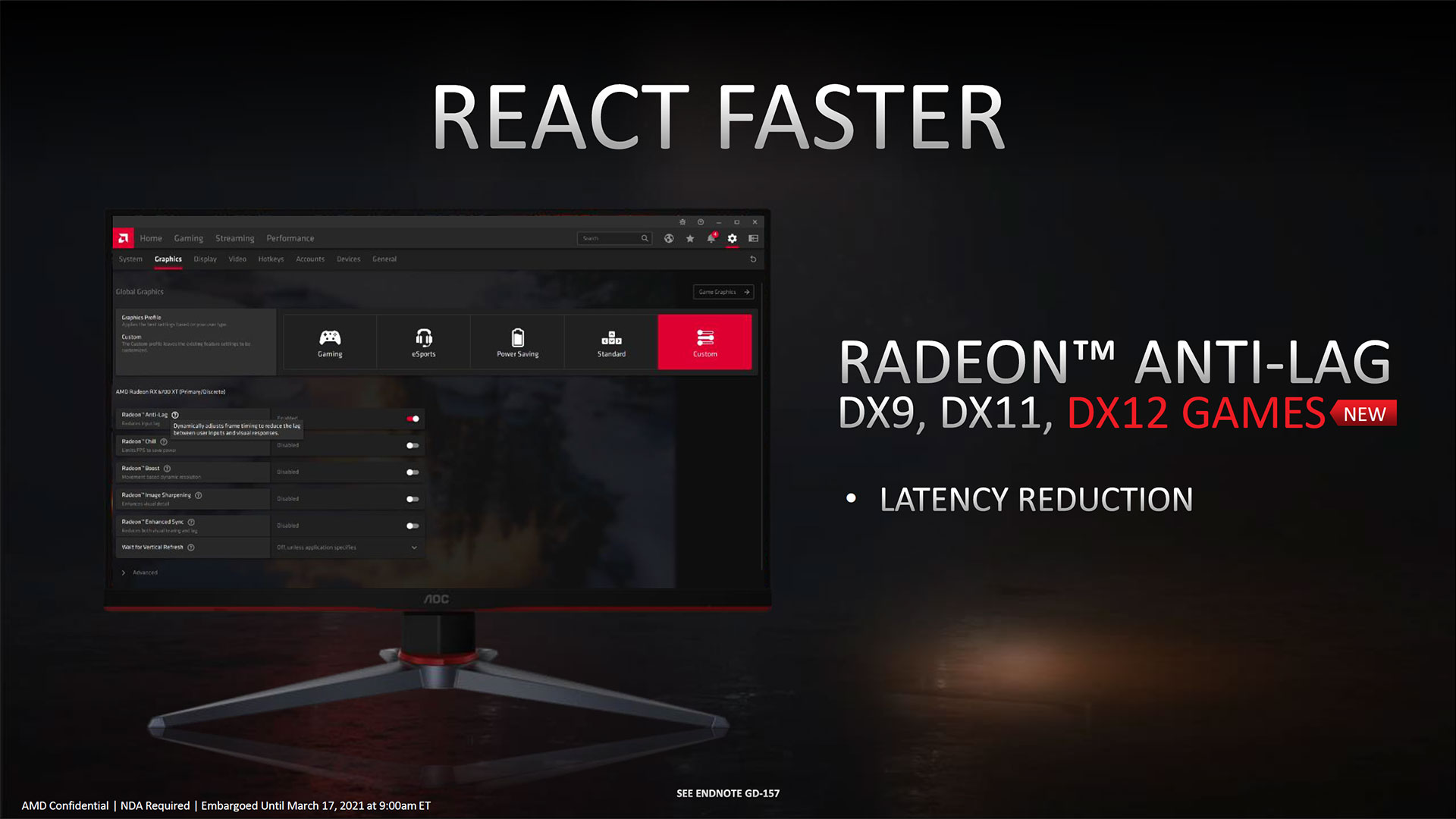

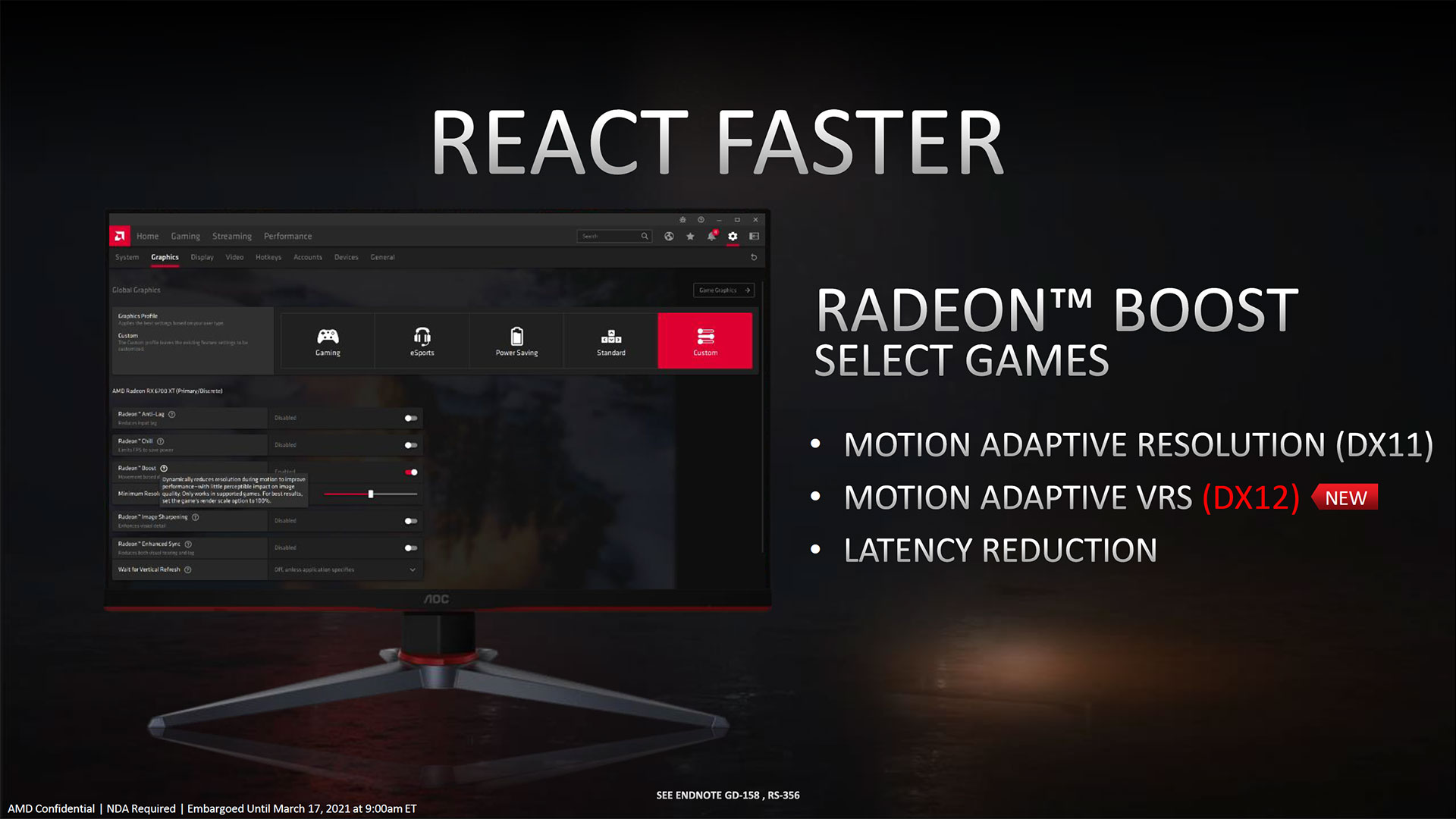

The latest AMD and Nvidia drivers are packed full of features and options. For AMD, the latest enhancements include Anti-Lag, Radeon Boost, Image Sharpening, streaming options, and more. AMD also offers other software tools, like the FidelityFX library for developers, including CAS (Contrast Adaptive Sharpening), ambient occlusion, and screen space reflections. AMD's Super Resolution remains a work in progress and isn't available yet, unfortunately. All of the FidelityFX libraries are royalty-free and open source and work with any compatible graphics card, including Nvidia's cards.

Time constraints preclude us from testing every feature in the software options, and turning certain options on can impact our performance measurements. We ended up benchmarking using the "Standard" profile in AMD's drivers, which turns off all extras. We've also encountered bugs in the past (e.g., with Enhanced Sync) that sometimes make the extras more trouble than they're worth. Still, we appreciate having choices for tuning performance available.

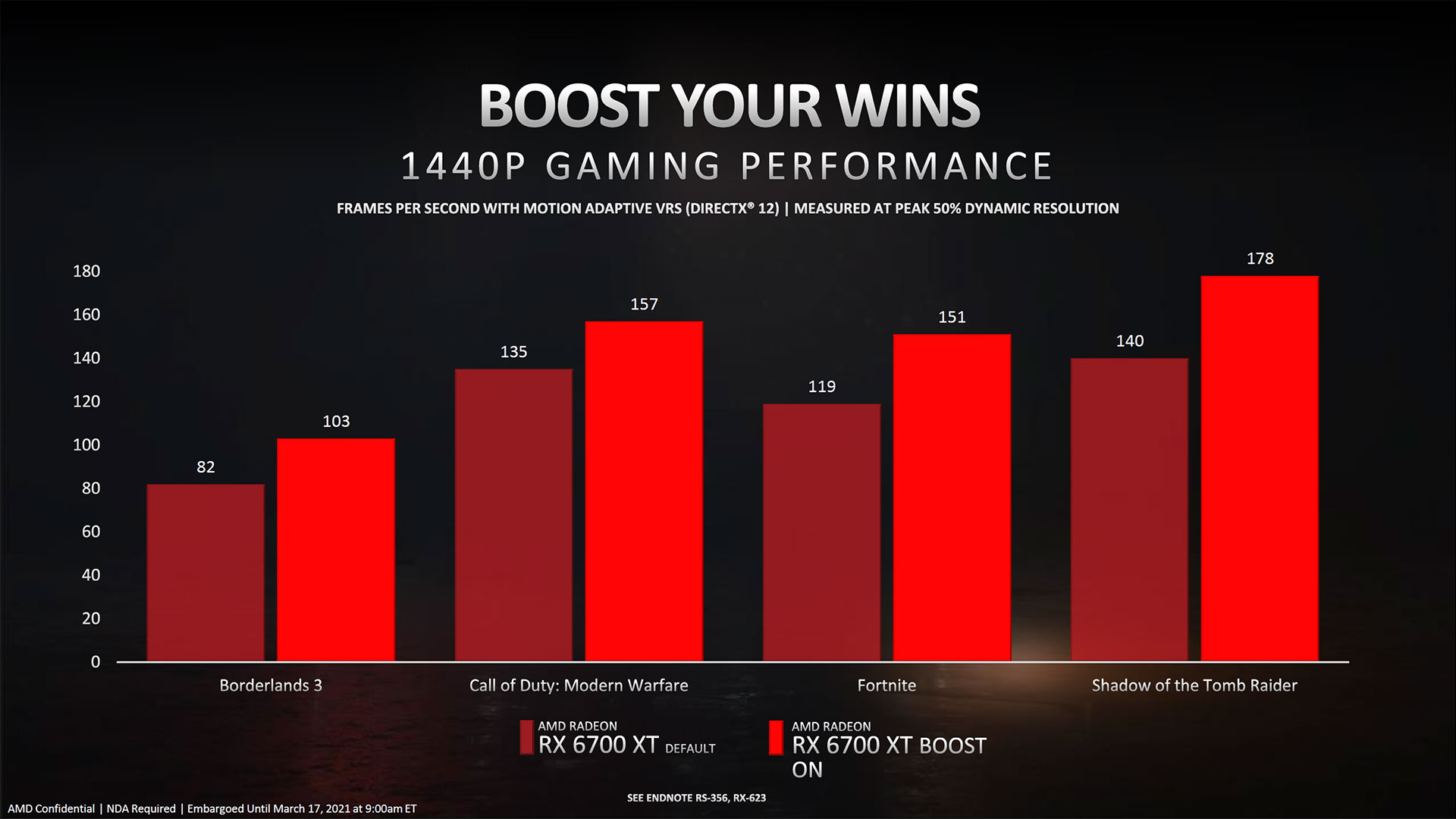

As an example, Radeon Boost combines resolution scaling with CAS to improve performance. When you're moving, and particularly turning, most games have blur effects, and it's difficult to see all of the high quality details. At the same time, turning represents an action that can benefit from higher frame rates, as it often means you're trying to aim at an enemy. Radeon Boost lowers your rendering resolution in such situations to increase fps, and the loss in image fidelity shouldn't be very noticeable. Theoretically, it's the best of both worlds… but it doesn't work well for our apples-to-apples benchmarking.
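To make the idea concrete, here is a minimal Python sketch of motion-driven resolution scaling. It is not AMD's implementation: the thresholds, the linear ramp, and names such as `BoostConfig` and `render_scale` are assumptions chosen purely for illustration, and the CAS sharpening pass is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoostConfig:
    # All values are illustrative assumptions, not AMD's actual tuning.
    min_scale: float = 0.5          # lowest render scale allowed during fast turns
    motion_threshold: float = 2.0   # camera turn (degrees per frame) before scaling starts
    motion_ceiling: float = 20.0    # turn rate at which the scale reaches min_scale


def render_scale(turn_rate_deg: float, cfg: BoostConfig = BoostConfig()) -> float:
    """Return a render scale in [min_scale, 1.0] based on camera turn speed."""
    speed = abs(turn_rate_deg)
    if speed <= cfg.motion_threshold:
        return 1.0  # little or no motion: render at native resolution
    if speed >= cfg.motion_ceiling:
        return cfg.min_scale  # very fast turn: use the lowest allowed scale
    # Linear ramp between the threshold and the ceiling.
    t = (speed - cfg.motion_threshold) / (cfg.motion_ceiling - cfg.motion_threshold)
    return 1.0 - t * (1.0 - cfg.min_scale)


def render_resolution(width: int, height: int, scale: float) -> tuple[int, int]:
    """Apply the scale to each axis of the output resolution."""
    return max(1, round(width * scale)), max(1, round(height * scale))


if __name__ == "__main__":
    for turn in (0.0, 5.0, 11.0, 25.0):
        scale = render_scale(turn)
        print(turn, scale, render_resolution(2560, 1440, scale))
```

With these assumed settings, a 2560x1440 frame stays at native resolution while the camera is still and drops to 1280x720 during the fastest turns. That variability is also why a feature like this is hard to fit into fixed, repeatable benchmark runs.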

There's a whole series of tests we could run, including things like Radeon Boost, DLSS, Anti-Lag, Reflex, Chill, and more. We're looking at ways to quantify these features, but again, time constraints get in the way. Our advice is to try some of these features and see if you like the result. If a feature potentially drops fps slightly but improves latency, that could be a net win. Or if a feature improves fps while causing a drop in image quality that you don't actually notice, that's another potential win. AMD and Nvidia both have a bunch of tech that's worth further investigation, but we'll focus on performance in like-for-like testing for this initial review.

We're interested in hearing your thoughts on what features matter most as well. We know AMD and Nvidia make plenty of noise about certain technologies, but we question how many people actually use the tech. If you have strong feelings for or against a particular tech, let us know in the comments section.

Test Setup

Our test hardware remains unchanged from previous reviews, but we have made a few updates. Specifically, we're now running the latest version of Windows 10 (20H2, build 19042.867), and we've also updated our motherboard BIOS to version 7B12v1B1, which includes beta resizable BAR support (aka, 'ReBAR').

AMD first brought attention to this existing feature of PCI Express with its RX 6800 series launch last November, dubbing it Smart Access Memory (SAM). At the time, AMD only supported the feature with the latest-generation Zen 3 CPUs, 500-series chipset motherboards, and AMD's latest RDNA2 GPUs. Since then, both Nvidia and Intel have begun supporting ReBAR as well, and AMD has extended support to other CPUs and GPUs. As a result, it's now possible for us to enable ReBAR on our primary test PC.

Speaking of which, we keep thinking it should be time to upgrade, but the gains from slightly faster CPUs aren't quite to the point where we've felt it was necessary to swap testbeds and retest everything. That's a daunting task. We looked at CPU scaling on the latest GPUs at the time of the RTX 3060 Ti launch, with a focus on the top-performing solutions (Ryzen 9 5900X, Core i9-10900K, and Core i9-9900K). While there were some differences, overall the net gain from swapping to a different CPU is only 1–2 percent, and the 9900K remains more than capable. Maybe we'll swap when the 11900K arrives later this month; more likely, waiting for Alder Lake or Zen 4 seems like a better plan.

We're using the same 13 games as well, but again with a change: Besides game patches, we've elected to drop DXR use in Dirt 5 and Watch Dogs Legion — sorry, ray tracing fans. There are several reasons for this. First, DXR support in Dirt 5 was provided via an early access code, and the support was and is still buggy. Second, the visual improvements from enabling ray tracing in these games are present, but they're not huge while the performance hit can be quite significant. Finally, we wanted to include AMD's previous-gen RX 5700 XT card in our test results, and it can't support DXR.

We'll take a deeper look at the state of ray tracing in the coming days. It remains an interesting topic and it's not going away, but we felt it wasn't as critical a factor on the lower echelon cards. AMD's RT performance remains generally worse than Nvidia's RT performance, but some of that undoubtedly stems from Nvidia's position as the first company to provide DXR hardware and the 2-year lead it had on AMD. DLSS is something we would also need to factor into testing, and then we're back to comparing apples and AI-upscaled apples, which is not exactly unfair, but also not quite the same. So, stay tuned for a future article on the subject.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

bigdragon

These benchmarks show better performance than most of the others I've read this week. There's a few surprises in this review given that other sites showed performance in the 3060 to 3060 Ti range consistently. Seeing the 6700 XT beat the 3070 in a few tests is unexpected. I suppose that means AMD has been hard at work tweaking their drivers for better performance. The power consumption doesn't look as bad as I had been led to believe either. Looks like a solid GPU.

I think the 6700 XT could be a good replacement for my 1070. However, do I really want to waste even more time fighting bots, adding to cart only to be unable to checkout, or being led to believe I have a shot at getting a GPU when I really never did? No. I'm not waking up early to watch a page that instantly flips from "coming soon" to "sold out" again. -

tennis2

"Mining Performance - In the case of the RX 6700 XT, we settled on 50% maximum GPU clocks (which doesn't actually mean 50%, but whatever; actually clocks settled in around 2.13GHz)"

Sooo, you don't actually know how to detune the CPU, or? Hint - toggle that "advanced control" slider to ON.

Power efficiency is poor as expected. Meh. That tiny chainsaw whacked off a few too many CUs. -

oenomel

Admin said: The Radeon RX 6700 XT takes a step down from Big Navi, trimming the fat and coming in at $479 (in theory). Availability and actual street pricing are the keys to success, as the card otherwise looks promising. Here's our full review. AMD Radeon RX 6700 XT Review: Big Navi Goes on a Diet : Read more

Who really cares? They won't be available for months if ever? -

JarredWaltonGPU

tennis2 said: Mining Performance - Sooo, you don't actually know how to detune the CPU, or? Hint - toggle that "advanced control" slider to ON. Power efficiency is poor as expected. Meh. That tiny chainsaw whacked off a few too many CUs.

And if you knew how AMD's drivers and tuning section work, you'd understand that toggling that "advanced control" slider just changes the percentage into a MHz number. Hint - toggle the "condescending tone" slider to OFF.

But I did make an error: I was looking at the memory clock, not the GPU clock, when I thought the GPU was still running at 2.13GHz. I've done a bit more investigating, now that I'm more awake (it was a late night, again — typical GPU launch). With the slider at 40% (which gives a MHz number of something like 1048MHz), I got nearly the same mining performance as with the slider at 65% (1702MHz). Here are three screenshots, showing 40%, 50%, and 65% Max Frequency settings (but with advanced control ticked on so you can see the MHz values). This is with the Sapphire Nitro+, so the clocks are slightly higher than the reference card, but the performance is pretty similar (actually, the reference card was perhaps slightly faster at mining for some reason — only like 0.3MH/s, but still.)

I've updated the text to remove the note about the max frequency not appearing to work properly. It does work; my bad. The description of the tuned settings was and is still correct: 50% Max Freq, 112% power, 2150MHz GDDR6, slightly steeper fan curve, 115-120W.
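The percentage-to-MHz relationship described above can be sanity-checked with a few lines of Python. This is only a sketch: it assumes the slider maps linearly onto a single maximum frequency, it uses the two approximate readings quoted above (40% at roughly 1048MHz, 65% at 1702MHz), and the function names are hypothetical.

```python
# (slider percent, reported MHz) pairs quoted in the comment above.
# The 40% figure was given as "something like 1048MHz", so treat it as approximate.
OBSERVATIONS = [(40, 1048), (65, 1702)]


def implied_max_mhz(percent: float, mhz: float) -> float:
    """Back out the 100% frequency, assuming the slider is a linear fraction of it."""
    return mhz / (percent / 100.0)


def estimate_mhz(percent: float, max_mhz: float) -> float:
    """Predict the MHz value the slider would show at a given percentage."""
    return max_mhz * percent / 100.0


def average_implied_max(observations) -> float:
    """Average the 100% frequency implied by each observation."""
    values = [implied_max_mhz(p, m) for p, m in observations]
    return sum(values) / len(values)


if __name__ == "__main__":
    max_mhz = average_implied_max(OBSERVATIONS)
    print(f"Implied 100% frequency: about {max_mhz:.0f}MHz")
    print(f"Estimated 50% setting: about {estimate_mhz(50, max_mhz):.0f}MHz")
```

Both readings imply a 100% value of roughly 2620MHz, so under the linear assumption the 50% setting would land a little above 1300MHz on this card.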

-

Wendigo

"We're interested in hearing your thoughts on what features matter most as well. We know AMD and Nvidia make plenty of noise about certain technologies, but we question how many people actually use the tech. If you have strong feelings for or against a particular tech, let us know in the comments section."

To answer your question, I would say that the most interesting AMD feature is Chill. Sure, it's useless if you're only looking for max FPS or benchmark scores. But from a practical standpoint, for the average gamer, it works wonders. This is particularly true when used with a Freesync monitor and setting the Chill min and max values to the range supported by the monitor, thus always keeping the FPS in the Freesync range. There's no obvious difference when playing most games (particularly for the average gamer not involved in competitive esports), but the card then runs significantly cooler and thus quieter, making for an overall more pleasant gaming experience. -

bigdragon

Asus released their 6700 XT cards about an hour ago...for $350 over AMD's MSRP. The 6700 XT is an absolutely horrific value at $829. The stupid things still sold out almost instantly. Plenty of humans on Twitter complaining about bots buying everything up and immediately flipping the cards on Ebay. AMD and Asus have a lot of explaining to do.

My 1070 is now worth double what I paid for it. I'm going to sell it this weekend and forget about AAA gaming for the rest of the year. Plenty of indie games get by happily with lower-end GPUs or iGPs. -

InvalidError

bigdragon said: AMD and Asus have a lot of explaining to do.

No explaining to do here, it is simply the free market at work. Demand is higher than supply? Raise prices until equilibrium is reached. -

deesider

InvalidError said: No explaining to do here, it is simply the free market at work. Demand is higher than supply? Raise prices until equilibrium is reached.

Not sure what the OP was hoping for - a golden ticket system like Willy Wonka?! -

bigdragon

InvalidError said: No explaining to do here, it is simply the free market at work. Demand is higher than supply? Raise prices until equilibrium is reached.

AMD's release date isn't until 9 AM EDT tomorrow morning -- not today. There were also no promised anti-bot measures in place, again, as usual. AMD's AIB's are also far more aggressive about marking up Radeon prices as compared to RTX prices.

deesider said: Not sure what the OP was hoping for - a golden ticket system like Willy Wonka?!

Yeah, that would be swell.

What I seriously want to do is just checkout with 1 GPU without the GPU being ripped out of my cart mid-checkout or the vendor cancelling the order after it's been placed. -

jeremyj_83

bigdragon said: AMD's release date isn't until 9 AM EDT tomorrow morning -- not today. There were also no promised anti-bot measures in place, again, as usual. AMD's AIB's are also far more aggressive about marking up Radeon prices as compared to RTX prices.

I forgot what time they went on sale and checked within 5 minutes of the release and they are sold out online everywhere. You can get a couple different ones from a physical Microcenter location as they are in store only. Two of those are at MSRP even.