
AMD RX 7900 Test Setup

We updated our GPU test PC and gaming suite in early 2022, but with the RTX 40-series launch we found more and more games were becoming CPU limited at anything below 4K. We've therefore upgraded our GPU test system, except we still have a ton of existing results that were all run on our 12900K PC. AMD also wanted some testing done using its latest Ryzen 7000-series and socket AM5, and we were happy to oblige, since it's a good way to see if there's truly a benefit to going all-in on AMD components. The upshot is that we have three test PCs for the RX 7900 series launch.

TOM'S HARDWARE 2022 GPU TEST PC

Intel Core i9-12900K

MSI Pro Z690-A WiFi DDR4

Corsair 2x16GB DDR4-3600 CL16

Crucial P5 Plus 2TB

Cooler Master MWE 1250 V2 Gold

Corsair H150i Elite Capellix

Cooler Master HAF500

Windows 11 Pro 64-bit

TOM'S HARDWARE INTEL 13TH GEN PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

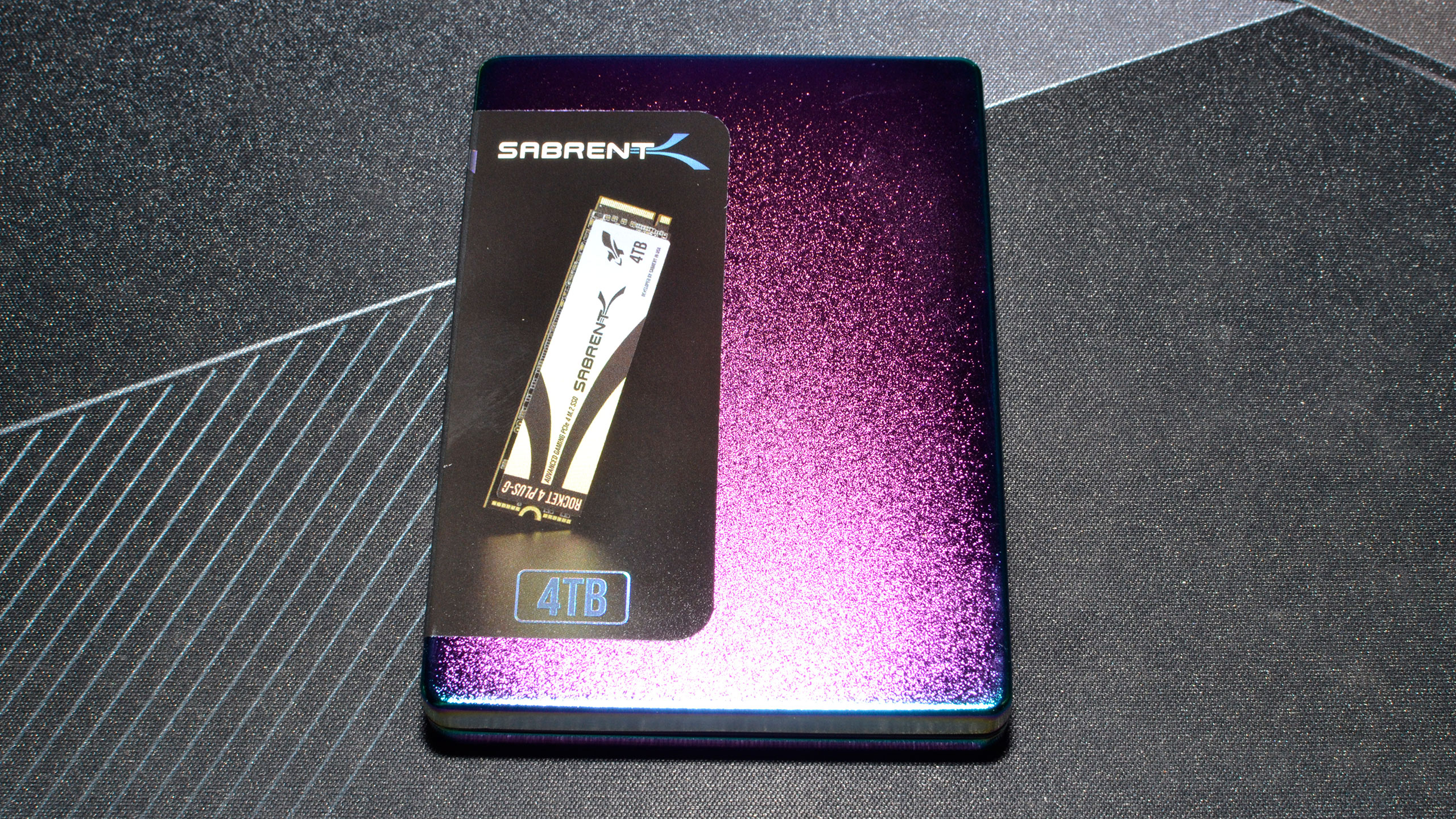

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

TOM'S HARDWARE AMD RYZEN 7000 PC

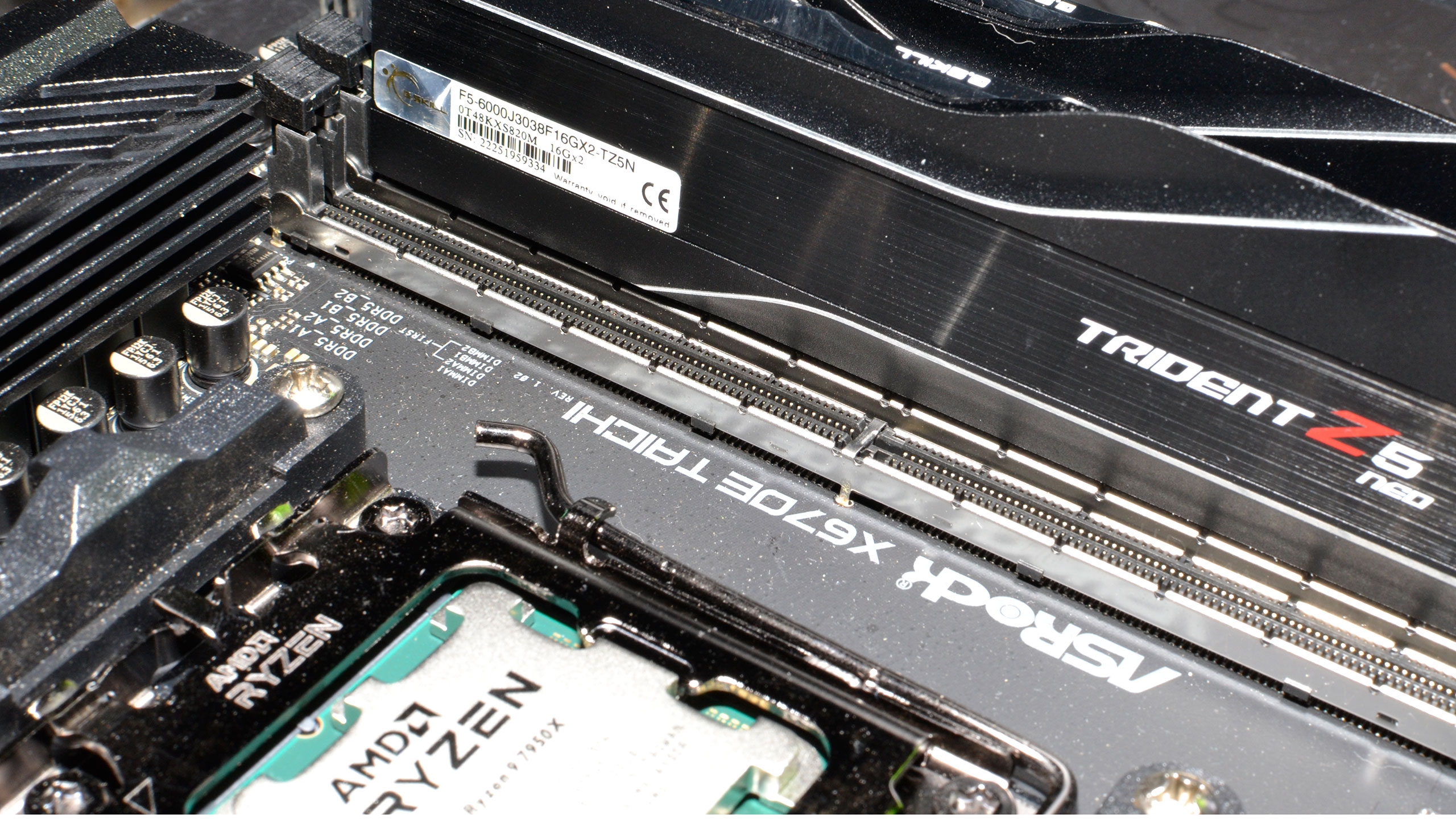

AMD Ryzen 9 7950X

ASRock X670E Taichi

G.Skill Trident Z5 Neo 2x16GB DDR5-6000 CL30

Crucial P5 Plus 2TB

Corsair HX1500i

Cooler Master ML280 Mirror

Windows 11 Pro 64-bit

AMD and Nvidia both recommend either the AMD Ryzen 9 7950X or Intel Core i9-13900K to get the most out of their new graphics cards. We'll still have some results from the 12900K as well, but we ran into some other snafus like changing drivers, game updates breaking features, etc.

For now, we're going to focus mostly on our test results using the 13900K. MSI provided the Z790 DDR5 motherboard, G.Skill gets the nod on memory, and Sabrent was good enough to send over a beefy 4TB SSD, which we promptly filled to about half its total capacity. Games are getting awfully large these days! The AMD rig has slightly different components, like the ASRock X670E Taichi motherboard, Crucial P5 Plus 2TB SSD, and G.Skill Trident Z5 Neo memory with EXPO profile support for AMD systems.

Time constraints prevented us from testing every GPU on both the AMD and Intel PCs, but we did get six cards tested on the Intel PC, and both 7900 cards on the AMD PC as well. Going forward, we'll start running more graphics cards on the 13900K and come up with a new GPU benchmarks hierarchy test suite, but that will take a while.

Also of note is that we have PCAT v2 (Power Capture and Analysis Tool) hardware from Nvidia on both the AMD 7950X and Intel 13900K PCs, which means we can grab real power use, GPU clocks, and more during all of our gaming benchmarks. We're still trying to determine the best way to present all the data, but check the power testing page if that sort of thing interests you.
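One simple way to boil per-run power data down to a few comparable numbers is sketched below. This is only an illustration: the function name `summarize_power`, the list-of-watts input format, and the sample values are all assumptions, not PCAT's actual output format or any measurement from this review.

```python
from statistics import mean


def summarize_power(samples_watts, avg_fps):
    """Summarize one benchmark run (hypothetical helper, not a PCAT API).

    samples_watts: GPU power readings in watts, one per sampling interval.
    avg_fps: average frame rate measured over the same run.
    """
    if not samples_watts:
        raise ValueError("need at least one power sample")
    avg_power = mean(samples_watts)
    return {
        "avg_watts": avg_power,               # typical draw during the run
        "peak_watts": max(samples_watts),     # highest single reading
        "fps_per_watt": avg_fps / avg_power,  # simple efficiency metric
    }


# Made-up numbers purely for illustration.
summary = summarize_power([300.0, 350.0, 340.0, 330.0], avg_fps=99.0)
print(summary)
```

Average power and frames per watt make cards easy to compare in a single chart, while the peak value hints at how much headroom a power supply needs.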

For all of our testing, we've run the latest Windows 11 updates and the latest GPU drivers from AMD and Nvidia. That means 527.56 drivers on the Nvidia GPUs, and for AMD we used 22.11.2 drivers on the previous generation 6950 XT alongside 22.40.00.57 beta drivers that only support the RX 7900 cards.

Our gaming tests now consist of a standard suite of nine games without ray tracing enabled (even if the game supports it), and a separate ray tracing suite of six games that all use multiple RT effects. We tested all of the GPUs at 4K, 1440p, and 1080p using "ultra" settings — basically the highest supported preset if there is one, and in some cases maxing out all the other settings for good measure (except for MSAA or super sampling).
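To give a sense of the workload, the test matrix described above can be sketched in a few lines. The counts (nine rasterization games, six ray tracing games, three resolutions) come from the text; the placeholder game names and the `build_test_matrix` helper are hypothetical and exist only for this example.

```python
from itertools import product

# Counts are from the article; the names are placeholders (assumptions).
RASTER_GAMES = [f"raster_game_{i}" for i in range(1, 10)]  # nine games
RT_GAMES = [f"rt_game_{i}" for i in range(1, 7)]           # six games
RESOLUTIONS = ["1080p", "1440p", "4K"]


def build_test_matrix():
    """Return every (suite, game, resolution) combination for one GPU."""
    runs = []
    for suite, games in (("raster", RASTER_GAMES), ("ray_tracing", RT_GAMES)):
        for game, resolution in product(games, RESOLUTIONS):
            runs.append((suite, game, resolution))
    return runs


matrix = build_test_matrix()
print(len(matrix))  # 45 game/resolution combinations per GPU
```

That works out to 45 combinations per card before any repeat runs, which helps explain why retesting every GPU on a new platform takes a while.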

Note that we've dropped Fortnite from our test suite as the latest update has broken things, again. In its place, we've added Spider-Man: Miles Morales with ray tracing effects enabled, and we've also included A Plague Tale: Requiem as one more non-RT game. Forza Horizon 5 and Total War: Warhammer 3 were also retested on all of the GPUs in the charts due to some recent patches. Forza performance on Nvidia cards seems to have dropped recently, so bear in mind that scores could go back up in the future.

Besides the gaming tests, we also have a collection of professional and content creation benchmarks that can leverage the GPU. We're using SPECviewperf 2020 v3 and Blender 3.40. We'll look at what AI benchmarks we can get working on RDNA 3 in the coming days, and we'll also see about doing some video encoding tests using the updated video engines on the various GPUs.

AMD RX 7900 Series Overclocking

Time constraints prevented us from properly exploring overclocking for this launch article. Given the high performance and limited gains, it's probably not a huge omission overall. Most GPUs can improve performance by about 5% through manual tuning, and some factory overclocked cards will provide close to that out of the box. We'll update this section once we've had a bit more time for testing.



Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-Fran-

Thanks for the review!

I'm reading it now, but I've watched numbers in other places. My initial reaction is lukewarm* TBH. I expected a bit more, but they're not terrible either. They did fall short of AMD's promise though. They indeed oversell the capabilities on raster, but were pretty on point for RT increases.

Still, this card better have a "fine wine" effect down the line and the MSRP may just be well justified. This being said, it is still too expensive for what it is.

Regards.

spongiemaster

"That's probably because this is the most competitive AMD has been in the consumer graphics market in quite some time."

Not sure how this was determined, but I would argue this is a step backwards in almost every situation from the 6000 series. Also, it should be pointed out that there is something going on with the power consumption of the 7000 series in non-gaming situations that will affect many users. Looks like the memory isn't downclocking or something.

JarredWaltonGPU

spongiemaster said: Not sure how this was determined, but I would argue this is a step backwards in almost every situation from the 6000 series. Also, it should be pointed out that there is something going on with the power consumption of the 7000 series in non-gaming situations that will affect many users. Looks like the memory isn't down clocking or something.

I don't do a ton of power testing scenarios, so I'd have to look into that more... and I really need to go sleep. As for the "being competitive," AMD is pretty much on par with Nvidia's best in rasterization (similar to 6000-series), and it's at least narrowed the gap in ray tracing. Or maybe that's just my perception? Anyway, since basically Pascal, it's felt like AMD GPUs have been very behind Nvidia. Nvidia offers more performance and more features, at an admittedly higher price.

Colif

Steve appears to have recorded a 750 watt transient on the XTX, which makes me sit back and wonder what PSU you need, though I am not sure if that is peak system power or just the GPU itself.

Elusive Ruse

A bit of a cynical Pros/Cons section, no mention of XTX being a much better value over 4080 which is its direct competition?

Performance falls within expected margins (reasonable expectations, not that of crazed fanboys). Beating 4080 in rasterization and falling short in RT and professional uses. I don't quite care for RT but the performance gap in Blender e.g. is still eyepopping. I have heard of Blender 3.5 offering big improvements, yet that's not the current reality of things. I also doubt this will be a better story for RDNA 3 in Maya (Arnold) either.

shADy81

I assume the 750 W transient GN recorded is for the full system? TPU are showing 455W spike for the XTX and 412 for the XT, lower than 6900 and 6800 by quite some way on their charts.

They also downgraded their PSU recommendation to 650 W for both cards. Was 1000W on the 6900. I think I'd feel a bit close on 650 W even allowing for a good quality unit being able to supply more than rated for short times. 650 would surely be way too close for a 13900K system, what do they know that I don't?

zecoeco

spongiemaster said: Not sure how this was determined, but I would argue this is a step backwards in almost every situation from the 6000 series. Also, it should be pointed out that there is something going on with the power consumption of the 7000 series in non-gaming situations that will affect many users. Looks like the memory isn't down clocking or something.

This is actually a bug that was already reported to AMD and they're already working on a fix.

zecoeco

"Chiplets don't actually improve performance (and may hurt it)"

How on earth is this even a con? who said chiplets are for performance? chiplets are for cost saving.

But what did y'all expect ? You just can't complain for this price point.. there you go, chiplets saved you $200 bucks + gave you 24GB of VRAM as bonus (versus 16GB on 4080)

It is meant for GAMING so don't expect productivity performance, for many reasons including nvidia's cuda cores that has every major software optimized for it.

RDNA is going the right direction with chiplets.. in an industry of increasing costs year after year.

Chiplet design is a solution, and not a new groundbreaking feature that's meant to boost performance.

Sadly, instead of working on the problem, nvidia decided to give excuses such as "Moore's law is dead".

salgado18

"But for under a grand, right now the RX 7900 XTX delivers plenty to like and at least keeps pace with the more expensive RTX 4080. All you have to do is lose a good sized chunk of ray tracing performance, and hope that FSR2 can continue catching up to DLSS."

That, I believe, is the reason AMD won't increase too much their market share in this generation. Yes, rasterization is comparable, so are power, memory, price and even upscaling performance/quality. But it is a bad card for raytracing, or at least that's the message, and between a full card and a crippled card, people will prefer the fully featured one. I know designing GPUs is a monstrously complex task, but they really needed to up their RT performance by at least 3x to be competitive. Now they will keep being "bang-for-buck", which is nice, but never "the best".

Edit: by some rough calcs, if the XTX is ~40% faster without RT than the 6950, and ~50% faster with RT, then the generational improvement is ~7%? If so, then that's hardly any improvement at all. Great cards and all that, but I'm very disappointed with the lack of focus on RT.

btmedic04

salgado18 said: That, I believe, is the reason AMD won't increase too much their market share in this generation. Yes, rasterization is comparable, so are power, memory, price and even upscaling performance/quality. But it is a bad card for raytracing, or at least that's the message, and between a full card and a crippled card, people will prefer the fully featured one. I know designing GPUs is a monstrously complex task, but they really needed to up their RT performance by at least 3x to be competitive. Now they will keep being "bang-for-buck", which is nice, but never "the best".

Edit: by some rough calcs, if the XTX is ~40% faster without RT than the 6950, and ~50% faster with RT, then the generational improvement is ~7%? If so, then that's hardly any improvement at all. Great cards and all that, but I'm very disappointed with the lack of focus on RT.

AMD has a definite physical size advantage though, which is something that's applicable to quite a few folks (myself included). I hear what you are saying, but as a 3090 owner, 3090-like RT performance from the 7900 XTX is still quite good for most people.