Does Undervolting Improve Radeon RX Vega 64's Efficiency?

Temperatures & A Surprise

Temperature Sensor Issues?

At idle, the Radeon RX Vega 64 reports a chilly 16°C, even though the water cooling it sits at a more temperate 20°C. Ryzen already demonstrated that temperature sensors aren’t really AMD’s strong suit. Even so, it’s weird that the readings are this far off, especially since the sensors in previous cards reported fairly accurate data.

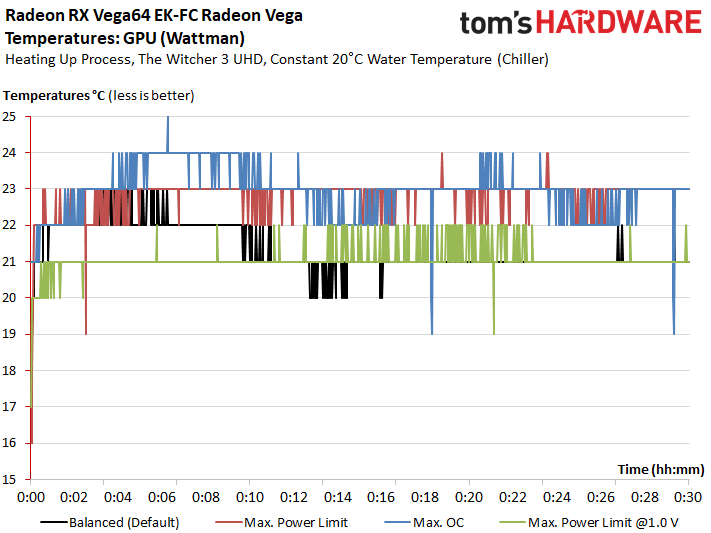

The 24°C result gives us pause, seeing that we obtained it using the maximum power limit in combination with overclocking, dissipating more than 400W in the process. Our cooling block and thermal paste alone should result in a ~4°C difference. Either way, we tabulated these “GPU temperatures” exactly the way they were reported by WattMan, GPU-Z, and others.
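The reasoning behind this skepticism can be written down as a quick plausibility check. The following is only a minimal sketch: the function name and the optional `min_delta_c` parameter are hypothetical, and it assumes nothing more than the physical rule that a chip dumping heat into a water loop cannot be colder than the water itself.

```python
def implausible_reading(reported_c, coolant_c, min_delta_c=0.0):
    """Return True if a reported GPU temperature looks physically implausible.

    reported_c:  temperature reported by the sensor, in °C
    coolant_c:   measured water temperature, in °C
    min_delta_c: hypothetical minimum rise across block and paste, in °C
    """
    # A heat source cannot sit below the temperature of its own coolant.
    return reported_c < coolant_c + min_delta_c


# Idle case from the text: 16°C reported against 20°C water.
print(implausible_reading(16.0, 20.0))
```

For the idle numbers above, the check flags the reading as implausible, which is why the sensor rather than the cooling loop is the suspect.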

In case you're looking for results at 1V with AMD's default power limit, they're identical to what we found under Balanced mode, so we omitted them to make our graphs easier to read.

The Search for True Temperatures

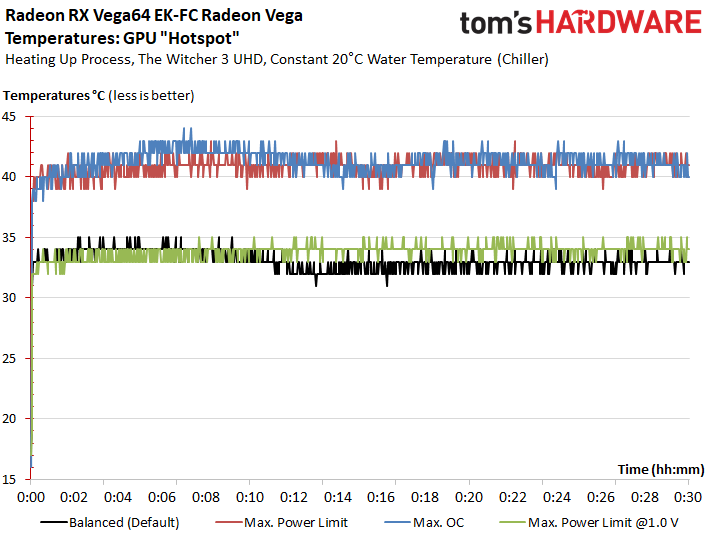

Understandably, we didn't believe our results. After talking to the author of GPU-Z, we put more stock in what his tool reports as the processor hot-spot than AMD's sensor readings. Unfortunately, AMD doesn’t provide any documentation for its value, and an analysis of the accessible sensor loop results didn’t clear things up either. However, the results certainly look more plausible than what we were seeing before:

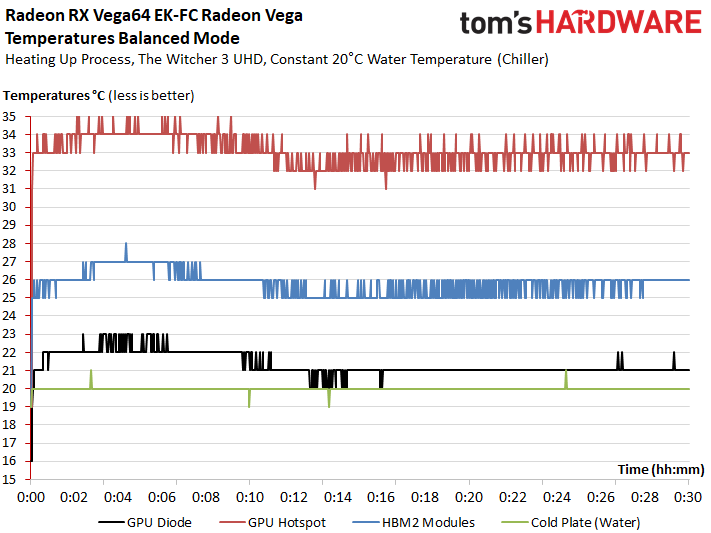

We do have data that we gathered from different GPUs using the compressor cooler and a good water block, which we can use for comparison. This leads us to believe that GPU-Z’s hot-spot results are a lot more trustworthy than the numbers provided by WattMan and older tools. A direct comparison between all of the results under Balanced mode yields the following picture:

There’s another interesting side to this. If the package’s temperatures are really higher than what's being reported, then there might be problems for air-cooled cards already showing GPU temperatures well above 80°C.

Up close, AMD uses X6S MLCC capacitors. These have an absolute temperature limit of 105°C, which even an air-cooled card shouldn’t quite reach. However, their capacitance decreases significantly, by up to 22 percent, at temperatures above 90°C. This could lead to instability. Using X7R or R capacitors instead would have made for a better long-term solution, especially since high ripple values cause the optimal operating temperature to decline even further. Call this one more reason to use water cooling if possible.
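To put the 22 percent figure in perspective, here is a small arithmetic sketch. The nominal value of 100 µF is purely hypothetical (the actual values of the capacitors on the board are not given here), and the function name is ours.

```python
def derated_capacitance(nominal_uf, loss_fraction):
    """Return the remaining capacitance after a fractional loss.

    nominal_uf:    rated capacitance in microfarads
    loss_fraction: fraction lost at temperature, e.g. 0.22 for 22 percent
    """
    if not 0.0 <= loss_fraction <= 1.0:
        raise ValueError("loss_fraction must be between 0 and 1")
    return nominal_uf * (1.0 - loss_fraction)


nominal_uf = 100.0  # hypothetical rated value, not taken from the card
worst_case_uf = derated_capacitance(nominal_uf, 0.22)
print(f"{worst_case_uf:.1f} µF remaining out of {nominal_uf:.1f} µF")
```

Under that assumption, a capacitor rated at 100 µF would have roughly 78 µF left above 90°C, a loss large enough that it could plausibly affect the filtering the voltage regulation circuitry was designed around.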


That the temperatures might be higher than reported could also explain another finding: even though no errors show up on the screen, the HBM2 loses performance if it’s overclocked. This might be due to it running significantly hotter than current tools are reporting. Some of AMD’s board partners have asked the company about this, but haven't received a response. We do have one trustworthy source that puts these temperatures well above 90°C, though.

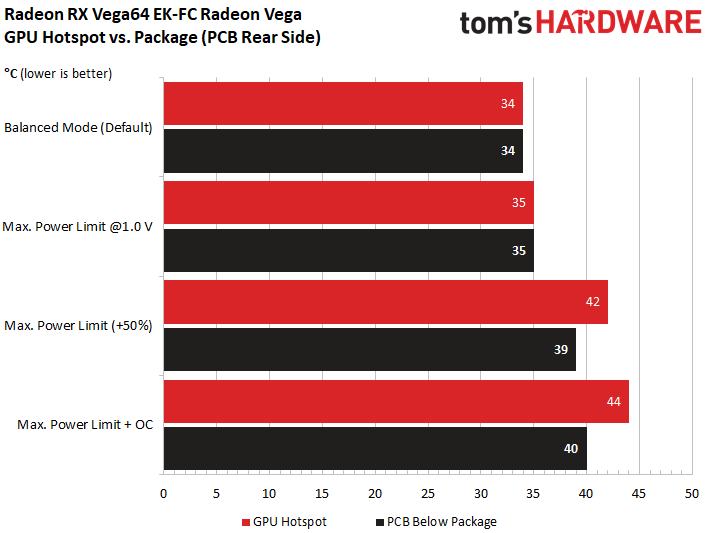

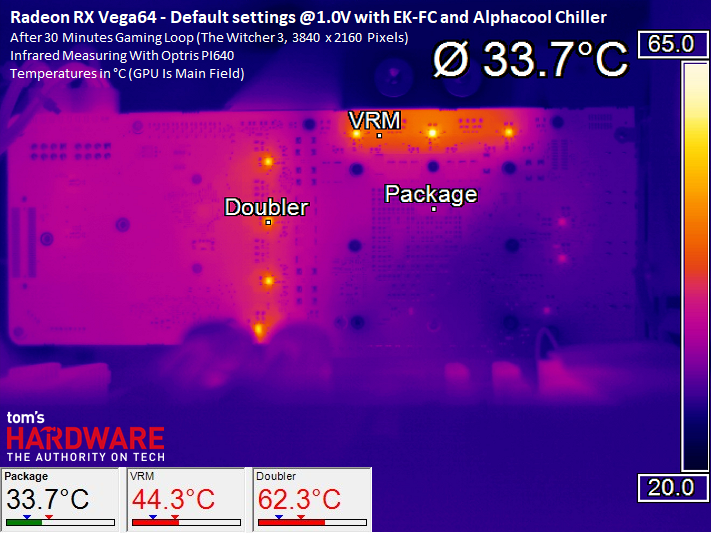

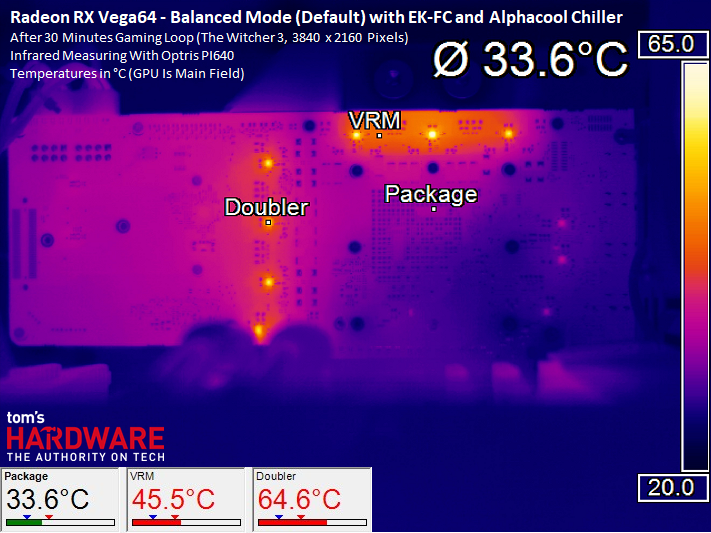

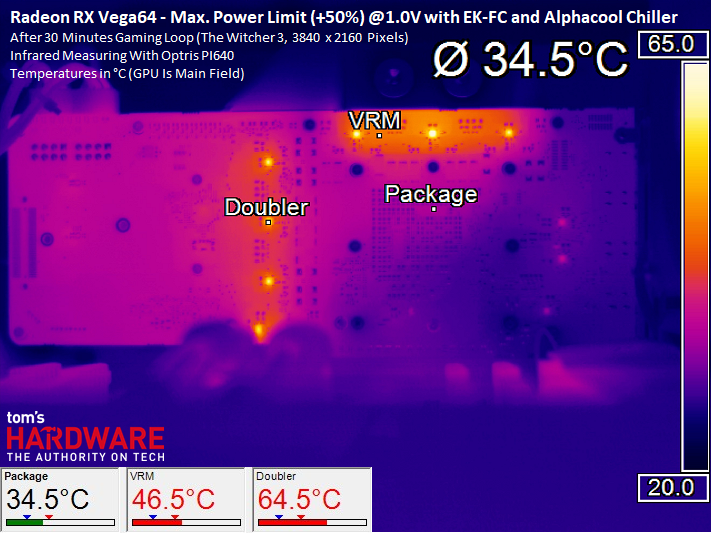

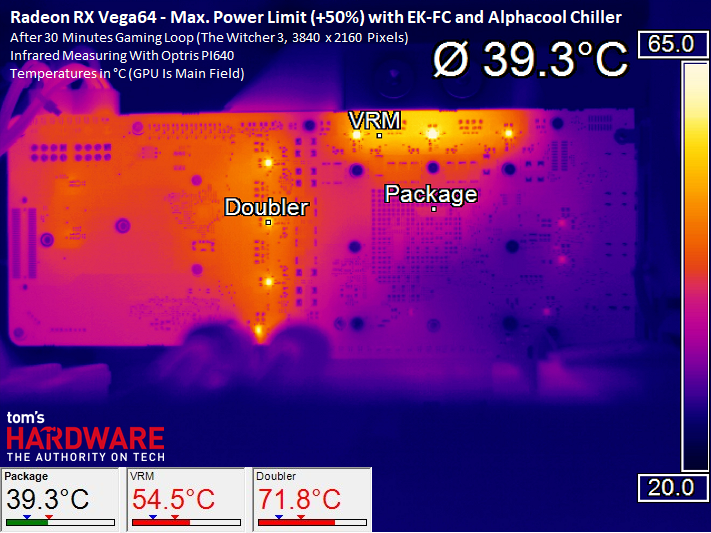

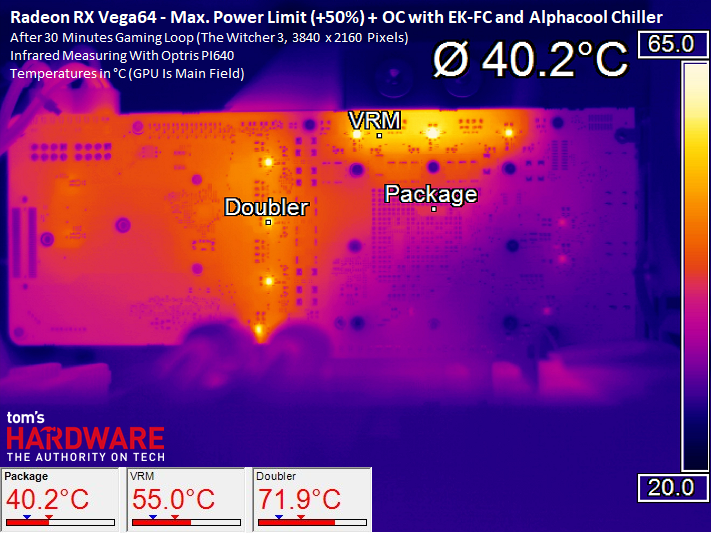

Thermal measurements from the card's back side provide additional evidence that the mysterious GPU hot-spot’s really just the GPU temperature. Even though it’s true that the temperatures are a little different at very high power consumption values, they are completely identical all the way up to 310W. The lower results under extreme conditions could be due to indirect cooling of the board via the large amount of copper contained in the circuitry, which would increase along with the power consumption.

Addendum: Infrared Measurements

It goes without saying that we meticulously documented our installation just like we always do. EKWB’s block does a great job; this should be just about as good as it gets at this point.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


TJ Hooker

Good article, thanks for the analysis!

Was a similarly in-depth look at overclocking/undervolting performance done with Polaris that I missed? Or is there just whatever is included in the various Polaris 10 review articles (e.g. the RX 480 review)? If it's the latter, does anyone know which review contains the most information on this?

Cryio

I don't think this article went in-depth enough. Dunno where I read it, but I've seen Vega 64 consume just half or two-thirds as much power while only losing 15% performance. Which is tremendous IMO.

TJ Hooker

Tom's found something similar to that in their review of the Vega 56, with a 28% reduction in power consumption only costing you an 11% performance hit: http://www.tomshardware.com/reviews/radeon-rx-vega-56,5202-22.html

But that's going into underclocking, whereas this article was very focused on undervolting and what effect that has on power consumption.

Although it would be very interesting to see an article that looks into tweaking both clockspeed and voltage to see what sort of efficiency can be achieved.

Xorak

Maybe it's too early in the morning for me, but I'm a little confused about what we actually found here. Looks like the highest overclock was achieved using default voltage and the maximum power limit, with a 3% frequency increase, under the condition of using a somewhat absurd cooling solution.

So are we to infer that with an air-cooled card, undervolting in and of itself is not desirable, but is likely to help more than it hurts because we run out of thermal headroom before the lower voltage becomes a limiting factor?

Wisecracker

Thanks for all the bench work and reporting.

Tweaking on AVFS appears to be the *Brave New Frontier* (or, "We're all beta testers for AMD" :lol: ). The fancy cooling was impressive but, as noted, under-clocking/volting brings out unseen efficiencies in Vega/Polaris. That's certainly not at the top of the list for many Tech Heads, but it gives AMD better insight going forward as they bake new wafers, fix bugs and tweak the arch.

I suspect the nVidia bunch does the same thing, but the woods are filling-up with volt- and BIOS Mods for Polaris and Vega. There's a thread at Overclock pushing 500 pages! Manipulating volt tables is becoming an art ...

More than anything, what I believe this shows is that AMD (and GloFo) boxed themselves into 'One Size Fits All' with gaming cards because of varying consistencies in the chips, and just like desktop Zen they were the last picks of the litter behind the big money makers --- enterprise, HPC/deep learning, Apple, etc.


Ethereum Currency

The RX Vega 56 has outweighed many other graphics cards as it is fast and has superb graphics: http://rxvega56.zohosites.eu

photonboy

Do I need a degree in Tweakology to get the most out of an AMD graphics card?

I still can't comprehend how there are so many engineering issues going on with the design of VEGA, as well as the continued challenges in how to optimize an overclock.

You'd think in 2017 you could just tell the computer to figure out the optimal overclock. We get closer with NVidia; I can use EVGA Precision OC to optimize the voltage/frequency profile though even that isn't perfect as it needed to be restarted many times to finish and when done it wasn't stable in some games.

You'd think the graphics card could continually send itself known data to validate the VRAM and GPU at all times (using a very small percentage of the cycles).... oh, errors? Well, then drop to the next lowest frequency/voltage point. Is that so hard?

ClusT3R

Do you have something like a manual with the values and the software? I want to try to remove as much power and add as much clock speed as I can. I have a Vega 56.

GoldenBunip

The biggest gain on Vega comes from keeping the HBM cool. It will then clock to 1100 (but no more), resulting in huge performance gains. Just really hope we crack the stupid BIOS locks soon.

zodiacfml

I don't understand what is going on here.

Why do we have to put an overkill cooling system for the question "Does Undervolting Improve Radeon RX Vega 64's Efficiency?"