Opinion: AMD, Intel, And Nvidia In The Next Ten Years

What About Intel?

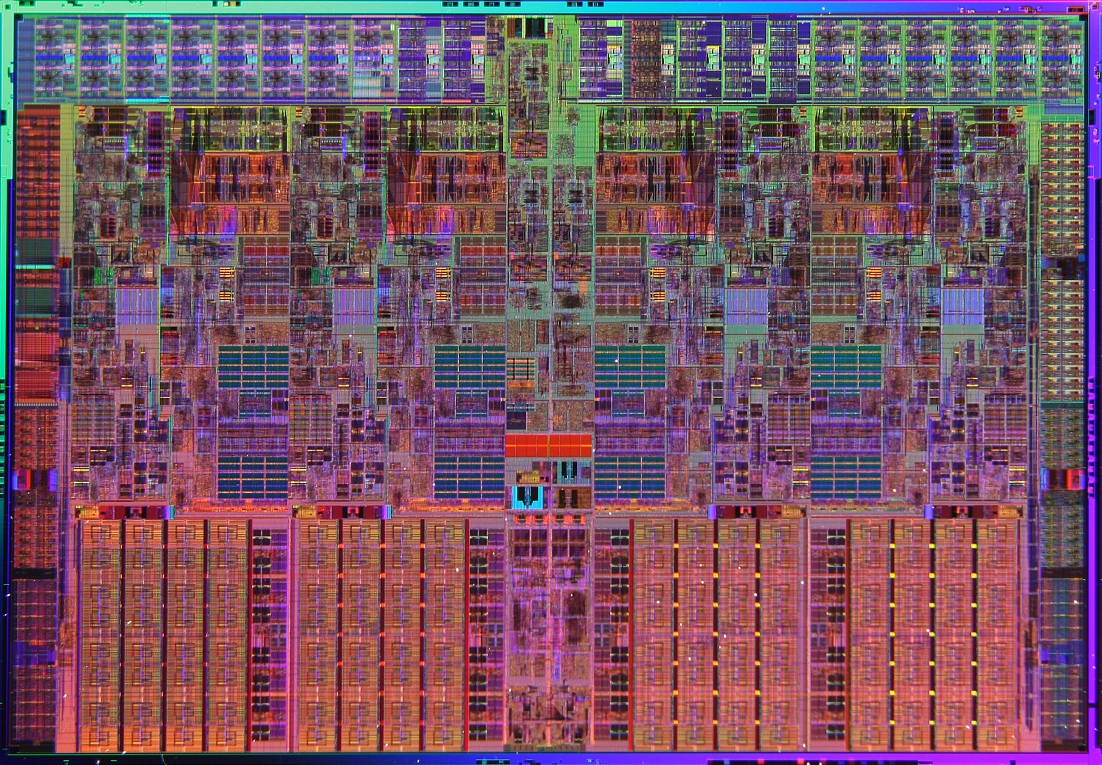

Although the Pentium 4 was also one of Intel's best-selling CPUs, its NetBurst architecture represented the rare occasion when the company had a second-place technology. Intel lost mind share and market share to AMD's superior Athlon CPUs. However, with the introduction of the Pentium M from Intel's Haifa design center and subsequent Core micro-architecture, Intel re-established its position as the CPU technology leader. Perhaps more impressive is that Core is itself an evolutionary branch from the P6 "Pentium Pro" architecture that dates back to 1995.

From a design standpoint, Intel has not kept all of its assets in one platform. It is one of the few companies with two profitable high-performance CPU architectures, the second being Itanium. After two decades of work and a decade of commercial availability, the Itanium investment is finally paying off: the company is now making money from it. As impressive as Intel's Haifa design center was in bringing the P6 architecture into the modern world, many of the core engineers from the P6 were transferred to the Itanium team. Combined with the HP PA-RISC engineers, the Itanium project has some of the best CPU engineers in the world working on it.

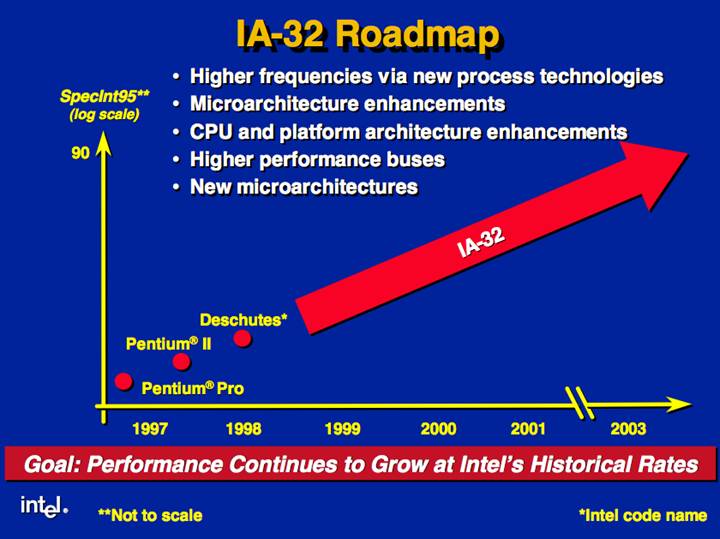

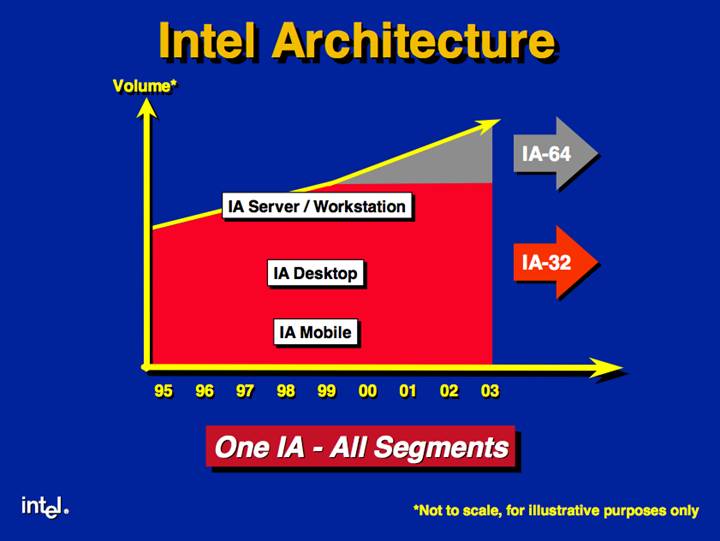

Even before the launch of Itanium, Intel outlined the future of x86 in a PowerPoint presentation: higher frequencies via new process technologies, micro-architecture enhancements, CPU and platform enhancements, and higher-performance buses. This meant things such as larger caches, faster bus frequencies with more bandwidth, and multiple cores--all things we're seeing with Nehalem and its use of large shared L3 caches, QPI, and scalable core counts. The company saw this roadmap more than 20 years ago, and knew that it would ultimately reach a limit. Today’s CPUs have already reached higher heat density than a nuclear reactor, and maintaining Moore’s Law will be a considerable challenge once five-nanometer gates are achieved.

The Itanium architecture was conceived two decades ago as insurance against that inevitable day. Unlike current CPUs, which rely on considerable die space for non-computing elements to optimize performance, such as out-of-order schedulers and branch prediction, Itanium was designed with the idea that silicon should be dedicated toward actual computational elements. Instead of trying to schedule things in real-time with only a few CPU clock cycles to make a decision, this type of optimization would be performed ahead of time, in software, during the compilation and development stage, when more time and more complex algorithms could be used. The CPU might then abandon traditional design cues and adopt a very long instruction word (VLIW) design, in which the CPU could be fed a large number of instructions with each clock cycle in order to maintain performance. It was an epic idea. So they called it EPIC (Explicitly Parallel Instruction Computing).
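To make the ahead-of-time scheduling idea concrete, here is a toy sketch in Python. It is not Intel's or HP's compiler algorithm, only an illustration of the principle: the "compiler" looks at which instructions depend on which results and packs independent ones into the same fixed-width bundle, so the hardware never has to work that out at run time. The `Instr` type, the `schedule` function, the three-slot bundle width, and the sample program are all assumptions made for this example. The sketch tracks only read-after-write dependencies and assumes every register is written exactly once.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Instr:
    """Hypothetical instruction: a name, one destination register, and its sources."""
    name: str
    dest: str
    srcs: Tuple[str, ...] = ()


def schedule(instrs: List[Instr], width: int = 3) -> List[List[str]]:
    """Greedily pack instructions into bundles of at most `width` slots.

    An instruction may only be placed in a bundle that comes after every
    bundle producing one of its source registers.
    """
    ready_at: Dict[str, int] = {}   # register -> first bundle index where it is usable
    bundles: List[List[str]] = []
    for ins in instrs:
        # Earliest bundle allowed by data dependencies (0 if no tracked sources).
        slot = max((ready_at.get(s, 0) for s in ins.srcs), default=0)
        # Skip over bundles that are already full.
        while slot < len(bundles) and len(bundles[slot]) >= width:
            slot += 1
        # Open a new bundle if we ran past the end.
        while len(bundles) <= slot:
            bundles.append([])
        bundles[slot].append(ins.name)
        ready_at[ins.dest] = slot + 1
    return bundles


# Illustrative program: three loads, then arithmetic that consumes them.
program = [
    Instr("load_a", "a"),
    Instr("load_b", "b"),
    Instr("add_c", "c", ("a", "b")),
    Instr("load_d", "d"),
    Instr("mul_e", "e", ("c", "d")),
    Instr("add_f", "f", ("a", "d")),
]

for i, bundle in enumerate(schedule(program)):
    print(i, bundle)
```

In this sample, the three independent loads share the first bundle, the two adds share the second, and the multiply waits for the third. A real IA-64 compiler has to deal with much more than this (slot types, predication, memory latencies), which is part of why the compiler problem turned out to be so hard.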

Unfortunately, the compiler technology to pull this off did not even exist when Intel and HP began the project. In fact, it would prove to be a more difficult challenge than originally anticipated, which led to years of lackluster sales and performance. The original Itanium, launched two years behind schedule and after more than a decade of development, was little more than a proof-of-concept. Itanium 2, launched two years behind Intel’s revised schedule, was what brought the project into profitability. Tukwila, "Itanium 3," is now three years late, based on the original timeline.


But being late doesn’t mean that it’s terrible. Intel has over 1,000 engineers working on Itanium, and it’s important to distinguish the core design of the VLIW Itanium from the weaknesses of the actual implementations that have led to the perception of Itanium as a massive failure. Dollar-for-dollar, Itanium has never been able to beat Opterons or Xeons in performance benchmarks. However, these benchmarks don’t capture the reliability features of the architecture, including core-level lockstep, which offers the equivalent of RAID mirroring for CPUs. With lockstep, two processor cores can be synchronized to the same clock, perform the same computations, and have their outputs verified against each other, or even compared against another CPU socket. HP Integrity NonStop systems employing Itanium boast 99.99999% uptime compared to the 99.999% offered by IBM’s mainframe line. This is the difference between roughly three seconds of downtime per year and roughly five minutes. For the companies finding that level of reliability important...well, that’s why Itanium is profitable. There’s nothing to stop Intel from producing a lower-end Itanium without these features, other than the lack of a commercial market for it.
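The gap between those two availability figures is easy to check with a little arithmetic. The snippet below is a minimal sketch of that calculation; the function name and the choice of a 365-day year are my own assumptions, not anything published by HP or IBM.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumes a 365-day year


def downtime_seconds_per_year(availability_percent: float) -> float:
    """Expected downtime per year, in seconds, for a given availability percentage."""
    return (1 - availability_percent / 100) * SECONDS_PER_YEAR


seven_nines = downtime_seconds_per_year(99.99999)
five_nines = downtime_seconds_per_year(99.999)

print(f"99.99999%: {seven_nines:.1f} seconds per year")
print(f"99.999%:   {five_nines / 60:.1f} minutes per year")
```

Each additional "nine" cuts the allowed downtime by a factor of ten, so two extra nines mean a hundredfold difference: about 3.2 seconds versus about 5.3 minutes per year.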

I don’t think that Itanium is going to enter the space held by Xeon or Opteron today. The Itanium market is likely to grow over the next decade as more hospitals move toward electronic medical records and digital imaging systems, where the perceived 99.99999% uptime is valuable. With the next version of Itanium supporting QPI, the same interconnect technology used in the current Nehalem-based CPUs, heterogeneous systems incorporating both traditional Xeon x86-64 CPUs and Itanium IA-64 CPUs will be possible.

We may never hit the true limit of Moore’s Law within our lifetime. Once you reach 5nm gates, you start to run into issues of electron tunneling. You can’t go any smaller, and therefore have to look at things like quantum computing and stacked chips. VLIW/IA-64 will be ready sooner than quantum computing, but stacked chips provide one way to maintain effective CPU cooling with very dense transistors.

The Itanium investment may also pay dividends with future versions of Larrabee. Though Larrabee’s thread-level parallelism is different from Itanium’s VLIW instruction-level parallelism, many of the compiler tools and optimizations can carry over. In fact, Nvidia’s own CUDA compiler is built from the Open64 compiler, an open-source tool originally developed for Itanium. All in all, Intel has the enviable position of having one of the best mainstream architectures in Core i3/i5/i7, and one of the best mainframe architectures in Itanium.


anamaniac (quoting Alan Dang): "And games will look pretty sweet, too. At least, that’s the way I see it." After several pages of technology mumbo jumbo jargon, that was a perfect closing statement. =)

Wicked article Alan. Sounds like you've had an interesting last decade indeed.

I'm hoping we all get to see another decade of constant change and improvement to technology as we know it.

Also interesting is that although you almost seemed to be attacking every company, you still managed to remain neutral.

Everyone has benefits and flaws, nice to see you mentioned them both for everybody.

Here's to another 10 years of success everyone!

"Simply put, software development has not been moving as fast as hardware growth. While hardware manufacturers have to make faster and faster products to stay in business, software developers have to sell more and more games"

Hardware is moving so fast and game developers just can't keep pace with it.

Ikke_Niels What I miss in the article is the following (well it's partly told):

I have already suspected for a long time that the videocards are gonna surpass the CPUs.

You already see it atm, videocards get cheaper, CPUs on the other hand keep getting pricier for the relative performance.

In the past I had the problem with upgrading my videocard, but with that pushing my CPU to the limit and thus not using the full potential of the videocard.

In my view we're at that point again: you buy a system and if you upgrade your videocard after a year/year-and-a-half you're most likely pushing your CPU to the limits, at least in the high-end part of the market.

Of course in the lower regions these problems are smaller but still, it "might" happen sooner than we think, especially if the NVidia design is as astonishing as they say and at the same time the major development of CPUs slowly breaks up.


lashton one of the most interesting and informative articles from toms hardware, what about another story about the smaller players, like Intel Atom and VLIW chips and so on

JeanLuc Out of all 3 companies Nvidia is the one that's facing the more threats. It may have a lead in the GPGPU arena but that's rather a niche market compared to consumer entertainment wouldn't you say? Nvidia are also facing problems at the low end of market with Intel now supplying integrated video on their CPU's which makes the need for low end video cards practically redundant and no doubt AMD will be supplying a similar product with Fusion at some point in the near future.

jontseng: "This means that we haven’t reached the plateau in 'subjective experience' either. Newer and more powerful GPUs will continue to be produced as software titles with more complex graphics are created. Only when this plateau is reached will sales of dedicated graphics chips begin to decline."

I'm surprised that you've completely missed the console factor.

The reason why devs are not coding newer and more powerful games is nothing to do with budgetary constraints or lack thereof. It is because they are coding for an XBox360 / PS3 baseline hardware spec that is stuck somewhere in the GeForce 7800 era. Remember only 13% of COD:MW2 units were PC (and probably less as a % sales given PC ASPs are lower).

So your logic is flawed, or rather you have the wrong end of the stick. Because software titles with more complex graphics are not being created (because of the console baseline), newer and more powerful GPUs will not continue to be produced.

Or to put it in more practical terms, because the most graphically demanding title you can possibly get is now three years old (Crysis), then NVidia has been happy to churn out G92 respins based on a 2006 spec.

Until the next generation of consoles comes through there is zero commercial incentive for a developer to build a AAA title which exploits the 13% of the market that has PCs (or the even smaller bit of that which has a modern graphics card). Which means you don't get phat new GPUs, QED.

And the problem is the console cycle seems to be elongating...

J

Swindez95 I agree with jontseng above ^. I've already made a point of this a couple of times. We will not see an increase in graphics intensity until the next generation of consoles come out simply because consoles is where the majority of games sales are. And as stated above developers are simply coding games and graphics for use on much older and less powerful hardware than the PC has available to it currently due to these last generation consoles still being the most popular venue for consumers.