Opinion: AMD, Intel, And Nvidia In The Next Ten Years

Intel Graphics In The Next Decade

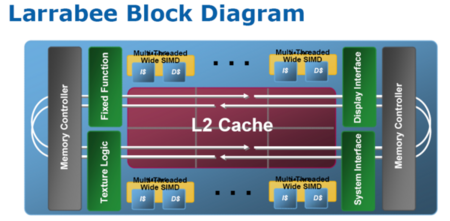

In a resounding recognition of the importance of GPU-computing principles, Intel made a commitment to the development of Larrabee. The chip consists of multiple P54C cores, and is intended to provide both a robust scientific computing platform and a high-performance enthusiast-level gaming product. But the cancellation of Larrabee as a consumer product demonstrates the difficulty in developing a flagship GPU.

Larrabee will still be released as a product for scientific computing, where the development community is responsible for developing and optimizing code. This suggests that the issues Intel faces lie in developing an optimized graphics driver and shader compiler, and in a relatively poor price/performance ratio compared to AMD’s and Nvidia’s offerings, rather than in a fundamental problem with the hardware.

Nvidia’s early successes were due in no small part to its excellent software team, led by Dwight Diercks. Under his watch, the company developed its Unified Driver Architecture and established design and testing methodologies that form the foundation of CUDA. While far from perfect, there is no question that, taken as a whole, Nvidia’s drivers have historically been very stable. AMD similarly has Ben Bar-Haim. After being recruited to ATI in 2001, he launched the Catalyst program in 2002, which is credited with bringing ATI’s Radeon drivers up to a level competitive with Nvidia’s. Both of these teams have been able to evolve their practices and know-how over successive generations of hardware.

Intel has no such history. It does not have the same experience in developing 3D graphics drivers, and it doesn't have a software team that has been able to evolve in tandem with the hardware development. Whether Intel has the technical expertise to develop high-performance graphics drivers for Larrabee-type architectures remains to be seen. As with Itanium, there is a good chance that the hardware will be ready too far in advance of the supporting software ecosystem.

Still, Intel has the broadest set of resources to succeed and the deepest pockets to allow for a long-term investment. It succeeded with Itanium, and it will succeed with Larrabee (albeit eventually). However, as with Itanium, success will be measured only in profit and in specific niches, and there is no guarantee that the company will succeed with Larrabee in the high-end gaming market. I remain cautiously optimistic.


anamaniac (quoting Alan Dang): "And games will look pretty sweet, too. At least, that’s the way I see it." After several pages of technology mumbo jumbo jargon, that was a perfect closing statement. =)

Wicked article Alan. Sounds like you've had an interesting last decade indeed.

I'm hoping we all get to see another decade of constant change and improvement to technology as we know it.

Also interesting is that although you almost seemed to be attacking every company, you still managed to remain neutral.

Everyone has benefits and flaws, nice to see you mentioned them both for everybody.

Here's to another 10 years of success everyone!

"Simply put, software development has not been moving as fast as hardware growth. While hardware manufacturers have to make faster and faster products to stay in business, software developers have to sell more and more games"

Hardware is moving so fast and game developers just can't keep pace with it.

Ikke_Niels: What I miss in the article is the following (well, it's partly told):

I have suspected for a long time that video cards are going to surpass CPUs.

You already see it at the moment: video cards get cheaper, while CPUs keep getting pricier for the relative performance.

In the past I had a problem when upgrading my video card: doing so pushed my CPU to the limit, so I wasn't using the full potential of the video card.

In my view we're at that point again: you buy a system, and if you upgrade your video card after a year or a year and a half, you're most likely pushing your CPU to the limit, at least in the high-end part of the market.

Of course, in the lower regions these problems are smaller, but still, it "might" happen sooner than we think, especially if the Nvidia design is as astonishing as they say and at the same time the major development of CPUs slowly breaks up.

lashton: One of the most interesting and informative articles from Tom's Hardware. What about another story about the smaller players, like Intel Atom and VLIW chips and so on?

JeanLuc: Out of all 3 companies, Nvidia is the one that's facing the most threats. It may have a lead in the GPGPU arena, but that's rather a niche market compared to consumer entertainment, wouldn't you say? Nvidia is also facing problems at the low end of the market, with Intel now supplying integrated video on its CPUs, which makes low-end video cards practically redundant, and no doubt AMD will be supplying a similar product with Fusion at some point in the near future.

jontseng: "This means that we haven’t reached the plateau in 'subjective experience' either. Newer and more powerful GPUs will continue to be produced as software titles with more complex graphics are created. Only when this plateau is reached will sales of dedicated graphics chips begin to decline."

I'm surprised that you've completely missed the console factor.

The reason why devs are not coding newer and more powerful games is nothing to do with budgetary constraints or lack thereof. It is because they are coding for an XBox360 / PS3 baseline hardware spec that is stuck somewhere in the GeForce 7800 era. Remember only 13% of COD:MW2 units were PC (and probably less as a % sales given PC ASPs are lower).

So your logic is flawed, or rather you have the wrong end of the stick. Because software titles with more complex graphics are not being created (because of the console baseline), newer and more powerful GPUs will not continue to be produced.

Or to put it in more practical terms, because the most graphically demanding title you can possibly get is now three years old (Crysis), then NVidia has been happy to churn out G92 respins based on a 2006 spec.

Until the next generation of consoles comes through, there is zero commercial incentive for a developer to build a AAA title which exploits the 13% of the market that has PCs (or the even smaller bit of that which has a modern graphics card). Which means you don't get phat new GPUs, QED.

And the problem is the console cycle seems to be elongating...

J

Swindez95: I agree with jontseng above ^. I've already made a point of this a couple of times. We will not see an increase in graphics intensity until the next generation of consoles comes out, simply because consoles are where the majority of game sales are. And as stated above, developers are simply coding games and graphics for use on much older and less powerful hardware than the PC currently has available to it, due to these last-generation consoles still being the most popular venue for consumers.