Do The Meltdown and Spectre Patches Affect PC Gaming Performance? 10 CPUs Tested

Exploiting The Unexploitable

Meltdown and Spectre are vulnerabilities exploited through side-channel attacks, which are incredibly difficult to block. These occur when an attacker observes the traits of a computer, be it the timing of certain operations or even noise and light patterns, and uses that information to compromise security.

It all starts with data, of course. A CPU loads information from main memory into its registers by requesting the contents of a virtual address, which is in turn mapped to a physical address. While fulfilling this request, the CPU verifies the address' permission bits, indicating whether the process has permission to access the memory address, or if only the kernel can access it. The system approves or denies access accordingly. The industry assumed this technique securely bifurcated memory into protected regions, so operating systems automatically map the entire kernel into the user space memory map's virtual address space. That means the CPU can attempt to access all of the virtual addresses if needed, but it also exposes the entire virtual address map to the user space.
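To make the permission check concrete, here is a minimal sketch of a page-table lookup. It is a toy model, not how any real MMU or operating system is implemented: the `PageEntry` type, the `translate` function, the 4 KiB page size, and the sample table are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # assumed 4 KiB pages, for illustration only


@dataclass(frozen=True)
class PageEntry:
    frame: int     # physical frame number the page maps to
    user_ok: bool  # permission bit: True if user-mode code may access the page


class AccessDenied(Exception):
    """Raised when a lookup fails the permission check."""


def translate(page_table: dict, vaddr: int, user_mode: bool) -> int:
    """Map a virtual address to a physical one, enforcing the permission bit."""
    page, offset = divmod(vaddr, PAGE_SIZE)
    entry = page_table.get(page)
    if entry is None:
        raise AccessDenied(f"page {page} is not mapped")
    if user_mode and not entry.user_ok:
        raise AccessDenied(f"page {page} is kernel-only")
    return entry.frame * PAGE_SIZE + offset


# User and kernel pages share one virtual address map, as described above.
table = {
    0: PageEntry(frame=7, user_ok=True),   # hypothetical user page
    1: PageEntry(frame=3, user_ok=False),  # hypothetical kernel page
}
```

In this model, a user-mode lookup of an address in page 1 raises `AccessDenied`, while the same lookup in kernel mode succeeds. The point is that the kernel page is present in the map the whole time; only the permission bit stands between it and user code.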

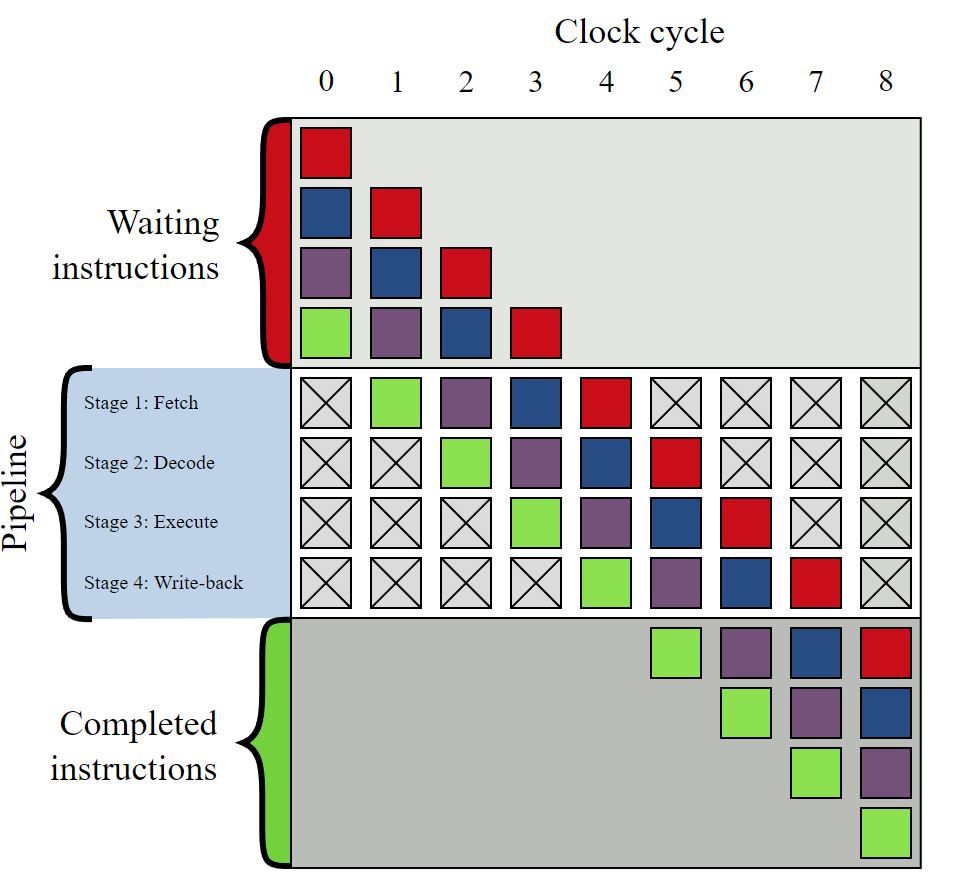

The problem has to do with speculative execution, which is part of out-of-order processing. Pipelined CPU cores process instructions in stages, such as instruction fetch, instruction decode, execute, memory access, and register write-back. Today’s processors break each of these fundamental stages down further, sometimes into 20 or more stages. This helps facilitate higher clock rates.

CPUs employ multiple pipelines to allow for parallelized instruction processing. That's why, in the pipeline diagram, we see four different colors passing simultaneously through the four stages. Instruction branches can cause the pipeline to switch to another instruction sequence, creating a stall. That means the pipeline doesn’t process data for several clock cycles while it waits for inputs from memory.
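A rough way to see why pipelining helps, and why stalls hurt, is to count cycles. The sketch below uses the textbook idealization of one instruction entering the pipeline per cycle. The function names, the five-stage depth, and the stall length are assumptions for illustration, not measurements of any real CPU.

```python
def unpipelined_cycles(instructions: int, stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return instructions * stages


def pipelined_cycles(instructions: int, stages: int, stall_cycles: int = 0) -> int:
    """Ideal pipeline: the first instruction needs `stages` cycles, each later
    one completes a cycle after its predecessor. Stalls add idle cycles."""
    if instructions == 0:
        return 0
    return stages + (instructions - 1) + stall_cycles


# Four instructions through an assumed five-stage pipeline.
print(unpipelined_cycles(4, 5))                # 20
print(pipelined_cycles(4, 5))                  # 8
print(pipelined_cycles(4, 5, stall_cycles=3))  # 11, with a hypothetical 3-cycle stall
```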

To help avoid this, when the processor encounters a branch, its prediction unit tries to guess which instruction sequence the processor will need next. But it makes that determination before processing the instruction. The processor then fetches the predicted branch's instructions and speculatively executes them. This avoids the latency normally incurred when the pipeline stalls on the branch and then has to fetch the next instruction from memory.
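For a feel of how a predictor can guess well, here is a sketch of a two-bit saturating counter, a classic textbook scheme. Modern CPUs use far more elaborate predictors, so treat this as an illustration of the idea only. The class name, the starting state, and the sample loop pattern are assumptions.

```python
class TwoBitPredictor:
    """States 0-1 predict 'not taken', states 2-3 predict 'taken'."""

    def __init__(self) -> None:
        self.state = 2  # assumed starting point: weakly 'taken'

    def predict(self) -> bool:
        return self.state >= 2

    def update(self, taken: bool) -> None:
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def accuracy(outcomes: list) -> float:
    """Fraction of branches predicted correctly over a sequence of outcomes."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct / len(outcomes)


# A loop branch taken nine times and then not taken, repeated ten times.
loop_branch = ([True] * 9 + [False]) * 10
print(accuracy(loop_branch))  # 0.9
```

The only misses are the loop exits, because a single surprise nudges the counter without flipping its prediction.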

Depending on where the data resides (L1, L2, or L3 cache, or RAM), fetching it takes anywhere from about a nanosecond to roughly 100 nanoseconds. A trip to RAM is slow compared to the sub-nanosecond latency of processor cycles, so having the instruction already in-flight speeds operation tremendously. Most speculative operations are committed because branch predictors often have a 90%+ success rate. If the instruction isn't needed (a misprediction), the processor simply discards the speculative results and flushes its pipeline.
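To put the latency gap in cycle terms, the following back-of-the-envelope sketch converts nanoseconds to clock cycles and averages the cost of mispredictions. The 4 GHz clock, the 100 ns memory access, and the 20-cycle flush penalty are assumed round numbers, not figures from our testing.

```python
def stall_cycles(latency_ns: float, clock_ghz: float) -> float:
    """Clock cycles that elapse while waiting `latency_ns` at `clock_ghz`."""
    return latency_ns * clock_ghz


def expected_penalty(accuracy: float, flush_cycles: float) -> float:
    """Average cycles lost per branch when mispredictions cost `flush_cycles`."""
    return (1.0 - accuracy) * flush_cycles


# Assumed values: 100 ns memory access on a 4 GHz core.
print(stall_cycles(100, 4.0))  # 400.0

# Assumed values: 90% predictor accuracy, 20-cycle pipeline flush.
print(round(expected_penalty(0.90, 20), 2))  # 2.0
```

Under these assumptions, waiting on memory costs hundreds of cycles, while the averaged cost of guessing wrong is a couple of cycles per branch. That gap is why speculation is worth the occasional flush.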

Google's researchers found a sliver of opportunity in how the system handles memory access during speculative execution. The security checks that normally keep user space and kernel memory separate don't complete fast enough during speculative execution. As a result, the processor can momentarily fetch, and speculatively operate on, data that it shouldn't be able to access. The system eventually does deny access and discards the results, but because this doesn't happen fast enough, the speculative work leaves traces in the cache and a window of opportunity opens. Meltdown uses that window to run code whose cache footprint depends on the protected data, all the while timing its own memory accesses. That allows it to infer what data is held in the protected memory.
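The timing idea can be illustrated with a toy simulation. This is not exploit code and touches no real hardware: the `ToyCache` class, the latency constants, and the `speculative_touch` stand-in are all hypothetical, and the "secret" is simply passed in as an argument. It only models the principle that a discarded operation can leave one cache line warm, and that timing reveals which one.

```python
HIT_NS, MISS_NS = 1, 100  # assumed, illustrative latencies


class ToyCache:
    """A simulated cache that only tracks which lines are present."""

    def __init__(self) -> None:
        self.lines = set()

    def flush(self) -> None:
        self.lines.clear()

    def access(self, line: int) -> int:
        """Return a simulated latency and leave the line cached."""
        latency = HIT_NS if line in self.lines else MISS_NS
        self.lines.add(line)
        return latency


def speculative_touch(cache: ToyCache, secret_byte: int) -> None:
    """Stand-in for discarded speculative work: the result is thrown away,
    but the cache line indexed by the secret value stays warm."""
    cache.access(secret_byte)


def recover_byte(cache: ToyCache) -> int:
    """Time one access per possible byte value; the fast one stands out."""
    timings = {value: cache.access(value) for value in range(256)}
    return min(timings, key=timings.get)


cache = ToyCache()
cache.flush()
speculative_touch(cache, secret_byte=0x2A)
recovered = recover_byte(cache)
print(hex(recovered))  # 0x2a
```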

In the case of Meltdown, an attacker can read passwords, encryption keys, or other data from protected memory. That data could also be used to assume control of the system, rendering all other forms of protection useless. The biggest concern for data centers is that the exploit also allows an application resident in one virtual machine to access the memory of another virtual machine. This means an attacker could rent an instance on a public cloud and collect information from other VMs on the same server.

Attackers can exploit this vulnerability with JavaScript, so you could expose your system simply by visiting a nefarious web site. Browser developers have updated their products to reduce timing granularity. But attackers can also just use normal code delivered via malware or other avenues to execute an attack.
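Why does coarser timing help? A sketch, assuming a timer that only ticks once per `resolution_ns` and a single measurement that happens to start on a tick boundary. The function names and the 1 ns, 100 ns, and 1,000 ns figures are assumptions for illustration, not the values any particular browser uses.

```python
def coarse_timer(t_ns: int, resolution_ns: int) -> int:
    """Round a timestamp down to the timer's resolution."""
    return (t_ns // resolution_ns) * resolution_ns


def measured(start_ns: int, duration_ns: int, resolution_ns: int) -> int:
    """Duration as seen through a timer with the given resolution."""
    end = coarse_timer(start_ns + duration_ns, resolution_ns)
    return end - coarse_timer(start_ns, resolution_ns)


# With a 1 ns timer, an assumed cache hit (1 ns) and miss (100 ns) differ.
print(measured(0, 1, 1), measured(0, 100, 1))        # 1 100

# With a 1,000 ns timer, both measurements collapse to zero.
print(measured(0, 1, 1000), measured(0, 100, 1000))  # 0 0
```

This only shows a single sample. Repeated measurements and other timing sources can recover some of the signal, which is why coarser timers reduce the exposure rather than eliminate it.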

For now, the Meltdown operating system patch separates the kernel's page tables from those of user-space processes, so kernel memory is no longer mapped while application code runs. That adds work to every transition between user space and the kernel, which increases system call latency and slows applications that issue many kernel calls. Applications that tend to remain in user space are less affected. Intel's post-Broadwell processors have a PCID (Process-Context Identifiers) feature that speeds the process, so they don't experience as much of a slowdown as older models.
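A simple model shows why system-call-heavy workloads feel the patch more than workloads that stay in user space. The function name, the call rates, and the 200 ns of added cost per call are hypothetical figures picked for illustration. They are not measurements.

```python
def overhead_fraction(syscalls_per_second: float, added_ns_per_syscall: float) -> float:
    """Fraction of each second spent on the extra per-call cost."""
    return syscalls_per_second * added_ns_per_syscall * 1e-9


# Hypothetical workload that mostly stays in user space: 10,000 calls per second.
light = overhead_fraction(10_000, 200)

# Hypothetical I/O-heavy workload: 1,000,000 calls per second.
heavy = overhead_fraction(1_000_000, 200)

print(f"{light:.1%}")  # 0.2%
print(f"{heavy:.1%}")  # 20.0%
```

The added cost per call is identical in both cases. Only the call rate changes, and with it the share of time lost.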

The Spectre exploit is much more nefarious because it can exploit a wider range of the speculative execution engine's capabilities to access kernel memory or data from other applications. Some researchers claim that fixing this exploit fully could require a fundamental re-tooling of all processor architectures, so it's possible we'll live with some form of this vulnerability for the foreseeable future. Fortunately, the exploit is extremely hard to pull off, requiring an elevated level of knowledge of the target processor and application. Intel and others have come up with patches for the current Spectre variants, but it is possible that vendors will play whack-a-mole as new Spectre derivatives arrive in the future.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

madvicker The majority of PC users are going to be on older CPUs than the ones you've tested. These results are only pertinent to the small % of people who have recently bought a CPU / new PC. What about the rest, the majority with older CPUs? That would be a much more useful and interesting analysis for most readers....

arielmansur Newer cpus don't get a performance penalty.. but older ones sure do get a noticeable one..

salgado18 20680472 said: The majority of PC users are going to be on older CPUs than the ones you've tested. These results are only pertinent to the small % of people who have recently bought a CPU / new PC. What about the rest, the majority with older CPUs? That would be a much more useful and interesting analysis for most readers....

Last page, third paragraph:

"so it's possible that the impact on older CPUs could be minor as well (game testing on those is in-progress)."

ddearborn007 Hmmm

3/4 of all personal computers in the world today are NOT running windows 10. I don't know the exact percentage of gaming systems that are NOT running windows 10, but surely it is substantial.

Why wasn't the performance hit measured on the operating system running 3/4 of all PC's in the world today published immediately? To date, it appears that these numbers are being withheld from the public; the only reason has to be that the performance hit is absolutely massive in many cases.........Oh, and out of the total number of PC's used world wide, "gaming" PC's are a very small percentage, again begging the question of why tests are only being published for windows 10....

LORD_ORION Need to test older CPUs... or is this article designed by Intel to stop people from returning recently purchased CPUs.

RCaron Excellent article Paul!

I have a question.

I read originally that AMD Zen architecture had near-immunity to Spectre variant 2 because a CPU specific code (password if you will) (that changes with each CPU) was required in order to exploit the CPU. Which is why AMD was claiming that Zen was almost immune to Spectre variant 2. Is this not the case?

AMD continues to insist that Spectre 2 is difficult to exploit due to CPU architecture. You left this out, and you continually lumped AMD with Intel with respect to Spectre 2 vulnerability.

This is misleading to your readers, and portrays a bias towards Intel.

https://www.amd.com/en/corporate/speculative-execution

tripleX 20680748 said: Hmmm

3/4 of all personal computers in the world today are NOT running windows 10. I don't know the exact percentage of gaming systems that are NOT running windows 10, but surely it is substantial.

Why wasn't the performance hit measured on the operating system running 3/4 of all PC's in the world today published immediately? To date, it appears that these numbers are being withheld from the public; the only reason has to be that the performance hit is absolutely massive in many cases.........Oh, and out of the total number of PC's used world wide, "gaming" PC's are a very small percentage, again begging the question of why tests are only being published for windows 10....

Global OS penetration for Win 10 and Win 7 is effectively tied.

Paul Alcorn 20680892 said: Excellent article Paul!

I have a question.

I read originally that AMD Zen architecture had near-immunity to Spectre variant 2 because a CPU specific code (password if you will) (that changes with each CPU) was required in order to exploit the CPU. Which is why AMD was claiming that Zen was almost immune to Spectre variant 2. Is this not the case?

AMD continues to insist that Spectre 2 is difficult to exploit due to CPU architecture. You left this out, and you continually lumped AMD with Intel with respect to Spectre 2 vulnerability.

This is misleading to your readers, and portrays a bias towards Intel.

https://www.amd.com/en/corporate/speculative-execution

From the AMD page (which you linked)

Google Project Zero (GPZ) Variant 1 (Bounds Check Bypass or Spectre) is applicable to AMD processors.

And...

GPZ Variant 2 (Branch Target Injection or Spectre) is applicable to AMD processors.

While we believe that AMD’s processor architectures make it difficult to exploit Variant 2, we continue to work closely with the industry on this threat. We have defined additional steps through a combination of processor microcode updates and OS patches that we will make available to AMD customers and partners to further mitigate the threat.

AMD will make optional microcode updates available to our customers and partners for Ryzen and EPYC processors starting this week. We expect to make updates available for our previous generation products over the coming weeks. These software updates will be provided by system providers and OS vendors; please check with your supplier for the latest information on the available option for your configuration and requirements.

Linux vendors have begun to roll out OS patches for AMD systems, and we are working closely with Microsoft on the timing for distributing their patches. We are also engaging closely with the Linux community on development of “return trampoline” (Retpoline) software mitigations.

AMD hasn't released the microcode updates yet, but to its credit, it's probably better to make sure it is validated fully before release.

DXRick Do the OS patches (without the microcode patches) fix the two exploits? If so, why would we even want the Intel patches at all?