Nvidia GeForce GTX 780 Review: Titan’s Baby Brother Is Born

At $1,000, GeForce GTX Titan only made sense for folks building small form factor PCs and multi-GPU powerhouses. Now there's another option with every bit of panache, a slightly de-tuned GPU, and a price tag $350 lower: meet Nvidia's GeForce GTX 780.

GPU Boost 2.0 And Troubleshooting Overclocking

GPU Boost 2.0

I didn’t have a chance to do a ton of testing with Nvidia’s second-generation GPU Boost technology in my GeForce GTX Titan story, but the same capabilities carry over to GeForce GTX 780. Here’s the breakdown:

GPU Boost is Nvidia’s mechanism for adapting the performance of its graphics cards to the workloads they encounter. As you probably already know, games place different demands on a GPU’s resources. Historically, clock rates had to be set with the worst-case scenario in mind, so under “light” loads, performance was left on the table. GPU Boost changes that by monitoring a number of different variables and adjusting clock rates up or down as the readings allow.

In its first iteration, GPU Boost operated within a defined power target—170 W in the case of Nvidia’s GeForce GTX 680. However, the company’s engineers figured out that they could safely exceed that power level, so long as the graphics processor’s temperature was low enough. Therefore, performance could be further optimized.
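To make the first-generation behavior concrete, here is a minimal Python sketch of a power-target policy. It assumes, purely for illustration, that the driver has an estimate of board power at each clock bin for the current workload. The bin values in the usage lines are made up, and this is not Nvidia's actual algorithm.

```python
# Hypothetical sketch of a power-target boost policy (GPU Boost 1.0 style).
# Clock bins and wattage estimates are illustrative, not Nvidia data.

POWER_TARGET_W = 170.0  # GeForce GTX 680's power target

def pick_boost_clock(bins: list[tuple[int, float]],
                     power_target_w: float = POWER_TARGET_W) -> int:
    """Return the highest clock (MHz) whose estimated board power fits the target.

    `bins` holds (clock_mhz, estimated_watts) pairs for the current workload.
    Falls back to the lowest listed clock if no bin fits.
    """
    fitting = [clock for clock, watts in bins if watts <= power_target_w]
    if fitting:
        return max(fitting)
    return min(clock for clock, _ in bins)

# A "light" game leaves headroom under 170 W, so the top bin is allowed;
# a heavy one does not.
light = [(1006, 140.0), (1058, 155.0), (1110, 168.0)]
heavy = [(1006, 165.0), (1058, 178.0), (1110, 190.0)]
print(pick_boost_clock(light))  # 1110
print(pick_boost_clock(heavy))  # 1006
```

The point of the sketch is that temperature never enters the decision: a cool chip that is near the power target still stops climbing, which is the headroom the second generation goes after.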

Practically, GPU Boost 2.0 differs only in that Nvidia now raises the GPU’s clock rate against an 80 °C thermal target rather than a power ceiling. That means you should see higher frequencies and voltages, up to 80 °C and within whatever fan profile you’re willing to tolerate (setting a higher fan speed pushes temperatures lower, yielding more benefit from GPU Boost). It still reacts within roughly 100 ms, so there’s plenty of room for Nvidia to make this feature more responsive in future implementations.
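The thermal-target idea can be sketched as a simple feedback step that runs on each polling interval (roughly every 100 ms, per the text). This is a hedged illustration, not Nvidia's firmware: the 13 MHz bin size, the 863 to 1006 MHz clock window, and the 2 °C hysteresis band are assumptions chosen only to make the example concrete.

```python
# Hypothetical sketch of a thermal-target boost loop (GPU Boost 2.0 style).
# BIN_MHZ, the clock window, and the hysteresis band are assumptions.

BIN_MHZ = 13  # assumed clock step per adjustment

def next_clock(clock_mhz: int, temp_c: float, *, target_c: float = 80.0,
               base_mhz: int = 863, max_mhz: int = 1006,
               hysteresis_c: float = 2.0) -> int:
    """One control step, run on each polling interval.

    Step up one bin while the GPU is comfortably below the thermal target,
    step down one bin once it reaches the target, otherwise hold. The
    result is clamped to the [base_mhz, max_mhz] window.
    """
    if temp_c >= target_c:
        clock_mhz -= BIN_MHZ
    elif temp_c < target_c - hysteresis_c:
        clock_mhz += BIN_MHZ
    return max(base_mhz, min(max_mhz, clock_mhz))

clock = 900
for temp in (65.0, 70.0, 79.0, 81.0):
    clock = next_clock(clock, temp)
    print(temp, clock)
# 65.0 913
# 70.0 926
# 79.0 926   (inside the hysteresis band: hold)
# 81.0 913   (at/over target: back off one bin)
```

Because `target_c` is a parameter, raising it to 85 or 90 keeps the loop in its step-up branch at temperatures that would otherwise force a step down. A more aggressive fan curve has a similar effect from the other side by keeping `temp_c` lower.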

Of course, thermally dependent adjustments complicate performance testing more than the first version of GPU Boost did. Anything able to nudge GK110’s temperature up or down alters the chip’s clock rate, so it’s difficult to achieve consistency from one benchmark run to the next. In a lab setting, the best you can hope for is a steady ambient temperature.
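One way to keep an eye on that variability is to repeat each benchmark and check how far the results drift. The helper below is a minimal sketch under stated assumptions: it measures the max-to-min spread of average frame rates as a percentage of the mean, and the 2% threshold is an arbitrary example, not the methodology used in this review.

```python
# Hypothetical helper for checking benchmark run-to-run consistency.
# The 2% default threshold is an arbitrary example value.
from statistics import mean

def spread_pct(fps_runs: list[float]) -> float:
    """Max-min spread of average frame rates, as a percentage of their mean."""
    if not fps_runs:
        raise ValueError("need at least one run")
    return (max(fps_runs) - min(fps_runs)) / mean(fps_runs) * 100.0

def is_consistent(fps_runs: list[float], threshold_pct: float = 2.0) -> bool:
    """True if the spread across runs stays within threshold_pct."""
    return spread_pct(fps_runs) <= threshold_pct

print(spread_pct([99.5, 100.0, 100.5]))     # 1.0
print(is_consistent([95.0, 100.0, 105.0]))  # False (10% spread)
```

A large spread on a thermally boosted card is a hint that the GPU had not settled to a steady temperature, and therefore a steady clock, before measurement.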

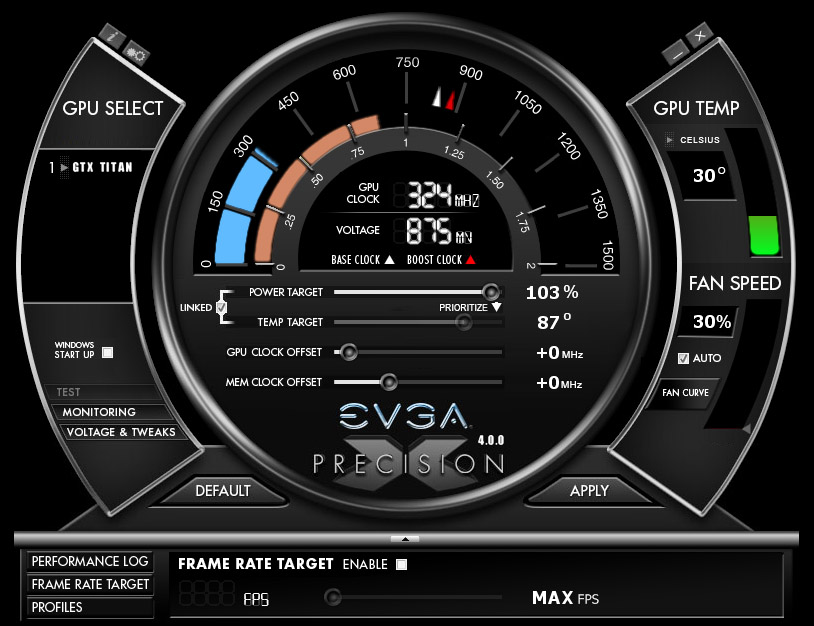

Beyond what I wrote for Titan, note that you can also raise the thermal target. So, for example, if you want GeForce GTX 780 to modulate clock rate and voltage against an 85 °C or 90 °C ceiling, that’s a configurable setting.


Eager to keep GK110 as far away from your upper bound as possible? The 780’s fan curve is completely adjustable, letting you specify fan duty cycle as a function of temperature.
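A user-defined fan curve is typically a handful of (temperature, duty cycle) points with linear interpolation between them. The sketch below assumes that model; the curve points are invented for illustration and are not the GTX 780's default profile or the exact behavior of any particular tuning utility.

```python
# Hypothetical sketch of a fan curve: duty cycle (%) as a function of temperature.
# The curve points are illustrative, not the GTX 780's default profile.
from bisect import bisect_right

FAN_CURVE = [(30.0, 30.0), (60.0, 45.0), (75.0, 70.0), (85.0, 100.0)]  # (temp_c, duty_pct)

def fan_duty(temp_c: float, curve: list[tuple[float, float]] = FAN_CURVE) -> float:
    """Linearly interpolate the fan duty cycle between curve points.

    Below the first point the first duty applies; above the last point
    the last duty applies.
    """
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return curve[0][1]
    if temp_c >= temps[-1]:
        return curve[-1][1]
    i = bisect_right(temps, temp_c)  # curve[i-1] temp <= temp_c < curve[i] temp
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)

print(fan_duty(45.0))  # 37.5
print(fan_duty(80.0))  # 85.0
```

Steepening the segments just below the thermal target trades noise for temperature headroom, which in turn gives a thermally driven boost scheme more room to raise clocks.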

Troubleshooting Overclocking

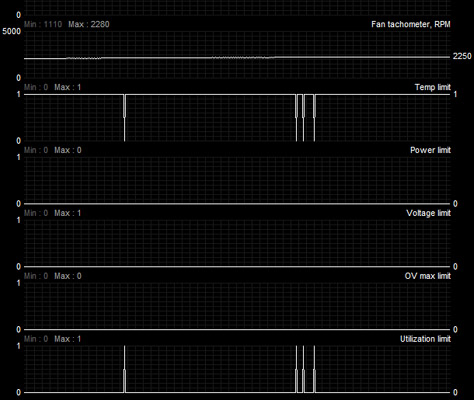

Back when Nvidia briefed me on GeForce GTX Titan, company reps showed me an internal tool able to read the status of various sensors, which made it possible to diagnose problematic behavior. If an overclock was pushing GK110’s temperature too high, causing a throttle response, it’d log that information.

The company now exposes that functionality in apps like Precision X, which raise a “reasons” flag whenever a limit that prevents an effective overclock is crossed. This is very cool; you’re no longer left guessing about bottlenecks. There’s also an OV max limit readout that tells you when you’re pushing the GPU’s absolute peak voltage. If this flag pops, Nvidia says you risk frying your card. Consider that a good place to back off your overclocking effort.
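Conceptually, a “reasons” readout is a status word with one bit per limiter. The decoder below is a hedged sketch: the `LimitReason` bit layout and the advice strings are invented for illustration and do not reflect Precision X's internals. Nvidia's NVML library exposes a comparable bitmask through `nvmlDeviceGetCurrentClocksThrottleReasons`, but with its own bit definitions.

```python
# Hypothetical decoder for a "reasons" status word like the one Precision X surfaces.
# The bit layout and advice strings are invented for illustration only.
from enum import IntFlag

class LimitReason(IntFlag):
    POWER = 1 << 0    # board power limit reached
    THERMAL = 1 << 1  # thermal target reached
    VOLTAGE = 1 << 2  # voltage limit reached
    OV_MAX = 1 << 3   # absolute peak voltage (the "OV max" readout)

ADVICE = {
    LimitReason.POWER: "raise the power target or lower the clock offset",
    LimitReason.THERMAL: "raise the thermal target or the fan curve",
    LimitReason.VOLTAGE: "the overclock needs more voltage than the limit allows",
    LimitReason.OV_MAX: "at absolute peak voltage: back off the overclock",
}

def explain(status: int) -> list[str]:
    """List each set flag, in definition order, with a suggested response."""
    flags = LimitReason(status)
    return [f"{reason.name}: {ADVICE[reason]}"
            for reason in LimitReason if reason in flags]

for line in explain(0b1010):  # THERMAL and OV_MAX set
    print(line)
```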



CrisisCauser: A good alternative to the Titan. $650 was the original GTX 280 price before AMD came knocking with the Radeon 4870. I wonder if AMD has another surprise in store.

It's definitely a more reasonably priced alternative to the Titan, but it's still lacking in compute, which might disappoint some, but I don't think it'll bother most people. Definitely not bad bang for the buck at that price range, considering how performance scales with higher-priced products, but it could've been better; $550-$600 seems like a more reasonable price for this.


hero1: This is what I have been waiting for. Nice review, and I like the multi-GPU tests. Thanks. Time to search the stores. Woohoo!!

natoco: Too much wasted silicon (just a failed high-spec chip made last year, even the Titan), rebadged with all the failed sections turned off. I wanted to upgrade my GTX 480 to a 780, but for the die size, the performance is too low, unfortunately. It has certainly not hit the trifecta like the 680 did. Would you buy a V8 with 2 cylinders turned off even if it were cheaper? No, because it would not be as smooth as it was engineered to be, so, using that analogy, no deal. Customer lost till next year, when they release a chip to the public that's all switched on; I will never go down the turned-off-parts route again.

EzioAs: In my opinion, this card and the Titan are actually a clever product release by Nvidia. Much like the GTX 680 and GTX 670, the Titan was released at a higher price (like the GTX 680), while the slightly slower GTX 780 (the GTX 670, in the GTX 600-series case) comes in at a significantly lower price but performs quite close to its higher-end brother. We all remember that when the GTX 670 launched, it made the GTX 680 look bad, because the GTX 670 cost 80% of the price while maintaining around 90-95% of the performance.

Of course, one could argue that as we get closer to higher-end products, the performance increase is always minimal and the price-to-performance ratio starts to climb. However, for the past 3-4 years (or so, I guess), never has the second-highest-end GPU had such a small performance difference from the highest-end GPU. The gap is usually significant enough that the highest-end GPU (GTX x80) still has its place.

Tl;dr,

The GTX Titan was released to make the GTX 780 look incredibly good, and people (especially on the internet) will spread the news fast enough, claiming the $650 release price for the GTX 780 is good and reasonable, and people who didn't even bother reading reviews and benchmarks will take their word and pay the premium for the GTX 780.

Nvidia is taking a different route to compete with AMD, or one could say that they're not even trying to compete with AMD on price/performance (at least for high-end products).

mouse24, quoting natoco: "Would you buy a V8 with 2 cylinders turned off even if it were cheaper?"

That's a pretty bad analogy. A GPU is still smooth even with some of the cores, VRAM, etc. turned off; it doesn't increase latency, frame times, etc.

godfather666: "But, I’m going to wait a week before deciding what I’d spend my money on in the high-end graphics market."

I must've missed something. Why wait a week?

JamesSneed: Natoco, your comment was so clueless. It is likely that every single CPU or GPU you have ever purchased has fused-off parts. Even the $1,000 Extreme Intel CPU has a little bit fused off, since it's a 6-core CPU that uses an 8-core Xeon as its starting point. Your comparison to a car is idiotic.

016ive: You'd have to be an idiot to buy a Titan now that the 780 is here... Me, I could afford neither :)