Nvidia GeForce GTX 780 Review: Titan’s Baby Brother Is Born

At $1,000, GeForce GTX Titan only made sense for folks building small form factor PCs and multi-GPU powerhouses. Now there's another option with every bit of panache, a slightly de-tuned GPU, and a price tag $350 lower: meet Nvidia's GeForce GTX 780.

Power Consumption And GPU Boost

Clock Rates and Thermal Limits

We already covered GPU Boost 2.0 and the changes, subtle though they may be, that Nvidia made to GeForce GTX 780 compared to Titan. Specifically, we see that both cards start limiting core clock rates once the GPU reaches 60°C, dialing back the performance gains attributable to GPU Boost a bit. This becomes more pronounced on the GeForce GTX 780 as its core temperature rises, though the 780 exhibits much greater and more varied clock speed fluctuations, while the GTX Titan is basically limited to three clock levels. And while GeForce GTX Titan practically loses the ability to boost its clock speeds as soon as it reaches its thermal limit, the graph shows the 780’s core frequency still spiking upward consistently.

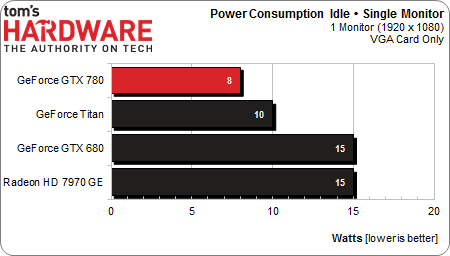

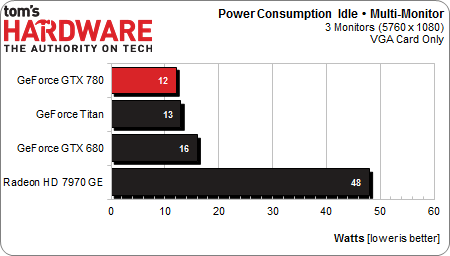

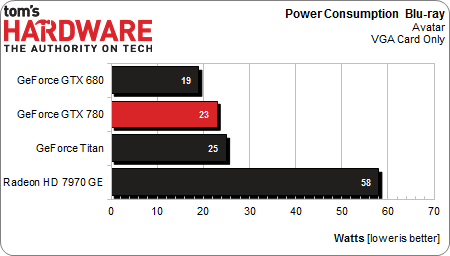

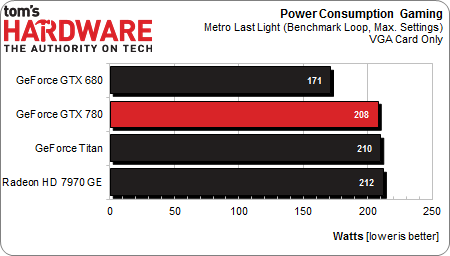

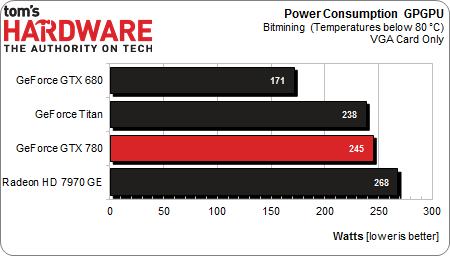

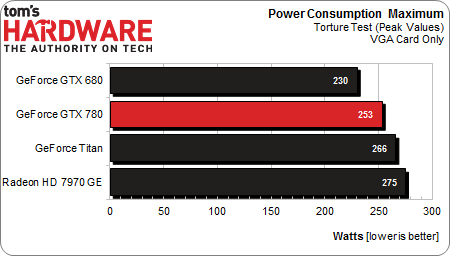

Power Consumption

In less demanding applications (including games), the GeForce GTX 780 uses only slightly less power than Titan. Although both cards bear the same TDP, this is still somewhat surprising; you'd think that the pared-back hardware would be noticeably less power-hungry. The differences are in line with what we're used to seeing from two similar cards with different amounts of RAM. In other words, it seems that the deactivated hardware blocks on the GTX 780 are still drawing power.

Also curious, the GeForce GTX 780 appears to use more power under full load than the Titan until it reaches its thermal limit. Meanwhile, the bigger card runs into its thermal limit sooner, while still offering more performance. One explanation is that the Titan operates closer to the GK110 GPU’s sweet spot, while the GTX 780 relies on higher clock speeds for its performance.

As long as the cards stay within their predefined thermal limits, they can hit power peaks beyond what their nominal TDP would allow. In practice, you will see these only rarely and very briefly at that. Still, don’t forget to take them into account and pick your power supply accordingly.

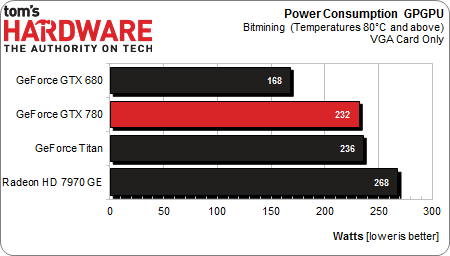

Effects of the Thermal Limit

Lastly, let’s look at what happens when both cards hit their thermal limits after being under load for a while. The GeForce GTX 780’s power consumption drops from 245 to 232 W, while the GeForce GTX Titan only dips by 2 W, from 238 to 236 W. This is another example of how much more headroom GPU Boost 2.0 provides, extracting extra performance as long as the GPU remains cool enough.
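As a quick sanity check on those numbers, the short Python sketch below works out how far each card's power draw falls once it hits its thermal limit. The four wattage figures are the ones quoted above; the names `readings_w` and `throttle_drop` are made up for illustration, and this is only arithmetic on our readings, not a measurement method.

```python
# Power draw before and after each card reaches its thermal limit, in watts.
# The four figures are the measurements quoted in the text; the variable and
# function names are illustrative assumptions.
readings_w = {
    "GeForce GTX 780": (245, 232),
    "GeForce GTX Titan": (238, 236),
}


def throttle_drop(before_w, after_w):
    """Return the drop in watts and the drop as a percentage of the starting draw."""
    drop_w = before_w - after_w
    return drop_w, 100.0 * drop_w / before_w


for card, (before_w, after_w) in readings_w.items():
    drop_w, drop_pct = throttle_drop(before_w, after_w)
    print(f"{card}: {before_w} W -> {after_w} W ({drop_w} W, {drop_pct:.1f}% lower)")
```

Run as-is, this reports a 13 W (about 5.3%) drop for the GeForce GTX 780 against 2 W (about 0.8%) for Titan.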


CrisisCauser A good alternative to the Titan. $650 was the original GTX 280 price before AMD came knocking with the Radeon 4870. I wonder if AMD has another surprise in store.

It's definitely a more reasonably priced alternative to the Titan, but it's still lacking in compute. Which might disappoint some but I don't think it'll bother most people. Definitely not bad bang for buck at that price range considering how performance scales with higher priced products, but it could've been better, $550-$600 seems like a more reasonable price for this.

hero1 This is what I have been waiting for. Nice review and I like the multi gpu tests. Thanks. Time to search the stores. Woohoo!!

natoco Too much wasted silicon (just a failed high spec chip made last year, even the titan) and rebadged with all the failed sections turned off. I wanted to upgrade my gtx480 for a 780 but for the die size, the performance is too low unfortunately. It has certainly not hit the trifecta like the 680 did. Would you buy a V8 with 2 cylinders turned off even if it were cheaper? No, because it would not be as smooth as it was engineered to be, so using that analogy, No deal. customer lost till next year when they release a chip to the public that's all switched on, will never go down the turned off parts in chip route again.

EzioAs In my opinion, this card and the Titan are actually a clever product release by Nvidia. Much like the GTX 680 and GTX 670, the Titan was released at a higher price (like the GTX 680) while the slightly slower GTX 780 (the GTX 670 for the GTX 600 series case) is at a significantly lower price but performing quite close to its higher-end brother. We all remember when the GTX 670 launched it made the GTX 680 look bad because the GTX 670 was 80% of the price while maintaining around 90-95% of the performance.

Of course, one could argue that as we get closer to higher-end products, the performance increase is always minimal and price to performance ratio starts to increase. However, for the past 3-4 years (or so I guess), never has the 2nd highest-end GPU had such a low performance difference with the highest-end GPU. It's usually significant enough that the highest end GPU (GTX x80) still has its place.

Tl;dr,

The GTX Titan was released to make the GTX 780 look incredibly good, and people (especially on the internet), will spread the news fast enough claiming the $650 release price for the GTX 780 is good and reasonable, and people who didn't even bother reading reviews and benchmarks, will take their word and pay the premium for GTX 780.

Nvidia is taking a different route to compete with AMD or one could say that they're not even trying to compete with AMD in terms of price/performance (at least for the high-end products).

mouse24 (quoting natoco) "Too much wasted silicon (just a failed high spec chip made last year, even the titan) and rebadged with all the failed sections turned off. I wanted to upgrade my gtx480 for a 780 but for the die size, the performance is too low unfortunately. It has certainly not hit the trifecta like the 680 did. Would you buy a V8 with 2 cylinders turned off even if it were cheaper? No, because it would not be as smooth as it was engineered to be, so using that analogy, No deal. customer lost till next year when they release a chip to the public that's all switched on, will never go down the turned off parts in chip route again."

That's a pretty bad analogy. A gpu is still smooth even with some of the cores/vram/etc turned off, it doesn't increase latency/frametimes/etc.

godfather666 "But, I’m going to wait a week before deciding what I’d spend my money on in the high-end graphics market."

I must've missed something. Why wait a week?

JamesSneed Natoco, your comment was so clueless. It is likely every single CPU or GPU you have ever purchased has fused off parts. Even the $1000 extreme Intel cpu has a little bit fused off since it's a 6 core CPU but using an 8 core Xeon as its starting point. Your comparison to a car is idiotic.

016ive You will have to be an idiot to buy a Titan now that the 780 is here... Me, I could afford neither :)