How Much Power Does Your Graphics Card Need?

Power Supply Efficiency

Now that we're clear on the power cost of a graphics card, we can use it as the basis for a simplified approach to choosing a power supply class. Two values must be kept apart: the power measured at the outlet, which determines the overall electricity cost, and the actual load the components place on the power supply.

At this point we must generalize somewhat, since specifications alone can't guarantee the product quality of every power supply manufacturer. The following statements apply to brand name power supplies, but variations in quality are always possible. As always, you're better off avoiding cheap power supplies with a poor performance track record.

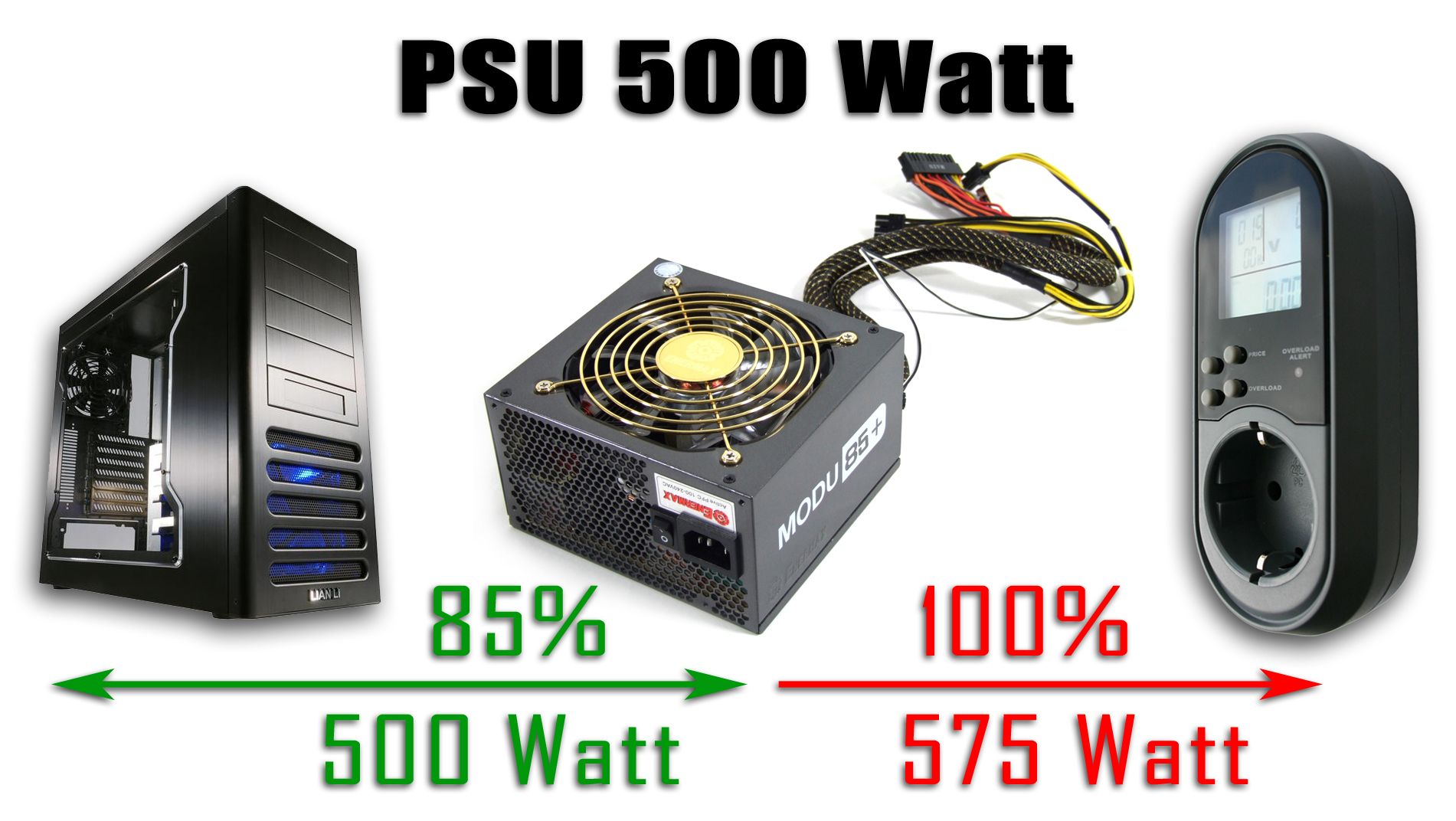

As an example, take a 500-watt power supply and connect components drawing 500 watts; in theory, the power supply is then at 100 percent load. The efficiency factor now determines how much power the power supply draws from the outlet and how much of it is dissipated as heat. If the power supply in this example is 85% efficient, 15% of the power taken from the outlet is lost as heat. So while the components use 500 watts, the power meter shows 588 watts (500 / 0.85 ≈ 588, or equivalently 588 × 85% ≈ 500). These additional 88 watts must not be counted when choosing the power supply; they matter only for the electricity cost.
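The arithmetic in this example boils down to outlet power = component load / efficiency. Here is a minimal Python sketch, assuming a single fixed efficiency value (real units vary with load); the function names are hypothetical:

```python
def outlet_watts(component_load_w: float, efficiency: float) -> float:
    """Power drawn from the outlet for a given component load (hypothetical helper)."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return component_load_w / efficiency


def component_load_watts(outlet_w: float, efficiency: float) -> float:
    """Estimate the component load from an outlet meter reading (hypothetical helper)."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return outlet_w * efficiency


# Assumes a constant 85% efficiency, as in the example above.
wall = outlet_watts(500, 0.85)
print(f"Meter at the outlet: {wall:.0f} W")          # ~588 W
print(f"Lost as heat: {wall - 500:.0f} W")           # ~88 W
print(f"Load behind a 588 W reading: {component_load_watts(588, 0.85):.0f} W")
```

Only the first figure belongs in the electricity bill; the component load (500 watts) is what the power supply rating has to cover.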

A few notes:

- For brand name units, you can take the manufacturer's wattage specification at face value: a 500-watt power supply can be loaded with 500 watts.

- The value measured at the outlet is higher than the actual load of the components and should not be mistaken for it.

- Test values are almost always measurements at the outlet.

- Current brand name power supplies reach more than 80% efficiency at higher loads; lower efficiencies occur mainly at loads below 100 watts.

- The higher the efficiency, the less energy is converted into heat, and the lower the electricity cost.

- The lower the efficiency, the more energy is converted into heat, and the higher the cost, since more energy must be drawn from the outlet.
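To make the last two points concrete, here is a rough Python sketch comparing heat loss and yearly cost at a few efficiencies. The 300-watt load, 4 hours per day and price of 0.20 per kWh (in whatever currency you pay) are purely hypothetical inputs, and efficiency is again treated as constant:

```python
def annual_cost(component_load_w: float, efficiency: float,
                hours_per_day: float, price_per_kwh: float) -> float:
    """Yearly electricity cost for a constant load (hypothetical usage figures)."""
    outlet_w = component_load_w / efficiency          # what the meter at the outlet sees
    kwh_per_year = outlet_w / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


# Hypothetical scenario: 300 W component load, 4 h/day, 0.20 per kWh.
LOAD_W = 300
for eff in (0.75, 0.80, 0.85):
    heat_w = LOAD_W / eff - LOAD_W                    # power turned into heat inside the PSU
    cost = annual_cost(LOAD_W, eff, hours_per_day=4, price_per_kwh=0.20)
    print(f"{eff:.0%} efficient: {heat_w:.0f} W of heat, {cost:.2f} per year")
```

The component load stays at 300 watts in every case, so only the outlet draw, the heat and the cost change with efficiency; the power supply class you need does not.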

The table below again contrasts the power supply load (what the components draw) with the outlet measurement (what determines the electricity cost). The left column is the power supply's rated wattage; the right column is the theoretical reading a meter at the outlet would show under full load, assuming 85 percent efficiency.

| Power Supply Rating (W) | Measured at Outlet, Full Load (W) |
|---|---|
| 300 | 353 |
| 350 | 412 |
| 400 | 471 |
| 450 | 529 |
| 500 | 588 |
| 550 | 647 |
| 600 | 706 |
| 650 | 765 |
| 700 | 824 |
| 750 | 882 |
| 850 | 1000 |
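The outlet figures in this table follow from dividing each rating by 0.85 and rounding to the nearest watt. The sketch below regenerates them; the list of classes is copied from the table, and `smallest_class_for` is a hypothetical helper that sizes a power supply from the component load with no safety margin, purely to illustrate which number goes into the choice:

```python
EFFICIENCY = 0.85  # efficiency assumed in the table

# Power supply classes listed in the table, in watts.
PSU_CLASSES_W = [300, 350, 400, 450, 500, 550, 600, 650, 700, 750, 850]


def outlet_at_full_load(rated_w: int, efficiency: float = EFFICIENCY) -> int:
    """Theoretical outlet reading when the PSU is loaded to its rating."""
    return round(rated_w / efficiency)


def smallest_class_for(component_load_w: float) -> int:
    """Hypothetical helper: smallest listed class covering the *component* load (no margin)."""
    for rated in PSU_CLASSES_W:
        if rated >= component_load_w:
            return rated
    raise ValueError("load exceeds the largest listed class")


for rated in PSU_CLASSES_W:
    print(f"| {rated} | {outlet_at_full_load(rated)} |")
```

For example, `smallest_class_for(500)` returns 500, whereas feeding it the outlet reading of 588 watts would wrongly point to a 600-watt unit.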

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.


nukemaster This article was due. No more "you need an 800 watt PSU for the 4870, Core 2 Quad and 1 hard drive" :p

Guest11 "since Core i7 920 has TDP = 130W, how can it consume 85W only?"

The TDP is more of a design thing. Almost all of Intel's initial Core 2 line had a TDP of 65 watts, yet many took much less power. Intel gives a worst-case figure of that type and does not measure every CPU.

AMD does the same thing. They listed almost all the initial Athlon 64s at 89 watts, yet many did not draw or give off that amount.

zxv951 "1.21 Jigawatts!!!"

You act like you would need a small fusion reactor or maybe a bolt of lightning to get that?

neiroatopelcc So my system actually has too big a power supply to be efficient?

I'm running a 3.4 GHz C2D with 5x 500 GB SATA drives, a DVD-RW and a 4870 on a P35 board.

According to the article, that's not going to draw the ~400 W needed to get within the efficient range of my Corsair 620...

cynewulf There's a mistake in the load power figure for the 3870X2: it shows the same value as the idle consumption. If only that were true! :D

Inneandar The TDP (thermal design power) is meant as a guideline for the cooling solution, not for power consumption. To qualify for a CPU with a TDP of 120W, a cooler must be able to dissipate 120W. In practice, of course, this means it is an upper bound on (sensible) power consumption.

Also, a small note: is it just me, or is it strange to see the 260 SLI consume more than the 280 SLI? Maybe it needs a beefier test scene...

zodiacfml Nice collection of data. I hope many learn from this and stop recommending overly powerful supplies.

roofus Better off with too much power supply than not enough. At least if you over-spec the power supply, you leave some breathing room for any additional components.