Nvidia’s GF100: Graphics Architecture Previewed, Still No Benchmarks

Why Re-Organize GF100? One Word: Geometry

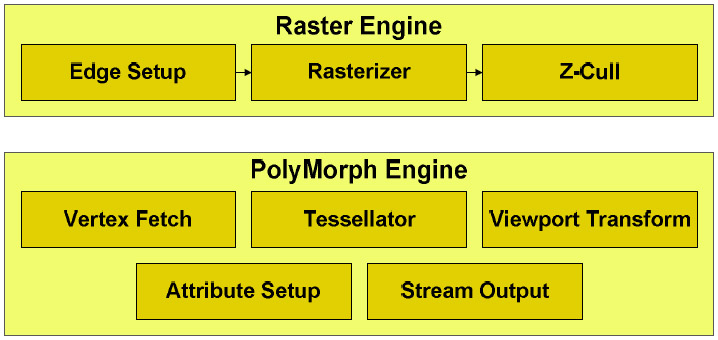

Successful architectures don’t get re-worked just to impress the ladies. There’s a rhyme and reason behind Nvidia’s decision to arm each GPC with its own raster engine and each SM with what it calls a PolyMorph engine (no, WoW players, we’re not talking about sheeping the stream processors here…).

First things first: the PolyMorph engine is a five-stage piece of fixed-function logic that works in conjunction with the rest of the SM to fetch vertices, tessellate, perform viewport transformation, set up attributes, and output to memory. Between stages, the SM handles vertex/hull shading and domain/geometry shading. From each PolyMorph engine, primitives are sent to a raster engine. Each raster engine is capable of eight pixels per clock (totaling 32 pixels per clock across the chip).
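To make that hand-off between fixed-function logic and the SM a little more concrete, here is a rough sketch of the flow as a simple ordered list. The stage names follow the description above, but the exact points at which work bounces to the SM are an assumption based on that description, and `POLYMORPH_FLOW` and `stages_for` are hypothetical names used only for illustration.

```python
# Ordered (unit, stage) pairs. "PolyMorph" marks fixed-function stages,
# "SM" marks programmable shading done by the streaming multiprocessor.
# Assumption: SM work is placed around the tessellator, per the text above.
POLYMORPH_FLOW = [
    ("PolyMorph", "vertex fetch"),
    ("SM", "vertex shader"),
    ("SM", "hull shader"),
    ("PolyMorph", "tessellator"),
    ("SM", "domain shader"),
    ("SM", "geometry shader"),
    ("PolyMorph", "viewport transform"),
    ("PolyMorph", "attribute setup"),
    ("PolyMorph", "stream output"),
]


def stages_for(unit):
    """Return the stage names handled by the given unit, in pipeline order."""
    return [name for owner, name in POLYMORPH_FLOW if owner == unit]


if __name__ == "__main__":
    for owner, name in POLYMORPH_FLOW:
        print(f"{owner:>9}: {name}")
```

Filtering on `"PolyMorph"` gives back the five fixed-function stages, while filtering on `"SM"` gives the four programmable shader stages that run in between.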

Now, why was it necessary to get more granular about the way geometry was being handled when a monolithic fixed-function front-end has worked so well in the past? After all, hasn’t ATI enabled tessellation units in something like six generations of its GPUs (as far back as TruForm in 2001)? Ah, yes. But how many games actually took advantage of tessellation between then and now? That’s the point.

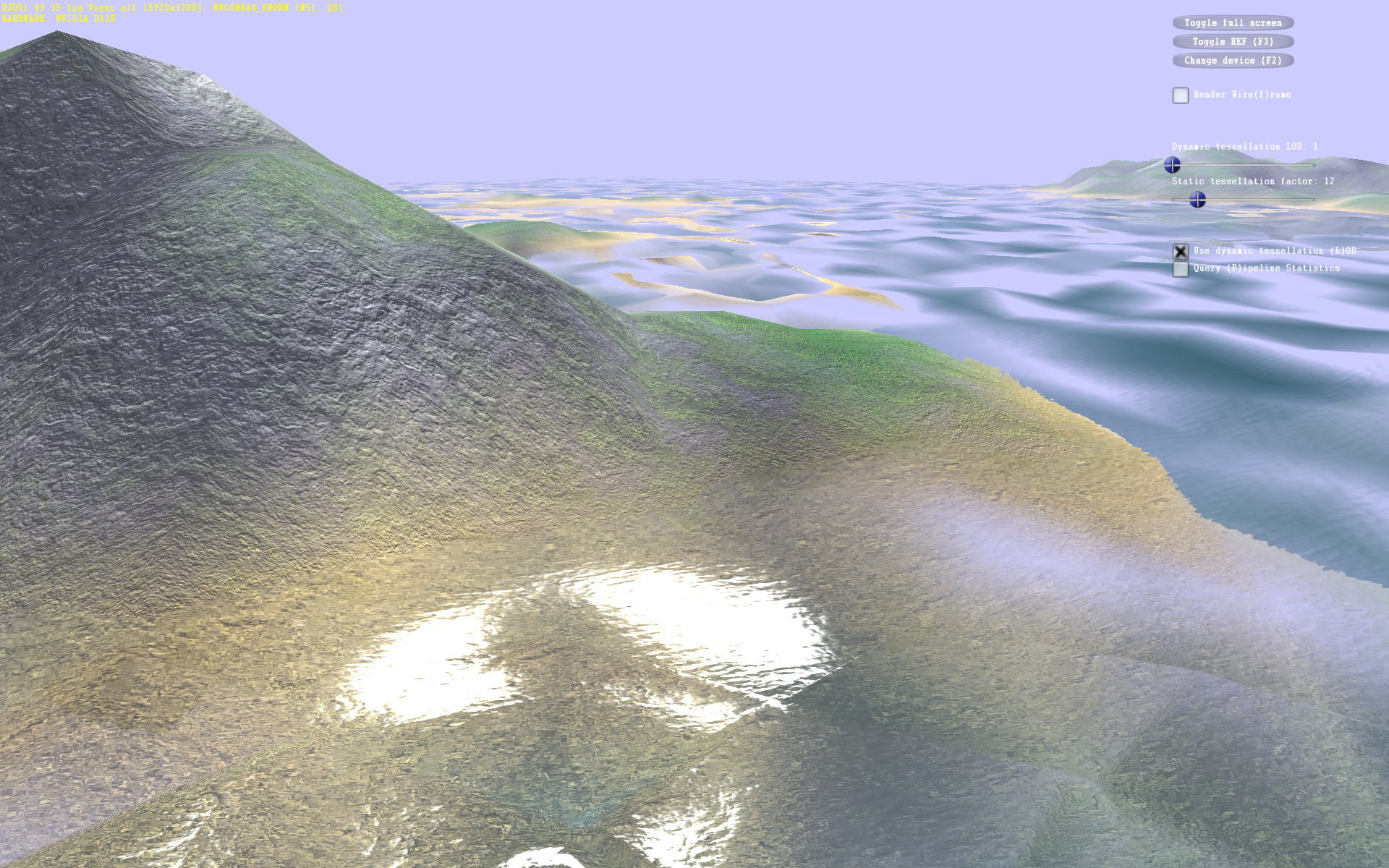

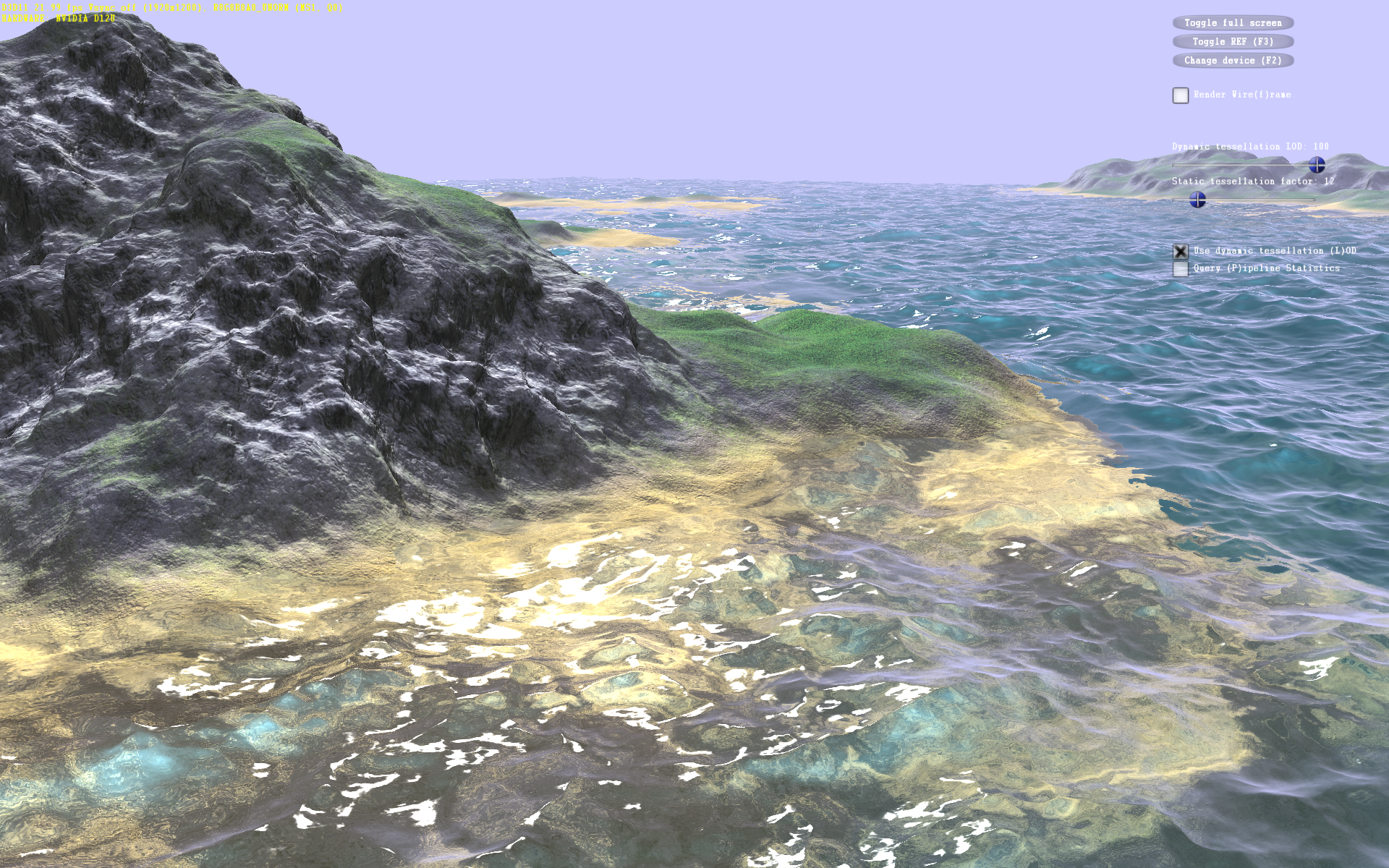

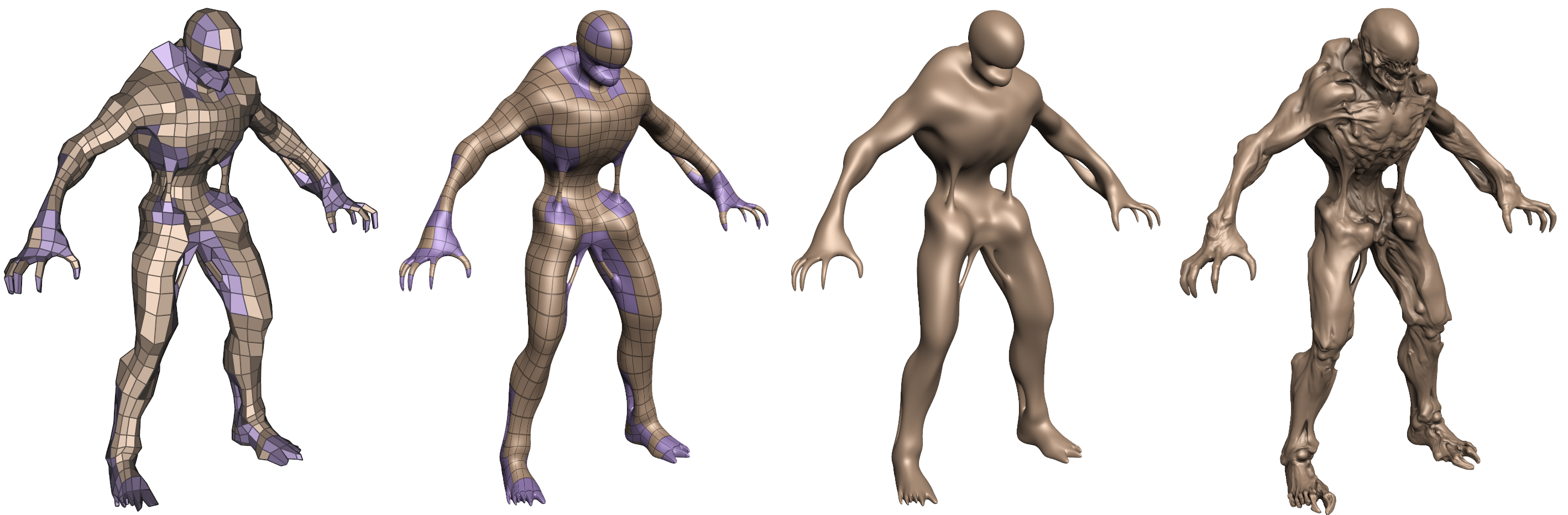

Ever since the days of Nvidia’s GeForce 2 architecture, we’ve been hearing about programmable pixel and then vertex shading. Now we’re getting some very impressive shaders able to add tremendous detail to the latest DirectX 9 and 10 games (Nvidia claims a 150x increase in shading performance from the GeForce FX 5800-series to GT200). But I know we’ve all seen some of the terri-bad geometry that totally ruins the guise of realism in our favorite games. Purportedly, the next frontier in augmenting graphics realism involves cranking the dial on geometry.

DirectX 11 aims to fix this via three new stages in the rendering pipeline: the hull shader, which computes control point transforms; the tessellator, which takes in the tessellation factors from the hull shader and outputs domain points; and the domain shader, which operates on each of those points.
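For a feel of how those three stages fit together, here is a toy CPU-side sketch in Python. It is not how any GPU or the Direct3D runtime implements tessellation. It assumes a flat quad patch with four corner control points, a single uniform tessellation factor (real hardware uses separate edge and inside factors), and a domain shader that does nothing fancier than bilinear interpolation. All of the names (`Patch`, `hull_shader`, `tessellator`, `domain_shader`, `tessellate`) are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class Patch:
    control_points: List[Point]  # quad corners in the order p00, p10, p01, p11
    tess_factor: int             # simplified: one uniform factor per patch


def hull_shader(corners: List[Point], tess_factor: int) -> Patch:
    """Per-patch stage: pass control points through and pick a tessellation factor.
    A real hull shader could also transform the control points."""
    return Patch(list(corners), max(1, tess_factor))


def tessellator(patch: Patch) -> List[Tuple[float, float]]:
    """Fixed-function stage: turn the factor into (u, v) domain points on a uniform grid."""
    n = patch.tess_factor
    return [(i / n, j / n) for j in range(n + 1) for i in range(n + 1)]


def domain_shader(patch: Patch, uv: Tuple[float, float]) -> Point:
    """Per-point stage: evaluate a position for one domain point (bilinear here)."""
    u, v = uv
    p00, p10, p01, p11 = patch.control_points
    return tuple(
        (1 - u) * (1 - v) * a + u * (1 - v) * b + (1 - u) * v * c + u * v * d
        for a, b, c, d in zip(p00, p10, p01, p11)
    )


def tessellate(corners: List[Point], tess_factor: int) -> List[Point]:
    """Run the three stages back to back for a single patch."""
    patch = hull_shader(corners, tess_factor)
    return [domain_shader(patch, uv) for uv in tessellator(patch)]


if __name__ == "__main__":
    quad = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0)]
    print(len(tessellate(quad, 4)), "vertices generated from 4 control points")
```

The point of the sketch is the amplification: a handful of control points goes in, and the vertex count coming out grows roughly with the square of the tessellation factor, which is why the geometry front-end becomes the thing to worry about.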

But in order to deliver the performance needed to make tessellation feasible, Nvidia had to shift away from that monolithic front-end and toward a more parallel design. Hence, the four raster and 16 PolyMorph engines. The company naturally has its own demos that show how much more efficient GF100 is than the Cypress architecture, which employs the “bottlenecked” monolithic design. However, we’ll want to test a more balanced app, such as Aliens Vs. Predator from Rebellion Developments, with tessellation on and off. Up front, though, Nvidia claims that GF100 enables up to 8x better performance in geometry-bound environments than GT200.
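The unit counts quoted here follow from simple multiplication, sketched below. The four-SMs-per-GPC figure is not stated outright on this page. It is inferred from 16 PolyMorph engines (one per SM) spread across four GPCs (one raster engine each). The function name `gf100_front_end` is hypothetical.

```python
GPCS = 4                        # one raster engine per GPC
SMS_PER_GPC = 4                 # assumption: 16 PolyMorph engines / 4 GPCs
PIXELS_PER_CLOCK_PER_RASTER = 8


def gf100_front_end(gpcs=GPCS, sms_per_gpc=SMS_PER_GPC,
                    px_per_raster=PIXELS_PER_CLOCK_PER_RASTER):
    """Return the parallel front-end unit counts and peak raster rate."""
    return {
        "raster_engines": gpcs,                    # one per GPC
        "polymorph_engines": gpcs * sms_per_gpc,   # one per SM
        "pixels_per_clock": gpcs * px_per_raster,  # chip-wide raster throughput
    }


if __name__ == "__main__":
    print(gf100_front_end())
```

Changing `gpcs` or `sms_per_gpc` shows how a cut-down part with fewer units would scale its geometry and raster throughput, though any specific derivative configuration would be speculation at this point.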


randomizer: GF100 is entering the ranks of Duke Nukem Forever. We keep seeing little glimpses but the real thing might as well not exist.

duckmanx88, quoting dingumf ("Oh look, no benchmarks."):

wth is he supposed to benchmark? nothing has been released it's just an article giving us details on what we can expect within the next two months.

decembermouse: I feel like you left some info out, whether you just never read it or didn't mention it for fear of casting doubts on GF100... I've heard (and this isn't proven) that they had to remove some shaders and weren't able to reach their target clocks even with this revision (heard the last one didn't cut the mustard which is why they're hurrying the new one along and why we have to wait till March). Also, be careful about sounding too partisan with Nvidia before we have more concrete info on this.

And yes, it does matter that AMD got DX11 hardware out the gate first. Somehow, when Nvidia wins at something, whether that's being first with a technology, having the fastest card on the market, or a neato feature like Physx, it's a huge deal, but when AMD has a win, it's 'calm down people, let's not get excited, it's no big deal.' The market and public opinion, and I believe even worth of the company have all been significantly boosted by their DX11 hardware. It is a big deal. And it'll be a big deal when GF100 is faster than the 5970 too, but they are late. I believe it'll be April before we'll realistically be able to buy these without having to F5 Newegg every 10 seconds for a week, and in these months that AMD has been the only DX11 player, well, a lot of people don't want to wait that long for what might be the next best thing... all I'm trying to say is let's try not to spin things so one company sounds better. It makes me sad when I see fanboyism, whether for AMD, Intel, Nvidia, whoever, on such a high-profile review site.

megamanx00: Well, not much new here. I wouldn't really be surprised if the 2x performance increase over the GTX285 was a reality. Still, the question is if this new card will be able to maintain as sizable a performance lead in DX11 games when Developers have been working with ATI hardware. If this GPU is as expensive to produce as rumored will nVidia be able to cope with an AMD price drop to counter them?

I hope that 5850s on shorter PCBs come out around the time of the GF100 so they can drop to a price where I can afford to buy one ^_^

cangelini, quoting dingumf ("Oh look, no benchmarks."):

*Specifically* mentioned in the title of the story, just to avoid that comment =)

randomizer, quoting cangelini ("*Specifically* mentioned in the title of the story, just to avoid that comment =)"): You just can't win :lol:

WINTERLORD: great article. im wondering though just for clarifacation, nvidia is going to look better then ati?

tacoslave: Even though im a RED fan im excited because its a win win for me either way. If amd wins than im proud of them but if nvidia wins than that means price drops!!! And since they usually charge more than ati for a little performance increase than ill probably get a 5970 for 500 or less (hopefully). Anyone remember the gtx280 launch?