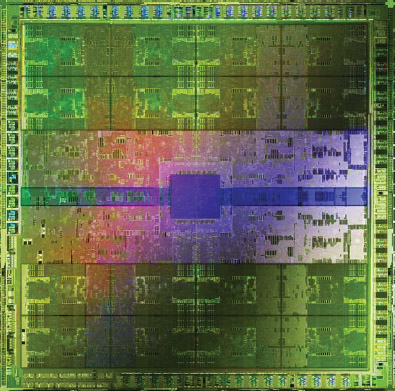

Nvidia’s GF100: Graphics Architecture Previewed, Still No Benchmarks

Not Just A Compute Architecture

Leading up to the GF100 launch, we’ve heard a lot of buzz about Nvidia taking this opportunity to deemphasize graphics in favor of GPU computing. Although it was Nvidia’s own representatives who first raised concerns about this “myth,” the company itself is really to blame for its spread.

The Fermi architecture was first introduced at Nvidia’s own GPU Technology Conference in late September of last year and, at the time, only the design’s compute capabilities were being discussed. Although the whitepaper was leaked to us before the embargo lifted, such a disclosure literally one week after ATI’s Radeon HD 5870 launch seemed like a bit much, since retail product was rumored to be more than a quarter away and AMD was already shipping the world’s first DirectX 11 hardware. Nevertheless, we read with great interest what were some of the most detailed overviews of the Fermi architecture’s capabilities.

Of course, now Nvidia wants everyone to know that it hasn’t backed away from its dedication to graphics performance, either. The GPC architecture, the emphasis on geometry, and full DirectX 11 compliance all support that assertion. However, it’s still easy to look at GF100 and see where the company sought a balance between compute and graphics, from clear nods to double-precision floating-point math to the chip’s cache architecture.

Each of the 16 SMs sports its own 64KB shared memory/L1 cache pool, which can be configured either as 16KB shared memory/48KB L1 or vice versa. GT200 included 16KB of shared memory per SM to keep data as local as possible, minimizing the need to go out to the frame buffer for information. By expanding this memory pool and making it configurable (if not truly dynamic), Nvidia addresses graphics and compute problems at the same time. In a physics- or ray-tracing-based compute scenario, for example, memory accesses don’t follow a predictable addressing pattern, so the small shared memory/large L1 configuration helps improve memory access. This will become particularly notable once game developers start taking better advantage of DirectCompute within their titles.
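To make the split concrete, here is a minimal Python sketch of the arithmetic, assuming only the figures given above: 16 SMs, each with a 64KB pool divided 16KB/48KB in either direction. The names `SMConfig`, `configure`, and `chip_totals` are hypothetical and model nothing beyond that arithmetic. In CUDA, this per-kernel preference is exposed through the runtime call `cudaFuncSetCacheConfig` with `cudaFuncCachePreferL1` or `cudaFuncCachePreferShared`; the sketch does not call CUDA.

```python
from dataclasses import dataclass

# Figures from the text: 16 SMs, each with a 64KB on-chip pool that is
# split 16KB/48KB between shared memory and L1, in either direction.
SM_COUNT = 16
POOL_KB = 64
SMALL_KB, LARGE_KB = 16, 48


@dataclass(frozen=True)
class SMConfig:
    shared_kb: int
    l1_kb: int


def configure(prefer_l1: bool) -> SMConfig:
    """Return one SM's split for a hypothetical per-workload preference.

    prefer_l1=True models irregular access (physics, ray tracing) that
    benefits from a larger hardware-managed L1; False models predictable
    tiles that the programmer stages explicitly in shared memory.
    """
    if prefer_l1:
        cfg = SMConfig(shared_kb=SMALL_KB, l1_kb=LARGE_KB)
    else:
        cfg = SMConfig(shared_kb=LARGE_KB, l1_kb=SMALL_KB)
    assert cfg.shared_kb + cfg.l1_kb == POOL_KB  # the pool size never changes
    return cfg


def chip_totals(cfg: SMConfig) -> tuple:
    """Aggregate (shared memory, L1) across all SMs, in KB."""
    return cfg.shared_kb * SM_COUNT, cfg.l1_kb * SM_COUNT


if __name__ == "__main__":
    for prefer_l1 in (False, True):
        cfg = configure(prefer_l1)
        shared, l1 = chip_totals(cfg)
        print(f"prefer_l1={prefer_l1}: per SM {cfg}, chip-wide shared={shared}KB, L1={l1}KB")
```

Either way, the chip carries 1MB (16 × 64KB) of combined shared memory and L1; only the balance between the two changes.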

| Cache/Memory | GT200 | GF100 | Benefits |
|---|---|---|---|
| L1 Texture Cache (Per Quad) | 12KB | 12KB | Texture filtering |
| Dedicated L1 Load/Store Cache (Per SM) | None | 16/48KB | Useful in physics/ray-tracing |
| Shared Memory (Per SM) | 16KB | 16/48KB | More data reuse among threads |
| L2 Cache | 256KB (texture read only) | 768KB (all clients read/write) | Compute performance, texture coverage |

From there you have a 768KB L2, which is significantly larger and more functional than GT200’s 256KB read-only texture cache. Because it’s unified and any client can read from it or write to it, GF100’s L2 replaces the L2 texture cache, ROP cache, and on-chip FIFOs. This is another area where compute performance is clearly in Nvidia’s crosshairs. However, gaming performance should benefit as well, since SMs working on the same data will make fewer trips to memory.
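Why a shared L2 saves memory trips when SMs touch the same data can be illustrated with a toy model. This Python sketch is not a model of GF100’s actual L2: it assumes fully associative LRU caches, measures capacity in abstract cache lines rather than bytes, and counts every miss as one trip to memory. `LRUCache` and `dram_trips` are hypothetical names.

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative LRU cache; each miss counts as one DRAM trip."""

    def __init__(self, capacity_lines: int) -> None:
        self.capacity = capacity_lines
        self.lines: OrderedDict[int, None] = OrderedDict()
        self.misses = 0

    def access(self, line: int) -> None:
        if line in self.lines:
            self.lines.move_to_end(line)  # hit: mark as most recently used
            return
        self.misses += 1  # miss: fetch the line from memory
        self.lines[line] = None
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used line


def dram_trips(num_sms: int, working_set: list, shared: bool, capacity_lines: int) -> int:
    """Count memory trips when every SM reads the same working set in order.

    shared=True: all SMs go through one unified cache.
    shared=False: each SM gets its own private cache of the same capacity.
    """
    if shared:
        cache = LRUCache(capacity_lines)
        for _ in range(num_sms):
            for line in working_set:
                cache.access(line)
        return cache.misses
    total = 0
    for _ in range(num_sms):
        private = LRUCache(capacity_lines)
        for line in working_set:
            private.access(line)
        total += private.misses
    return total


if __name__ == "__main__":
    working_set = list(range(100))  # 100 distinct lines read by every SM
    print("shared, 128 lines:", dram_trips(16, working_set, True, 128))
    print("private, 128 lines each:", dram_trips(16, working_set, False, 128))
    print("shared, 50 lines:", dram_trips(16, working_set, True, 50))
```

With 16 SMs reading the same 100-line working set and 128-line caches, the shared cache fetches each line from memory once (100 trips), while 16 private caches fetch it 16 times (1,600 trips). Shrinking the shared cache below the working set (50 lines) makes the sequential scan thrash and brings the count back to 1,600, a reminder that capacity matters as much as sharing.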

It’s entirely true that GF100 represents a massive step forward in what third-party developers can do on the compute side. But that focus is bolstered by the facts that DirectCompute and OpenCL are here, that AMD supports both APIs as well, and that the way we’re going to get more realistic games is through developers enabling GPU computing within their titles. Ray tracing (either alone or combined with rasterization), voxel rendering, custom depth of field kernels, particle hydrodynamics, and AI path-finding are all potential applications we might see by virtue of compute-enabled hardware.

randomizer: GF100 is entering the ranks of Duke Nukem Forever. We keep seeing little glimpses, but the real thing might as well not exist.

duckmanx88, quoting dingumf: "Oh look, no benchmarks."

WTH is he supposed to benchmark? Nothing has been released; it's just an article giving us details on what we can expect within the next two months.

decembermouse: I feel like you left some info out, whether you just never read it or didn't mention it for fear of casting doubt on GF100. I've heard (and this isn't proven) that they had to remove some shaders and weren't able to reach their target clocks even with this revision (I heard the last one didn't cut the mustard, which is why they're hurrying the new one along and why we have to wait till March). Also, be careful about sounding too partisan toward Nvidia before we have more concrete info on this.

And yes, it does matter that AMD got DX11 hardware out of the gate first. Somehow, when Nvidia wins at something, whether that's being first with a technology, having the fastest card on the market, or a neato feature like PhysX, it's a huge deal, but when AMD has a win, it's 'calm down, people, let's not get excited, it's no big deal.' The market, public opinion, and I believe even the company's worth have all been significantly boosted by its DX11 hardware. It is a big deal. And it'll be a big deal when GF100 is faster than the 5970, too, but they are late. I believe it'll be April before we can realistically buy these without having to F5 Newegg every 10 seconds for a week, and in the months that AMD has been the only DX11 player, well, a lot of people don't want to wait that long for what might be the next best thing. All I'm trying to say is let's try not to spin things so one company sounds better. It makes me sad when I see fanboyism, whether for AMD, Intel, Nvidia, or whoever, on such a high-profile review site.

megamanx00: Well, not much new here. I wouldn't really be surprised if the 2x performance increase over the GTX 285 was a reality. Still, the question is whether this new card will be able to maintain as sizable a performance lead in DX11 games when developers have been working with ATI hardware. If this GPU is as expensive to produce as rumored, will Nvidia be able to cope with an AMD price drop to counter it?

I hope that 5850s on shorter PCBs come out around the time of the GF100 so they can drop to a price where I can afford to buy one ^_^

cangelini, quoting dingumf: "Oh look, no benchmarks."

*Specifically* mentioned in the title of the story, just to avoid that comment =)

randomizer, quoting cangelini: "*Specifically* mentioned in the title of the story, just to avoid that comment =)"

You just can't win :lol:

WINTERLORD: Great article. I'm wondering, though, just for clarification: is Nvidia going to look better than ATI?

tacoslave: Even though I'm a RED fan, I'm excited because it's a win-win for me either way. If AMD wins, then I'm proud of them, but if Nvidia wins, that means price drops!!! And since they usually charge more than ATI for a little performance increase, I'll probably get a 5970 for 500 or less (hopefully). Anyone remember the GTX 280 launch?