Gigabyte X170-Extreme ECC, Intel C236 Motherboard Review

Accustomed to customary configurations, our AMD editor gets sideways with a prosumer Xeon platform. Will Gigabyte's X170-Extreme ECC bridge the gap between his work and hobbies?

Test Results And Conclusion

To set the stage for the following data, there are a few caveats to keep in mind. First, I am using the new Tom's Hardware Windows 10 test image, and there are several changes. For example, 7-Zip now runs over a larger file, and HandBrake renders a larger movie. These differences forced me to go back to my previous Gigabyte AMD 970 article and retest that platform with the latest test image. Although I trust the output of the synthetic tests regardless of the OS, the other applications can do funny things when changing the OS <coughLINUXcough>. Together with the new workloads and standardized gaming benchmarks, this extra data will help me put in perspective what a powerhouse this C236 system is compared to lower-end systems.

Synthetic Benchmarks

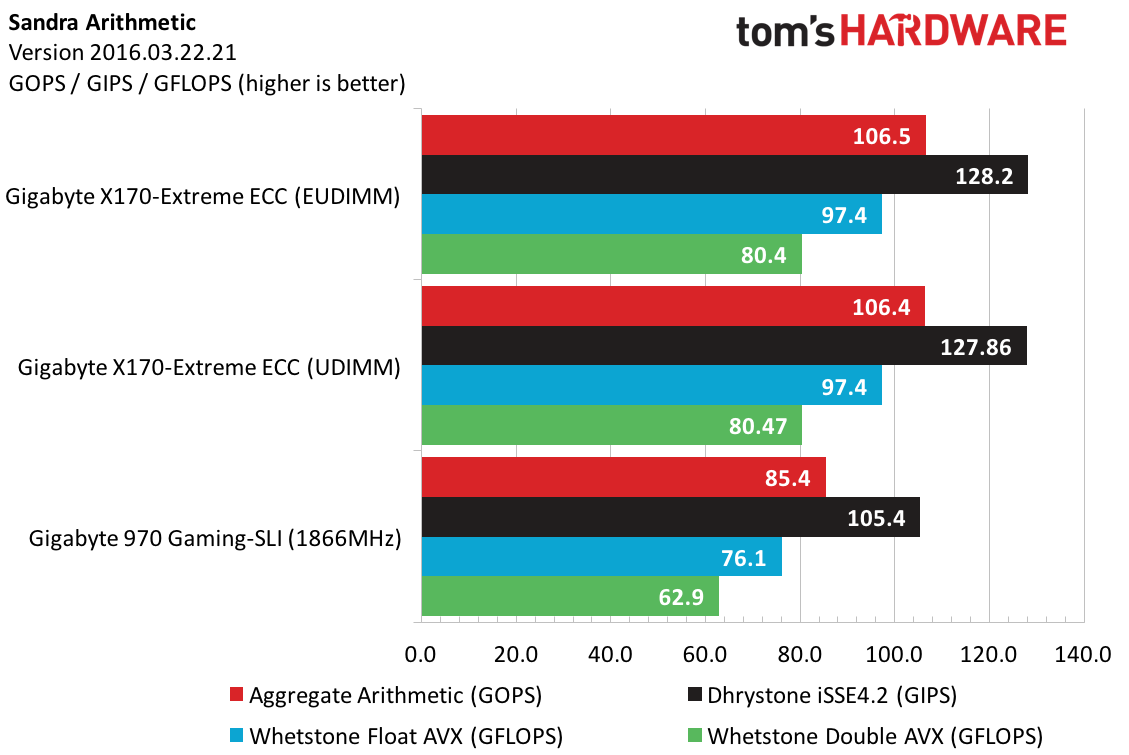

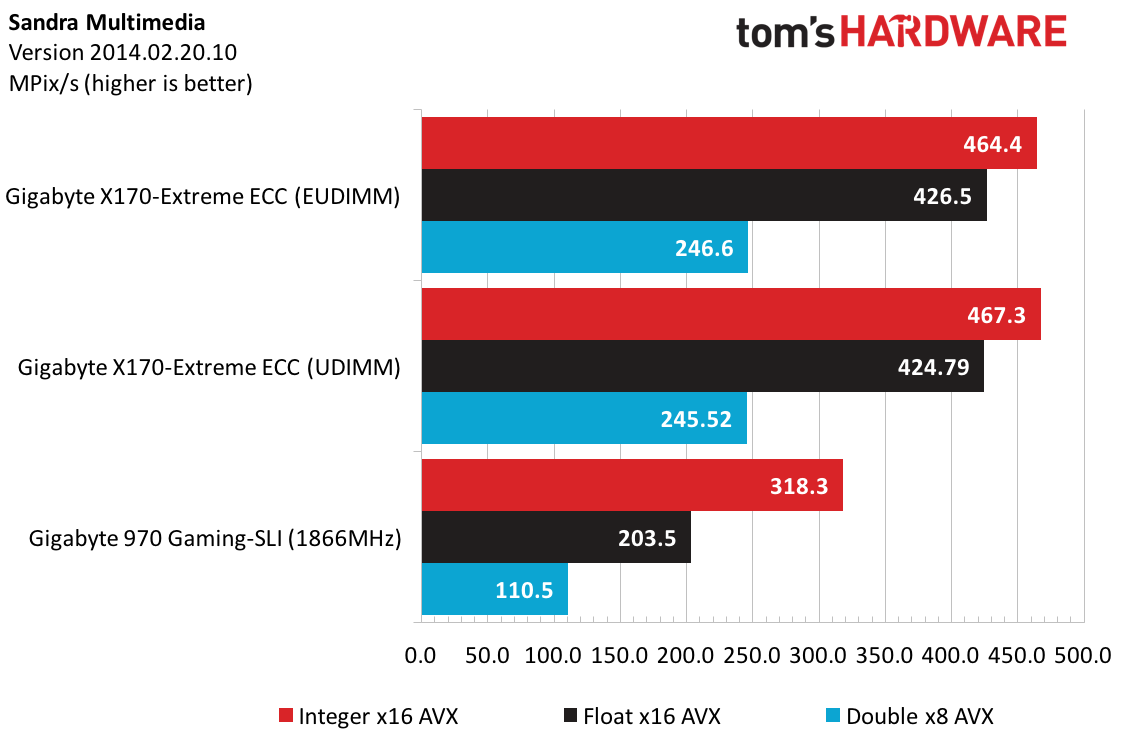

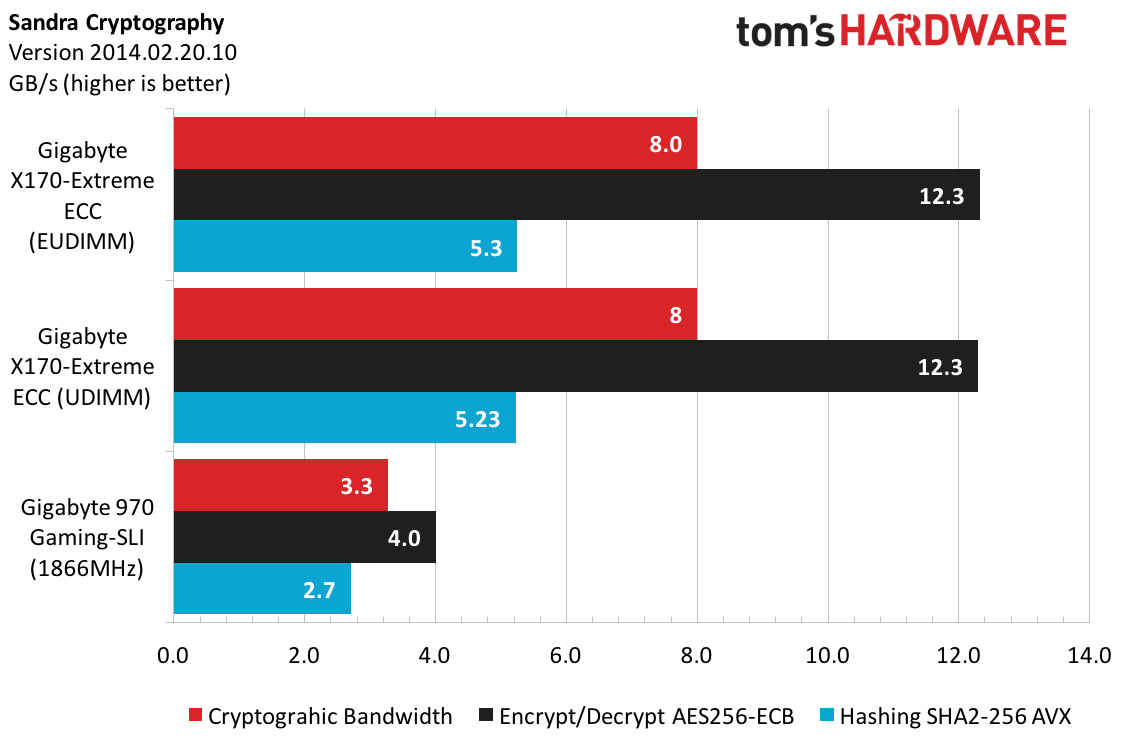

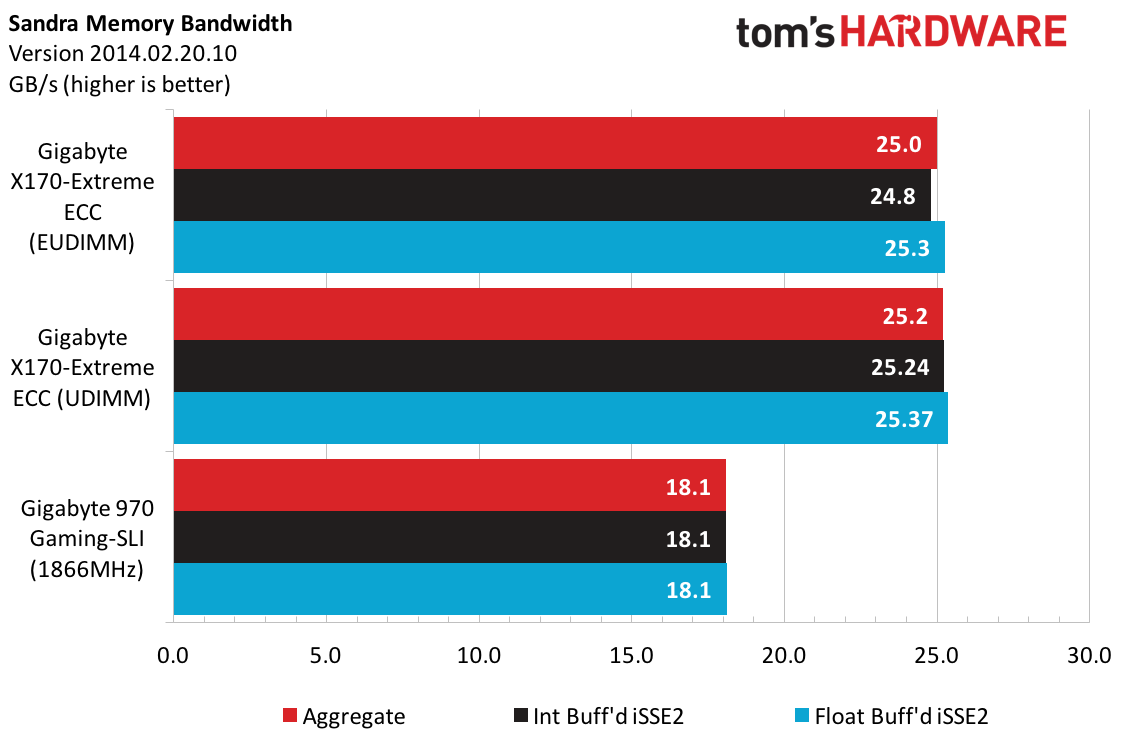

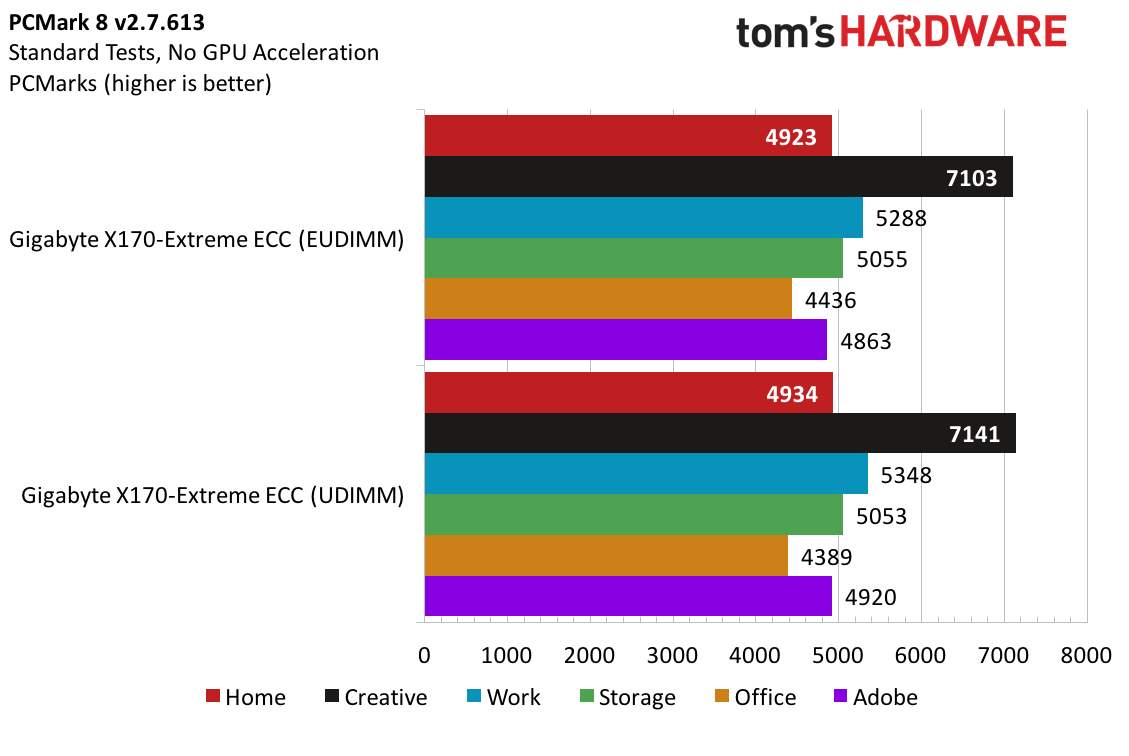

With this new test image, drawing a one-to-one comparison between these two boards is very difficult, and limited test consistency prevents me from displaying a lot of the older data. To begin, Sandra gives me a good indicator of theoretical performance. As I expect, the Xeon runs circles around the AMD FX-8350 in every test, and in many instances it doubles the performance metrics. The AMD 970 data presents similar trends in the PCMark Home, Creative, and Work workloads, though the deltas are not as dramatic. The move to DDR4 and this Gigabyte X170-Extreme ECC shows a whopping 38% increase in memory bandwidth compared to the DDR3 implemented in the Gigabyte 970 Gaming-SLI sample running at similar frequencies.

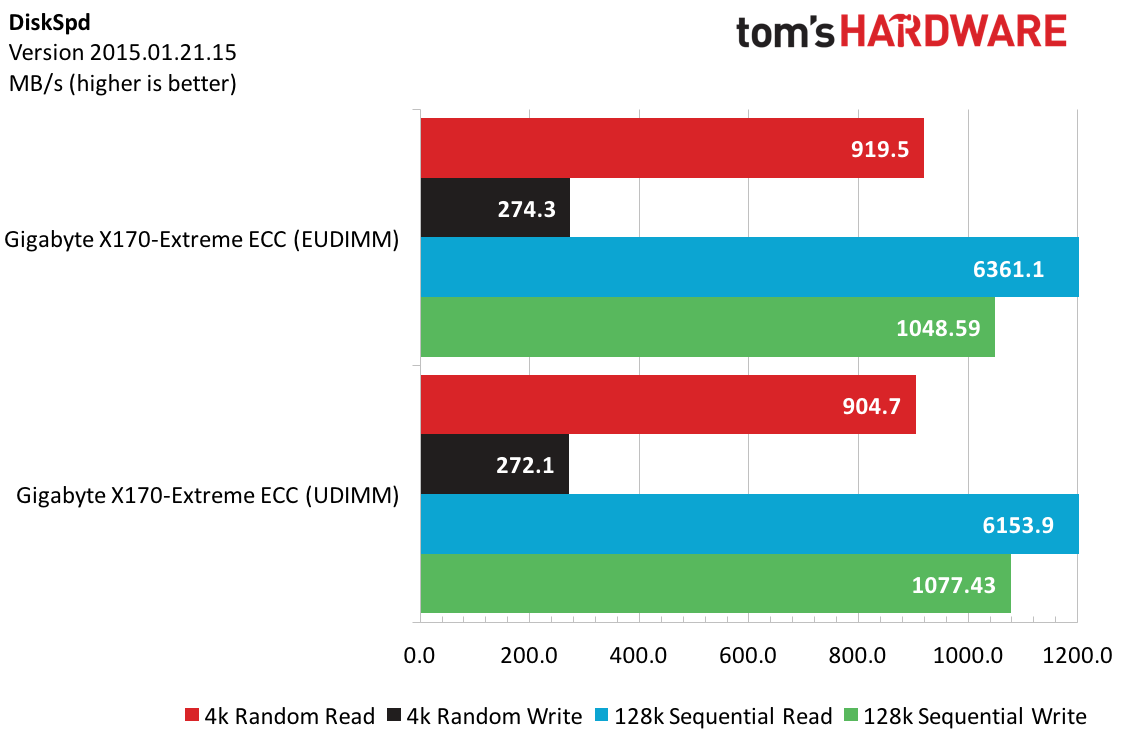

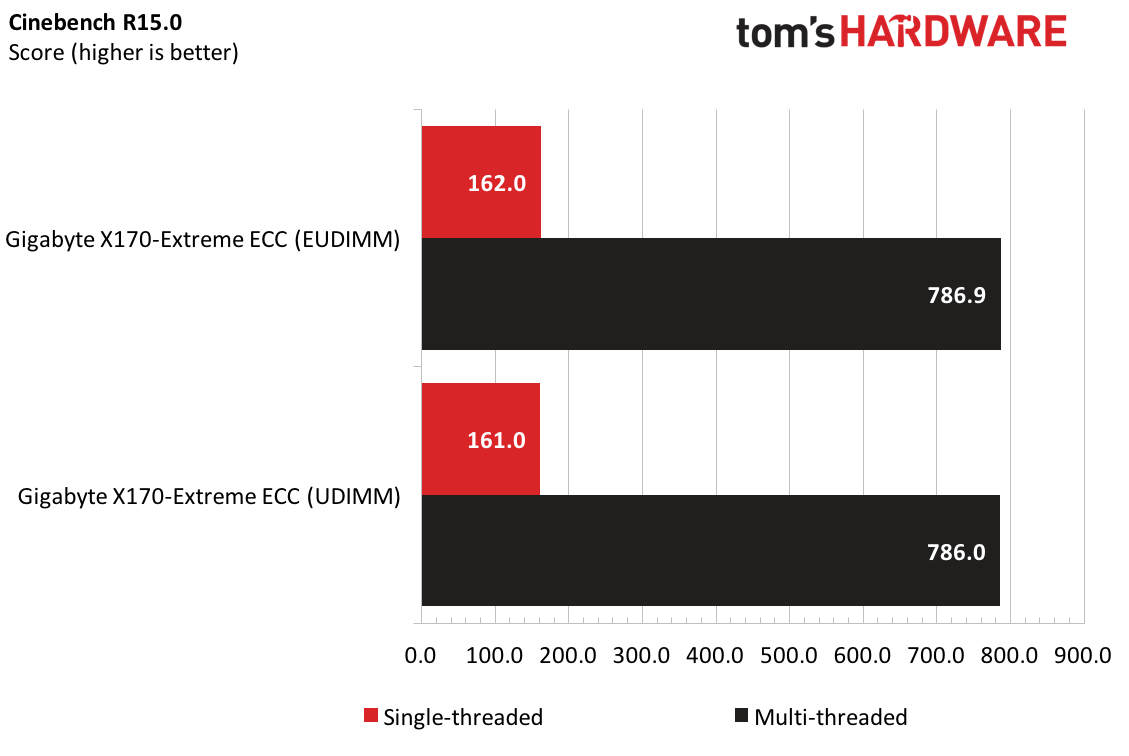

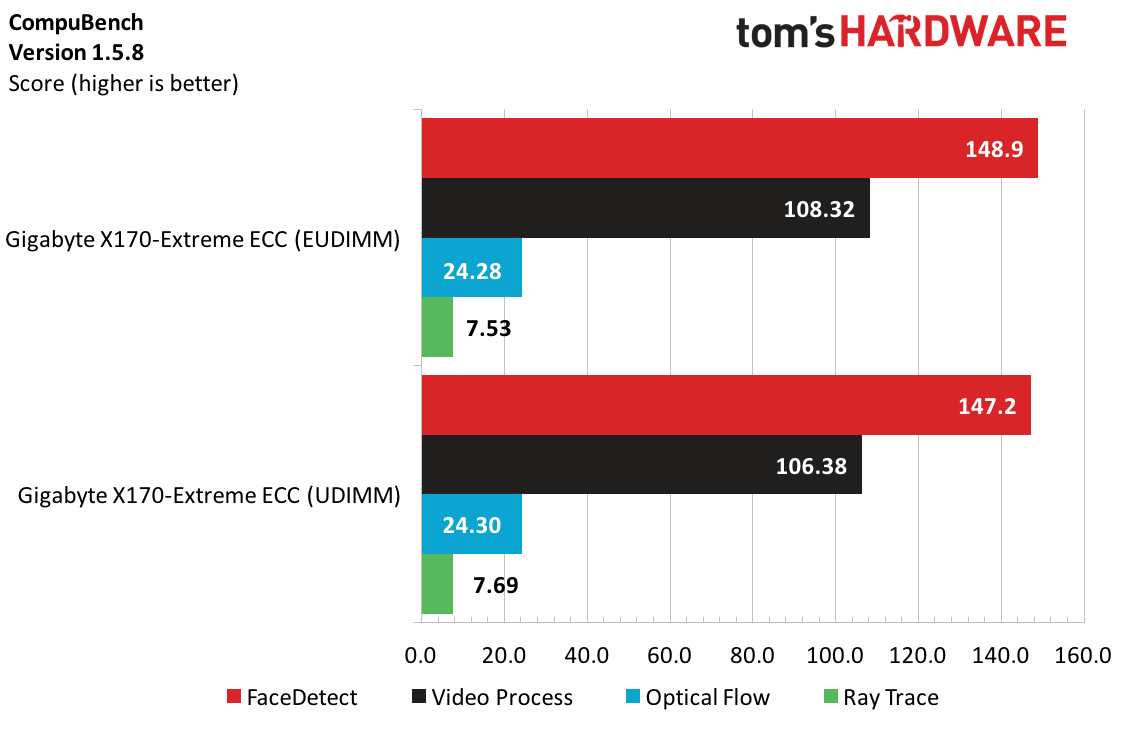

DiskSpd shows the Toshiba NVMe drive’s potential. This sample comes fairly close to the advertised sequential read and write specifications and falls within the margin of error for IOPS in random accesses. I was so excited about the results from ATTO when I first installed the drive that I posted my results to /r/pcmasterrace for some instant karma. The sequential reads were so fast that the data blew out my chart, and only by constricting the scale am I able to accurately show the other metrics. For a change of pace, the AMD FX-8350 puts up a respectable fight against the Xeon E3-1230 v5 in single-threaded performance under Cinebench, and having the extra cores helps it keep up in the multi-threaded benchmark. CompuBench rounds out the synthetics, showing us that facial detection and video processing workloads are no match for this prosumer platform. If these synthetics tell me anything, I am in for some good nights running this platform.

Applications and Games

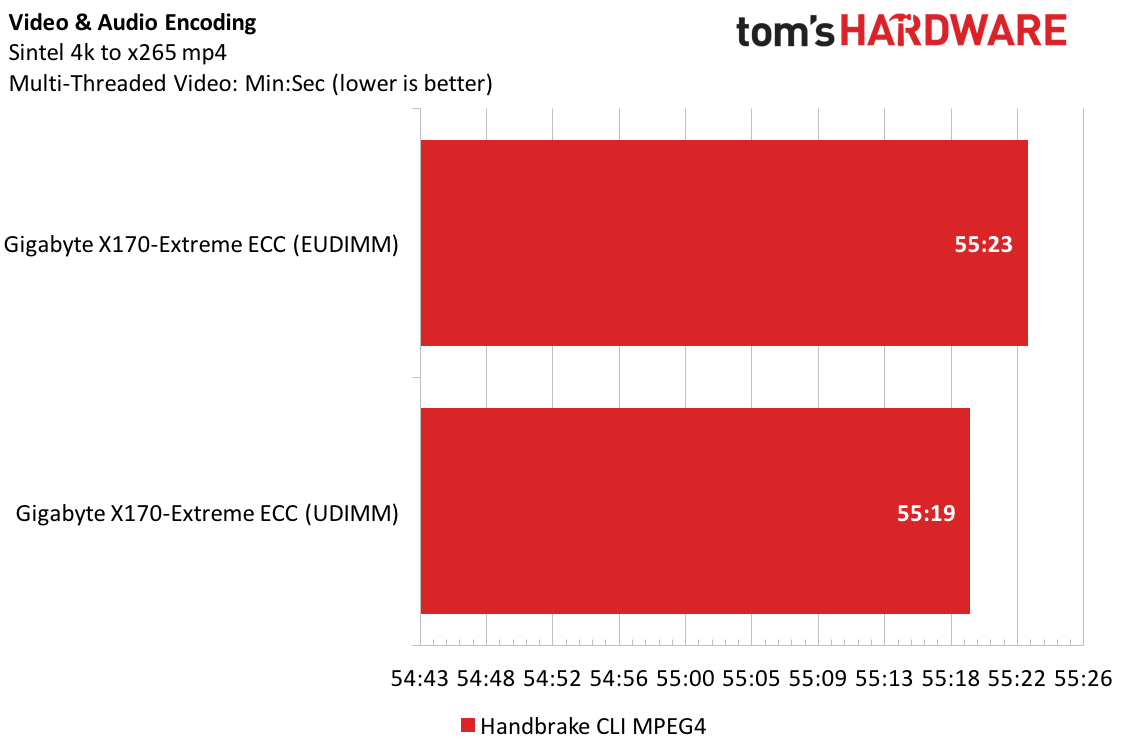

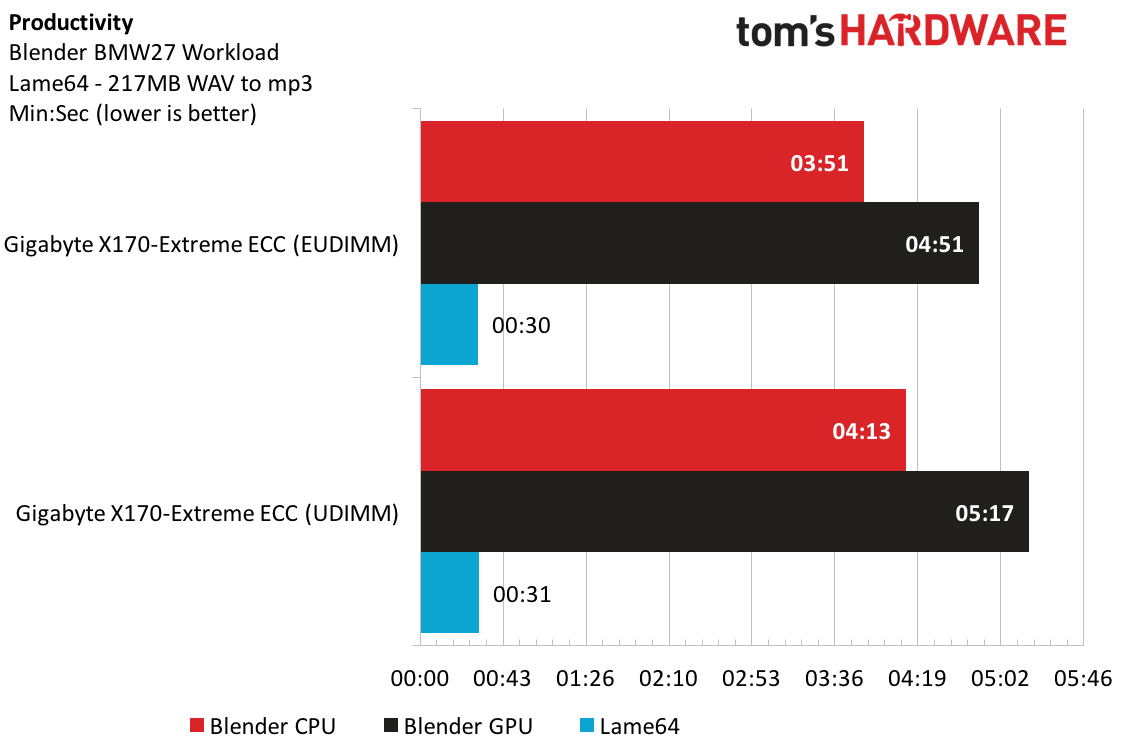

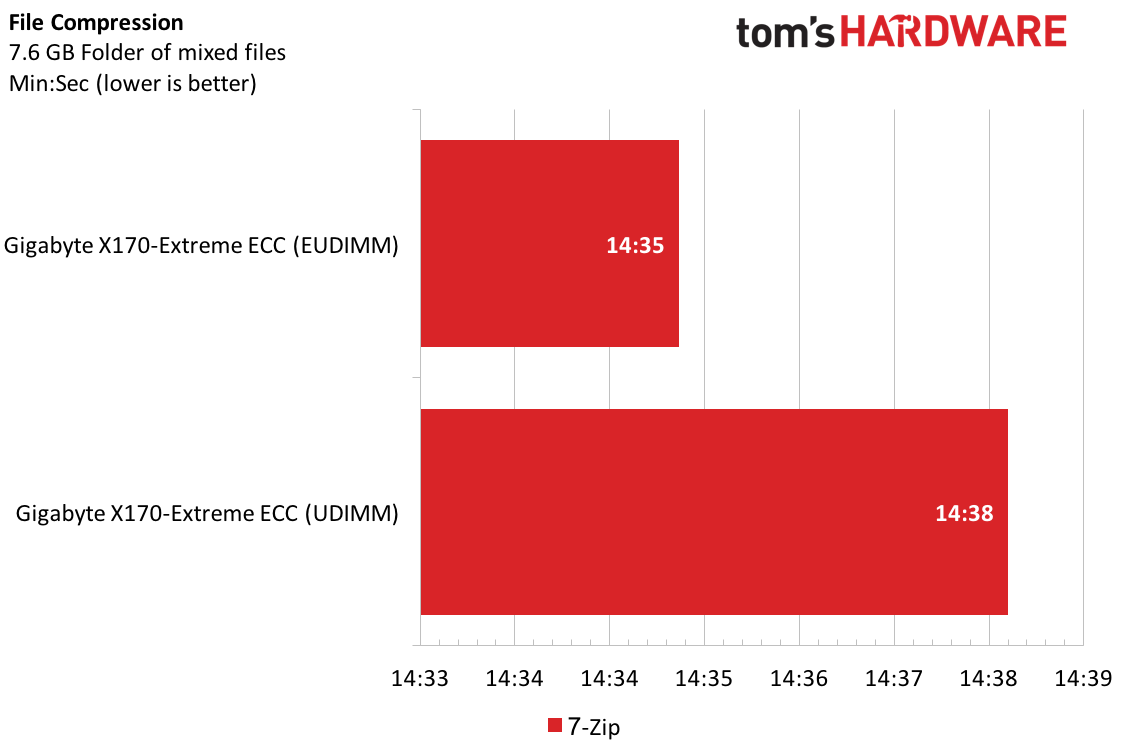

For the prosumer in all of us, this suite is the sweet spot. Finding that happy place between work and play is a delicate balance, and both my test image and my hardware are built around it. In the Sintel HandBrake benchmark, the non-ECC RAM finishes four seconds ahead of the error-checking RAM. ECC strikes back in the Blender workload, besting the competition by more than 20 seconds. This back and forth continues in the Adobe workloads, courtesy of the PCMark 8 suite, where the two configurations land within one percentage point of each other. Both LAME and 7-Zip show negligible performance differences, and the Gigabyte and Xeon combo shows repeatable results across test runs.

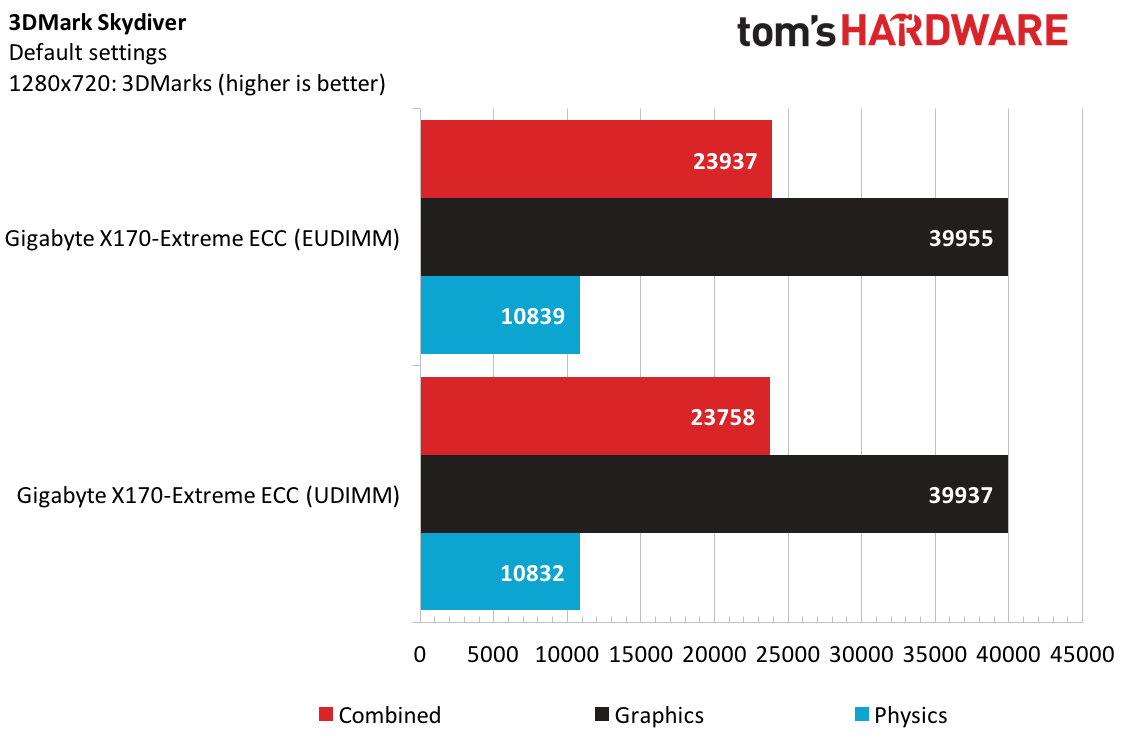

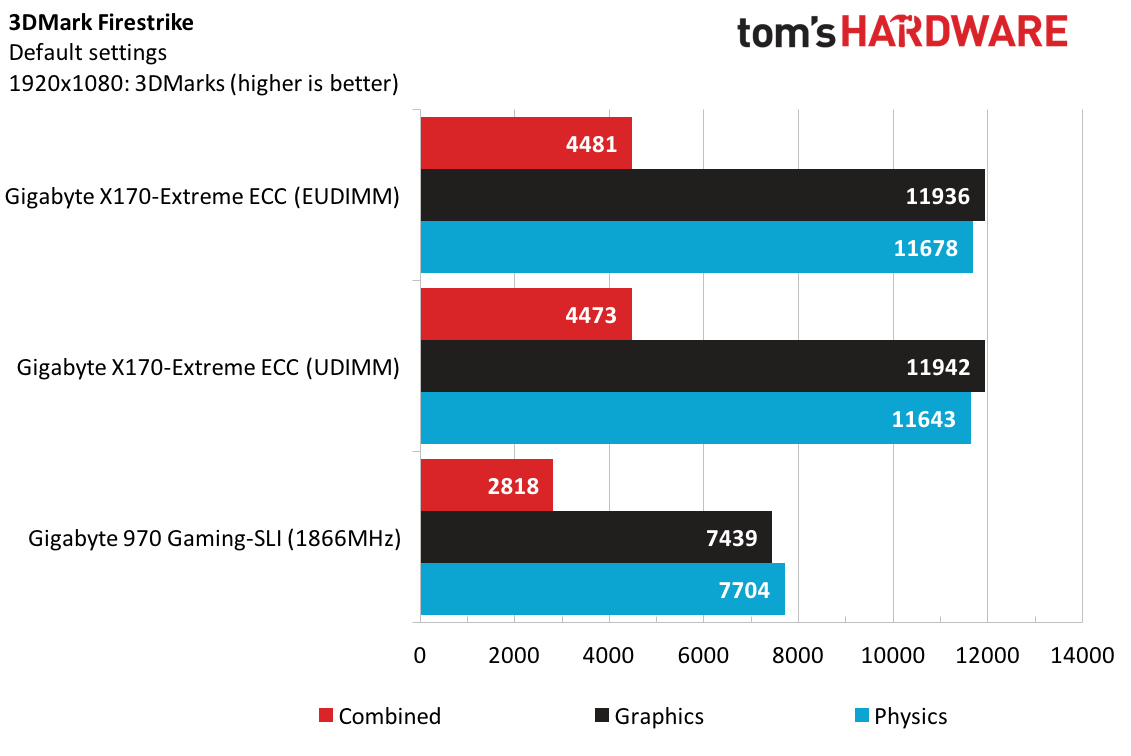

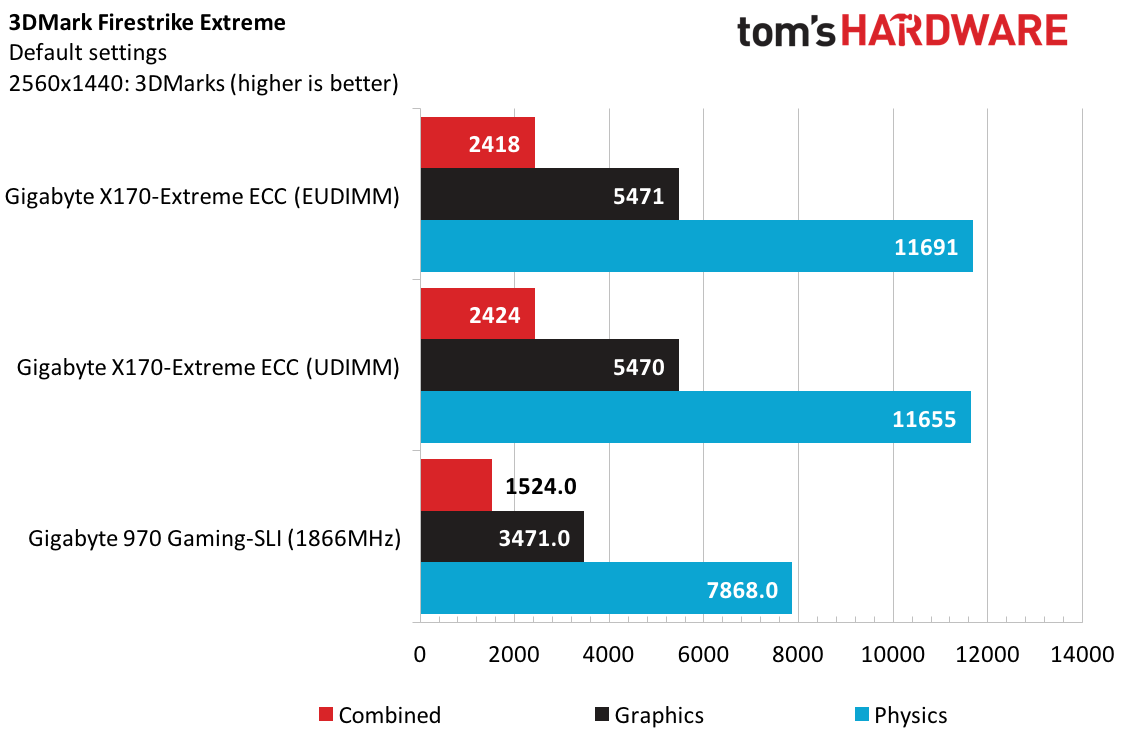

I finally have access to a standardized game benchmark suite, and I finally have a proper graphics solution to match up with the Gigabyte X170-Extreme. Looking at 3DMark, the GTX 970 and Xeon obviously outmatch the 7970 and AMD CPU deployed on the previous platform.

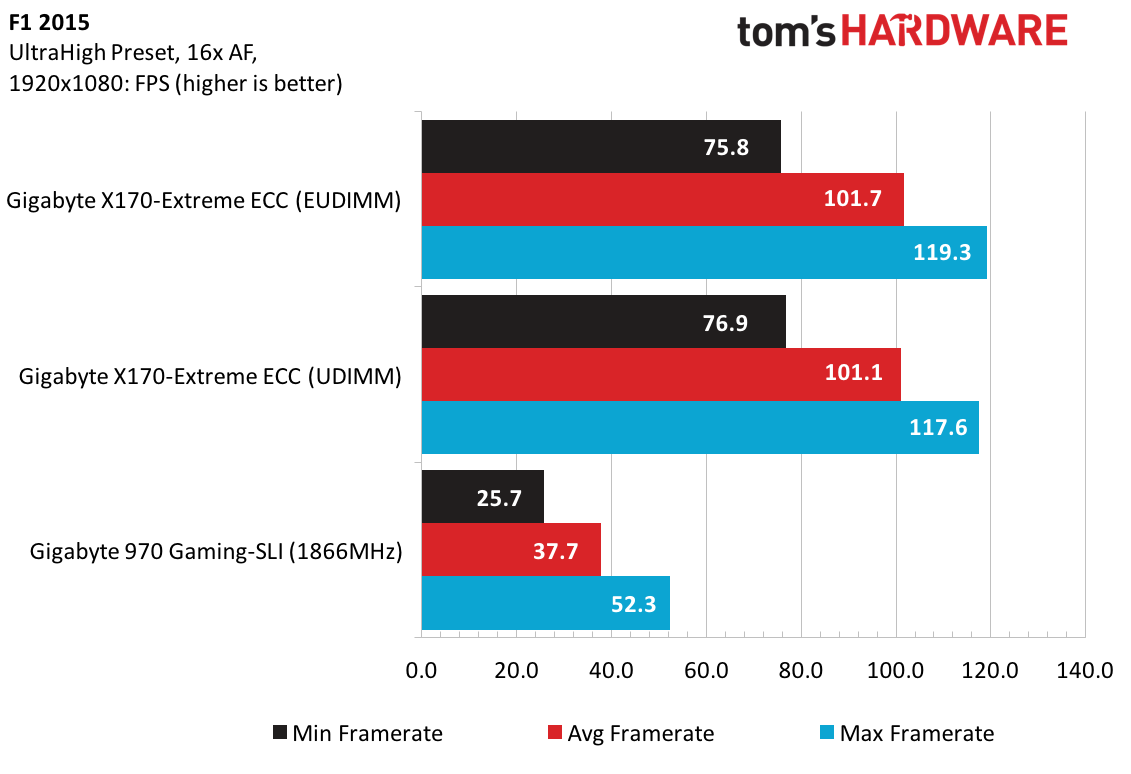

Up to this point, I have noticed very little performance difference between the memory configurations of this platform, so only F1 2015 is tested with both configurations. With rates over 100 FPS, this GTX 970 is ready for higher resolutions, and I can stick to ECC memory for the remaining games.

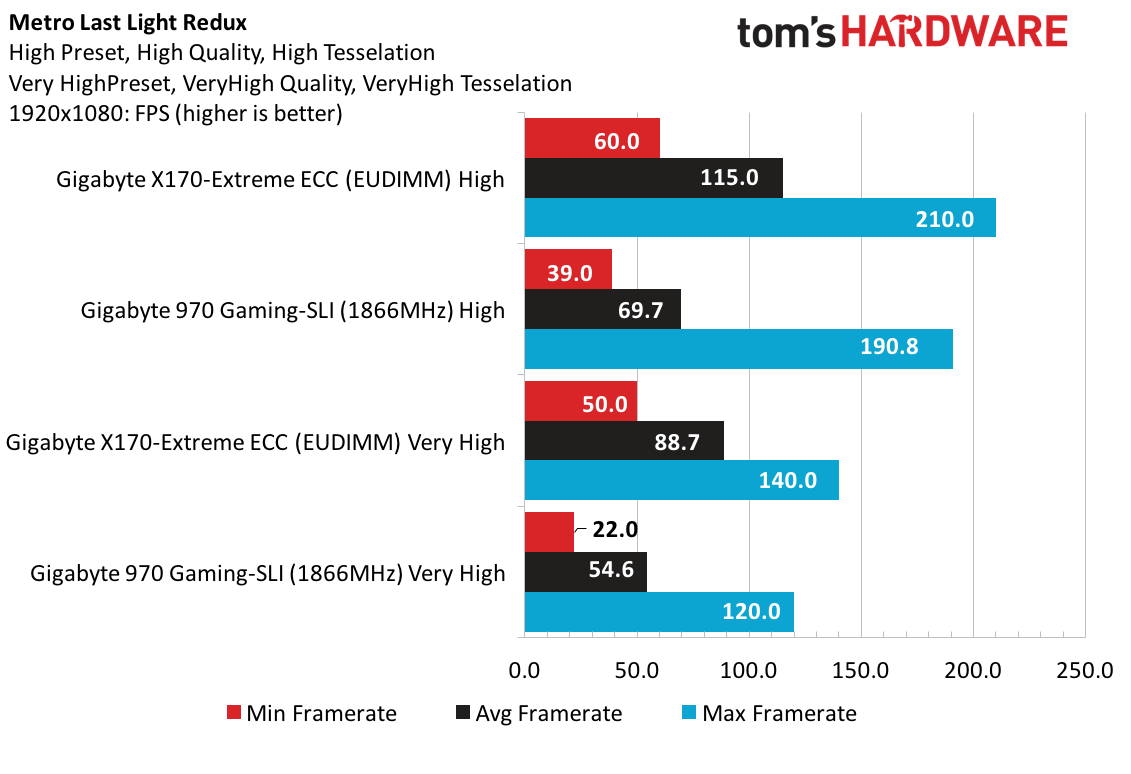

Metro: Last Light shows a similar story, and as I increase the graphics settings, the average rate decreases by 26 FPS.

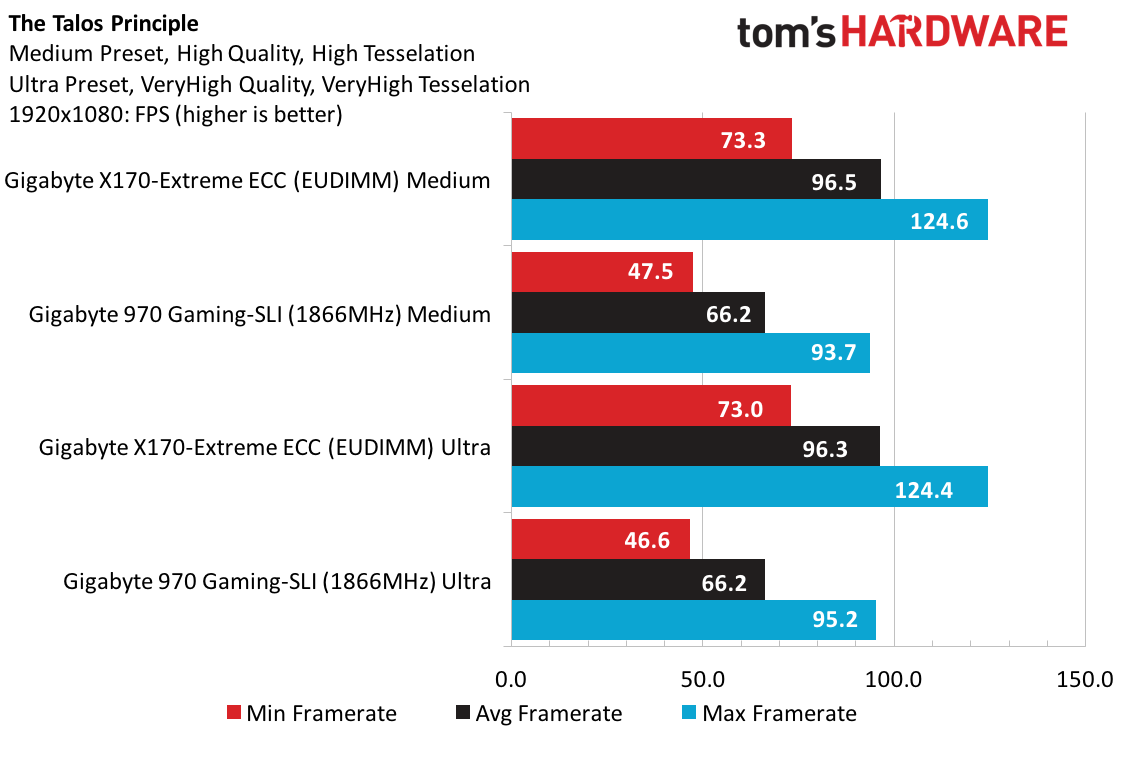

The Talos Principle, a puzzle game, runs a benchmark loop in which I see higher rates inside the puzzles than when transitioning between areas. Regardless, at 96 FPS, this game is begging for a 144Hz monitor. It's also worth mentioning that both the visuals and the benchmark log show very little difference in graphical settings between the two test modes. Adding the 7970 data to this analysis, I would say that there is a graphical bottleneck that might show some improvement with more GPU horsepower.

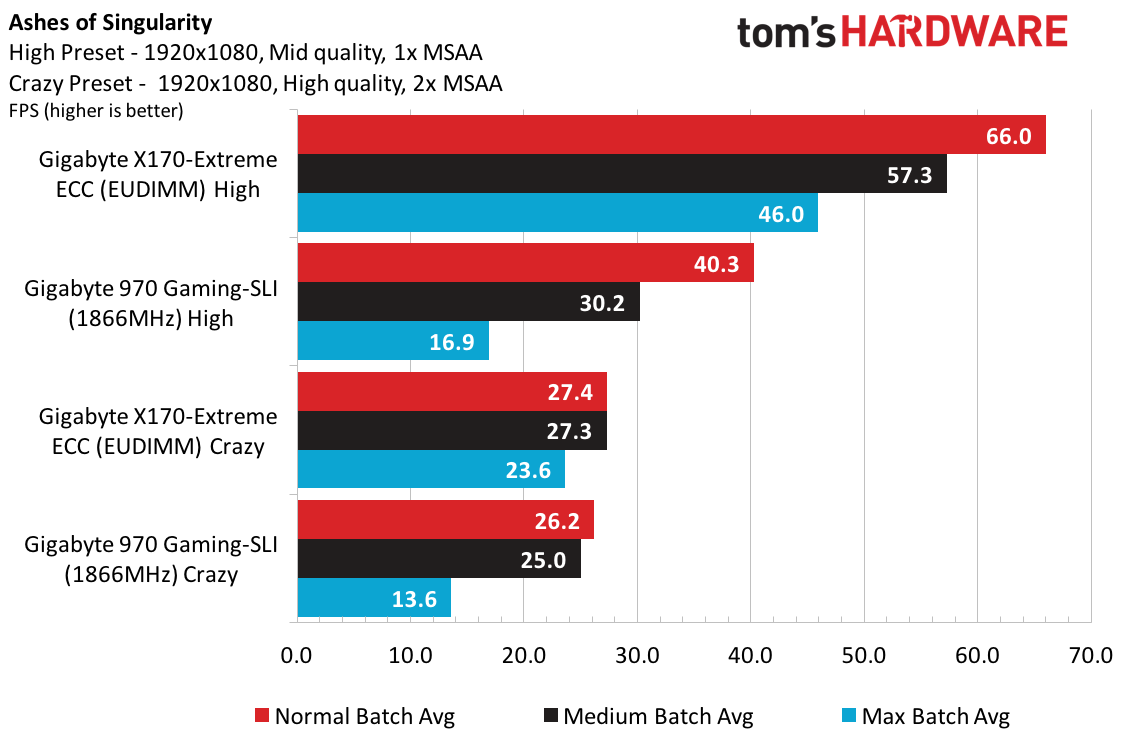

This streak of overperforming comes to a screeching halt with Ashes of the Singularity. In High mode, the maximum batch average framerate is humming along at 46 FPS, but as soon as I engage the high quality and additional MSAA, the 970 is put in its place, and the Xeon starts to show signs of sweat as it keeps up.

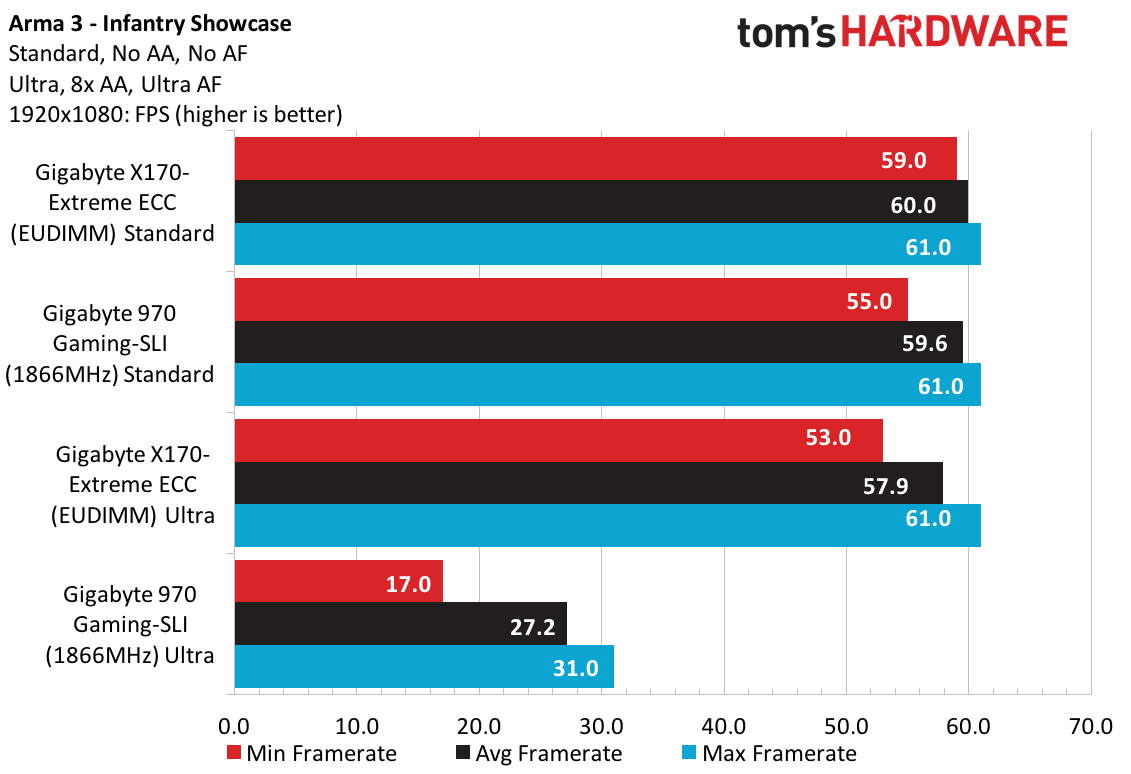

Last, what would a camo motherboard be without playing some Arma 3? Running through Stratis in the Infantry Showcase shows some good ol'-fashioned CPU bottlenecking with the standard preset. Flipping on Ultra increases my enjoyment of the “running simulator” while only dropping up to 6 FPS. The AMD data also supports this statement; however, the 7970 just can’t keep up with the Ultra preset.

Here’s a question for the readers: Do I need to upgrade to a 1440p or 4K monitor? How about ultra-wide or three 1080p monitors? Let me know what you think in the comments.

Power and Temperatures

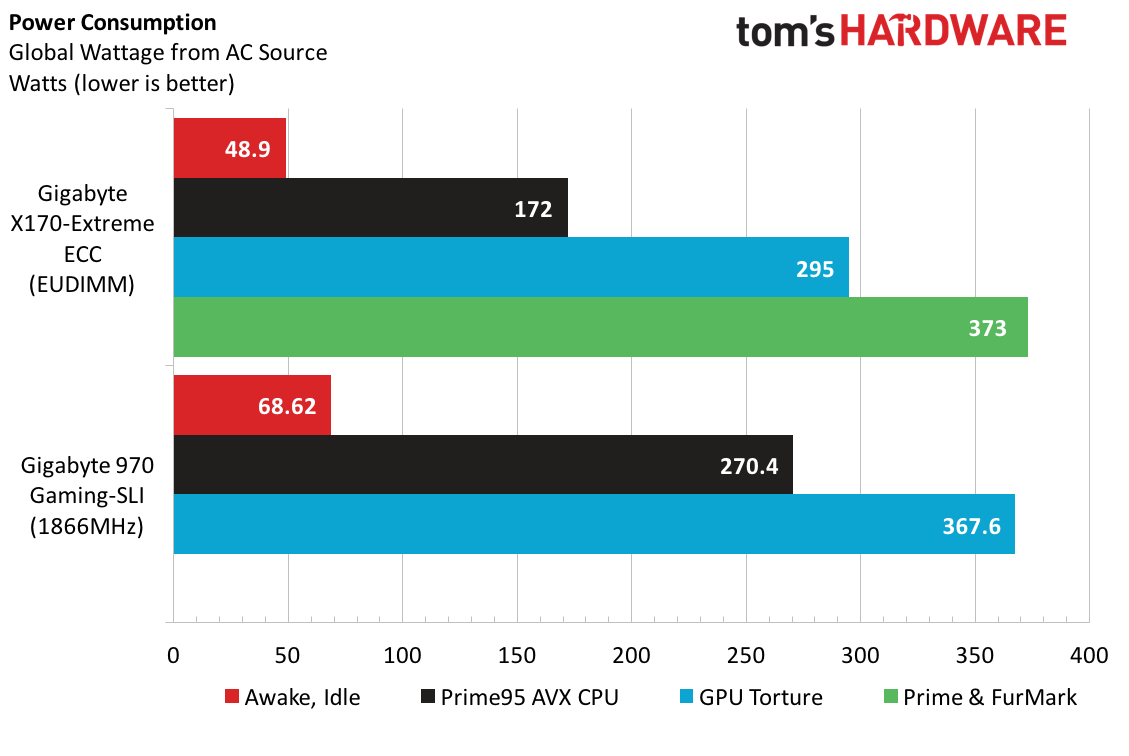

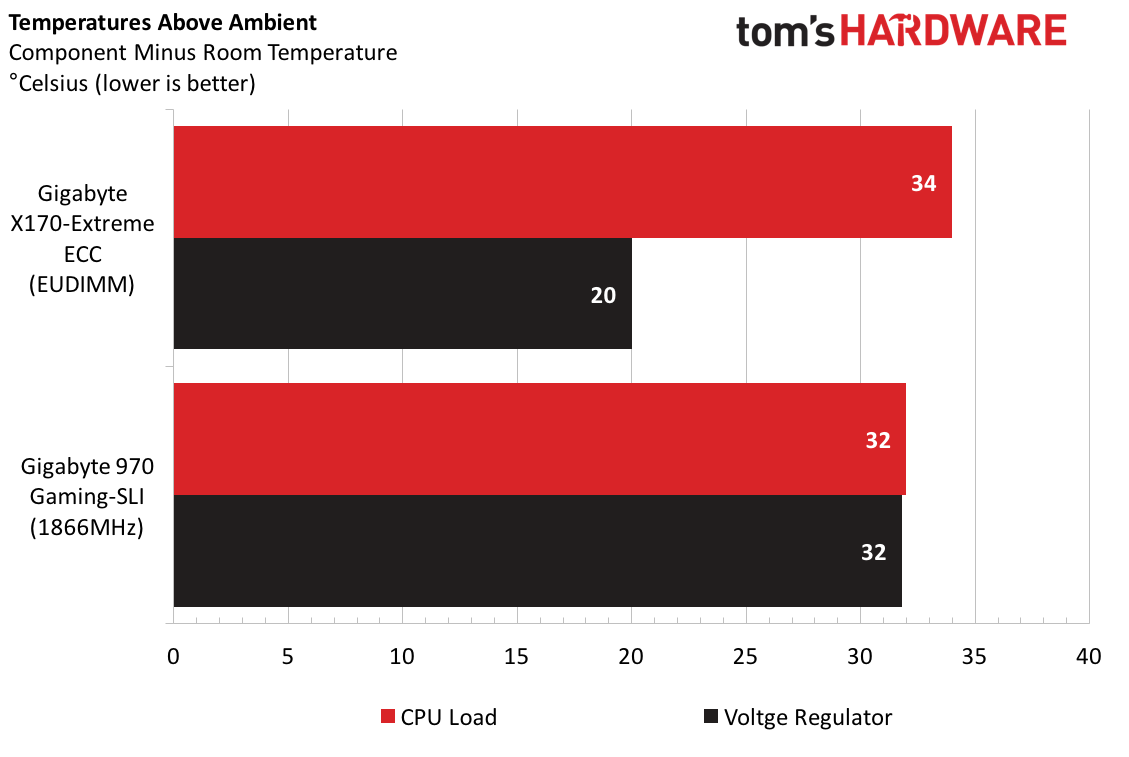

I knew the Xeon would be powerful, and I knew that this platform was going to be solid behind my AX 860. While performing my standard power and temperature tests, I am once again in awe of this platform’s efficiency. Compared to my AMD system, I save 20W at idle, 100W at max CPU, and 70W at max GPU, and running this system at 100% utilization draws only five more watts than the AMD system does when just stressing the GPU. Tie that together with the intended 24/7 utilization scenario that this platform is built for, and the prosumer truly has a rock to rely on. The heatsinks on the VRMs do a mighty fine job keeping the regulators cool and could probably handle more restrictive airflow or smaller, denser deployments.
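To put those wattage deltas in perspective, here is a rough sketch of how a steady power saving turns into yearly energy and money. The 20W and 100W figures come from the measurements above; the electricity price of $0.12 per kWh and the assumption that the machine sits in one load state around the clock are hypothetical placeholders, so plug in your own rate and duty cycle.

```python
HOURS_PER_YEAR = 24 * 365  # assumes the 24/7 utilization scenario, no downtime


def annual_savings_kwh(watts_saved: float, hours: float = HOURS_PER_YEAR) -> float:
    """Convert a steady power saving in watts into kilowatt-hours per year."""
    return watts_saved * hours / 1000.0


def annual_savings_cost(watts_saved: float, price_per_kwh: float,
                        hours: float = HOURS_PER_YEAR) -> float:
    """Yearly cost saving for a steady power saving at a given electricity price."""
    return annual_savings_kwh(watts_saved, hours) * price_per_kwh


if __name__ == "__main__":
    ASSUMED_PRICE = 0.12  # hypothetical USD per kWh, not a figure from this review
    for label, watts in (("idle", 20), ("max CPU", 100)):
        kwh = annual_savings_kwh(watts)
        cost = annual_savings_cost(watts, ASSUMED_PRICE)
        print(f"{label}: {kwh:.1f} kWh/year, about ${cost:.2f}/year")
```

A real workload mixes idle and load time, so the true figure would land somewhere between the two cases.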

The one downfall is what we mentioned earlier: overclocking. Given the stability of this system and its ability to regulate temperature and power delivery, I would imagine this board would have no problem squeezing out 300MHz or more. I hope this adds fuel to the fire at Gigabyte for enabling its Turbo BCLK feature on this product!

Value Conclusion

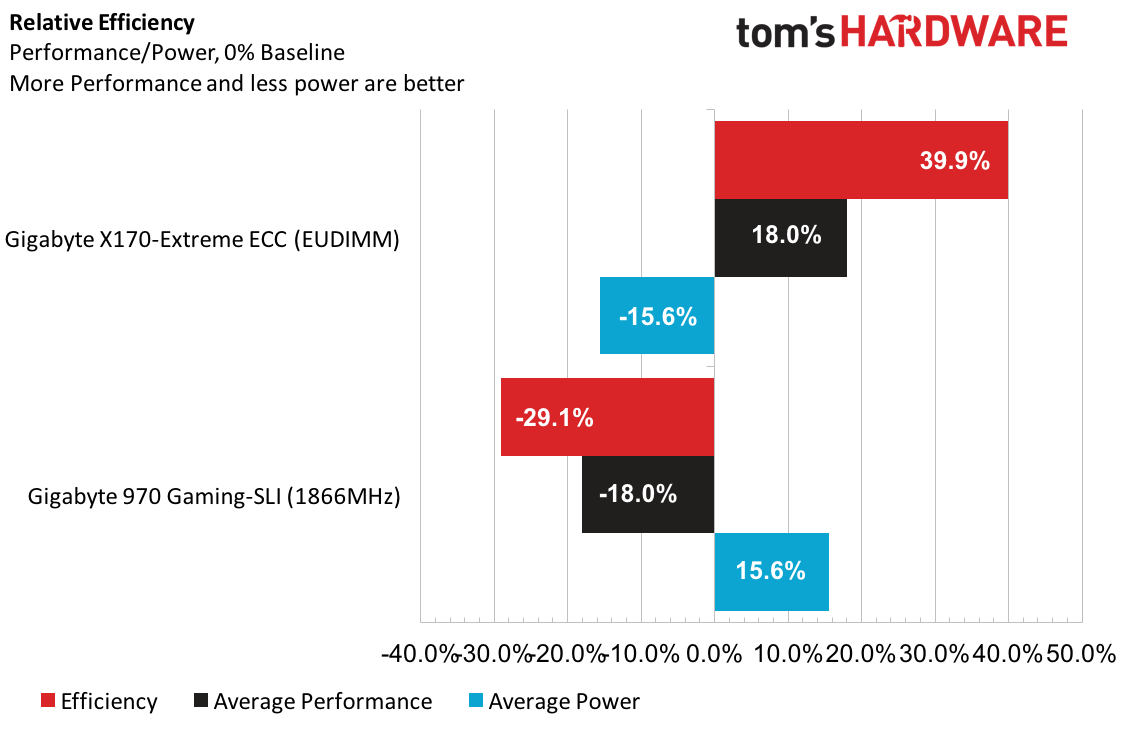

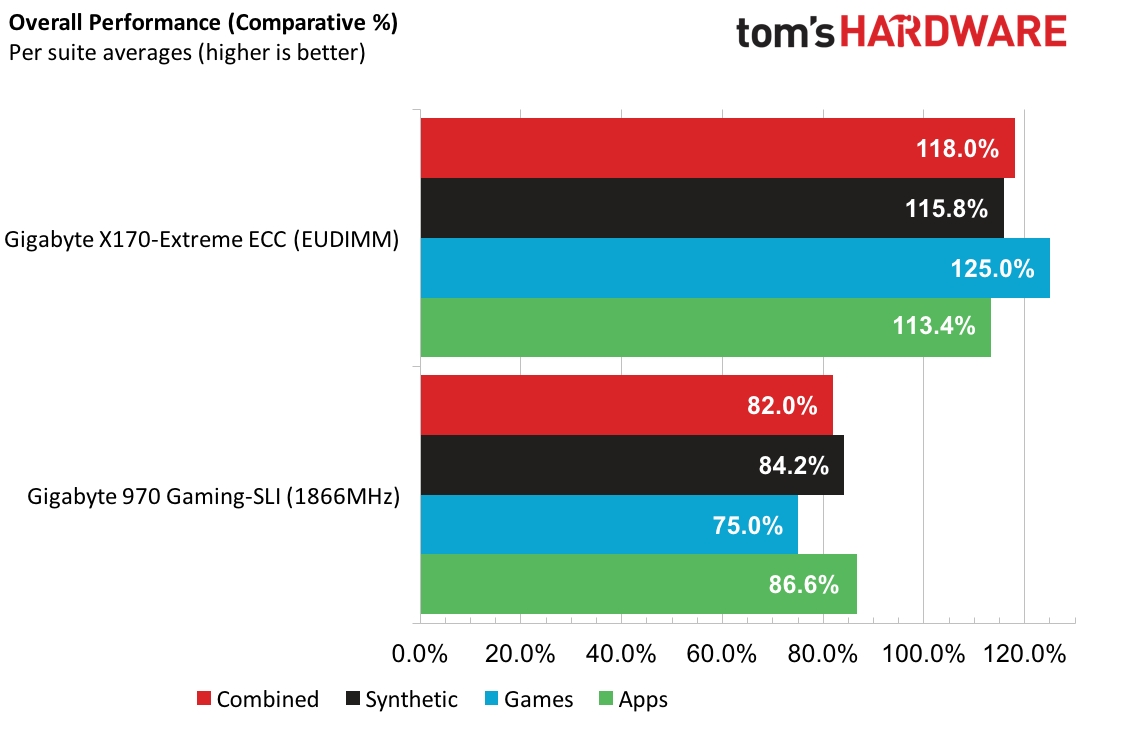

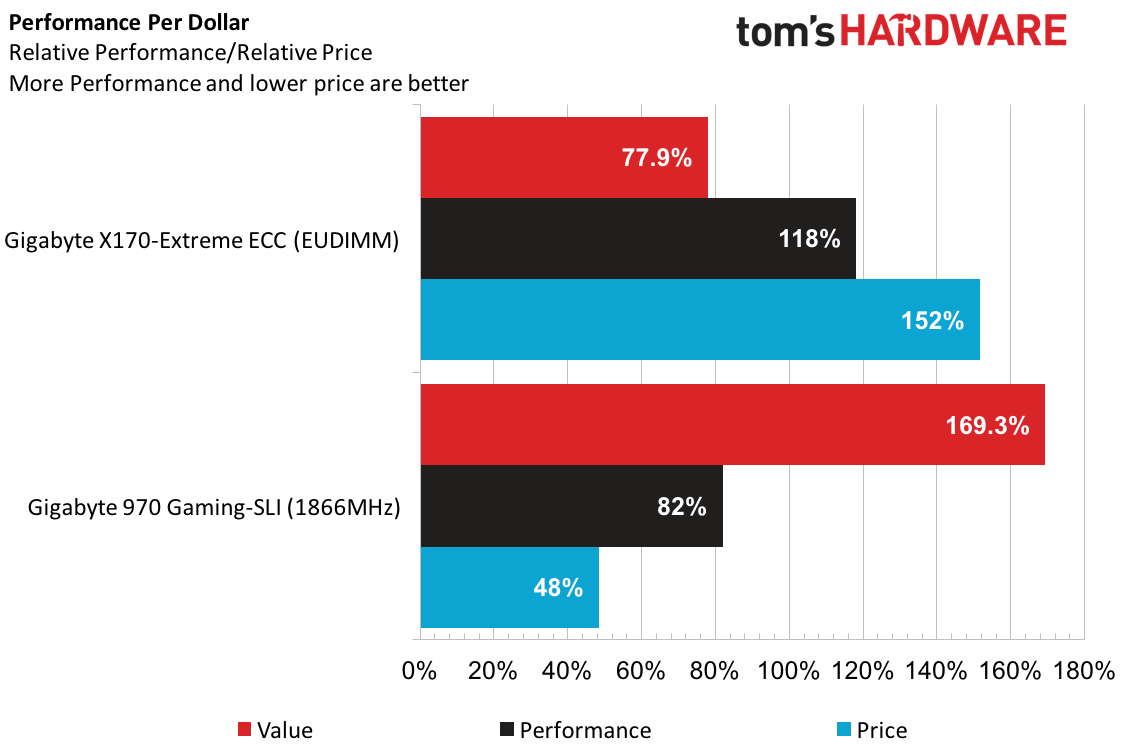

Value doesn’t really apply here, but for argument's sake, I’ll keep it in. I have done my best to only apply metrics that are consistent across the C236 and AMD 970, and it is no surprise that the Xeon is car lengths ahead of the AMD platform in terms of performance.

The C236 shows a 36% lead against the AMD 970 board for average performance, and that lead only compounds when factoring in the system’s power efficiency. The only saving grace the AMD platform has is price. If I make the AMD 970 the baseline, Gigabyte’s X170-Extreme ECC comes in at over three times the cost, and the platform only generates 44% more performance. This translates into a sad 46% value proposition. When milliseconds count and efficiency is critical, project managers can still build a return-on-investment case to justify the cost. Electricity savings alone may save the prosumer enough money to recoup the costs of several components.
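For readers who want to see how a value figure like this falls out, here is a minimal sketch that treats value as relative performance divided by relative cost, with the AMD 970 as the 1.0 baseline. The 44% and 46% figures are taken from the text above; the exact cost ratio is not stated beyond "over three times," so the 3.13x used here is a back-solved assumption, and the function names are purely illustrative.

```python
def value_index(relative_performance: float, relative_cost: float) -> float:
    """Performance per dollar relative to a baseline platform scored at 1.0."""
    if relative_cost <= 0:
        raise ValueError("relative_cost must be positive")
    return relative_performance / relative_cost


def implied_cost_ratio(relative_performance: float, value: float) -> float:
    """Back-solve the cost ratio that yields a given value index."""
    if value <= 0:
        raise ValueError("value must be positive")
    return relative_performance / value


if __name__ == "__main__":
    perf = 1.44  # 44% more performance than the baseline (from the text)
    cost = 3.13  # assumed cost ratio; the text only says "over three times"
    print(f"value index: {value_index(perf, cost):.0%}")
    print(f"cost ratio implied by a 46% value: {implied_cost_ratio(perf, 0.46):.2f}x")
```

The same two functions work for any pair of platforms as long as both performance and cost are normalized to the same baseline.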

From a PCH perspective, the C236 has its place in the market. Not everyone is interested in overclocking, and reliability and up-time are valued by all consumers to a certain degree. While Z170 can spout fancy MHz figures and attract the entire spectrum of gamers, C236 lures in the professional within all of us. Just because I crunch simulation data during the day without faults doesn’t mean streaming videos at night won’t cause failures.

As for the Gigabyte X170-Extreme ECC, it is solid. Quality components, great features, and (mostly) great design make this board a solid foundation for high-end builds and workstation deployments. If I am going to spend $300 on a board, though, I would have preferred dual-latched DIMM connectors, an additional fan header, and an advertised feature that is actually enabled in the UEFI.

I want to thank Gigabyte and Crucial for providing the bulk of the components for this prosumer reference system, and I hope to come back and give it a Tom’s Hardware award once I get some more C232/C236 samples and test them head to head.


Malik 722: this board and z170x gaming 7 looks like the very same thing same layout, same features and same connectors. although a different paint scheme. but what really makes this board more pricey than gaming 7 aside from xeon support.

andrewpong: Hasn't SuperMicro's X11SAT-F supported Thunderbolt 3 on the C236 chipset for a while now?

logainofhades: Still saddened by Intel forcing Xeon to use a C232/236 chipset. Been able to run Xeons, in consumer chipsets, since at least core 2. I had an X3210 @ 3.6ghz, in an Abit P35 pro, back in the day.

The Jedi: Yeah, you're a hardware reviewer Jacob. That is all the excuse you need to be on the bleeding edge with a Kaby Lake system and a 4K monitor with the graphics to drive it.

Good to read about this unique motherboard. However the lack of XMP is a dealbreaker for me. I think the Asus Sabertooth with the 5-year warranty vs. 3 would do just as fine. Kaby Lake is in my future anyway with that new Netflix DRM it makes available.

TheTerk: @Malik, two words - Ultra Durable. IF testing and reliability are important to you, C236 is the way to go. Otherwise, that Gaming 7 is a spitting image of this board.

@AndrewPong - This board might have been released a week or two before the SuperMicro. Here's Gigabyte's press release: http://www.gigabyte.us/press-center/news-page.aspx?nid=1420

@The Jedi - Is it bad that I'm running a VM on my test rig to run some good ol fashioned Windows XP? If you guys think of something crazy to do on this thing, let me know!

blazorthon: A quote from the third page:

The AMD 970 data presents similar trends in the PCMark Home, Creative, and Work workloads, though the deltas are not as dramatic. The move to DDR4 and this Gigabyte X170-Extreme ECC shows a whopping 38% increase in memory bandwidth compared to the DDR3 implemented in the Gigabyte 970 Gaming-SLI sample running at similar frequencies.

The move to DDR4 has practically nothing to do with Intel's advantage in memory bandwidth. Intel simply has a better memory controller. They've had an advantage in this since Sandy Bridge and that's easily knowable since every Intel and AMD CPU review since Sandy with memory benchmarks demonstrates it with DDR3 and with DDR4.

DDR4 does not improve bandwidth over DDR3 at the same frequency when all else (within reason) is equal. It improves bandwidth by allowing higher frequencies.