
One of the bigger selling points for the Arc A380, and Intel's Arc Alchemist GPUs in general, is support for hardware-accelerated AV1 and VP9 video encoding. Current AMD and Nvidia GPUs can't encode either format in hardware, so that work falls to the CPU, and both codecs can prove quite taxing on lesser CPUs.

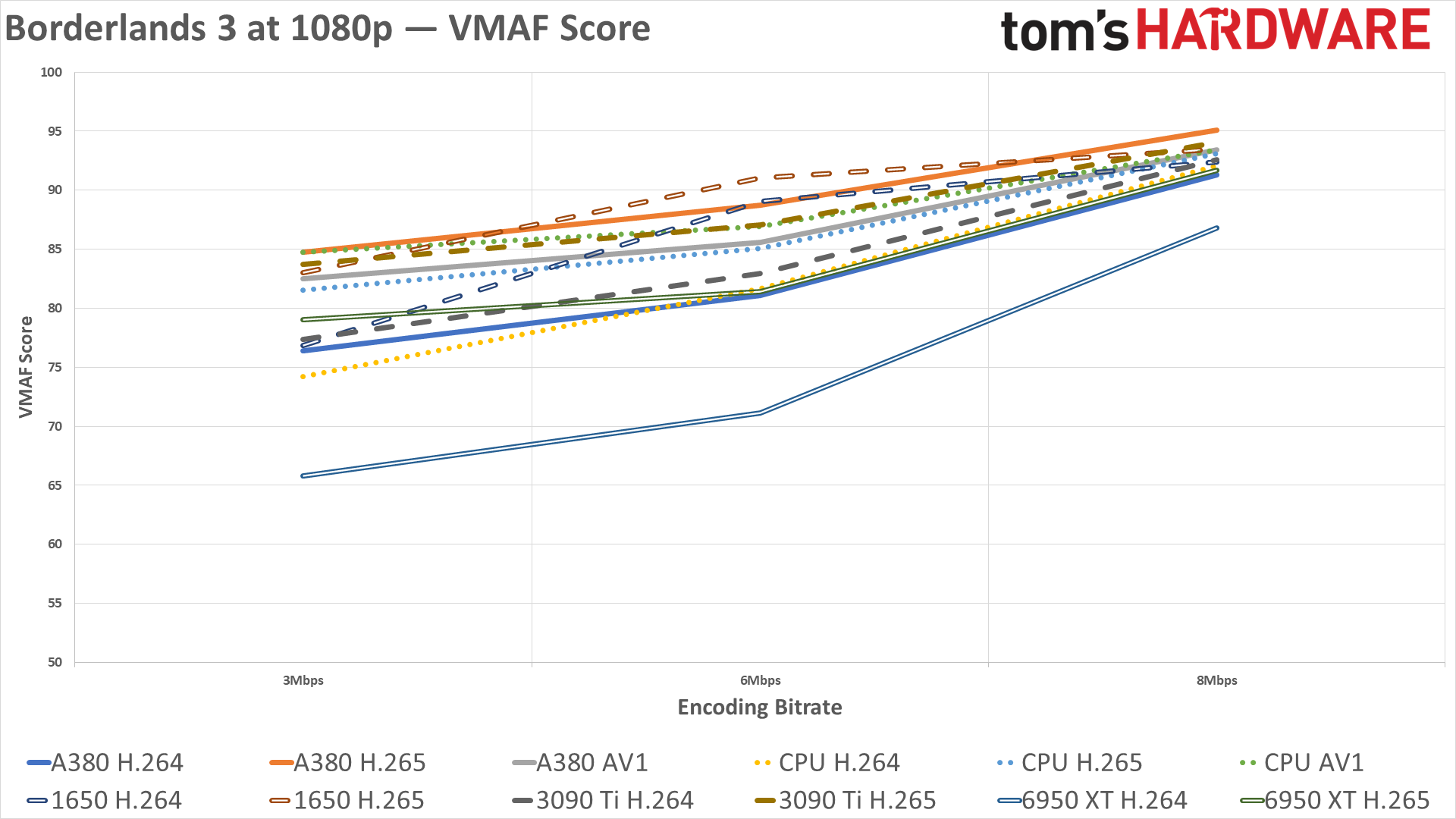

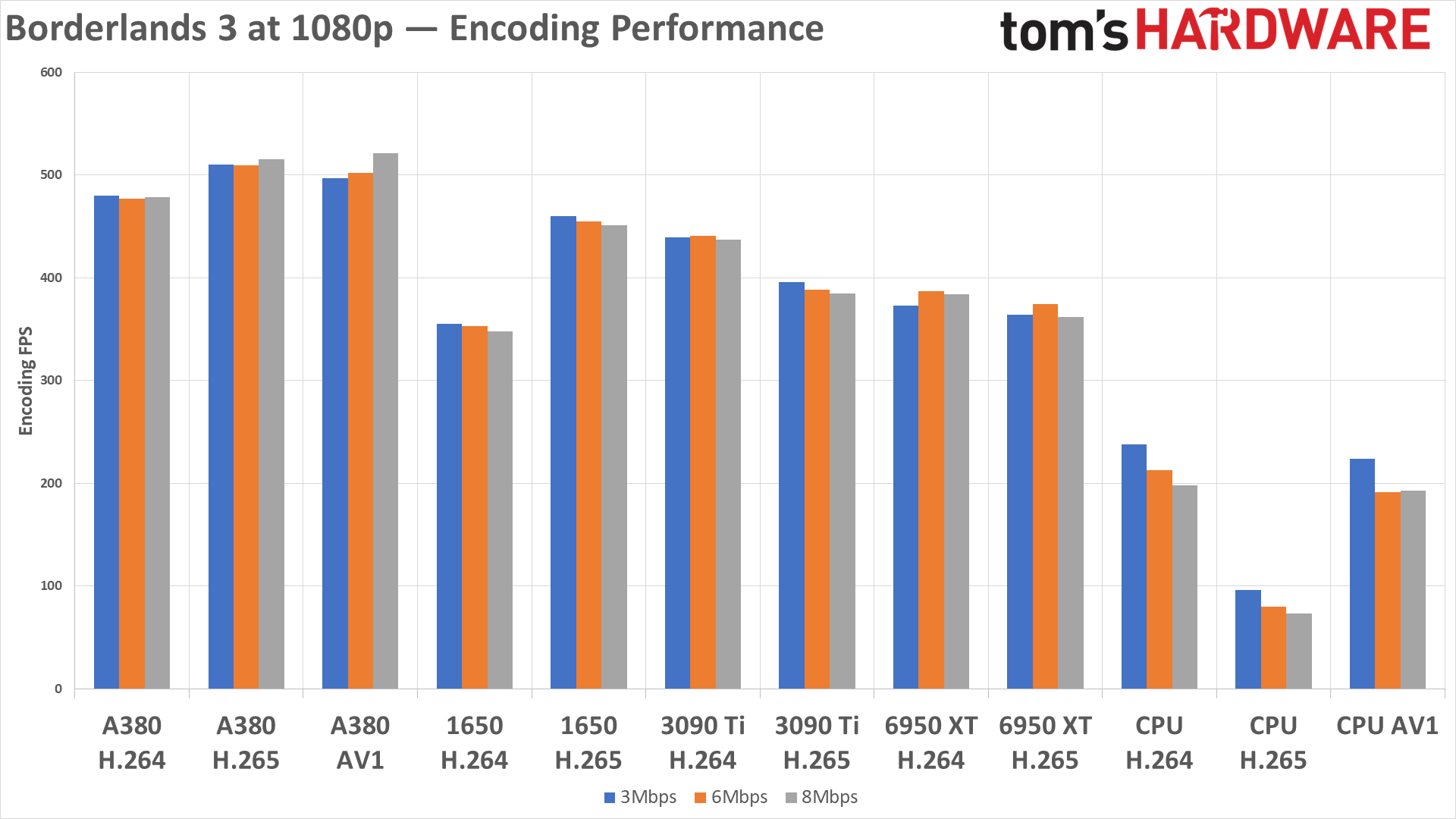

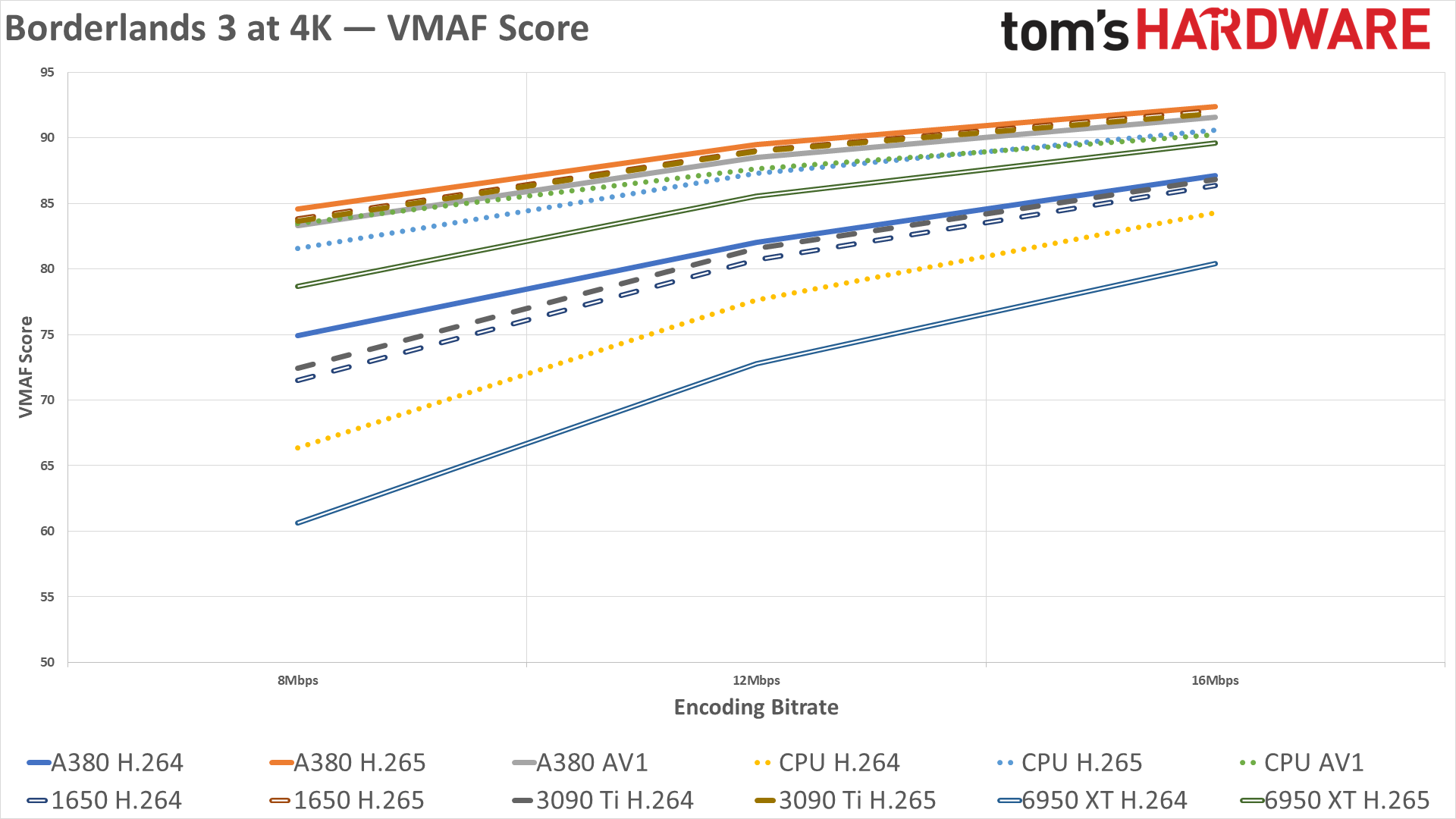

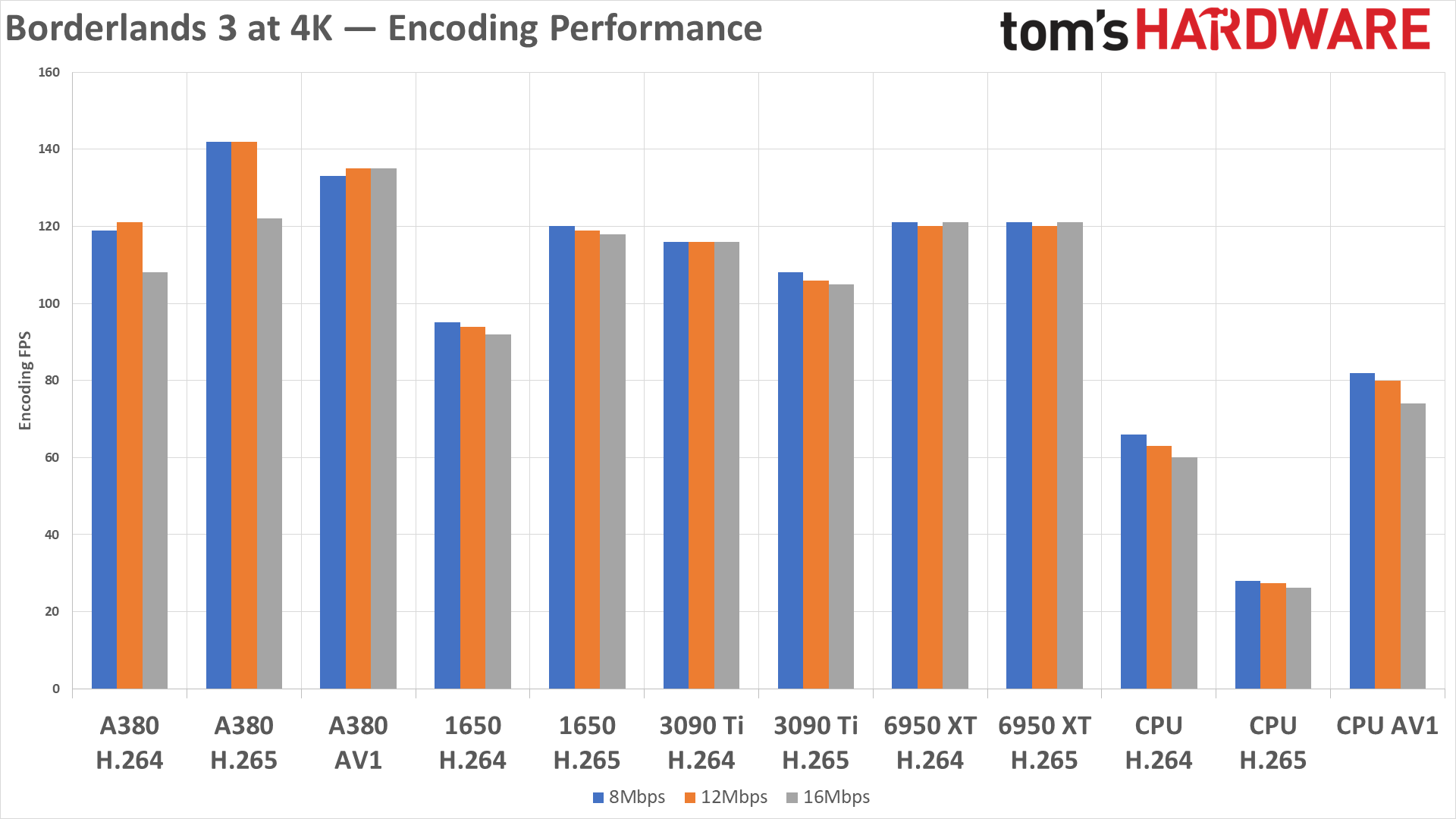

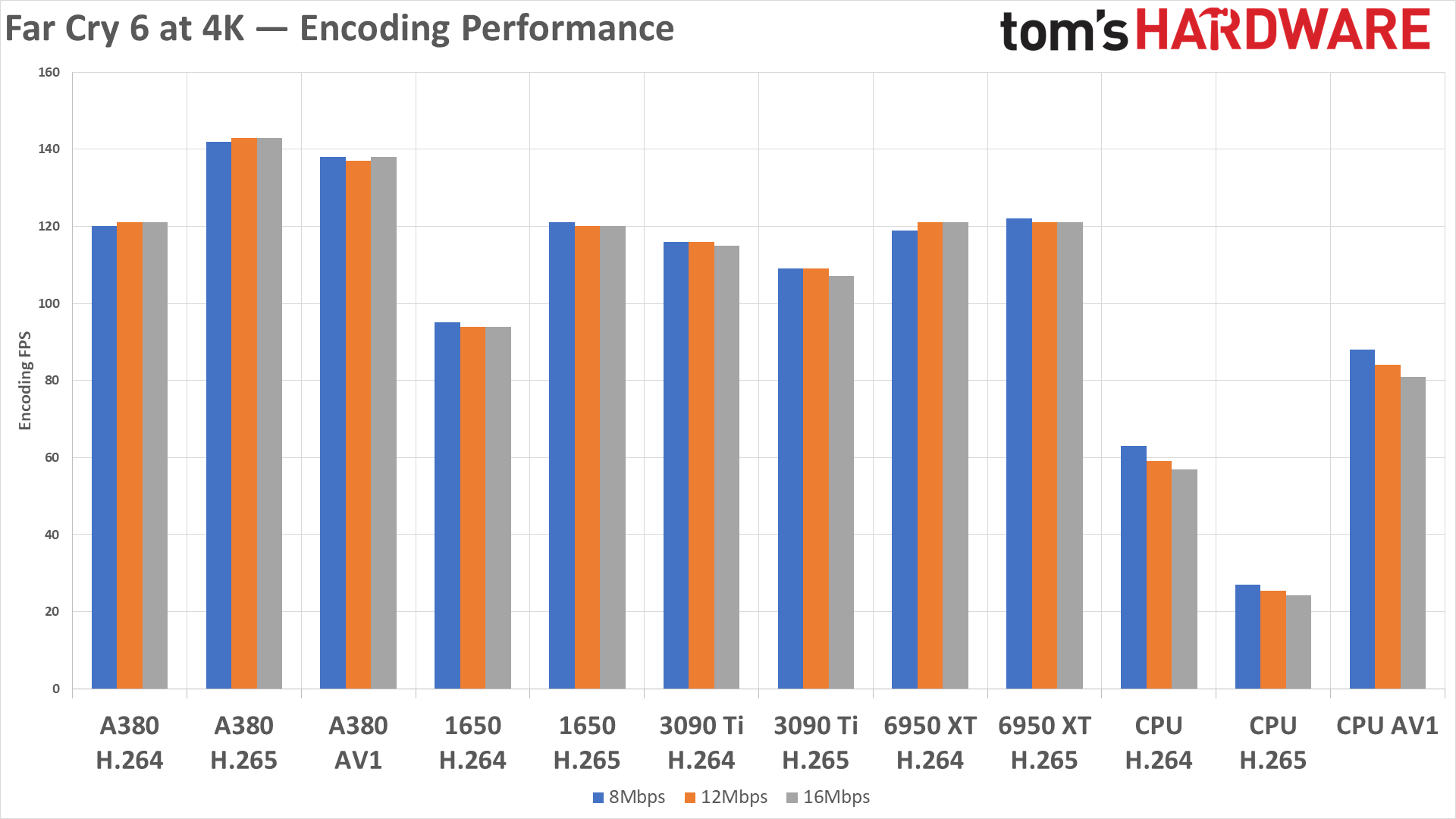

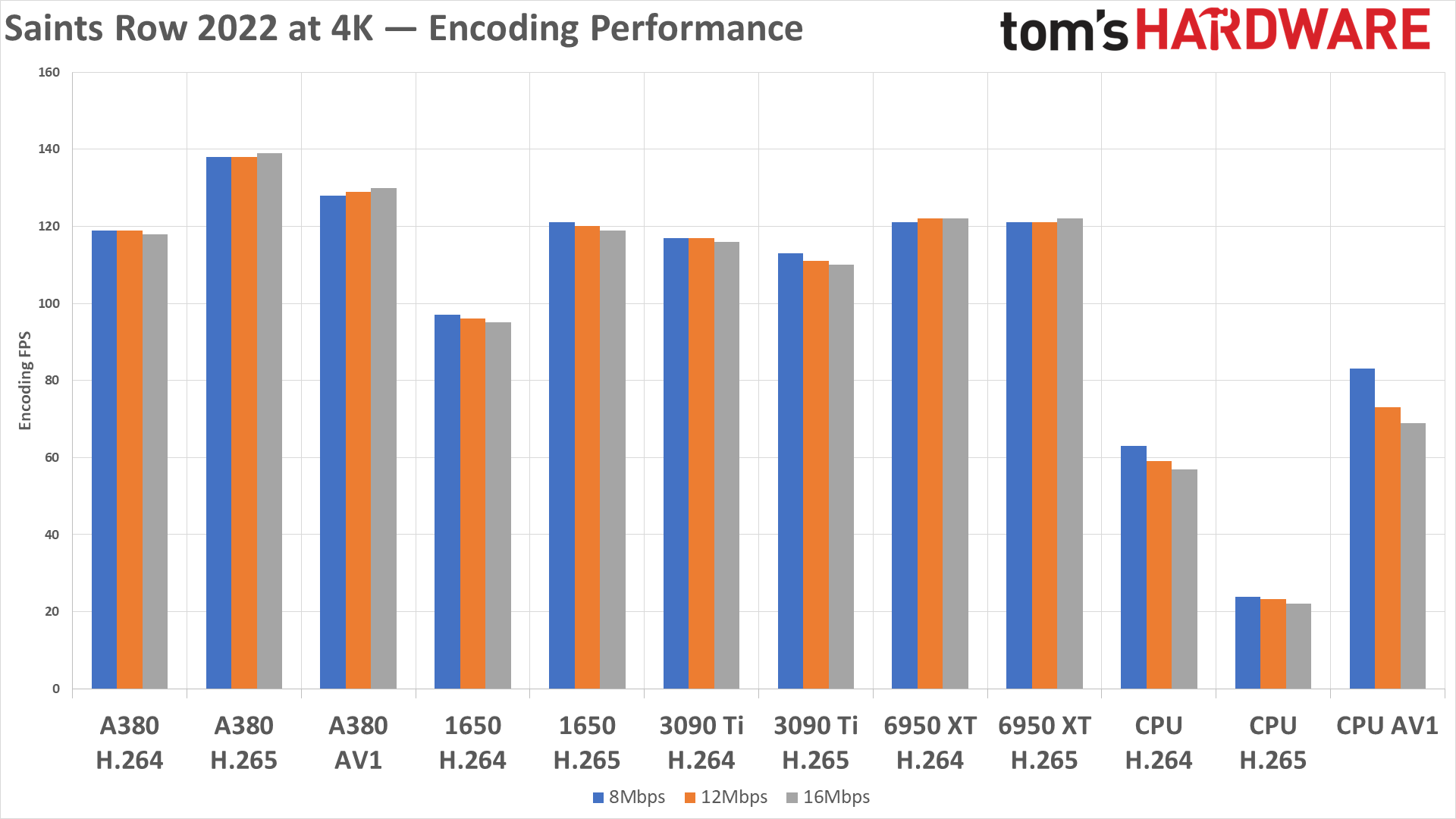

We took four video clips and then used FFmpeg to encode them at various bitrates. One of the videos is a 1080p source, while the other three are 4K videos. For 1080p, we used bitrates of 3 Mbps, 6 Mbps, and 8 Mbps; on the 4K videos, we used 8 Mbps, 12 Mbps, and 16 Mbps. Generally speaking, 4K encoding (with four times as many pixels) ends up taking three to four times longer than 1080p encoding, or if you prefer, it requires three to four times as much computational power.
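The pixel arithmetic behind these bitrate choices can be sketched in a few lines of Python. This assumes "1080p" means 1920×1080 and "4K" means 3840×2160 (UHD). The frame rate isn't stated, so the sketch only divides each bitrate by the per-frame pixel count; the helper names are our own.

```python
# Frame sizes. Assumption: 1080p = 1920x1080 and 4K = 3840x2160 (UHD).
RESOLUTIONS = {"1080p": (1920, 1080), "4K": (3840, 2160)}

# Bitrates (Mbps) used for each resolution in the tests.
BITRATES_MBPS = {"1080p": [3, 6, 8], "4K": [8, 12, 16]}


def pixels(name: str) -> int:
    """Number of pixels in one frame at the given resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def bitrate_per_pixel(name: str, mbps: float) -> float:
    """Bits per second available for each pixel position (frame rate not included)."""
    return mbps * 1_000_000 / pixels(name)


pixel_ratio = pixels("4K") / pixels("1080p")
print(f"4K has {pixel_ratio:.0f}x the pixels of 1080p")
for res, rates in BITRATES_MBPS.items():
    for mbps in rates:
        print(f"{res} @ {mbps} Mbps: {bitrate_per_pixel(res, mbps):.2f} bits/s per pixel")
```

Under those assumptions, 4K at 12 Mbps gets the same per-pixel budget as 1080p at 3 Mbps, and 4K at 16 Mbps gets two-thirds of the 1080p 6 Mbps budget, so the 4K encodes work with less data per pixel than the 1080p ones.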

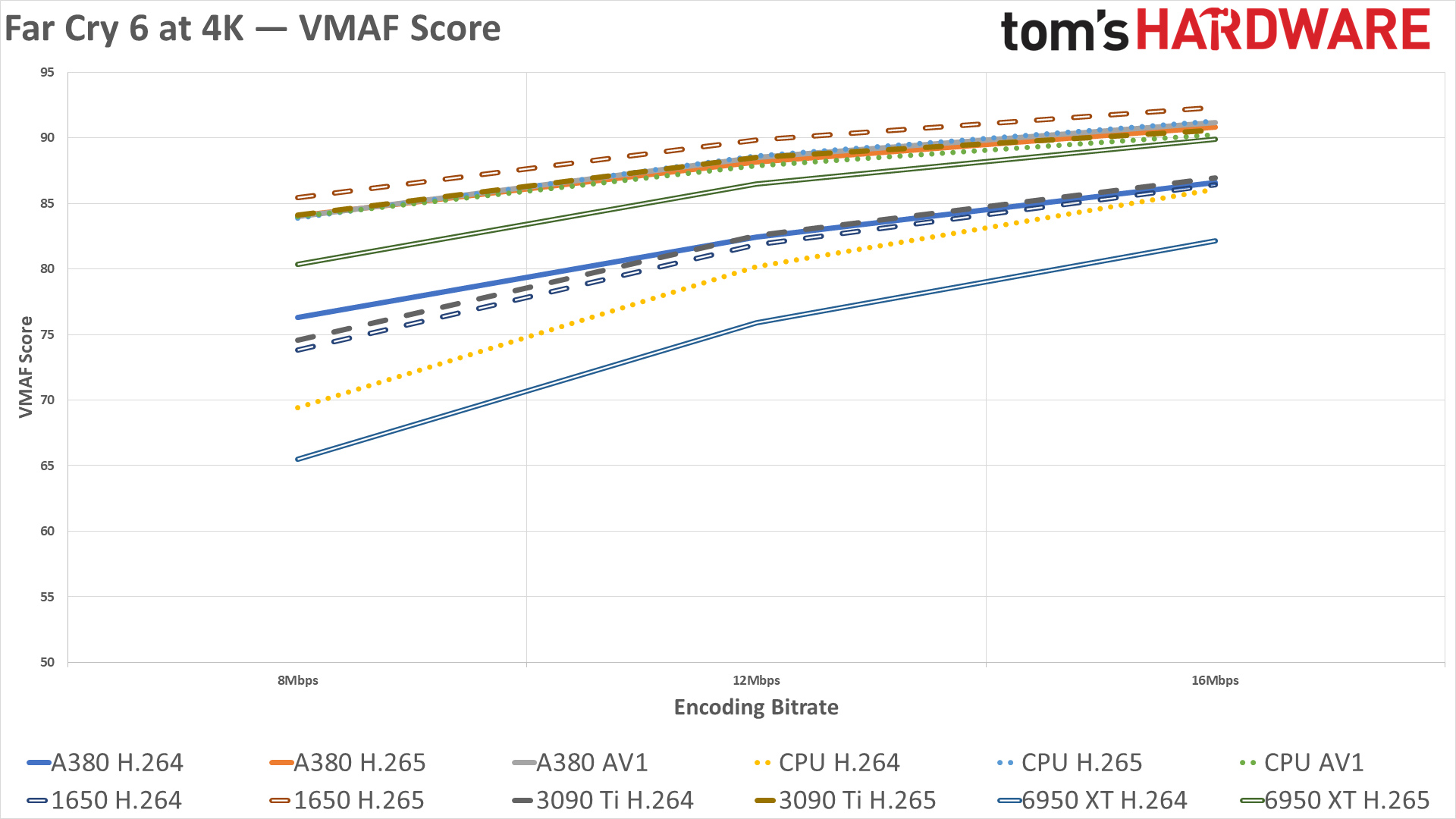

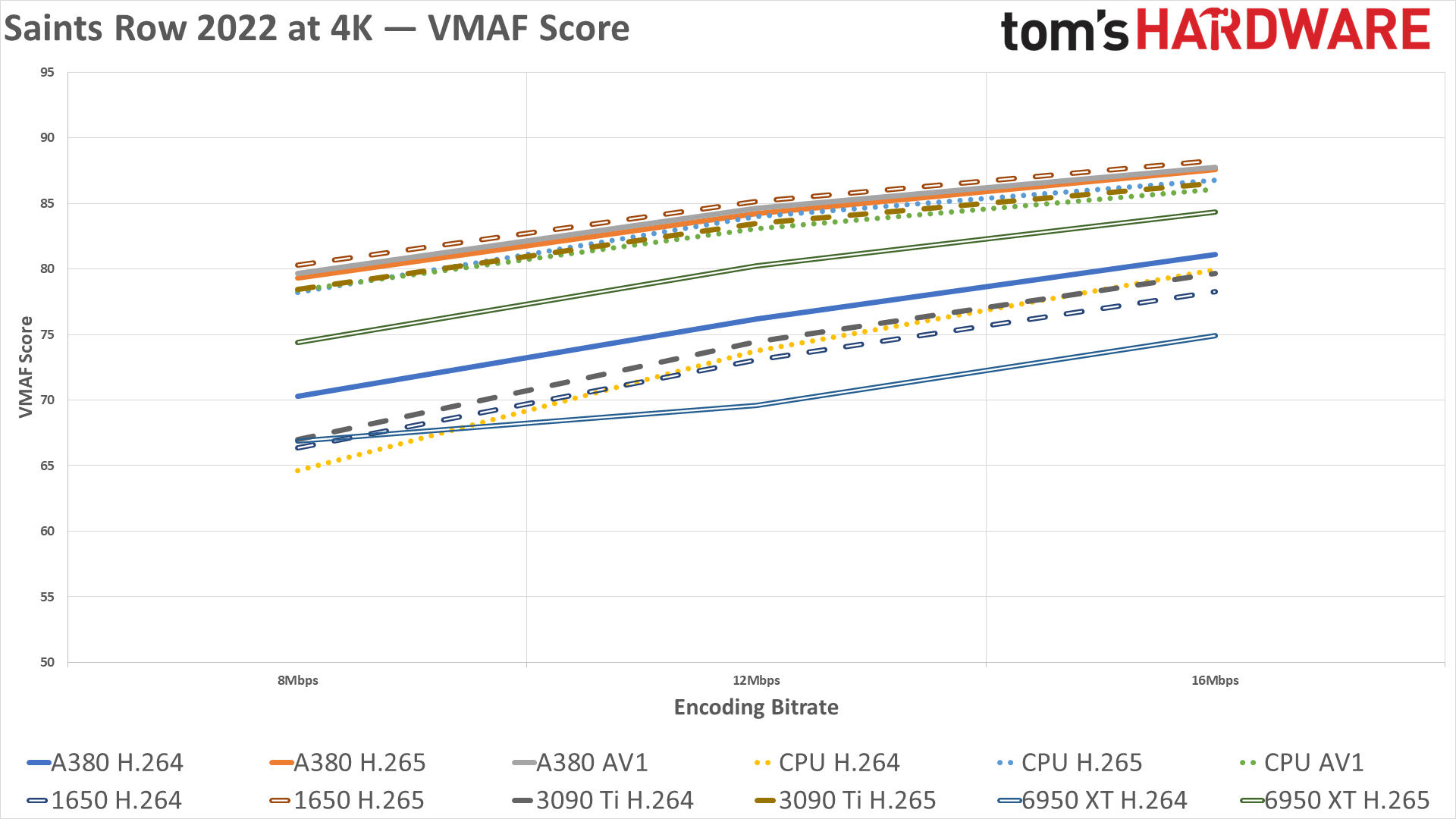

We used Intel's Cartwheel-FFmpeg for the AV1 encoding (we couldn't figure out the correct way to do VP9 encoding), while the latest public FFmpeg nightly release was used for the AMD and Nvidia GPUs. We also used FFmpeg to run VMAF (Video Multi-Method Assessment Fusion) quality comparisons of the encoded files against the source. VMAF was created by Netflix as a way to assess the quality of various codecs and bitrates: scores of 90 or above are "generally indistinguishable" from the source content, 80–90 is good, 70–80 is adequate, and below that, blocking and other artifacts become clearly visible.
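We haven't published the exact command lines, so the following Python sketch only illustrates how such a test might be scripted; it is not the set of commands we ran. It assumes FFmpeg's standard encoder names for each platform (libx264, libx265, and libsvtav1 on the CPU; h264_nvenc and hevc_nvenc on Nvidia; h264_amf and hevc_amf on AMD; h264_qsv, hevc_qsv, and av1_qsv on Intel), a single-pass encode at a target bitrate with audio dropped, and FFmpeg's libvmaf filter, which takes the encoded (distorted) clip as its first input and the source as its second. It also maps a VMAF score onto the quality bands described above. The helper functions and file names are hypothetical, and av1_qsv requires an FFmpeg build with Arc AV1 support.

```python
import shlex

# FFmpeg's standard encoder names for each platform/codec pair.
# Assumption: these mirror the encoders tested; exact commands weren't published.
ENCODERS = {
    ("cpu", "h264"): "libx264",
    ("cpu", "hevc"): "libx265",
    ("cpu", "av1"): "libsvtav1",
    ("nvidia", "h264"): "h264_nvenc",
    ("nvidia", "hevc"): "hevc_nvenc",
    ("amd", "h264"): "h264_amf",
    ("amd", "hevc"): "hevc_amf",
    ("intel", "h264"): "h264_qsv",
    ("intel", "hevc"): "hevc_qsv",
    ("intel", "av1"): "av1_qsv",  # needs an FFmpeg build with Arc AV1 support
}


def encode_cmd(src: str, dst: str, platform: str, codec: str, mbps: int) -> list[str]:
    """Single-pass encode at a target video bitrate, dropping audio (hypothetical helper)."""
    encoder = ENCODERS[(platform, codec)]
    return ["ffmpeg", "-y", "-i", src, "-c:v", encoder, "-b:v", f"{mbps}M", "-an", dst]


def vmaf_cmd(distorted: str, reference: str) -> list[str]:
    """VMAF comparison via FFmpeg's libvmaf filter (hypothetical helper).

    libvmaf treats the first input as the distorted (encoded) clip and the
    second as the reference (source); -f null discards the video output.
    """
    return ["ffmpeg", "-i", distorted, "-i", reference, "-lavfi", "libvmaf", "-f", "null", "-"]


def vmaf_band(score: float) -> str:
    """Map a VMAF score onto the quality bands described in the text."""
    if score >= 90:
        return "generally indistinguishable"
    if score >= 80:
        return "good"
    if score >= 70:
        return "adequate"
    return "visible blocking and artifacts"


# Example with hypothetical file names: build the commands without running them.
print(shlex.join(encode_cmd("source_1080p.mp4", "a380_av1_6M.mkv", "intel", "av1", 6)))
print(shlex.join(vmaf_cmd("a380_av1_6M.mkv", "source_1080p.mp4")))
print(vmaf_band(85.6))  # A380 AV1, 1080p at 6 Mbps
```

Returning argument lists rather than shell strings keeps the commands safe to pass to `subprocess.run` later; `shlex.join` is only used here to print them readably.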

We've provided the charts for encoding speed (FPS) and encoding quality (VMAF), along with tables of the raw data for those who want the exact numbers. Other encoding software may produce different results. We did try using HandBrake (Nightly) for some tests as well, but ultimately ran into some difficulties and found FFmpeg easier to use.

1080p video (Borderlands 3 benchmark):

| Encoder | FPS (3 Mbps) | VMAF (3 Mbps) | FPS (6 Mbps) | VMAF (6 Mbps) | FPS (8 Mbps) | VMAF (8 Mbps) |
|---|---|---|---|---|---|---|
| A380 H.264 | 480 | 76.4 | 477 | 81.11 | 478 | 91.31 |
| A380 H.265 | 510 | 84.74 | 509 | 88.71 | 515 | 95.12 |
| A380 AV1 | 497 | 82.5 | 502 | 85.6 | 521 | 93.4 |
| CPU H.264 | 238 | 74.19 | 213 | 81.69 | 198 | 92.05 |
| CPU H.265 | 95.9 | 81.51 | 80.25 | 85.09 | 73.5 | 93.08 |
| CPU AV1 | 224 | 84.76 | 191 | 86.93 | 193 | 93.42 |
| 1650 H.264 | 355 | 76.86 | 353 | 89.04 | 348 | 92.41 |
| 1650 H.265 | 460 | 83.02 | 455 | 91.02 | 451 | 93.49 |
| 3090 Ti H.264 | 439 | 77.38 | 441 | 82.94 | 437 | 92.62 |
| 3090 Ti H.265 | 396 | 83.75 | 388 | 87.08 | 385 | 94.07 |
| 6950 XT H.264 | 373 | 65.79 | 387 | 71.12 | 384 | 86.79 |
| 6950 XT H.265 | 364 | 79.02 | 374 | 81.39 | 362 | 91.72 |

4K video 1:

| Encoder | FPS (8 Mbps) | VMAF (8 Mbps) | FPS (12 Mbps) | VMAF (12 Mbps) | FPS (16 Mbps) | VMAF (16 Mbps) |
|---|---|---|---|---|---|---|
| A380 H.264 | 119 | 74.94 | 121 | 82.02 | 108 | 87.14 |
| A380 H.265 | 142 | 84.58 | 142 | 89.49 | 122 | 92.36 |
| A380 AV1 | 133 | 83.28 | 135 | 88.5 | 135 | 91.56 |
| CPU H.264 | 66 | 66.33 | 63 | 77.65 | 60 | 84.31 |
| CPU H.265 | 28 | 81.59 | 27.5 | 87.28 | 26.3 | 90.58 |
| CPU AV1 | 82 | 83.48 | 80 | 87.66 | 74 | 90.23 |
| 1650 H.264 | 95 | 71.53 | 94 | 80.67 | 92 | 86.38 |
| 1650 H.265 | 120 | 83.81 | 119 | 89.05 | 118 | 92.08 |
| 3090 Ti H.264 | 116 | 72.42 | 116 | 81.55 | 116 | 86.85 |
| 3090 Ti H.265 | 108 | 83.62 | 106 | 88.99 | 105 | 91.86 |
| 6950 XT H.264 | 121 | 60.66 | 120 | 72.78 | 121 | 80.4 |
| 6950 XT H.265 | 121 | 78.69 | 120 | 85.57 | 121 | 89.59 |

4K video 2:

| Encoder | FPS (8 Mbps) | VMAF (8 Mbps) | FPS (12 Mbps) | VMAF (12 Mbps) | FPS (16 Mbps) | VMAF (16 Mbps) |
|---|---|---|---|---|---|---|
| A380 H.264 | 120 | 76.3 | 121 | 82.44 | 121 | 86.62 |
| A380 H.265 | 142 | 84.03 | 143 | 88.15 | 143 | 90.8 |
| A380 AV1 | 138 | 84 | 137 | 88.53 | 138 | 91.16 |
| CPU H.264 | 63 | 69.45 | 59 | 80.19 | 57 | 86.07 |
| CPU H.265 | 27.1 | 83.9 | 25.5 | 88.6 | 24.2 | 91.29 |
| CPU AV1 | 88 | 83.99 | 84 | 87.89 | 81 | 90.24 |
| 1650 H.264 | 95 | 73.81 | 94 | 81.85 | 94 | 86.4 |
| 1650 H.265 | 121 | 85.43 | 120 | 89.83 | 120 | 92.32 |
| 3090 Ti H.264 | 116 | 74.59 | 116 | 82.53 | 115 | 86.97 |
| 3090 Ti H.265 | 109 | 84.11 | 109 | 88.52 | 107 | 90.56 |
| 6950 XT H.264 | 119 | 65.51 | 121 | 75.88 | 121 | 82.12 |
| 6950 XT H.265 | 122 | 80.36 | 121 | 86.5 | 121 | 89.87 |

4K video 3 (Saints Row):

| Encoder | FPS (8 Mbps) | VMAF (8 Mbps) | FPS (12 Mbps) | VMAF (12 Mbps) | FPS (16 Mbps) | VMAF (16 Mbps) |
|---|---|---|---|---|---|---|
| A380 H.264 | 119 | 70.28 | 119 | 76.2 | 118 | 81.09 |
| A380 H.265 | 138 | 79.33 | 138 | 84.24 | 139 | 87.59 |
| A380 AV1 | 128 | 79.68 | 129 | 84.64 | 130 | 87.73 |
| CPU H.264 | 63 | 64.62 | 59 | 73.75 | 57 | 79.94 |
| CPU H.265 | 23.9 | 78.2 | 23.2 | 84 | 22 | 86.78 |
| CPU AV1 | 83 | 78.39 | 73 | 83.06 | 69 | 86.07 |
| 1650 H.264 | 97 | 66.35 | 96 | 73.08 | 95 | 78.27 |
| 1650 H.265 | 121 | 80.27 | 120 | 85.17 | 119 | 88.26 |
| 3090 Ti H.264 | 117 | 66.99 | 117 | 74.44 | 116 | 79.67 |
| 3090 Ti H.265 | 113 | 78.42 | 111 | 83.47 | 110 | 86.53 |
| 6950 XT H.264 | 121 | 66.9 | 122 | 69.6 | 122 | 74.92 |
| 6950 XT H.265 | 121 | 74.38 | 121 | 80.22 | 122 | 84.33 |

One thing that's immediately obvious is just how bad AMD's H.264 encoding is compared to the competition. Performance is fine, but it consistently places last in quality. The only exception was Saints Row 4K at 8 Mbps, where it essentially tied Nvidia's H.264 results and beat the libx264 CPU encode.
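A small Python sketch makes the pattern easy to see by ranking the H.264 encoders by VMAF. The 1080p scores are the 6 Mbps column of the first table, where AMD is last as usual; the 4K scores are the 8 Mbps column of the fourth table, the Saints Row result described above and the one case where AMD isn't last. The helper name `h264_ranking` is our own.

```python
# H.264 VMAF scores copied from the tables above.
# 1080p at 6 Mbps (first table): AMD's H.264 encode is last.
H264_1080P_6M = {
    "A380 H.264": 81.11,
    "CPU H.264": 81.69,
    "1650 H.264": 89.04,
    "3090 Ti H.264": 82.94,
    "6950 XT H.264": 71.12,
}

# 4K at 8 Mbps (fourth table): the one exception.
H264_4K_8M = {
    "A380 H.264": 70.28,
    "CPU H.264": 64.62,
    "1650 H.264": 66.35,
    "3090 Ti H.264": 66.99,
    "6950 XT H.264": 66.9,
}


def h264_ranking(scores: dict[str, float]) -> list[str]:
    """Return encoder names sorted from lowest to highest VMAF (worst first)."""
    return sorted(scores, key=scores.get)


for label, scores in (("1080p 6 Mbps", H264_1080P_6M), ("4K 8 Mbps", H264_4K_8M)):
    print(label, "worst to best:", h264_ranking(scores))
```

In the 4K 8 Mbps case, the RX 6950 XT lands in the middle of the pack, within about 0.1 VMAF of the RTX 3090 Ti and ahead of both the GTX 1650 and libx264.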

What you might not have expected is that Intel's AV1 GPU encoding only roughly matches the quality that Intel and Nvidia deliver with HEVC (H.265). The problem with HEVC, as mentioned earlier, is that it's not royalty-free. That's theoretically also true of H.264, but its royalties generally weren't as steep, and perhaps weren't even collected at all. AV1 and VP9 are royalty-free alternatives to HEVC, so they're more likely to win out over the long term.

YouTube has begun experimenting with AV1 as a slightly higher-quality alternative to VP9. Twitch and other services may also begin supporting AV1 streaming, which means, for example, that you could stream 1080p at 3 Mbps and get quality comparable to most H.264 encoders at 6 Mbps. At 4K, the A380's 8 Mbps AV1 encodes beat every H.264 encode at 12 Mbps in our tests, and its 12 Mbps AV1 encodes beat every H.264 encode at 16 Mbps.

As far as encoding performance goes, all of the GPUs are quite a bit faster than even the fastest (current) CPU, with the A380 roughly doubling the performance of the 12900K with H.264 and AV1, and delivering roughly five to seven times the throughput with H.265. More importantly, the encoding happens in a dedicated Quick Sync Video (QSV) unit on the Intel GPU, so it shouldn't impact gaming performance in any meaningful way.
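The speedup figures can be computed straight from the 1080p table. This Python sketch divides the A380's fps by the 12900K's fps at each bitrate (3, 6, and 8 Mbps) for each codec; the dictionary layout and function name are our own.

```python
# 1080p throughput (fps) at 3, 6, and 8 Mbps, copied from the first table.
FPS_1080P = {
    "A380 H.264": [480, 477, 478],
    "CPU H.264": [238, 213, 198],
    "A380 H.265": [510, 509, 515],
    "CPU H.265": [95.9, 80.25, 73.5],
    "A380 AV1": [497, 502, 521],
    "CPU AV1": [224, 191, 193],
}


def speedups(gpu: str, cpu: str) -> list[float]:
    """A380 fps divided by 12900K fps at each bitrate, rounded to two decimals."""
    return [round(g / c, 2) for g, c in zip(FPS_1080P[gpu], FPS_1080P[cpu])]


for codec in ("H.264", "H.265", "AV1"):
    print(codec, speedups(f"A380 {codec}", f"CPU {codec}"))
```

For the 1080p clip, that works out to roughly 2.0–2.4x for H.264, 2.2–2.7x for AV1, and 5.3–7.0x for H.265, where the libx265 CPU encode is by far the slowest.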

We can mostly say the same for the AMD and Nvidia encoders, though Nvidia's NVENC has slightly higher performance than AMD's VCE at 1080p and lower performance at 4K. Both are well past the 60 fps mark, however, which is really all that's needed for real-time video streaming.

An interesting side note is the GTX 1650 (TU117) encoding performance and quality. H.264 clearly got faster with Turing and Ampere, but the quality didn't change much. H.265 encoding meanwhile was actually faster on the 1650, with generally slightly higher quality as well. (Incidentally, we also tested a GTX 1650 Super with TU116 and saw virtually identical results to the RTX 3090 Ti.) Our encoding fps metric doesn't include gaming in the background, however, and we understand Turing also reduced the hit to gaming fps if you're livestreaming.

As for CPU encoding, 1080p using the latest Intel SVT-AV1 encoder and FFmpeg isn't actually too shabby: on a CPU like the 12900K, 1080p could easily be handled by the "efficiency" E-cores at 60 fps or more. 4K, on the other hand, would use almost the entire CPU to get throughput of anywhere from 69 to 88 fps, depending on the bitrate and video content, so 4K livestreaming with just the CPU would be difficult to achieve in many games. The upcoming Raptor Lake CPUs, with double the number of E-cores, should prove sufficient, assuming the software is smart enough to leave the P-cores for the game.

For those who want to see raw comparisons of the various encodes, we've provided a gallery of 27 images from the 1080p video, which consists of the Borderlands 3 built-in benchmark. These are all taken from the 6 Mbps encodes, a sort of "balanced" mode for 1080p. You can clearly see the compression artifacts on the H.264 encodes, while HEVC (H.265) and AV1 look much closer to the source material. The lower-bitrate 3 Mbps encodes looked substantially worse, as you'd expect.

If you'd like to see the original video files, without any compression, I've created this personally hosted BitTorrent magnet link:

magnet:?xt=urn:btih:df241d4b80b51ebe47e82fa275385a0fde083bd6&dn=Tom%27s%20Hardware%20Video%20Encodes

For those who are interested, copying that into a BitTorrent client like qBittorrent should do the trick. (If not, let me know in the comments.) I'll try to keep it seeded for at least the next month or so for anyone who really wants to view the results. It's 7.65 GiB of data, spread over 124 files. Seed if you can.


Current page: Intel Arc A380 Video Encoding Performance and Quality


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


cyrusfox

Thanks for putting up a review on this. I'm really looking for Adobe Suite performance in Photoshop and Lightroom. In my experience, even with a top-of-the-line CPU (12900K), it chugs through some GPU-heavy tasks, and I was hoping Arc might already be optimized for that.

brandonjclark

While it's pretty much what I expected, remember that Intel has DEEP DEEP pockets. If they stick with this division, they'll work it out, and pretty soon we'll have three serious competitors.

Giroro

What settings were used for the CPU comparison encodes? I would think that the CPU encode should always be able to provide the highest quality, but possibly with unacceptable performance.

I'm also having a hard time reading the charts. Is the GTX 1650 the dashed hollow blue line or the solid hollow blue line?

A good encoder at the lowest price is not a bad option for me to have. However, I don't have much faith that Intel will get its drivers in a good enough state before the next generation of GPUs.

JarredWaltonGPU

Are you viewing on a phone or a PC? Because I know our mobile experience can be... lacking, especially for data-dense charts. On PC, you can click the arrow in the bottom-right to get the full-size charts, or at least a larger view, where you can then click the "view original" option in the bottom-right. Here are the four line charts, in full resolution, if that helps:

https://cdn.mos.cms.futurecdn.net/dVSjCCgGHPoBrgScHU36vM.png

https://cdn.mos.cms.futurecdn.net/hGy9QffWHov4rY6XwKQTmM.png

https://cdn.mos.cms.futurecdn.net/d2zv239egLP9dwfKPSDh5N.png

https://cdn.mos.cms.futurecdn.net/PGkuG8uq25fNU7o7M8GbEN.png

The GTX 1650 is a hollow dark blue dashed line. The AMD GPU is the hollow solid line, CPU is dots, A380 is solid filled line, and Nvidia RTX 3090 Ti (or really, Turing encoder) is solid dashes. I had to switch to dashes and dots and such because the colors (for 12 lines in one chart) were also difficult to distinguish from each other, and I included the tables of the raw data just to help clarify what the various scores were if the lines still weren't entirely sensible. LOL

As for the CPU encoding, it was done with the same constraints as the GPU: single pass and the specified bitrate, which is generally how you would set things up for streaming (AFAIK, because I'm not really a streamer). 2-pass encoding can greatly improve quality, but of course it takes about twice as long and can't be done with livestreaming. I did not look into other options that might improve the quality at the cost of CPU encoding time, and I also didn't look if there were other options that could improve the GPU encoding quality.

I suspect Arc won't help much at all with Photoshop or Lightroom compared to whatever GPU you're currently using (unless you're using integrated graphics, I suppose). Adobe's CC apps have GPU-accelerated functions for certain tasks, but complex stuff still chugs pretty badly in my experience. If you want to export to AV1, though, I think there's a way to get that into Premiere Pro, and the Arc could greatly increase the encoding speed.

magbarn

Wow, 50% larger die size (much more expensive for Intel vs. AMD), and it performs much worse than the 6500 XT. Stick a fork in Arc, it's done.

Giroro

I'm viewing on PC; the graph legend just shows a very similar blue oval for both cards.

JarredWaltonGPU

Much of the die size probably gets taken up by XMX cores, QuickSync, DisplayPort 2.0, etc. But yeah, it doesn't seem particularly small considering the performance. I can't help but think that with fully optimized drivers, performance could improve another 25%, but who knows if we'll ever get such drivers?

waltc3

Considering what you had to work with, I thought this was a decent GPU review. Just a few points that occurred to me while reading...

I wouldn't be surprised to see Intel once again take its marbles and go home and pull the ARCs altogether, as Intel did decades back with its ill-fated acquisition of Real3D. They are probably hoping to push it at a loss at retail to get some of their money back, but I think they will be disappointed when that doesn't happen. As far as another competitor in the GPU markets goes, yes, having a solid competitor come in would be a good thing, indeed, but only if the product meant to compete actually competes. This one does not. ATi/AMD have decades of experience in the designing and manufacturing of GPUs, as does nVidia, and in the software they require, and the thought that Intel could immediately equal either company's products enough to compete--even after five years of R&D on ARC--doesn't seem particularly sound, to me. So I'm not surprised, as it's exactly what I thought it would amount to.

I wondered why you didn't test with an AMD CPU...was that a condition set by Intel for the review? Not that it matters, but it seems silly, and I wonder if it would have made a difference of some kind. I thought the review was fine as far as it goes, but one thing that I felt was unnecessarily confusing was the comparison of the A380 in "ray tracing" with much more expensive nVidia solutions. You started off restricting the A380 to the 1650/Super, which doesn't ray trace at all, and the entry-level AMD GPUs, which do (but not to any desirable degree, imo)--which was fine as they are very closely priced. But then you went off on a tangent with 3060s, 3050s, 2080s, etc. because of "ray tracing"--which I cannot believe the A380 is any good at doing at all.

The only thing I can say that might be a little illuminating is that Intel can call its cores and rt hardware whatever it wants to call them, but what matters is the image quality and the performance at the end of the day. I think Intel used the term "tensor core" to make it appear to be using "tensor cores" like those in the RTX 2000/3000 series, when they are not the identical tensor cores at all...;) I was glad to see the notation because it demonstrates that anyone can make his own "tensor core" as "tensor" is just math. I do appreciate Intel doing this because it draws attention to the fact that "tensor cores" are not unique to nVidia, and that anyone can make them, actually--and call them anything they want--like for instance "raytrace cores"...;) -

JarredWaltonGPU

Intel seems committed to doing dedicated GPUs, and it makes sense. The data center and supercomputer markets all basically use GPU-like hardware. Battlemage is supposedly well underway in development, and if Intel can iterate and get the cards out next year, with better drivers, things could get a lot more interesting. It might lose billions on Arc Alchemist, but if it can pave the way for future GPUs that end up in supercomputers in five years, that will ultimately be a big win for Intel. It could have tried to make something less GPU-like and just gone for straight compute, but then porting existing GPU programs to the design would have been more difficult, and Intel might actually (maybe) think graphics is becoming important.

Intel set no conditions on the review. We purchased this card, via a go-between, from China — for WAY more than the card is worth, and then it took nearly two months to get things sorted out and have the card arrive. That sucked. If you read the ray tracing section, you'll see why I did the comparison. It's not great, but it matches an RX 6500 XT and perhaps indicates Intel's RTUs are better than AMD's Ray Accelerators, and maybe even better than Nvidia's Ampere RT cores — except Nvidia has a lot more RT cores than Arc has RTUs. I restricted testing to cards priced similarly, plus the next step up, which is why the RTX 2060/3050 and RX 6600 are included.


Tensor cores refer to a specific type of hardware matrix unit. Google has TPUs, and various other companies are also making tensor core-like hardware. TensorFlow is a popular tool for AI workloads, which is why the "tensor cores" name came into being, AFAIK. Intel calls them Xe Matrix Extensions (XMX), but the same principles apply: lots of matrix math, focusing especially on multiply and accumulate, as that's what AI training tends to use. But tensor cores have literally nothing to do with "raytrace cores," which need to take DirectX Raytracing structures (or Vulkan ray tracing) to be at all useful.

escksu

The ray tracing shows good promise. The video encoder is the best. 3D performance is meh, but still good enough for light gaming.

If its retail price is indeed what's shown, then I believe it will sell. Of course, Intel won't make much (if any) money from these cards.