The Arc A750 is primarily a 1080p gaming solution, and perhaps a 1440p one. So we'll start with 1080p ultra, then 1440p ultra, and then move on to 1080p medium and 4K ultra. Those last two are mostly there to show the full range of performance, though CPU bottlenecks tend to come into play at 1080p medium while 4K ultra mostly requires far more potent GPUs.

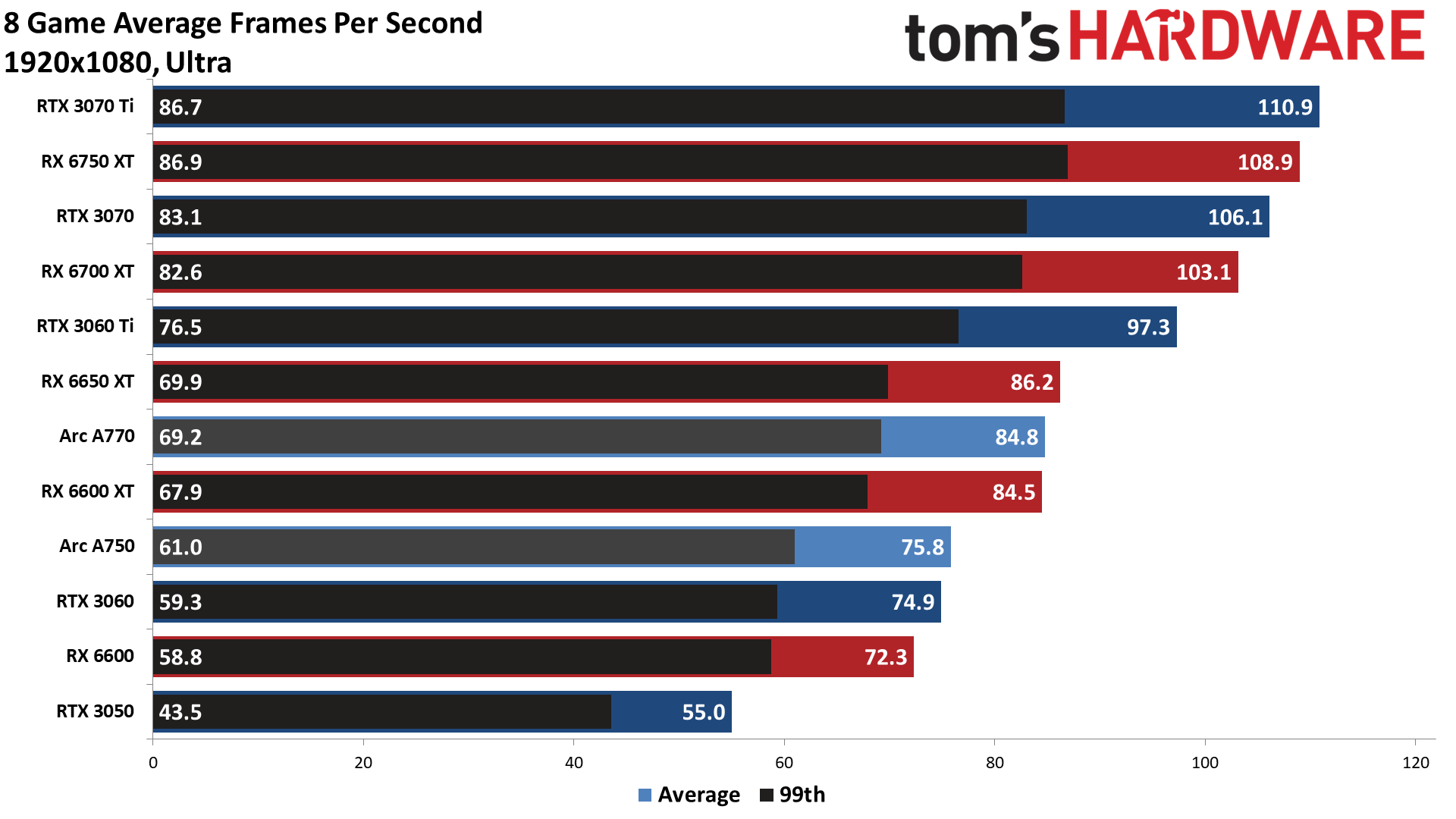

At 1080p ultra, the Arc A750 just barely edges past the RTX 3060, with 76 fps across our test suite compared to 75 fps. That also puts it a bit ahead of AMD's less expensive RX 6600, while the RX 6600 XT and 6650 XT are over 10% faster. The A750 also rips past the RTX 3050, delivering nearly 40% better performance on average.
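As a rough illustration of how a lead like this is worked out, the sketch below turns the two suite averages quoted above (76 fps and 75 fps) into a percentage. The function name `percent_faster` is a hypothetical helper for this example, not something from our test tooling.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """Return how much faster card A is than card B, in percent.

    A negative result means card A is slower than card B.
    """
    if fps_b <= 0:
        raise ValueError("fps_b must be positive")
    return (fps_a / fps_b - 1.0) * 100.0


# Suite averages at 1080p ultra, taken from the text above.
a750_avg = 76.0
rtx3060_avg = 75.0

lead = percent_faster(a750_avg, rtx3060_avg)
print(f"A750 lead over RTX 3060: {lead:.1f}%")  # prints "A750 lead over RTX 3060: 1.3%"
```

A one-frame gap at these framerates works out to a lead of a little over 1%, which is why "just barely edges past" is the right way to describe it.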

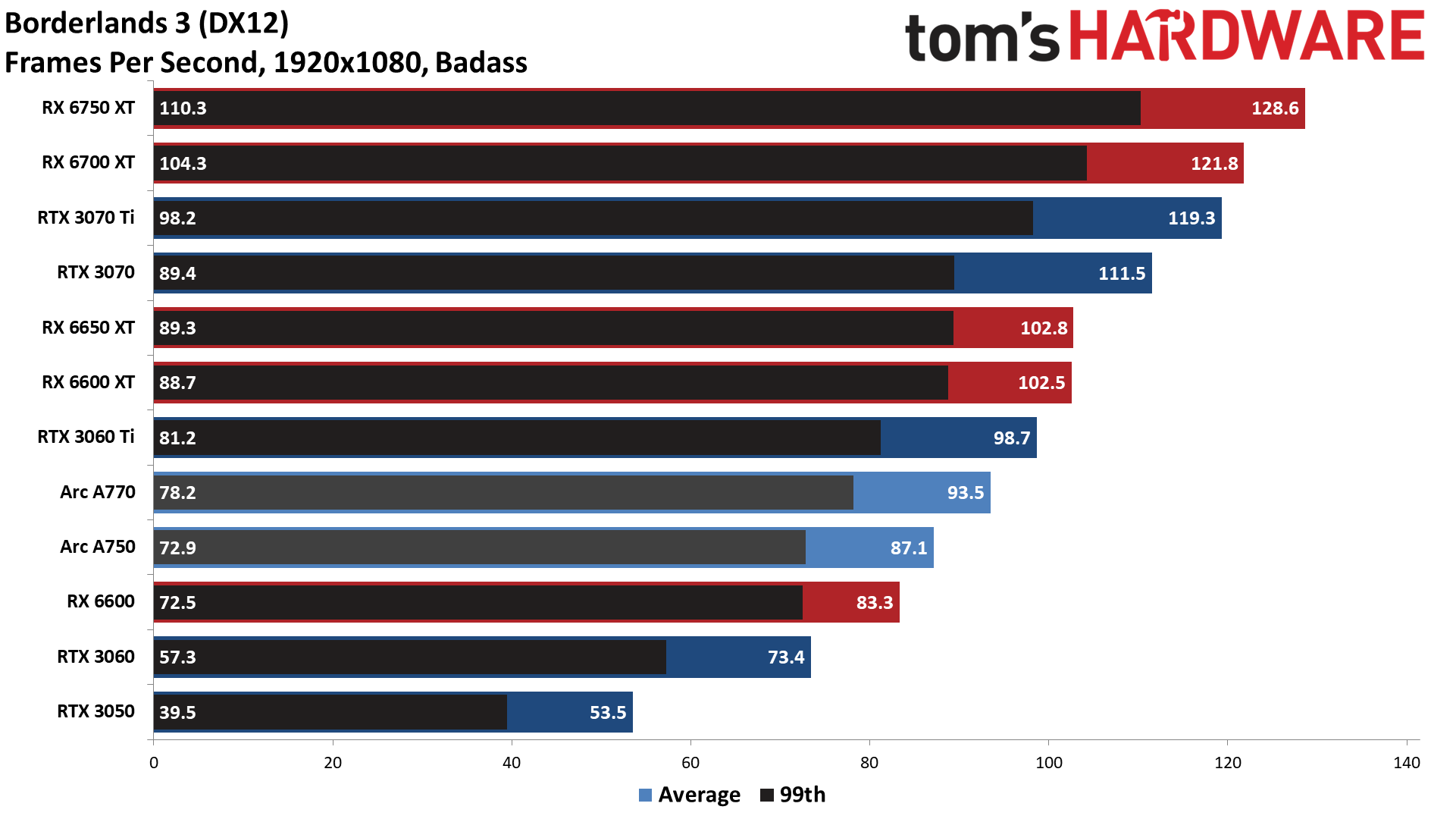

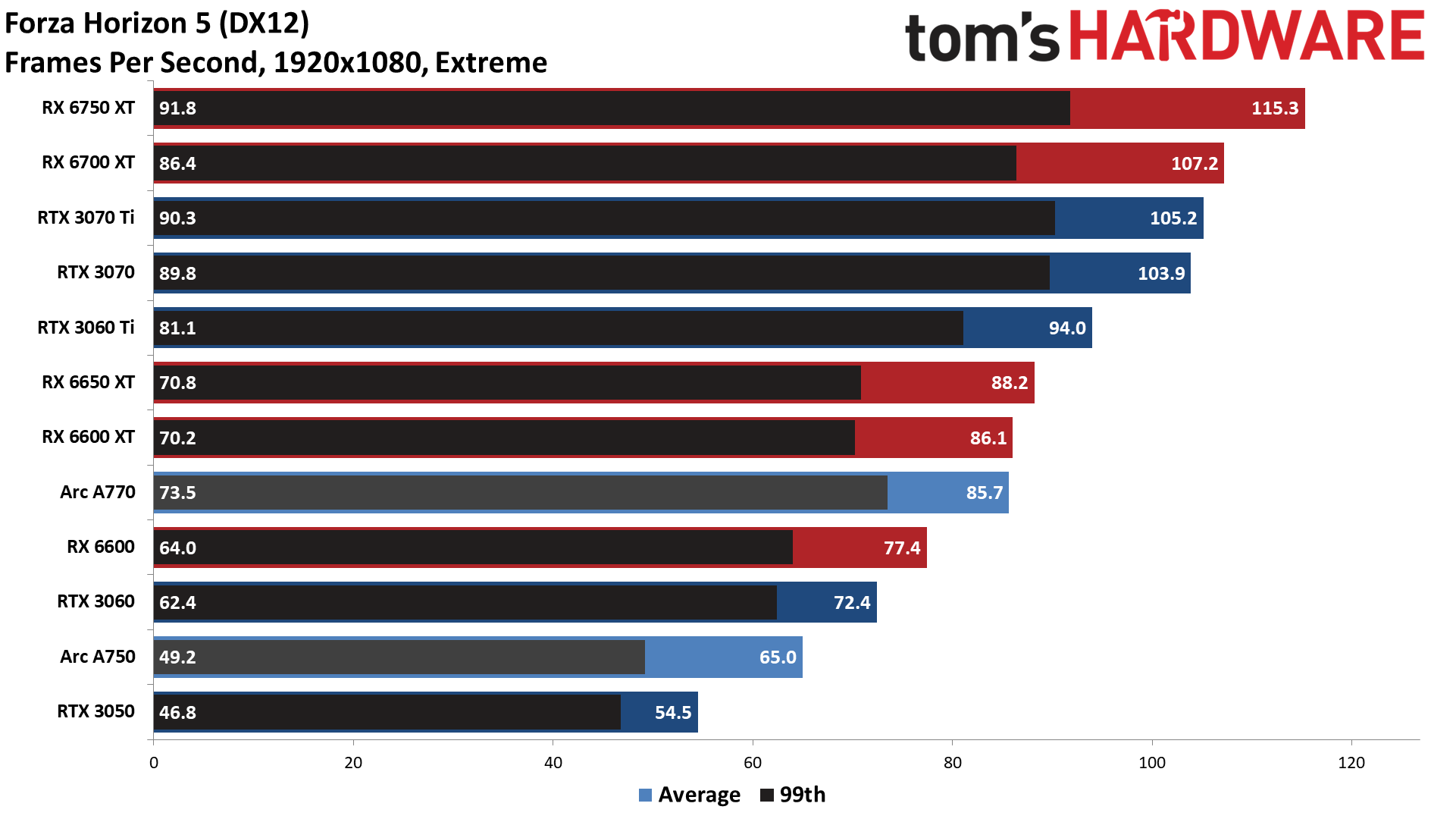

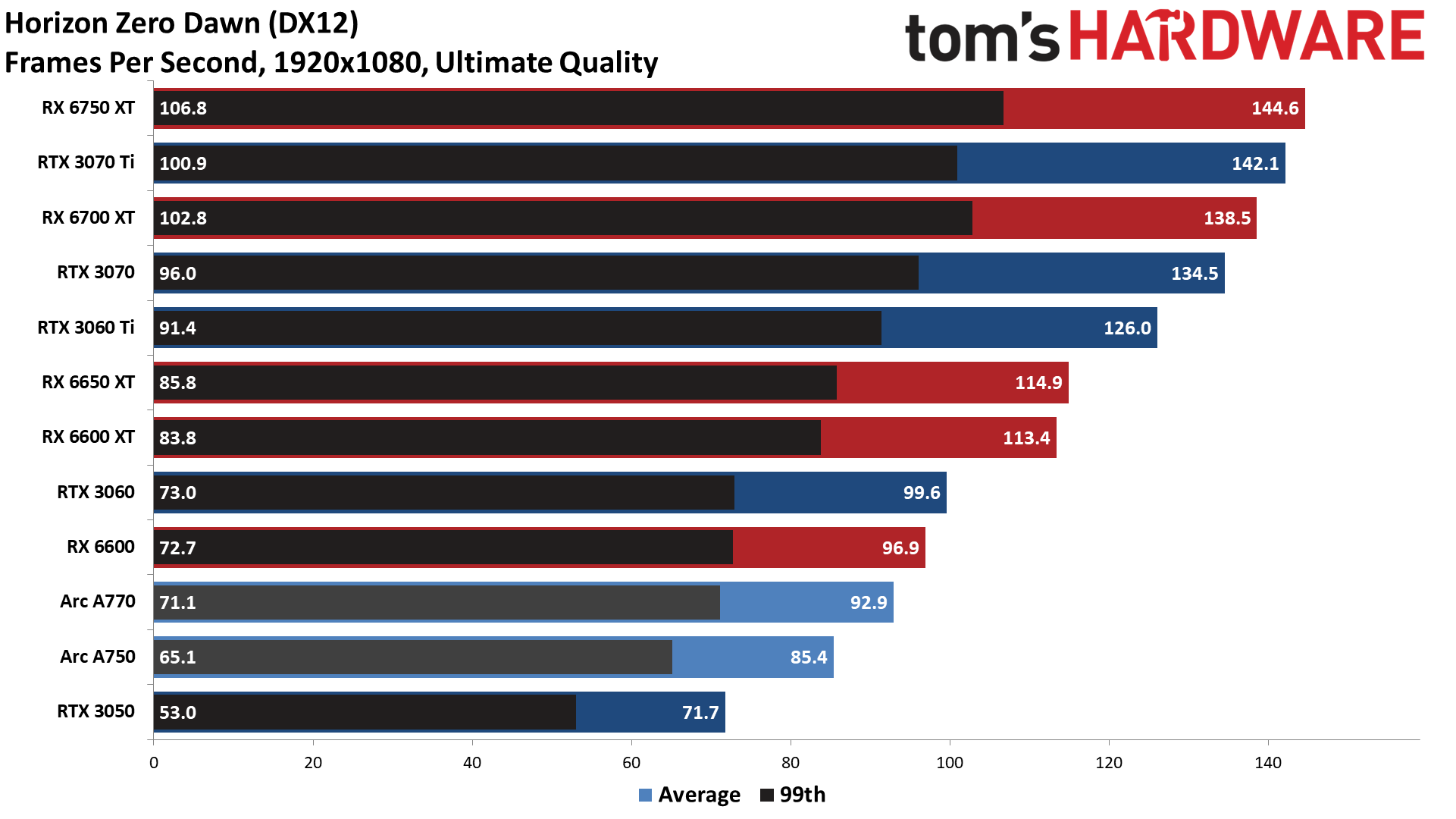

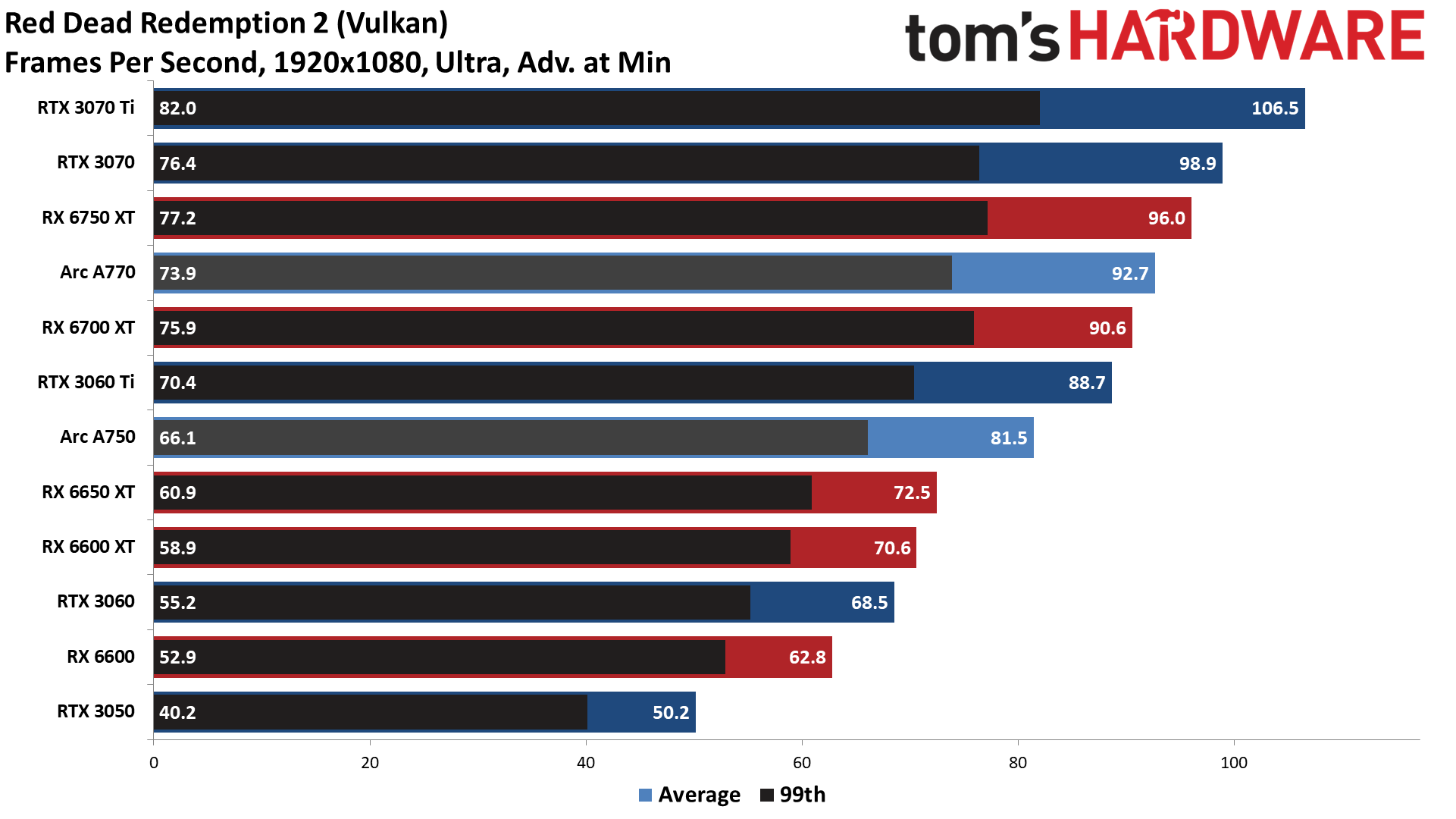

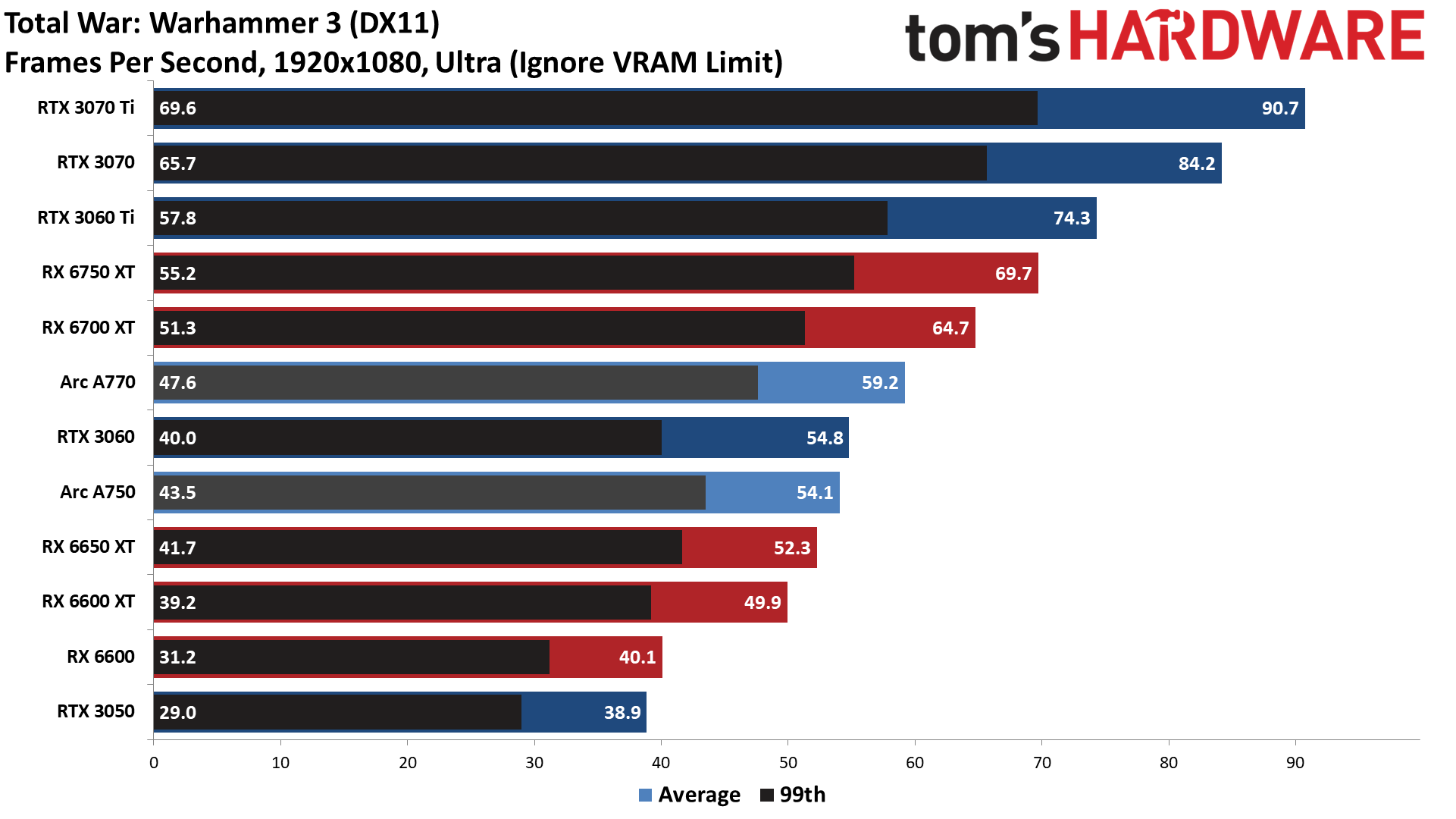

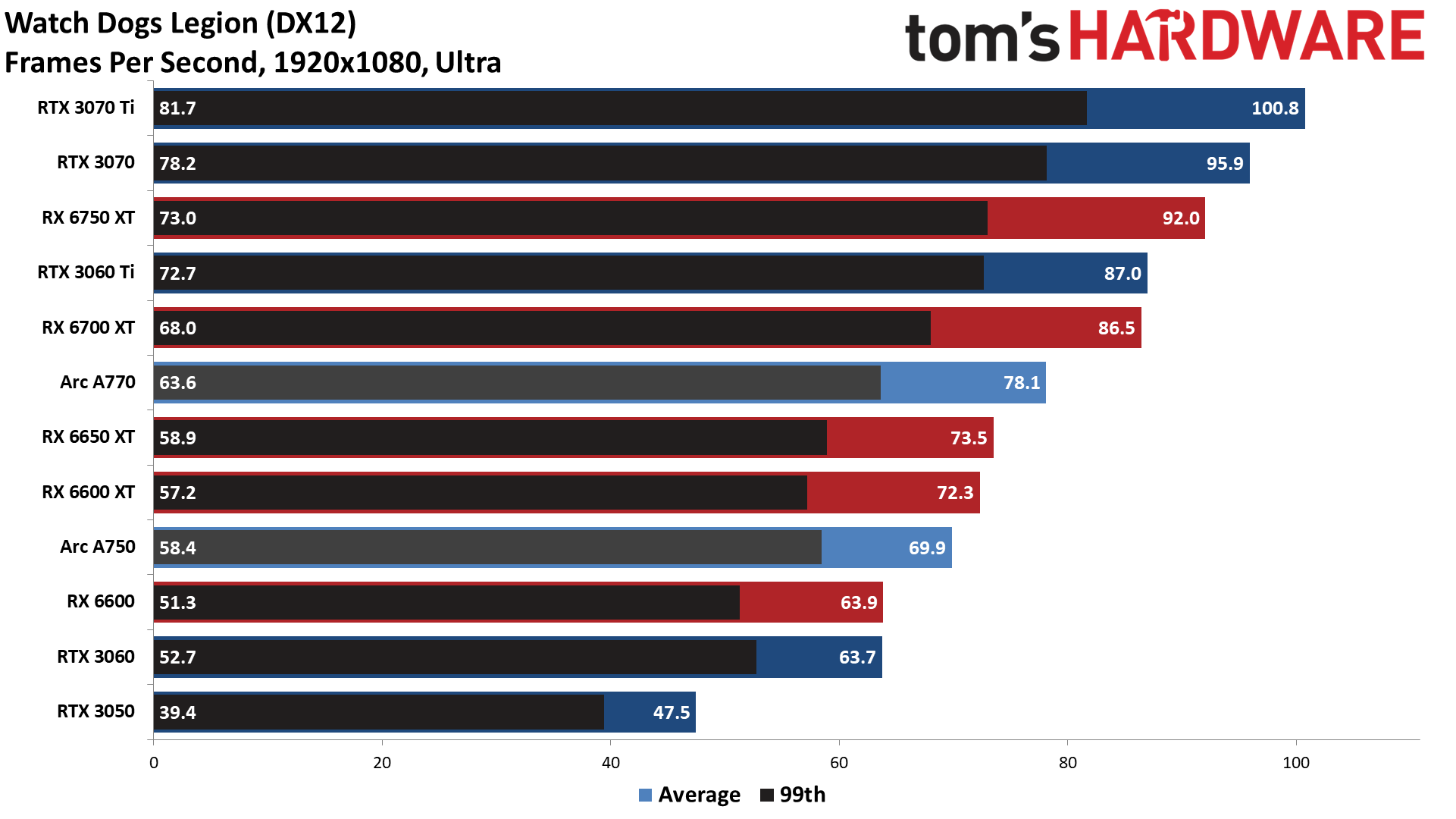

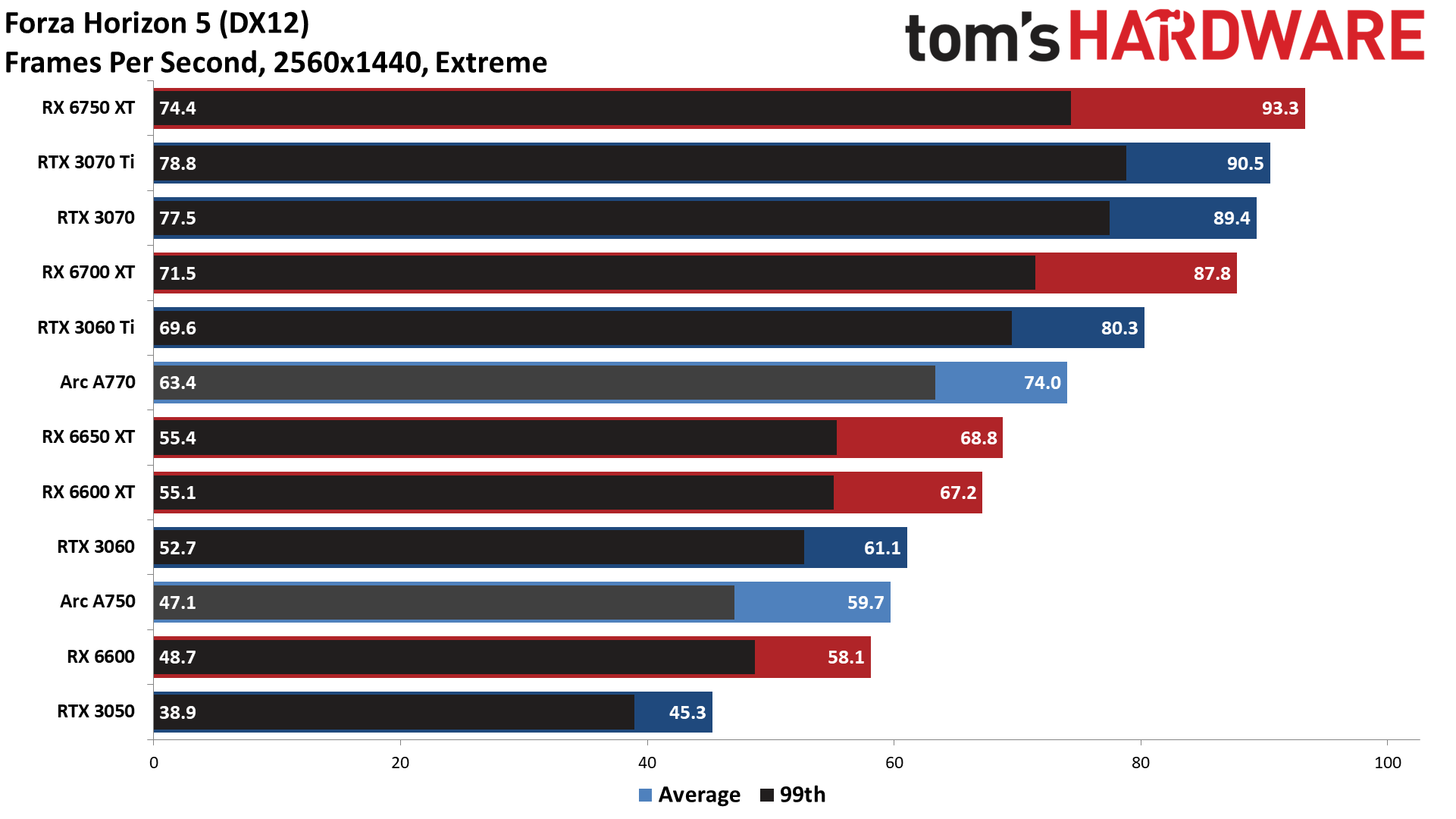

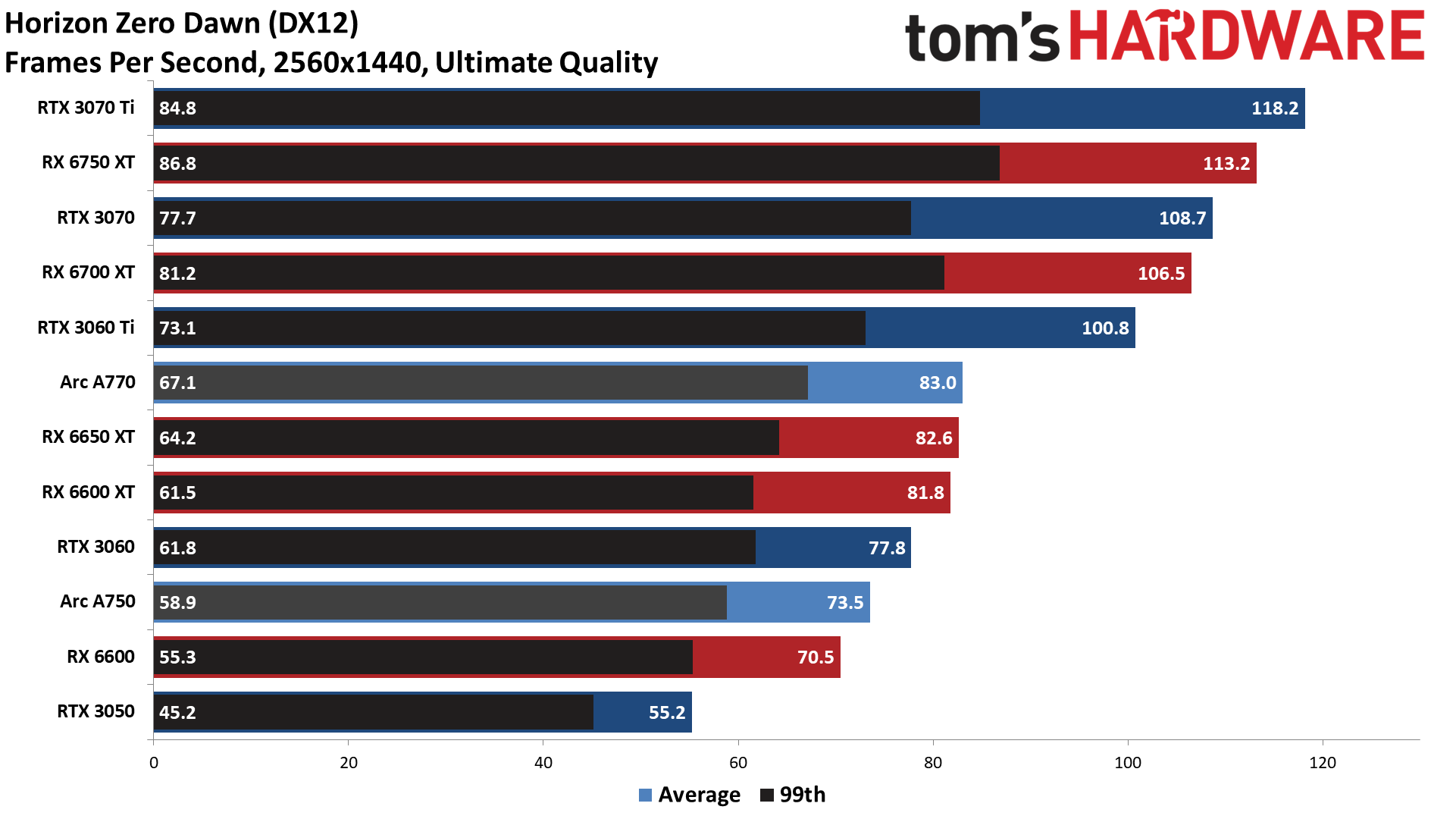

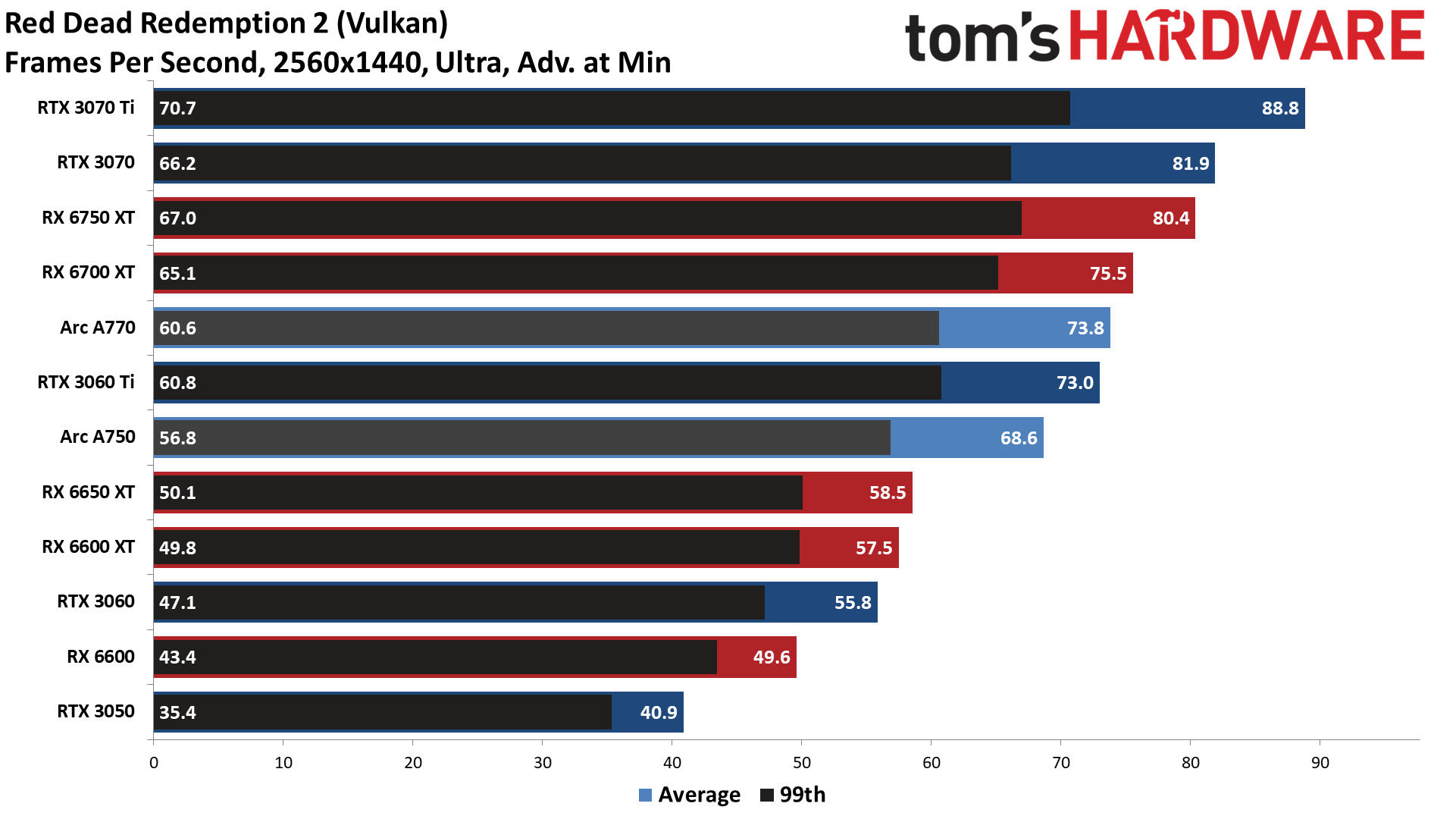

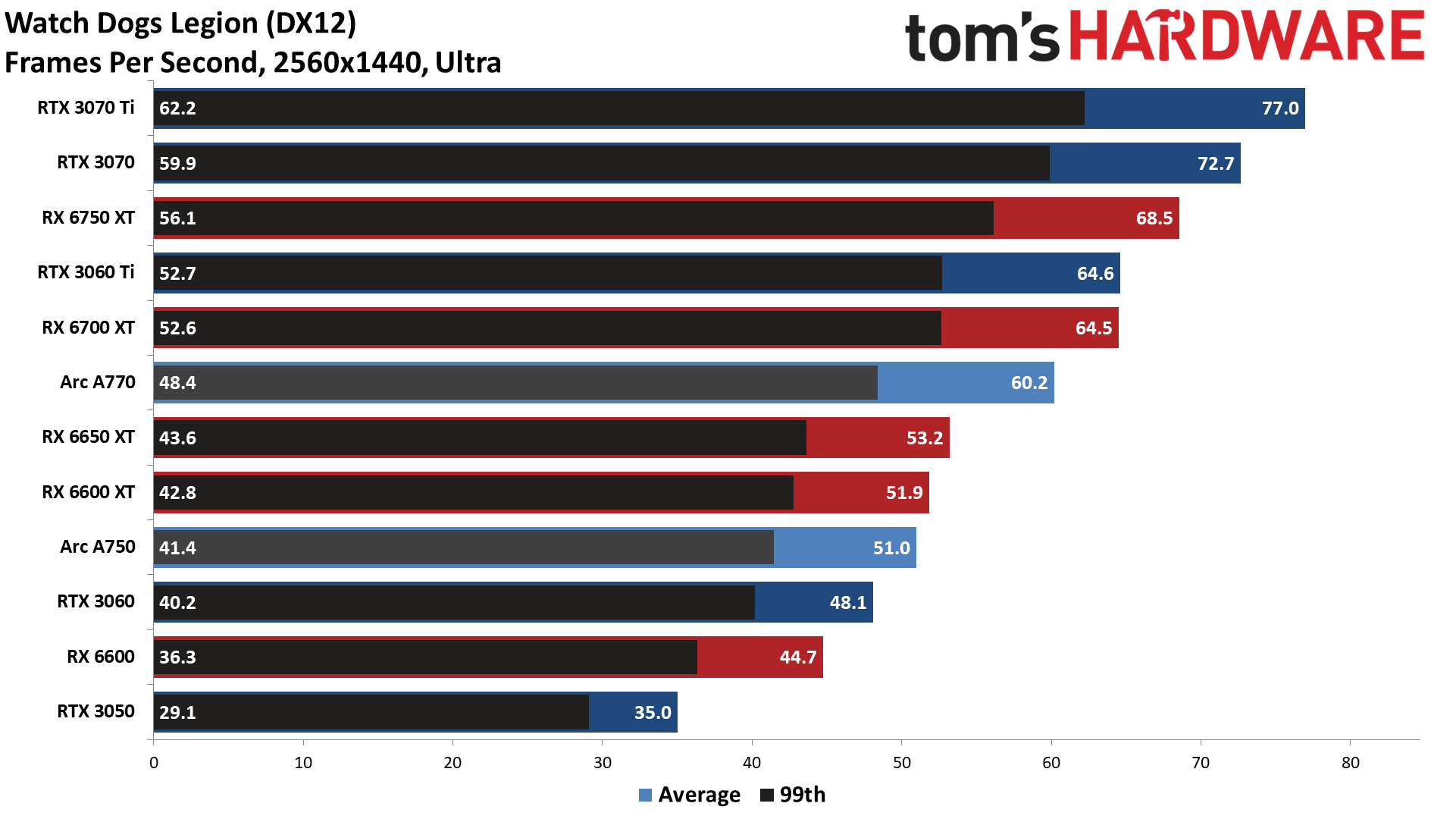

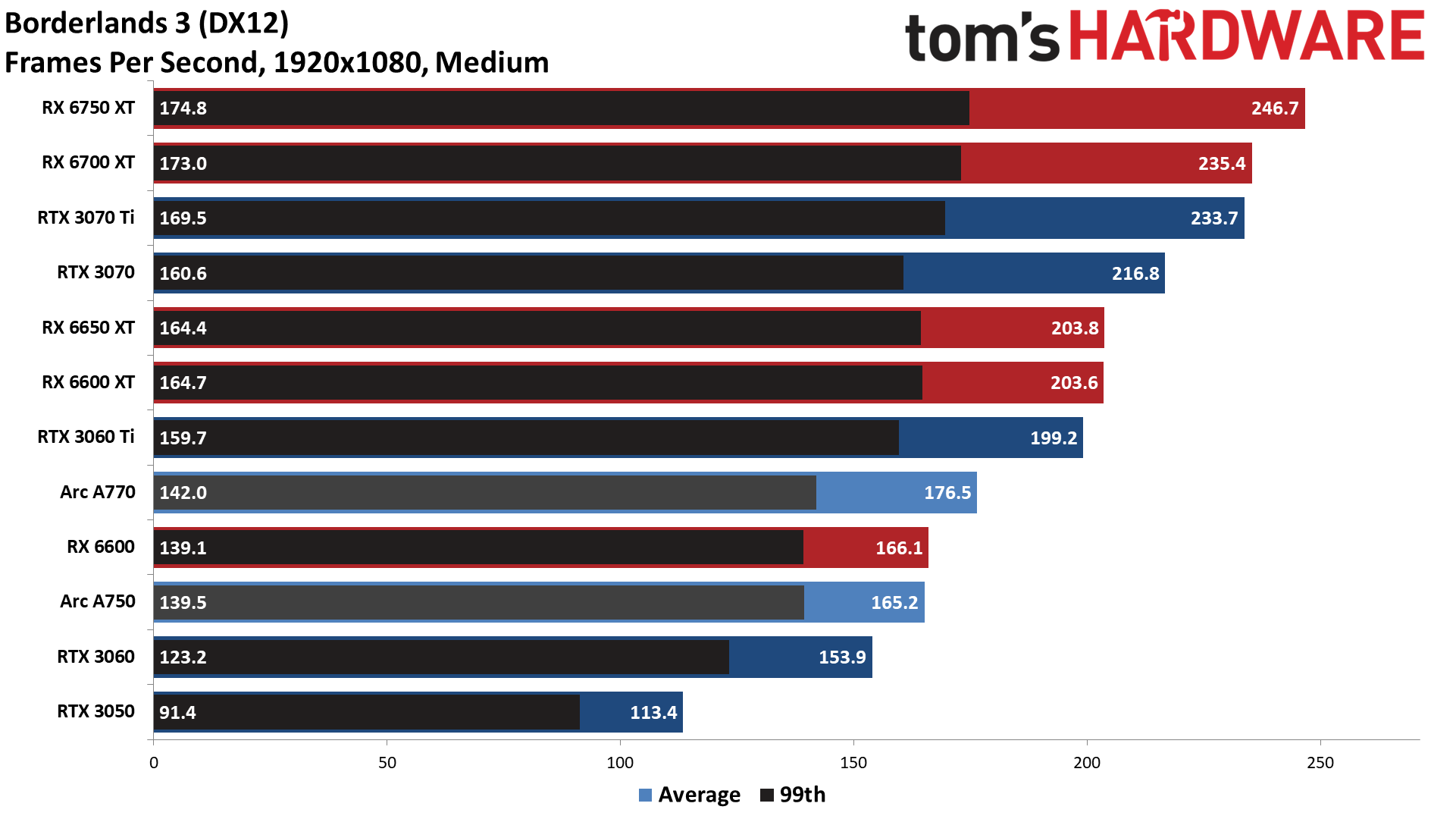

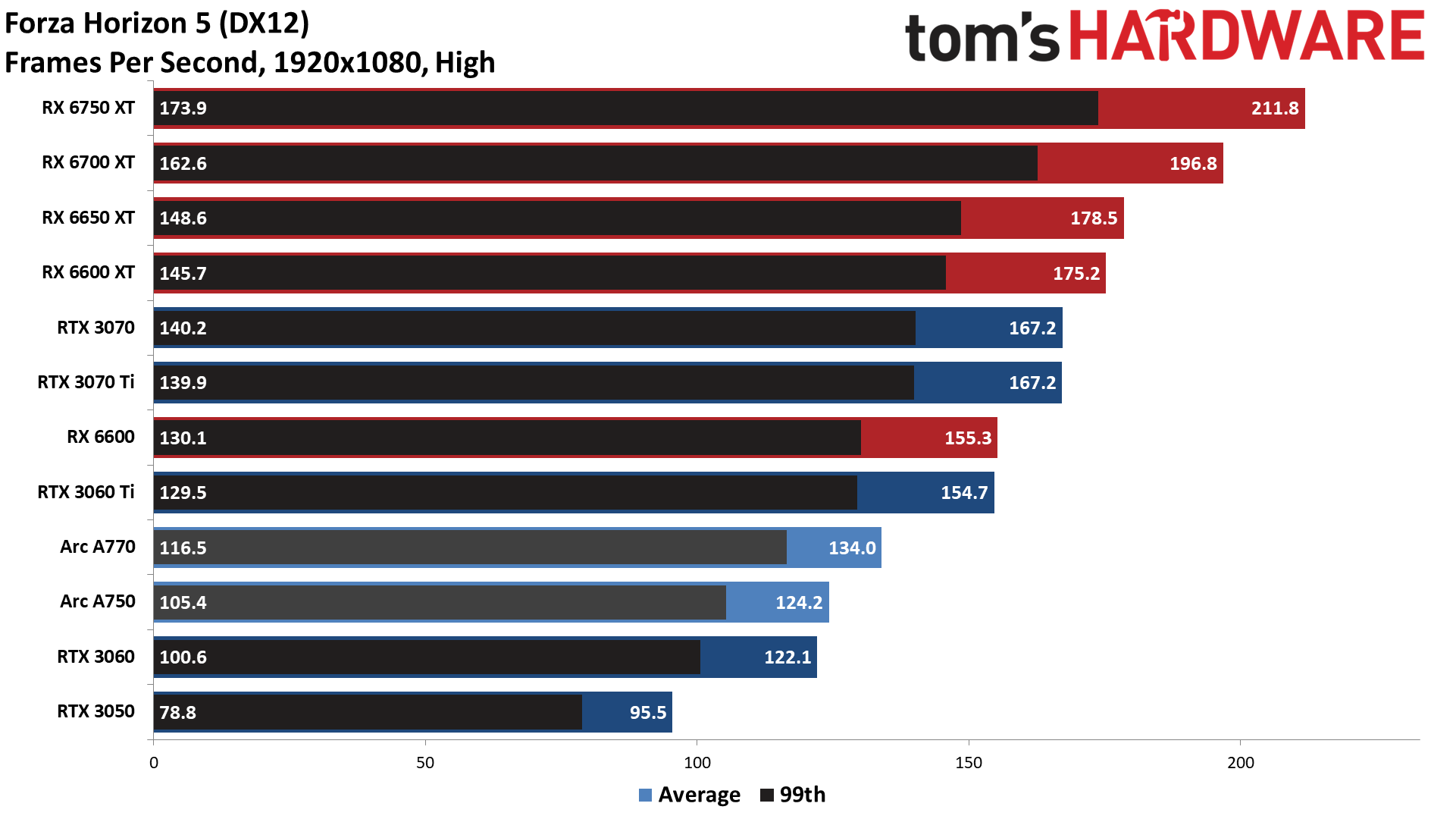

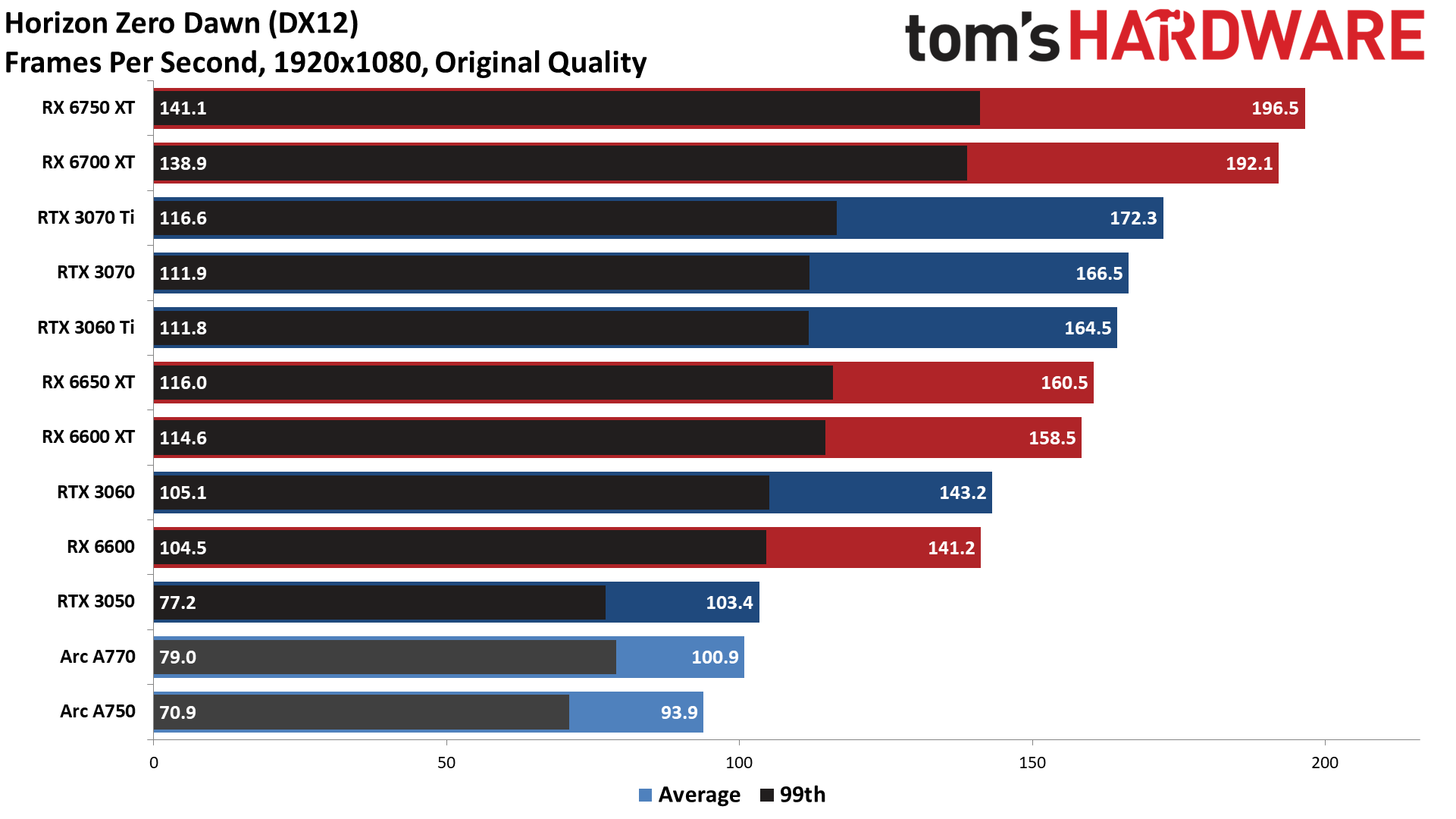

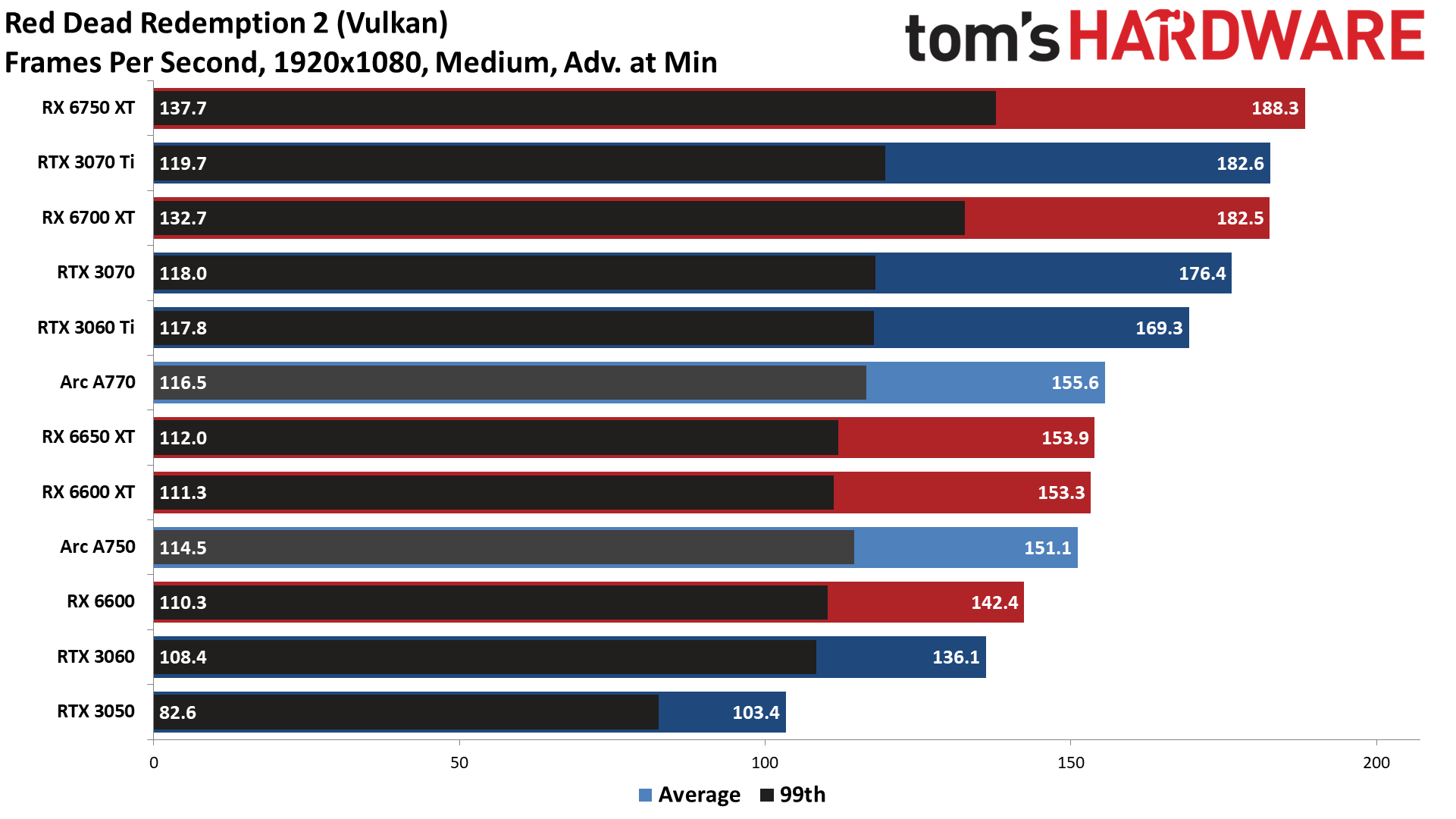

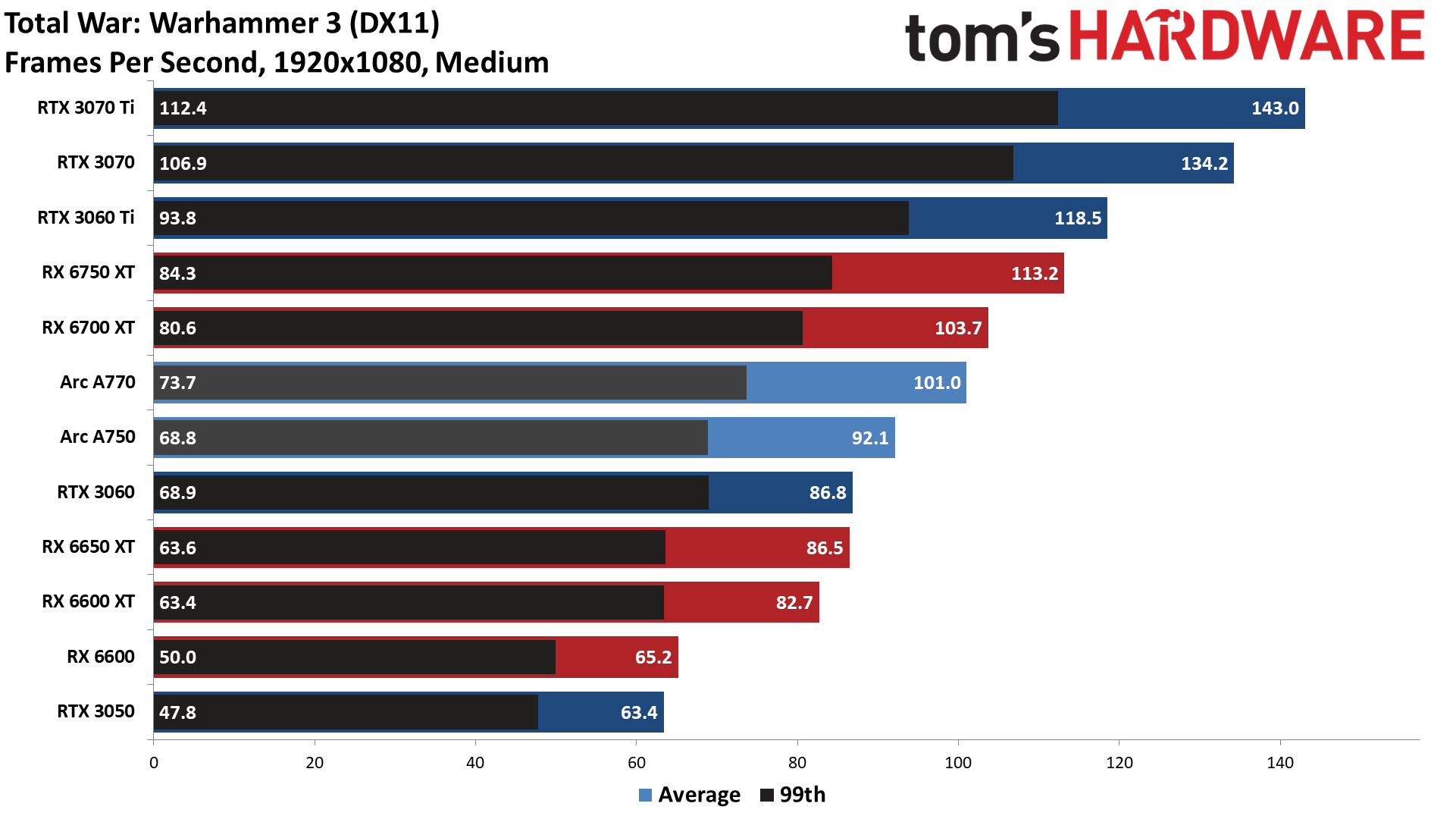

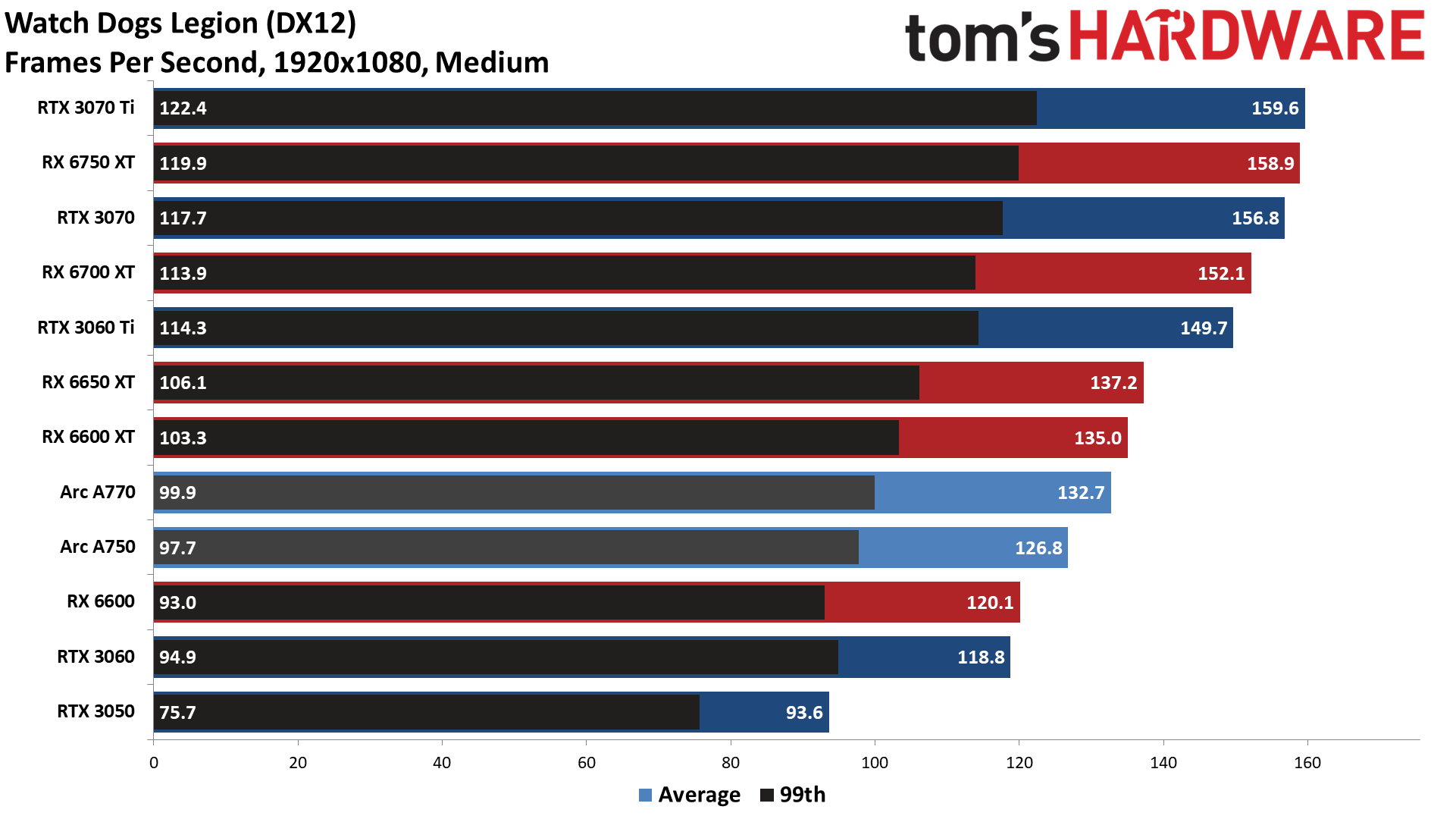

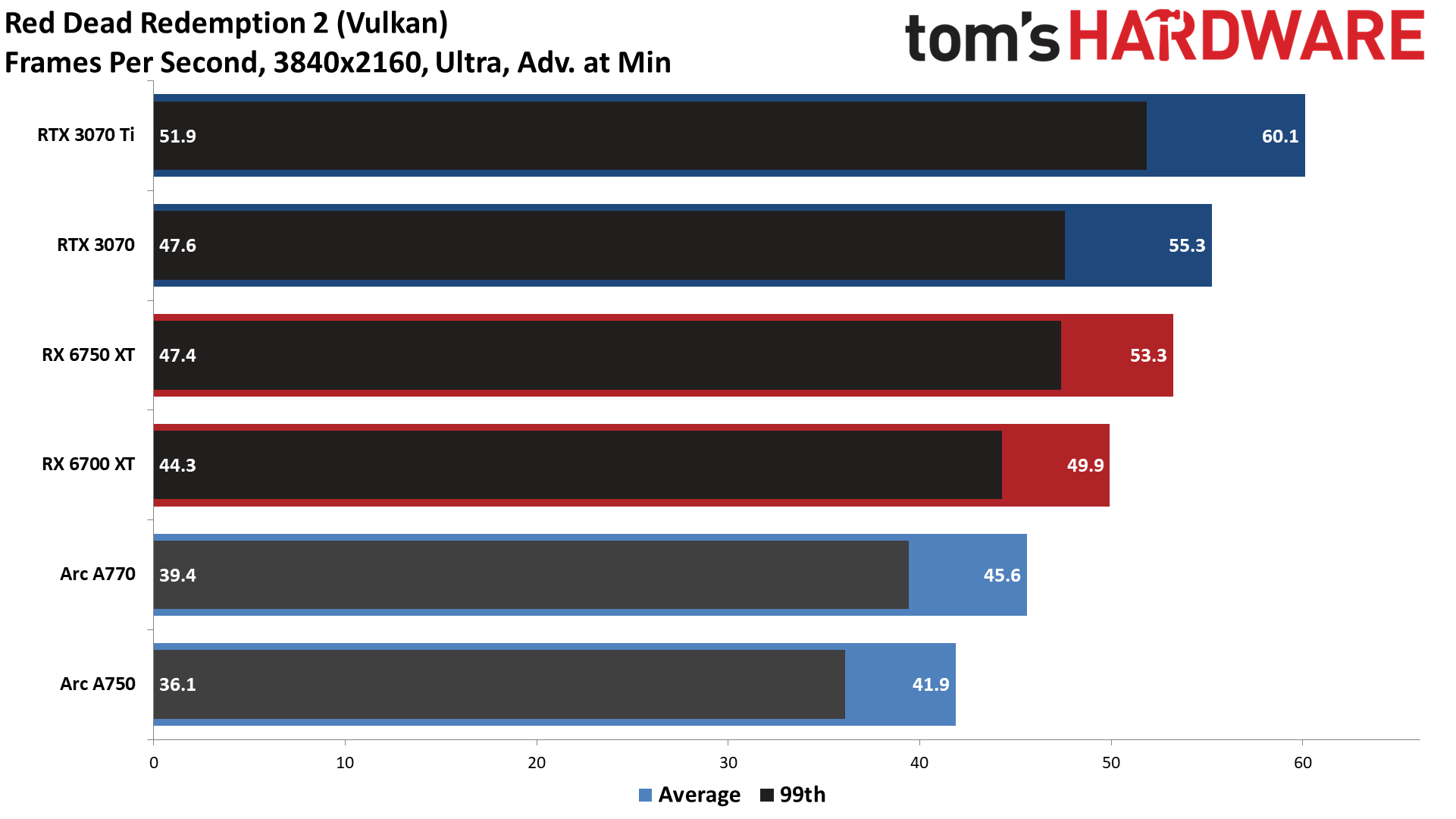

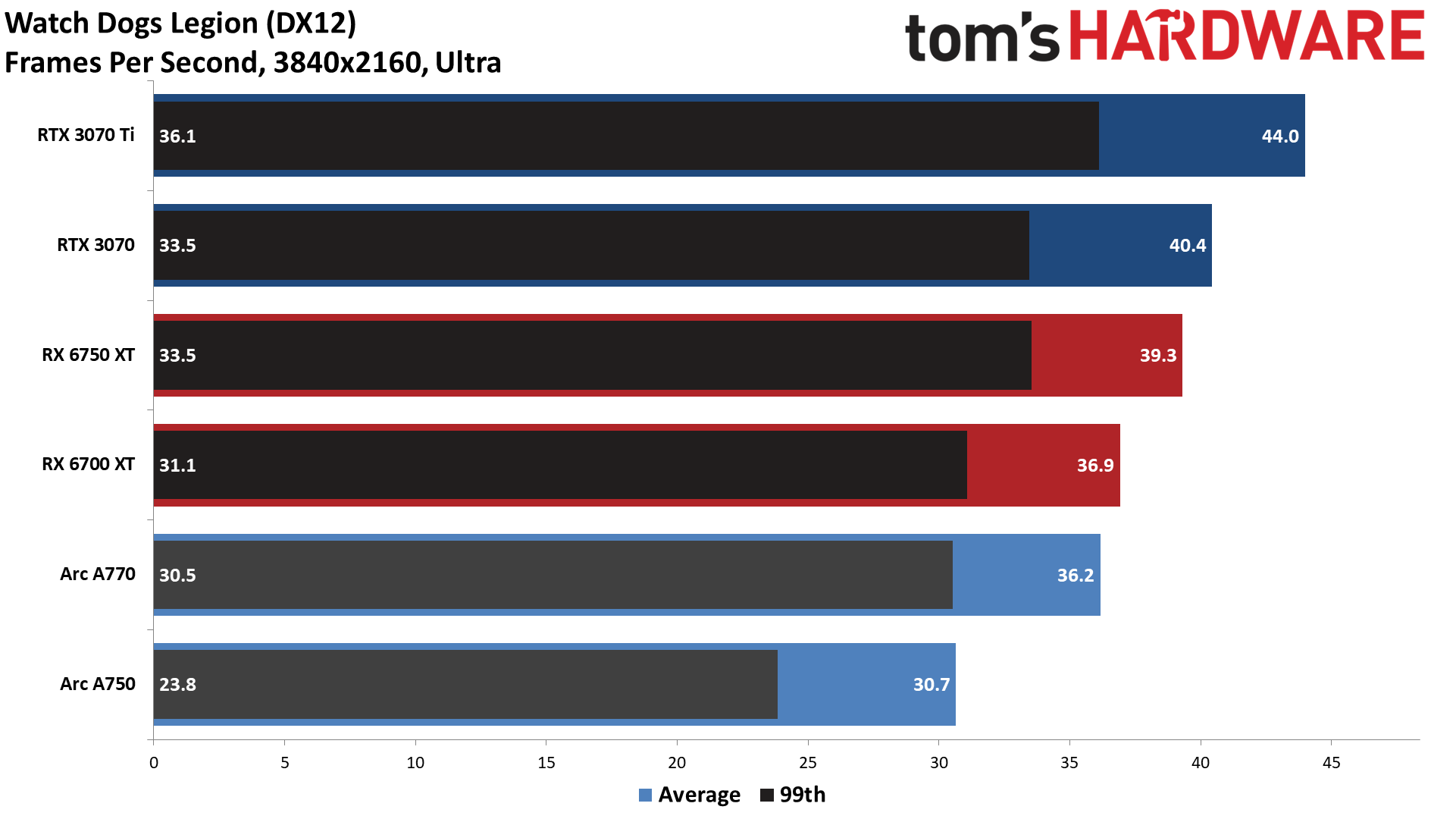

Looking at the individual game charts, Total War: Warhammer 3 is the only game where the A750 didn't break 60 fps, though it's not the type of game where buttery smooth framerates are necessary. The RTX 3060 and A750 each win in half of the games, with Borderlands 3, Red Dead Redemption 2, and Watch Dogs Legion favoring the A750 by 10–20%, while Forza Horizon 5 and Horizon Zero Dawn give the 3060 a lead of 10% or more.

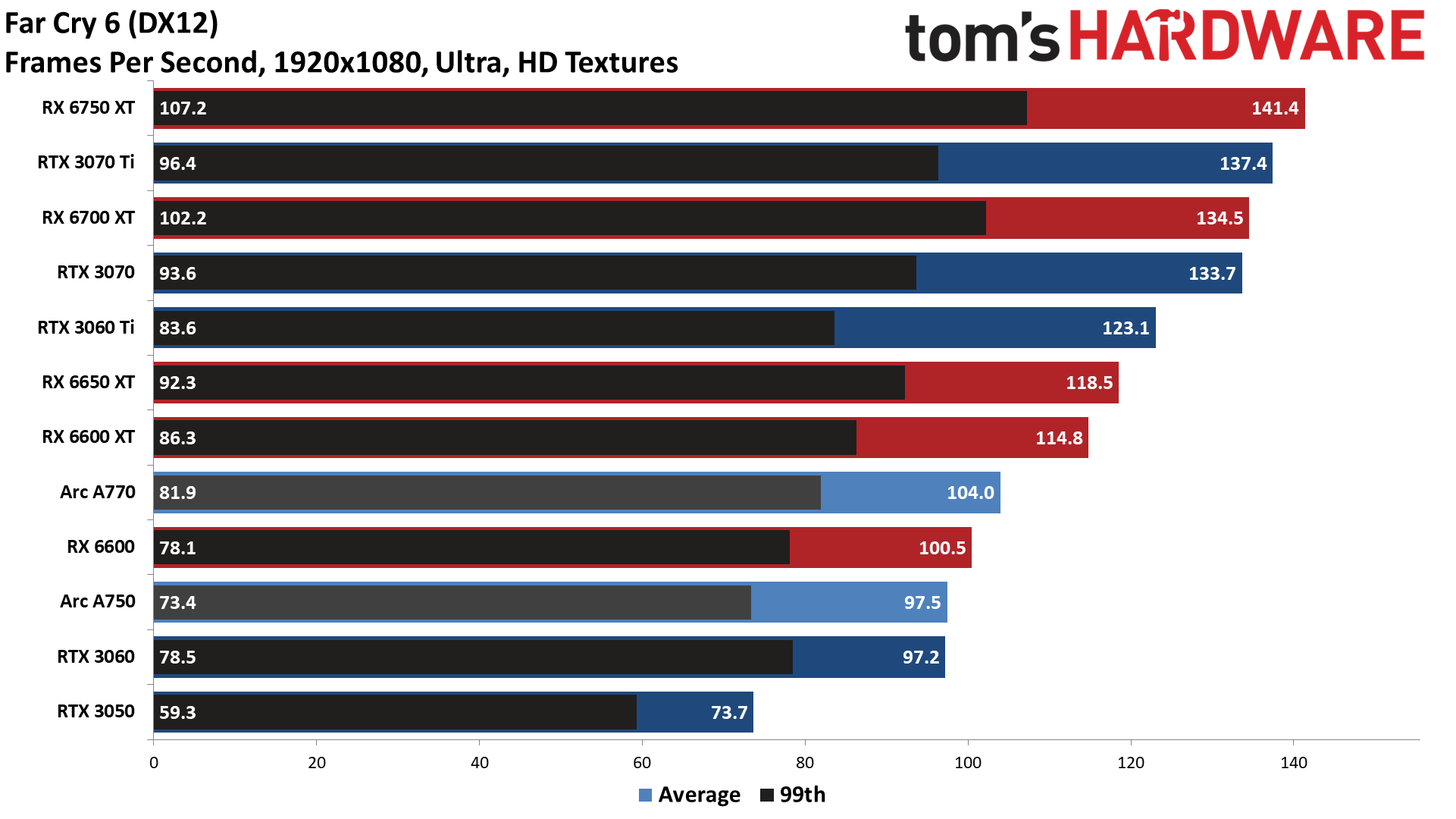

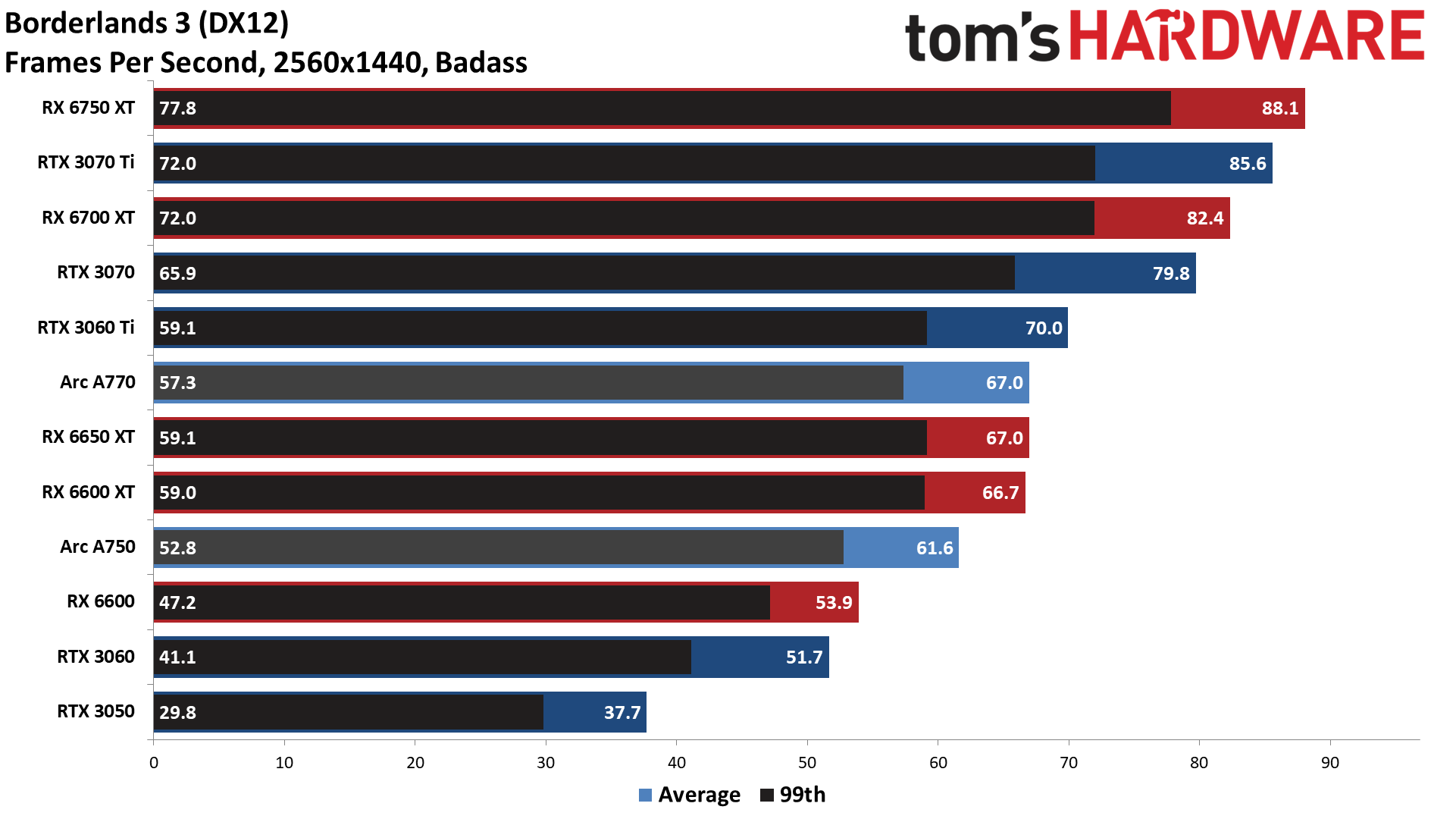

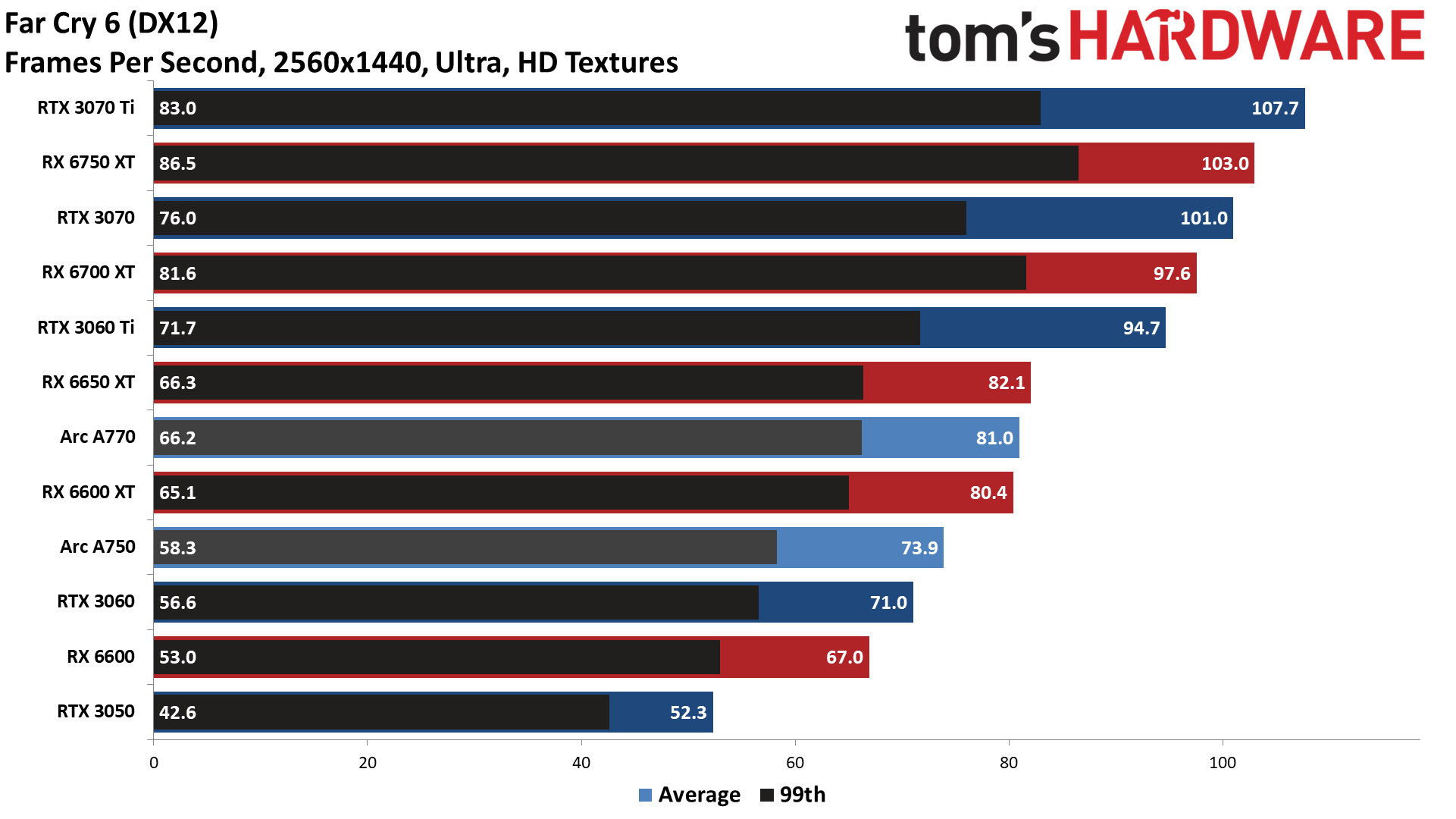

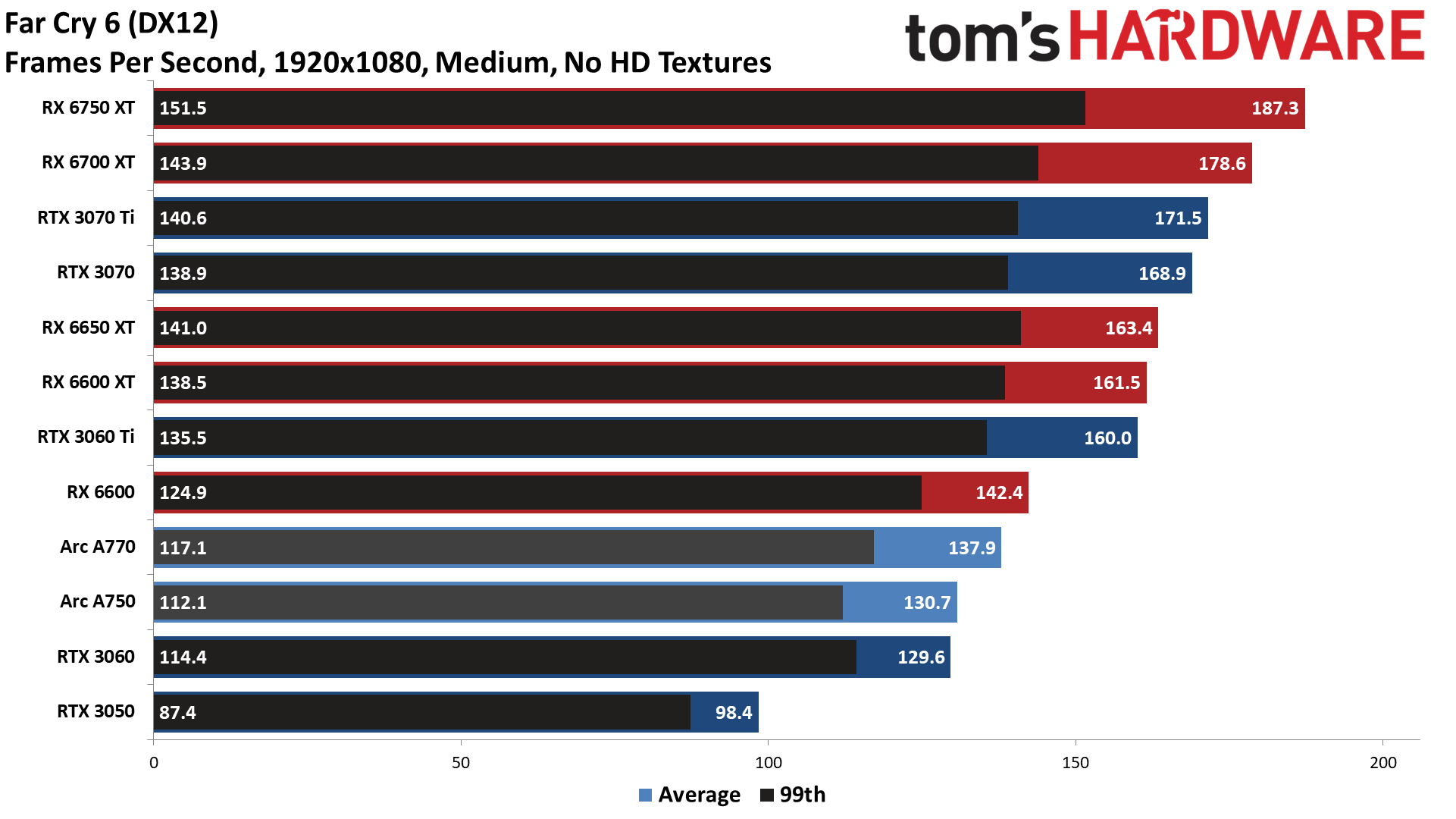

Against the AMD GPUs, the A750 only claims wins in Red Dead Redemption 2 and Total War: Warhammer 3. Meanwhile, the RX 6650 XT leads by 18–36% in Borderlands 3, Far Cry 6, Forza Horizon 5, and Horizon Zero Dawn. So if all you care about is standard (non-ray tracing) gaming performance, the RX 6650 XT gets an easy recommendation. $20 more for 15% better performance and far more mature driver support? Yes, please.
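To put the "$20 more for 15% better performance" trade-off in performance-per-dollar terms, here is a minimal sketch. The A750 base price of $290 is an assumption made purely for illustration (this page does not state exact prices), and `value_gain` is a hypothetical helper name.

```python
def value_gain(base_price: float, price_delta: float, perf_gain: float) -> float:
    """Relative change in performance per dollar.

    base_price  -- price of the baseline card
    price_delta -- extra cost of the alternative card
    perf_gain   -- fractional performance gain of the alternative (0.15 = 15%)
    """
    if base_price <= 0 or base_price + price_delta <= 0:
        raise ValueError("prices must be positive")
    base_value = 1.0 / base_price
    new_value = (1.0 + perf_gain) / (base_price + price_delta)
    return new_value / base_value - 1.0


# ASSUMPTION: a hypothetical $290 baseline price, used only for illustration.
ASSUMED_A750_PRICE = 290.0

gain = value_gain(ASSUMED_A750_PRICE, price_delta=20.0, perf_gain=0.15)
print(f"Performance per dollar changes by {gain * 100:+.1f}%")
```

Under that assumed price, the faster card still comes out ahead on value, because the 15% performance gain outweighs the roughly 7% price increase. A different baseline price shifts the exact figure.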

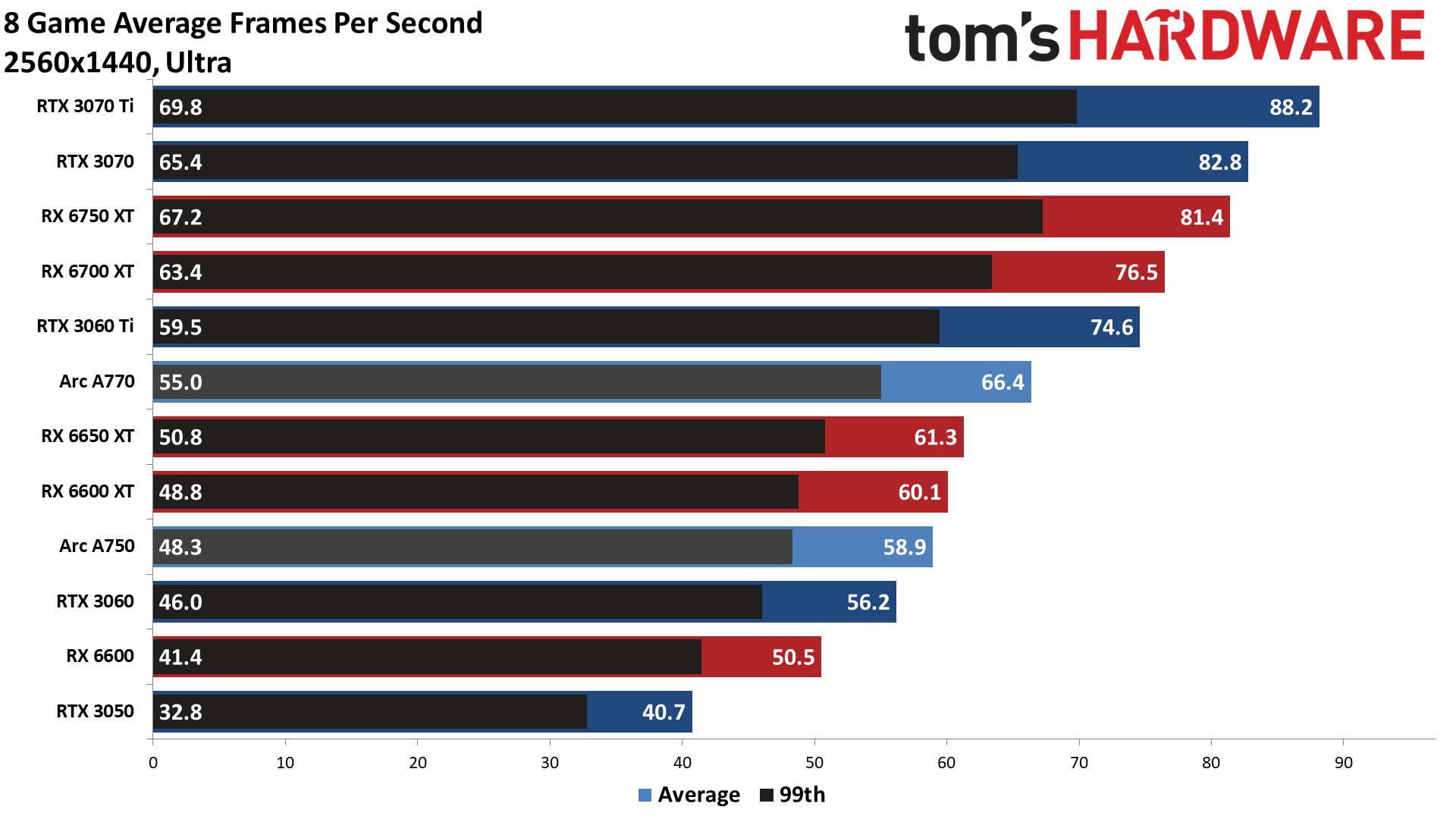

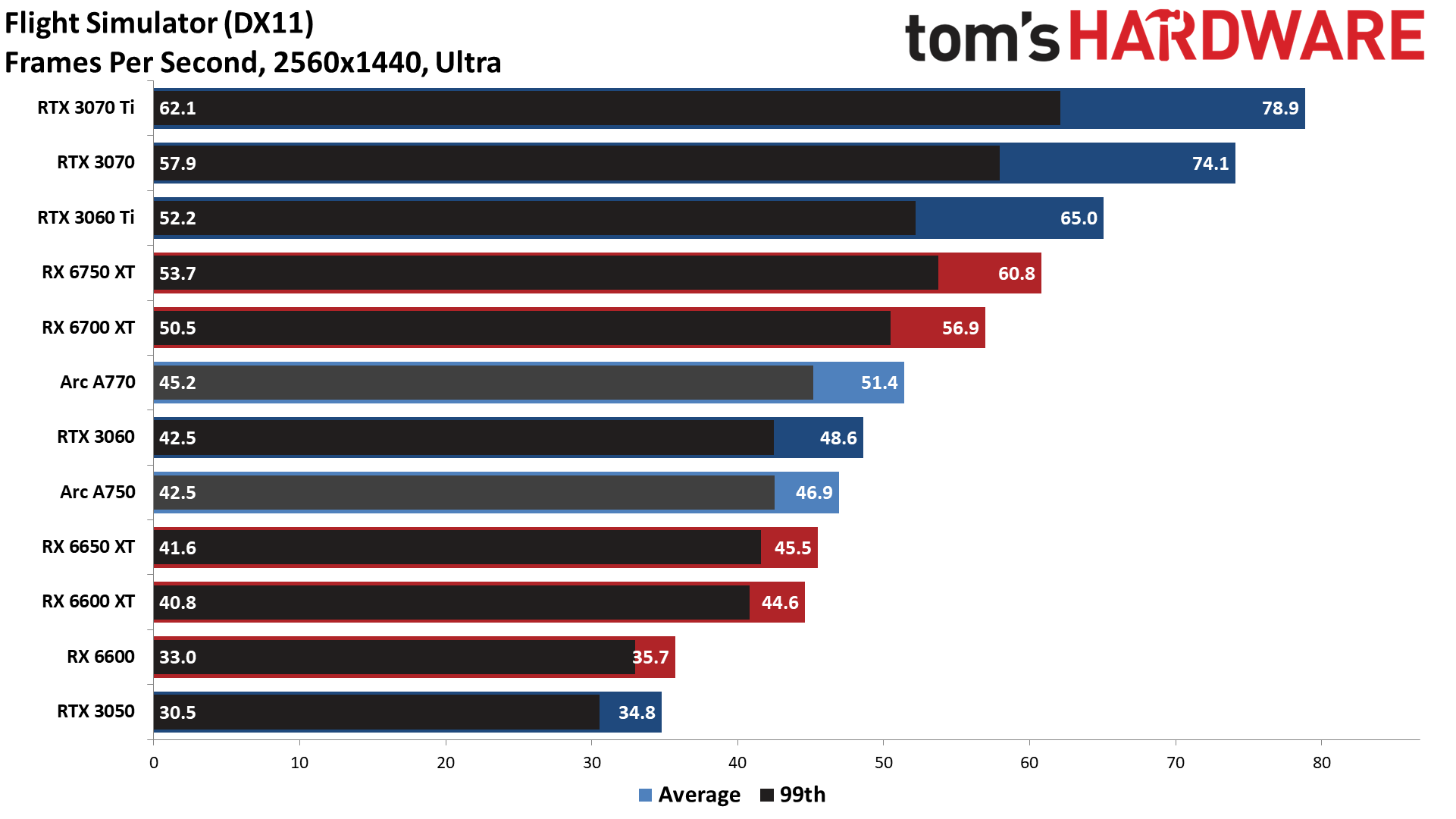

Thanks to its 256-bit memory interface, the A750's relative standing improves at 1440p ultra. It's now 5% faster than the 3060 overall and only a few percent behind the RX 6650 XT and 6600 XT. However, across the eight games, the A750 averages just a hair under 60 fps, which means some games will be above that mark, and others could fall well short.

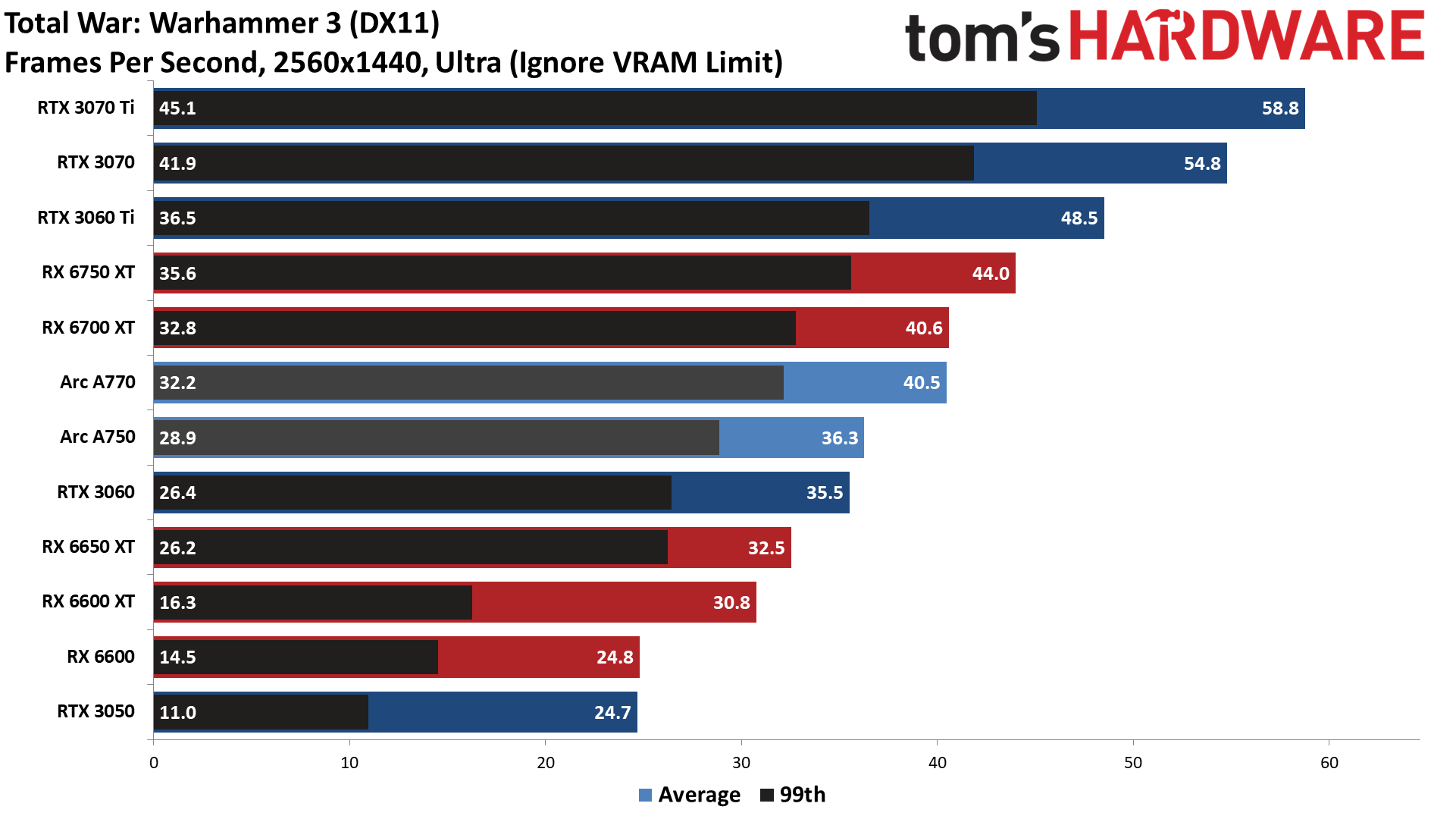

The individual games show A750 performance ranging from a low of just 36 fps (Warhammer 3) to a high of 74 fps (Far Cry 6 and Horizon Zero Dawn), so everything is still playable. If you're serious about 1440p gaming, though, we'd drop a few of the more demanding settings a notch or two just to help boost performance — shadow and lighting effects are usually good candidates.

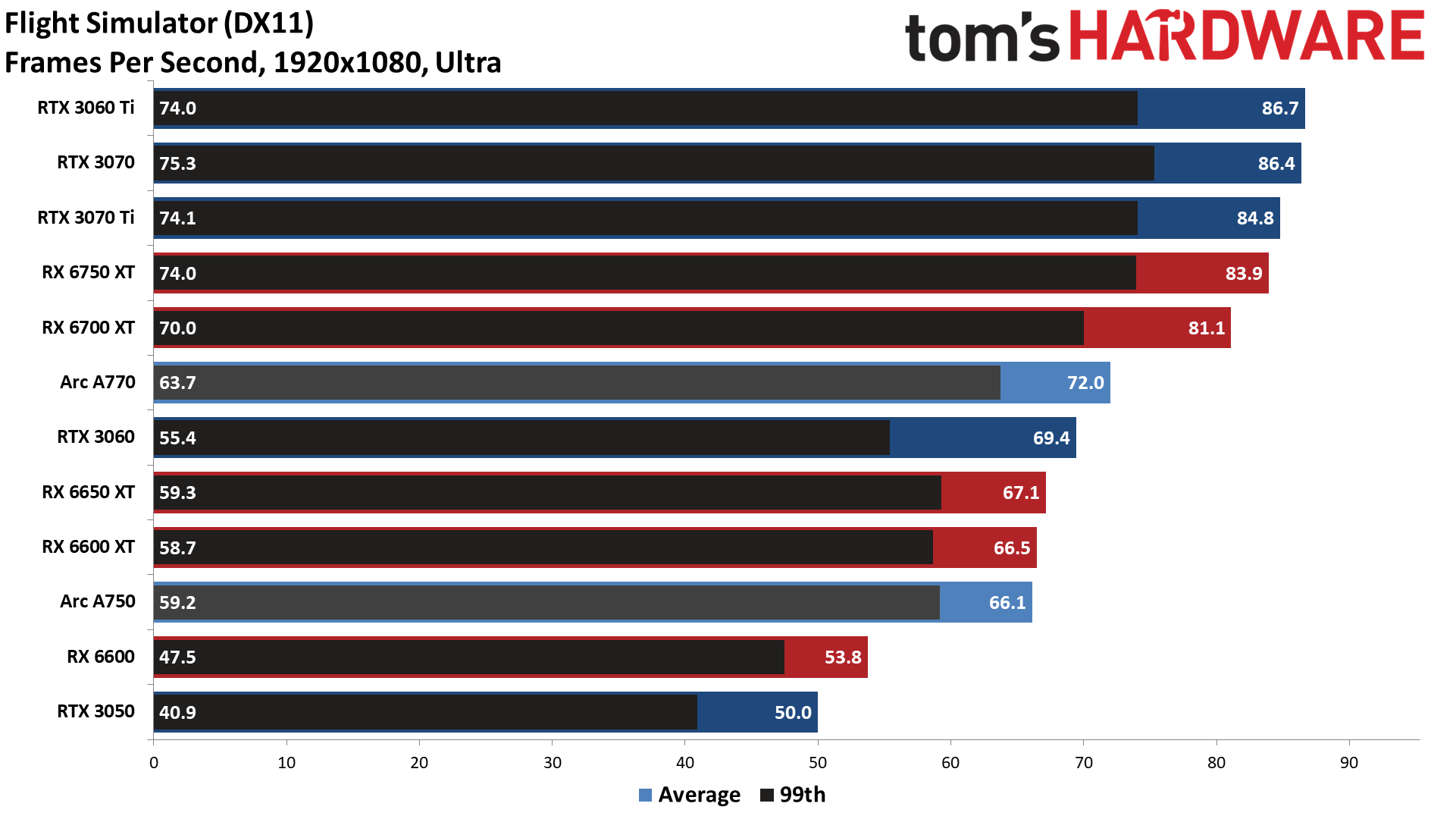

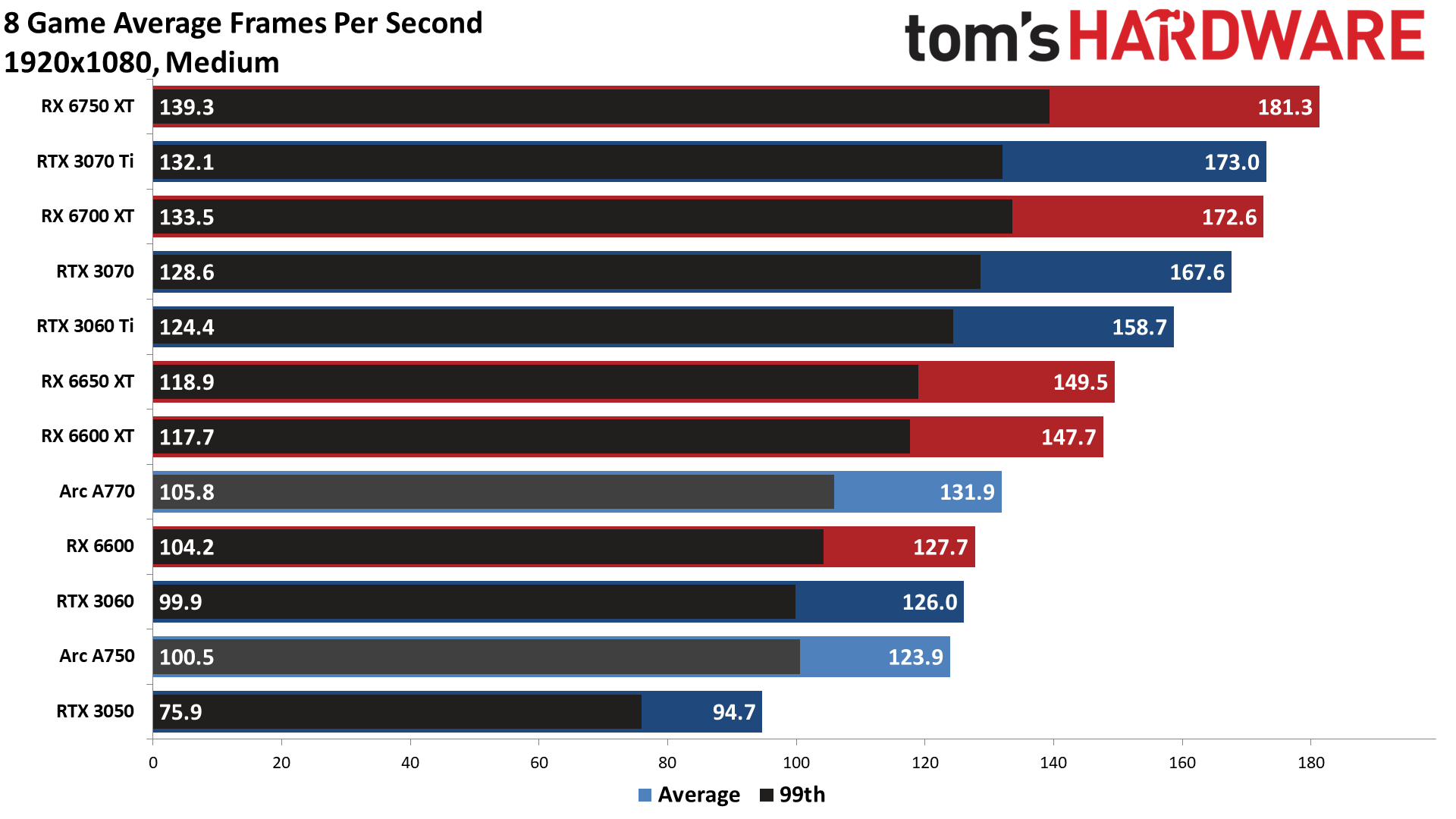

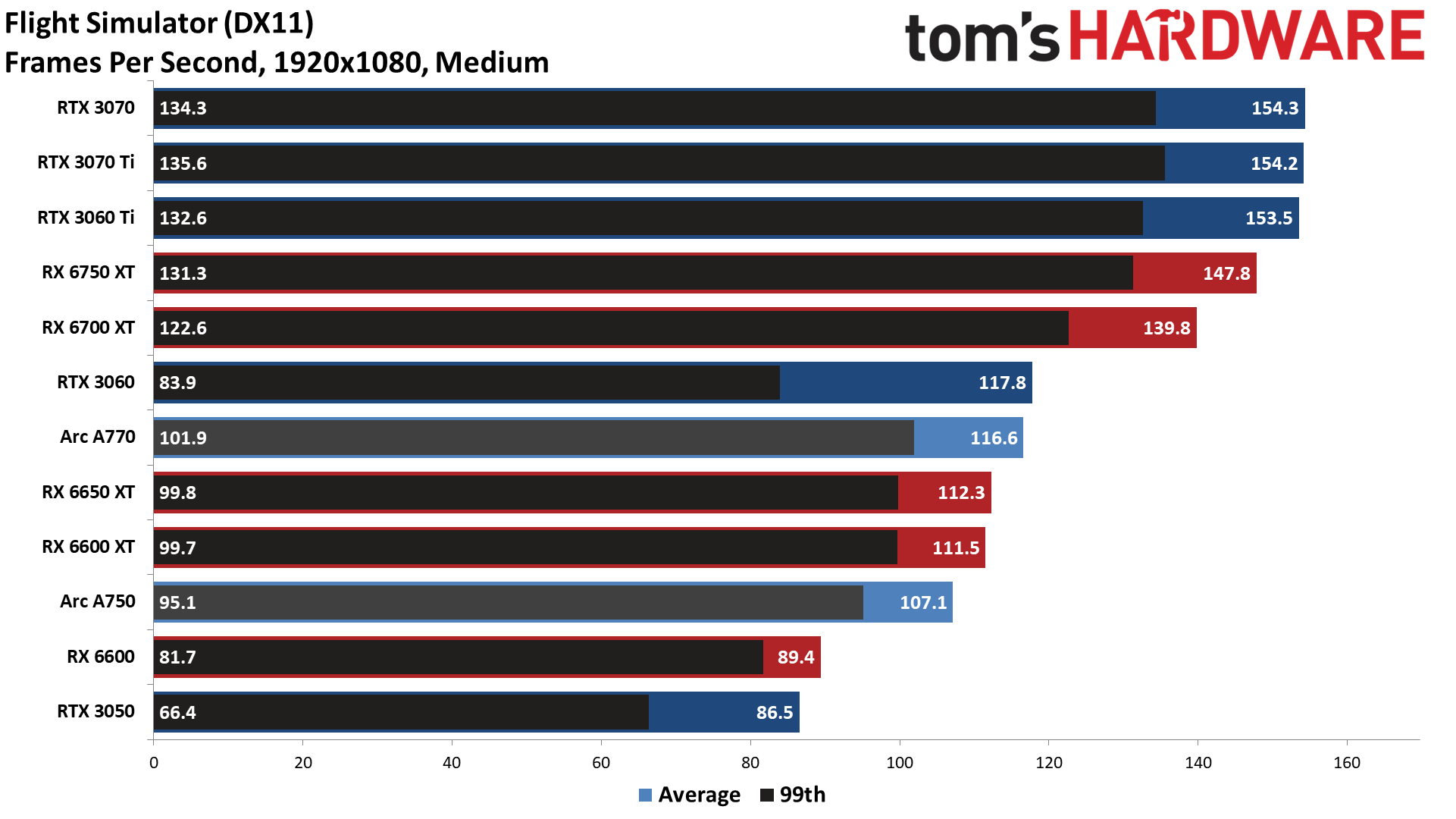

1080p medium performance isn't as strong on the A750, probably due to drivers holding it back. There are a few games where performance is still very competitive, but Horizon Zero Dawn appears to run headlong into a CPU bottleneck with Arc — it's 35% slower than an RTX 3060 in that game, and also slower than the RTX 3050.

Intel will hopefully continue to iron out any performance snags, so there's potential for the A750 to improve by 10 to 20 percent overall. Whether Intel will actually manage that level of optimization over the coming months will be interesting to see.

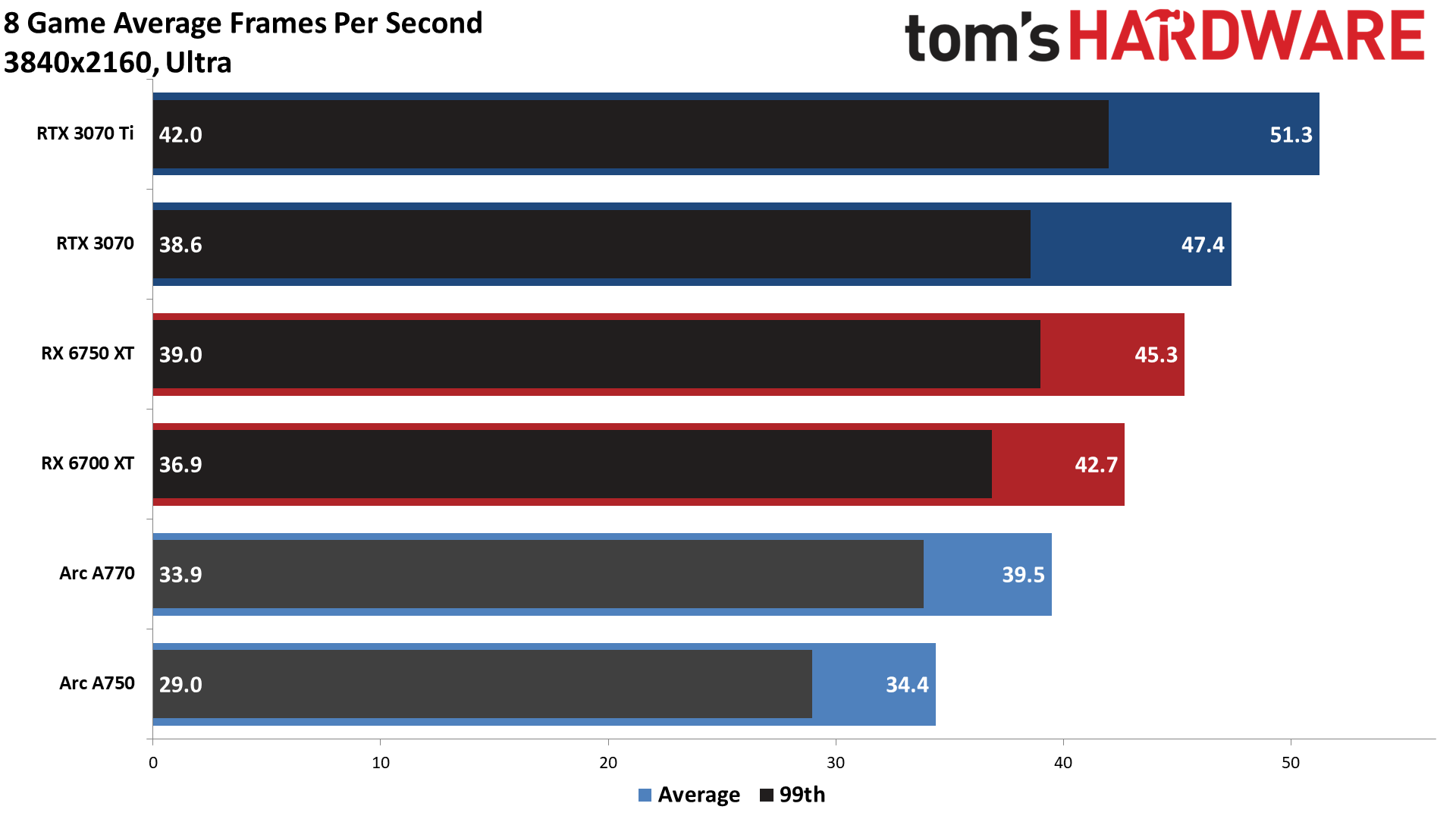

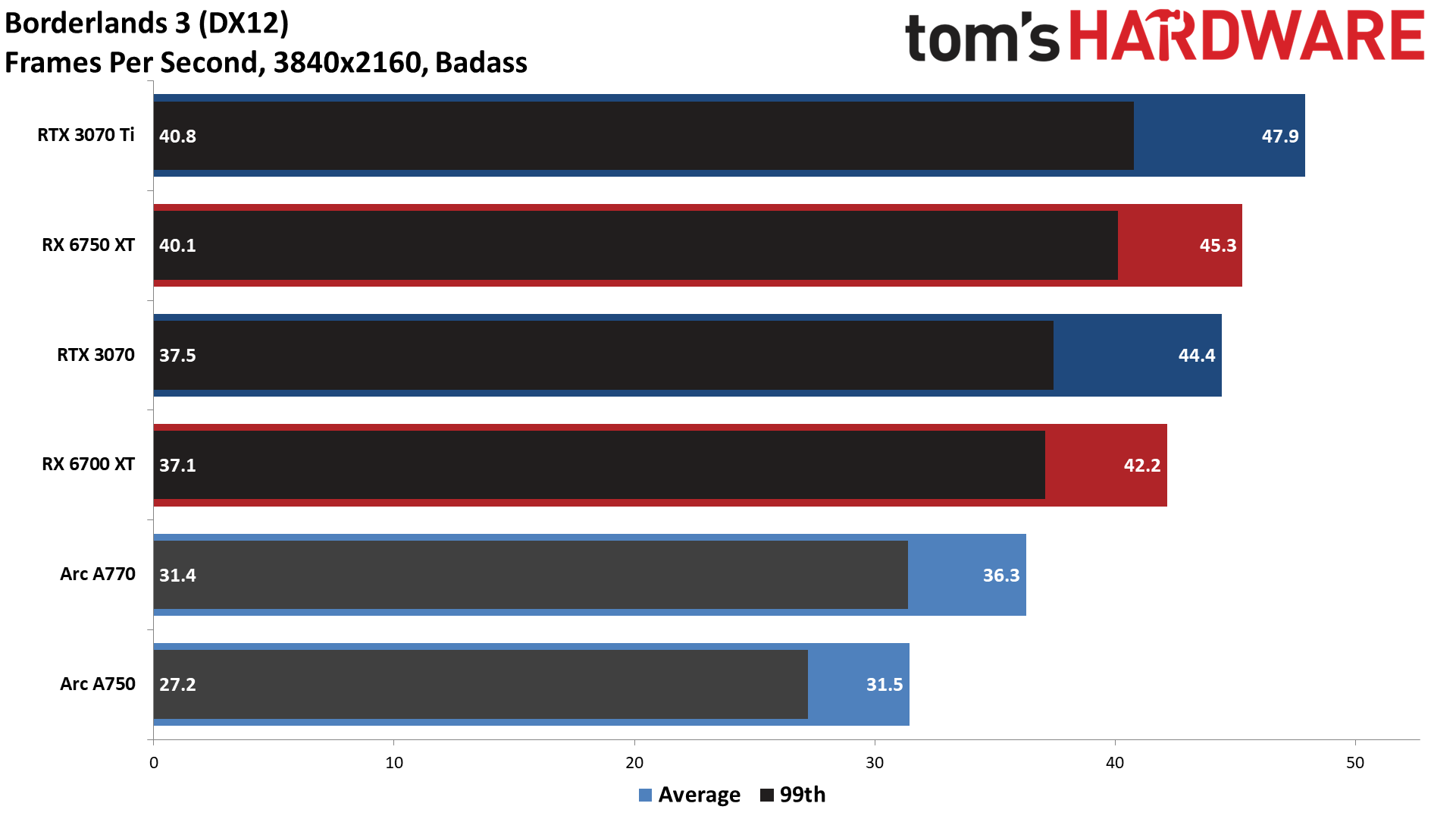

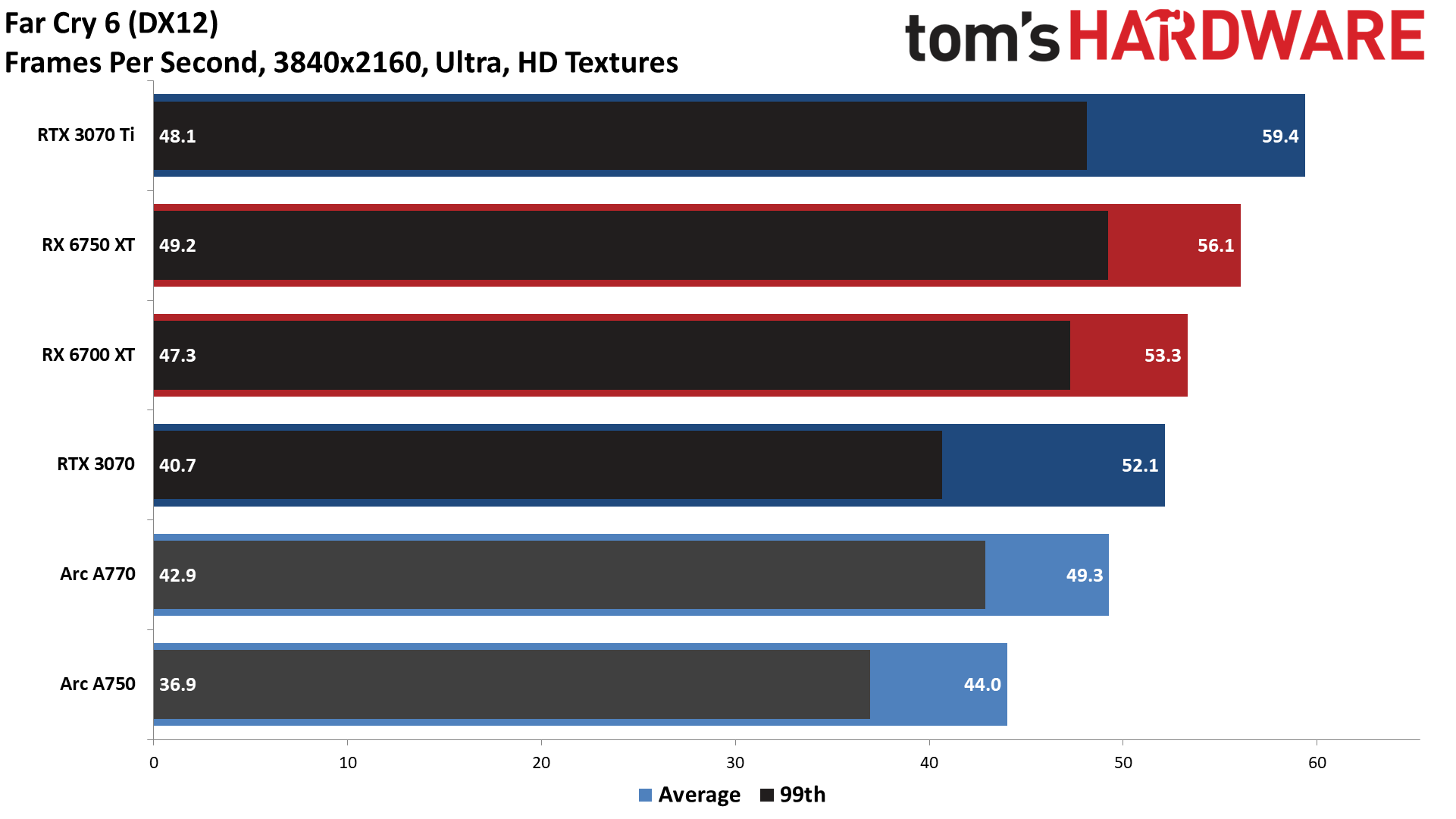

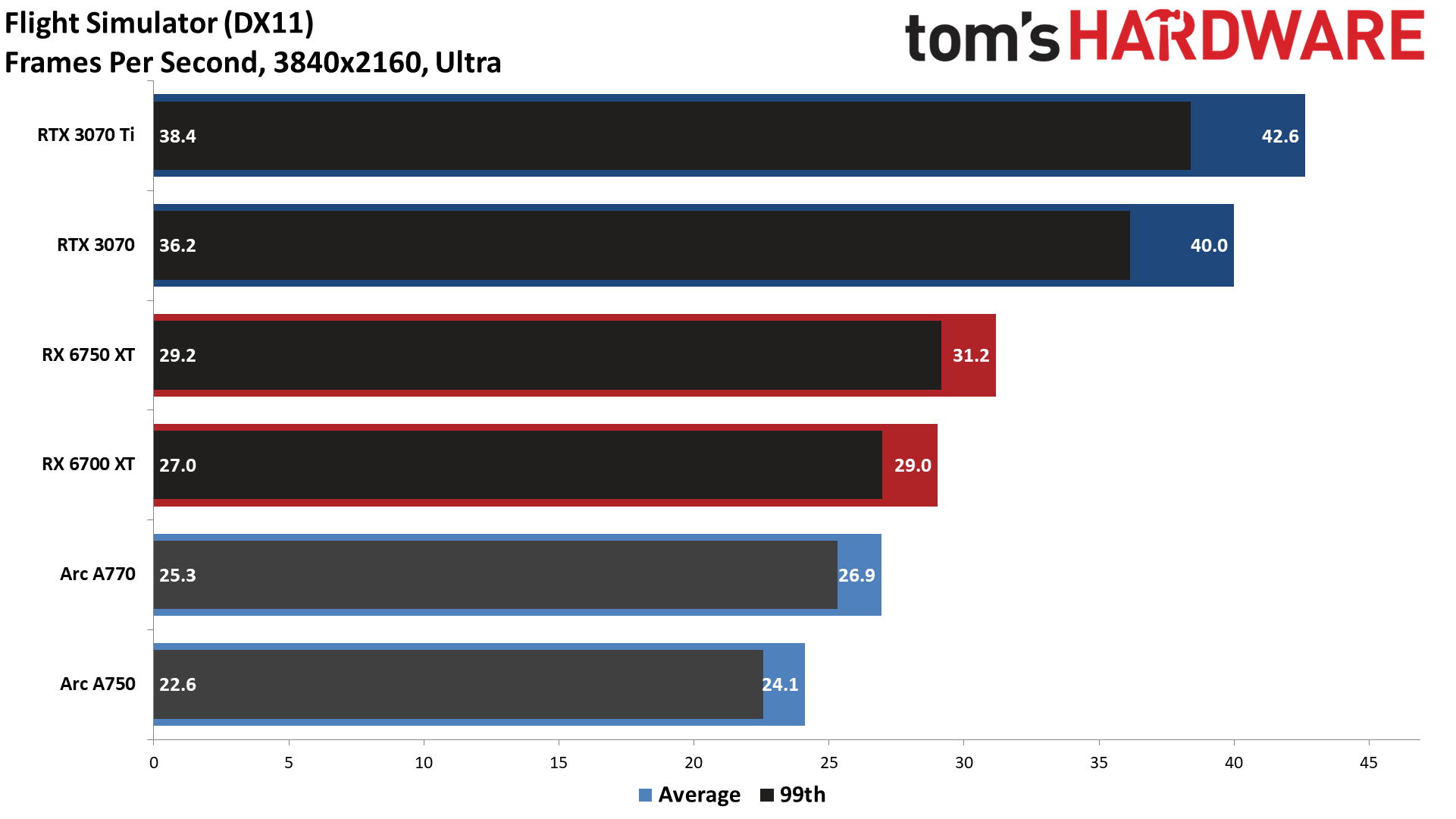

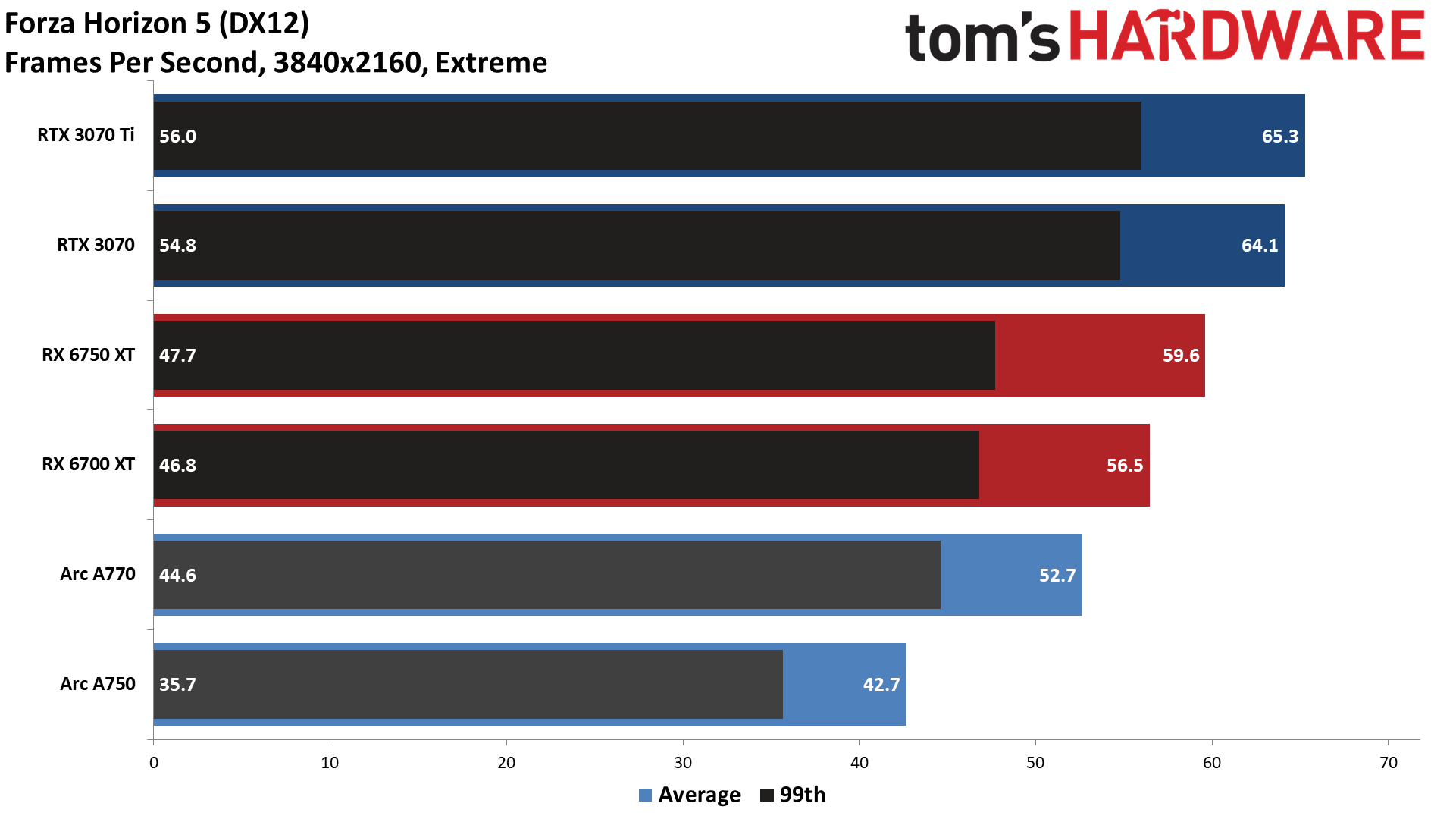

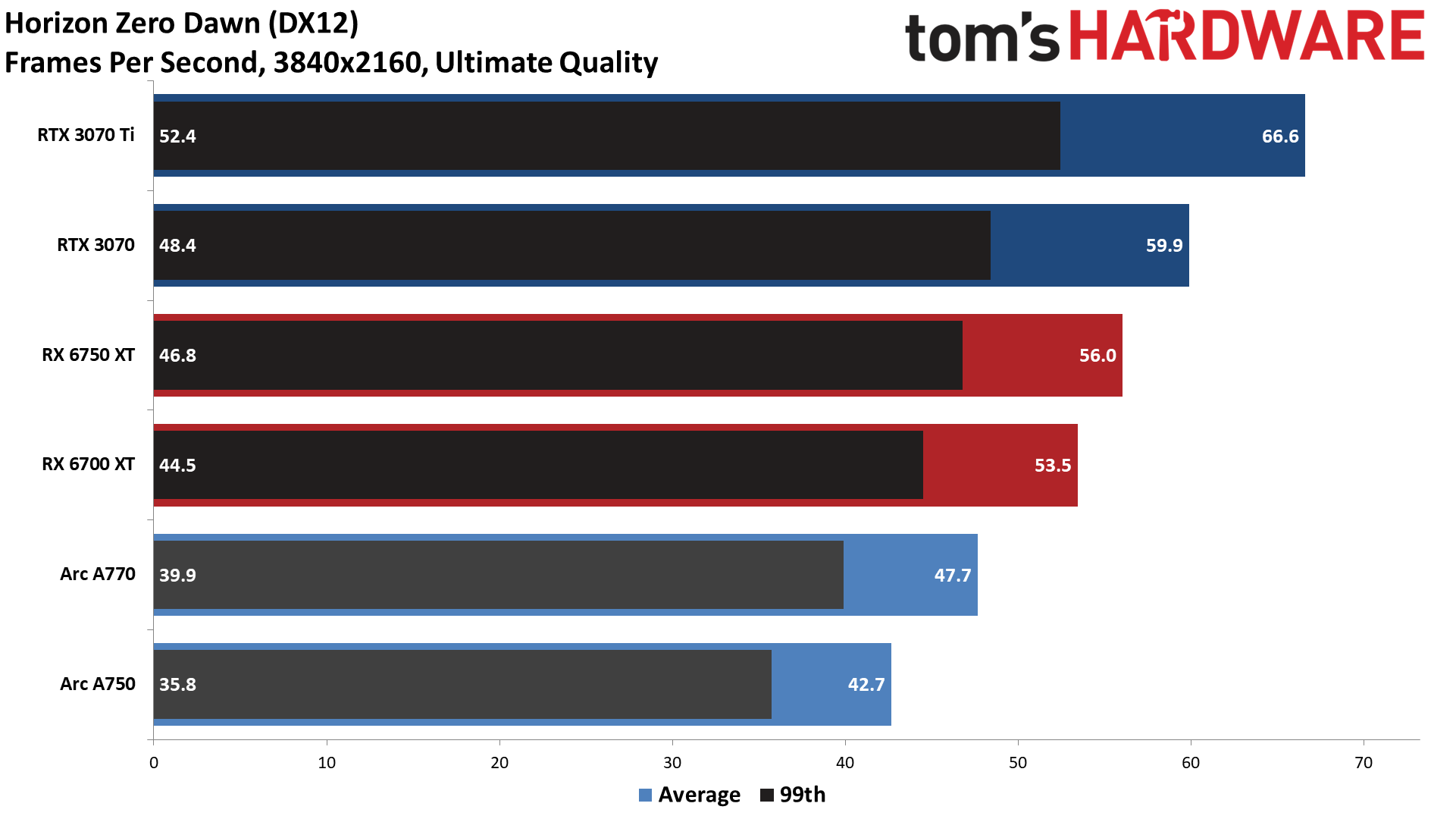

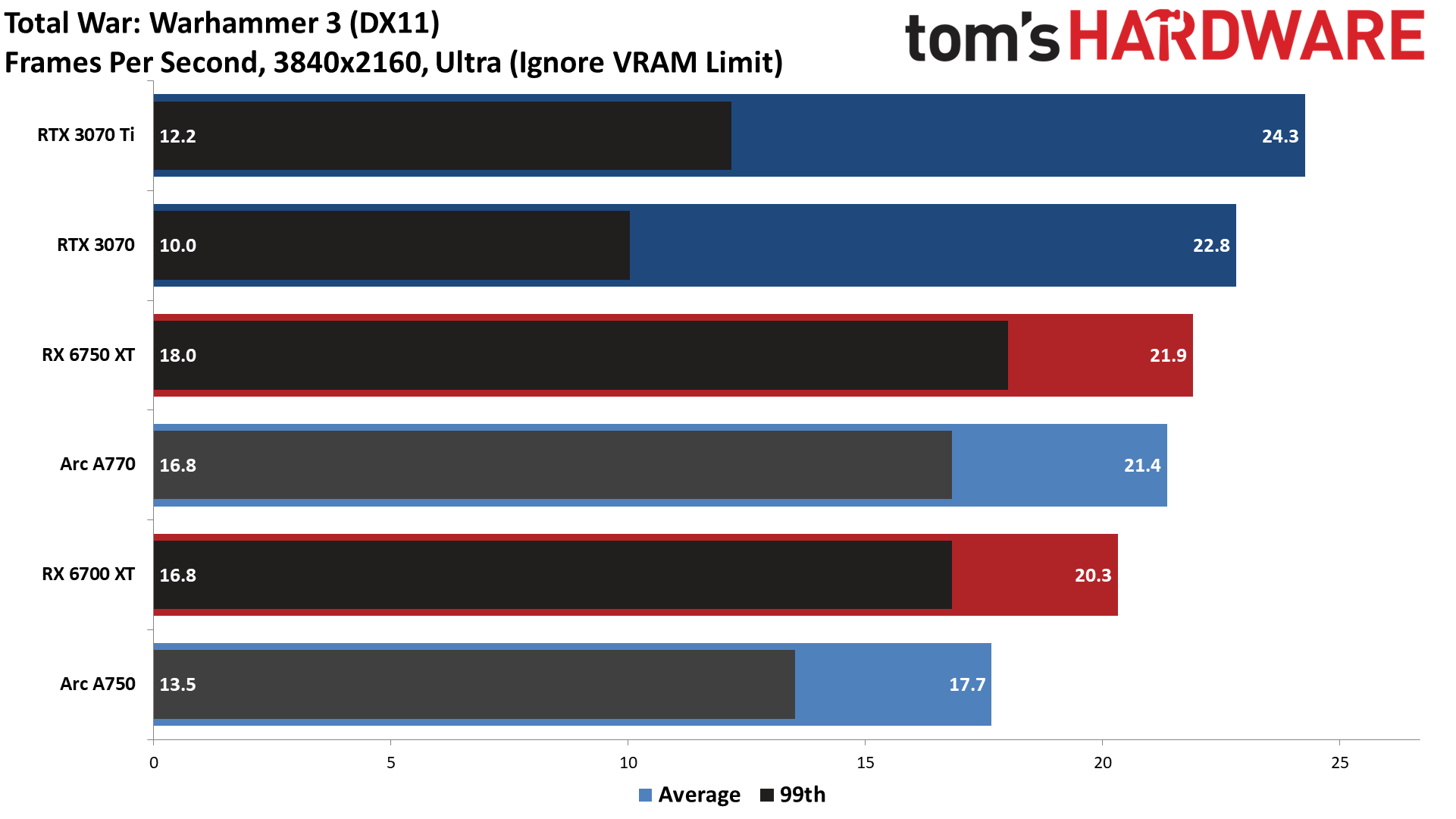

Finally, 4K ultra clearly isn't a strong point for the Arc A750. Nearly every game still cleared 30 fps in our suite, Flight Simulator and Warhammer 3 being the exceptions, but there's a reason we don't have 4K data for some of the competing GPUs. Maybe we'll fill in those results the next time we retest the cards, but 4K ultra is mostly the domain of graphics cards that cost $700 or more.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

- cknobman: Performance numbers better than expected.

  Power usage and temperatures are less than desired.

- tennis2: TBH, not an unexpected outcome for their first product. The DX12 emulation was a strange choice, forward-thinking sure, but not at that much cost to older games they know reviewers are still testing on. Was wishing/hoping Intel's R&D budget could've gotten a little closer to market parity (I'm sure they did also for pricing) but I don't know what their R&D budget was for this project. Seems like their experience in IGP R&D could've been better extrapolated into discrete cards, but apparently not.

  My biggest concern is future support. They said they're committed to dGPUs, but this product line clearly didn't live up to their expectations. Unless we're all being horribly lied to on GPU pricing, it doesn't seem like Intel is making much/any money on the A750/770. Certainly not as much as they'd hoped. If next gen is a flop also.....who knows, maybe they call it quits. Then what? Would they still provide driver updates? For how long?

  I do wonder what % of games released in the past 2 years (say top 100 from each year) are DX12....

- JarredWaltonGPU (quoting tennis2's comment above in full): Intel will continue to do integrated graphics for sure. That means they'll still make drivers. But will they keep up with changes on the dGPU side if they pull out? Probably not.

  I don't really think they're going to ax the GPU division, though. Intel needs high density compute, just like Nvidia needs its own CPU. There are big enterprise markets that Intel has been locked out of for years due to not having a proper solution. Larrabee was supposed to be that option, but when it morphed into Xeon Phi and then eventually got axed, Intel needed a different alternative. And x86 compatibility on something like a GPU (or Xeon Phi) is going to be more of a curse than a blessing.

  I really do want Intel to stay in the GPU market. Having a third competitor will be good. Hopefully Battlemage rights many of the wrongs in Alchemist.

- InvalidError: About the same performance per dollar as far more mature options in the same pricing brackets, not really worth bothering with unless you wish to own a small piece of computing history.

- Giroro: So what's the perf/$ chart look like without Ray Tracing results included?

  I mean I love Control and everything, but I've been done with it for years. I googled "upcoming ray tracing games" and the top result was still that original list from 2019.

  There's so few noteworthy RT games, that I'm surprised that Intel and the next gen cards are even bothering to support it.

  Also, I'm not really understanding how the hypothetical system cost that was discussed would be factored into the math.

- InvalidError (quoting Giroro: "There's so few noteworthy RT games, that I'm surprised that Intel and the next gen cards are even bothering to support it."): Chicken-and-egg problem: game developers don't want to bother with RT because most people don't have RT-capable hardware, hardware designers limit emphasis on RT for cost-saving reasons since very little software will be using it in the foreseeable future.

  As more affordable yet sufficiently powerful RT hardware becomes capable of pushing 60+FPS at FHD or higher resolutions, we'll see more games using.

  It was the same story with pixel/vertex shaders and unified shaders. Took a while for software developers to migrate from hard-wired T&L to shaders, give it a few year and now fixed-function T&L hardware is deprecated.

  Give it another 5-7 years and we'll likely get new APIs designed with RT as the primary render flow.

- drajitsh (quoting Admin: "The Intel Arc A750 goes after the sub-$300 market with compelling performance and features, with a slightly trimmed down design compared to the A770. We've tested Intel's new value oriented wunderkind and found plenty to like. Intel Arc A750 Limited Edition Review: RTX 3050 Takedown"): @jaredwaltonGPU

  Hi, I have some questions and a request

  Does this support PCIe 3.0x16.

  For Low end GPU could you select a low end GPU like my Ryzen 5700G. this would tell me 3 things -- support for AMD, Support for PCIe 3.0, and use for low end CPU

- krumholzmax: REALLY THIS IS PLENTY GOOD? Drivers not working market try to AMD and NVIDIA BETTER AND COST LEST _ WHY SO BIG CPU ON CARD 5 Years ago by performance. Who will buy it? Other checkers say all about this j...

- boe rhae (quoting krumholzmax's comment above): I have absolutely no idea what this says.

- ohio_buckeye: I don't need a card at the moment since I've got a 6700xt, but the new intel cards are interesting. If they stay around with them, I might consider a purchase of one on my next upgrade if they are decent to help a 3rd player stay in.