The SSD 730 Series Review: Intel Is Back With Its Own Controller

Results: Tom's Storage Bench v1.0

Storage Bench v1.0 (Background Info)

Our Storage Bench incorporates all of the I/O from a trace recorded over two weeks. Replaying this sequence to capture performance gives us a bunch of numbers that aren't really intuitive at first glance. Most idle time gets expunged, leaving only the time that each benchmarked drive is actually busy working on host commands. So, by dividing the amount of data exchanged during the trace by that busy time, we arrive at an average data rate (in MB/s) that we can use to compare drives.
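As a rough illustration of that metric, here is a minimal sketch that assumes a hypothetical trace format in which each replayed command carries an issue time, a completion time, and a byte count (the real Storage Bench format isn't described here). Busy time is computed as the union of the intervals during which at least one command is outstanding, which is one reasonable reading of "idle time expunged": idle gaps drop out, and overlapping (queued) commands aren't double-counted. It also assumes 1 MB = 1,000,000 bytes.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class IoRecord:
    """Hypothetical replayed command: issue time and completion time (seconds), bytes moved."""
    start_s: float
    end_s: float
    size_bytes: int


def busy_time_s(records: Iterable[IoRecord]) -> float:
    """Total time with at least one command outstanding.

    Intervals are merged so overlapping (queued) commands count once,
    and gaps with no outstanding commands (idle time) are skipped.
    """
    intervals = sorted((r.start_s, r.end_s) for r in records)
    total = 0.0
    cur_start = cur_end = None
    for start, end in intervals:
        if cur_end is None or start > cur_end:
            # Gap found: close out the previous busy period, start a new one.
            if cur_end is not None:
                total += cur_end - cur_start
            cur_start, cur_end = start, end
        else:
            # Overlaps the current busy period: extend it if needed.
            cur_end = max(cur_end, end)
    if cur_end is not None:
        total += cur_end - cur_start
    return total


def average_data_rate_mb_s(records: list[IoRecord]) -> float:
    """Average data rate = total data read and written / busy time, in MB/s (1 MB = 10**6 bytes assumed)."""
    busy = busy_time_s(records)
    if busy <= 0:
        raise ValueError("trace has no busy time")
    total_bytes = sum(r.size_bytes for r in records)
    return total_bytes / 1e6 / busy


trace = [
    IoRecord(0.0, 0.5, 50_000_000),
    IoRecord(0.25, 1.0, 50_000_000),   # overlaps the first command
    IoRecord(10.0, 11.0, 100_000_000), # follows a 9-second idle gap
]
print(f"{average_data_rate_mb_s(trace):.1f} MB/s")
```

In this made-up trace, the two overlapping commands keep the drive busy for 1 s, the idle gap is ignored, and the last command adds another second, so 200 MB over 2 s of busy time averages 100 MB/s.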

It's not quite a perfect system. The original trace captures TRIM commands in transit, but since the trace is played back on a drive without a file system, TRIM wouldn't work even if it were sent during replay (which, sadly, it isn't). Still, trace testing is a great way to capture periods of actual storage activity, making it a great companion to synthetic testing with tools like Iometer.

Incompressible Data and Storage Bench v1.0

Also worth noting is that our trace testing pushes incompressible data through the system's buffers to the drive being benchmarked. So, when the trace replay plays back write activity, it's writing largely incompressible data. If we run our Storage Bench on a SandForce-based SSD, we can monitor its SMART attributes for a bit more insight.

| Mushkin Chronos Deluxe 120 GB SMART Attribute | Raw Value Increase |
|---|---|
| #242 Host Reads (in GB) | 84 GB |
| #241 Host Writes (in GB) | 142 GB |
| #233 Compressed NAND Writes (in GB) | 149 GB |

Host writes greatly outstrip host reads, to be sure. That's all baked into the trace. But with SandForce's inline deduplication/compression, you'd expect the amount of information written to flash to be less than the host writes (unless the data is mostly incompressible, of course). Instead, for every 1 GB the host asked to be written, Mushkin's drive is forced to write about 1.05 GB to flash (149 GB / 142 GB).
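The 1.05 figure is simply the increase in attribute #233 divided by the increase in attribute #241. A small sketch of that arithmetic using the raw-value increases from the table above; the function and dictionary names are illustrative only, not part of any SMART tool.

```python
def nand_to_host_write_ratio(host_writes_gb: float, nand_writes_gb: float) -> float:
    """Ratio of data written to flash (NAND) to data the host asked to write.

    Below 1.0 suggests the controller's compression shrank what reached flash;
    at or above 1.0 suggests little or no compression benefit, with any excess
    coming from the controller's own internal writes (e.g. garbage collection).
    """
    if host_writes_gb <= 0:
        raise ValueError("host writes must be positive")
    return nand_writes_gb / host_writes_gb


# Raw-value increases (GB) from the Mushkin Chronos Deluxe 120 GB run above.
SMART_DELTAS_GB = {
    242: 84,   # Host Reads
    241: 142,  # Host Writes
    233: 149,  # Compressed NAND Writes
}

ratio = nand_to_host_write_ratio(SMART_DELTAS_GB[241], SMART_DELTAS_GB[233])
print(f"{ratio:.2f} GB written to NAND per 1 GB of host writes")
```

A ratio above 1.0 is consistent with the trace writing data the controller couldn't compress.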

If our trace replay were just writing easy-to-compress zeros out of the buffer, we'd see writes to NAND amount to only a fraction of host writes. Writing incompressible data instead puts the tested drives on a more equal footing, regardless of each controller's ability to compress data on the fly.
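To see why the choice of data matters so much, the sketch below uses Python's standard zlib module as a stand-in compressor. This is an assumption for illustration only: it is not SandForce's on-drive algorithm, which this review doesn't describe, but the contrast between zeros and random data holds for general-purpose compressors.

```python
import os
import zlib


def compressed_fraction(data: bytes) -> float:
    """Compressed size as a fraction of the original size (zlib, default level)."""
    return len(zlib.compress(data)) / len(data)


BLOCK = 1 << 20  # 1 MiB test buffer

zeros = bytes(BLOCK)             # easy to compress: every byte is zero
random_data = os.urandom(BLOCK)  # effectively incompressible

print(f"zeros:  {compressed_fraction(zeros):.4f} of original size")
print(f"random: {compressed_fraction(random_data):.4f} of original size")
```

The buffer of zeros shrinks to a tiny fraction of its size, while the random buffer stays at (or slightly above) its original size, which mirrors the gap between a "zeros" workload and the incompressible writes in our trace.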

Average Data Rate


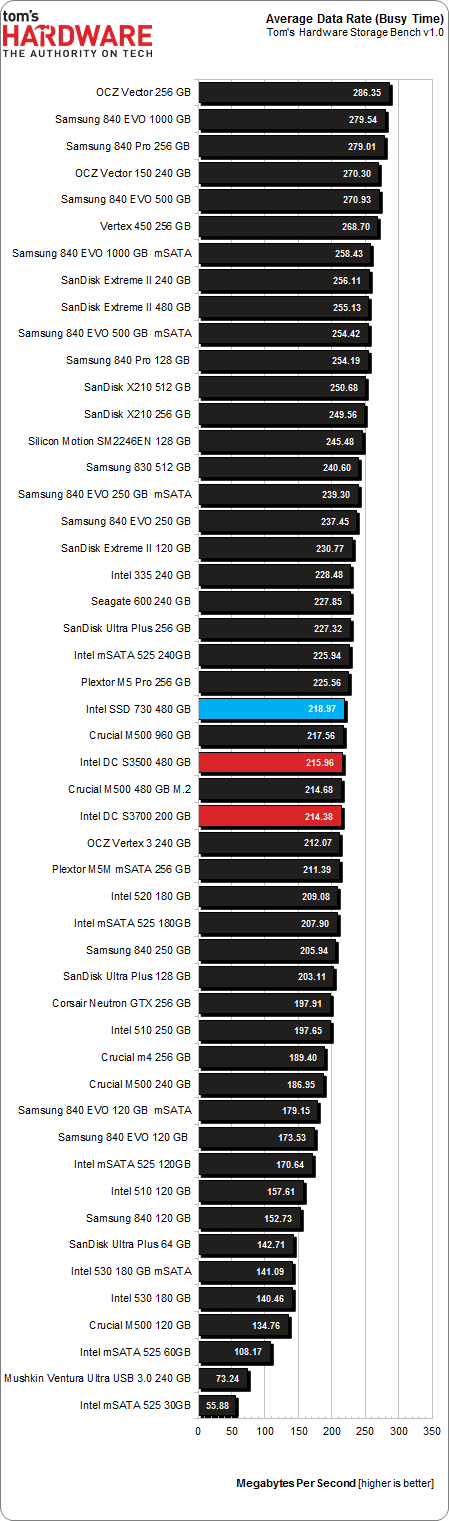

The Storage Bench trace generates more than 140 GB worth of writes during testing. Obviously, this tends to penalize drives smaller than 180 GB and reward those with more than 256 GB of capacity.

Intel's SSDs don't do magnificently, though they do well enough. Again, the SSD 730 Series is mixed up in a pack of M500s.

The average data rate is the total amount of data read and written divided by the total busy time (the elapsed time, more or less). There's more to this trace test than that, though. We also want to look at latency, which becomes a good indicator when we combine it with this average data rate metric.


blackmagnum That skull on an Intel SSD means this product is the Big Kahuna. Samsung just cannot crush this competition.

Amdlova That 480 GB model drains more power than a 5400 RPM HDD. Samsung or SanDisk for laptops. Please, next SSD.

rokit Never expected Intel to fail like that. Samsung still offers the best performance/power consumption/$

I guess that skull did the job, power of signs )

p.s. this site has level editing in non-forum mode(the one you see and use by default)

Watch the language. - G -

mamasan2000 I don't see why the Intel SSD is any good. It's midpack at best at everything. Even my cheap Sandisk is better and it was the cheapest SSD I could get around here (besides Kingston).

unityole How is Samsung the best? http://www.tweaktown.com/blogs/Chris_Ramseyer/58/real-world-ssd-performance-why-time-matters-when-testing/index.html Look at the chart for the SanDisk and Toshiba SSDs and see the performance for yourself. The EVO is doing well, but that's only because of the SLC flash helping it.

eriko All this Samsung love here... I have two brand new 840 EVO 250GB drives, and they are garbage. In fact, they are so poor that I had to separate all my files, break the RAID, and set up two individual volumes, so as to have TRIM enabled and Magician running; otherwise, terrible read and (especially) write performance resulted. I did verify they were genuine drives, too. As soon as you begin to fill up these 250GB EVOs, performance falls off a cliff. I'm now not a believer in TLC, and wish I had waited to get the Pros (not available in this part of the world), as I hear much better things about them. But I've had my fill of reading reviews on consumer drives. I'm going to California in a week or so, so I will either get 2 x 400GB S3700s or a single 800GB S3700 (and to hell with RAID). Enterprise drives are the bomb, and don't forget that. Lost way too much time and data now with 'consumer' drives... By the way, my X25-E 64GB is still going strong without so much as a hiccup. Not even a burp... If they made a 640GB X25-E, I think I'd suck their, ok, I won't say that but you get the idea.

zzzaac Just curious: at this speed, would you actually be able to tell that it is faster, or only through benchmarks? This SSD is quite expensive at my local parts shop.