Nvidia GeForce RTX 2080 Ti Founders Edition Review: A Titan V Killer


Temperatures And Clock Rates

While we don't like that Nvidia's new thermal solution kicks waste heat back into your chassis, it's clearly a superior performer. Clock rate losses aren't nearly as dramatic as they were with the previous generation. And a cooler-running processor means GPU Boost frequencies could probably be quite a bit higher without issue.

Moreover, frequency differences between the open test setup and a closed case are negligible: just 15 MHz at the end of the run for both cards.

|  | GeForce RTX 2080 (Start/End) | GeForce RTX 2080 Ti (Start/End) |
| --- | --- | --- |
| Open Test Bench |  |  |
| GPU Temperature | 34 / 75 °C | 35 / 77 °C |
| GPU Clock Rate | 1905 / 1815 MHz | 1815 / 1665 MHz |
| Ambient Temperature | 22 °C | 22 °C |
| Closed Case |  |  |
| GPU Temperature | 35 / 75 °C | 35 / 80 °C |
| GPU Clock Rate | 1905 / 1800 MHz | 1815 / 1650 MHz |
| Ambient Temperature Inside Case | 25 °C | 43 °C |
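To make the clock-rate story concrete, here is a small Python sketch that works through the table's numbers: how far each card falls from its starting clock rate by the end of the run, and how much lower it finishes in the closed case than on the open bench. The values are copied from the table; the `clocks` dictionary and the `clock_loss` helper are just our own illustrative names.

```python
# Start/end GPU clock rates (MHz), copied from the table above.
clocks = {
    ("GeForce RTX 2080", "Open Test Bench"): (1905, 1815),
    ("GeForce RTX 2080", "Closed Case"): (1905, 1800),
    ("GeForce RTX 2080 Ti", "Open Test Bench"): (1815, 1665),
    ("GeForce RTX 2080 Ti", "Closed Case"): (1815, 1650),
}


def clock_loss(start_mhz: int, end_mhz: int) -> tuple[int, float]:
    """Return the absolute (MHz) and relative (%) drop from start to end clock."""
    drop = start_mhz - end_mhz
    return drop, 100.0 * drop / start_mhz


# Start-to-end clock loss for each card and test setup.
for (card, setup), (start, end) in clocks.items():
    drop, pct = clock_loss(start, end)
    print(f"{card:20} {setup:16} -{drop} MHz ({pct:.1f}%)")

# Gap between the open bench and the closed case in the final clock rate.
for card in ("GeForce RTX 2080", "GeForce RTX 2080 Ti"):
    gap = clocks[(card, "Open Test Bench")][1] - clocks[(card, "Closed Case")][1]
    print(f"{card}: closed case ends {gap} MHz lower")
```

Run as-is, it shows the RTX 2080 giving up roughly 5 percent of its starting clock rate (90 to 105 MHz) and the RTX 2080 Ti roughly 8 to 9 percent (150 to 165 MHz), with the same small open-versus-closed gap for both cards.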

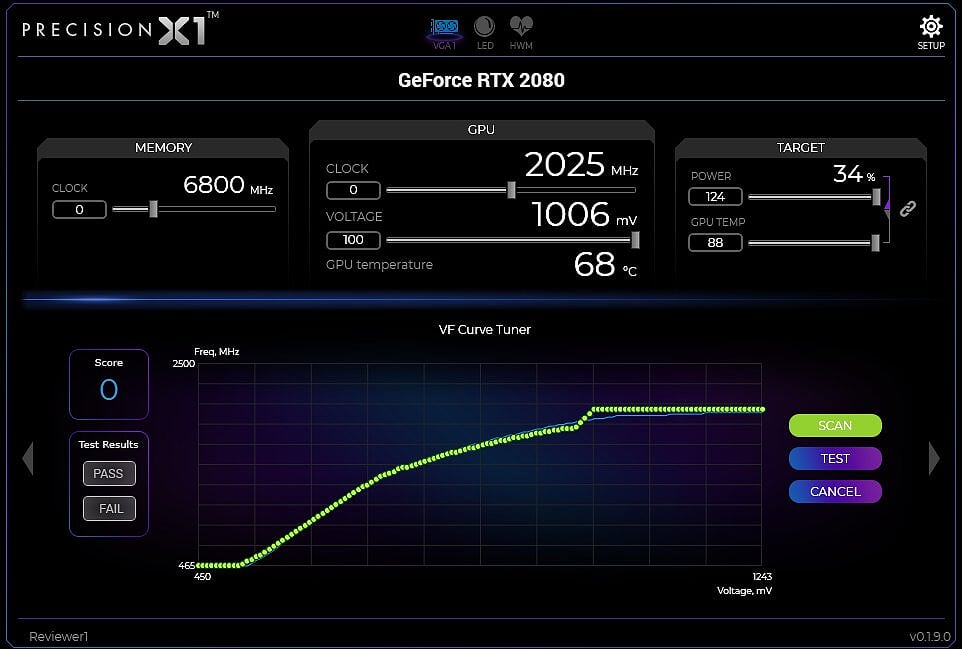

Overclocking

To the best of its ability, Nvidia is taking the trial and error out of overclocking with an API/DLL package that partners like EVGA and MSI can build into their utilities. Instead of an enthusiast going back and forth, testing one part of the frequency/voltage curve at a time and adjusting based on the stability of various workloads, Nvidia’s new Scanner runs an arithmetic-based routine in its own process, evaluating stability without user input. Although Nvidia says the routine usually encounters math errors before crashing, the fact that it’s contained in its own process means the algorithm can recover gracefully if a crash does occur. This gives the tuning software a chance to increase voltage and try the same frequency again. Once the Scanner hits its maximum voltage setting and encounters one last failure, it calculates a new frequency/voltage curve based on the known-good results.
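The scanning loop Nvidia describes is easy to picture in code. What follows is a minimal Python sketch under our own assumptions: `simulated_stability_test`, `SimulatedCrash`, `scan`, and `build_curve` are hypothetical names, the GPU is a made-up model, and none of this is Nvidia's actual Scanner API/DLL. It only mirrors the described behavior: a failure (a math error or a contained crash) raises voltage and retries the same frequency, and a failure at maximum voltage ends the scan, after which the known-good points become a curve.

```python
"""Toy model of an automated frequency/voltage scan (hypothetical, not Nvidia's API)."""


class SimulatedCrash(Exception):
    """Stands in for a crash that the isolated test process survives."""


def simulated_stability_test(freq_mhz: int, voltage_mv: int) -> bool:
    """Hypothetical workload: passes at or below a voltage-dependent limit.

    Slightly over the limit it reports math errors (returns False);
    far over the limit it "crashes" (raises SimulatedCrash).
    """
    limit = 1600 + 2 * (voltage_mv - 800)  # made-up silicon quality model
    if freq_mhz > limit + 30:
        raise SimulatedCrash
    return freq_mhz <= limit


def scan(test, start_mhz=1500, step_mhz=15,
         min_mv=800, max_mv=1050, step_mv=25) -> dict[int, int]:
    """Return {voltage_mv: highest known-good frequency} for the scanned points."""
    known_good: dict[int, int] = {}
    freq, volt = start_mhz, min_mv
    while True:
        try:
            ok = test(freq, volt)
        except SimulatedCrash:
            ok = False  # crash was contained, so treat it like any other failure
        if ok:
            known_good[volt] = freq  # record the pass, then push frequency higher
            freq += step_mhz
        elif volt + step_mv <= max_mv:
            volt += step_mv  # more voltage, same frequency
        else:
            break  # one last failure at maximum voltage: stop scanning
    return known_good


def build_curve(known_good: dict[int, int]) -> dict[int, int]:
    """Make the curve non-decreasing: a higher voltage never gets a lower clock."""
    curve, best = {}, 0
    for volt in sorted(known_good):
        best = max(best, known_good[volt])
        curve[volt] = best
    return curve


if __name__ == "__main__":
    curve = build_curve(scan(simulated_stability_test))
    for volt, freq in curve.items():
        print(f"{volt} mV -> {freq} MHz")
```

In this toy run, the scan climbs through every voltage step and stops when 2115 MHz fails at the 1050 mV ceiling. The step sizes, voltage range, and the way the final curve is derived are our own guesses, not Nvidia's.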

This worked quite well for us in practice, though our results did land below what we achieved after an hour of manual tweaking. If you'd rather save time and let this one-click approach handle overclocking, we wouldn't dissuade you; the technology does what Nvidia says it's supposed to do.

Using a beta version of EVGA's Precision X1 utility, our GeForce RTX 2080 hit a stable 2025 MHz using Nvidia's new algorithm. Unfortunately, we believe that lackluster result is due to a low-quality GPU, not a fault with the Scanner package. Meanwhile, our GeForce RTX 2080 Ti stabilized at 1935 MHz after warming up on an open bench (and without any fan curve adjustment). In a closed case, it reached 1860 MHz.

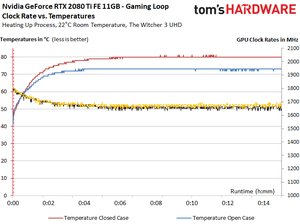

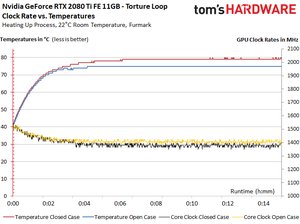

GeForce RTX 2080 Ti: Temperatures And Clock Rates

The following charts illustrate our findings over 15 minutes of warm-up time. Pay particular attention to the GPU temperature ramp on an open test bench and in a closed case. Despite the roughly eight-degree delta between the two configurations, clock rates hardly change between them.

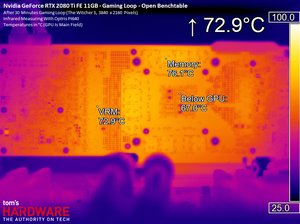

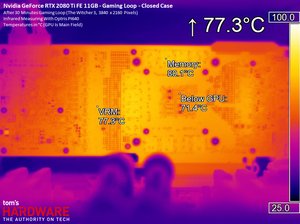

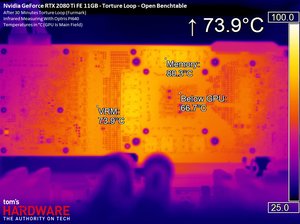

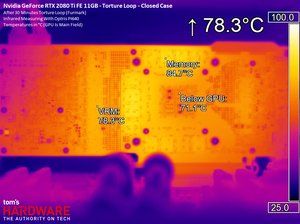

Next, we have high-resolution infrared pictures taken during our gaming loop and stress test, both on the open bench and inside a closed case. Large thermal deltas are apparent in both examples. However, neither workload is able to expose a problem with cooling under the GPU package. Nvidia's vapor chamber/heat sink combination again shows itself to be highly competent.


Infrared Measurements While Gaming

Infrared Measurements During Stress Test


A Stoner: Conclusion, let them hold onto these cards until they can lower the price to about $700.

pontiac1979: "Waste of time to write a review. 'Just buy it.'"

Oh yeah, god forbid Tom's does an in-depth review of the latest and greatest. Keyword: greatest. If you desire 4K gaming and have the funds available, why wouldn't you?

ubercake: I'm probably going to buy one... Though it's not my fault... I feel like the Russians are compelling me to do this by way of Facebook. I'm a victim in this whole Nvidia marketing scam. Don't judge.

That being said, I like high-quality, high-speed graphics performance. This may also be influencing my decision.

Great review!

AnimeMania: How much of the performance increase is due to using GDDR6 memory? I know this makes the cards perform better the higher the resolution is, but how does it affect other aspects of the video cards?

teknobug: "Just buy it," they said...

If you're in Canada, you might not want to pay the price of these.

chaosmassive: While I appreciate this very detailed and nicely written review, it's kinda redundant, because I think Avram already reviewed this card with his opinion in late August, with the conclusion being "just buy it."

wiyosaya: SMH, I don't understand the reasoning for comparing a $3k known non-gaming card with a $1.2k gaming card. Are there really gamers out there ignorant enough, other than those with deep pockets who want bragging rights, to purchase the $3k card for gaming when they know it is not meant for gaming? Or is this to differentiate Tom's from the other tech sites in order to inspire confidence in Tom's readers?

Personally, I would rather have seen the 2080 Ti compared against the 1080 Ti, even if Tom's comes to the same conclusions as the other tech websites.

The comparison in this article does not make me want to rush out and buy it because it is $1.8k cheaper than a non-gaming card. I really hate to say it, but the premise of this review, somewhat along the lines of "lookie hereee kiddies. Heree's a gaming card for $1.2k that beets a $3k non-gaming card," turned this into a TL;DR review for me.