
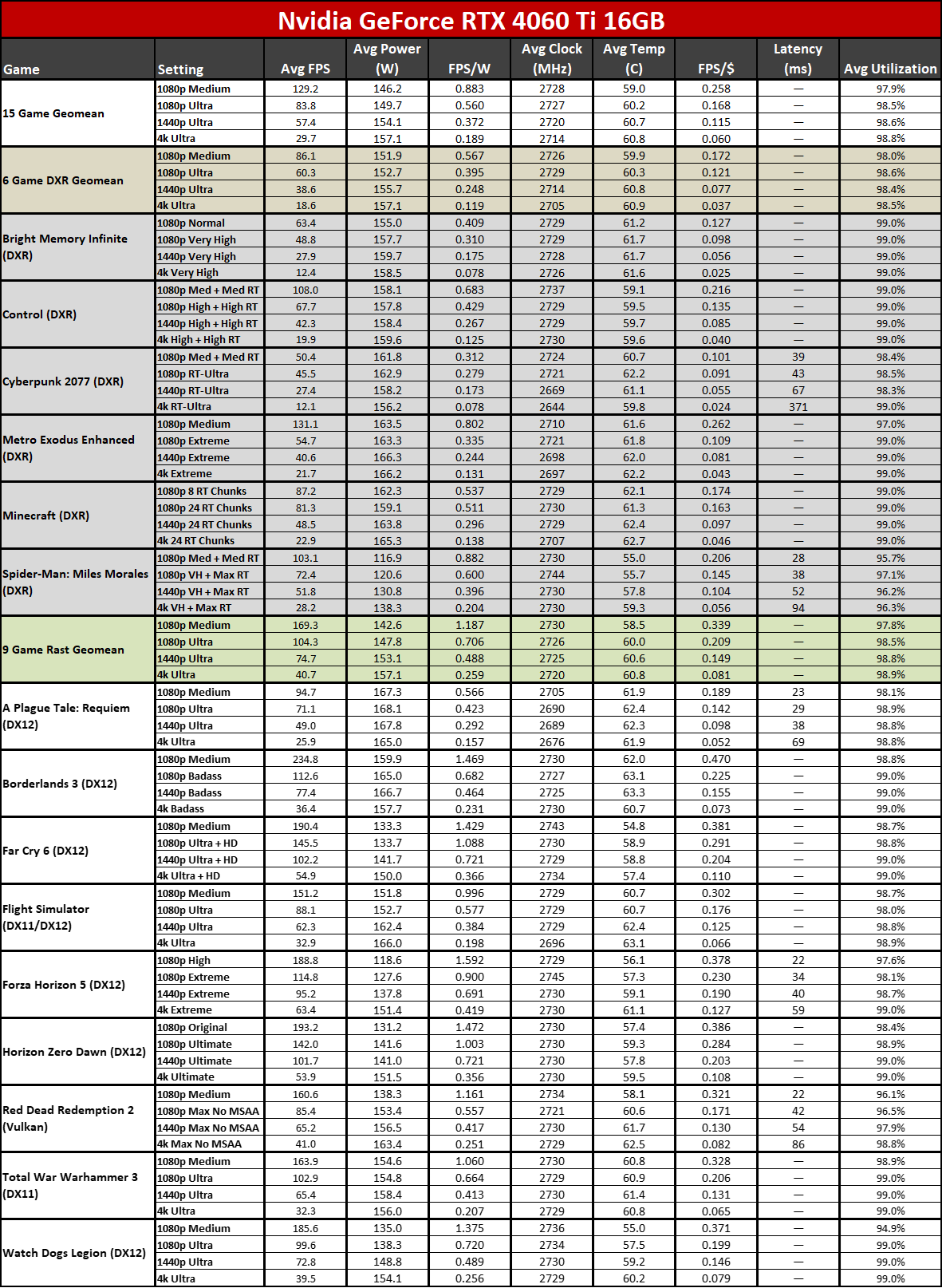

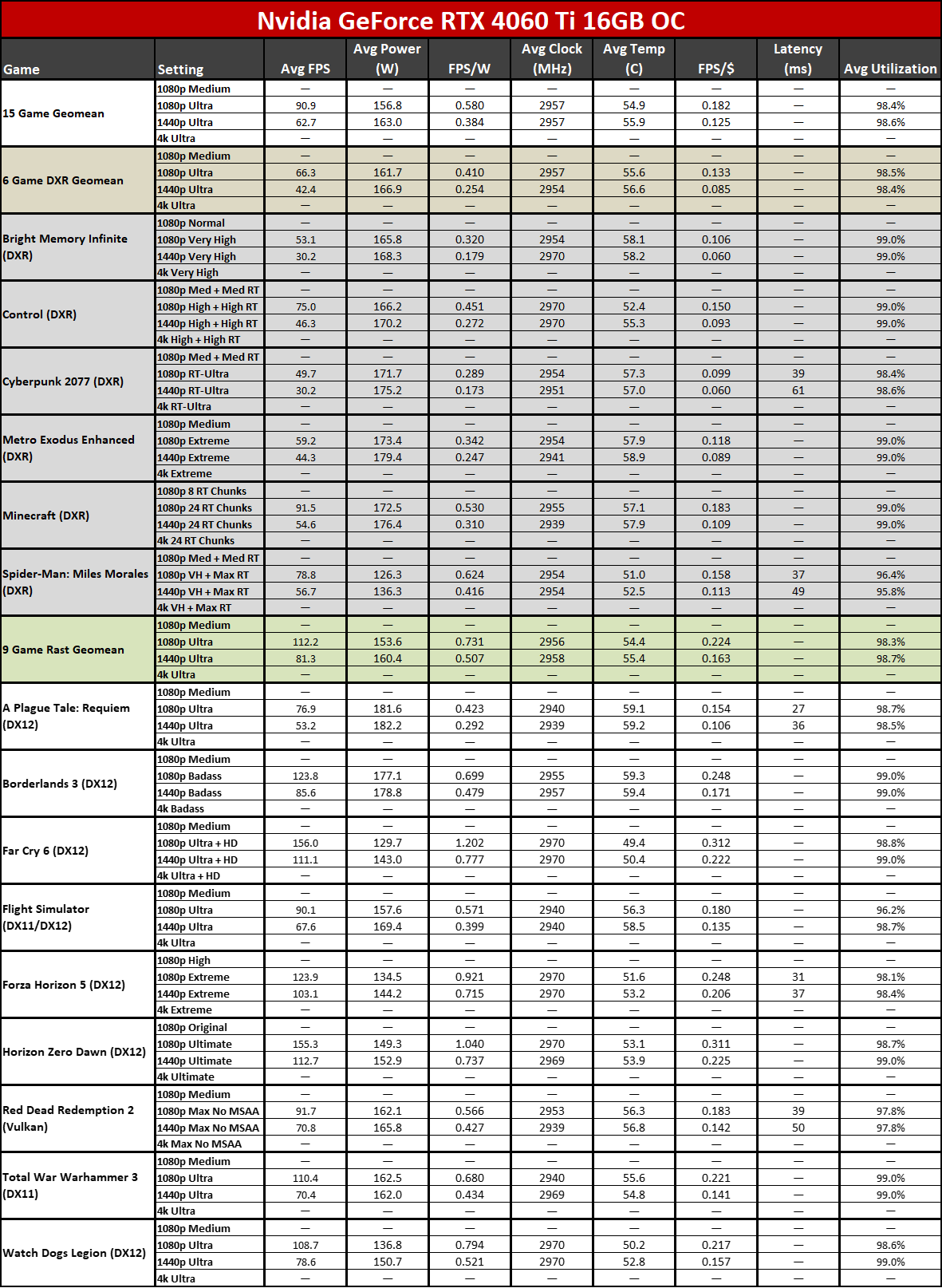

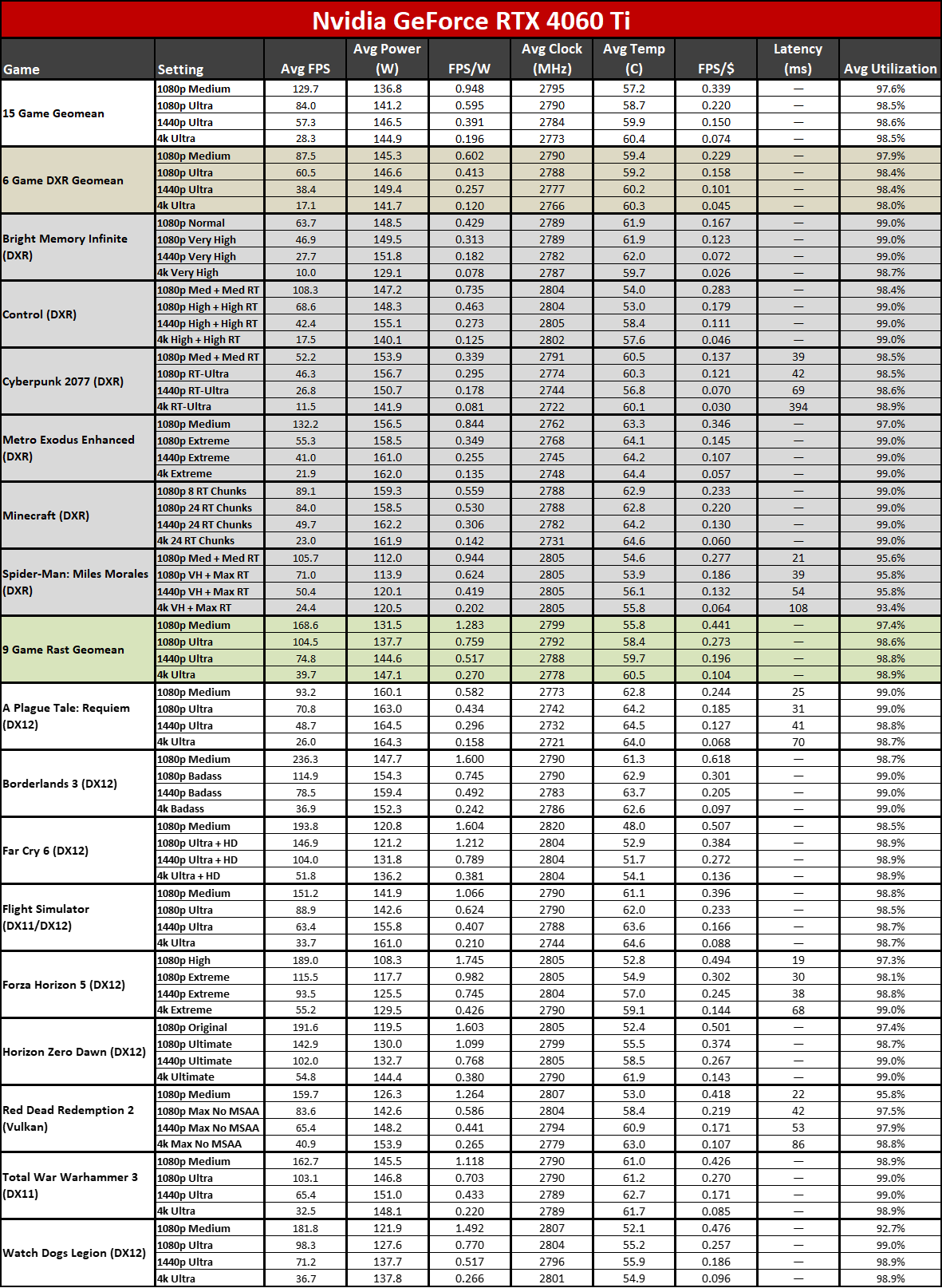

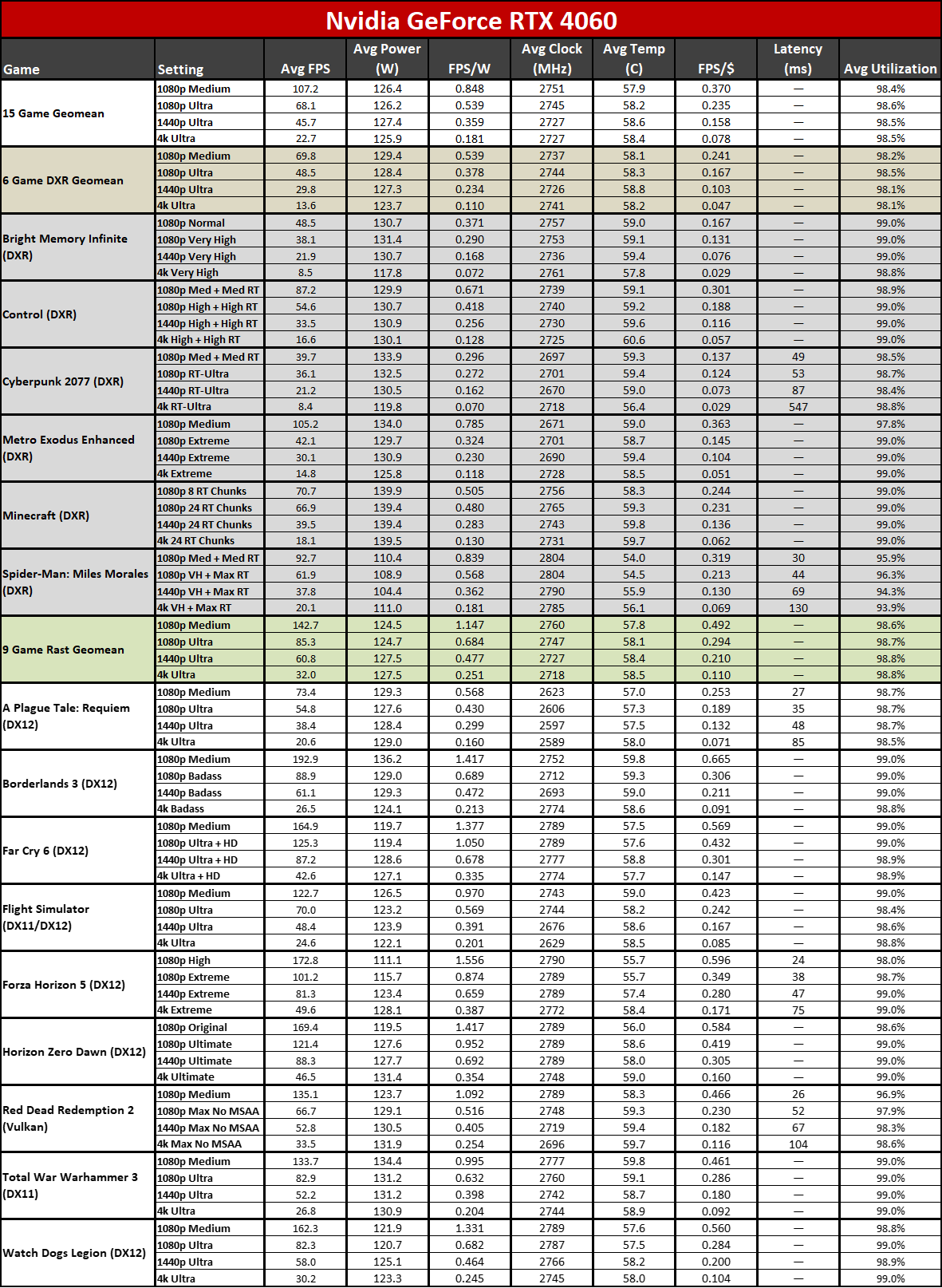

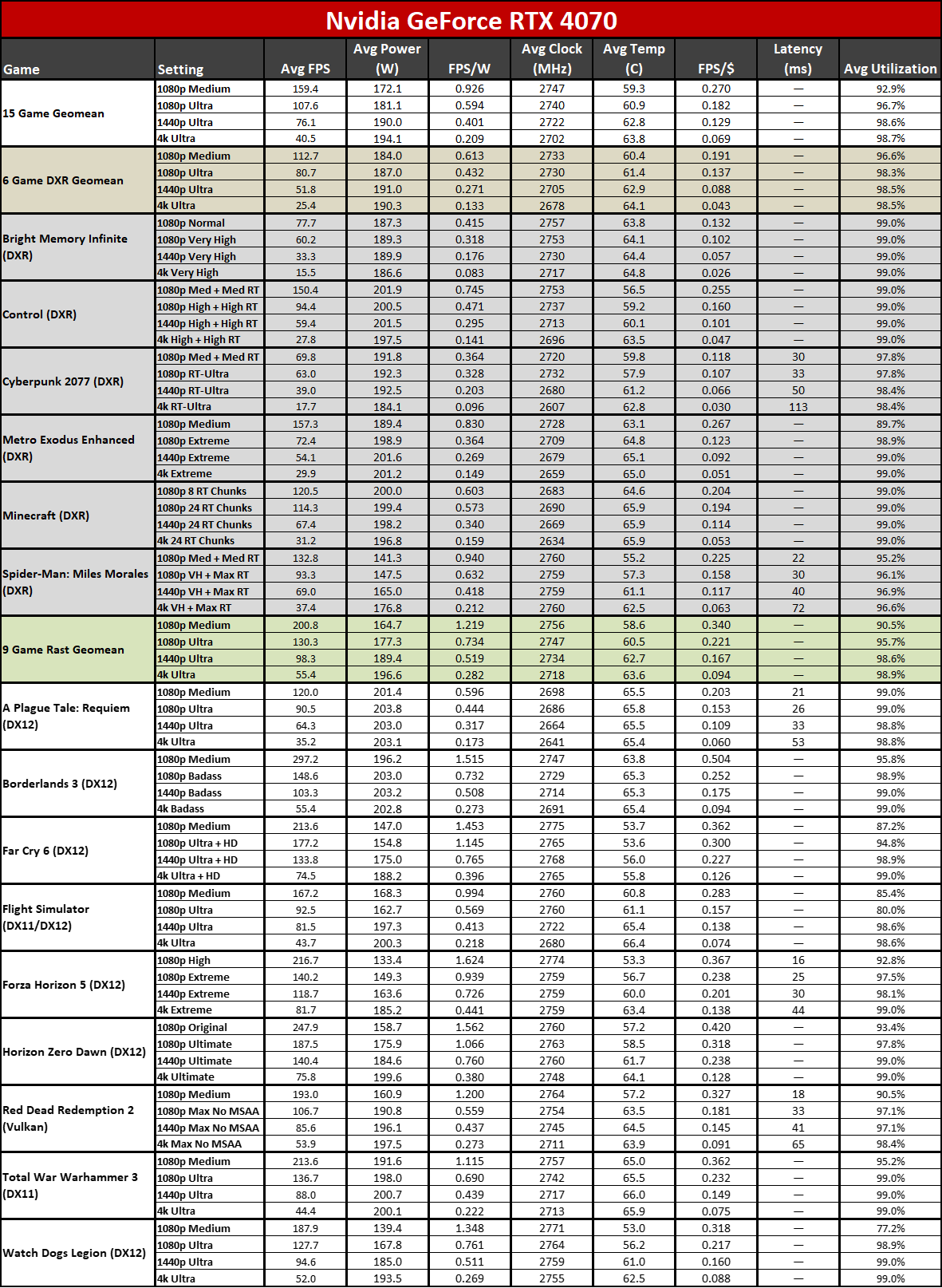

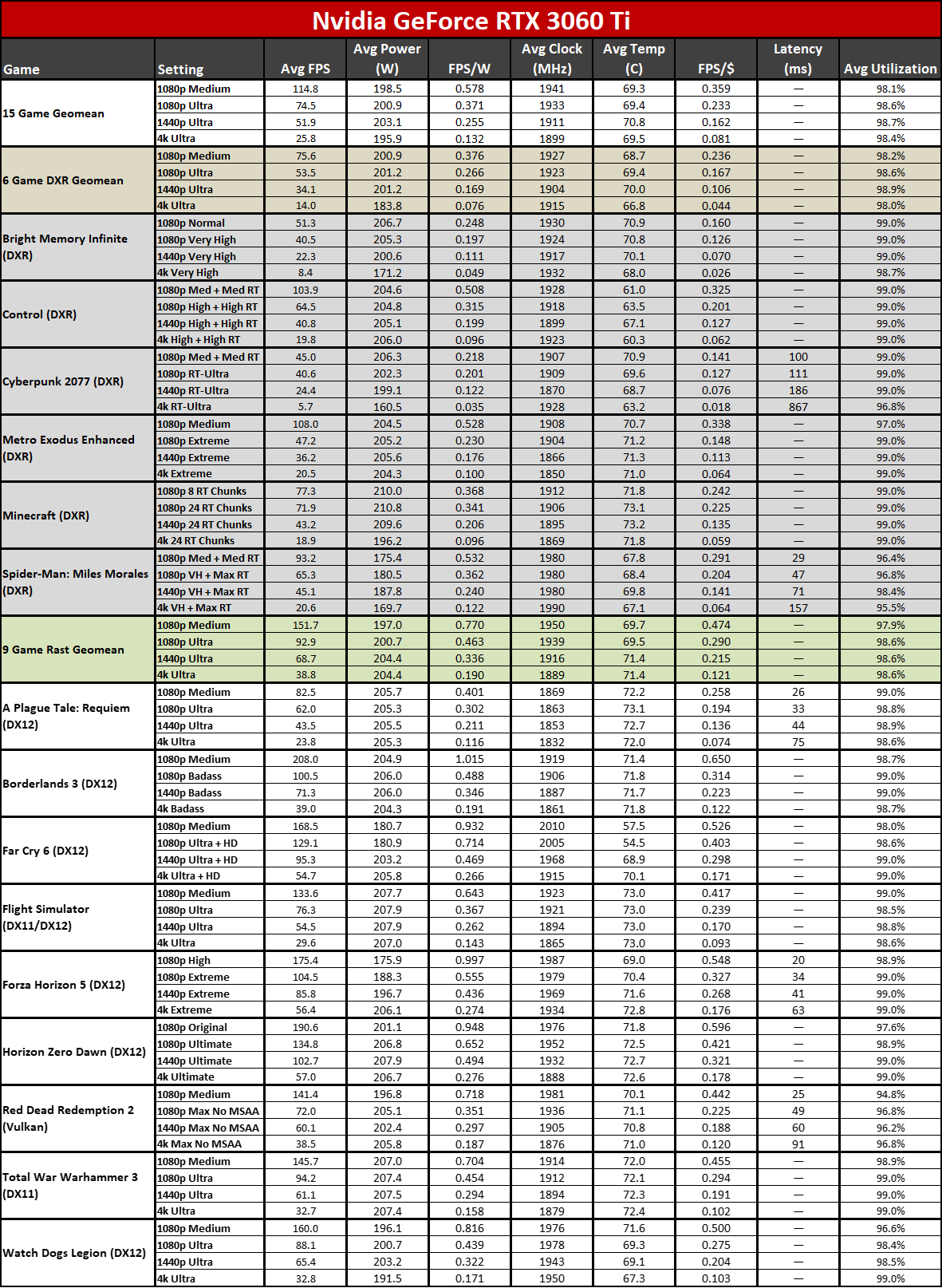

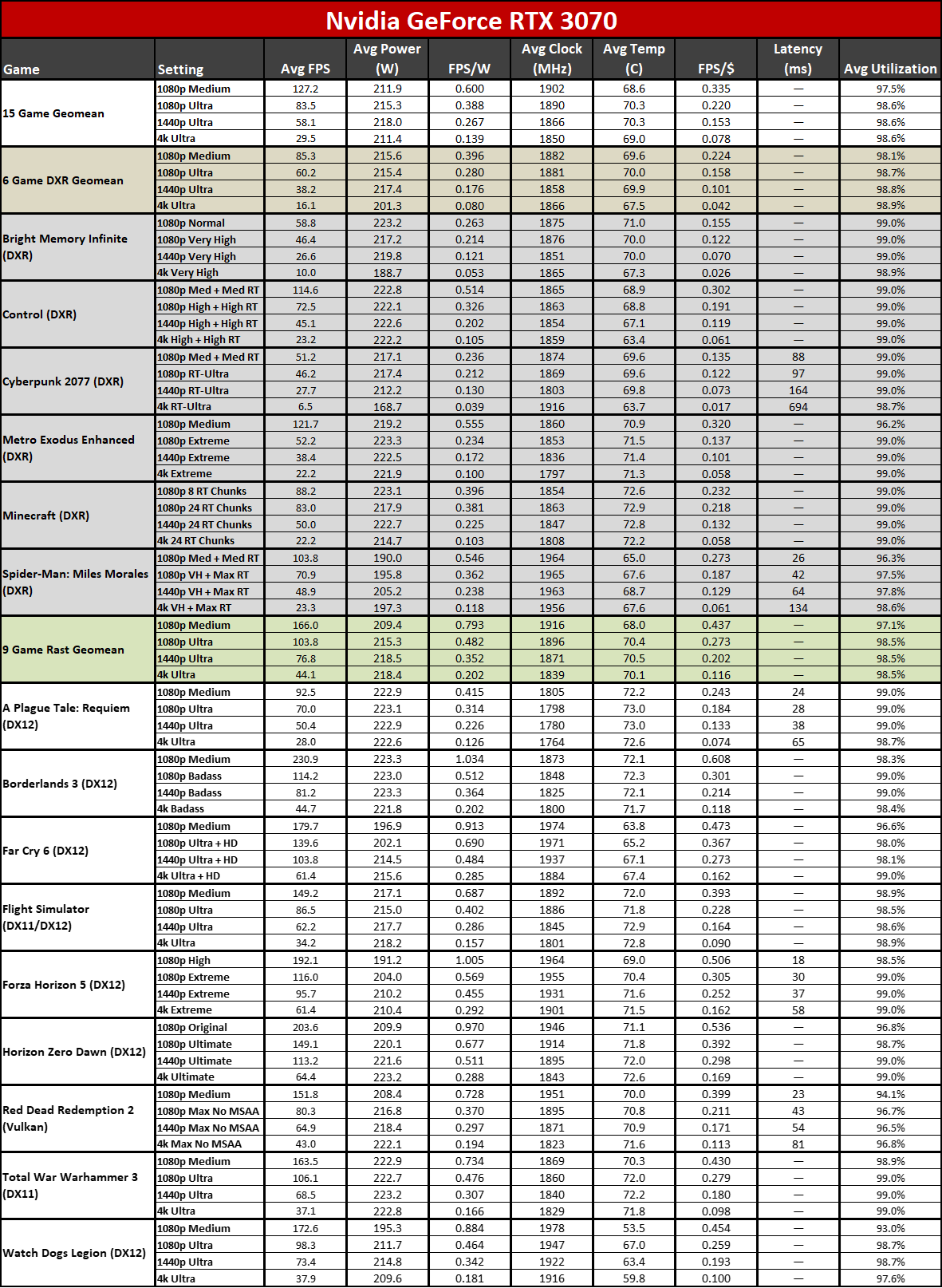

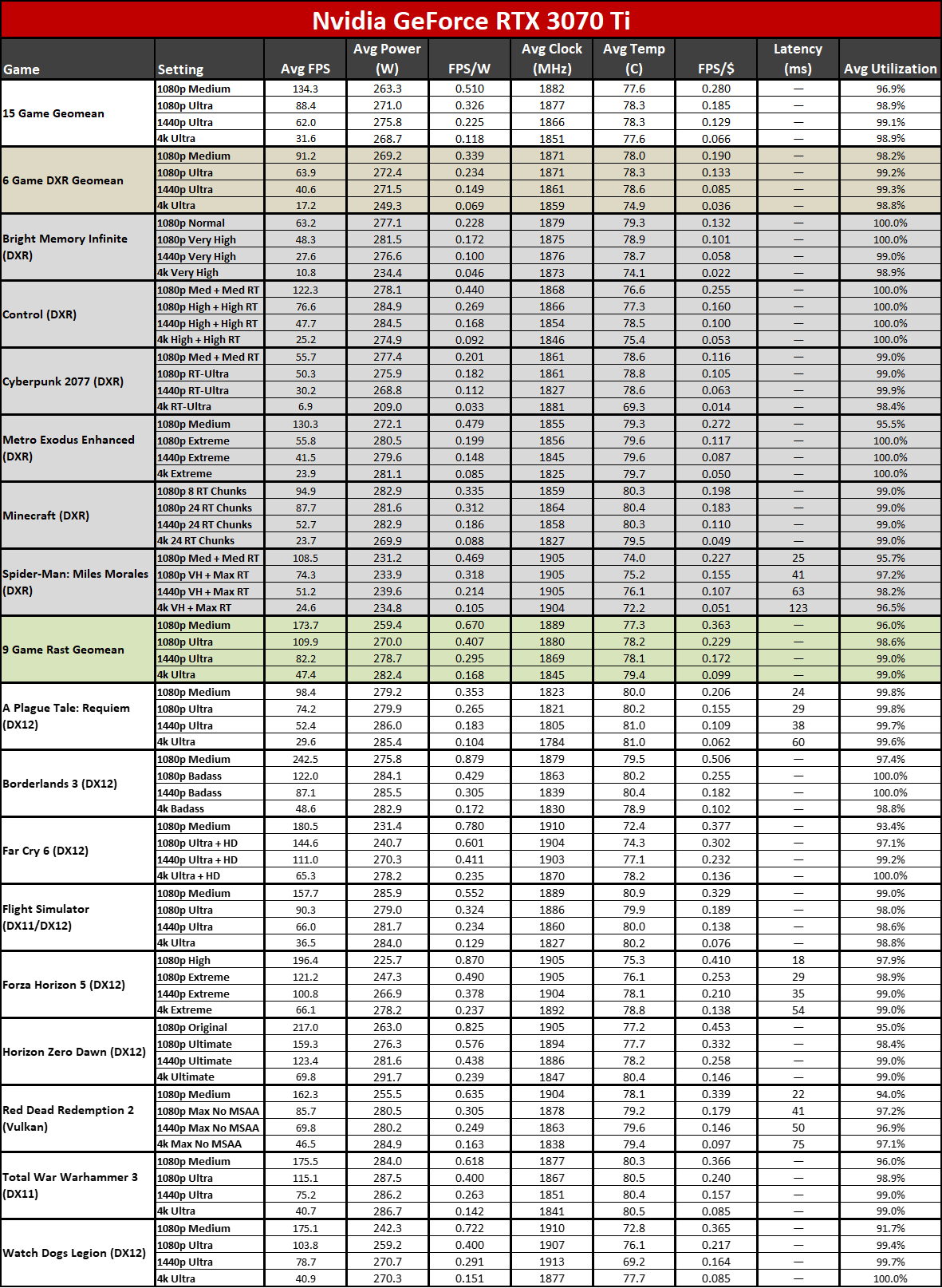

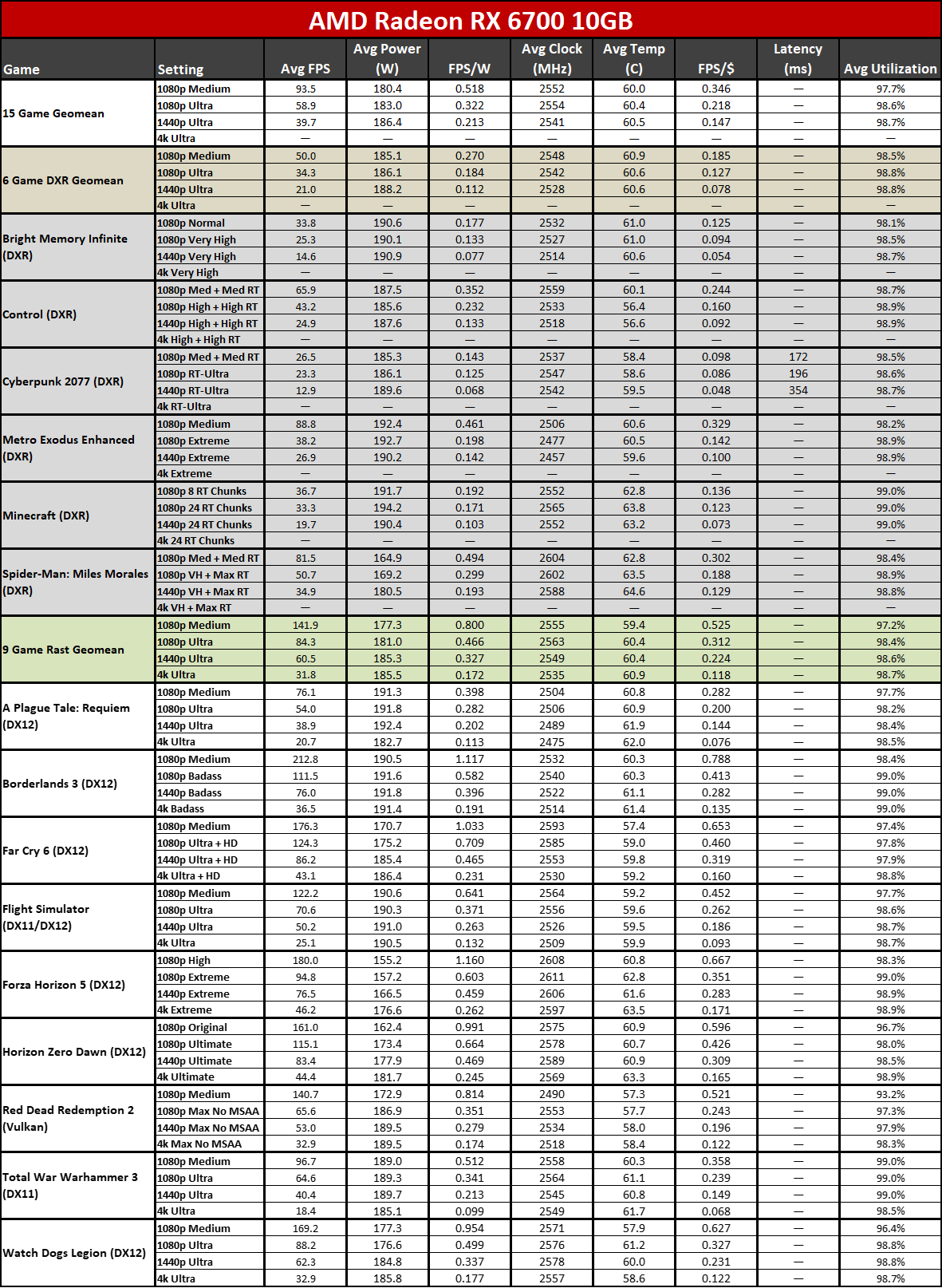

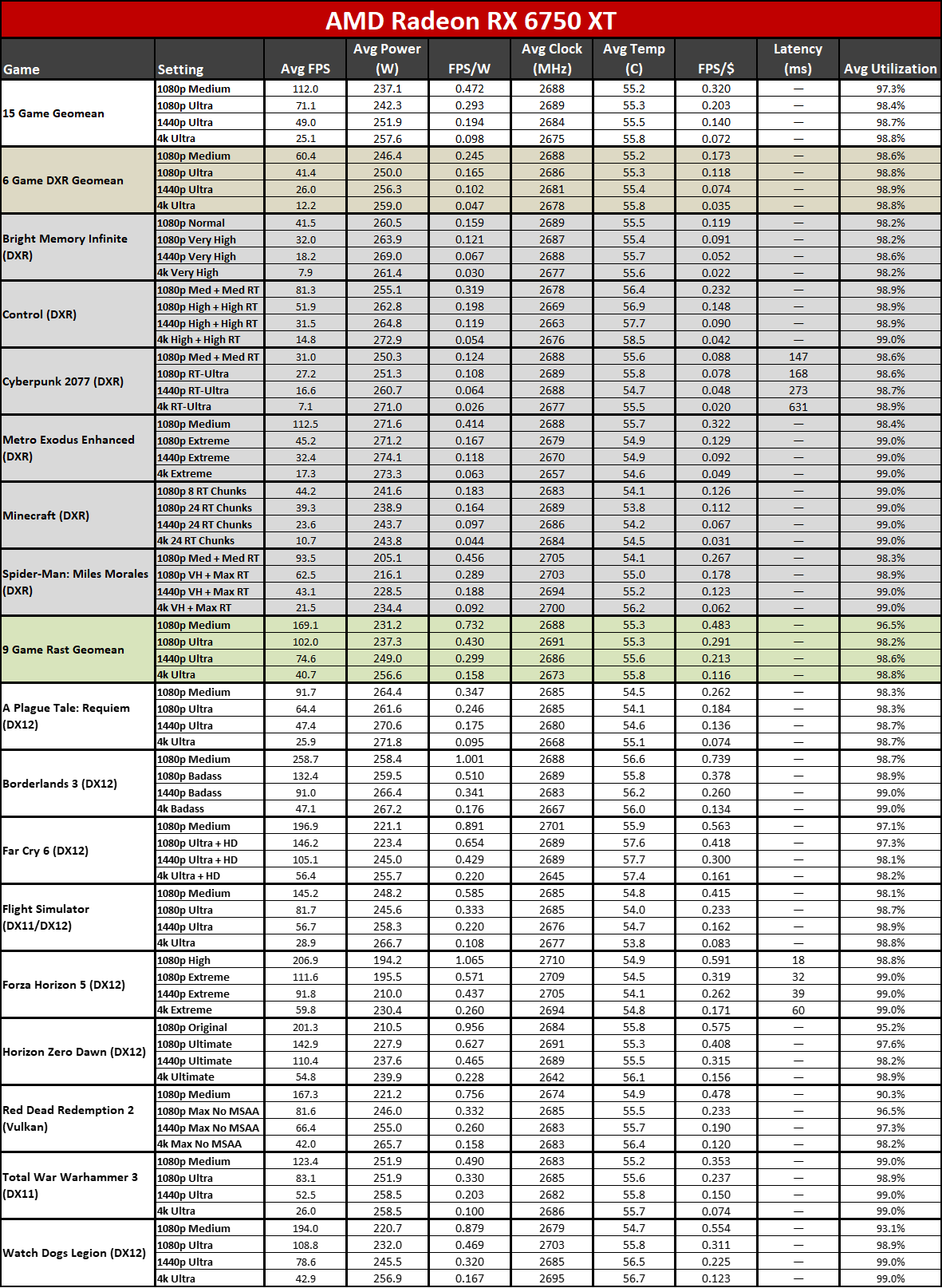

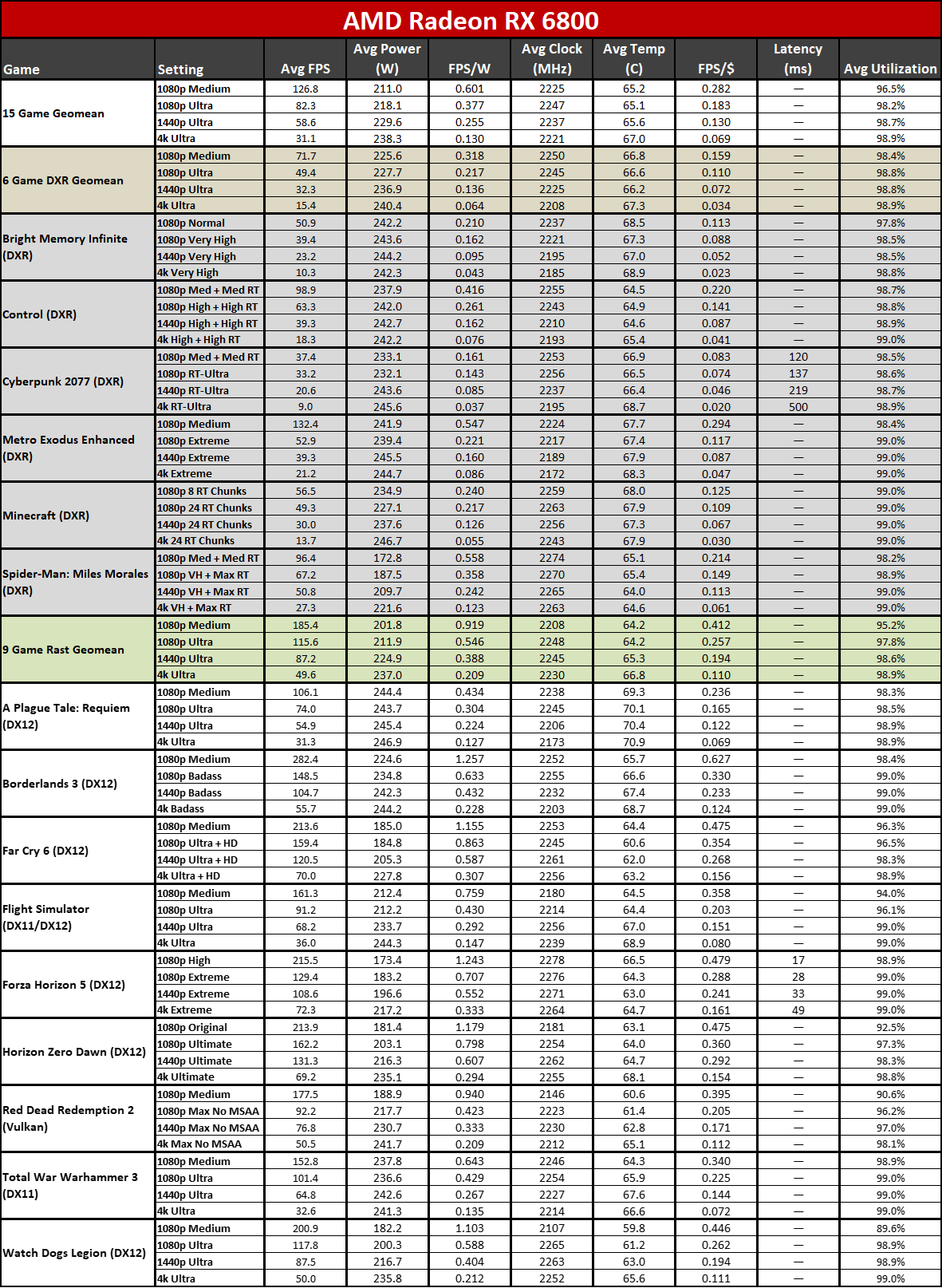

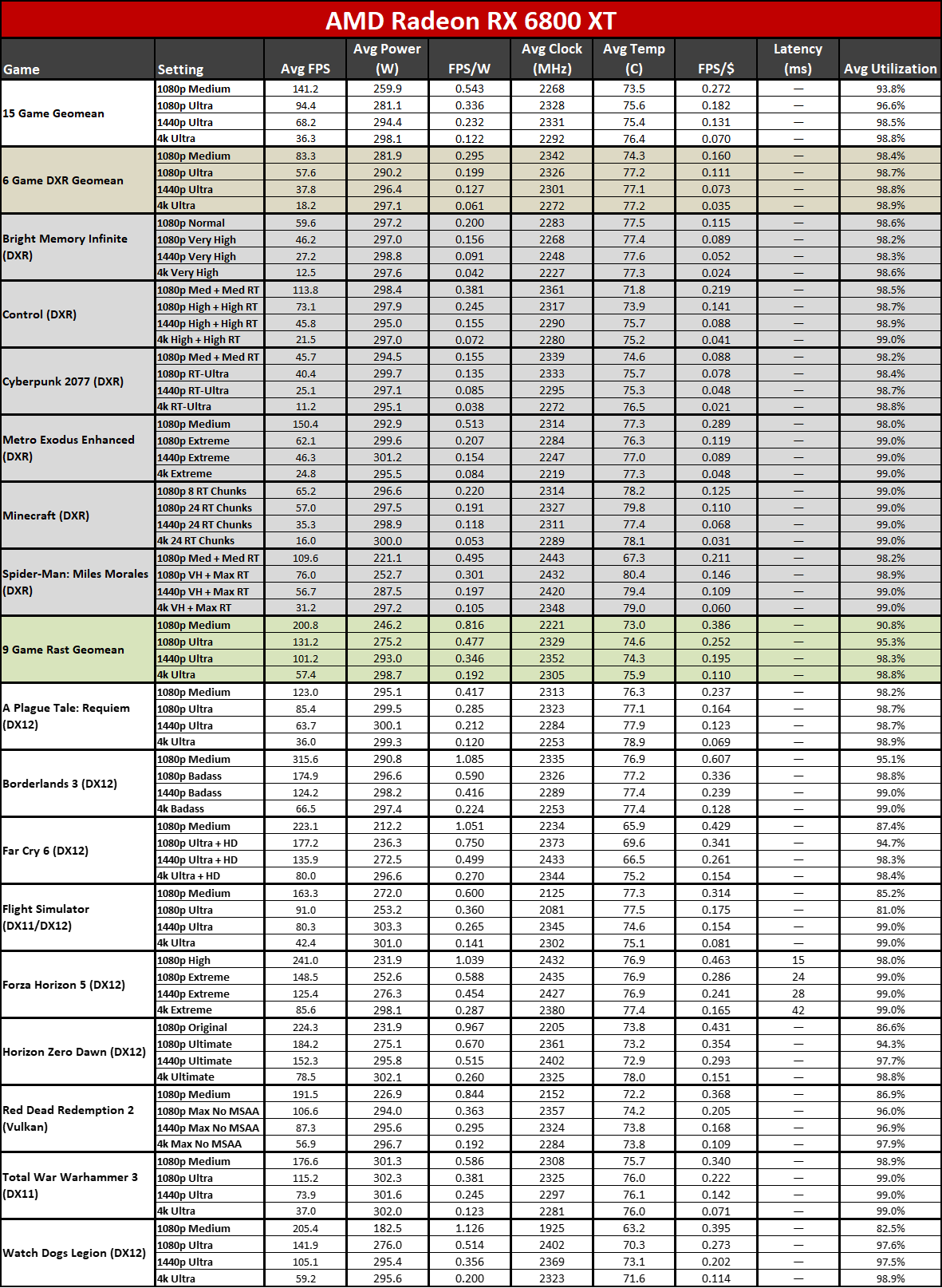

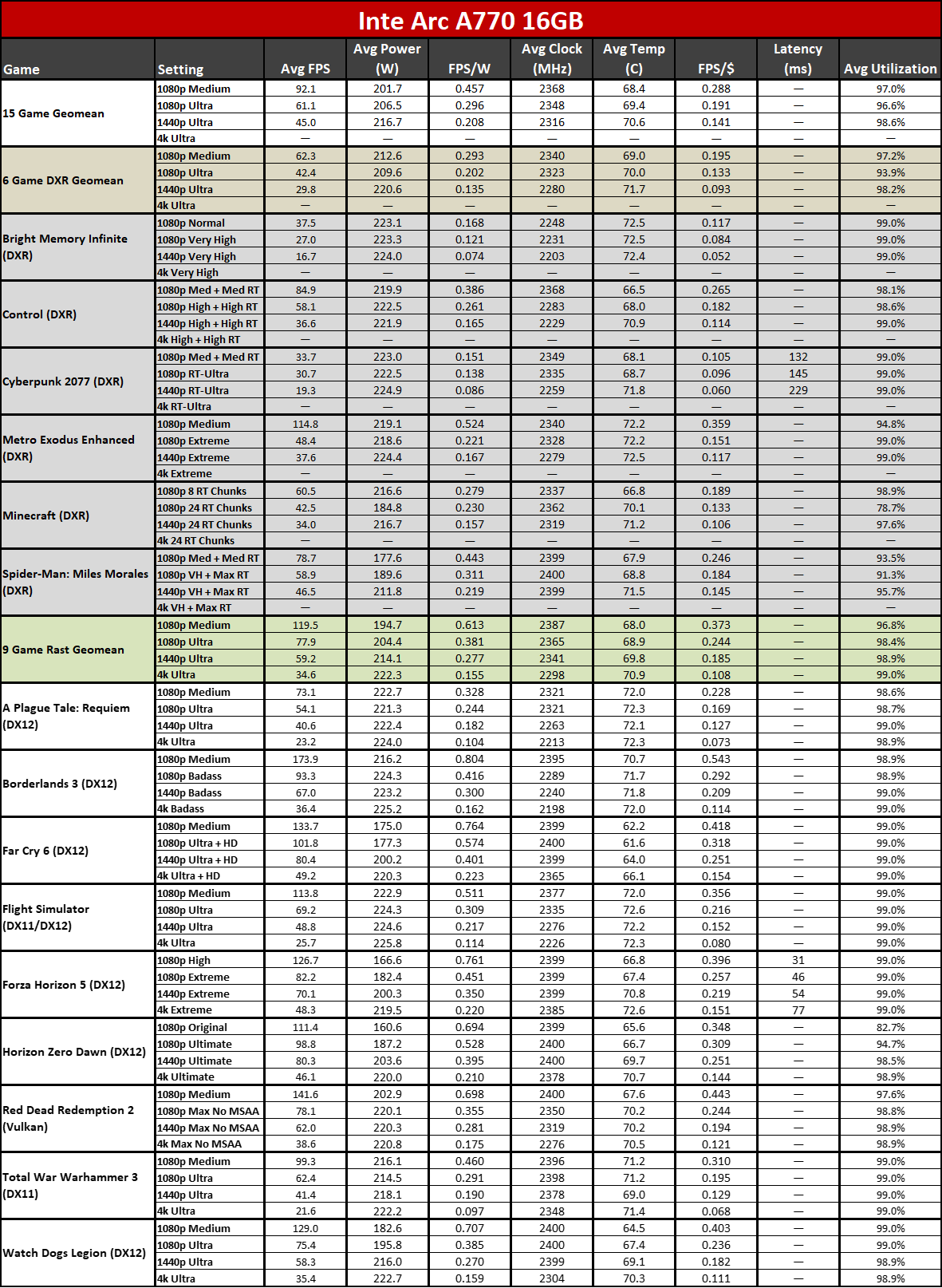

Our new test PC uses an Nvidia PCAT v2 device. We've switched to PCAT from the Powenetics hardware and software we used previously, as it gives us far more data without the need to run separate tests. PCAT with FrameView allows us to capture power, temperature, and clocks across our full gaming suite. The charts show the geometric mean across all 15 games, though we'll also have full tables showing the individual results since, as you'd expect, some games are more taxing than others.
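A geometric mean multiplies the per-game results and takes the n-th root, so one unusually demanding (or unusually light) game shifts the aggregate less than it would with a plain arithmetic mean. The sketch below shows that standard calculation. It isn't necessarily how the charts were generated, the helper name `geometric_mean` is hypothetical, and the sample wattages are made-up placeholders rather than measured data.

```python
import math


def geometric_mean(values: list[float]) -> float:
    """Geometric mean of strictly positive per-game results (FPS, watts, etc.)."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean needs a non-empty list of positive values")
    # Average the logarithms, then exponentiate. This is equivalent to the
    # n-th root of the product but avoids overflow with many large values.
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical per-game power readings in watts (illustrative only, not test data).
sample_watts = [120.0, 145.0, 150.0, 168.0]
print(f"Geometric mean: {geometric_mean(sample_watts):.1f} W")
```

Python 3.8 and later also ship `statistics.geometric_mean`, which computes the same quantity.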

If you're wondering: No, PCAT does not favor Nvidia GPUs in any measurable way. We measured power with our previous setup on the same workload and compared it with the PCAT results, and any differences were well within the margin of error (less than 1%).

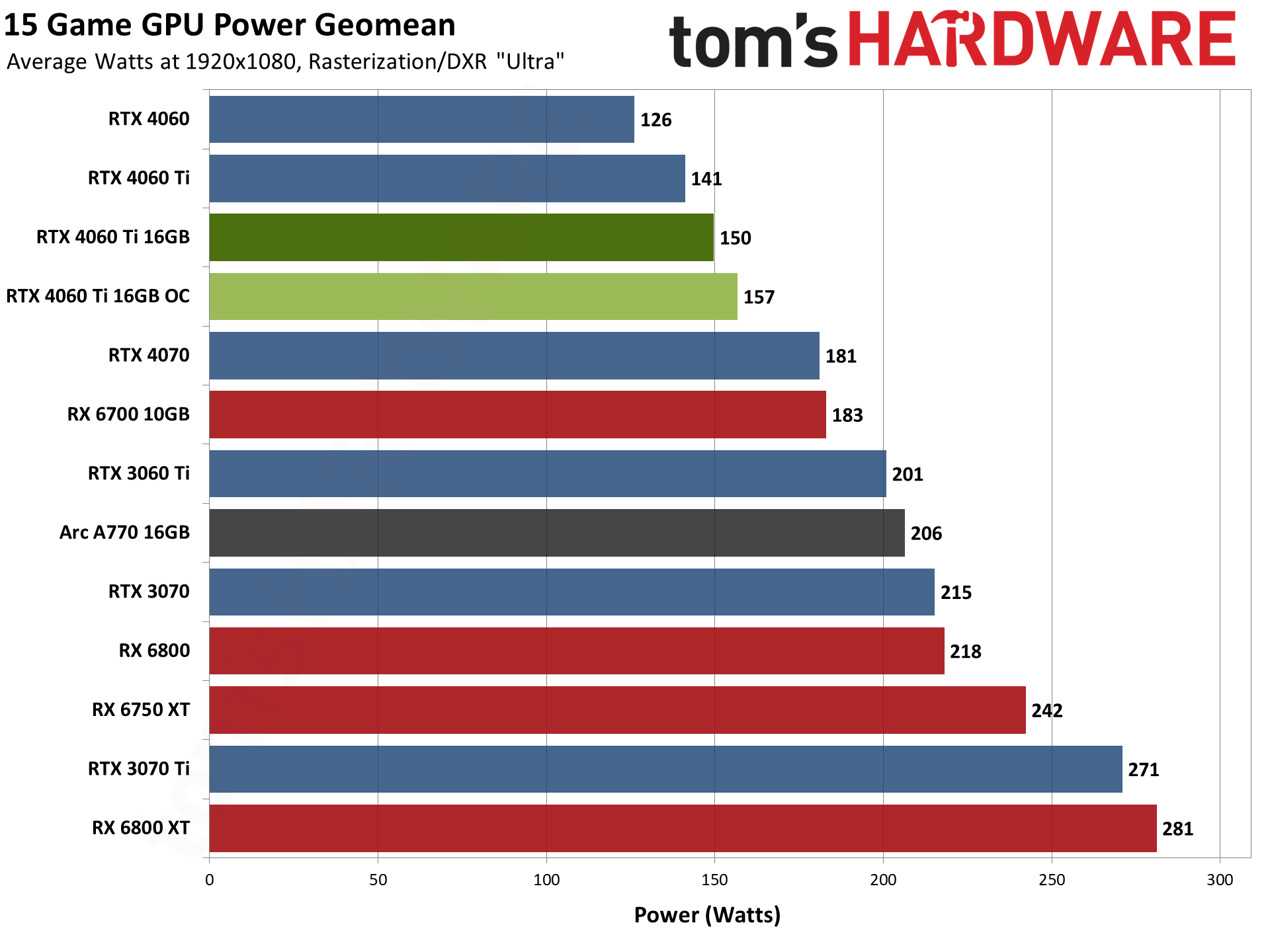

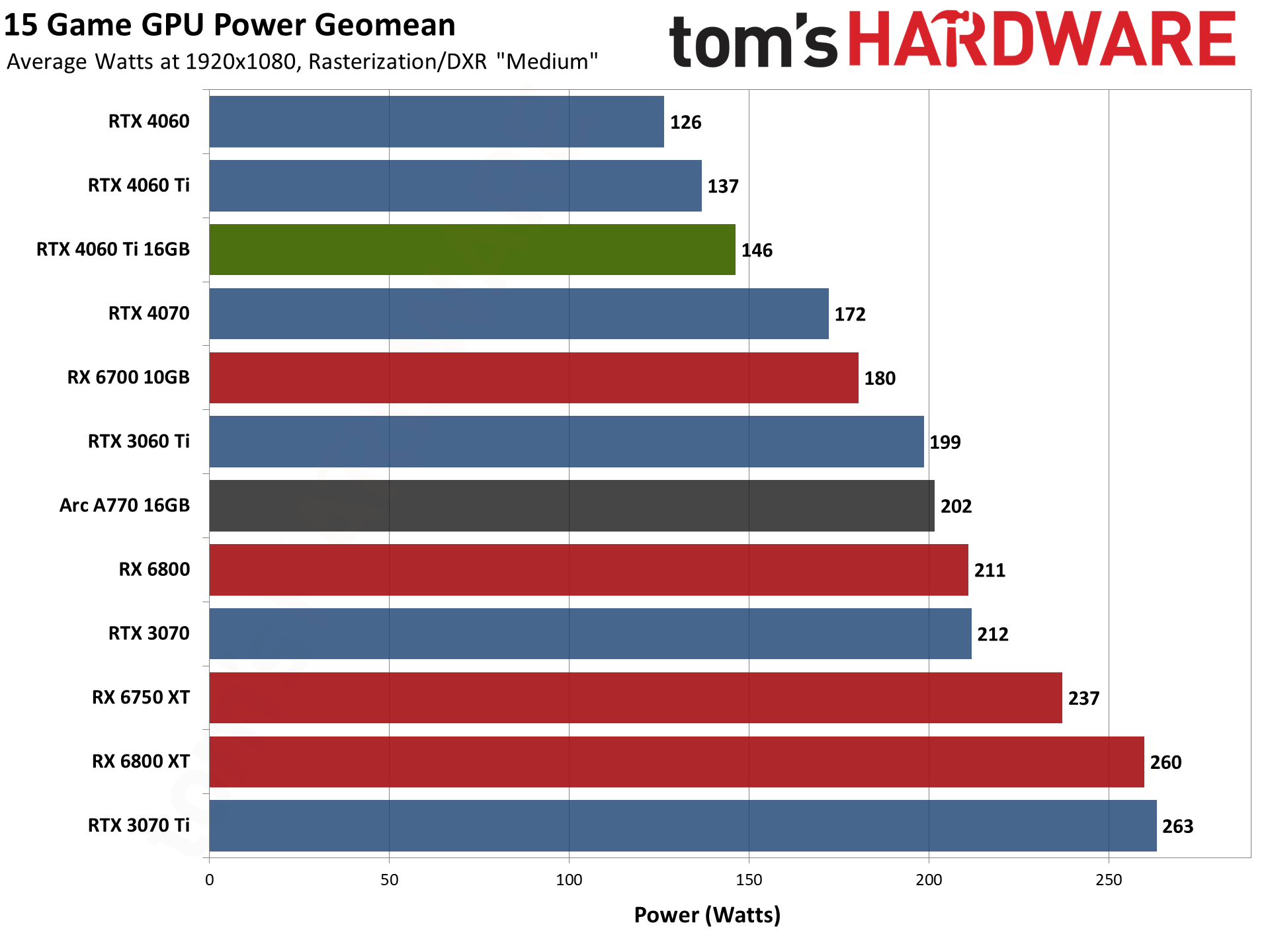

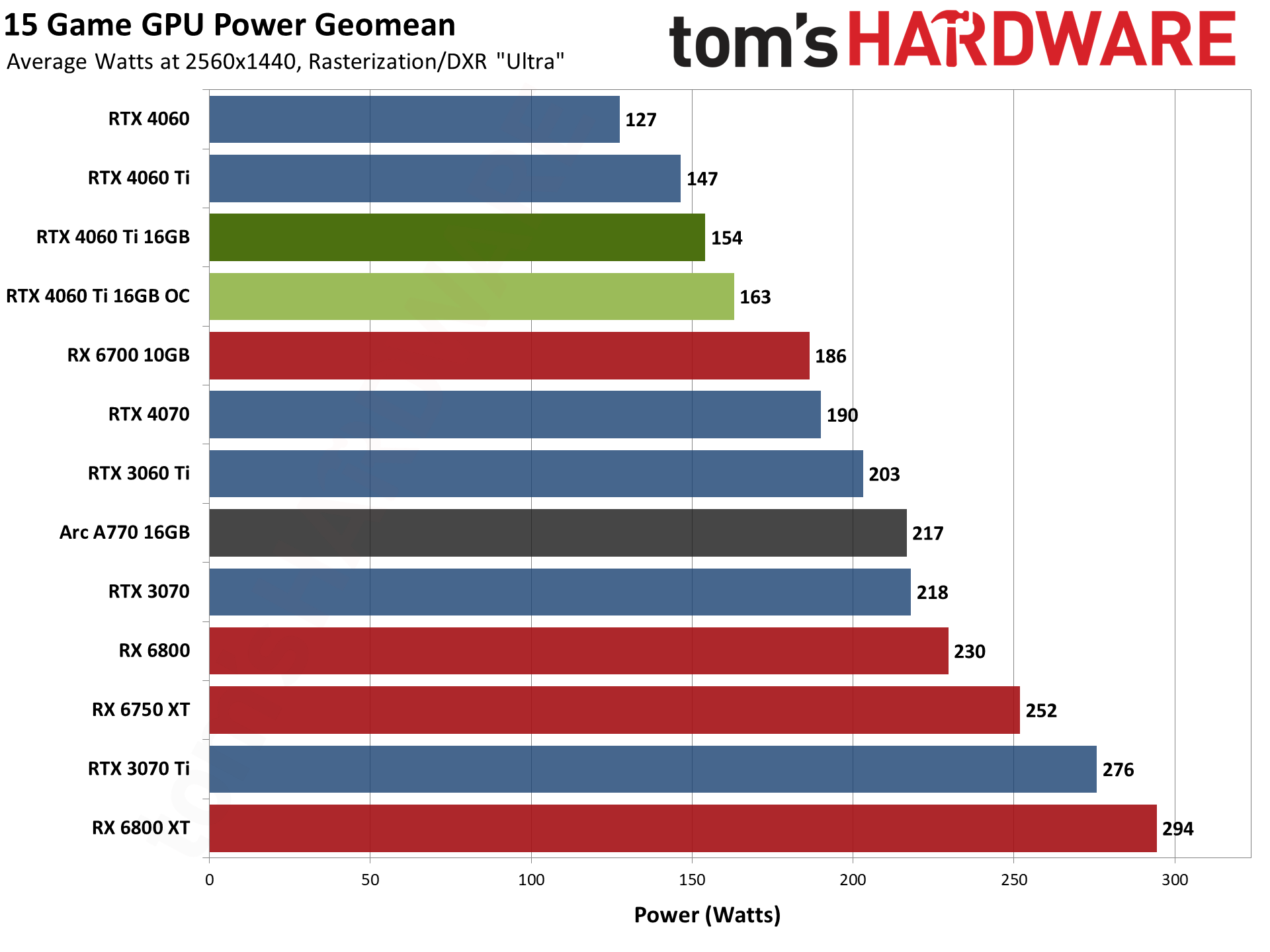

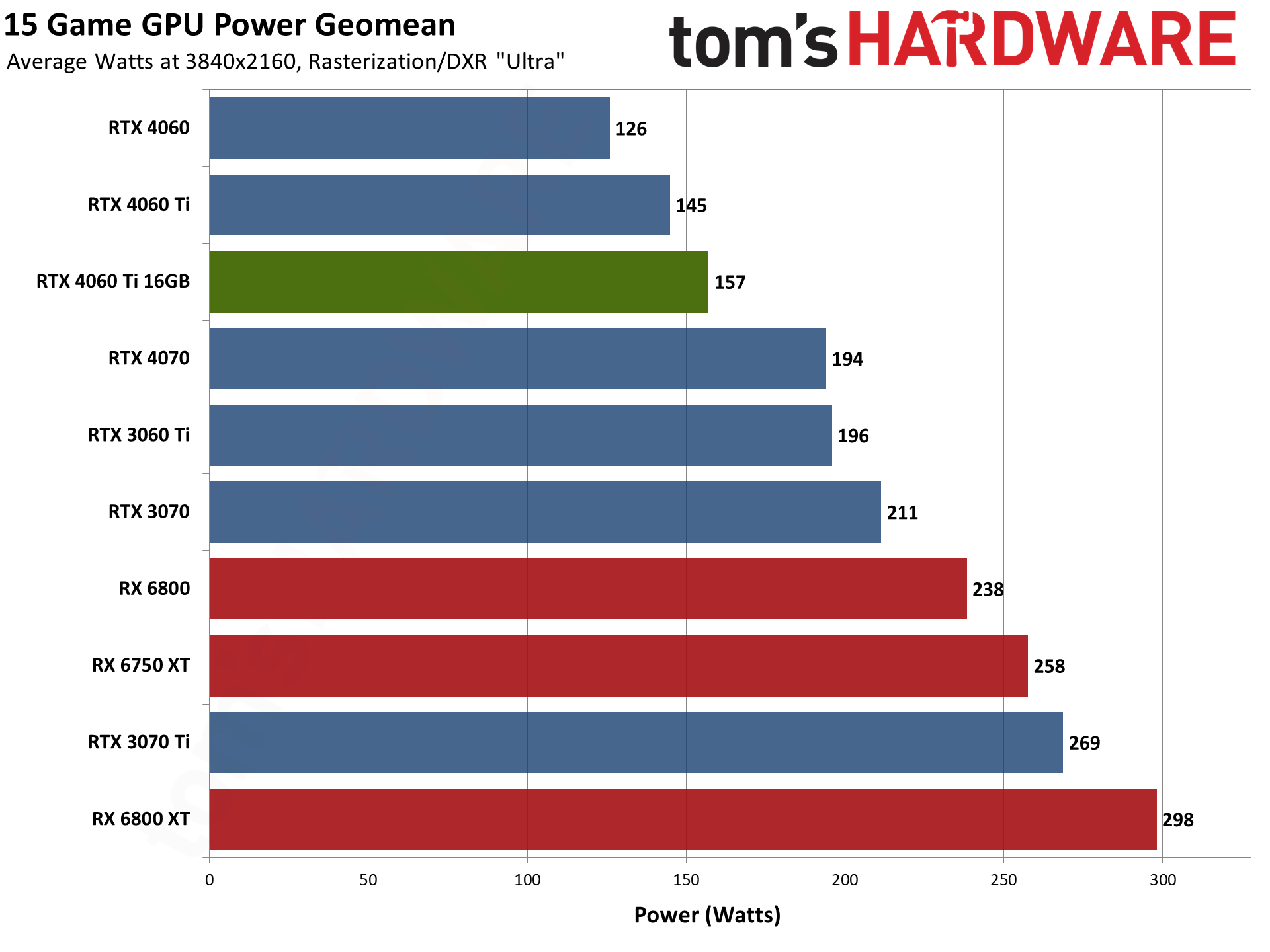

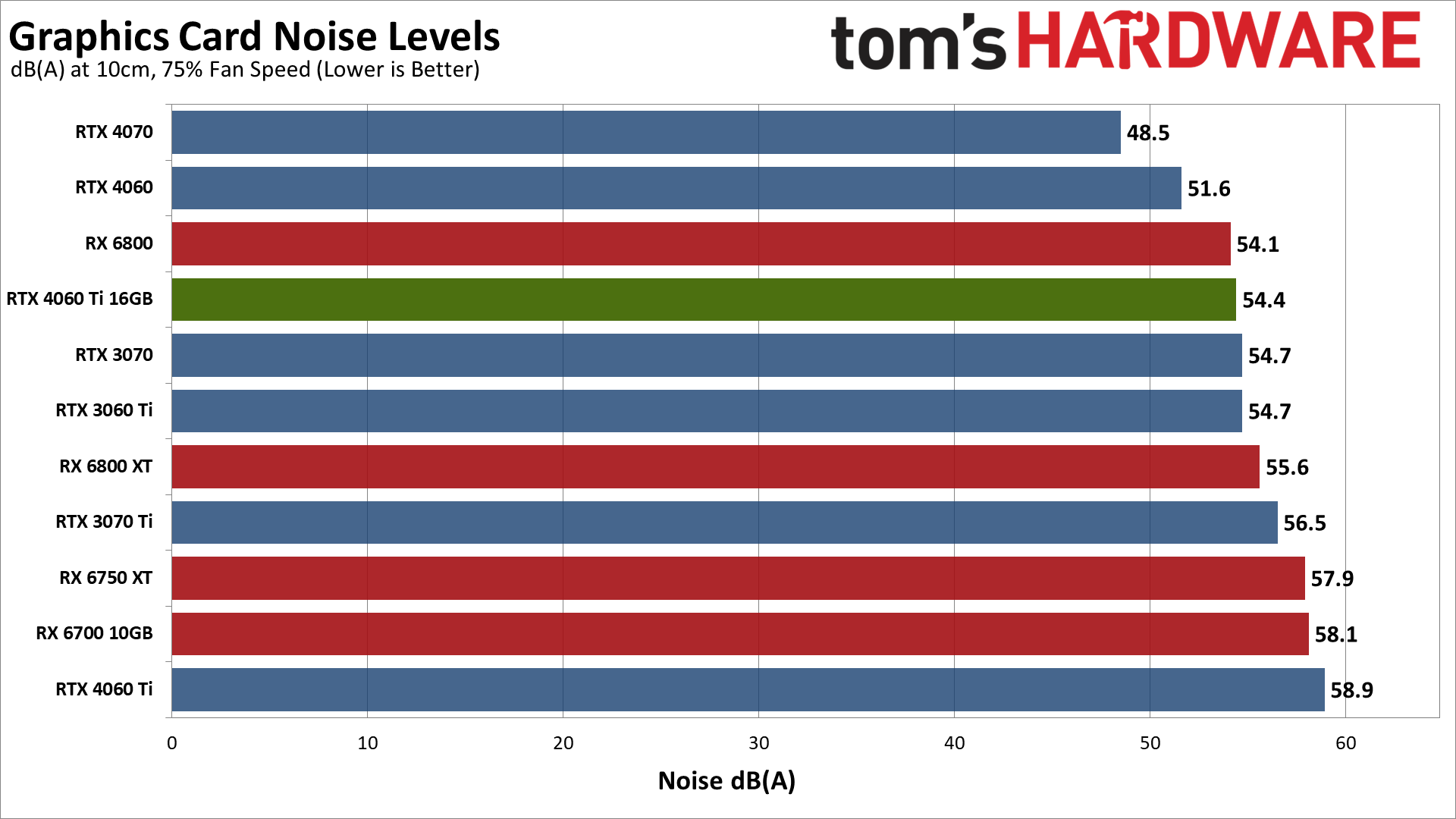

We have separate charts for 1080p ultra, 1080p medium, 1440p ultra, and 4K ultra. Besides the power testing, we also check noise levels using an SPL meter. This is done at a distance of 10cm in order to minimize noise pollution from other sources (like the CPU cooler).

Starting with power consumption, the RTX 4060 Ti 16GB and 8GB models both have a 160W TGP (Total Graphics Power) rating. However, on average, the 16GB card tends to consume about 10W more power — 9W at 1080p, 7W at 1440p, and 11W at 4K if you want to be specific. While it still fell under the 160W mark in aggregate, being closer to that limit likely restricts the amount of boost clock headroom and would explain the frequent 1–3 percent drop in performance we measured in our various tests.

Using slightly more power than the 8GB card is expected behavior, and the overall standings in terms of efficiency clearly favor Nvidia's RTX 40-series over the competition. The RX 6800 XT might have a similar retail price now, while delivering superior performance (outside of a few ray tracing games), but it also consumes 130W more power on average. That could potentially sway your purchasing decision, as the added power also means you need a bigger PSU.

The cost of the added power use in electrical bills can also add up. If you play games a lot, like 40 hours a week, that 130W difference equates to about 270 kWh per year. At around $0.10 per kWh, which some people pay, that's $27 per year. Other areas may pay as much as $0.30 per kWh ($81 per year), and in parts of Europe, people could pay $0.50 per kWh. At that rate, an extra $135 in power per year could justify stepping up to an RTX 4070 instead of an RX 6800 XT, as an example. But at the lower end of the scale, and if you only average 20 hours per week, it's not critical: $13.50 per year at $0.10 per kWh.
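The arithmetic behind those figures is simple enough to script. This minimal sketch assumes a constant 130W difference for every hour of play and 52 weeks of gaming per year (the assumption that turns 40 hours a week into roughly 270 kWh). `annual_energy_cost` is a hypothetical helper, and the prices are the example rates from the paragraph above.

```python
def annual_energy_cost(extra_watts: float, hours_per_week: float,
                       price_per_kwh: float, weeks_per_year: int = 52) -> tuple[float, float]:
    """Return (extra kWh per year, extra cost per year) for a constant power delta.

    Assumes the power difference applies for every gaming hour, every week.
    """
    kwh_per_year = extra_watts / 1000 * hours_per_week * weeks_per_year
    return kwh_per_year, kwh_per_year * price_per_kwh


# Scenarios from the text: 130W extra at 40 or 20 hours of gaming per week.
for hours, price in [(40, 0.10), (40, 0.30), (40, 0.50), (20, 0.10)]:
    kwh, cost = annual_energy_cost(130, hours, price)
    print(f"{hours} h/week at ${price:.2f}/kWh: {kwh:.0f} kWh, ${cost:.2f} per year")
```

With those inputs, the 40-hour cases come to about 270 kWh and $27.04, $81.12, and $135.20 per year, while the 20-hour case at $0.10 per kWh lands at $13.52, in line with the rounded numbers above. Actual savings will vary, since power draw isn't constant from game to game.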

As you can see in the tables further down the page, power use while gaming on the RTX 4060 Ti 16GB ranged from just under 120W (1080p in Spider-Man: Miles Morales) to a high of just under 170W (A Plague Tale: Requiem). The overclocking results are also interesting, in that we saw an average performance improvement of around 9%, while power use only increased by about 5%.

In terms of efficiency (FPS/W), the 4060 Ti 16GB looks pretty good, but the 8GB card does better as it was typically slightly faster while using less power. AMD's previous generation GPUs are, as expected, far behind in the efficiency metric.
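FPS/W is simply average frame rate divided by average board power, and the overclocking figures mentioned above fit the same metric: if performance rises by about 9% while power rises by about 5%, efficiency changes by 1.09 / 1.05 - 1, or roughly +3.8%. The helpers below are hypothetical names for that calculation, a sketch rather than the tooling used for these charts.

```python
def fps_per_watt(fps: float, watts: float) -> float:
    """Efficiency metric: frames per second delivered per watt of board power."""
    if watts <= 0:
        raise ValueError("power must be positive")
    return fps / watts


def relative_efficiency_change(perf_gain: float, power_gain: float) -> float:
    """Fractional change in FPS/W when performance and power rise by the given fractions.

    For example, perf_gain=0.09 and power_gain=0.05 gives (1.09 / 1.05) - 1.
    """
    return (1 + perf_gain) / (1 + power_gain) - 1


# Overclocking figures from the text: ~9% more performance for ~5% more power.
print(f"OC efficiency change: {relative_efficiency_change(0.09, 0.05):+.1%}")  # prints "+3.8%"
```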

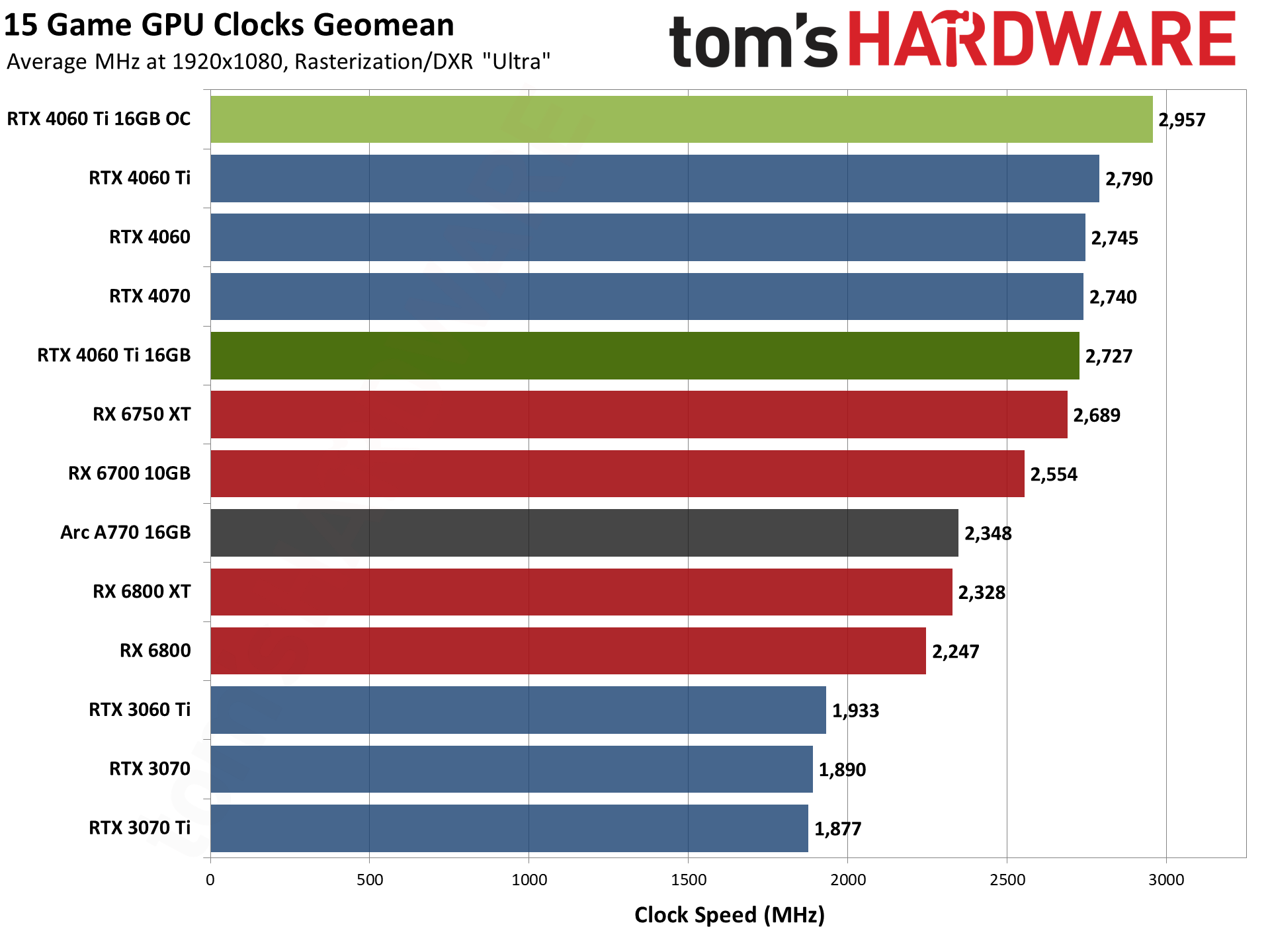

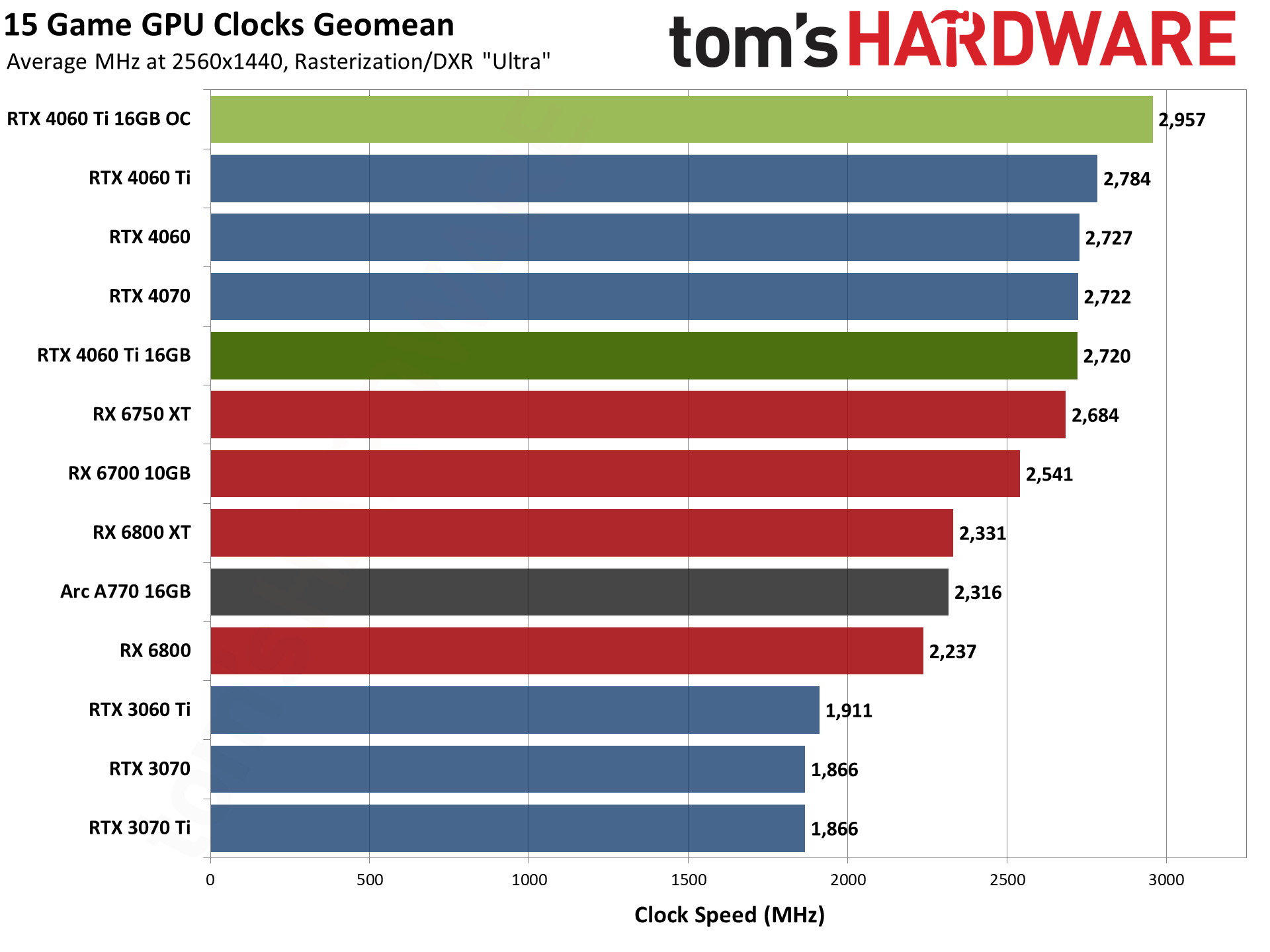

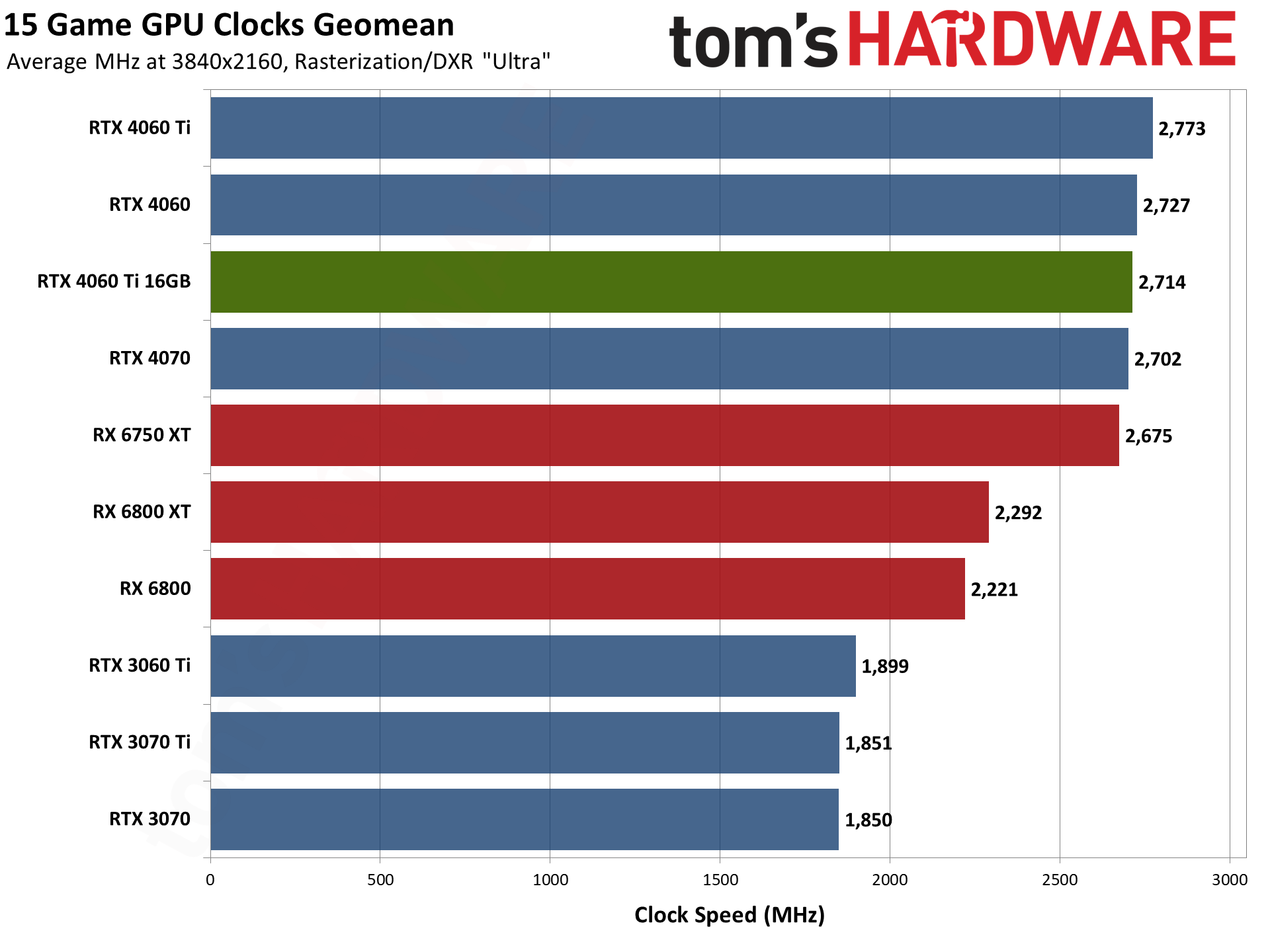

GPU clock speed comparisons aren't a major concern unless you're comparing the same architecture, ideally the same core GPU. Here, the Nvidia RTX 4060 Ti Founders Edition averaged about 65 MHz higher clocks than the Gigabyte RTX 4060 Ti 16GB Gaming OC across our testing suite. That's despite the Gigabyte card having a higher official boost clock: 2595 MHz, versus 2535 MHz for the Founders Edition.

There's variation among the individual games, but nothing too significant. The lowest average clock we saw across any of the tested settings and games was 2644 MHz (Cyberpunk 2077 at 4K), while the highest was 2745 MHz (Forza Horizon 5 at 1080p Extreme settings). This narrow range implies that Gigabyte has implemented a hard clock limit at stock settings that is lower than the Founders Edition's.

Overclocking, as you might expect, helps a lot. The Gigabyte card never broke the 3.0 GHz barrier, but it came close, averaging 2,957 MHz at 1080p and 1440p ultra settings.

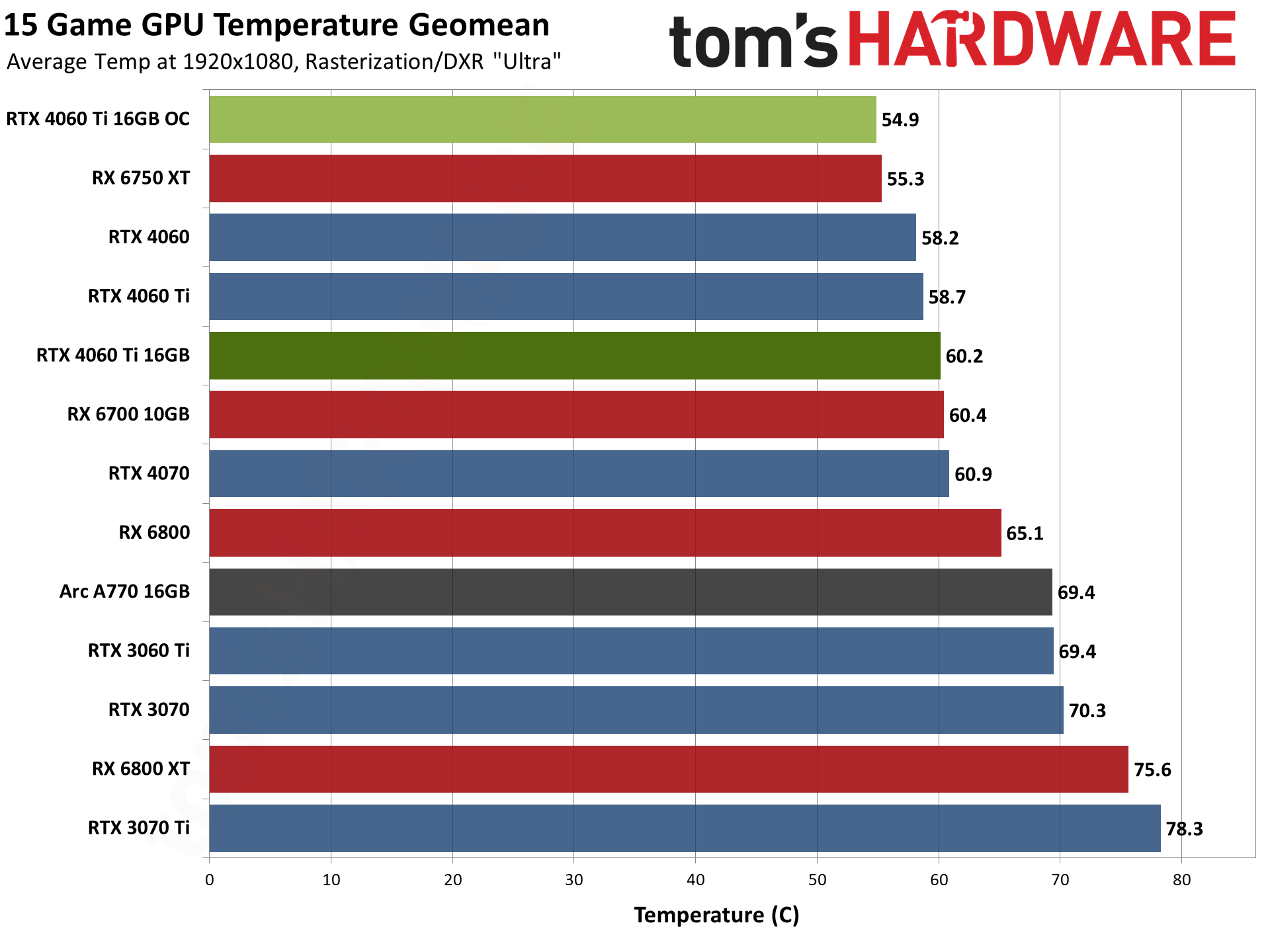

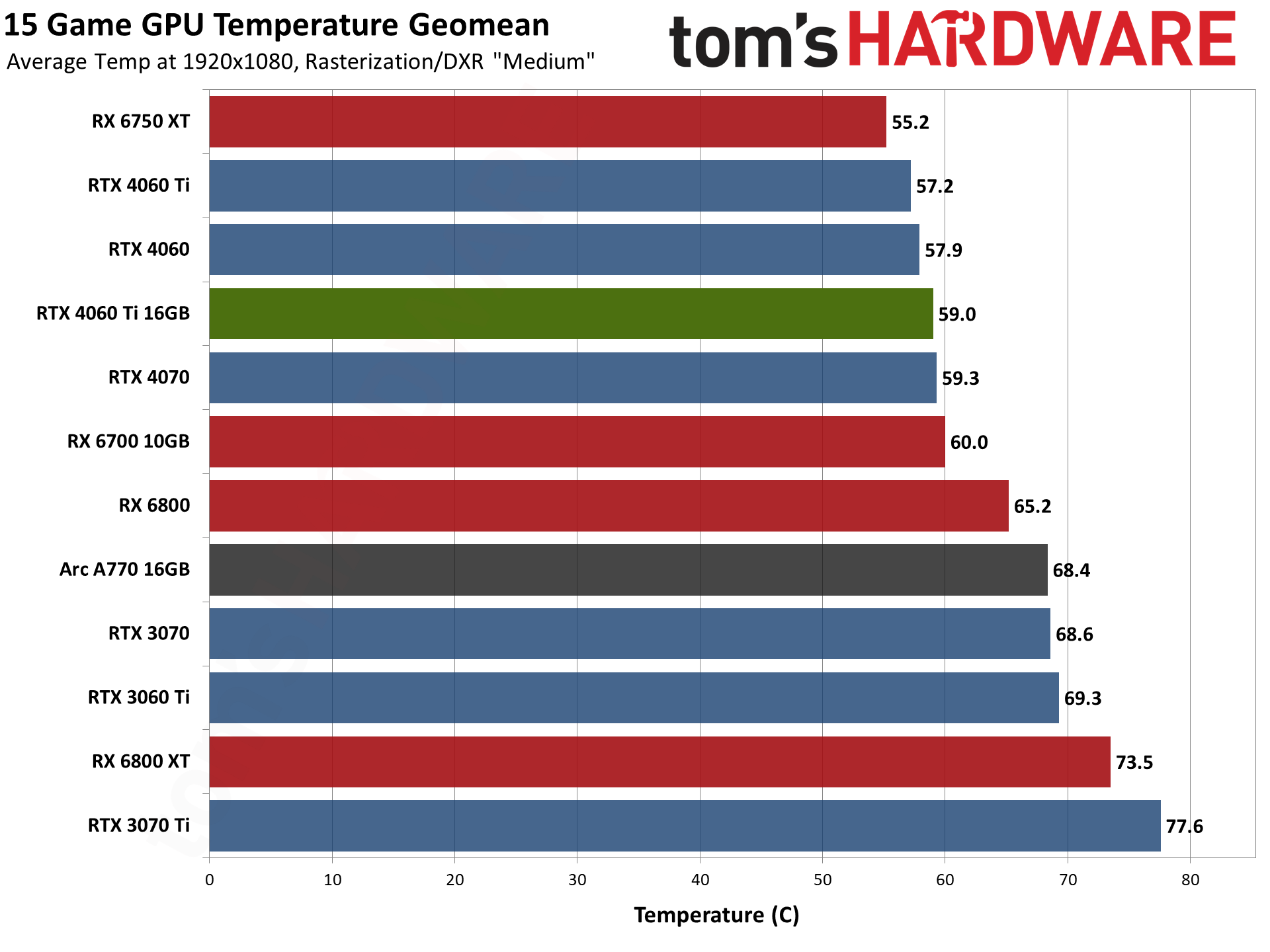

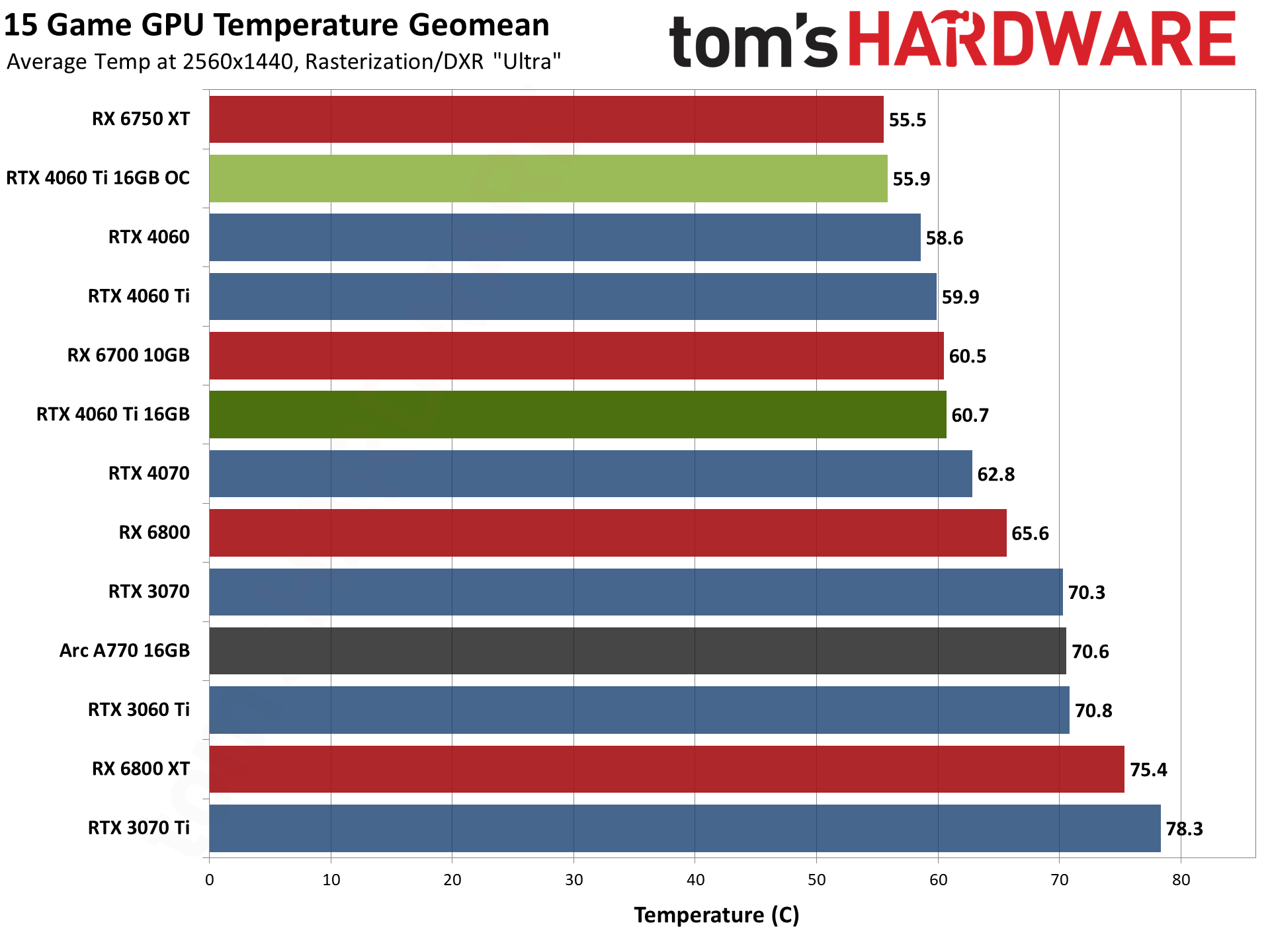

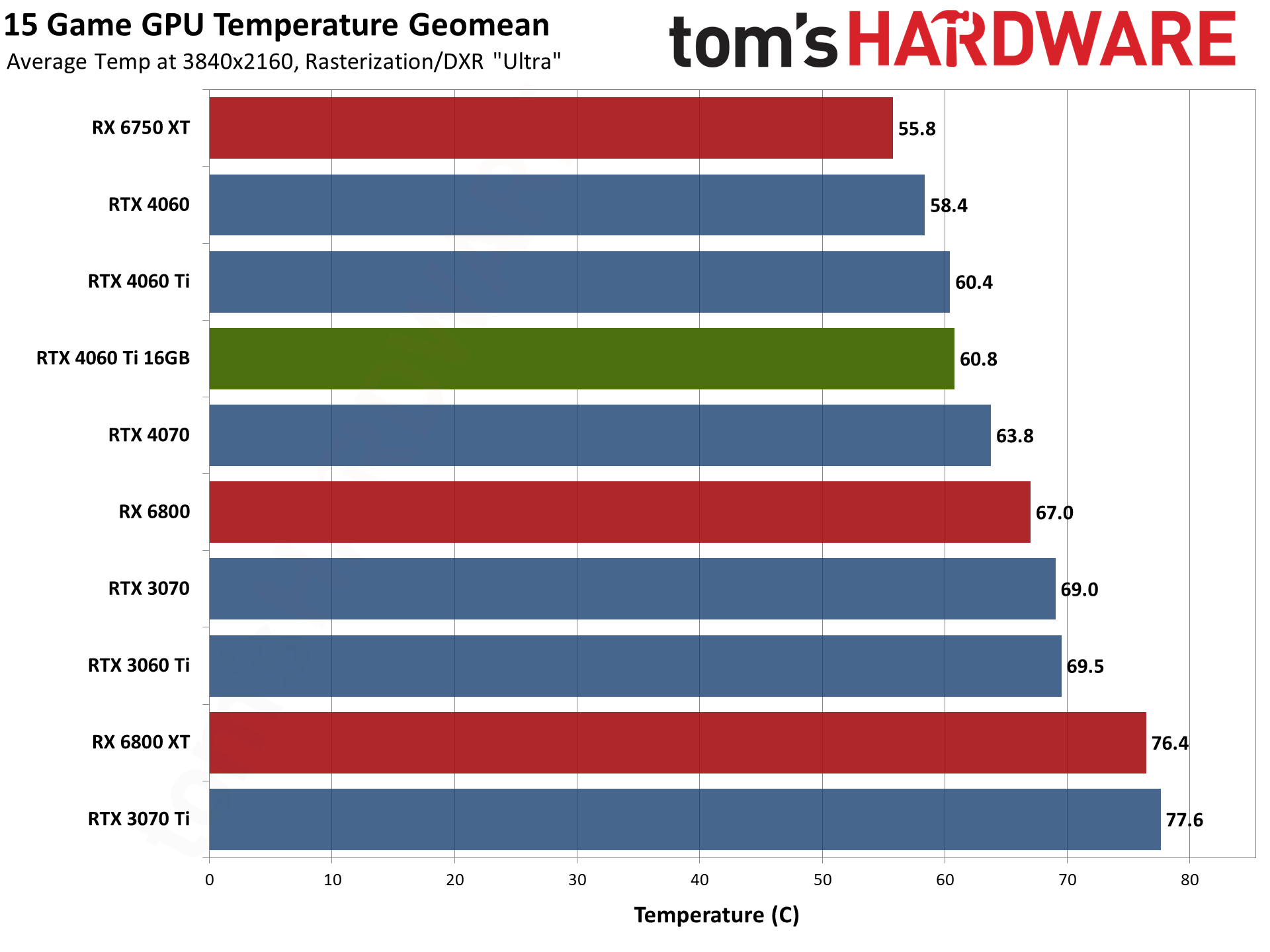

With triple fans and a 160W TGP, temperatures are also generally good. Of course, we still need to factor in noise levels, but at stock, we saw average temperatures of around 60C, with the highest result being 63.3C (Borderlands 3 at 1440p Badass settings). Our overclocking temperatures were lower, as we used a more aggressive fan speed curve.

While the Gigabyte card's temperatures are good, it's worth pointing out yet again that the Nvidia RTX 4060 Ti Founders Edition did slightly better. It's only a 1–2 degree Celsius delta, but let's check the noise levels before drawing any final conclusions about cooling performance.

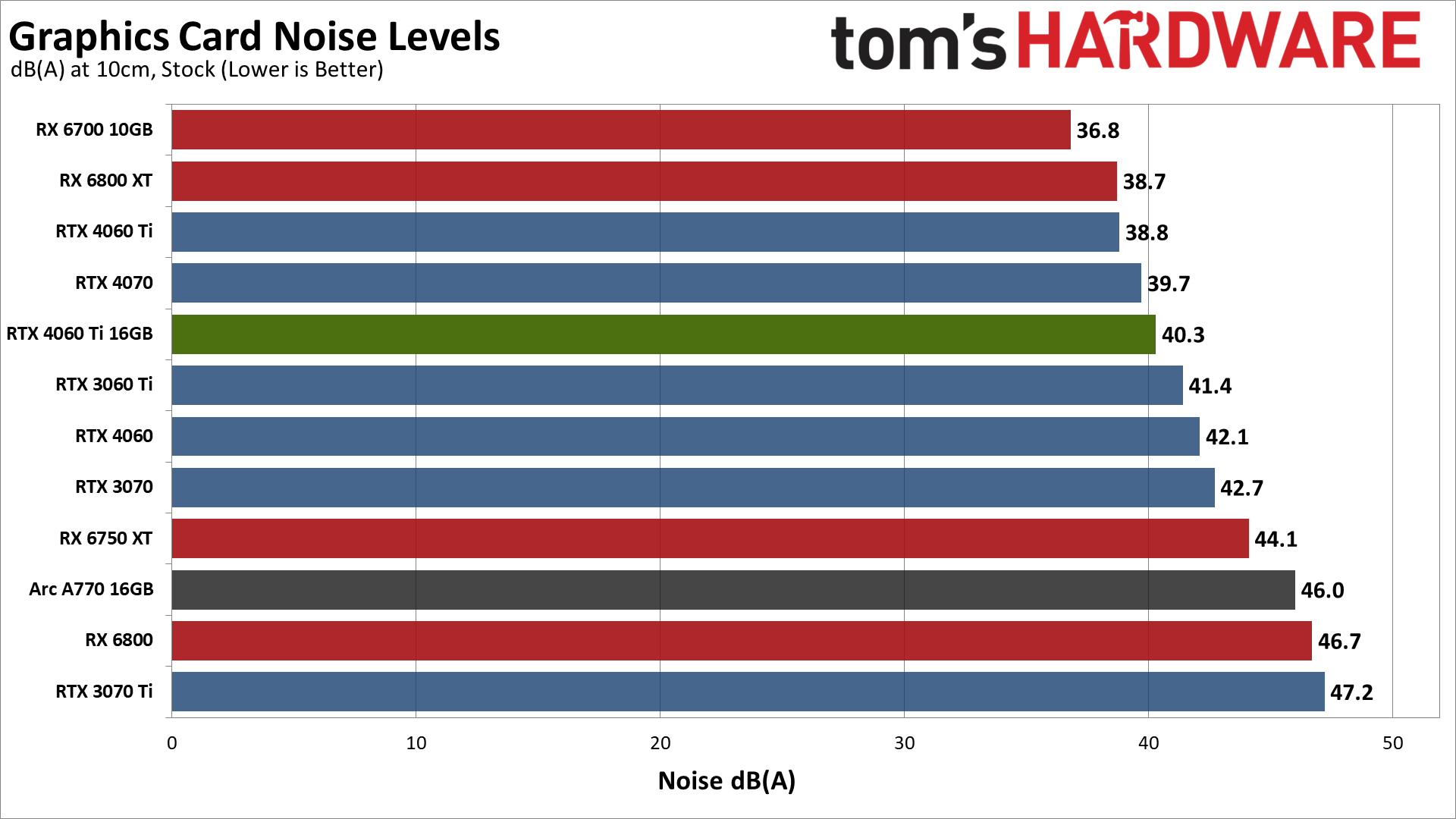

Gigabyte's RTX 4060 Ti 16GB Gaming OC does reasonably well in our noise testing. It's not the quietest card around, and the 4060 Ti Founders Edition still beats it by 1.5 dB(A), but considering that the Gigabyte card has three fans all on the same side, compared to two fans on opposite sides with the Founders Edition, the results are good.
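Because decibels are logarithmic, a 1.5 dB(A) gap is larger than the small number suggests: it corresponds to about 1.41 times the sound power, since 10^(1.5/10) ≈ 1.41. Perceived loudness doesn't scale the same way as sound power, so treat this only as a physical comparison. The two helpers below are hypothetical names for the standard conversion.

```python
import math


def db_to_power_ratio(delta_db: float) -> float:
    """Convert a level difference in dB into a ratio of sound power (intensity)."""
    return 10 ** (delta_db / 10)


def power_ratio_to_db(ratio: float) -> float:
    """Inverse conversion: a sound power ratio expressed as a level difference in dB."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 10 * math.log10(ratio)


# The gap between the Founders Edition and the Gigabyte card from the text.
print(f"1.5 dB(A) -> {db_to_power_ratio(1.5):.2f}x sound power")  # prints "1.41x"
```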

Good, but not exceptional. The AMD RX 6800 XT reference card has lower noise levels thanks to its thicker cooler. Nvidia's 40-series Founders Edition cards, along with the Sapphire RX 6700 10GB, also run quieter. Since the RX 6700 10GB also uses about 30W more power, Sapphire manages to do better with two fans and more heat than Gigabyte does with three fans. Still, the Gigabyte card is quiet enough for most people.

For reference, we run Metro Exodus Enhanced for our noise testing, as it's one of the more power-hungry games, and let it run for at least 15 minutes before checking noise levels. We place the SPL (sound pressure level) meter 10cm from the card, with the mic aimed at the center of the middle fan (or the back fan if there are only two). This helps minimize the impact of other noise sources, like the fans on the CPU cooler. The noise floor of our test environment and equipment is around 31–32 dB(A).

We also test with a static fan speed of 75%, and the Gigabyte RTX 4060 Ti 16GB generated 54.4 dB(A) of noise. That means there's plenty of headroom for additional cooling, should you want to engage in some manual overclocking.

Here's the full rundown of all of our test results, including performance per watt and performance per dollar columns. The prices are based on the best retail price we could find for a new card — which can change rapidly on now-discontinued parts like the RTX 30-series. (This doesn't include used cards on eBay, incidentally.)

You'll be absolutely shocked to discover that, based on our testing and among the twelve candidate cards used for this review, the RTX 4060 Ti 16GB delivers the worst value of all the GPUs. Makes you wonder why Nvidia and its partners didn't want to seed reviewers and influencers with the part, right? The best value of these cards is the RTX 4060, with the discounted RTX 3060 Ti right behind it. Costlier GPUs normally deliver worse value, but in this case, even the RTX 4070 beats the 4060 Ti 16GB.
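The performance-per-dollar column boils down to dividing each card's aggregate frame rate by its price and sorting the results. The sketch below assumes a single suite-wide FPS figure per card (such as the geometric mean) and the lowest new retail price; the `Card` class and its three entries are hypothetical placeholders, not the cards or results from this review.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    fps: float    # aggregate FPS across the test suite (assumed geometric mean)
    price: float  # best retail price found for a new card, in USD

    @property
    def fps_per_dollar(self) -> float:
        return self.fps / self.price


def rank_by_value(cards: list[Card]) -> list[Card]:
    """Sort cards from best to worst FPS per dollar."""
    return sorted(cards, key=lambda c: c.fps_per_dollar, reverse=True)


# Hypothetical placeholder cards; these are NOT the review's measured results.
cards = [Card("Card A", 60.0, 300.0), Card("Card B", 75.0, 500.0), Card("Card C", 92.0, 600.0)]
for card in rank_by_value(cards):
    print(f"{card.name}: {card.fps_per_dollar * 100:.1f} FPS per $100")
```

A single FPS/$ number ignores resolution, power, and features, which is why the ranking is only one input to a buying decision.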

But talking about value while only looking at the GPU's FPS/$ doesn't tell the whole story. You also have to factor in the resolution and settings you use, power requirements, the cost of the rest of your PC, the games you play, and any extra features. Still, even at 4K, paying an extra $100 to double the VRAM is a questionable choice.


Current page: GeForce RTX 4060 Ti 16GB: Power, Clocks, Temps, and Noise


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


JarredWaltonGPU I just want to clarify something here: The score is a result of pricing as well as performance and features, plus I took a look (again) at our About Page and the scores breakdown. This is most definitely a "Meh" product right now. Some of my previous reviews may have been half a point (star) higher than warranted. I've opted to "correct" my scale, and thus this lands at the 2.5-star mark.

I do feel our descriptions of some of the other scores are way too close together. My previous reviews were based more on my past experience and an internal ranking that's perhaps not what the TH text would suggest. Here's how I'd break it down:

5 = Practically perfect

4.5 = Excellent

4 = Good

3.5 = Okay, possibly a bad price

3 = Okay but with serious caveats (pricing, performance, and/or other factors)

2.5 = Meh, niche use cases

...

The bottom four categories are still basically fine as described. The TH text treats pretty much everything from 3 to 5 stars as "recommended," and that doesn't really jibe with me. 🤷♂️

This would have been great as a 3060 Ti replacement if it had 12GB and a 192-bit bus with a $399 price point. Then the 4060 Ti 8GB could have been a 3060 replacement with 8GB and a 128-bit bus at the $329 price point. And RTX 4060 would have been a 3050 replacement at $249.

Fundamentally, this is a clearly worse value and specs proposition than the RTX 4060 Ti 8GB and the RTX 4070. It's way too close to the former and not close enough to the latter to warrant the $499 price tag.

All of the RTX 40-series cards have generally been a case of "good in theory, priced too high." Everything from the 4080 down to the 4060 so far got a score of 3.5 stars from me. There's definitely wiggle room, and the text is more important than just that one final score. In retrospect, I still waffle on how the various parts actually rank.

Here's an alternate ranking, based on hindsight and the other parts that have come out:

4090: 4.5 star. It's an excellent halo part that gives you basically everything. Expensive, yes, but not really any worse than the previous gen 3090 / 3090 Ti and it's actually justifiable.

4080: 3-star. It's fine on performance, but the generational price increase was just too much. 3080 Ti should have been a $999 (at most) part, and this should be $999 or less.

4070 Ti: 3-star. Basically the same story as the 4080. Performance is fine, but it's priced way too high generationally.

4070: 3.5-star. Still a higher price than I'd like, but the overall performance story is much better.

4060 Ti 16GB: 2.5-star. Clearly a problem child, and there's a reason it wasn't sampled by Nvidia or its partners. (The review would have been done a week ago, but I had a scheduled vacation.) This score already reflects "Jarred's adjusted ranking."

4060 Ti 8GB: 3-star. Okay, still a higher price than we'd like and the 128-bit interface is an issue.

4060: 3.5-star. This isn't an amazing GPU, but it's cheaper than the 3060 launch price and so mostly makes up for the 128-bit interface, 8GB VRAM, and 24MB L2. Generally better as an overall pick than many of the other 40-series GPUs.

AMD's RX 7000-series parts are a similar story. I think at current prices, the 7900 XTX works as a $949 part and warrants the 4-star score. The 7900 XT has dropped to $759 and also warrants the 4-star score, maybe. The 7600 at $259 is still a 3.5-star part. So, like I said, there's wiggle room. I don't think any of the charts or text are fundamentally out of line, and a half-star adjustment is easily justifiable on almost any review I've done.

Lord_Moonub Jarred, thanks for this review. I do wonder if there's more of a silver lining on this card that we might be missing, though. Could it act as a good budget 4K card? What happens if users dial back settings slightly at 4K (e.g., no ray tracing, no bleeding-edge ultra features) and then make the most of DLSS 3 and the extra VRAM? I wonder if users might get something visually close to a top-line experience at a much lower price.

JarredWaltonGPU If you do those things, the 4060 Ti 8GB will be just as fast. Basically, dialing back settings to make this run better means dialing back settings so that more than 8GB isn't needed.

Elusive Ruse Damn, @JarredWaltonGPU went hard! Appreciate the review and the clarification of your scoring system.

InvalidError More memory doesn't do you much good without the bandwidth to put it to use. The 4060 (Ti) needed a 192-bit bus to strike the practically perfect balance between capacity and bandwidth. It would have brought the 4060 (Ti) launches from steaming garbage to at least being a consistent upgrade over the 3060 (Ti).

Greg7579 Jarred, I'm building with the 4090 but love reading your GPU reviews, even the ones far below what I would build with, because I learn something every time.

I am not a gamer but a GFX Medium Format photographer, and I have multiple TB of high-res 200MB raw files that I work with extensively in Lightroom and Photoshop. I build every 4 years and update as I go. I build at the absolute top end of the PC arena, which is way overkill, but I do it anyway.

As you know, Lightroom has many amazing new AI masking and noise reduction features that are like magic, but so many users (photographers) are now grinding to a halt on their old rigs and laptops. Photographers tend to be behind gamers on PC and laptop power. It is common knowledge on the photo and Adobe forums that these new AI capabilities eat VRAM like Skittles and lean heavily on the GPU. (Adobe LR and PS were always behind on using the GPU alongside the CPU for editing and export tasks, but now they're going at it with gusto.) When I run AI DeNoise on a big 200MB GFX file, my old rig with the 3080 (I'm building again soon with the 4090) takes about 12 seconds to grind out the task. Other rigs photographers use take several minutes or just crash. The Adobe and Lightroom forums are full of howling and gnashing of teeth about this. I tell them to start upgrading. I can't wait to see what the 4090 will do with these photography-related workflow tasks in LR, but here is my question:

Can you comment on this and tell me whether this new Lightroom AI masking and DeNoise (which is a miracle for photographers) is so VRAM-intensive that doubling the VRAM on a card like this would really help a lot? Isn't it true that Nvidia made some decisions 3 years ago that resulted in not having enough (now far cheaper) VRAM in the 40 series? It should be double or triple what it is, right? Anything you can teach me about increased GPU power and VRAM in Adobe LR for us photographers?

hotaru251 The 4060 Ti should have been closer to a 4070.

The gap between them is huge and the cost is way too high (doubly so since it needs DLSS 3 support to avoid being crippled by the limited bus).

atomicWAR

JarredWaltonGPU said: Some of my previous reviews may have been half a point (star) higher than warranted. I've opted to "correct" my scale and thus this lands at the 2.5-star mark.

Thank you for listening, Jarred. I was one of those claiming on multiple recent GPU reviews that your scores were about a half star off, and I wasn't alone in that sentiment. I was quick to defend you from trolls, though, as you clearly weren't shilling for Nvidia. This post proves my faith in you was well placed. Thank you for being a straight arrow!