
So far, the additional VRAM hasn't proven to be particularly helpful on the RTX 4060 Ti. But all the games in our standard benchmark suite are at least a year old, sometimes three or four years old. What about more modern games?

To check this, we picked three relatively recent releases: F1 2023, Hogwarts Legacy, and The Last of Us: Part I. None of these are brand-spanking new, which was intentional. Over the past year or so, we've seen many cases where a new release performed terribly at launch and later patches fixed things up quite a bit. But Hogwarts Legacy and The Last of Us, at least, still have a reputation for wanting more than 8GB of VRAM at maxed-out settings.

These three games have slightly different takes on graphics settings and APIs as well. For example, F1 2023 supports several ray tracing effects but doesn't enable them on the medium preset — only on ultra. Hogwarts Legacy has several ray tracing effects as well, but the presets never enable them. We opted to turn them all on, with lighting set to "medium" or "ultra" as appropriate. Finally, The Last of Us has no ray tracing effects and is an AMD-sponsored game. We also confined our testing to just six GPUs (RTX 4070, 4060 Ti 16GB, 4060 Ti 8GB from Nvidia, and RX 6800 XT, 6800, and 6750 XT for AMD).

We'll do a gallery of the benchmarks from each game, so swipe through the images to see the results at the various settings/resolutions.

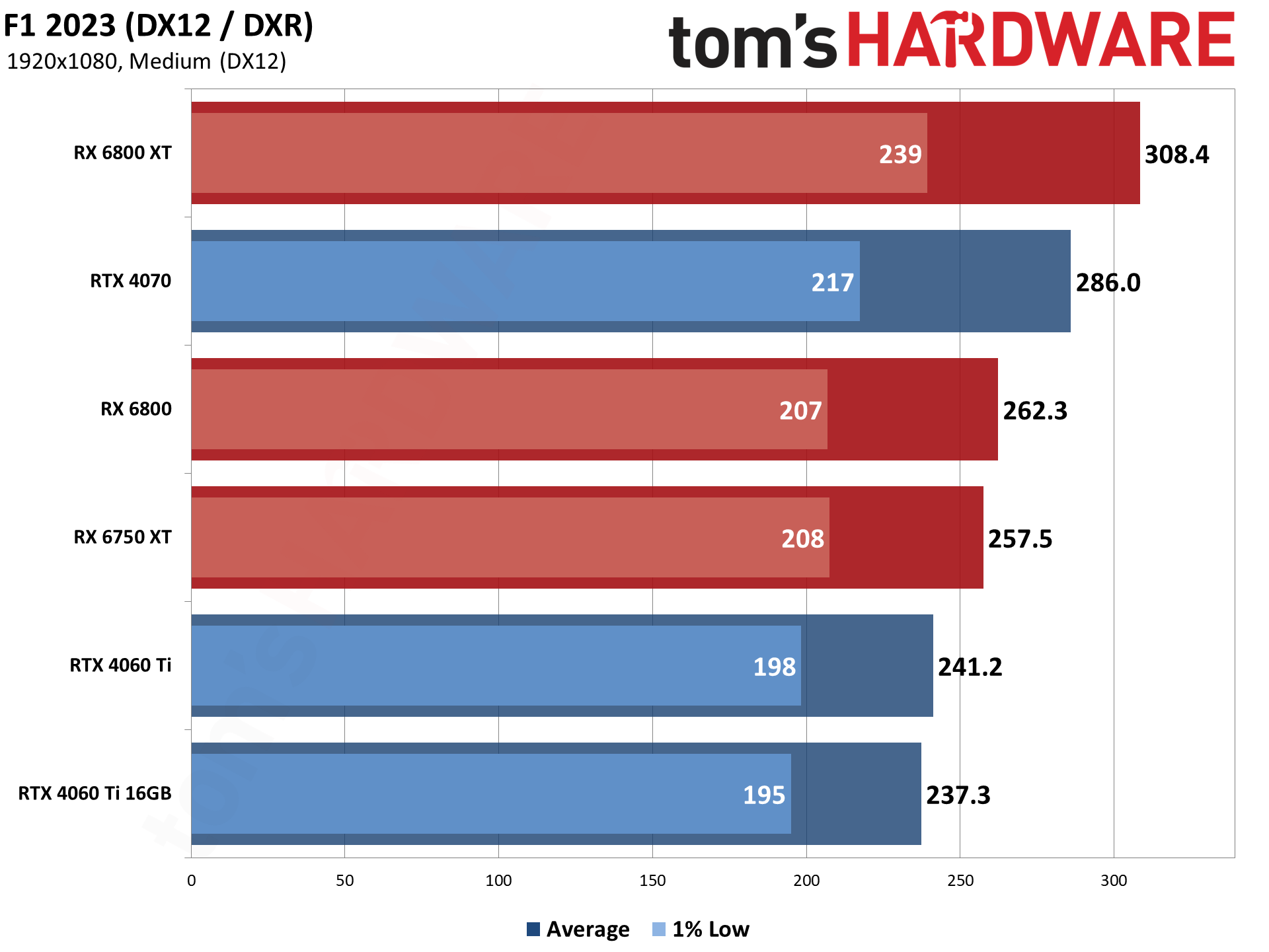

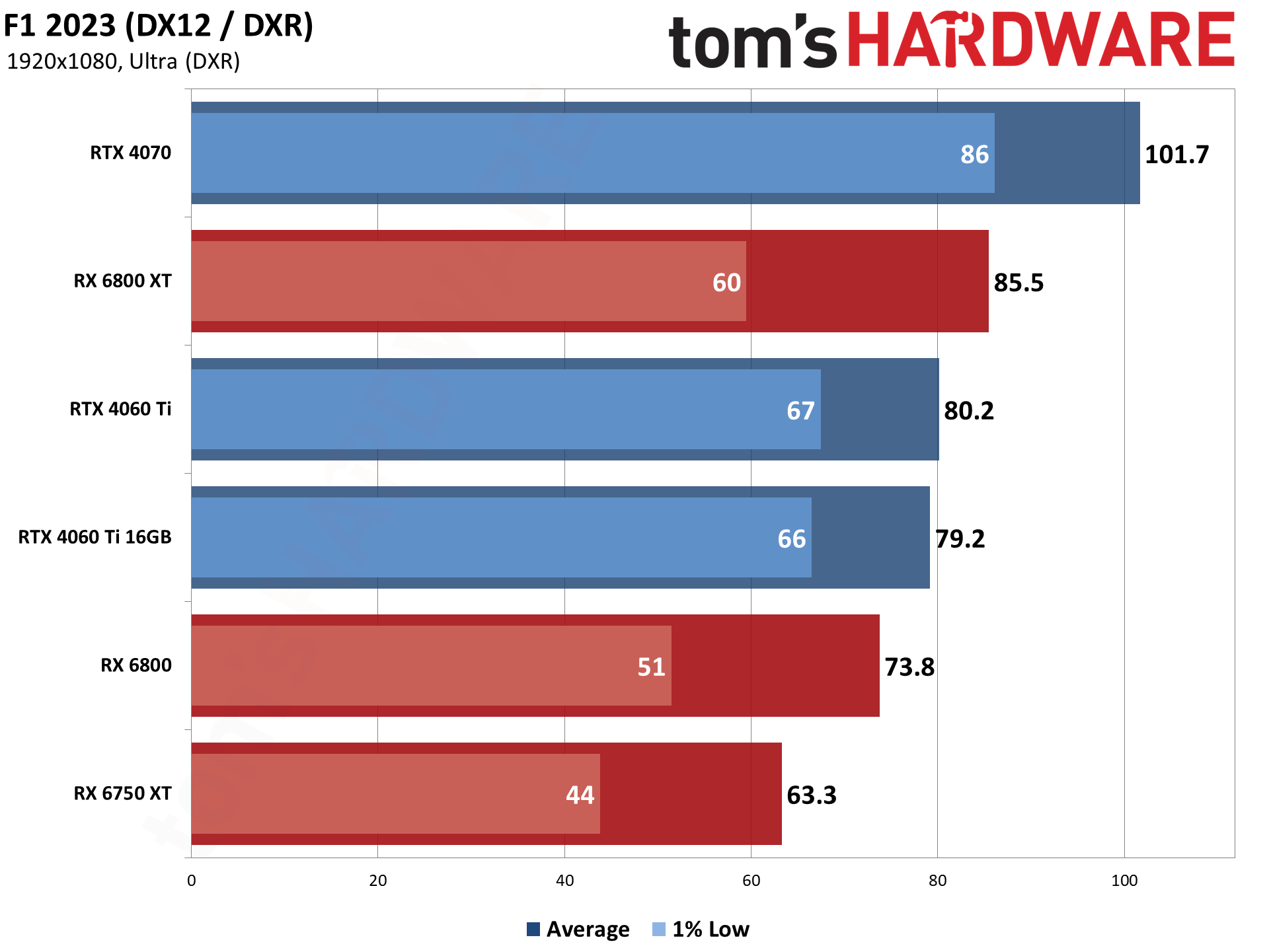

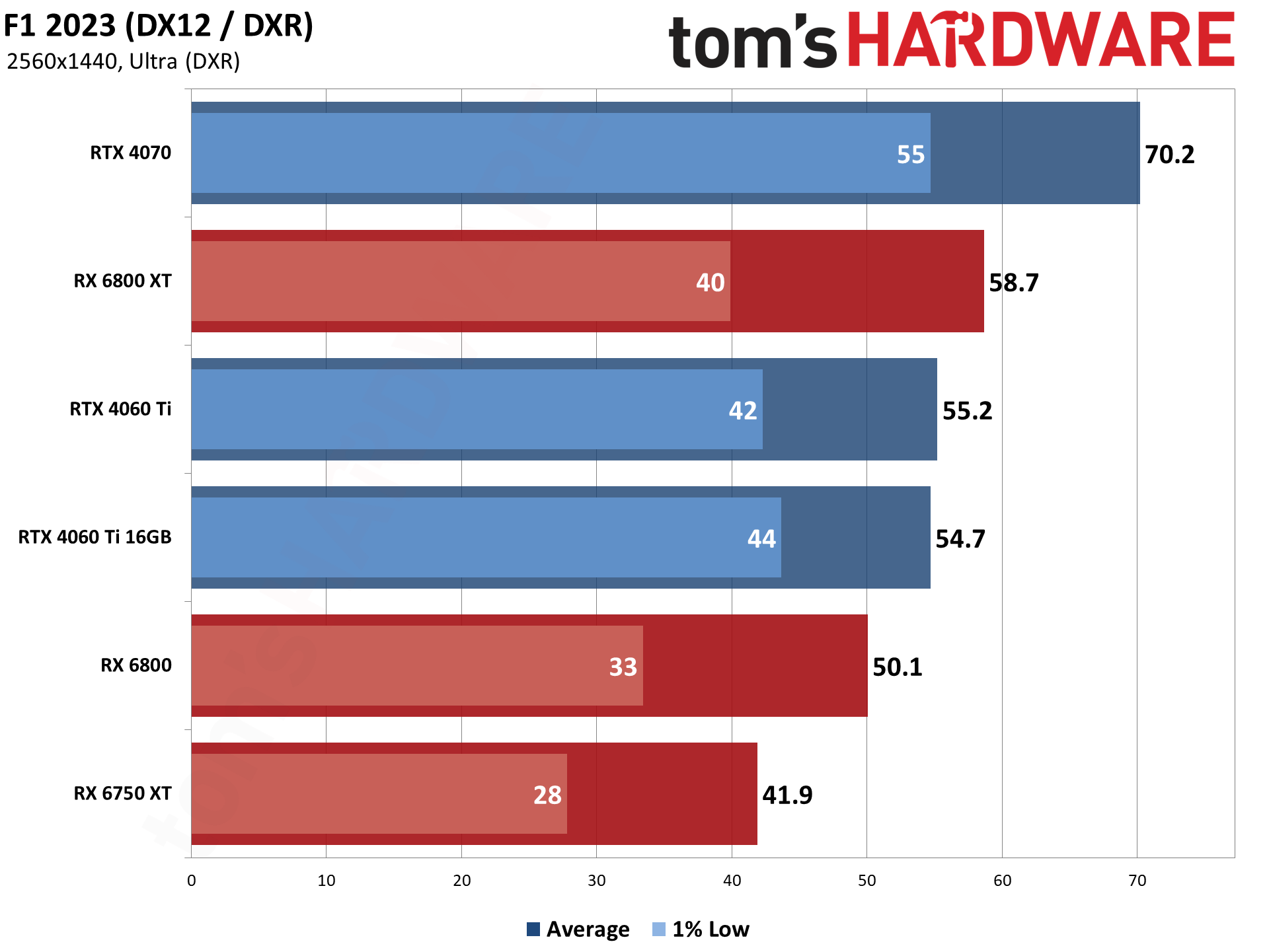

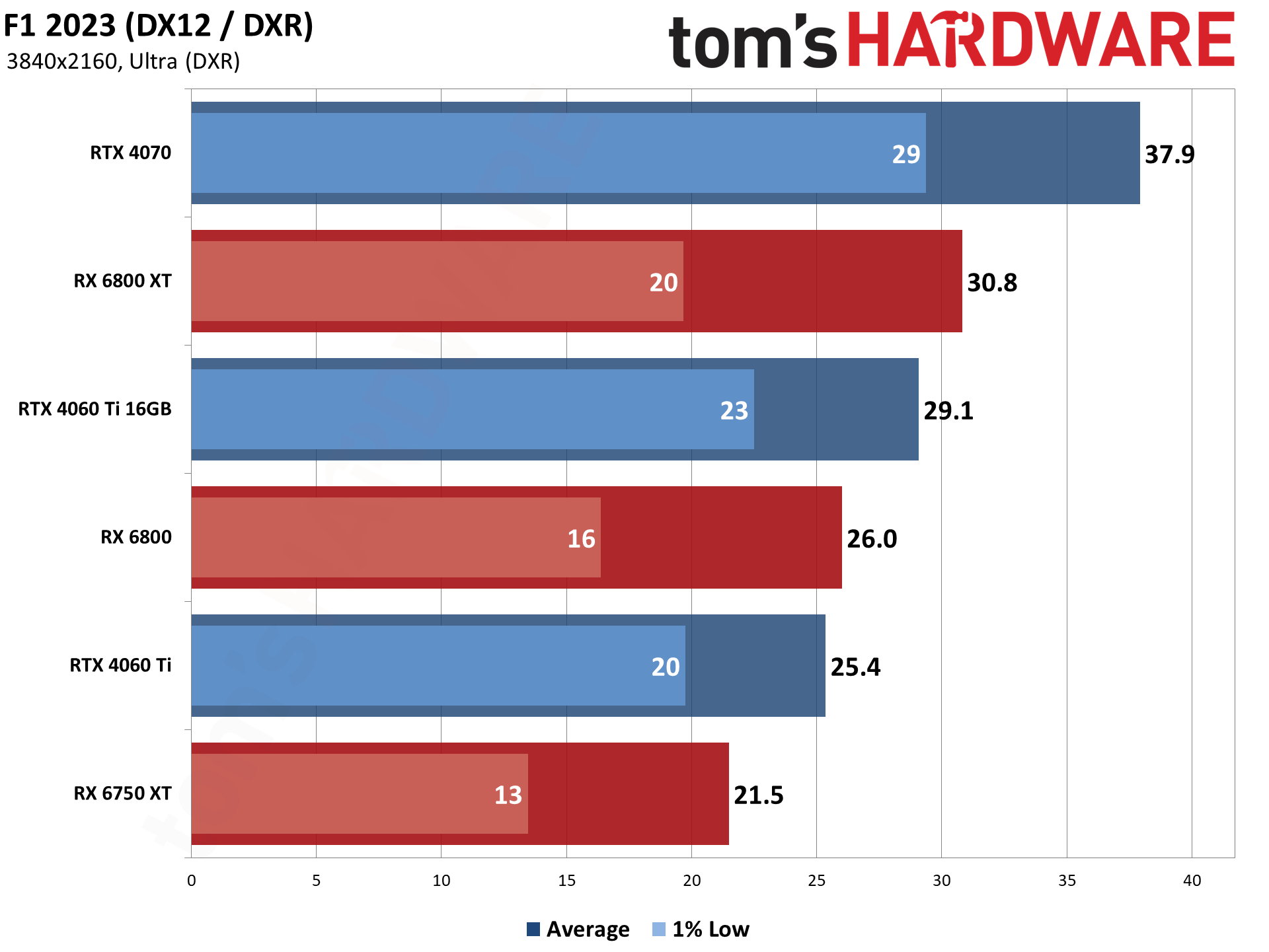

F1 2023 mostly echoes what we saw in our primary gaming tests. For reference, we tested on the Australia track, in the rain, for one loop of the track (130 seconds).

At 1080p, the extra VRAM does basically nothing — the 4060 Ti 8GB slightly outperforms the 16GB model, possibly due to differences in card design, power, or other factors. At 1440p, the 16GB card at least gains a slight lead in 1% lows, and finally, at 4K, it ends up delivering 15% higher performance.
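For readers unfamiliar with the two metrics quoted throughout these charts, here is a minimal sketch of how average fps and 1% lows can be derived from per-frame render times. The review doesn't spell out its exact formula, so the definition used here (average the slowest 1% of frames, then convert to fps) is an assumption, the function name `summarize_run` is hypothetical, and the frame times are synthetic rather than measured.

```python
from statistics import mean


def summarize_run(frame_times_ms):
    """Return (average_fps, one_percent_low_fps) for one benchmark run.

    frame_times_ms: per-frame render times in milliseconds.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average fps is total frames divided by total time,
    # not the mean of the per-frame fps values.
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds

    # Assumed definition of the 1% low: average the slowest 1% of
    # frames (at least one frame), then convert that time to fps.
    worst_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(worst_first) // 100)
    one_percent_low_fps = 1000.0 / mean(worst_first[:count])

    return average_fps, one_percent_low_fps


# Synthetic data, for illustration only: 99 smooth frames and one hitch.
avg, low = summarize_run([10.0] * 99 + [40.0])
print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
```

A single 40 ms hitch barely moves the average but drags the 1% low down sharply, which is why the two cards can look tied on average fps while one of them feels noticeably smoother.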

As before, the RTX 4070 represents a relatively large step up in performance, with about 30% higher performance than the 4060 Ti at ultra settings. 1080p medium is closer, but both GPUs are breaking 200 fps there.

Against AMD, the RTX 4060 Ti 16GB universally falls behind the 6800 XT: It's 23% slower at 1080p medium, and then about 6–7 percent slower at ultra settings with ray tracing. (Side note: The ray tracing in F1 2023 doesn't seem to be particularly impressive, at least based on what I've seen so far, but maybe other tracks would put it in a better... um... light.) The RX 6800 was still about 10% faster at 1080p medium, but the 4060 Ti 16GB takes a 7–12 percent lead in the ultra tests. The RTX 4060 Ti 16GB also has substantially better 1% lows when using the ultra preset.
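Since the comparisons above switch between "X% slower" and "Y% faster," it's worth remembering that the two aren't symmetric. The sketch below converts between them. The function names are hypothetical, and the only inputs are the percentages quoted in the text, not the underlying fps figures.

```python
def slower_to_faster(pct_slower: float) -> float:
    """If card A is pct_slower% slower than card B, return how much faster B is than A."""
    if not 0 <= pct_slower < 100:
        raise ValueError("pct_slower must be in [0, 100)")
    ratio = 1.0 - pct_slower / 100.0  # A's fps as a fraction of B's
    return (1.0 / ratio - 1.0) * 100.0


def faster_to_slower(pct_faster: float) -> float:
    """If card B is pct_faster% faster than card A, return how much slower A is than B."""
    if pct_faster < 0:
        raise ValueError("pct_faster must be non-negative")
    ratio = 1.0 + pct_faster / 100.0  # B's fps as a multiple of A's
    return (1.0 - 1.0 / ratio) * 100.0


# 4060 Ti 16GB is 23% slower than the RX 6800 XT at 1080p medium.
print(f"{slower_to_faster(23):.1f}")  # 29.9
# RX 6800 is about 10% faster than the 4060 Ti 16GB at 1080p medium.
print(f"{faster_to_slower(10):.1f}")  # 9.1
```

In other words, "23% slower" for the Nvidia card is the same gap as "about 30% faster" for the 6800 XT, so the direction of the comparison matters when reading the charts.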

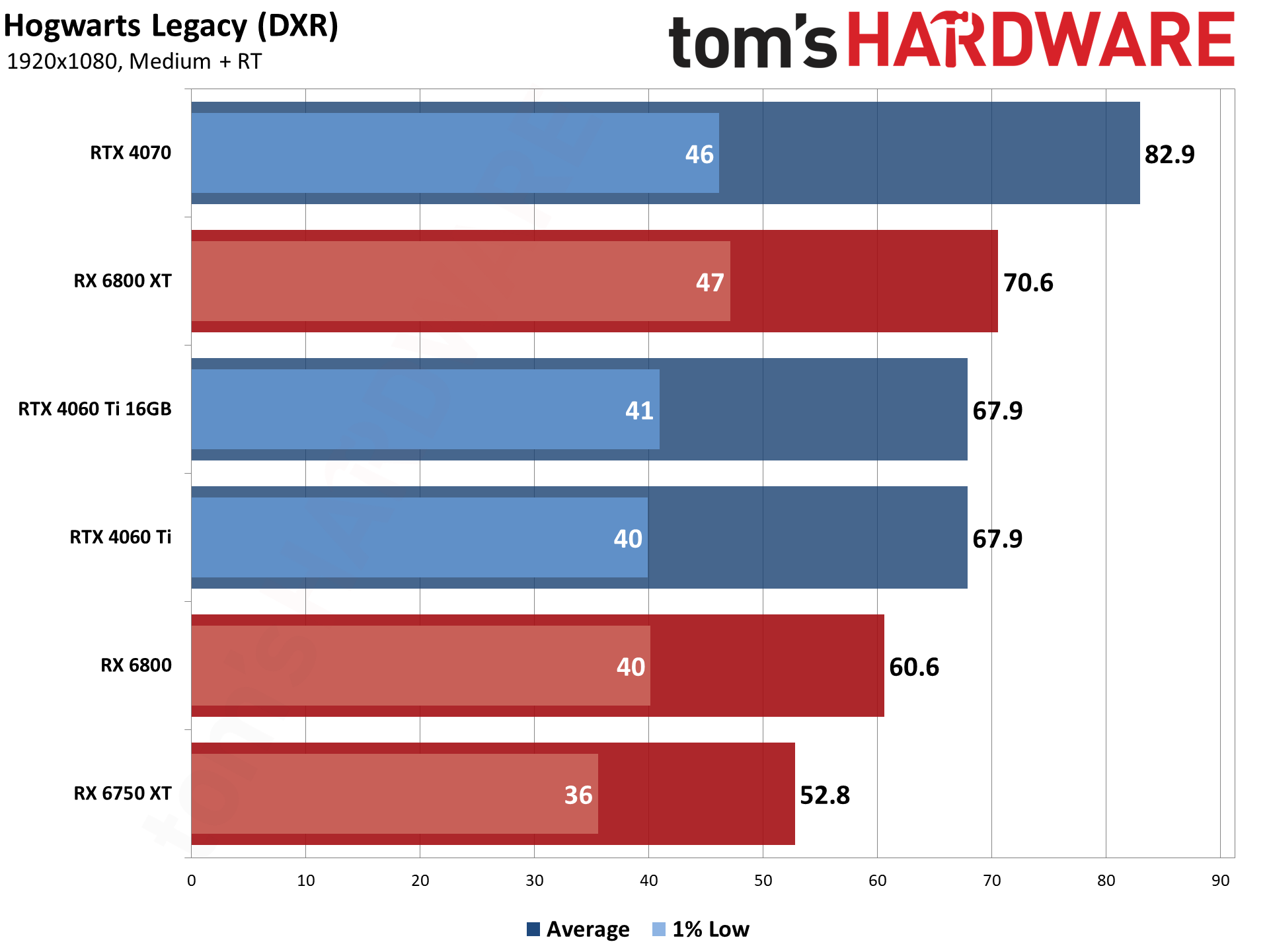

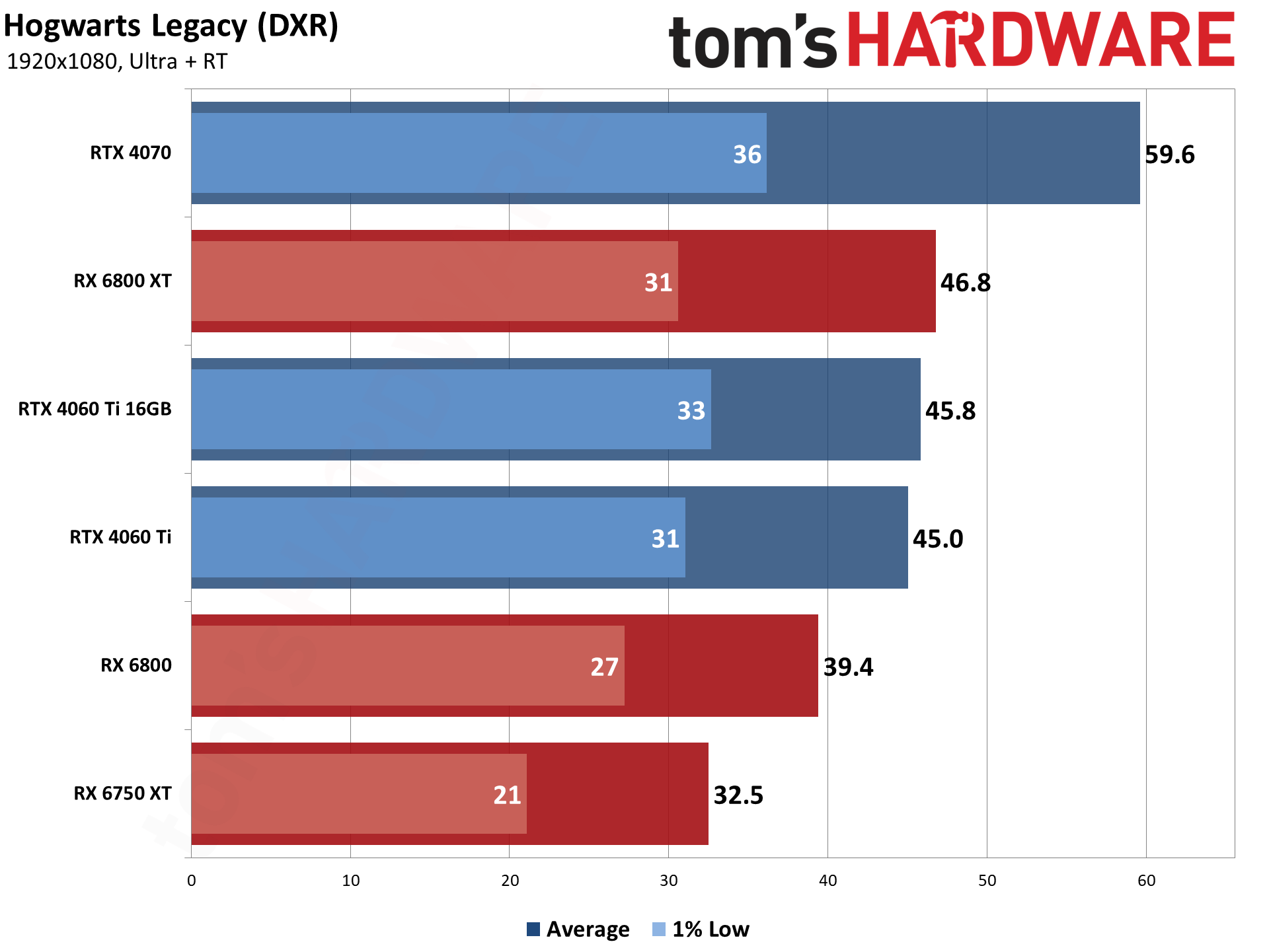

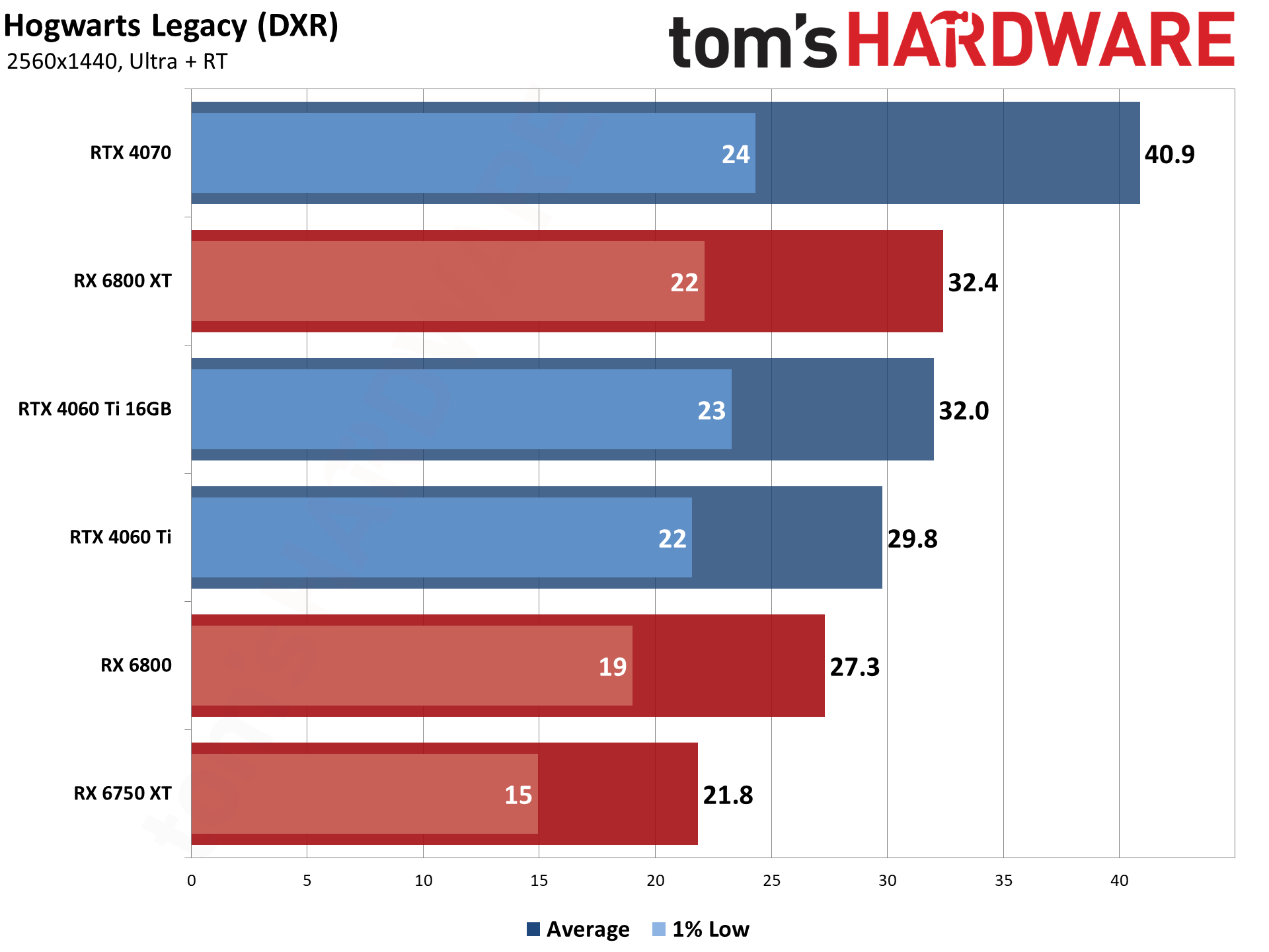

Hogwarts Legacy can be a bit finicky about the settings you use. It explicitly warned us when it felt we exceeded settings that were viable for our hardware, which basically meant the game was concerned with our choices in all of the ultra tests with maxed-out ray tracing effects. And for good reason.

At 1080p medium — with RT reflections, RT shadows, and RT ambient occlusion, plus the overall RT quality set to medium — there was again no real difference between the 16GB and 8GB RTX 4060 Ti. Bumping up to ultra settings, with RT quality also set to ultra, was a different matter.

You might look at the charts and think, "Big deal, a 2% or 8% difference." Except, on the RTX 4060 Ti 8GB card, we experienced repeated game crashes. 1080p ultra often allowed us to complete one or two benchmark runs (running a circuit through Hogsmeade village) before dumping us to the desktop. At 1440p ultra, the crashes were so frequent as to make the game utterly unplayable.

For the AMD GPUs, the RX 6800 XT basically tied the 4060 Ti 16GB — slightly higher average fps, lower 1% lows at ultra settings. Meanwhile, the vanilla 6800 ended up trailing the RTX 4060 Ti by 11–15 percent, depending on the resolution and settings.

Curiously, even the RTX 4070 crashed at 1440p ultra. The RX 6750 XT, also with 12GB VRAM, didn't have that problem. Regardless, VRAM clearly matters for this game, particularly if you're trying to run maxed-out settings. It may not directly boost performance all that much, but cards with less than 12GB VRAM were unstable.

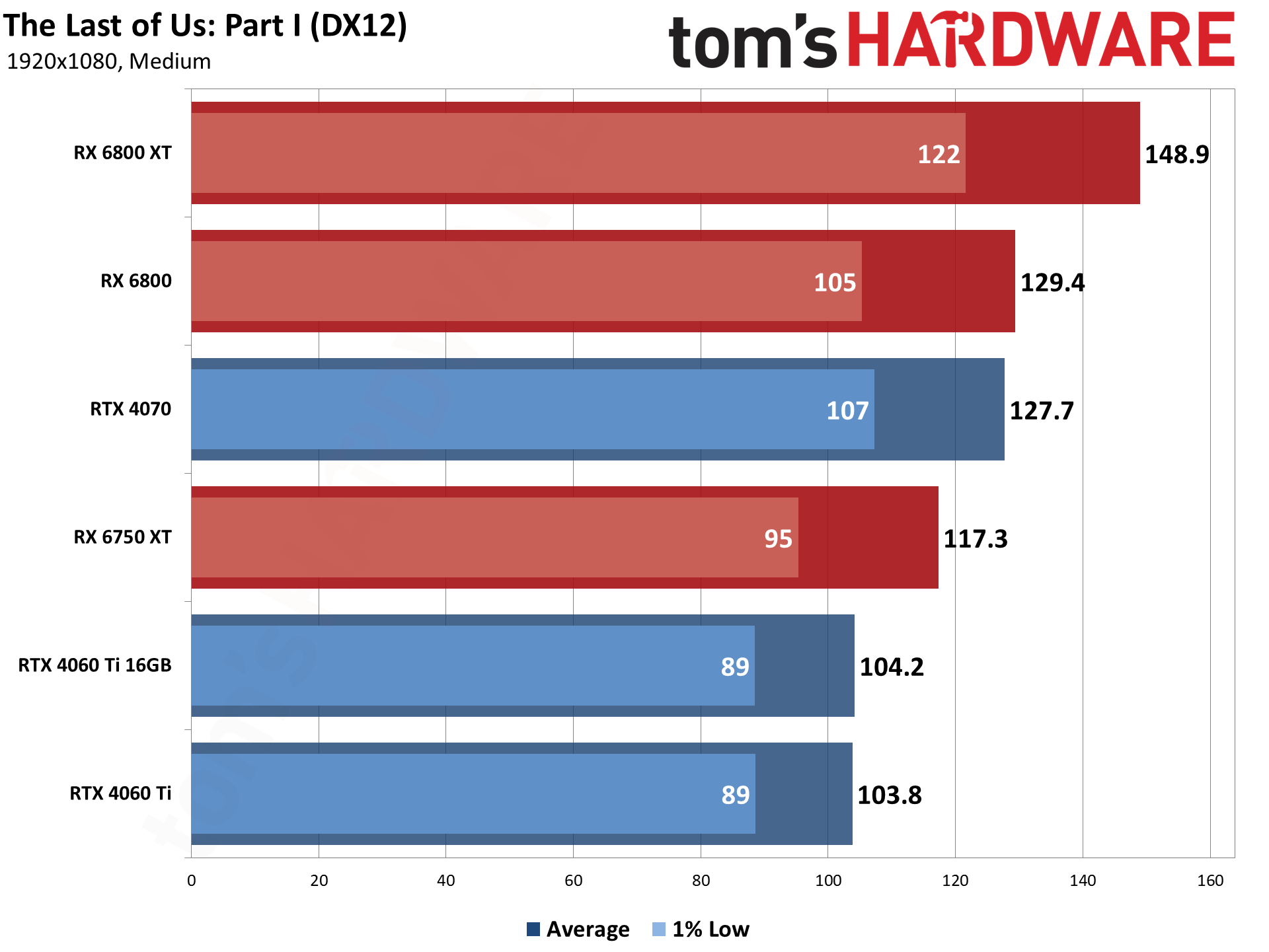

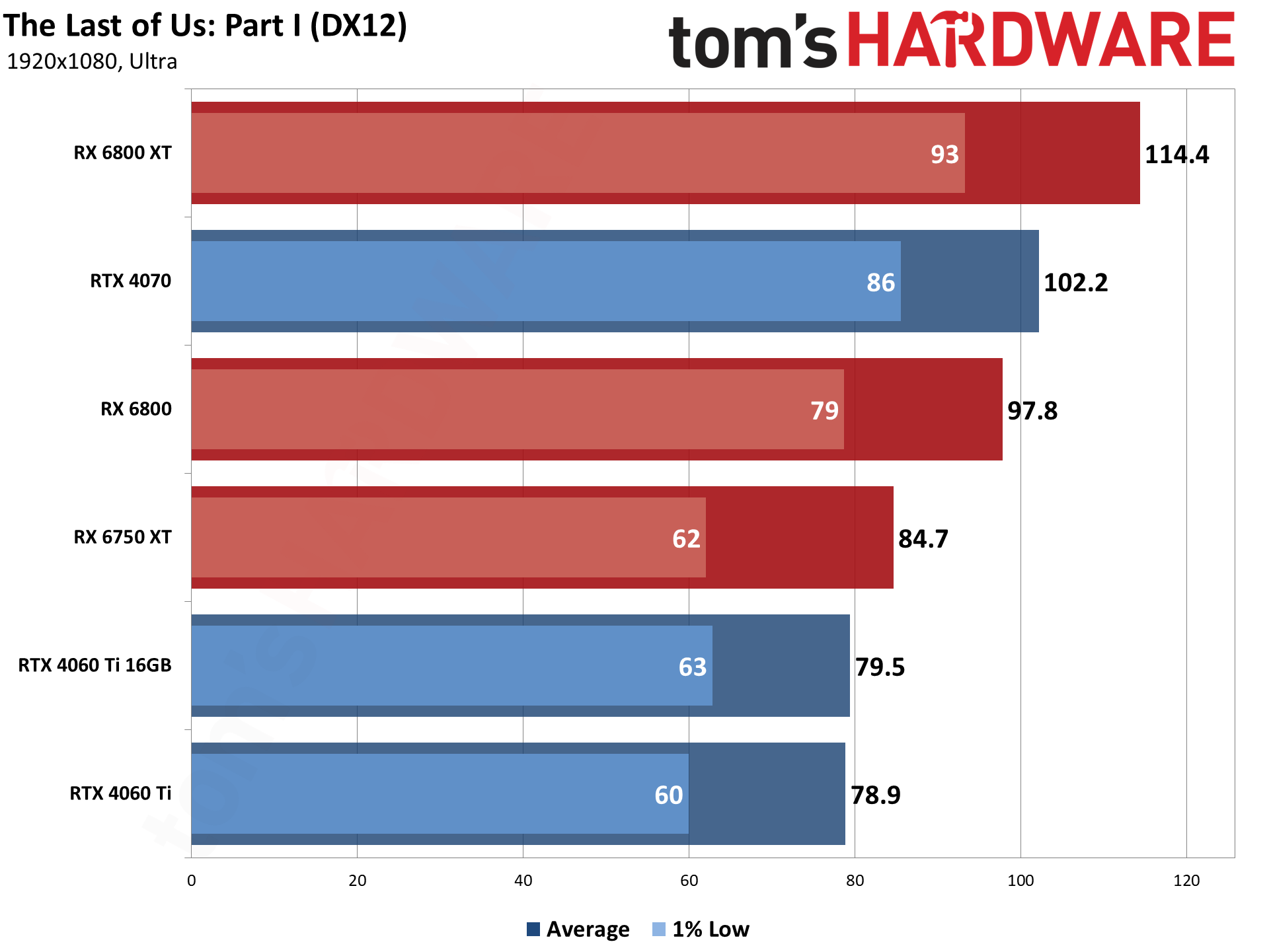

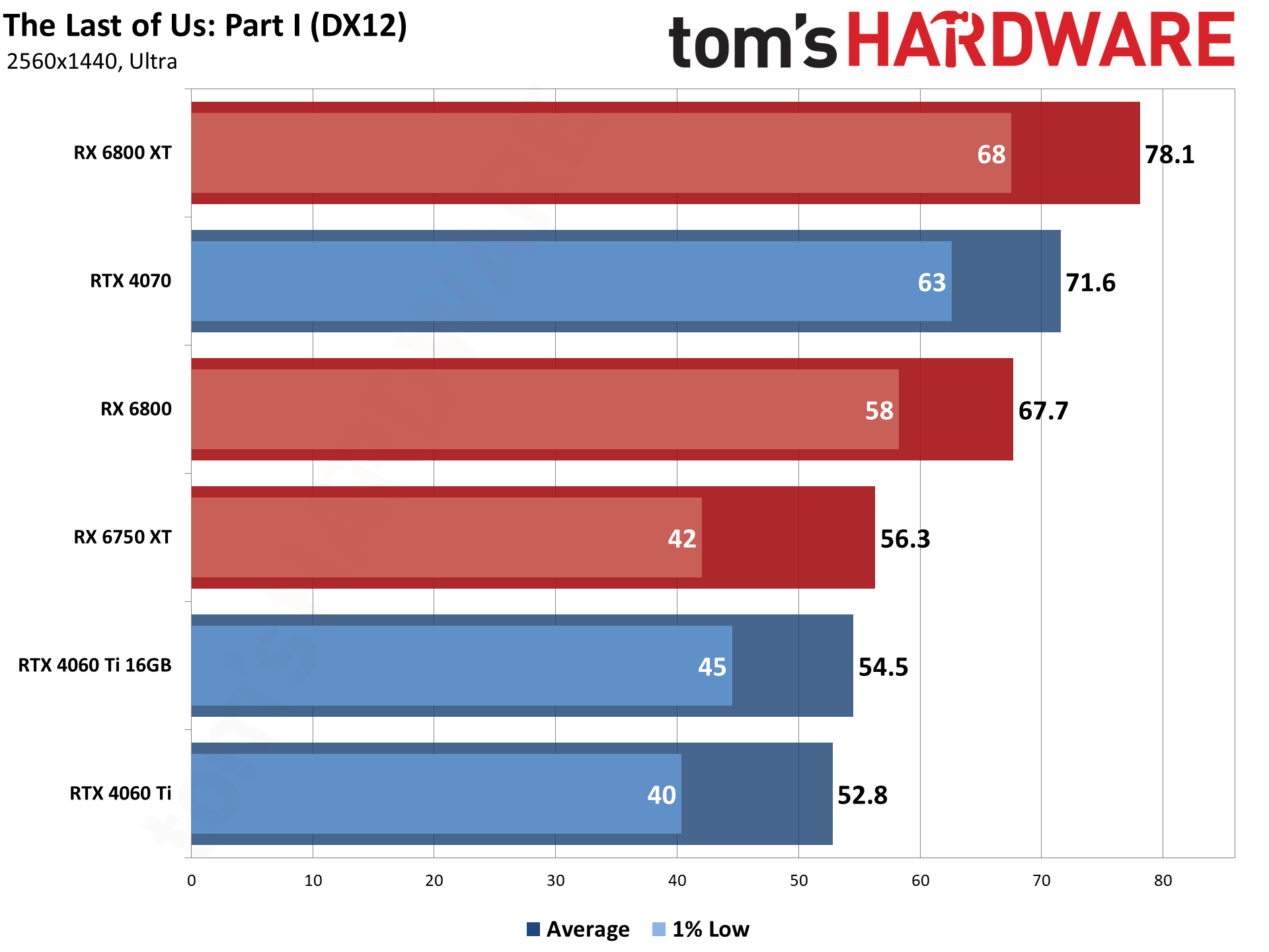

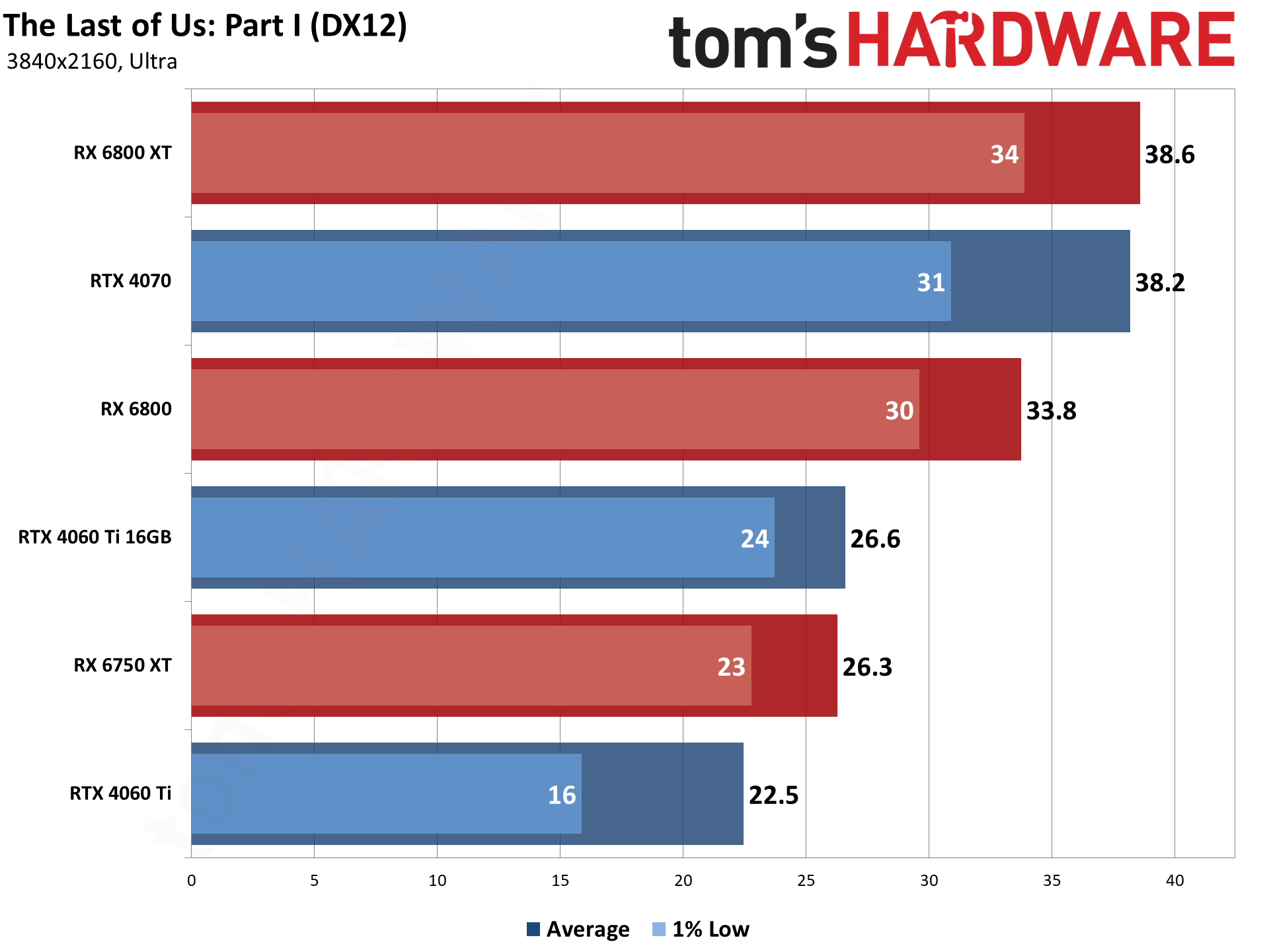

The Last of Us: Part I wraps up our bonus coverage, with a game that's widely "known" to need a lot of VRAM. Except, more recent patches seem to have curtailed the demands somewhat. The initial launch (on a "new" GPU) still takes a long time to compile all the shaders, probably around 10 minutes on the Nvidia GPUs and 15–20 minutes on the AMD GPUs. I would literally launch the game and walk away while I waited for the shader compilation to finish.

For testing, I ran a circuit around an area with a pond (on the way to the city with Ellie and Tess), as that seemed to be fairly demanding and was also devoid of creatures that could cause variation between benchmark runs.

At 1080p and 1440p, the benefits of 16GB versus 8GB on the 4060 Ti are again negligible. 4K shows an 18% gain in average fps, and 1% lows are up to 50% better... but neither card delivers a great experience at 4K ultra, with performance in the mid-to-low 20s. The RTX 4070 manages to clear 30 fps, which means it's playable even if it's not 60+ fps.

AMD's GPUs, meanwhile, completely demolish the Nvidia options. The RX 6800 XT is about 45% faster than the 4060 Ti 16GB, with 1% lows showing similar gains (give or take — it's a 37–52 percent spread, though 1% lows tend to be far more variable to begin with). The RX 6800 likewise beats the 4060 Ti 16GB by around 25%. Even the RX 6750 XT generally matches or exceeds the performance of the Nvidia card.

Obviously, there's more going on here than just VRAM. As an AMD-promoted game, it's not too shocking to see better performance on AMD GPUs than on Nvidia. The question is why there's such a gap, and we're not certain what the game is doing internally that favors AMD this much. But we've seen similar results elsewhere (e.g., Borderlands 3).

Recent Games Summary

Bottom line: Even in more recent games, it's hard to make a compelling case for the RTX 4060 Ti 16GB. It's never substantially worse than the 8GB model, but it's also not usually all that superior at settings and resolutions that make sense. The biggest differences inevitably come at 4K, but often with both GPUs running at unacceptably low framerates.

Could there be other games, or future games, where the extra VRAM proves more useful? Perhaps, but as usual, tuning your settings generally proves more important than VRAM capacity. Simply maxing out every setting in order to go beyond 8GB of VRAM use is possible, but at 1440p and especially 1080p in recent games — which is where a GPU with this level of performance belongs — there's not a massive benefit to having the extra memory.

Let's move on to the non-gaming tests.


Current page: GeForce RTX 4060 Ti 16GB: Bonus Gaming Tests


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


JarredWaltonGPU I just want to clarify something here: The score is a result of both pricing as well as performance and features, plus I took a look (again) at our About Page and the scores breakdown. This is most definitely a "Meh" product right now. Some of my previous reviews may have been half a point (star) higher than warranted. I've opted to "correct" my scale and thus this lands at the 2.5-star mark.

I do feel our descriptions of some of the other scores are way too close together. My previous reviews were based more on my past experience and an internal ranking that's perhaps not what the TH text would suggest. Here's how I'd break it down:

5 = Practically perfect

4.5 = Excellent

4 = Good

3.5 = Okay, possibly a bad price

3 = Okay but with serious caveats (pricing, performance, and/or other factors)

2.5 = Meh, niche use cases

...

The bottom four categories are still basically fine as described. Pretty much the TH text has everything from 3-star to 5-star as a "recommended" and that doesn't really jibe with me. 🤷‍♂️

This would have been great as a 3060 Ti replacement if it had 12GB and a 192-bit bus with a $399 price point. Then the 4060 Ti 8GB could have been a 3060 replacement with 8GB and a 128-bit bus at the $329 price point. And RTX 4060 would have been a 3050 replacement at $249.

Fundamentally, this is a clearly worse value and specs proposition than the RTX 4060 Ti 8GB and the RTX 4070. It's way too close to the former and not close enough to the latter to warrant the $499 price tag.

All of the RTX 40-series cards have generally been a case of "good in theory, priced too high." Everything from the 4080 down to the 4060 so far got a score of 3.5 stars from me. There's definitely wiggle room, and the text is more important than just that one final score. In retrospect, I still waffle on how the various parts actually rank.

Here's an alternate ranking, based on retrospect and the other parts that have come out:

4090: 4.5 star. It's an excellent halo part that gives you basically everything. Expensive, yes, but not really any worse than the previous gen 3090 / 3090 Ti and it's actually justifiable.

4080: 3-star. It's fine on performance, but the generational price increase was just too much. 3080 Ti should have been a $999 (at most) part, and this should be $999 or less.

4070 Ti: 3-star. Basically the same story as the 4080. It's fine performance, priced way too high generationally.

4070: 3.5-star. Still higher price than I'd like, but the overall performance story is much better.

4060 Ti 16GB: 2.5-star. Clearly a problem child, and there's a reason it wasn't sampled by Nvidia or its partners. (The review would have been done a week ago but I had a scheduled vacation.) This is now on the "Jarred's adjusted ranking."

4060 Ti 8GB: 3-star. Okay, still a higher price than we'd like and the 128-bit interface is an issue.

4060: 3.5-star. This isn't an amazing GPU, but it's cheaper than the 3060 launch price and so mostly makes up for the 128-bit interface, 8GB VRAM, and 24MB L2. Generally better as an overall pick than many of the other 40-series GPUs.

AMD's RX 7000-series parts are a similar story. I think at the current prices, the 7900 XTX works as a $949 part and warrants the 4-star score. 7900 XT has dropped to $759 and also warrants the 4-star score, maybe. The 7600 at $259 is still a 3.5-star part. So, like I said, there's wiggle room. I don't think any of the charts or text are fundamentally out of line, and a half-star adjustment is basically easily justifiable on almost any review I've done.

Lord_Moonub Jarred, thanks for this review. I do wonder if there is more silver lining on this card we might be missing though. Could it act as a good budget 4K card? What happens if users dial back settings slightly at 4K (e.g., no ray tracing, no bleeding-edge ultra features) and then make the most of DLSS 3 and the extra 16GB VRAM? I wonder if users might get something visually close to a top-line experience at a much lower price.

JarredWaltonGPU

Lord_Moonub said: Jarred, thanks for this review. I do wonder if there is more silver lining on this card we might be missing though. Could it act as a good budget 4K card? What happens if users dial back settings slightly at 4K (eg no Ray tracing, no bleeding edge ultra features ) and then make the most of DLSS 3 and the extra 16GB VRAM? I wonder if users might get something visually close to top line experience at a much lower price.

If you do those things, the 4060 Ti 8GB will be just as fast. Basically, dialing back settings to make this run better means dialing back settings so that more than 8GB isn't needed.

Elusive Ruse Damn, @JarredWaltonGPU went hard! Appreciate the review and the clarification of your scoring system.

InvalidError More memory doesn't do you much good without the bandwidth to put it to use. The 4060(Ti) needed 192bits to strike the practically perfect balance between capacity and bandwidth. It would have brought the 4060(Ti) launches from steaming garbage to at least being a consistent upgrade over the 3060(Ti).

Greg7579 Jarred, I'm building with the 4090 but love reading your GPU reviews, even the ones that are far below what I would build with, because I learn something every time.

I am not a gamer but a GFX Medium Format photographer and have multiple TB of high-res 200MB raw files that I work extensively with in Lightroom and Photoshop. I build every 4 years and update as I go. I build the absolute top-end of the PC arena, which is way overkill, but I do it anyway.

As you know, Lightroom has many new amazing AI masking and noise reduction features that are like magic, but so many users (photographers) are now grinding to a halt on their old rigs and laptops. Photographers tend to be behind the gamers on PC / laptop power. It is common knowledge on the photo and Adobe forums that these new AI capabilities eat VRAM like Skittles and extensively use the GPU for the grind. (Adobe LR & PS were always behind on using the GPU with the CPU for editing and export tasks but are now going at it with gusto.) When I run an AI DeNoise on a big GFX 200MB file, my old rig with the 3080 (I'm building again soon with the 4090) takes about 12 seconds to grind out the AI DeNoise task. Other rigs that photographers use take several minutes or just crash. The Adobe and Lightroom forums are full of howling and gnashing of teeth about this. I tell them to start upgrading, but here is my question.... I can't wait to see what the 4090 will do with these photography-related workflow tasks in LR.

Can you comment on this and tell me if indeed this new Lightroom AI masking and DeNoise (which is a miracle for photographers) is so VRAM intensive that doubling the VRAM on a card like this would really help a lot? Isn't it true that Nvidia made some decisions 3 years ago that resulted in not having enough (now far cheaper) VRAM in the 40 series? It should be double or triple what it is, right? Anything you can teach me about increased GPU power and VRAM in Adobe LR for us photographers?

hotaru251 The 4060 Ti should have been closer to a 4070.

The gap between them is huge and the cost is way too high (doubly so given that it requires DLSS 3 support to not get crippled by the limited bus).

atomicWAR

JarredWaltonGPU said: Some of my previous reviews may have been half a point (star) higher than warranted. I've opted to "correct" my scale and thus this lands at the 2.5-star mark.

Thank you for listening, Jarred. I was one of those claiming on multiple recent GPU reviews that your scores were about a half star off, though I was not alone in that sentiment. I was quick to defend you from trolls, though, as you clearly were not shilling for Nvidia either. This post proves my faith in you was well placed. Thank you for being a straight arrow!