Nvidia GeForce GTX 260/280 Review

Fillrate Tester Results

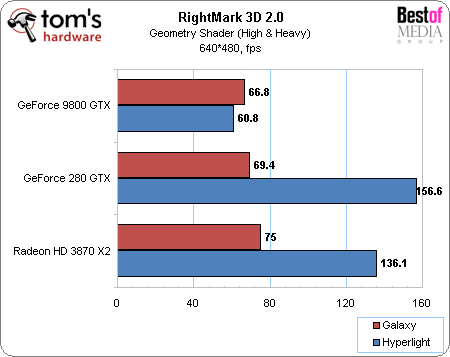

Geometry Shader

The Geometry Shading performance of Nvidia's previous Direct3D 10 GPU wasn't especially impressive, because its internal buffers were undersized. Remember that under Direct3D 10 a Geometry Shader can generate up to 1,024 single-precision floating-point values per incoming vertex. With significant geometry amplification, these buffers filled up quickly and stalled the units. With the GT200, Nvidia has made these buffers six times larger, which noticeably increases performance in certain cases, as we'll see. To take full advantage of the larger buffers, Nvidia also had to rework the scheduling of Geometry Shading threads.
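As a rough illustration of how quickly amplification eats into that budget, the sketch below works out the Direct3D 10 output limit. It assumes the rule that a geometry shader's declared `maxvertexcount` multiplied by the number of 32-bit values in each output vertex may not exceed 1,024; the output layouts are hypothetical examples, not the shaders used in this test.

```python
# Direct3D 10 limit on 32-bit values a geometry shader may emit per invocation
D3D10_GS_MAX_OUTPUT_SCALARS = 1024


def max_vertex_count(scalars_per_vertex: int) -> int:
    """Largest maxvertexcount a D3D10 geometry shader can declare for a given output vertex size."""
    if scalars_per_vertex <= 0:
        raise ValueError("an output vertex must carry at least one scalar")
    return D3D10_GS_MAX_OUTPUT_SCALARS // scalars_per_vertex


# Hypothetical output vertex layouts, sizes counted in 32-bit floats
layouts = {
    "position only (float4)": 4,
    "position + color (2 x float4)": 8,
    "position + normal + 2 texcoords (4 x float4)": 16,
}

for name, size in layouts.items():
    print(f"{name}: up to {max_vertex_count(size)} vertices per invocation")
```

The richer each emitted vertex is, the fewer vertices fit in the budget, but the total volume of data a heavily amplifying shader pushes through the chip's buffers stays close to the same 1,024-value ceiling.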

On the first shader, Galaxy, the improvement was very modest: 4%. With Hyperlight, on the other hand, it was no less than 158%, evidence of how much this type of shader can gain, although everything depends on how the shader is implemented and how much data it emits (the number of floating-point values generated per incoming vertex). With this shader, the GTX 280 closes the gap and edges out the 3870 X2.
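Percentage gains of this size are easy to misread: a 158% improvement means the new GPU scores 2.58 times what the previous one did, not 1.58 times. The small sketch below spells out that conversion; the scores passed to `percent_gain` are hypothetical placeholders, not figures from our charts.

```python
def speedup_factor(percent_gain: float) -> float:
    """Turn an 'X% faster' figure into a multiplier of the old result."""
    return 1.0 + percent_gain / 100.0


def percent_gain(old_score: float, new_score: float) -> float:
    """Percentage improvement of new_score over old_score."""
    return (new_score - old_score) / old_score * 100.0


# Gains reported in this review for the two Geometry Shader tests
for name, gain in (("Galaxy", 4.0), ("Hyperlight", 158.0)):
    print(f"{name}: new result = {speedup_factor(gain):.2f} x old result")

# Hypothetical scores: going from 100 to 258 is a 158% gain
print(f"{percent_gain(100.0, 258.0):.0f}%")
```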

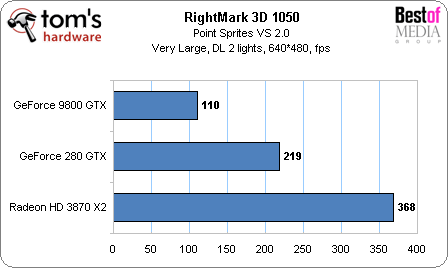

Now let’s look at the Rightmark 3D Point Sprites test (in Vertex Shading 2.0).

Why are we talking about this test in the section on Geometry Shaders? Simply because, starting with Direct3D 10, point sprites are handled by Geometry Shaders, which explains why performance doubles between the 9800 GTX and the GTX 280!
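To make the link concrete, here is a CPU-side sketch of the work a Direct3D 10 geometry shader performs for a point sprite: one incoming point becomes a four-vertex triangle strip carrying texture coordinates. The function name, the 2D positions with y pointing up, and the top-left texture origin are illustrative assumptions; a real implementation would be an HLSL geometry shader, typically working in clip space.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]


def expand_point_sprite(center: Vec2, size: float) -> List[Tuple[Vec2, Vec2]]:
    """Expand one point into a 4-vertex triangle strip of (position, texcoord) pairs,
    mimicking what a geometry shader emits for a point sprite (hypothetical helper)."""
    cx, cy = center
    half = size / 2.0
    # Strip order: bottom-left, top-left, bottom-right, top-right
    corners = [(-1, -1), (-1, 1), (1, -1), (1, 1)]
    vertices = []
    for dx, dy in corners:
        position = (cx + dx * half, cy + dy * half)
        # Texture origin at the top-left corner, v growing downward
        texcoord = ((dx + 1) / 2, (1 - dy) / 2)
        vertices.append((position, texcoord))
    return vertices


for pos, uv in expand_point_sprite((10.0, 20.0), 4.0):
    print(pos, uv)
```

Each point thus turns into 16 floats of output (4 vertices, each with 2 position and 2 texture components), a steady per-primitive amplification that runs entirely through the Geometry Shader path.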

Various Improvements

Nvidia has also optimized several other aspects of its architecture. The post-transform cache has been enlarged. This cache stores the results of the vertex shaders so that the same vertex doesn't have to be transformed again when indexed primitives or triangle strips reuse it. Thanks to the larger number of ROPs, Early-Z rejection performance has also improved: the GT200 can reject up to 32 hidden pixels per cycle before running the pixel shader. Finally, Nvidia says it has optimized the transfer of data and commands between the driver and the GPU's front end.
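The benefit of a larger post-transform cache can be estimated with a simple model: replay a mesh's index buffer through a cache and count how many vertices actually have to be shaded. This is a sketch under stated assumptions: FIFO replacement, a regular grid mesh, and cache sizes of 8, 16 and 32 entries chosen purely for illustration, since GT200's actual cache size and replacement policy aren't given here.

```python
from collections import deque
from typing import List


def count_transforms(indices: List[int], cache_size: int) -> int:
    """Count vertex-shader runs for an index buffer replayed through a FIFO post-transform cache."""
    fifo = deque()   # cached vertex indices, oldest first
    cached = set()   # same contents as fifo, for O(1) lookups
    transforms = 0
    for idx in indices:
        if idx in cached:
            continue            # hit: reuse the already-shaded vertex
        transforms += 1         # miss: the vertex shader must run
        fifo.append(idx)
        cached.add(idx)
        if len(fifo) > cache_size:
            cached.discard(fifo.popleft())  # evict the oldest entry
    return transforms


def grid_indices(cols: int, rows: int) -> List[int]:
    """Indexed triangle list for a cols x rows grid of quads, emitted row by row."""
    stride = cols + 1
    out: List[int] = []
    for y in range(rows):
        for x in range(cols):
            v0 = y * stride + x
            v1 = v0 + 1
            v2 = v0 + stride
            v3 = v2 + 1
            out += [v0, v2, v1, v1, v2, v3]  # two triangles per quad
    return out


if __name__ == "__main__":
    indices = grid_indices(32, 32)
    triangles = len(indices) // 3
    for size in (8, 16, 32):  # hypothetical cache sizes
        shaded = count_transforms(indices, size)
        print(f"cache={size:2d}: {shaded} vertex shader runs, ACMR={shaded / triangles:.2f}")
```

ACMR (average cache miss ratio) is the number of shaded vertices per triangle: without any cache it is 3, and for a large regular grid it can approach 0.5, because each vertex is shared by about six triangles.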

Lunarion what a POS, the 9800gx2 is $150+ cheaper and performs just about the same. Let's hope the new ATI cards coming actually make a difference.

foxhound009 woow,.... that's the new "high end" gpu????

lolz.. 3870 x2 will get cheaper... and nvidia gtx200 lies on the shelves providing space for dust........

(I really expected more from this one... :/ )

thatguy2001 Pretty disappointing. And here I was thinking that the gtx 280 was supposed to put the 9800gx2 to shame. Not too good.

cappster Both cards are priced out of my price range. Mainstream decently priced cards sell better than the extreme high priced cards. I think Nvidia is going to lose this round of "next gen" cards and price to performance ratio to ATI. I am a fan of whichever company will provide a nice performing card at a decent price (sub 300 dollars).

njalterio Very disappointing, and I had to laugh when they compared the prices for the GTX 260 and the GTX 280, $450 and $600, calling the GTX 260 "nearly half the price" of the GTX 280. Way to fail at math. lol.

NarwhaleAu It is going to get owned by the 4870x2. In some cases the 3870x2 was quicker - not many, but we are talking 640 shaders total vs. 1600 total for the 4870x2.

MooseMuffin Loud, power hungry, expensive and not a huge performance improvement. Nice job nvidia.

compy386 This should be great news for AMD. The 4870 is rumored to come in at 40% above the 9800GTX so that would put it at about the 260GTX range. At $300 it would be a much better value. Plus AMD was expecting to price it in the $200s so even if it hits low, AMD can lower the price and make some money.

vochtige i think i'll get a 8800ultra. i'll be safe for the next 5 generations of nvidia! try harder nv crew