Nvidia’s Turing Architecture Explored: Inside the GeForce RTX 2080

Display Outputs and the Video Controller

Display Outputs: A Controller For The Future

The GeForce RTX Founders Edition cards sport three DisplayPort 1.4a connectors, one HDMI 2.0b output, and one VirtualLink interface. They support up to four monitors simultaneously and are, naturally, HDCP 2.2-compatible. Why no HDMI 2.1? That standard was released in November of 2017, long after Turing was finalized.

Turing also enables Display Stream Compression (DSC) over DisplayPort, making it possible to drive an 8K (7680x4320) display over a single stream at 60 Hz. GP102 lacked this functionality. DSC is also the key to running at 4K (3840x2160) with a 120 Hz refresh and HDR.
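To see why compression matters for these modes, here is a rough bandwidth estimate in Python. It is a sketch under simplifying assumptions: uncompressed RGB 4:4:4 pixels, active pixels only (real video timings add blanking overhead on top), and a DisplayPort 1.4 HBR3 link of four lanes at 8.1 Gbit/s each with 8b/10b line coding, which leaves about 25.92 Gbit/s for pixel data. The function names are illustrative.

```python
# Rough DisplayPort 1.4 bandwidth check (illustrative sketch).
# Assumptions: RGB 4:4:4, active pixels only (blanking ignored),
# HBR3 link = 4 lanes x 8.1 Gbit/s with 8b/10b line coding.

HBR3_LANE_GBPS = 8.1
LANES = 4
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b: 8 data bits per 10 line bits


def link_payload_gbps() -> float:
    """Usable data rate of a four-lane HBR3 link, in Gbit/s."""
    return HBR3_LANE_GBPS * LANES * ENCODING_EFFICIENCY


def video_gbps(width: int, height: int, refresh_hz: int, bits_per_channel: int) -> float:
    """Uncompressed RGB pixel data rate in Gbit/s (three channels per pixel)."""
    bits_per_pixel = 3 * bits_per_channel
    return width * height * refresh_hz * bits_per_pixel / 1e9


def min_compression_ratio(width: int, height: int, refresh_hz: int,
                          bits_per_channel: int) -> float:
    """Smallest compression ratio that fits the mode on the link (1.0 = none needed)."""
    needed = video_gbps(width, height, refresh_hz, bits_per_channel)
    return max(1.0, needed / link_payload_gbps())


if __name__ == "__main__":
    modes = {
        "8K 60 Hz, 8-bit": (7680, 4320, 60, 8),
        "4K 120 Hz, 10-bit HDR": (3840, 2160, 120, 10),
    }
    capacity = link_payload_gbps()
    for name, mode in modes.items():
        need = video_gbps(*mode)
        print(f"{name}: {need:.2f} Gbit/s vs {capacity:.2f} Gbit/s "
              f"-> needs at least {min_compression_ratio(*mode):.2f}:1")
```

Both modes exceed the link's payload even before blanking is counted. VESA describes DSC as visually lossless at ratios of up to about 3:1, which is enough headroom to fit either one on a single cable.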

Speaking of HDR, Turing now handles HDR content natively through dedicated tone-mapping hardware. Pascal, on the other hand, had to apply processing that added latency.
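Nvidia doesn't document the curve its tone-mapping hardware applies, so the Python sketch below only illustrates the general idea with textbook formulas. It decodes an HDR10 code value using the SMPTE ST 2084 (PQ) transfer function, then compresses the resulting luminance into an SDR-style 0-1 range with the extended Reinhard operator. The 100-nit reference level and the function names are assumptions for illustration, not Turing's actual pipeline.

```python
# Illustrative HDR-to-SDR tone mapping (NOT Nvidia's hardware algorithm).
# Step 1: SMPTE ST 2084 (PQ) EOTF, normalized signal 0..1 -> luminance in nits.
# Step 2: extended Reinhard operator, luminance -> display value 0..1.

M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0


def pq_to_nits(signal: float) -> float:
    """Decode a normalized PQ code value (0..1) to absolute luminance in cd/m^2."""
    p = signal ** (1 / M2)
    y = max(p - C1, 0.0) / (C2 - C3 * p)
    return PQ_PEAK_NITS * y ** (1 / M1)


def reinhard_extended(nits: float, white_nits: float = PQ_PEAK_NITS,
                      reference_nits: float = 100.0) -> float:
    """Compress luminance so that `white_nits` maps to 1.0.

    `reference_nits` (an assumed 100 nits here) is the luminance treated as 1.0
    before compression.
    """
    lum = nits / reference_nits
    lum_white = white_nits / reference_nits
    return lum * (1 + lum / (lum_white ** 2)) / (1 + lum)


if __name__ == "__main__":
    for code in (0.0, 0.5, 0.75, 1.0):
        nits = pq_to_nits(code)
        print(f"PQ {code:.2f} -> {nits:8.1f} nits -> SDR {reinhard_extended(nits):.3f}")
```

Dedicated hardware would perform this kind of per-pixel curve evaluation at scanout rather than in a separate processing pass, though the exact curve and where it sits in Turing's pipeline aren't specified here.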

Finally, all three Founders Edition cards include VirtualLink connectors for next-generation VR HMDs. The VirtualLink interface utilizes a USB Type-C connector but is based on an Alternate Mode with reconfigured pins to deliver four DisplayPort lanes, a bi-directional USB 3.1 Gen2 data channel for high-res sensors, and up to 27W of power. According to the VirtualLink Consortium, existing headsets typically operate within a 15W envelope, including displays, controllers, audio, power loss over a 5m cable, cable electronics, and connector losses. But this new interface is designed to support higher-power devices with improved display capabilities, better audio, higher-end cameras, and accessory ports as well. Just be aware that VirtualLink’s power delivery is not reflected in the TDP specification of GeForce RTX cards; Nvidia says using the interface requires up to an additional 35W.
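Because that extra draw sits outside the rated TDP, it has to be added separately when budgeting power for a VR setup. The Python sketch below does that bookkeeping with the figures quoted above (up to 27 W delivered to the headset, up to 35 W extra at the card, about 15 W for a typical current headset). The helper names are hypothetical, and the 225 W board-power value in the usage block is a placeholder, not a specification for any particular card.

```python
# Hypothetical power-budget helper using the VirtualLink figures quoted above.
VIRTUALLINK_MAX_DELIVERY_W = 27   # max power the connector supplies to the headset
VIRTUALLINK_CARD_OVERHEAD_W = 35  # Nvidia: extra card power when VirtualLink is in use
TYPICAL_HEADSET_W = 15            # VirtualLink Consortium: typical current headset envelope


def card_power_budget_w(rated_board_power_w: float, virtuallink_in_use: bool) -> float:
    """Worst-case card draw: rated board power plus VirtualLink overhead if used."""
    overhead = VIRTUALLINK_CARD_OVERHEAD_W if virtuallink_in_use else 0
    return rated_board_power_w + overhead


def headset_headroom_w(headset_draw_w: float = TYPICAL_HEADSET_W) -> float:
    """How much of the 27 W VirtualLink allowance a headset leaves unused."""
    return VIRTUALLINK_MAX_DELIVERY_W - headset_draw_w


if __name__ == "__main__":
    example_board_power_w = 225  # placeholder value for illustration only
    print(card_power_budget_w(example_board_power_w, True))  # prints 260
    print(headset_headroom_w())                               # prints 12
```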

Video Acceleration: Encode And Decode Improvements

Hardware-accelerated video features don’t get as much attention as gaming, but new graphics architectures typically do add improvements that support the latest compression standards, incorporate more advanced coding tools/profiles, and offload work from the CPU.

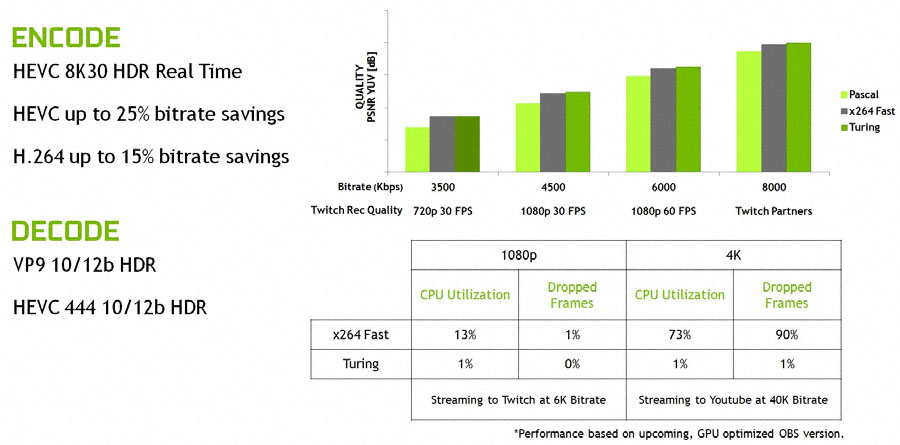

Encode performance is more important than ever for gamers streaming content to social platforms. A GPU that handles the workload in hardware frees up other platform resources, so the encode has a smaller impact on game frame rates. Historically, though, GPU-accelerated encoding didn’t quite match the quality of a software encode at a given bit rate. Nvidia claims Turing changes this, absorbing the workload while exceeding the quality of a software-based x264 Fast encode (according to its own peak signal-to-noise ratio benchmark). Because quality improvements offer diminishing returns beyond a certain point, even matching the Fast profile would be notable. But streamers are most interested in the GPU’s ability to minimize CPU utilization and bit rate.
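Nvidia's quality claim rests on peak signal-to-noise ratio, which compares each decoded frame against the source: PSNR = 10·log10(MAX²/MSE), where MAX is the largest possible sample value (255 for 8-bit video) and MSE is the mean squared error between the two frames. Higher is better, and identical frames give infinity. Below is a minimal Python sketch over flat lists of 8-bit samples; it is not Nvidia's benchmark harness, whose details (which clips, which planes, how per-frame scores were averaged) aren't described here.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[int], test: Sequence[int], max_value: int = 255) -> float:
    """Peak signal-to-noise ratio in dB between two equal-length sample sequences."""
    if len(reference) != len(test) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    source = [16, 64, 128, 200, 235]   # hypothetical source samples
    encoded = [17, 62, 128, 203, 234]  # hypothetical decoded samples
    print(f"{psnr(source, encoded):.2f} dB")
```

Because PSNR is purely a pixel-error measure, it doesn't always track perceived quality, which is one reason a vendor's PSNR comparison is worth reading as a claim rather than a verdict.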

This generation, the NVEnc encoder is fast enough for real-time 8K HDR at 30 FPS. Optimizations to the encoder facilitate bit rate savings of up to 25% in HEVC (or a corresponding quality increase at the same bit rate) and up to 15% in H.264. Hardware acceleration makes real-time encoding at 4K a viable option as well, although Nvidia doesn’t specify what CPU it tested against to generate a 73% utilization figure in its software-only comparison.
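The "up to" savings figures translate directly into upload bandwidth or file size at matched quality. The arithmetic is sketched below in Python; the 6,000 kbps starting bit rate and one-hour duration are hypothetical inputs, the helper names are illustrative, and the 25% and 15% reductions are Nvidia's best-case claims rather than typical results.

```python
# Best-case bit rate savings claimed for Turing's NVEnc, applied to a hypothetical stream.
CLAIMED_SAVINGS = {"HEVC": 0.25, "H.264": 0.15}  # Nvidia's "up to" figures


def reduced_bitrate_kbps(bitrate_kbps: float, codec: str) -> float:
    """Bit rate needed for the same quality after the claimed savings."""
    return bitrate_kbps * (1 - CLAIMED_SAVINGS[codec])


def stream_size_gb(bitrate_kbps: float, hours: float) -> float:
    """Size of a recording in gigabytes (10^9 bytes) at a constant bit rate."""
    bits = bitrate_kbps * 1000 * hours * 3600
    return bits / 8 / 1e9


if __name__ == "__main__":
    baseline_kbps = 6000  # hypothetical streaming bit rate
    for codec in CLAIMED_SAVINGS:
        new_rate = reduced_bitrate_kbps(baseline_kbps, codec)
        print(f"{codec}: {baseline_kbps} -> {new_rate:.0f} kbps, "
              f"{stream_size_gb(baseline_kbps, 1):.2f} -> "
              f"{stream_size_gb(new_rate, 1):.2f} GB per hour")
```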


Additionally, the decode block supports VP9 10/12-bit HDR. It’s not clear how that differs from GP102-based cards, though, since the GeForce GTX 1080 Ti is clearly listed in Nvidia’s NVDec support matrix as having VP9 10/12-bit support as well. HEVC 4:4:4 10/12-bit HDR is likewise listed as a new feature for Turing.
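The 4:4:4 label refers to chroma subsampling: in 4:4:4 every pixel keeps its own two color-difference samples, while the 4:2:0 format common in consumer video shares one pair among each 2x2 block of pixels. The Python sketch below (function names are illustrative) shows how much more raw data a decoder produces per 4:4:4 frame at 10 or 12 bits. It assumes tightly packed samples; real decoders often store 10- and 12-bit samples in 16-bit containers, which would make every figure larger.

```python
# Raw (decoded) frame size for different chroma formats and bit depths.
# Chroma samples per pixel, in addition to the one luma sample.
CHROMA_SAMPLES_PER_PIXEL = {
    "4:4:4": 2.0,  # Cb and Cr at full resolution
    "4:2:2": 1.0,  # chroma halved horizontally
    "4:2:0": 0.5,  # chroma halved horizontally and vertically
}


def frame_megabytes(width: int, height: int, chroma: str, bit_depth: int) -> float:
    """Uncompressed frame size in MB (10^6 bytes), assuming tightly packed samples."""
    samples = width * height * (1 + CHROMA_SAMPLES_PER_PIXEL[chroma])
    return samples * bit_depth / 8 / 1e6


if __name__ == "__main__":
    for chroma in ("4:2:0", "4:4:4"):
        for depth in (8, 10, 12):
            size = frame_megabytes(3840, 2160, chroma, depth)
            print(f"4K {chroma} {depth}-bit: {size:.1f} MB per frame")
```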


siege19: "And although veterans in the hardware field have their own opinions of what real-time ray tracing means to an immersive gaming experience, I’ve been around long enough to know that you cannot recommend hardware based only on promises of what’s to come."

So wait, do I preorder or not? (kidding)

jimmysmitty: Well-done article, Chris. This is why I love you. Details and logical thinking based on the facts we have.

Next up: benchmarks. Can't wait to see if the improvements nVidia made come to fruition in performance worthy of the price.

Lutfij: Holding out with bated breath about performance metrics.

Pricing seems to be off, but the follow-up review should guide users as to its worth!

Krazie_Ivan: i didn't expect the 2070 to be on TU106. as noted in the article, **106 has been a mid-range ($240-ish msrp) chip for a few generations... asking $500-600 for a mid-range GPU is insanity. esp since there's no way it'll have playable fps with RT "on" if the 2080ti struggles to maintain 60. DLSS is promisingly cool, but that's still not worth the MASSIVE cost increases.

jimmysmitty, replying to Krazie_Ivan: It is possible that they are changing their lineup scheme. 106 might have become the lower high-end card, and they might have something lower to replace it. This happens all the time.

Lucky_SLS: turing does seem to have the ability to pump up the fps if used right with all its features. I just hope that nvidia really made a card to power up its upcoming 4k 200hz hdr g sync monitors. wow, that's a mouthful!

anthonyinsd: ooh man the jedi mind trick Nvidia played on hyperbolic gamers to get rid of their overstock is gonna be EPIC!!! and just based on facts: 12nm gddr6 awesome new voltage regulation and to GAME only processes thats a win in my book. I mean if all you care about is your rast score, then you should be on the hunt for a titan V, if it doesn't rast its trash lol. been 10 years since econ 101, but if you want to get rid of overstock you don't tell much about the new product till its out; then the people who thought they were smart getting the older product now want to buy the new one too....

none12345: I see a lot of features that are seemingly designed to save compute resources and output lower image quality, with the promise that those savings will then be applied to increase image quality on the whole.

I'm quite dubious about this. My worry is that some of the areas of computer graphics that need the most love are going to get even worse. We can only hope that overall image quality goes up at the same frame rate, rather than frame rate going up and parts of the image getting worse.

I do not long to return to the days when different graphics cards output different image quality at the same up-front graphics settings. This was very annoying in the past. You had some cards that looked faster if you just looked at their fps numbers, but then you looked at the image quality and noticed that one was noticeably worse.

I worry that in the end we might end up in the age of blur, where we have localized areas of shiny, highly detailed objects/effects layered on top of an increasingly blurry background.

CaptainTom: I have to admit that since I have a high-refresh (non-Adaptive Sync) monitor, I am eyeing the 2080 Ti. DLSS would be nice if it was free in 1080p (and worked well), and I still don't need to worry about Gstink. But then again I have a sneaking suspicion that AMD is going to respond with 7nm Cards sooner than everyone expects, so we'll see.

P.S. Guys the 650 Ti was a 106 card lol. Now a xx70 is a 106 card. Can't believe the tech press is actually ignoring the fact that Nvidia is relabeling their low-end offering as a xx70, and selling it for $600 (Halo product pricing). I swear Nvidia could get away with murder...

mlee 2500: 4nm is no longer considered a "Slight Density Improvement".

Hasn't been for over a decade. It's only lumped in with 16 from a marketing standpoint because it's no longer the flagship lithography (7nm).