Nvidia’s Turing Architecture Explored: Inside the GeForce RTX 2080

RTX-OPS: Trying to Make Sense of Performance

As modern graphics processors become more complex, integrating resources that perform dissimilar functions but still affect the overall performance picture, it becomes increasingly difficult to summarize their capabilities. We already use terms like fillrate to compare how many billions of pixels or texture elements a GPU can theoretically render to screen in a second. Memory bandwidth, processing power, primitive rates—the graphics world is full of peaks that become the basis for back-of-the-envelope calculations.

Well, with the addition of Tensor and RT cores to its Turing Streaming Multiprocessors, Nvidia found it necessary to devise a new metric that’d suitably encompass the capabilities of its INT32 and FP32 math pipelines, its RT cores, and the Tensor cores. Tom’s Hardware doesn’t plan to use the resulting “RTX-OPS” specification for any of its comparisons, but since Nvidia is citing it, we want to at least describe the equation’s composition.

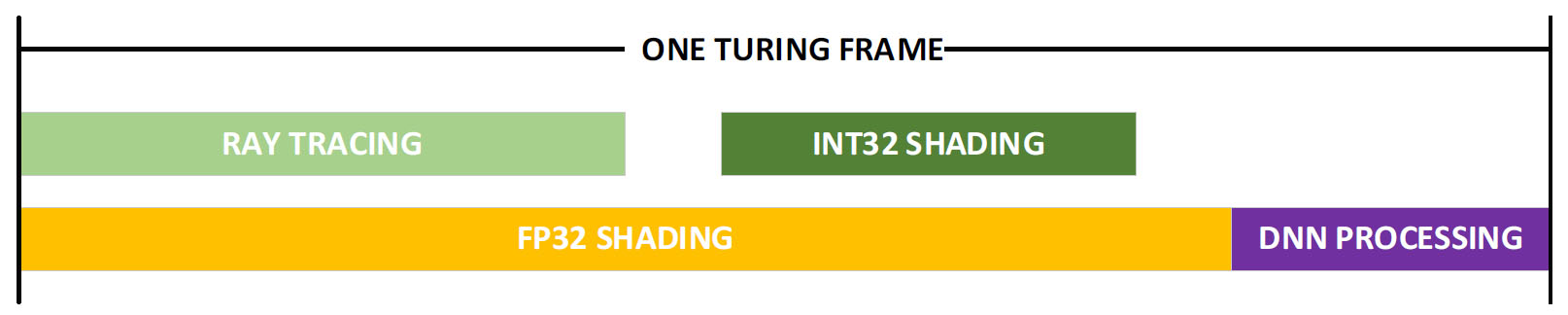

The RTX-OPS model requires utilization of all resources, which is a bold and very forward-looking assumption. After all, until games broadly adopt Turing’s ray tracing and deep learning capabilities, RT and Tensor cores sit idle. In anticipation of those features coming online, Nvidia developed its own approximation of the processing involved in one frame rendered by a Turing-based GPU.

In the diagram above, Nvidia shows roughly 80% of the frame consumed by rendering and 20% going into AI. In the slice dedicated to shading, there’s a roughly 50/50 split between ray tracing and FP32 work. Drilling down even deeper into the CUDA cores, Nvidia observed (as we mentioned earlier) roughly 36 INT32 operations for every 100 FP32 instructions across a swathe of shader traces, which gives a reasonable idea of what happens in an “ideal” scene leveraging every functional unit.
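To make that frame model concrete, here is a minimal Python sketch that turns it into per-unit weights. It assumes, as Nvidia’s RTX-OPS equation does, that the FP32 pipeline is credited for the entire 80% rendering slice, that RT cores are busy for half of that slice, and that INT32 work scales with FP32 work at the observed ratio. The function `rtx_ops_weights` and its constants are hypothetical illustrations, not anything Nvidia publishes.

```python
# Minimal sketch of Nvidia's frame model as per-unit weights.
# Assumption (mirroring Nvidia's RTX-OPS equation): FP32 is credited for the
# whole rendering slice, RT cores for half of it, and INT32 work scales with FP32.

RENDER_SHARE = 0.80   # share of the frame spent rendering/shading
AI_SHARE = 0.20       # share of the frame spent on deep learning (Tensor cores)
RT_FRACTION = 0.50    # RT cores busy for roughly half of the rendering slice


def rtx_ops_weights(int32_per_100_fp32: float) -> dict[str, float]:
    """Return the fraction of a frame credited to each functional unit (hypothetical helper)."""
    fp32 = RENDER_SHARE
    return {
        "fp32": fp32,
        "int32": fp32 * int32_per_100_fp32 / 100,  # INT32 ops issued alongside FP32 work
        "rt": RENDER_SHARE * RT_FRACTION,
        "tensor": AI_SHARE,
    }


for ratio in (35, 36):
    weights = rtx_ops_weights(ratio)
    print(ratio, {unit: round(share, 3) for unit, share in weights.items()})
```

With 35 INT32 ops per 100 FP32 ops, the INT32 weight works out to 28%, the figure Nvidia’s math uses; the 36-per-100 ratio gives 28.8%, which moves the final RTX-OPS total by only about 0.1.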

So, given that…

FP32 compute = 4352 FP32 cores * 1635 MHz clock rate (GPU Boost rating) * 2 = 14.2 TFLOPS

RT core compute = ~10 TFLOPS per gigaray, assuming a GeForce GTX 1080 Ti (11.3 TFLOPS FP32 at 1582 MHz) can cast 1.1 billion rays per second using software emulation = ~100 TFLOPS on a GeForce RTX 2080 Ti capable of casting ~10 billion rays per second


INT32 instructions per second = 4352 INT32 cores * 1635 MHz clock rate (GPU Boost rating) * 2 = 14.2 TIPS

Tensor core compute = 544 Tensor cores * 1635 MHz clock rate (GPU Boost rating) * 64 floating-point FMA operations per clock * 2 = 113.8 FP16 Tensor TFLOPS

…we can walk Nvidia’s math backwards to see how it reached a 78 RTX-OPS specification for its GeForce RTX 2080 Ti Founders Edition card:

(14 TFLOPS [FP32] * 80%) + (14 TIPS [INT32] * 28% [~35 INT32 ops for every 100 FP32 ops, with FP32 taking up 80% of the workload]) + (100 TFLOPS [ray tracing] * 40% [half of 80%]) + (114 TFLOPS [FP16 Tensor] * 20%) = 77.9
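The arithmetic can be reproduced with a short Python sketch. It recomputes each subsystem’s peak from the formulas above, rounds the inputs to whole numbers the way the equation does, and applies Nvidia’s weights. The helper `peak_tera` and the variable names are our own, and the ~10 TFLOPS-per-gigaray RT equivalence is Nvidia’s assumption rather than a measured figure.

```python
# Back-of-the-envelope reproduction of Nvidia's RTX-OPS figure for the
# GeForce RTX 2080 Ti Founders Edition, using the inputs quoted above.

BOOST_MHZ = 1635           # GPU Boost rating used throughout
CUDA_CORES = 4352          # FP32 cores; INT32 cores are counted the same way
TENSOR_CORES = 544
TENSOR_FMA_PER_CLOCK = 64  # FP16 FMA operations per Tensor core per clock


def peak_tera(units: int, clock_mhz: float, ops_per_clock: float) -> float:
    """Peak rate in tera-operations per second: units * clock * ops per clock."""
    return units * clock_mhz * 1e6 * ops_per_clock / 1e12


fp32_tflops = peak_tera(CUDA_CORES, BOOST_MHZ, 2)  # an FMA counts as 2 ops
int32_tips = peak_tera(CUDA_CORES, BOOST_MHZ, 2)   # same formula, as in the article
tensor_tflops = peak_tera(TENSOR_CORES, BOOST_MHZ, TENSOR_FMA_PER_CLOCK * 2)

# RT cores: ~10 TFLOPS of shader work per gigaray (GTX 1080 Ti: 11.3 TFLOPS
# emulating 1.1 gigarays/s), times ~10 gigarays/s on the RTX 2080 Ti.
tflops_per_gigaray = round(11.3 / 1.1)  # ~10
rt_tflops_equiv = tflops_per_gigaray * 10  # ~100

# Share of a frame credited to each unit in Nvidia's model.
weights = {"fp32": 0.80, "int32": 0.28, "rt": 0.40, "tensor": 0.20}

# Nvidia's published math uses whole-number inputs (14, 14, 100, 114).
inputs = {
    "fp32": round(fp32_tflops),
    "int32": round(int32_tips),
    "rt": rt_tflops_equiv,
    "tensor": round(tensor_tflops),
}

rtx_ops = sum(inputs[k] * weights[k] for k in weights)

print(f"FP32    {fp32_tflops:.1f} TFLOPS")
print(f"INT32   {int32_tips:.1f} TIPS")
print(f"Tensor  {tensor_tflops:.1f} TFLOPS")
print(f"RTX-OPS ~ {rtx_ops:.1f}")
```

Running it prints peaks of about 14.2 TFLOPS, 14.2 TIPS, and 113.8 Tensor TFLOPS, plus an RTX-OPS total of about 77.9. Feeding in the unrounded peaks instead (with RT still at 100) gives roughly 78.1, so the rounding hardly matters.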

Again, this model rests on a lot of assumptions. We see no way to use it for generational or competitive comparisons, and we don’t want to get in the habit of generalizing ratings across many different resources. At the same time, it’s clear that Nvidia wanted a way to represent performance holistically, and we cannot fault the company for trying, particularly since it didn’t just add up the capabilities of each subsystem but instead isolated their individual contributions to a frame.
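The gap between simply adding up every subsystem and weighting each one by its share of a frame is easy to see numerically. This sketch reuses the rounded peaks and weights from the RTX-OPS equation (the dictionary names are our own) and prints each unit’s contribution next to a naive sum.

```python
# Compare a naive "add every peak" total with Nvidia's weighted RTX-OPS,
# using the rounded per-subsystem peaks from the RTX-OPS equation.

peaks = {"fp32": 14, "int32": 14, "rt": 100, "tensor": 114}          # tera-ops/s
weights = {"fp32": 0.80, "int32": 0.28, "rt": 0.40, "tensor": 0.20}  # share of a frame

naive_total = sum(peaks.values())
weighted_total = sum(peaks[k] * weights[k] for k in peaks)

# Per-unit contribution to the weighted total.
for name in peaks:
    contribution = peaks[name] * weights[name]
    print(f"{name:>6}: {contribution:5.1f} of {weighted_total:.1f}")

print(f"naive sum: {naive_total}, weighted: {weighted_total:.1f}")
```

The naive total comes to 242, roughly three times the weighted 77.9, and the ray tracing term alone supplies 40 of those points, just over half of the rating.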


siege19 "And although veterans in the hardware field have their own opinions of what real-time ray tracing means to an immersive gaming experience, I’ve been around long enough to know that you cannot recommend hardware based only on promises of what’s to come."

So wait, do I preorder or not? (kidding)

jimmysmitty Well done article Chris. This is why I love you. Details and logical thinking based on the facts we have.

Next up benchmarks. Can't wait to see if the improvements nVidia made come to fruition in performance worthy of the price.

Lutfij Holding out with bated breath about performance metrics.

Pricing seems to be off, but the follow-up review should guide users as to its worth!

Krazie_Ivan i didn't expect the 2070 to be on TU106. as noted in the article, **106 has been a mid-range ($240-ish msrp) chip for a few generations... asking $500-600 for a mid-range GPU is insanity. esp since there's no way it'll have playable fps with RT "on" if the 2080ti struggles to maintain 60. DLSS is promisingly cool, but that's still not worth the MASSIVE cost increases.

jimmysmitty (replying to Krazie_Ivan) It is possible that they are changing their lineup scheme. 106 might have become the low high-end card and they might have something lower to replace it. This happens all the time.

Lucky_SLS turing does seem to have the ability to pump up the fps if used right with all its features. I just hope that nvidia really made a card to power up its upcoming 4k 200hz hdr g sync monitors. wow, that's a mouthful!

anthonyinsd ooh man the jedi mind trick Nvidia played on hyperbolic gamers to get rid of their overstock is gonna be EPIC!!! and just based on facts: 12nm gddr6 awesome new voltage regulation and to GAME only processes that's a win in my book. I mean if all you care about is your rast score, then you should be on the hunt for a titan V, if it doesn't rast it's trash lol. been 10 years since econ 101, but if you want to get rid of overstock you don't tell much about the new product till it's out; then the people who thought they were smart getting the older product now want to buy the new one too....

none12345 I see a lot of features that are seemingly designed to save compute resources and output lower image quality, with the promise that those savings will then be applied to increase image quality on the whole.

I'm quite dubious about this. My worry is that some of the areas of computer graphics that need the most love are going to get even worse. We can only hope that overall image quality goes up at the same frame rate, rather than frame rate going up and parts of the image getting worse.

I do not long to return to the days when different graphics cards output different image quality at the same up-front graphics settings. This was very annoying in the past. You had some cards that looked faster if you just looked at their fps numbers, but then you looked at the image quality and noticed that one was noticeably worse.

I worry that in the end we might end up in the age of blur, where we have localized areas of shiny, highly detailed objects/effects layered on top of an increasingly blurry background.

CaptainTom I have to admit that since I have a high-refresh (non-Adaptive Sync) monitor, I am eyeing the 2080 Ti. DLSS would be nice if it was free in 1080p (and worked well), and I still don't need to worry about Gstink. But then again I have a sneaking suspicion that AMD is going to respond with 7nm Cards sooner than everyone expects, so we'll see.

P.S. Guys the 650 Ti was a 106 card lol. Now a xx70 is a 106 card. Can't believe the tech press is actually ignoring the fact that Nvidia is relabeling their low-end offering as a xx70, and selling it for $600 (Halo product pricing). I swear Nvidia could get away with murder...

mlee 2500 4nm is no longer considered a "Slight Density Improvement".

Hasn't been for over a decade. It's only lumped in with 16 from a marketing standpoint because it's no longer the flagship lithography (7nm).