How To: Building Your Own Render Farm

GPU-Based Rendering

When using software-based rendering, the graphics processor in your render nodes won't make any difference to performance or to the final image. You can use an integrated GPU or a spare mainstream card you have lying around; since you'll primarily be connecting to the render nodes using a VNC client, it won't matter.

However, if you are instead using GPU-based rendering, you need to plan on adding to each node a graphics card that your rendering solution supports. If you are using Nvidia Gelato, Nvidia's solution for GPU-based 3D rendering, any GeForce FX 5200 or higher is usable as your rendering device, while Gelato Pro requires a Quadro FX.

Support for Gelato appears to be trailing off in favor of Compute Unified Device Architecture (CUDA) development. Between CUDA and OpenCL, we can expect to see at least some support for GPU-based acceleration of rendering (and simulation/dynamics) in the near future. Instead of all of the calculations taking place on the CPU, those particularly suited to the GPU (especially massively parallel floating-point operations) will be offloaded to it. This is essentially what was done a few years ago with dedicated digital signal processor (DSP) farm cards like the ICE BlueICE, except that commodity GPUs are used instead of more expensive DSPs or custom processors.
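
To make "massively parallel" concrete, here is a minimal CPU-side sketch in Python. It is an illustration only: it is not GPU code and not how Gelato or any CUDA/OpenCL renderer is implemented, and the toy `shade` function, the image size, and the row-band split are all hypothetical. The point is that each pixel depends only on its own inputs, so the image can be cut into independent pieces and computed in any order; a GPU exploits the same property across thousands of threads at once.

```python
import math
from typing import Callable, List

WIDTH, HEIGHT = 64, 48  # hypothetical image size, kept tiny for illustration


def shade(x: int, y: int) -> float:
    """Toy per-pixel 'shader': brightness falls off with distance from the centre.

    The result depends only on this pixel's coordinates, so every pixel
    could be computed simultaneously.
    """
    cx, cy = WIDTH / 2, HEIGHT / 2
    return max(0.0, 1.0 - math.hypot(x - cx, y - cy) / math.hypot(cx, cy))


def shade_band(rows: range) -> List[List[float]]:
    """Shade a contiguous band of rows; bands share no data with each other."""
    return [[shade(x, y) for x in range(WIDTH)] for y in rows]


def render(bands: int = 4, mapper: Callable = map) -> List[List[float]]:
    """Split the image into row bands and shade them with any map-like function.

    The default is the built-in, sequential map; any mapper that preserves
    input order produces the same image.
    """
    step = math.ceil(HEIGHT / bands)
    band_ranges = [range(s, min(s + step, HEIGHT)) for s in range(0, HEIGHT, step)]
    image: List[List[float]] = []
    for rows in mapper(shade_band, band_ranges):
        image.extend(rows)
    return image
```

Because `render` accepts any map-like function, passing `concurrent.futures.ProcessPoolExecutor().map` spreads the bands across CPU cores without changing the result, since `Executor.map` returns results in submission order (on platforms that spawn worker processes, call it from under an `if __name__ == "__main__":` guard).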

The caveat here is that GPU-based rendering (or acceleration) is still in its infancy. If you think you might make the transition in the future, it is important to plan accordingly when buying 1U enclosures, as discussed previously. It is a good idea to go ahead and acquire matching PCIe x16 riser cards now so that you can add graphics cards later. Of course, this also means that the motherboards in your render nodes need at least one PCIe x16 slot.

Render Controllers

In most cases, each 3D or compositing application includes its own support for network rendering. For example, LightWave offers ScreamerNet, 3ds Max includes Backburner (which Combustion uses as well), Maya includes Maya Satellite, and After Effects offers the Render Queue. But running a separate render controller for each application on every one of your nodes wastes system resources. The included render managers also have numerous limitations: they may or may not let you monitor individual systems to check the status of a render, verify frame integrity on a per-system basis, or see whether individual nodes have crashed.
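
A per-node frame check, the kind of monitoring the bundled managers may lack, can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the output directory, the hypothetical `frame_0001.png` naming pattern, and the node-to-frame assignments are all illustrative, and "integrity" here only means the file exists and is not zero bytes. Real controllers may perform deeper checks, such as actually decoding each image.

```python
from pathlib import Path
from typing import Dict, List


def check_frames(
    output_dir: Path,
    assignments: Dict[str, range],
    pattern: str = "frame_{:04d}.png",  # hypothetical naming scheme
) -> Dict[str, List[int]]:
    """Return, for each node, the assigned frames that are missing or zero bytes."""
    problems: Dict[str, List[int]] = {}
    for node, frames in assignments.items():
        bad = []
        for frame in frames:
            path = output_dir / pattern.format(frame)
            # Crude integrity test: the file must exist and contain some data.
            if not path.is_file() or path.stat().st_size == 0:
                bad.append(frame)
        problems[node] = bad
    return problems
```

A node whose entire assignment comes back in the problem list is a good candidate for having crashed or hung, while a few scattered bad frames point to individual failed renders that can simply be resubmitted.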

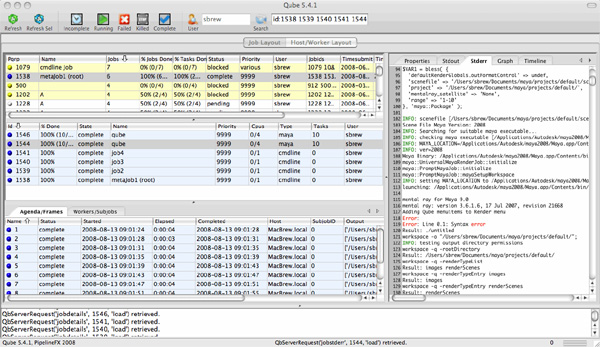

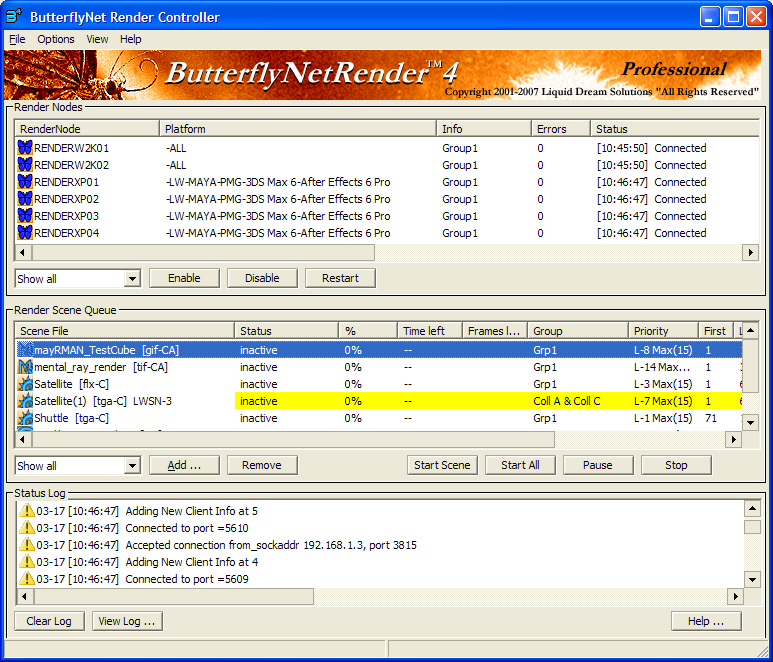

In order to efficiently manage multiple systems and multiple renderers, a centralized render controller is required. Several applications can control multiple software programs from a single render controller; Qube!, ButterflyNetRender, Deadline, Rush, and RenderPal are a few commercial examples, and there are literally dozens of shareware, freeware, and open-source controllers as well. Going over the features of these render controllers would be an article in itself. Suffice it to say that most of them actively monitor the systems for output, check the output frames for integrity, and notify you when a job completes. Some also support features like sending SMS messages. More advanced solutions allow for remote management and priority assignment, and handle some of the local setup tasks themselves.
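
Priority assignment, one of the features mentioned above, usually comes down to a priority queue. The following Python sketch is hypothetical and is not how Qube!, Deadline, or any other product actually schedules work: jobs are kept in a min-heap ordered by (priority, submission order), and each idle node asks for the next chunk of frames from the most urgent job. The `Dispatcher` class, the chunk size, and the job names are illustrative assumptions.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(order=True)
class Job:
    priority: int  # lower number = more urgent (heapq is a min-heap)
    seq: int  # submission order; breaks ties so equal priorities stay FIFO
    name: str = field(compare=False)
    frames: List[int] = field(compare=False)  # frames not yet handed out


class Dispatcher:
    """Hypothetical central queue that hands frame chunks to idle render nodes."""

    def __init__(self, chunk_size: int = 5) -> None:
        self.chunk_size = chunk_size
        self._heap: List[Job] = []
        self._counter = itertools.count()

    def submit(self, name: str, first: int, last: int, priority: int = 50) -> None:
        """Queue a job covering frames first..last inclusive."""
        if last < first:
            raise ValueError("last frame must not precede first frame")
        job = Job(priority, next(self._counter), name, list(range(first, last + 1)))
        heapq.heappush(self._heap, job)

    def next_task(self) -> Optional[Tuple[str, List[int]]]:
        """Return (job name, frame chunk) for an idle node, or None if the queue is empty."""
        if not self._heap:
            return None
        job = self._heap[0]  # peek at the most urgent job without removing it
        chunk = job.frames[: self.chunk_size]
        # frames is excluded from ordering, so shrinking it keeps the heap valid
        del job.frames[: self.chunk_size]
        if not job.frames:
            heapq.heappop(self._heap)  # job fully dispatched
        return job.name, chunk
```

With this design, a rush job submitted later still jumps ahead of a long background render, while jobs with equal priority are served first-come, first-served.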

When shopping for a render controller, first check whether your solution of choice supports the software you plan to use. Then look at the features you need and weigh them against your budget.


borandi: And soon they'll all move to graphics card rendering. Simple. This article, for now: worthless.

Draven35: People have been saying that for several years now, and Nvidia has killed Gelato. Every time there has been an effort to move to GPU-based rendering, there has been a change in how things are rendered that has made it ineffective to do so.

borandi: With the advent of OpenCL at the tail end of the year, and given that a server farm is a centre for multiparallel processes, GPGPU rendering should be around the corner. You can't ignore the power of 1.2 TFLOPS per PCI-E slot (if you can render efficiently enough), or 2.4 TFLOPS per kilowatt, as opposed to 10 old Pentium Dual Cores in a rack.

Draven35: Yes, but it still won't render in real time. You'll still need render time, and that means separate systems. I did not ignore that in the article; in fact, I discussed GPU-based rendering and ways to prepare your nodes for it. Just because you may start rendering on a GPU does not mean it will be in real time. TV rendering is now in high definition (usually finished in 1080p), and rendering for film is done at least at that resolution, or at 2K-4K. If you think you're going to use GPU-based rendering, get boards with an x16 slot and riser cards, then put GPUs in the units when you start using it. Considering software development cycles, it will likely be at least a year (i.e., SIGGRAPH 2010) before a GPGPU-based renderer written in OpenCL is available from any 3D software vendor. Most 3D animators do not and will not develop their own renderers.

ytoledano: While I have never rendered any 3D scenes, I did learn a lot about building a home server rack. I'm working on a project that involves combinatorial optimization and genetic algorithms; both need a lot of processing power and can easily be split across many processing units. I was surprised to see how cheap one quad-core node can be.

Draven35: Great, thanks. It's very cool to hear someone cite another use for this type of setup. Hope you found some useful data.

MonsterCookie: Due to my job, I work on parallel computers every day.

I've got to say: building a cheapo C2D might be OK, but nowadays it is still better to buy a cheap C2Q instead, because the price/performance ratio of the machine is considerably better.

However, please DO NOT spend more than 30% of your money on useless M$ products.

Be serious: keep cheap things cheap, and spend your hard-earned money on a better machine or on your wife/kids/beer instead.

Use Linux, Solaris, whatever...

Better performance, better memory management, higher stability.

IN FACT, most real design/3D applications run under unixoid operating systems.

ricstorms: Actually, I think if you look at a value analysis, AMD could give decent value for the money. Get an old Phenom 9600 for $89 and build some ridiculously cheap workstations and nodes. The only thing that would kill you is power consumption; I don't think the first-gen Phenoms were good at undervolting (of course, they weren't good at a whole lot of things). Of course the Q8200 would trounce it, but Intel won't put their quads south of $150 (not that they really need to).

eaclou: Thanks for doing an article on workstations; sometimes it feels like all of the articles are only concerned with gaming.

I'm not yet at the point where I really need a render farm, but this information might come in handy in a year or two (and I severely doubt GPU rendering will make CPU rendering a thing of the past in two years).

I look forward to future articles on workstations.

Is there any chance of a comparison between workstation graphics cards and gaming graphics cards?

cah027: I wish these software companies would get on the ball. There are consumer-level software packages that will use multiple CPU cores as well as the GPU at the same time. Then someone could build a 4-socket, 6-GPU box all in one that would do the work of several cheap nodes!