How To: Building Your Own Render Farm

Performance Difference

Here's where it comes down to numbers. We're going to assume that you intend to buy a workstation. The operative question here is, would it be better to buy a faster workstation or save money by purchasing a render node or two to run with it?

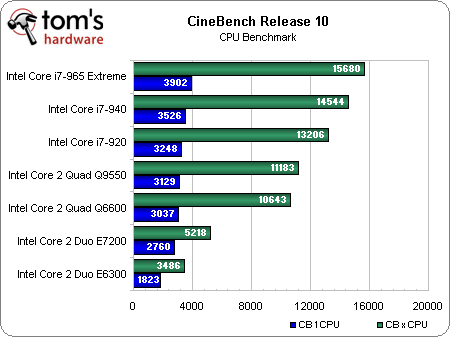

This CineBench test chart shows a fairly linear progression up the scale of processing power. Keep in mind that the bottom two processors show a sudden drop in xCPU scores because they are only dual-core CPUs (xCPU is CineBench's multi-threaded test, which runs as many threads as there are processor cores). The information in this chart allows you to use simple math to see how much performance an extra node or two would offer.

Say your workstation is a Core i7-940-based machine, which turns in a CineBench score of 14,544. For the price difference between that processor and the Core i7-965 Extreme, you can build an entire Core 2 Duo E7200 render node, which would give you a combined CineBench score of 19,762, far exceeding the performance of a Core i7-965. If your workstation used a Core i7-920 instead, putting the savings into your render node would let you build it around a Core 2 Quad Q9550, for a combined CineBench score of 24,389, which is very close to doubling performance. Note: CineBench was used for this comparison because its scores can simply be added to show combined performance.
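The additive arithmetic in this comparison can be sketched in a few lines of Python. This is only an illustration, not part of the original benchmark: the function names are hypothetical, and the E7200 node's own score is not quoted in the text but inferred here as the difference between the combined score (19,762) and the Core i7-940 score (14,544). It also assumes, as the comparison does, that render throughput adds linearly across machines.

```python
# Scores quoted in the article (CineBench xCPU).
I7_940_SCORE = 14_544
COMBINED_940_PLUS_E7200 = 19_762

# Inferred, not quoted: the E7200 node's own score is the difference.
E7200_SCORE = COMBINED_940_PLUS_E7200 - I7_940_SCORE


def combined_score(*scores: int) -> int:
    """Sum per-machine scores, assuming render work scales additively."""
    return sum(scores)


def speedup(workstation: int, *nodes: int) -> float:
    """Ratio of workstation-plus-nodes throughput to the workstation alone."""
    return combined_score(workstation, *nodes) / workstation


if __name__ == "__main__":
    total = combined_score(I7_940_SCORE, E7200_SCORE)
    ratio = speedup(I7_940_SCORE, E7200_SCORE)
    print(f"Workstation + one E7200 node: {total}")
    print(f"Speedup over the workstation alone: {ratio:.2f}x")
```

To evaluate a different pairing, substitute the scores for your own workstation and candidate node CPUs from the chart.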

The other performance advantage to having render nodes is that they can be rendering while you are working on your workstation, thus maintaining full interactivity and enabling you to use your processor's resources while the nodes are crunching away on a rendering task. For a complex project, this capability can become very important.

Conclusion

So there you have it, all the secrets to building your own render farm. Hopefully, many of you will be able to put this information to good use. While some specific elements of this article will be dated in six months, its general principles have been relevant for years and should continue to be useful. In the future, Tom's Hardware will look at workstations and test render nodes as well.

borandi And soon they'll all move to graphics cards rendering. Simple. This article for now: worthless.

Draven35 People have been saying that for several years now, and Nvidia has killed Gelato. Every time that there has been an effort to move to GPU-based rendering, there has been a change to how things are rendered that has made it ineffective to do so.

borandi With the advent of OpenCL at the tail end of the year, and given that a server farm is a centre for multiparallel processes, GPGPU rendering should be around the corner. You can't ignore the power of 1.2TFlops per PCI-E slot (if you can render efficiently enough), or 2.4TFlops per kilowatt, as opposed to 10 old Pentium Dual Cores in a rack.

Draven35 Yes, but it still won't render in real time. You'll still need render time, and that means separate systems. I did not ignore that in the article, and in fact discussed GPU-based rendering and ways to prepare your nodes for that. Just because you may start rendering on a GPU does not mean it will be in real time. TV rendering is now in high definition (finished in 1080p, usually), and rendering for film is done in at least that resolution, or 2k-4k. If you think you're going to use GPU-based rendering, get boards with an x16 slot, and riser cards, then put GPUs in the units when you start using it. Considering software development cycles, it will likely be at least a year before a GPGPU-based renderer made in OpenCL is available from any 3D software vendor (i.e. SIGGRAPH 2010). Most 3D animators do not and will not develop their own renderers.

ytoledano While I have never rendered any 3D scenes, I did learn a lot about building a home server rack. I'm working on a project which involves combinatorial optimization and genetic algorithms - both need a lot of processing power and can be easily split across many processing units. I was surprised to see how cheap one quad core node can be.

Draven35 Great, thanks- it's very cool to hear someone cite another use of this type of setup. Hope you found some useful data.

MonsterCookie Due to my job I work on parallel computers every day.

I've got to say: building a cheapo C2D might be OK, but still it is better nowadays to buy a cheap C2Q instead, because the price/performance ratio of the machine is considerably better.

However, please DO NOT spend more than 30% of your money on useless M$ products.

Be serious, and keep cheap things cheap, and spend your hard earned money on a better machine or on your wife/kids/bear instead.

Use linux, solaris, whatsoever ...

Better performance, better memory management, higher stability.

IN FACT, most real design/3D applications run under unixoid operating systems.

ricstorms Actually I think if you look at a value analysis, AMD could actually give a decent value for the money. Get an old Phenom 9600 for $89 and build some ridiculously cheap workstations and nodes. The only thing that would kill you is power consumption; I don't think the 1st gen Phenoms were good at undervolting (of course they weren't good on a whole lot of things). Of course the Q8200 would trounce it, but Intel won't put their Quads south of $150 (not that they really need to).

eaclou Thanks for doing an article on workstations -- sometimes it feels like all of the articles are only concerned with gaming.

I'm not to the point yet where I really need a render farm, but this information might come in handy in a year or two. (and I severely doubt GPU rendering will make CPU rendering a thing of the past in 2 years)

I look forward to future articles on workstations.

-Is there any chance of a comparison between workstation graphics cards and gaming graphics cards?

cah027 I wish these software companies would get on the ball. There are consumer-level software packages that will use multiple CPU cores as well as the GPU all at the same time. Then someone could build a 4-socket, 6-GPU box all in one that would do work equal to that of several cheap nodes!