
The U27M90 ships in Cinema mode, which is highly color accurate. My sample came with a calibration data sheet showing gamma and grayscale results that I was able to match in my own tests. As you'll soon see, the monitor does not need calibration to deliver a very accurate picture.

Grayscale and Gamma Tracking

Our grayscale and gamma tests use Calman calibration software from Portrait Displays. We describe our grayscale and gamma tests in detail here.

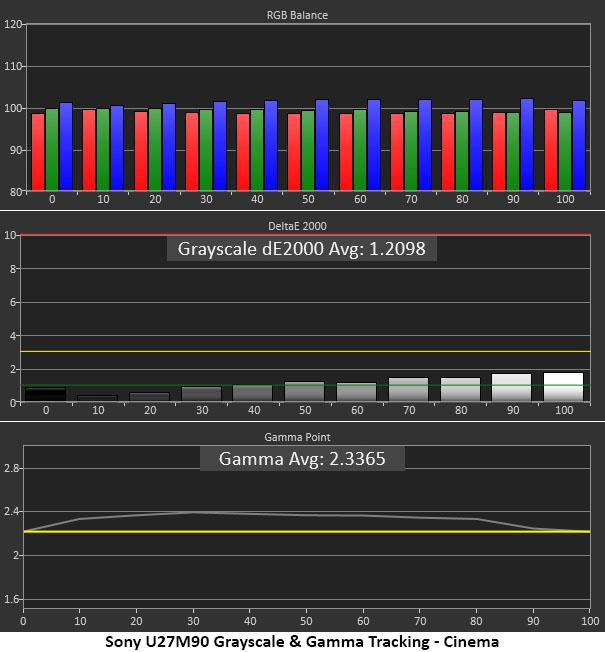

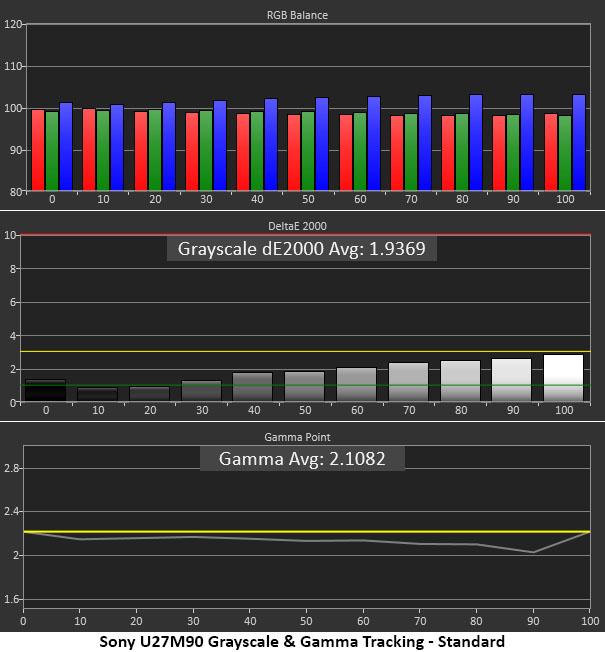

There are no visible grayscale errors in the U27M90’s Cinema or Standard modes. You can see a bit of extra blue in the Balance chart, but all errors are comfortably under 3dE, the threshold below which errors are generally invisible. The only difference between the two modes is their gamma tracking: Cinema goes for a darker look with gamma around 2.33, while Standard is lighter at 2.11. In practice, Cinema looks slightly more saturated with deeper primary colors, while Standard is a good choice for games because mid-tone details are a bit more visible.
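To illustrate why a 2.33 gamma looks darker than 2.11, here is a minimal Python sketch. It assumes a pure power-law transfer function (output luminance = signal raised to the gamma, both normalized from 0 to 1). Measured gamma varies from step to step in Calman, so treat this as an approximation rather than a model of how the U27M90 actually processes its signal.

```python
# Assumption: a pure power-law gamma with signal and luminance normalized to 0.0-1.0.
# Real displays deviate from this curve at individual brightness steps.


def relative_luminance(signal: float, gamma: float) -> float:
    """Return normalized output luminance for a normalized input signal."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be between 0.0 and 1.0")
    return signal ** gamma


# Average gamma values measured for the two picture modes
MODES = {"Cinema": 2.33, "Standard": 2.11}

for mode, gamma in MODES.items():
    # A 50% signal is a typical mid-tone test point
    lum = relative_luminance(0.5, gamma)
    print(f"{mode}: 50% signal -> {lum:.1%} of peak luminance")
```

At a 50% signal, the higher Cinema gamma yields roughly 20% of peak luminance versus about 23% for Standard, which is why mid-tones look deeper in Cinema and slightly more open in Standard.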

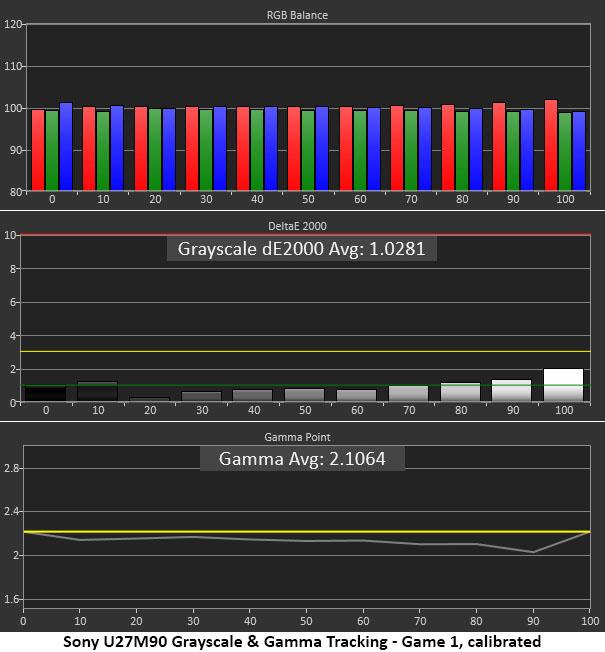

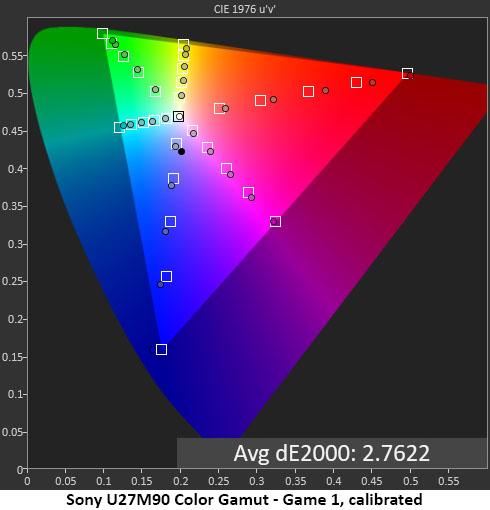

To calibrate the Game 1 mode, I adjusted the RGB sliders and left gamma at 2.2, which gave me results similar to Standard. I calibrated Game 1 because a PS5 automatically selects that mode when connected. You can bypass this switch by turning Auto Picture Mode off.

Comparisons

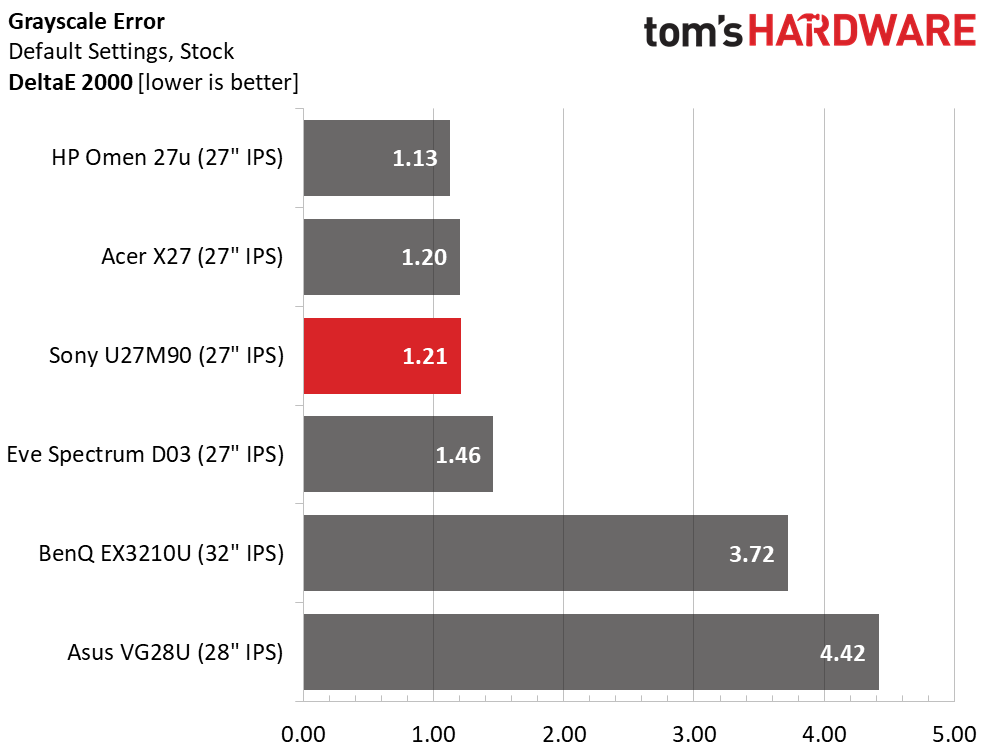

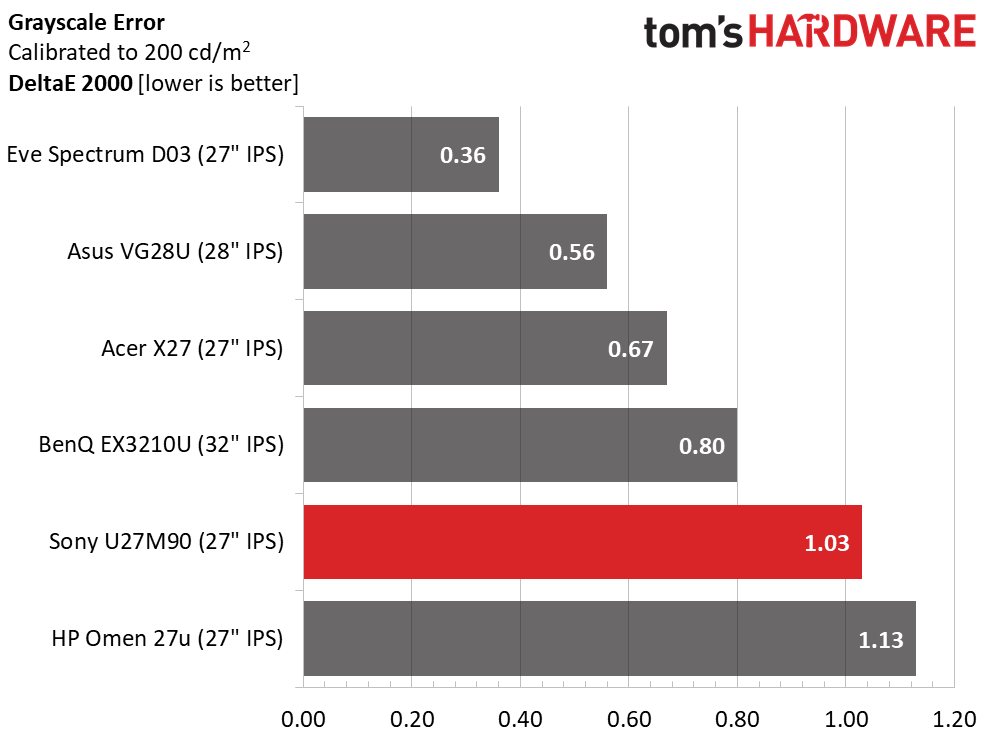

For the comparison, I charted the U27M90’s Cinema mode and its default grayscale error of 1.21dE. That puts it in the top tier for out-of-box accuracy. Remember that the X27 costs $1,800. It doesn’t get much better without calibration. After adjusting the Game 1 mode, I got the error down to 1.03dE.

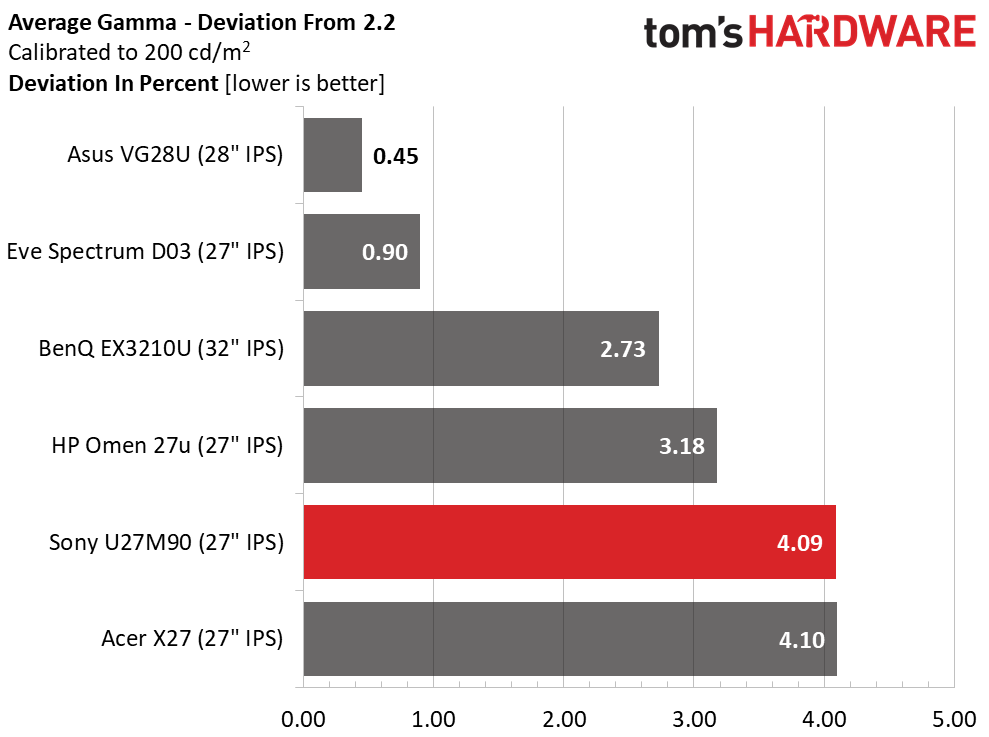

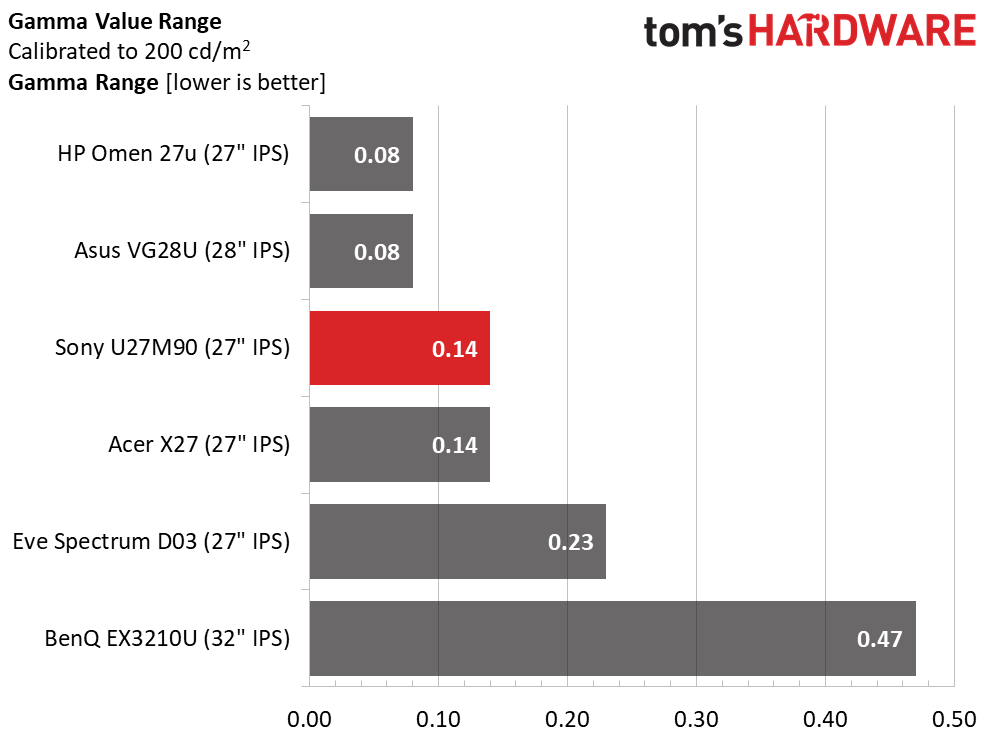

The best gamma performance is found in either Standard or Game 1 mode, where the U27M90 showed a light trace averaging 2.11, a 4.09% deviation from the 2.2 target. The value range is reasonably tight at 0.14, which is in the top tier. I have no complaints here; these are excellent results.
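The 4.09% figure can be reproduced by comparing the average measured gamma to a 2.2 target. The minimal Python sketch below assumes the deviation is the absolute difference divided by the target; that formula is an assumption on my part, although it matches the number reported above. The function name is hypothetical.

```python
def gamma_deviation_pct(measured: float, target: float = 2.2) -> float:
    """Percent deviation of an average measured gamma from its target.

    Assumption: deviation = |measured - target| / target * 100.
    """
    if target <= 0:
        raise ValueError("target gamma must be positive")
    return abs(measured - target) / target * 100


# Average gamma measured in Standard and Game 1 modes
print(f"Standard/Game 1: {gamma_deviation_pct(2.11):.2f}%")
```

Run as written, it prints 4.09%, matching the chart. A light trace (below 2.2) and a dark trace (above 2.2) both produce a positive deviation because of the absolute value.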

Color Gamut Accuracy

Our color gamut and volume testing use Portrait Displays’ Calman software. For details on our color gamut testing and volume calculations, click here.


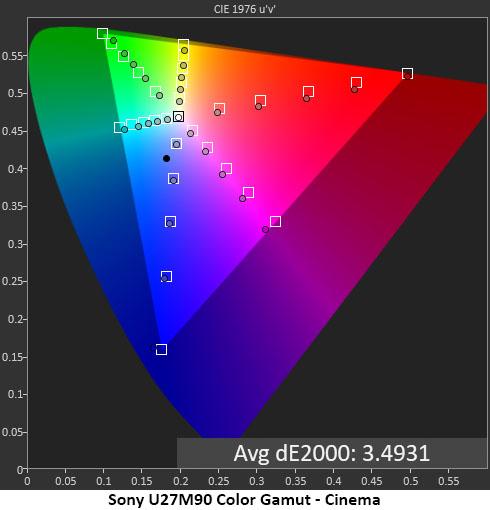

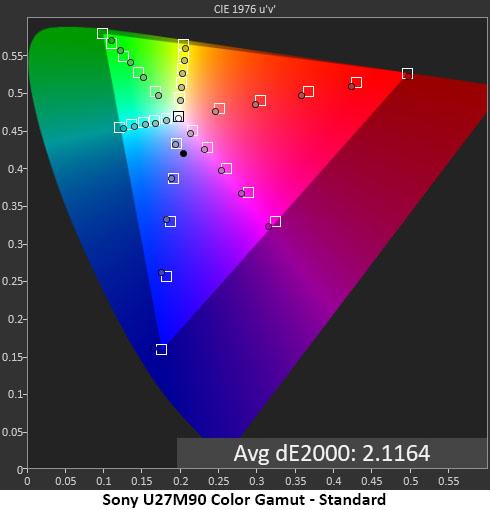

The color gamut tests yielded similar results in all three modes. Cinema looks slightly more saturated due to its darker gamma, and on the chart, you can see that all points are on or close to their targets. Magenta is a little off in hue, but this error will be very hard to see in content.

Standard is a little closer and yields the lowest measured error of 2.12dE. Calibration of Game 1 adds a bit of red saturation and puts all colors perfectly in line with their hue targets. The differences I’m talking about are very subtle, so ultimately, users will have to find their preference. The good part is that the U27M90 offers no bad choices.

Comparisons

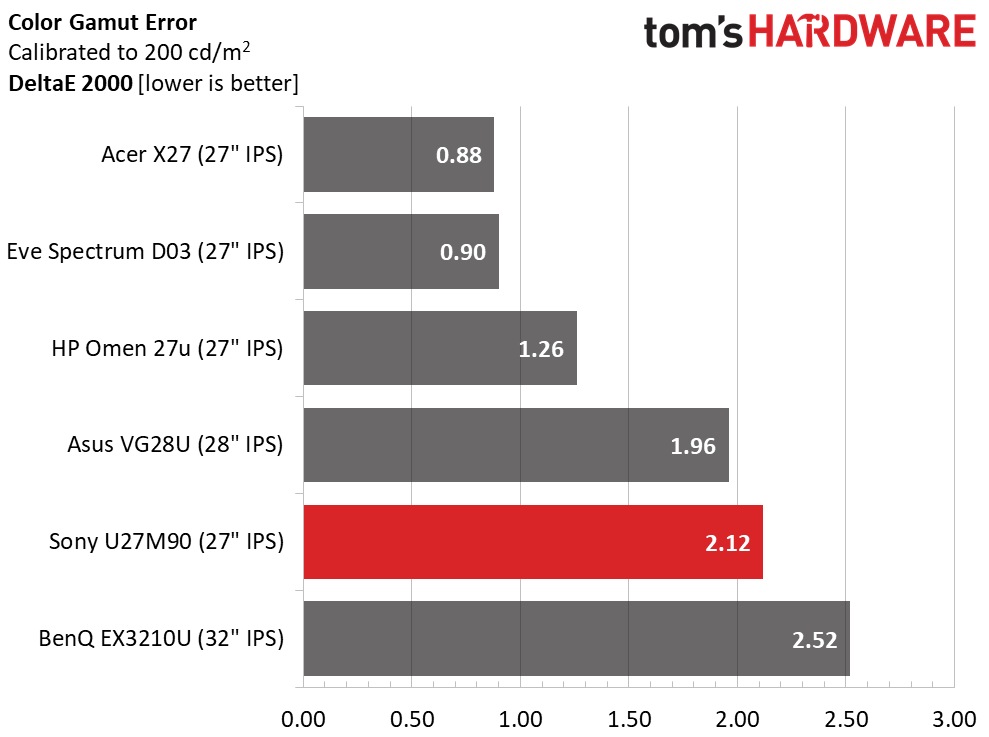

The U27M90’s Standard mode scored 2.12dE, and for PC gaming, that’s the best choice. It delivers a bright and colorful picture loaded with sharp detail, and when Local Dimming is turned on, contrast and depth are greatly increased. Though it finishes fifth here, this is a strong group of displays, any of which will satisfy.

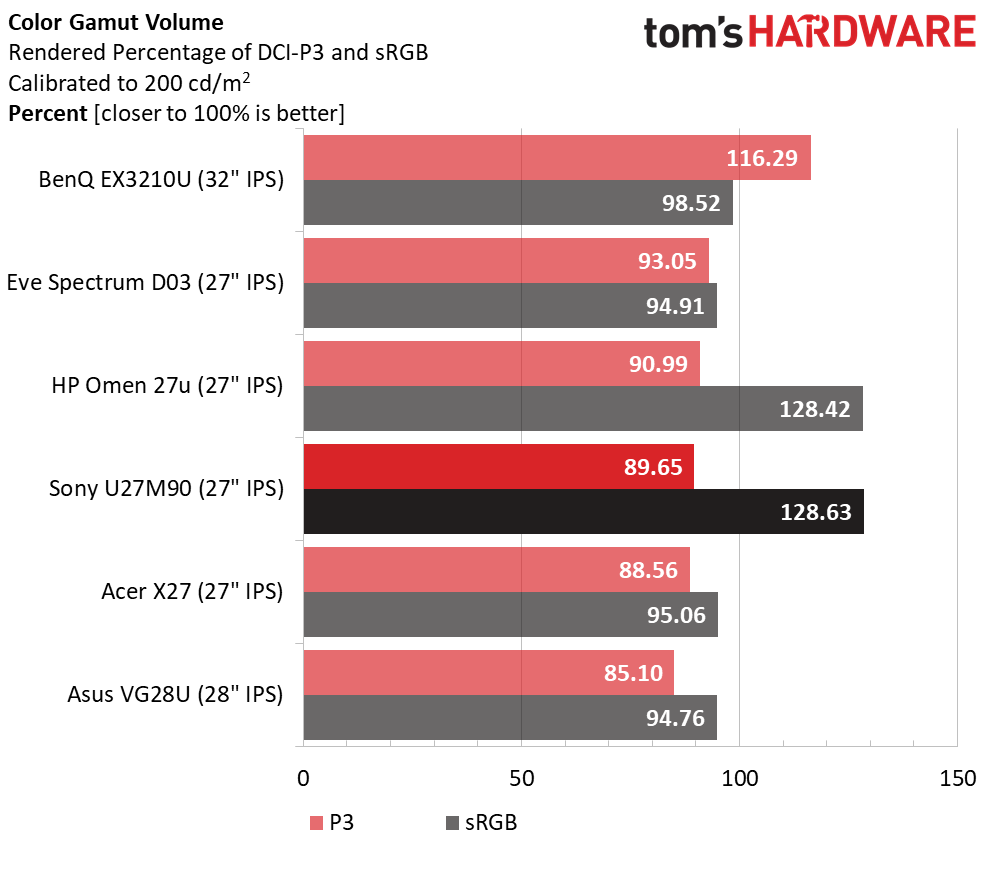

I measured just under 90% coverage of the DCI-P3 color gamut. Like nearly all wide gamut screens, green is a little under-saturated. Red and blue are fully covered and highly saturated. There is no sRGB mode available, so color-critical apps will need a software profile to rein in the extra volume.


Christian Eberle is a Contributing Editor for Tom's Hardware US. He's a veteran reviewer of A/V equipment, specializing in monitors. Christian began his obsession with tech when he built his first PC in 1991, a 286 running DOS 3.0 at a blazing 12MHz. In 2006, he undertook training from the Imaging Science Foundation in video calibration and testing and thus started a passion for precise imaging that persists to this day. He is also a professional musician with a degree from the New England Conservatory as a classical bassoonist which he used to good effect as a performer with the West Point Army Band from 1987 to 2013. He enjoys watching movies and listening to high-end audio in his custom-built home theater and can be seen riding trails near his home on a race-ready ICE VTX recumbent trike. Christian enjoys the endless summer in Florida where he lives with his wife and Chihuahua and plays with orchestras around the state.

-

waltc3: Here's another one with fairly unimpressive HDR brightness. My 4K Philips provides ~700 nits in SDR, is certified for 1000 nits HDR, and actually supports three separate HDR modes. My last BenQ 4K provided ~300 nits SDR and about 320 nits HDR (it was not certified, just as this Sony is not certified), and the difference is night and day. Amazing when I consider that I paid less for the Philips than this Sony is retailing for, and the Philips is a much bigger monitor!

I also had several Sony CRTs, great monitors. I remember my last one: a 20" "flat-screened" Trinitron that supported my Voodoo3's 1600x1200 resolution ROOB. ;) As an aside, the ATi Fury I bought at the time to test (the original ATi Fury, not AMD's) would not do 1600x1200 stock! I had to call ATi and ask them about it, and one of the driver programmers I spoke with (in those days you could dial up practically anyone and actually talk to them!) asked me why I wanted to run at 1600x1200. ;) I had to add the simple instructions into their driver structure myself to enable 1600x1200, because my Trinitron supported it and I wanted to use it! ;)

Sony made great monitors in those days; they were good enough for me and x86 in those years. The Trinitron brand is well known even today, as you mentioned. Originally, it was a TV brand. I'm sure this monitor is a good one; I'm just not enamored of the specs. Those high nits make all the difference in a display, imo.

anonymousdude: Makaveli said: "Do people find 27 inch and 4K usable? I tried it and found 32 inch to be much better."

For gaming, it's fine. For productivity, not so much.

Soul_keeper: Makaveli said: "Do people find 27 inch and 4K usable? I tried it and found 32 inch to be much better."

It really is personal preference. I've been using a Samsung U24E590D 23.6" 4K display for a few years now.

Personally I wanted maximum pixel density, good power usage, not too bulky.

Now that my eyes aren't as good as they once were, I might get a 27" 4K in the future, maybe something like the one reviewed.

27" could be the "sweet spot" for 4K. And a refresh rate greater than 60Hz is a plus.

Also your distance from the screen and usage style play a big role in the decision.

For example, I lean forward and put my face 1' from the screen to read things.

wr3zzz: LOL, a $1,000 27" monitor for "PC" gaming, and Sony won't even bother supporting adaptive sync on its TVs until this year.

edzieba: "In the 1990s, Sony marketed a line of Trinitron CRT screens. Their main draw was that they only curved on the vertical axis, which meant they were the closest thing to a flat-screen you could buy at the time."

The main draws of Trinitron tubes were their fine phosphor pitch, their aligned phosphor grid (which produced crisper horizontal and vertical lines, mattering more for bitmapped graphics and characters than for TV), and their lack of a shadow mask, which produced an overall brighter image. Trinitron tubes were available with the normal "bi-curved" surface and as completely flat glass-front tubes, as were shadow-mask tubes, so tube curvature was not a deciding factor in their preference.

hotaru251: 4K at 27 inches is legit pointless.

It's a waste of energy to power (which costs more on the power bill), it generates more heat (not what most people want outside of winter), and it lowers frame rates for a nearly indiscernible improvement in image quality.

1440p at 240Hz or higher would have been a MUCH more interesting product.

Blacksad999: wr3zzz said: "LOL $1000 27" monitor for "PC" gaming, Sony won't even bother supporting adaptive sync for its TV until this year."

That was my thought, also. This is a pretty tough sell at its price point. It should realistically be $200-300 cheaper. Otherwise, there are significantly better monitors for the price. Hell, you can get a 48" LG C1 right now for less than this thing, and it's 4K, has amazing HDR, and runs at 120Hz.

gg83: Why isn't Sony using its OLED tech for gaming monitors? Is it difficult to make OLEDs smaller than 55 inches?

husker: Aside from the resolution-to-size ratio, they made a huge mistake with the stand. The way the front center leg extends in front of the screen is going to cause some problems and is just an awful design decision. The stand should be functional and out of the way, not sticking out in your face as if to say, "Look at me! I kinda look like a PS5 but I'm just the leg of your stand! Sorry if I'm in the way! Buy a PS5!". Also, apparently computer stands don't know how to judiciously use exclamation marks.