Almost 20 TB (Or $50,000) Of SSD DC S3700 Drives, Benchmarked

We've already reviewed Intel's SSD DC S3700 and determined it to be a fast, consistent performer. But what happens when we take two dozen of them (about $50,000 worth) and create a massive RAID 0 array? Come along as we play around in storage heaven.

24 SSD DC S3700s: So Choice. If You Have The Means...

Modern SATA drives are already bumping up against the 6 Gb/s SATA interface's limits. For now, there's little way around that, though the horizon is filled with fast new interfaces and form factors. Today, circumventing the SATA performance ceiling somewhat defeats the purpose of client storage, and is entirely incompatible with the SSD DC S3700's mission. It's all about giving buyers the right mix of affordability, flexibility, and features in a SATA-based drive.
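For a rough sense of where that ceiling sits, here is a back-of-the-envelope sketch. It assumes SATA 6 Gb/s uses 8b/10b line encoding (10 bits on the wire per payload byte), and it ignores protocol overhead as well as any host bus adapter or PCIe limits, so treat the figures as upper bounds rather than expected results. The function and constant names are ours, purely for illustration.

```python
SATA_LINE_RATE_GBPS = 6.0   # raw SATA 6 Gb/s line rate, in gigabits per second
BITS_PER_PAYLOAD_BYTE = 10  # assumption: 8b/10b encoding, 10 line bits per data byte
DRIVE_COUNT = 24            # number of SSDs in the array discussed here


def sata_payload_ceiling_mb_s(line_rate_gbps=SATA_LINE_RATE_GBPS):
    """Upper bound on payload bandwidth for one SATA link, in decimal MB/s."""
    bits_per_second = line_rate_gbps * 10**9
    return bits_per_second / BITS_PER_PAYLOAD_BYTE / 10**6


per_link_mb_s = sata_payload_ceiling_mb_s()
aggregate_gb_s = per_link_mb_s * DRIVE_COUNT / 1000

print(f"Per link:  {per_link_mb_s:.0f} MB/s")
print(f"{DRIVE_COUNT} links: {aggregate_gb_s:.1f} GB/s")
```

Under those assumptions, each link tops out at 600 MB/s, and two dozen links could move at most 14.4 GB/s combined.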

Of course, affordability is in the eye of the beholder. But the SSD DC S3700 was designed for organizations looking for a cost-effective repository for large deployments. Intel's older client drives are being used in datacenters of all sizes, and the DC S3700 is aimed at the folks making buying decisions in those environments. It gives buyers more capacity and form options at a price similar to where the 3 Gb/s SSD 320 family debuted.

Naturally, building an almost 18 TB array with 800 GB SSDs isn't cheap. Each drive sells for $2,000 online. Want your own beastly array? Be ready to spend close to $50,000 for the privilege (and that's before the price of a suitably fast server). Sure, you're spending a pretty penny. But relative to what similar capacity and performance would have cost previously, the SSD DC S3700 clearly benefits from faster, more economical MLC NAND manufactured using a high-yield 25 nm process.
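The arithmetic behind those figures is easy to check. The sketch below uses only the drive count, the 800 GB capacity, and the $2,000 price quoted above. It also reports the total in both decimal and binary units, which is presumably why the headline says "almost 20 TB" while the formatted array looks closer to 18. The helper name is ours, for illustration only.

```python
DRIVE_COUNT = 24
DRIVE_CAPACITY_GB = 800   # decimal gigabytes, as marketed
DRIVE_PRICE_USD = 2_000   # per-drive online price quoted in the article


def array_totals(count, capacity_gb, price_usd):
    """Return raw capacity (decimal and binary units) and cost for the array."""
    total_bytes = count * capacity_gb * 10**9
    return {
        "total_tb": total_bytes / 10**12,   # decimal terabytes
        "total_tib": total_bytes / 2**40,   # binary tebibytes
        "total_gib": total_bytes / 2**30,   # binary gibibytes
        "total_cost_usd": count * price_usd,
        "usd_per_gb": price_usd / capacity_gb,
    }


totals = array_totals(DRIVE_COUNT, DRIVE_CAPACITY_GB, DRIVE_PRICE_USD)
print(f"Raw capacity: {totals['total_tb']:.1f} TB "
      f"({totals['total_tib']:.2f} TiB, {totals['total_gib']:,.0f} GiB)")
print(f"Drive cost:   ${totals['total_cost_usd']:,} "
      f"(${totals['usd_per_gb']:.2f} per GB)")
```

That works out to 19.2 TB raw (roughly 17.46 TiB) and $48,000 in drives, or $2.50 per gigabyte before the server is counted.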

Just because these drives aren't cheap doesn't mean we can't have fun with them. Without question, we can extract superb performance from just a few of Intel's SSDs. Scale up to 24, and you're talking about some serious speed.

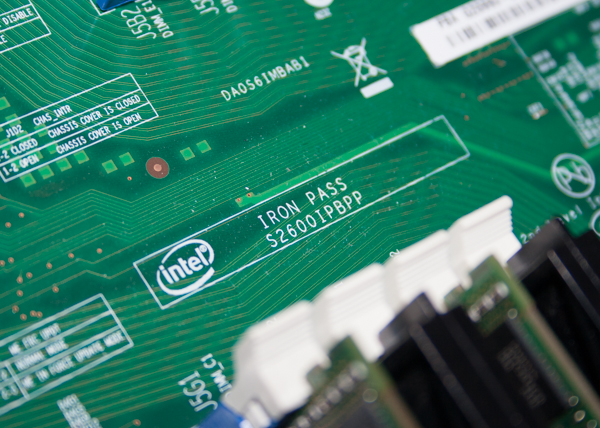

All of this could have gone horribly pear-shaped without a proper server to drop the disks in. For what we wanted to do, we needed all of the performance our Xeon E5s could give us. We successfully found the limit of what the SSDs and our test platform could do together, and the result was on the (very) high side. The RAID 0 calculations performed by the host are fairly lightweight, but generating enough of a workload to stress the storage subsystem is taxing.

The rewards really are worth the effort. We were able to turn those 17,880 GiB into a single volume capable of heroic performance. Getting to 1,000,000 4 KB read IOPS is nice, but witnessing almost 1,000,000 write IOPS is even better. Sequential performance is huge all around. Software RAID has never been so nice.
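To put the IOPS figure in bandwidth terms, throughput is simply operations per second multiplied by the transfer size. The sketch below assumes a "4 KB" transfer means 4,096 bytes and that the load spreads evenly over all 24 drives; both are our simplifications, and the names are illustrative.

```python
BLOCK_BYTES = 4096   # assumption: "4 KB" means 4,096 bytes per operation
DRIVE_COUNT = 24     # SSDs in the RAID 0 array


def iops_to_throughput(iops, block_bytes=BLOCK_BYTES):
    """Convert an IOPS figure to (GB/s, GiB/s) for a fixed transfer size."""
    bytes_per_second = iops * block_bytes
    return bytes_per_second / 10**9, bytes_per_second / 2**30


gb_s, gib_s = iops_to_throughput(1_000_000)
per_drive_iops = 1_000_000 / DRIVE_COUNT   # even split, an idealization

print(f"1,000,000 IOPS at 4 KB = {gb_s:.3f} GB/s ({gib_s:.2f} GiB/s)")
print(f"Average share per drive: {per_drive_iops:,.0f} IOPS")
```

So a million 4 KB operations per second corresponds to roughly 4.1 GB/s of random I/O, or about 41,667 IOPS from each drive on average.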

And that might be the biggest surprise of all. Had we been limited to benchmarking software RAID under Windows, we would have seen only a tiny fraction of the performance we measured using mdadm. At the very least, this is a topic worth further exploration. Faster hardware and open source development have created a software RAID solution that might be a viable alternative to hardware in many situations. Because mdadm's options are numerous, and there isn't much documentation of user experiences when it comes to SSD arrays, it's entirely possible that further tweaking could yield even better results. Sadly, our time with the SSD DC S3700s was all too brief, and there are so many unexplored areas to tackle. That's going to have to be a story for another day though, because Intel already received our little care package back.

sodaant: Those graphs should be labeled IOPS; there's no way you are getting a terabyte per second of throughput.

cryan, replying to mayankleoboy1 ("IIRC, Intel has enabled TRIM for RAID 0 setups. Doesn't that work here too?"):

Intel has implemented TRIM in RAID, but you need to be using TRIM-enabled SSDs attached to their 7 series motherboards. Then, you have to be using Intel's latest 11.x RST drivers. If you're feeling frisky, you can update most recent motherboards with UEFI ROMs injected with the proper OROMs for some black market TRIM. Works like a charm.

In this case, we used host bus adapters, not Intel onboard PHYs, so Intel's TRIM in RAID doesn't really apply here.

Regards,

Christopher Ryan

cryan, replying to DarkSable ("Idbuaha. I want."):

And I want it back! Intel needed the drives back, so off they went. I can't say I blame them since 24 800GB S3700s is basically the entire GDP of Canada.

Replying to techcurious ("I like the 3D graphs.."):

Thanks! I think they complement the line charts and bar charts well. That, and they look pretty bitchin'.

Regards,

Christopher Ryan

utroz: That sucks about your backplanes holding you back, and yes, trying to do it with regular breakout cables and power cables would have been a total nightmare, possible only if you made special holding racks for the drives and had multiple power supply units to have enough SATA power connectors (unless you used the dreaded Y-connectors that are known to be iffy and are not commercial grade). I still would have been interested in someone doing that, if someone is crazy enough to do it just for testing purposes, to see how much the backplanes are holding performance back... But thanks for all the hard work; this type of benching is by no means easy. I remember doing my first RAID with an Iwill 2-port ATA-66 RAID controller with four 30 GB 7200 RPM drives, and it hit the limits of PCI at 133 MB/sec. I tried RAID 0, 1, and 0+1. You had to have all the same exact drives or it would be slower than single drives. The thing took forever to build the arrays, and if you shut off the computer wrong it would cause huge issues in RAID 0... Fun times...