Almost 20 TB (Or $50,000) Of SSD DC S3700 Drives, Benchmarked

We've already reviewed Intel's SSD DC S3700 and determined it to be a fast, consistent performer. But what happens when we take two dozen of them (about $50,000 worth) and create a massive RAID 0 array? Come along as we play around in storage heaven.

Results: 4 KB Random Performance Scaling In RAID 0

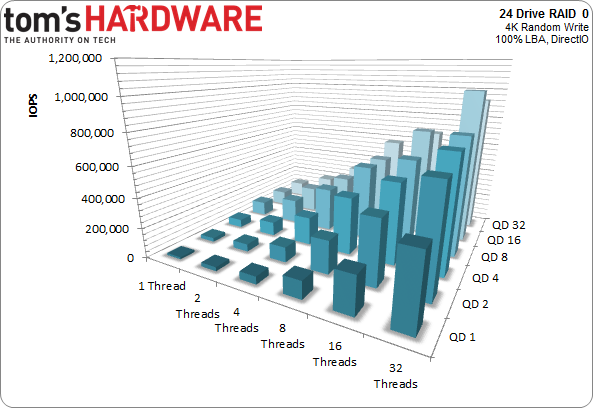

Thread Count vs. Queue Depth

If we were testing just one SSD, we could simply step through increasing queue depths (QDs): a single system process stacks up commands to create each QD. That works well for SATA SSDs, particularly since Native Command Queuing caps a SATA drive at 32 outstanding commands, so there isn't much point in testing them at queue depths greater than 32. That all goes out the window when it comes to testing PCIe-based SSDs and RAID arrays. With only a single thread, workload generators eventually run out of steam at higher QDs. Instead, we have to run multiple threads, each at its own QD, to extract maximum performance.

It helps to have a visual representation of this dynamic in action, and that's exactly what this chart provides. We start with one thread at a queue depth of one, and eventually arrive at 32 simultaneous threads, each bombarding the array at a QD of 32. With just one thread, 4 KB random writes peter out just north of 100,000 IOPS. If we throw 32 threads at the array, we can beat 1,000,000 IOPS. That's why we're locking the thread count down at 32 and varying queue depth from here on out.
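Two quantities drive that chart: how many I/Os are in flight (threads × queue depth) and, through Little's law, how that concurrency relates to throughput and latency. The sketch below is our own illustration, not the tool used for these tests. The power-of-two step sizes are an assumption (the intermediate test points aren't listed here), and the latency it prints is derived from Little's law, not measured.

```python
"""Sketch: thread count x queue depth, plus Little's law (illustrative only)."""


def powers_of_two_up_to(limit: int) -> list[int]:
    """1, 2, 4, ... up to and including `limit` (assumed sweep steps)."""
    steps = []
    value = 1
    while value <= limit:
        steps.append(value)
        value *= 2
    return steps


def build_sweep(max_threads: int = 32, max_qd: int = 32) -> list[tuple[int, int]]:
    """Every (threads, queue depth) pair from 1x1 up to max_threads x max_qd."""
    return [(t, qd) for t in powers_of_two_up_to(max_threads)
            for qd in powers_of_two_up_to(max_qd)]


def outstanding_ios(threads: int, queue_depth: int) -> int:
    """Commands in flight when each thread keeps `queue_depth` I/Os queued."""
    return threads * queue_depth


def implied_latency_ms(iops: float, in_flight: int) -> float:
    """Little's law (L = lambda * W) solved for W: mean latency in milliseconds."""
    return in_flight / iops * 1000.0


if __name__ == "__main__":
    grid = build_sweep()
    print(len(grid), grid[0], grid[-1])  # 36 (1, 1) (32, 32)
    in_flight = outstanding_ios(32, 32)
    print(in_flight)  # 1024
    # ~1,000,000 IOPS sustained with 1,024 I/Os in flight implies ~1 ms per I/O
    print(round(implied_latency_ms(1_000_000, in_flight), 3))  # 1.024
```

Because total concurrency is a product, one thread at QD 32 and 32 threads at QD 1 both put 32 commands in flight; the gap the chart shows comes from how quickly a single thread can issue and reap commands.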

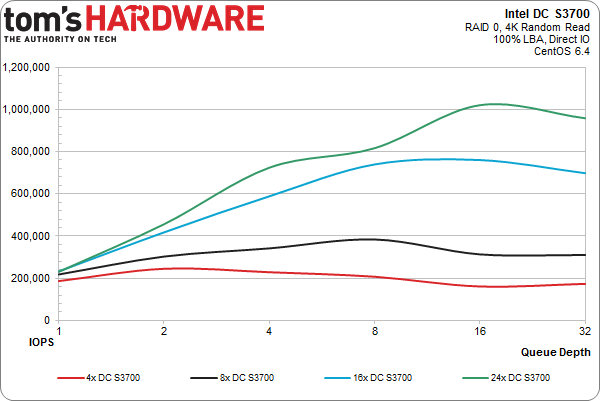

4 KB Random Read Performance Scaling in RAID 0

One thing you definitely want to see in a RAID array is scaling. As the number of drives increases, we want to observe a commensurate increase in performance. Then, as we apply a more demanding workload, we want performance to rise in tandem with intensity. That shouldn't be too hard to accomplish in RAID 0. After all, there isn't much overhead, and no pesky fault tolerance to slow us down.
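Part of why RAID 0 carries so little overhead is that locating data is pure arithmetic: logical addresses are cut into fixed-size stripe units and dealt round-robin across the members, with no parity to compute. Here's a minimal sketch of that mapping. The 128 KB stripe size is a hypothetical value, since the stripe size used for these arrays isn't given here, and real controllers layer their own metadata and alignment rules on top.

```python
from collections import Counter

STRIPE_SIZE = 128 * 1024  # bytes per stripe unit (hypothetical value)


def map_offset(logical_offset: int, num_drives: int,
               stripe_size: int = STRIPE_SIZE) -> tuple[int, int]:
    """Map a logical byte offset to (member drive index, byte offset on that drive)."""
    stripe_number, within_stripe = divmod(logical_offset, stripe_size)
    drive = stripe_number % num_drives   # stripe units rotate across members
    row = stripe_number // num_drives    # full stripe rows preceding this unit
    return drive, row * stripe_size + within_stripe


if __name__ == "__main__":
    print(map_offset(0, 24))                        # (0, 0)
    print(map_offset(STRIPE_SIZE, 24))              # (1, 0)
    print(map_offset(24 * STRIPE_SIZE + 4096, 24))  # (0, 135168)

    # Ten full stripe rows of 4 KB blocks: every member gets the same share.
    blocks = 24 * (STRIPE_SIZE // 4096) * 10
    load = Counter(map_offset(i * 4096, 24)[0] for i in range(blocks))
    print(len(load), set(load.values()))            # 24 {320}
```

Uniformly random 4 KB requests spread across members the same way on average, which is why, in principle, adding drives should add IOPS almost linearly until something upstream saturates.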

Right out of the gate, we can glean a few tidbits from this chart. First, getting to 1,010,755 IOPS with a 24-drive RAID 0 array is extremely satisfying on a personal level. At 4 KB per transfer, that's roughly 4 GB/s of throughput. Second, the gulf between eight and 16 SSD DC S3700s is enormous: almost 100%, or 400,000 IOPS. That's also the same percentage increase we see going from four to eight drives. The bump from 16 to 24 drives should be close to 50%, but we're clearly hitting a bottleneck, achieving a comparatively modest 25% increase over the 16-drive array.
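The throughput and scaling figures above are simple arithmetic on IOPS. This sketch reproduces them from the 24-drive peak, a 4 KB transfer size, and the 25% and 50% gains described in the text. It prints both decimal and binary gigabytes, since "roughly 4 GB/s" holds either way.

```python
BLOCK_BYTES = 4096  # one 4 KB random transfer


def iops_to_bytes_per_s(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Bandwidth implied by an IOPS figure at a fixed transfer size."""
    return iops * block_bytes


def pct_increase(before: float, after: float) -> float:
    """Percentage gain going from `before` to `after`."""
    return (after - before) / before * 100.0


if __name__ == "__main__":
    bw = iops_to_bytes_per_s(1_010_755)
    print(round(bw / 1e9, 2), "GB/s")     # 4.14 GB/s (decimal)
    print(round(bw / 2**30, 2), "GiB/s")  # 3.86 GiB/s (binary)

    ideal = pct_increase(16, 24)          # perfectly linear scaling, 16 -> 24 drives
    print(ideal)                          # 50.0
    print(25 / ideal)                     # 0.5: half of the ideal gain was realized
```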

That's still excellent scaling in the grand scheme of things; it's completely unreasonable to expect exactly 4x, 8x, 16x, or 24x the performance of one drive. When we divide our peak 24-drive random read result by the number of member SSDs, each SSD DC S3700 contributes an astounding 42,114 IOPS. A single 800 GB S3700 is rated for 76,000 4 KB random read IOPS, so at first glance it looks like we're losing a ton of performance. But given the realities of scaling, we'd be happy to see even half of that rating per drive. The fact that we get 42,000 IOPS per drive with 24 SSDs, and 50,000 with 16 or fewer attached, is pseudo-miraculous.
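The per-drive numbers work the same way: divide array IOPS by the member count, then compare the result against the single-drive rating. A minimal sketch using the 76,000 IOPS rating and the figures quoted above; the 50,000 IOPS/drive value for 16 drives is the article's rounded number.

```python
RATED_READ_IOPS = 76_000  # single 800 GB S3700, 4 KB random read (as quoted above)


def per_drive_iops(array_iops: float, drives: int) -> float:
    """Average contribution of each member drive."""
    return array_iops / drives


def fraction_of_rating(per_drive: float, rated: float = RATED_READ_IOPS) -> float:
    """Share of the single-drive spec each member actually delivers."""
    return per_drive / rated


if __name__ == "__main__":
    p24 = per_drive_iops(1_010_755, 24)
    print(int(p24))                              # 42114
    print(round(fraction_of_rating(p24), 3))     # 0.554
    print(round(fraction_of_rating(50_000), 3))  # 0.658 (16 drives or fewer)
```

Both ratios land above the one-half mark, which is why these results read as a win despite the apparent shortfall.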


If your application requires tons of flash and you can supply a beefy workload, this is a viable option. Of course, large RAID 0 arrays expose you to a significant risk of failure over a period of years, since losing any single member drive takes the whole array down with it. But being responsible is rarely this fun.
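Because losing any one member loses the array, the chance of array loss compounds with drive count. The sketch below assumes independent failures at a constant annualized failure rate (AFR); the 1% AFR is a hypothetical illustration, not an Intel specification or a measured figure.

```python
def array_loss_probability(afr: float, drives: int, years: float = 1.0) -> float:
    """Chance that at least one of `drives` members fails within `years`.

    Assumes independent failures and a constant annual failure rate `afr`,
    so a single drive survives `years` with probability (1 - afr) ** years.
    """
    single_survival = (1.0 - afr) ** years
    return 1.0 - single_survival ** drives


if __name__ == "__main__":
    HYPOTHETICAL_AFR = 0.01  # illustrative only
    for n in (1, 4, 8, 16, 24):
        one_year = array_loss_probability(HYPOTHETICAL_AFR, n)
        five_years = array_loss_probability(HYPOTHETICAL_AFR, n, years=5)
        print(f"{n:2d} drives: {one_year:.1%} per year, {five_years:.1%} over five years")
```

Whatever AFR you plug in, the shape is the same: a 24-drive stripe fails far more often than any one of its members.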

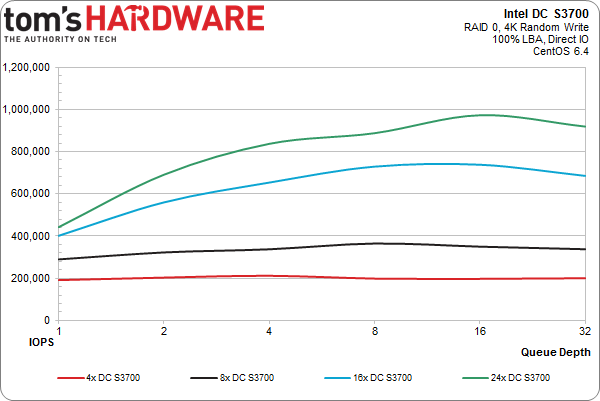

4 KB Random Write Performance Scaling in RAID 0

When we switch to writes, we get more of the same awesomeness.

The fantastic scaling is still apparent, and write results land within a few percentage points of our read numbers (except for the four-drive array, which gives up nothing compared to its read results).

This is a good place to point out that these are essentially out-of-the-box scores. We haven't written enough to the SSDs to push them into a state where garbage collection becomes necessary; because Intel could only lend us the drives for a limited time, drawn-out write sessions were impractical. However, these 800 GB flagships are rated at 36,000 IOPS in steady state, so we wouldn't expect a massive performance drop. The 24-drive array already peaks north of 980,000 IOPS, or around 41,000 write IOPS per SSD. At the steady-state rating, we could conceivably rip off 860,000 4 KB write IOPS for years with a 24-drive RAID 0 array. How cool is that?
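The per-SSD and steady-state figures above come from straightforward multiplication and division, reproduced below. Note that 24 × 36,000 assumes every member delivers its full steady-state rating inside the array, which the read results suggest is optimistic, so treat it as a ceiling. The `efficiency` parameter is our own illustrative knob, not something measured here.

```python
DRIVES = 24
PEAK_WRITE_IOPS = 980_000    # fresh, out-of-box array peak ("north of 980,000")
RATED_STEADY_STATE = 36_000  # per-drive 4 KB random write rating, steady state


def steady_state_estimate(rated: float, drives: int, efficiency: float = 1.0) -> float:
    """Aggregate steady-state IOPS if each member delivers `efficiency` x its rating."""
    return rated * drives * efficiency


if __name__ == "__main__":
    print(round(PEAK_WRITE_IOPS / DRIVES))                    # 40833 per SSD, fresh
    print(steady_state_estimate(RATED_STEADY_STATE, DRIVES))  # 864000.0 (upper bound)
```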

If these were conventional desktop drives, we'd want to over-provision them in an array. Without TRIM, performance could be expected to degrade substantially, and the only preventative measure would be sacrificing usable capacity to maintain speed over time. Intel's SSD DC S3700 lineup, like most other enterprise-oriented SATA drives, is over-provisioned for exactly this reason. In the 800 GB model's case, only 745 GiB (the 800 GB user capacity expressed in binary units) of 1024 GiB of raw flash is usable. In exchange, though, you get consistent steady-state performance. The spare area also lowers write amplification, hence the 10 full random drive writes per day over five years that Intel claims the S3700 can endure.
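The capacity and endurance figures in that paragraph can be checked with a little unit arithmetic. This sketch assumes the 1024 figure refers to binary gigabytes of raw NAND and uses 365-day years; it's a back-of-the-envelope check, not Intel's own endurance methodology.

```python
GB = 10**9   # decimal gigabyte, as used for marketed capacity
GiB = 2**30  # binary gigabyte, as reported by most operating systems

USER_BYTES = 800 * GB   # marketed capacity of the 800 GB model
RAW_BYTES = 1024 * GiB  # raw flash figure quoted above (assumed binary)


def spare_fraction(user_bytes: int, raw_bytes: int) -> float:
    """Share of raw flash reserved as spare area (hidden from the user)."""
    return 1 - user_bytes / raw_bytes


def lifetime_writes(capacity_bytes: int, dwpd: int, years: int,
                    days_per_year: int = 365) -> int:
    """Total bytes written at `dwpd` full drive writes per day for `years`."""
    return capacity_bytes * dwpd * days_per_year * years


if __name__ == "__main__":
    print(round(USER_BYTES / GiB, 2))                         # 745.06
    print(round(spare_fraction(USER_BYTES, RAW_BYTES), 3))    # 0.272
    print(lifetime_writes(USER_BYTES, 10, 5) / 10**15, "PB")  # 14.6 PB
```

In other words, roughly a quarter of the raw flash is held back, and 10 drive writes per day for five years works out to about 14.6 PB written per drive.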


sodaant: Those graphs should be labeled IOPS; there's no way you're getting a terabyte per second of throughput.

cryan, quoting mayankleoboy1: "IIRC, Intel has enabled TRIM for RAID 0 setups. Doesn't that work here too?"

Intel has implemented TRIM in RAID, but you need TRIM-enabled SSDs attached to a 7-series motherboard, and you have to be running Intel's latest 11.x RST drivers. If you're feeling frisky, you can update most recent motherboards with UEFI ROMs injected with the proper OROMs for some black-market TRIM. Works like a charm.

In this case, we used host bus adapters, not Intel onboard PHYs, so Intel's TRIM in RAID doesn't really apply here.

Regards,

Christopher Ryan

cryan, quoting DarkSable: "Idbuaha. I want."

And I want it back! Intel needed the drives back, so off they went. I can't say I blame them, since two dozen 800 GB S3700s is basically the entire GDP of Canada.

Quoting techcurious: "I like the 3D graphs."

Thanks! I think they complement the line charts and bar charts well. That, and they look pretty bitchin'.

Regards,

Christopher Ryan


utroz: That sucks about your backplanes holding you back. And yes, trying to do it with regular breakout cables and power cables would have been a total nightmare, possible only if you built special holding racks for the drives and used multiple power supply units to get enough SATA power connectors (unless you used the dreaded Y-connectors, which are known to be iffy and aren't commercial grade). I'd still be interested in seeing someone crazy enough to do it just for testing purposes, to see how much the backplanes are holding performance back. But thanks for all the hard work; this type of benchmarking is by no means easy. I remember building my first RAID with an Iwill two-port ATA-66 RAID controller and four 30 GB 7,200 RPM drives, and it hit the limits of PCI at 133 MB/s. I tried RAID 0, 1, and 0+1. You had to use exactly identical drives, or the array would be slower than a single drive. The thing took forever to build the arrays, and if you shut the computer down wrong, it would cause huge issues in RAID 0. Fun times...