Almost 20 TB (Or $50,000) Of SSD DC S3700 Drives, Benchmarked

We've already reviewed Intel's SSD DC S3700 and determined it to be a fast, consistent performer. But what happens when we take two-dozen (or about $50,000) worth of them and create a massive RAID 0 array? Come along as we play around in storage heaven.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

You are now subscribed

Your newsletter sign-up was successful

Results: Going For Broke

Up until now, we haven't been using Linux's disk caching to inflate our benchmark numbers. Unlike most mainstream and higher-end hardware RAID cards, we don't necessarily have a direct equivalent while using mdadm and Linux. At least, not in RAID 0. We do get to get to allocate a certain number of memory pages per drive in RAID 5/6, so that does translate into a direct analog to the high-speed DRAM caches found on cards like LSI's 9266-8i.

Linux is crafty, though. Any memory not explicitly allocated is used for caching, and if that memory is needed for other purposes, it gets released. In our case, that 64 GB of DDR3-1333 from Kingston is mostly free for caching drives or filesystems, since our server isn't using much on its own with CentOS 6.

When it comes to benchmark drive arrays, we want to disable caching to get a truer sense of what the SSDs themselves can do. But now that we already know those performance results, let's see what happens when we mix in two quad-channel memory controllers and eight 1,333 MT/s modules.

Sequential 4 KB Testing, Cached I/O

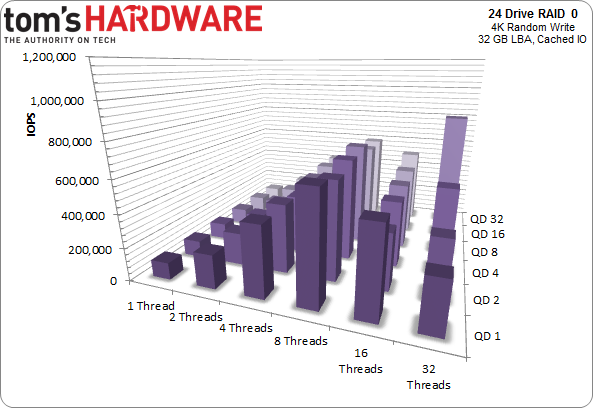

When we let the OS cache 4 KB random transfers, we see different performance characteristics than what we already reported. The potential is there for much higher numbers, but only if the system is requesting data cached in memory. The software only knows to cache LBAs into DRAM if they've already been accessed during a run.

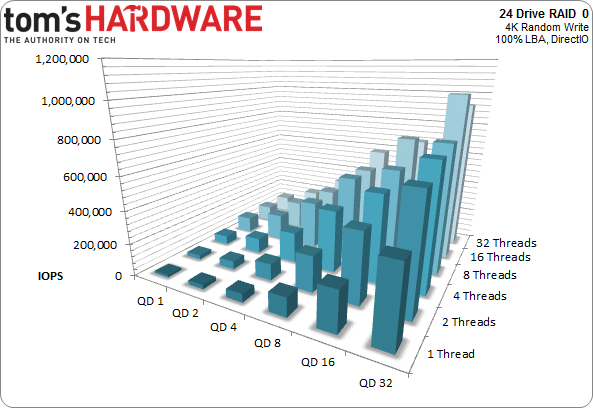

Four-kilobyte random reads are tested from queue depths of one to 32, using from one thread up to 32. The chart below reflects the results from 36 of those runs to create a matrix of 20-second tests.

In that short amount of time, the system doesn't really have a chance to access many LBAs more than once. With our workload generator randomly deciding which LBAs of the test range to pick, some addresses might get accessed several times, while others get touched one time or not at all.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Testing with caching yields an orderly performance picture. If we allow our 24-drive to cache, we see something a little different.

The first and second charts are basically the same test. The former doesn't benefit from caching, while the one directly above does. Performance at lower queue depths and thread counts is significantly better, albeit less consistent. As we encounter more taxing loads, the array just can't achieve the same number of transactions per second characterized in the direct (not cached) test run. In this scenario, maximum performance is lower, but minimum performance is better.

That dynamic gets flipped on its head when we switch to sequential transfers.

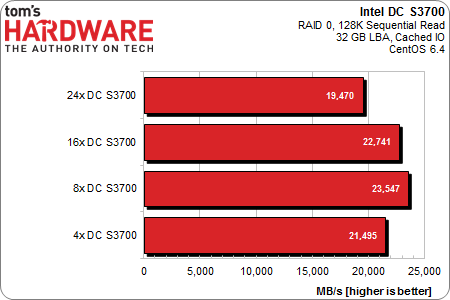

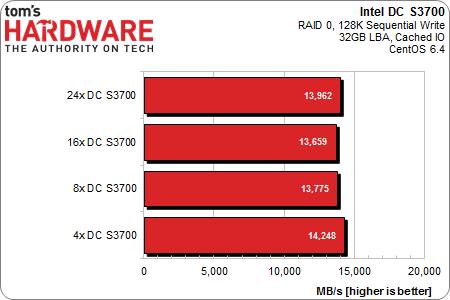

Sequential 128 KB Testing, Cached I/O

By restricting the tested range of LBAs to just 32 GB, we can actually cache the entire test area into DRAM. It doesn't take long, either, especially when we're reading and writing thousands of megabytes per second. We let the test run for 10 minutes, and then take the average bandwidth in MB/s.

It doesn't matter how many drives are in the array. The entire 32 GB LBA space is cached within seconds, and after that we get up to 23,000 MB/s of read bandwidth over the 10-minute test run. We generate a ton of throughput with writes, too, a stupefying 14 GB/s. This feels a lot like cheating, but is it? Not necessarily. That's one reason DRAM caches are used in the first place. We're simply looking at what happens when we let our OS do what it does, and then make the most of it.

Max IOPS

We've seen what caching can do for sequential transfers, and how it affects random performance within a narrowly defined test scenario. If we want to shoot for max I/Os, we have to mix the two methodologies together.

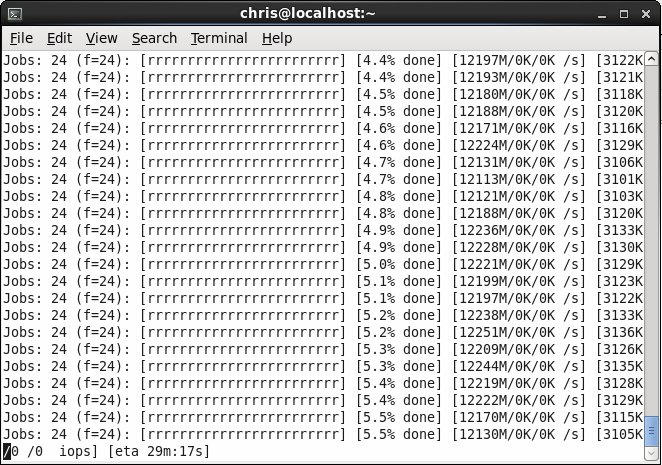

First, we create a 4 KB random read workload, spread out over a 32 GB LBA space, and then let it run for a few minutes. After a while, the system caches the entire 32 GB LBA area and we get the full effect of servicing requests straight out of DRAM. How high can we go?

The answer? Up to 3,136,000 IOPS, or in excess of 12,000 MB/s. At this point, we're using just about all of processing power our dual-Xeon server can muster, pegging both CPUs. Generating the workload to push so many I/Os is hard work, and with the extra duties of handling RAID calculations get intense. After extensive trial and error, 3.136M IOPS is the best we can do. That's all our 19 trillion-byte array and 16 physical cores manage. It's a sufficiently gaudy number to end on.

Current page: Results: Going For Broke

Prev Page Results: Server Profile Testing Next Page 24 SSD DC S3700s: So Choice. If You Have The Means...-

sodaant Those graphs should be labeled IOPS, there's no way you are getting a terabyte per second of throughput.Reply -

cryan mayankleoboy1IIRC, Intel has enabled TRIM for RAID 0 setups. Doesnt that work here too?Reply

Intel has implemented TRIM in RAID, but you need to be using TRIM-enabled SSDs attached to their 7 series motherboards. Then, you have to be using Intel's latest 11.x RST drivers. If you're feeling frisky, you can update most recent motherboards with UEFI ROMs injected with the proper OROMs for some black market TRIM. Works like a charm.

In this case, we used host bus adapters, not Intel onboard PHYs, so Intel's TRIM in RAID doesn't really apply here.

Regards,

Christopher Ryan -

cryan DarkSableIdbuaha.I want.Reply

And I want it back! Intel needed the drives back, so off they went. I can't say I blame them since 24 800GB S3700s is basically the entire GDP of Canada.

techcuriousI like the 3D graphs..

Thanks! I think they complement the line charts and bar charts well. That, and they look pretty bitchin'.

Regards,

Christopher Ryan

-

utroz That sucks about your backplanes holding you back, and yes trying to do it with regular breakout cables and power cables would have been a total nightmare, possible only if you made special holding racks for the drives and had multiple power suppy units to have enough sata power connectors. (unless you used the dreaded y-connectors that are know to be iffy and are not commercial grade) I still would have been interested in someone doing that if someone is crazy enough to do it just for testing purposes to see how much the backplanes are holding performance back... But thanks for all the hard work, this type of benching is by no means easy. I remember doing my first Raid with Iwill 2 port ATA-66 Raid controller with 4 30GB 7200RPM drives and it hit the limits of PCI at 133MB/sec. I tried Raid 0, 1, and 0+1. You had to have all the same exact drives or it would be slower than single drives. The thing took forever to build the arrays and if you shut off the computer wrong it would cause huge issues in raid 0... Fun times...Reply