Almost 20 TB (Or $50,000) Of SSD DC S3700 Drives, Benchmarked

We've already reviewed Intel's SSD DC S3700 and determined it to be a fast, consistent performer. But what happens when we take two dozen of them (about $50,000 worth) and create a massive RAID 0 array? Come along as we play around in storage heaven.

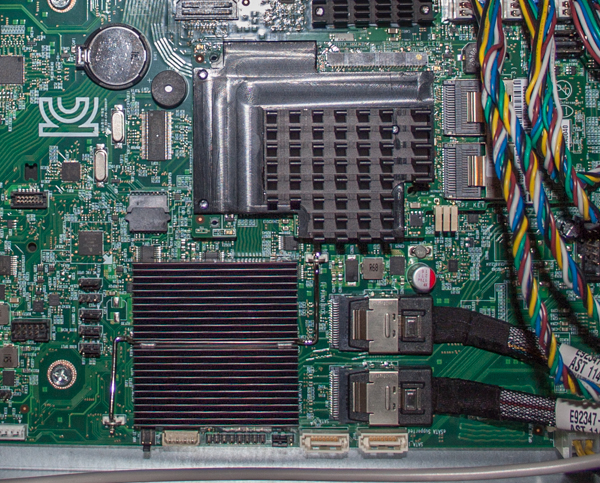

Test Setup And Components

HBAs and Hardware RAID

If you've looked at any motherboard based on an Intel or AMD chipset lately, you probably noticed that it didn't have anywhere close to 24 SATA ports on it. It goes without saying, but we need some help in that department to facilitate communication with our SSDs.

Intel markets its RMS25KB080 and RMS25JB080 (shown above) as entry-level RAID cards. It's true that they're hardware-based RAID controllers. But really, these cards are just HBAs in disguise. The KB and JB are identical, feature-wise. The JB simply slots into that proprietary mezzanine connector we mentioned on the previous page.

Our controllers center on LSI's SAS2308 PowerPC-based silicon. It might even help to think of them as mostly rebadged LSI 9207-8i HBAs. Whereas the 9207-8i ships without RAID functionality by default (Initiator-Target mode), the RMS25KB080s ship with firmware that enables this feature (known as Integrated RAID mode). We're not really interested in using them for their hardware RAID capabilities, but rather for their ability to pass a drive through directly to the host. Then, our server can handle all of the RAID calculations and overhead in software.

We do have one Intel RMS25CB080 adapter on-hand to try a little hardware RAID action, if the need arises. But with just one card, it's hard to harness the performance of 24 drives in an appropriately speedy fashion. Based on LSI's Gen3 PCIe SAS2208 RAID offerings with 1 GB of DDR3 cache and a beefier PowerPC processor, the CB handles the computationally-intense parity RAID levels (5/6) that the lighter KB cards cannot. RAID 0 and 1 calculations aren't very taxing, but the parity calculations involved in RAID 5 and 6 necessitate more serious muscle.
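To illustrate why RAID 0 is so light on computation, here is a minimal sketch of how a striped array might map a logical byte offset to a member drive. It assumes a simple round-robin layout with equal-sized members; the function name `raid0_locate` and the 128 KiB chunk size are hypothetical choices for illustration, not settings taken from our test bed.

```python
def raid0_locate(logical_byte, chunk_bytes, n_drives):
    """Map a logical byte offset to (drive index, byte offset on that drive).

    Assumes a plain round-robin stripe across equal-sized drives.
    """
    chunk_index = logical_byte // chunk_bytes   # which chunk of the volume
    within_chunk = logical_byte % chunk_bytes   # position inside that chunk
    drive = chunk_index % n_drives              # chunks rotate across drives
    stripe_row = chunk_index // n_drives        # full rows before this chunk
    return drive, stripe_row * chunk_bytes + within_chunk


CHUNK = 128 * 1024  # hypothetical chunk size, in bytes

print(raid0_locate(0, CHUNK, 24))               # first chunk, first drive
print(raid0_locate(CHUNK, CHUNK, 24))           # second chunk, second drive
print(raid0_locate(24 * CHUNK + 5, CHUNK, 24))  # wraps back to the first drive
```

The whole mapping is a handful of integer divisions and remainders, with no parity to compute, which is why striping leaves plenty of headroom on even a modest controller.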

It's worth pointing out that these three Intel storage products only work in the company's Xeon E5-compatible motherboards. You have to be using an LGA 2011-equipped platform and it has to be Intel-branded, or else the cards don't even power up. The mezzanine add-in employs a proprietary form factor anyway, so that's less of an issue. The RMS25KB080 can be found for a third of the price of LSI's 9207-8i, but as far as we can tell, there's no way to cross-flash it for broader compatibility. Also, it doesn't appear that flashing the firmware from IR to IT mode is supported. Intel does sell products intended for more general compatibility. However, the models we have here are basically upgrades for this platform specifically.

Software RAID: Not Evil After All


Armed with three HBAs, we'll be using the server's operating system to create RAID volumes. Windows has long supported striping, mirroring, and even RAID 5. But its performance is generally pretty poor, and there's a complete lack of flexibility in terms of settings. Windows 8 introduces some interesting new concepts through Storage Spaces, but these aren't useful to us for this exhibition.

Linux is a different beast. Modern Linux distros include a number of RAID options. Somewhat analogous to Windows' disk management RAID modes, logical volume management (LVM) provides RAID at the volume level, beneath the file system. But Linux's true ace is mdadm, which facilitates the creation of RAID 0/1/5/6 volumes (plus compound modes like RAID 50/60). We can define the strip size and even allocate system memory for cache, the same way a hardware-based RAID adapter would. This is a far more alluring prospect, essentially turning our Xeon server into one big RAID controller.
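As a rough sketch of what creating such a volume looks like, the snippet below assembles an mdadm command line without running it. The `--create`, `--level`, `--raid-devices`, and `--chunk` options are standard mdadm flags (chunk size is given in KiB); the helper name `mdadm_create_cmd`, the device paths, and the 128 KiB chunk are assumptions for illustration, not the exact settings used in this article.

```python
def mdadm_create_cmd(md_device, level, members, chunk_kib=None):
    """Build (but do not execute) an mdadm command that creates an array."""
    cmd = [
        "mdadm", "--create", md_device,
        f"--level={level}",
        f"--raid-devices={len(members)}",
    ]
    if chunk_kib is not None:
        cmd.append(f"--chunk={chunk_kib}")  # chunk (strip) size in KiB
    return cmd + list(members)


# Hypothetical member devices; real names depend on how the HBAs enumerate.
members = [f"/dev/sd{letter}" for letter in "bcde"]
cmd = mdadm_create_cmd("/dev/md0", 0, members, chunk_kib=128)
print(" ".join(cmd))
```

Printing the command rather than executing it keeps the sketch safe to run anywhere; creating a real array needs root privileges and destroys data on the member drives. For parity levels, md also exposes a stripe cache tunable (`stripe_cache_size`) under the array's sysfs directory, which is one way system memory gets allocated as cache.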

RAID 5/6 levels require some truly sophisticated math to create and recover arrays. Those algorithms benefit from instruction extensions built into architectures like PowerPC. Fortunately, x86 processors can accelerate these calculations when the software is designed to exploit them. mdadm has been worked over to take advantage of these benefits wherever possible, and the open source community can continue to improve upon it when necessary.
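The core idea behind RAID 5 parity can be sketched in a few lines: the parity block is the byte-wise XOR of the data blocks in a stripe, and any single missing block can be rebuilt by XORing everything that survives. This is a toy illustration under simplifying assumptions (tiny blocks, one stripe, no parity rotation), not how mdadm is actually implemented; the helper `xor_blocks` is a hypothetical name. RAID 6 adds a second, independent syndrome based on Galois-field arithmetic, which is not shown here.

```python
from functools import reduce


def xor_blocks(blocks):
    """Return the byte-wise XOR of a list of equal-length byte blocks."""
    return bytes(
        reduce(lambda a, b: a ^ b, column)  # XOR one byte position across blocks
        for column in zip(*blocks)
    )


# Three data blocks in one stripe (toy-sized for readability).
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data)

# Simulate losing the second drive, then rebuild it from the survivors.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data[1])
```

In a real array this XOR runs over every stripe on every write and during every rebuild, which is why vectorized instructions make such a difference.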

|  | Test Configuration |
|---|---|
| Server | Intel R2224IP4LHPCBPPP |
| Mainboard | Intel S2600IP4 "Iron Pass", Dual Socket R/LGA 2011 |
| Processors | 2 x Intel Xeon E5-2665 (Sandy Bridge-EP): 2.4 GHz Base Clock Rate, 3.1 GHz Max. Turbo Boost, 32 nm, 8C/16T, 115 W TDP, LGA 2011, 20 MB Shared L3 Cache |
| Memory | 8 x Kingston KVR13LR9D4/8HC 1.35 V, 1,333 MT/s ECC LRDIMM |
| Chassis | Intel Server System R2200GZ Family, 24-Drive Bay Backplane, 2U Rack Chassis |
| PSU | 2 x Intel Redundant 750 W, 80 PLUS Platinum, FS750HS1-00 |
| Expander | Intel RES2CV360 36-Port SAS2 Expander |
| Storage Controllers | 2 x Intel RMS25KB080 Integrated RAID Modules; 1 x Intel RMS25JB080 Integrated RAID Module, Mezzanine; 1 x Intel RMS25CB080 RAID Controller, Mezzanine; Intel C600 AHCI SATA 6Gb/s |
| Boot Drive | Kingston 200 GB E100, SATA 6Gb/s, FW: 5.15 |
| Test Drives | 24 x 800 GB Intel SSD DC S3700, SATA 6Gb/s, FW: 5DVA0138 |
| Operating Systems | CentOS 6.4 x86_64; Windows Server 2012 |
| Management | Intel RMM4 BMC Remote Management System |


sodaant: Those graphs should be labeled IOPS, there's no way you are getting a terabyte per second of throughput.

-

cryan:

> mayankleoboy1: IIRC, Intel has enabled TRIM for RAID 0 setups. Doesn't that work here too?

Intel has implemented TRIM in RAID, but you need to be using TRIM-enabled SSDs attached to their 7 series motherboards. Then, you have to be using Intel's latest 11.x RST drivers. If you're feeling frisky, you can update most recent motherboards with UEFI ROMs injected with the proper OROMs for some black market TRIM. Works like a charm.

In this case, we used host bus adapters, not Intel onboard PHYs, so Intel's TRIM in RAID doesn't really apply here.

Regards,

Christopher Ryan

-

cryan:

> DarkSable: Idbuaha. I want.

And I want it back! Intel needed the drives back, so off they went. I can't say I blame them since 24 800GB S3700s is basically the entire GDP of Canada.

> techcurious: I like the 3D graphs..

Thanks! I think they complement the line charts and bar charts well. That, and they look pretty bitchin'.

Regards,

Christopher Ryan

-

utroz: That sucks about your backplanes holding you back, and yes, trying to do it with regular breakout cables and power cables would have been a total nightmare, possible only if you made special holding racks for the drives and had multiple power supply units to have enough SATA power connectors (unless you used the dreaded Y-connectors that are known to be iffy and are not commercial grade). I still would have been interested in someone doing that, if someone is crazy enough to do it just for testing purposes, to see how much the backplanes are holding performance back... But thanks for all the hard work, this type of benching is by no means easy. I remember doing my first RAID with an Iwill 2-port ATA-66 RAID controller with 4 30GB 7200RPM drives, and it hit the limits of PCI at 133MB/sec. I tried RAID 0, 1, and 0+1. You had to have all the same exact drives or it would be slower than single drives. The thing took forever to build the arrays, and if you shut off the computer wrong it would cause huge issues in RAID 0... Fun times...