Wi-Fi Security: Cracking WPA With CPUs, GPUs, And The Cloud


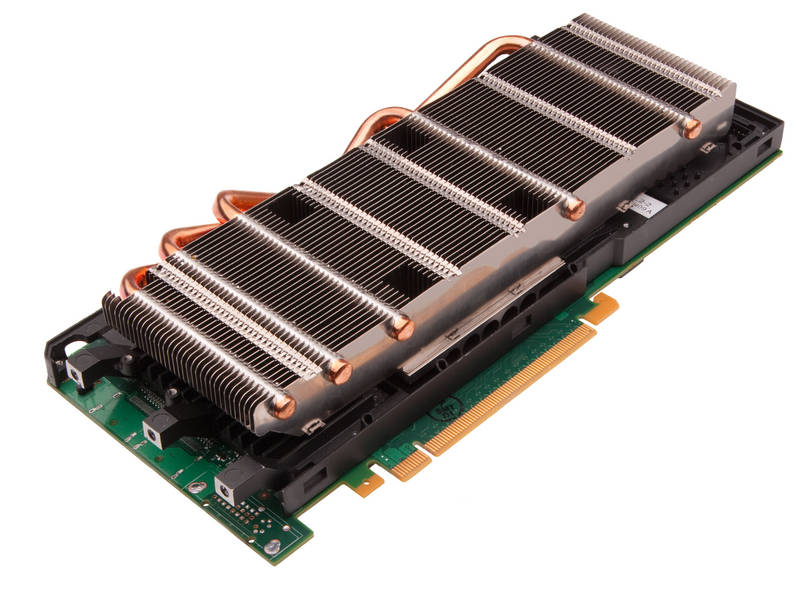

Nvidia's Tesla And Amazon's EC2: Hacking In The Cloud

Cracking passwords works best on a scale exceeding what an enthusiast would have at home. That's why we took Pyrit and put it to work on several Tesla-based GPU cluster instances in Amazon's EC2 cloud.

Amazon calls each server a "Cluster GPU Quadruple Extra Large Instance" and it consists of the following:

- 22 GB of memory

- 33.5 EC2 Compute Units (2 x Intel Xeon X5570, quad-core, Nehalem architecture)

- 2 x Nvidia Tesla M2050 GPUs (Fermi architecture)

- 1690 GB of instance storage

- 64-bit platform

- I/O performance: 10 gigabit Ethernet

- API name: cg1.4xlarge

This machine is strictly a Linux affair, which is why we're restricted to Pyrit. The best part, though, is that it's completely scalable. You can add client nodes to your master server in order to distribute the workload. How fast can it go? Well, on the "master" server, we were able to hit between 45 000 and 50 000 PMKs/s.

Computed 47956.23 PMKs/s total.
#1: 'CUDA-Device #1 'Tesla M2050'': 21231.7 PMKs/s (RTT 3.0)
#2: 'CUDA-Device #2 'Tesla M2050'': 21011.1 PMKs/s (RTT 3.0)
#3: 'CPU-Core (SSE2)': 440.9 PMKs/s (RTT 3.0)
#4: 'CPU-Core (SSE2)': 421.6 PMKs/s (RTT 3.0)
#5: 'CPU-Core (SSE2)': 447.0 PMKs/s (RTT 3.0)
#6: 'CPU-Core (SSE2)': 442.1 PMKs/s (RTT 3.0)
#7: 'CPU-Core (SSE2)': 448.7 PMKs/s (RTT 3.0)
#8: 'CPU-Core (SSE2)': 435.6 PMKs/s (RTT 3.0)
#9: 'CPU-Core (SSE2)': 437.8 PMKs/s (RTT 3.0)
#10: 'CPU-Core (SSE2)': 435.5 PMKs/s (RTT 3.0)
#11: 'CPU-Core (SSE2)': 445.8 PMKs/s (RTT 3.0)
#12: 'CPU-Core (SSE2)': 443.4 PMKs/s (RTT 3.0)
#13: 'CPU-Core (SSE2)': 443.0 PMKs/s (RTT 3.0)
#14: 'CPU-Core (SSE2)': 444.2 PMKs/s (RTT 3.0)
#15: 'CPU-Core (SSE2)': 434.3 PMKs/s (RTT 3.0)
#16: 'CPU-Core (SSE2)': 429.7 PMKs/s (RTT 3.0)

Are you scratching your head at this point? Only 50 000 PMKs/s with two Tesla M2050s?! Although the hardware might seem to be under-performing, the results are on the order of a single GeForce GTX 590, which of course is armed with two GF110 GPUs. Why is that?

Nvidia's Teslas were designed for complex scientific calculations (like computational fluid dynamics), which is why they so prominently feature fast double-precision floating-point math that the desktop GeForce cards cannot match. Tesla boards also come with 3 or 6 GB of memory with ECC support. However, the process we're testing doesn't tax any of those differentiated capabilities. And to make matters worse, Nvidia down-clocks the Tesla boards to ensure the 24/7 availability needed in an enterprise-class HPC environment.
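For context on why double-precision math goes unused here: each PMK counted in the benchmark is the result of WPA's passphrase-to-key step, which the 802.11i standard defines as PBKDF2 with HMAC-SHA1, 4096 iterations, the network's SSID as the salt, and a 256-bit output. SHA-1 is 32-bit integer work. Below is a minimal Python sketch using only the standard library; the passphrase and SSID are made-up examples, the function name is illustrative, and this is not Pyrit's own implementation.

```python
import hashlib


def wpa_pmk(passphrase: str, ssid: str) -> bytes:
    """Derive the 256-bit WPA/WPA2-PSK pairwise master key (PMK).

    PBKDF2-HMAC-SHA1, SSID as salt, 4096 iterations, 32-byte result.
    """
    return hashlib.pbkdf2_hmac(
        "sha1",
        passphrase.encode("utf-8"),
        ssid.encode("utf-8"),
        4096,
        32,
    )


if __name__ == "__main__":
    # Hypothetical candidate passphrase and network name, for illustration only.
    pmk = wpa_pmk("correct horse battery", "ExampleNet")
    print(pmk.hex())
```

Because SHA-1 produces 20 bytes per block, a 32-byte PMK needs two PBKDF2 blocks, or 8192 HMAC-SHA1 invocations per candidate passphrase. That cost is what a "PMKs/s" figure measures.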

But the real reason to try cracking WPA in the cloud is scaling potential. For every node we add, the process speeds up by 18 000 to 20 000 PMKs/s. That's probably not what most folks have in mind when they talk about the cloud's redeeming qualities, but it does demonstrate the effectiveness of distributing workloads across more machines than any one person could procure on their own.
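The per-node figures lend themselves to a quick back-of-the-envelope estimate. The Python sketch below assumes perfectly linear scaling, which is an idealization, and uses a hypothetical keyspace of eight lowercase letters that is not a figure from our testing. The function names are illustrative.

```python
MASTER_PMKS = 47_956.23           # master-only rate from the Pyrit benchmark
PER_NODE_PMKS = (18_000, 20_000)  # quoted gain per added node (low, high)
SECONDS_PER_DAY = 86_400


def cluster_rate(extra_nodes: int) -> tuple[float, float]:
    """Return (low, high) aggregate PMKs/s, assuming linear scaling."""
    low, high = PER_NODE_PMKS
    return (MASTER_PMKS + extra_nodes * low,
            MASTER_PMKS + extra_nodes * high)


def days_to_exhaust(keyspace: int, rate: float) -> float:
    """Days needed to try every candidate at a constant rate (PMKs/s)."""
    return keyspace / rate / SECONDS_PER_DAY


if __name__ == "__main__":
    keyspace = 26 ** 8  # assumption: 8 lowercase letters, for illustration
    for nodes in (0, 1, 4, 8):
        low, high = cluster_rate(nodes)
        print(f"{nodes} extra nodes: {low:,.0f} to {high:,.0f} PMKs/s, "
              f"{days_to_exhaust(keyspace, high):.1f} to "
              f"{days_to_exhaust(keyspace, low):.1f} days")
```

Treat the output as a best case: it ignores any overhead from coordinating the nodes.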

Each GPU cluster instance is armed with a 10 Gb Ethernet link, restricting bidirectional traffic between the master and nodes to 1.25 GB/s. This is what bottlenecks the cracking speed. Remember that a single ASCII character consumes one byte. So, as you start cracking longer passwords, the master server has to send more data to the clients. Worse still, the clients have to send the processed PMK/PTK back to the master server. As the network grows, the number of passwords each additional node processes goes down, resulting in diminishing returns.

Harnessing multiple networked computers to crack passwords isn't a new concept. But ultimately, it would have to be done differently to be more of a threat. Otherwise, desktop-class hardware is going to be faster than most cloud-based alternatives. For example, about a month ago, Passware, Inc. used eight Amazon Cluster GPU Instances to crack MS Office passwords at a speed of 30 000 passwords per second. We can do the same thing with a single Radeon HD 5970 using Accent's Office Password Recovery.


fstrthnu: Well it's good to see that WPA(2) is still going to hold out as a reliable security measure for years to come.

runswindows95: The 12 pack of Newcastles works for me! Give that to me, and I will set you up on my wifi! Free beer for free wifi!

Pyree, quoting runswindows95: "The 12 pack of Newcastles works for me! Give that to me, and I will set you up on my wifi! Free beer for free wifi!"

Then either beer at your place is really expensive or internet is really cheap. Need 6x12 pack for me.

compton: Thanks for another article that obviously took a lot of work to put together. The last couple of articles on WiFi and archive cracking were all excellent reads, and this is a welcome addition.

mikaelgrev: "Why? Because an entire word is functionally the same as a single letter, like "a." So searching for "thematrix" is treated the same as "12" in a brute-force attack."

This is an extremely wrong conclusion. Extremely wrong.

What about the permutations of the words?

i.e ape can be written:

ape, Ape, aPe, apE, APe, aPE, ApE, APE.

That's 2^3=8 permutations. Add a number after and you get (2^3)*(10^1)=80 permutations.

You can write PasswordPassword in 2^16=65536 ways.

How about using a long sentence as a password?

i.e. MyCatIsSuperCuteAndCuddly, that's 2^25 permutations :)

molo9000: Any word on MAC address filtering?

Can you scan for the MAC addresses? It's probably easy to get and fake MAC addresses, or it would have been mentioned.

*scans networks*

12 networks here,

1 still using WEP

10 allowing WPA with TKIP

only 1 using WPA2 with AES only (my network)

agnickolov: Considering my WPA password is over 20 characters long I should be safe for the foreseeable future...

aaron88_7: "12345, that's amazing, I've got the same combination on my luggage!" Still makes me laugh every time!