Photorealistic Game Engine Tech Demos

The progress never ends!

Since their inception in the 1970s, video games have evolved almost continuously, closely following advancements in technology. Beginning as a bunch of monochrome pixels, they became loads of sprites in 8, then 16, then 32 colors, and then they gained particle effects, polygons, motion capture, dynamic lighting, and so on and so forth until they attained the level of photorealism that we know today.

For your viewing pleasure, and to better gauge the progress that has been accomplished, here are ten technical demos from recent game engines. Some are simple demonstrations of the features offered by a given engine, while others are actual video clips made for this purpose. All are impressive.

MORE: Tom's Hardware's GDC 2017 Highlights

MORE: The VR Releases Of (Early) Summer 2017

Adam (Unity 5)

Rendered in real time

Revealed at GDC 2016, Adam is a short film just over five minutes long, created explicitly to demonstrate the technologies offered by version 5.4 of the Unity engine.

Many independent developers favor this engine for its exceptional versatility. Unity is an extremely powerful tool, and Adam is the proof. The demo runs in real time (at 1440p) on an Nvidia GeForce GTX 980 and makes the most of every feature in the engine's arsenal: real-time lighting, physics simulation (with CaronteFX), volumetric fog, motion blur, a variety of shaders, and more. Not too shabby for a “simple” tech demo.
Agni’s Philosophy (Luminous Engine)

Rendered in real time

At E3 2012, Square Enix revealed this tech demo of its Luminous Engine, which was designed to run on eighth-generation consoles (PlayStation 4 and Xbox One). The animated short introduced Agni, a young sorceress, and its main goal was to show what the future of the Final Fantasy franchise would look like. Agni's Philosophy ran on a PC equipped with a GeForce GTX 680 and 16 GB of RAM, and it displayed nearly 10 million polygons per frame (up to 400,000 per character).

Three years later, Square Enix revealed a new tech demo built on this engine. Dubbed Witch Chapter 0 [Cry], it revisited the character Agni and brilliantly demonstrated the evolution of the Luminous Engine. This time, the short ran on a machine equipped with four Titan X GPUs and displayed some 63 million polygons per frame, with textures as large as 8192x8192. This spectacular evolution was put to good use during the production of Final Fantasy XV.

Kara (Quantic Dream Engine)

Rendered in real time

Whether you love or hate David Cage and his games, you have to admit that when it comes to graphics, he gives it his all. In 2012, Quantic Dream unveiled Kara, an animated short using performance capture (a technique derived from motion capture that also records the actors' facial expressions). The result was incredible for a demo running on a PlayStation 3.

In 2013, Quantic Dream released a new demo of its engine, The Dark Sorcerer, which showed the progress the engine had made since moving to the PlayStation 4. The Dark Sorcerer also shows off the full power of this in-house engine, which can render some convincing particle effects.

Loft in London (Unreal Engine 4)

Rendered in real time

Created by Epic Games, the Unreal Engine has reigned over the world of video games ever since its debut in 1998. At the heart of numerous games (Deus Ex, Splinter Cell: Chaos Theory, BioShock, Borderlands, Mirror’s Edge, and so on), the Unreal Engine remains among the best graphics engines around today, even if Unity is seriously nipping at its heels.

In 2015, Loft in London was released. This Unreal Engine 4 demo presented a loft in London that could be freely explored from every angle and modified in real time: users could select decorative elements and change their color or texture. Although this is far from what an engine typically renders at the heart of a video game (there are few particle effects, explosions, smoke, etc.), the handling of textures and lighting is spectacular, conveying extremely advanced photorealism.

Lost (Lumberyard)

Rendered in real time

Lumberyard is a game engine introduced by Amazon, and Chris Roberts adopted it for Star Citizen. (Whether or not that was a wise decision remains to be seen.) Lumberyard offers many features tied to Amazon's services (Twitch first among them), and it is technically based on Crytek's CryEngine.

In the Lost demo, we follow a weary mech swaying its way through a post-apocalyptic world. This demo is quite simple, and less impressive than others such as Adam, but even so, this short film produced by RealtimeUK demonstrates the engine's capabilities. The textures are quite detailed, and the lighting and various particle effects are well done. The rendering of vegetation and fur is also impressive.

Museum (Mizuchi Game Engine)

Rendered in real time

The Mizuchi Game Engine was created by Silicon Studio, the Japanese studio that gave birth to 3D Dot Game Heroes and Bravely Default. The studio has worked in middleware for many years (these are the folks who made the Bishamon particle tool), and Mizuchi emphasizes the rendering of textures, materials, and lighting to deliver sumptuous graphics, whether for video games, film, or even architectural visualization.

In 2014, the studio revealed a tech demo titled Museum. The short film is set inside a museum (hence the name), where you watch the curator (a small robot) move among the artifacts. It shows numerous highly detailed objects, perfectly highlighting Mizuchi's strengths.

Frostbite 3 Feature (Frostbite Engine)

Pre-rendered

An in-house Electronic Arts engine originally created by DICE, the Frostbite Engine debuted in the Battlefield series and has since powered numerous titles such as Need for Speed Rivals, Dragon Age: Inquisition, Star Wars Battlefront, and even Mass Effect: Andromeda. Frostbite is known for its destructible environments, which helped make the Battlefield series famous.

With Frostbite 3 for Battlefield 4, DICE pushed the limits of its engine further, notably by emphasizing the realism of the environments it renders. Frostbite can apply physics throughout the entire game world, from trees moving in the wind to particles thrown out by an explosion. The developers also focused on character rendering, from animations to facial expressions. Many of these elements are on display in the Battlefield 4 trailer.

Tech Demo Showcase (CryEngine)

Pre-rendered

Originally developed by Crytek as part of a simple tech demo for Nvidia, CryEngine was impressive from the start. It quickly grew into a full game engine and powered the first Far Cry. It was with version 2 and the release of Crysis, however, that CryEngine really showed what it was made of (and entered the gamer vernacular). At the time, few machines could handle all of the game's graphics options. Crytek's developers thought big from the beginning, offering an engine that supported Shader Model 3.0, HDR rendering, and even Dolby 5.1 surround sound.

With version 5, presented in this video, CryEngine shows once again that it is built for ultra-impressive graphics. The developers notably focused on better particle and vegetation rendering, advanced lighting and reflections, and game physics. Crytek also put a great deal of effort into virtual reality support.

MORE: 'Far Cry 4' Performance Review

MORE: Ubisoft Shows Off 'Far Cry 5' Gameplay

The Blacksmith (Unity 5)

Rendered in real time

Released shortly before Adam, The Blacksmith is another short film demonstrating the technical capabilities of Unity 5. Created by a team of only three people (an artist, an animator, and a programmer), with a few others helping on motion capture, this Norse-mythology-inspired film shows two characters interacting in a beautiful natural landscape.

The film focuses on shaders, whether for the overall rendering, real-time shadows, or even the characters' hair and beards (typically a pain in the neck for video games). On the hardware side, the film was rendered on a PC equipped with an Intel Core i7-4770 and an Nvidia GeForce GTX 760, running at 30 FPS and Full HD resolution.

MORE: Tom's Hardware's GDC 2017 Highlights

MORE: The VR Releases Of (Early) Summer 2017

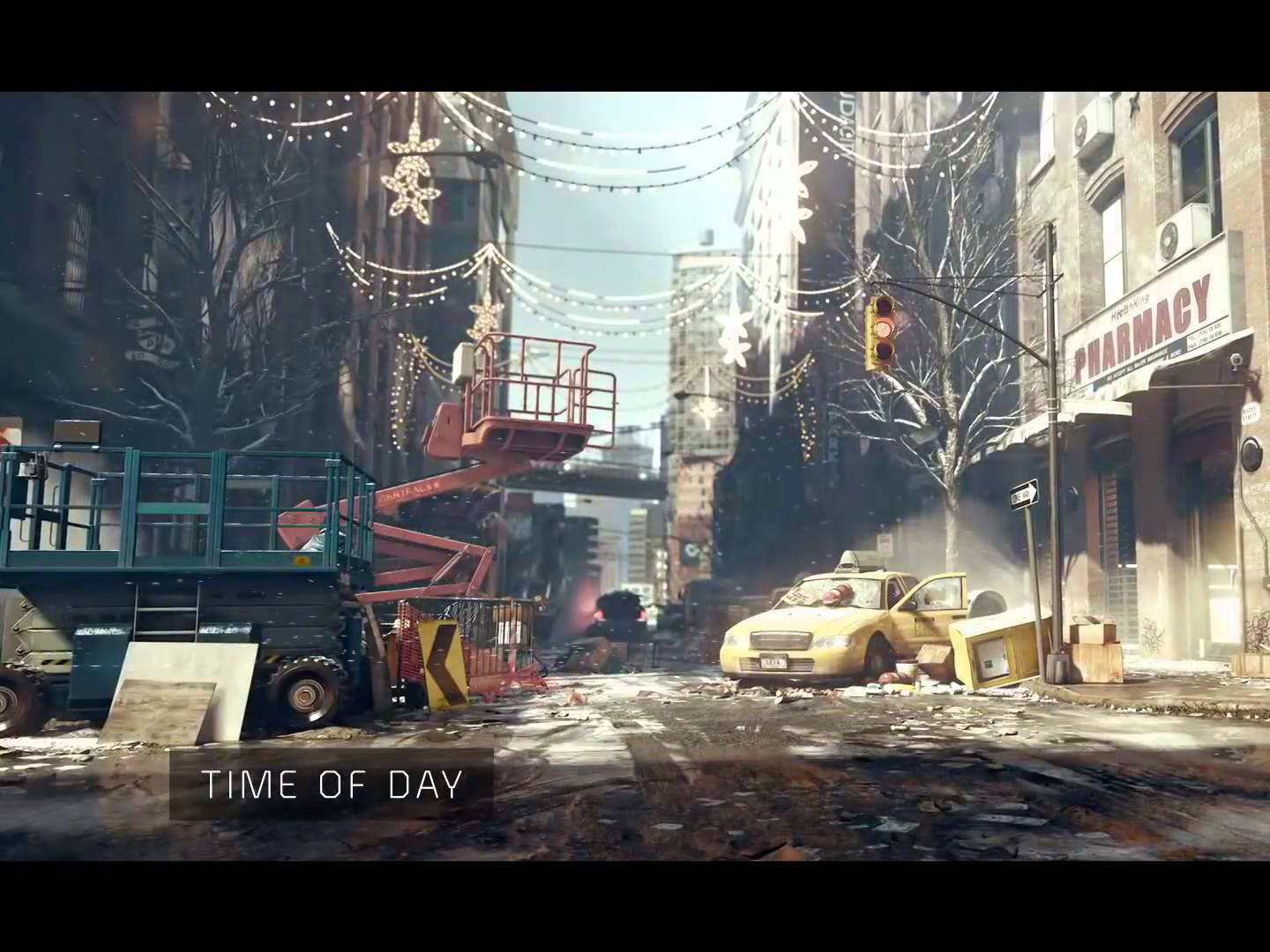

The Division (Snowdrop Engine)

Pre-rendered

At Ubisoft, many franchises get their own engine. For example, the Assassin's Creed series uses the Anvil engine, whereas Watch Dogs uses Disrupt; both were developed by Ubisoft Montréal. So when Ubisoft launched The Division, it was only natural for its studio Massive Entertainment to develop its own engine, Snowdrop.

Most of the code is written in C++, and the engine relies on a node-based scripting system to manage everything from rendering to in-mission artificial intelligence. Graphically, Snowdrop offers control of the day/night cycle; volumetric lighting, particles, and other visual effects; and, above all, an advanced procedural destruction system. Amusingly, this engine, designed for the somber, bleak, and hopeless world of The Division, was also used to create Mario + Rabbids Kingdom Battle.

falchard: The trouble with realistic real-time engines is making it look realistic in gameplay. Something like micro-displacement mapping lip movement is something you would need to do on a scene by scene basis instead of a modular approach. It would eat up a lot of space.

It's also deceiving. Look at our realistic car in the foreground while you can't really see the shitty trees in the background. With most games today, you need to do everything at a higher quality where for a simple driven animation you can concentrate all the detail in the foreground and skip out on most of the games calculations.

How it translates into gameplay will always be the final criterion. If it's just an animation with little player control, you might as well have packaged it as a movie.

d_kuhn: RE: Agni's Philosophy: shaky-cam sucks when it's live action - and now I know it's just as bad in realtime rendered engines. It luckily didn't live long as a tool for cinematographers - though you still see it used for low-budget TV occasionally.

bit_user: Thanks for posting. I always download and run the latest 3DMark and Unigine demos whenever I upgrade GPUs. It's amazing to see the evolution of realtime graphics.

Truth be told, I once thought mainstream games might be on to realtime ray tracing, by now.

cryoburner: I would say none of these demos look truly photorealistic, as in looking like a photo, or a live-action video. Out of these examples, the Unreal Loft demo probably comes closest, along with perhaps the Mizuchi Museum demo, though that has a very narrow focal range hiding any potential imperfections behind lots of lens blur. And of course, the thing these two demos have in common is that they are limited to a single room with little organic material. There are no humans or animals with unrealistic skin and chunky hair, no cloth or plants blowing in the wind, no complex character animations or collision detection between objects. They are not actually games. To be fair though, even big-budget films tend to struggle to make CG look realistic, despite it not even being rendered in real time.