PowerColor Devil 13 Dual Core R9 290X 8 GB Review: Dual Hawaii on Air

PowerColor’s Devil 13 graphics card, with its two Hawaii GPUs and massive heat sink, weighs in at more than two kilograms and exudes luxury. But can it compete with AMD’s dual-GPU reference design with closed-loop water cooling? Let’s find out!

Power Consumption: A Detailed Look At Gaming And Stress Testing

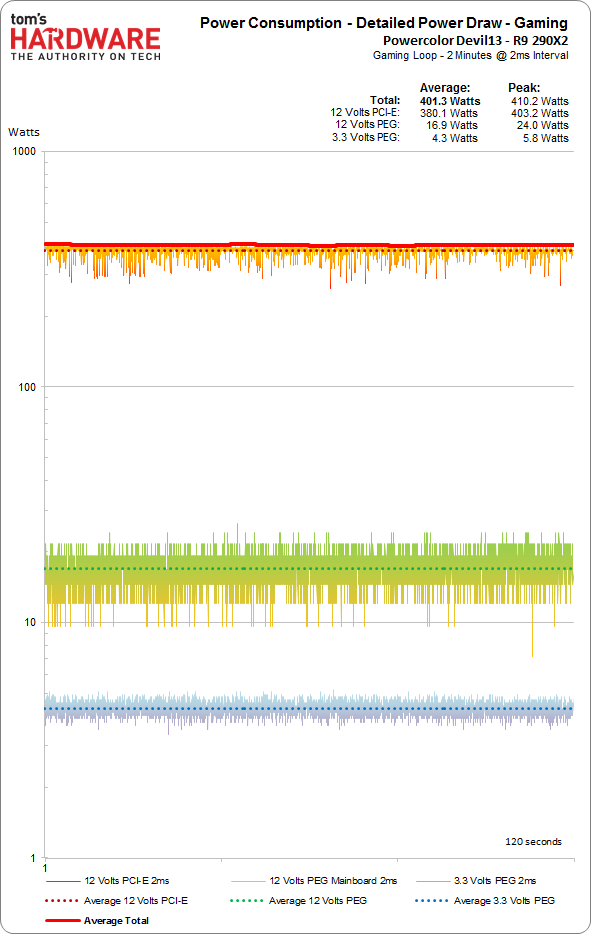

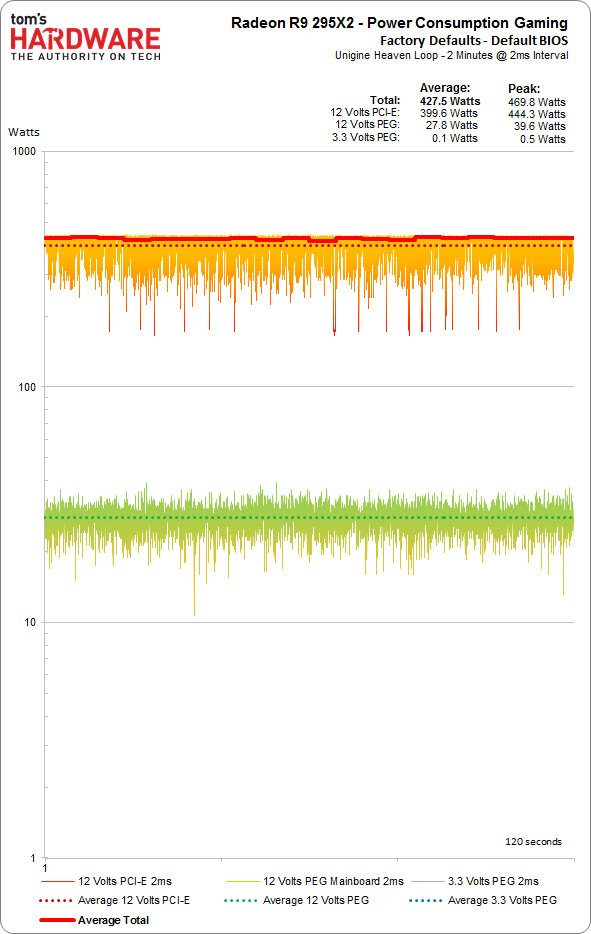

Gaming Power Consumption

Two things jump out when we compare PowerColor's Devil 13 to AMD's reference Radeon R9 295X2. First, PowerColor manages to limit the peaks in power consumption so that they’re barely above the average. Even though the Devil 13's PowerTune-based jumps are a bit more pronounced than those of the reference card, its sophisticated on-board power supply smooths out the extreme peaks, relieving pressure on the PSU.

Second, it’s very noticeable that PowerColor uses the motherboard's 3.3 V rail to take care of components like the Devil 13's GDDR5 memory, whereas the reference card relies exclusively on 12 V.

The two solutions are very different, but PowerColor comes out ahead in the end. Our measurements also show that the two graphics cards have roughly the same ratio of power draw to performance. In the Devil 13's case, that means we observe lower consumption corresponding to slightly less speed.
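The first observation, that peaks stay barely above the average, can be expressed as a peak-to-average ratio. The Python sketch below shows one way to compute it. The function name and both sample traces are hypothetical placeholders chosen for illustration; they are not measurements from this review.

```python
from statistics import mean


def peak_to_average(samples_w):
    """Return max / mean for a list of power readings in watts."""
    if not samples_w:
        raise ValueError("need at least one sample")
    avg = mean(samples_w)
    if avg <= 0:
        raise ValueError("average power must be positive")
    return max(samples_w) / avg


# Hypothetical traces (NOT measured data). Both average 400 W,
# but the second one has much taller spikes.
smooth_trace = [400.0, 410.0, 405.0, 395.0, 390.0]
spiky_trace = [300.0, 550.0, 350.0, 500.0, 300.0]

if __name__ == "__main__":
    print(f"smooth: {peak_to_average(smooth_trace):.3f}")
    print(f"spiky:  {peak_to_average(spiky_trace):.3f}")
```

A ratio close to 1.0 means the peaks sit barely above the average, which is the behavior described for the Devil 13. A larger ratio indicates the kind of spikes that put more stress on a PSU.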

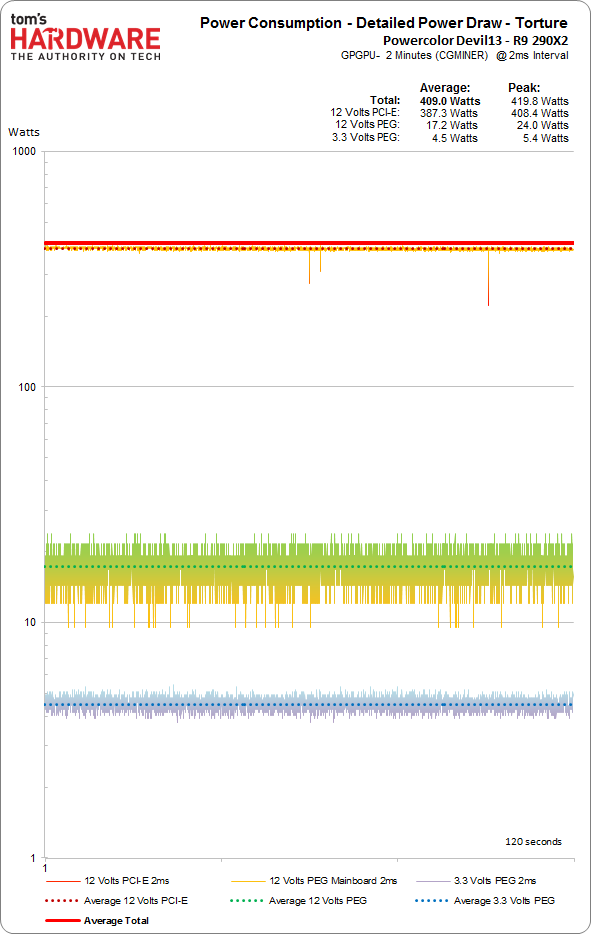

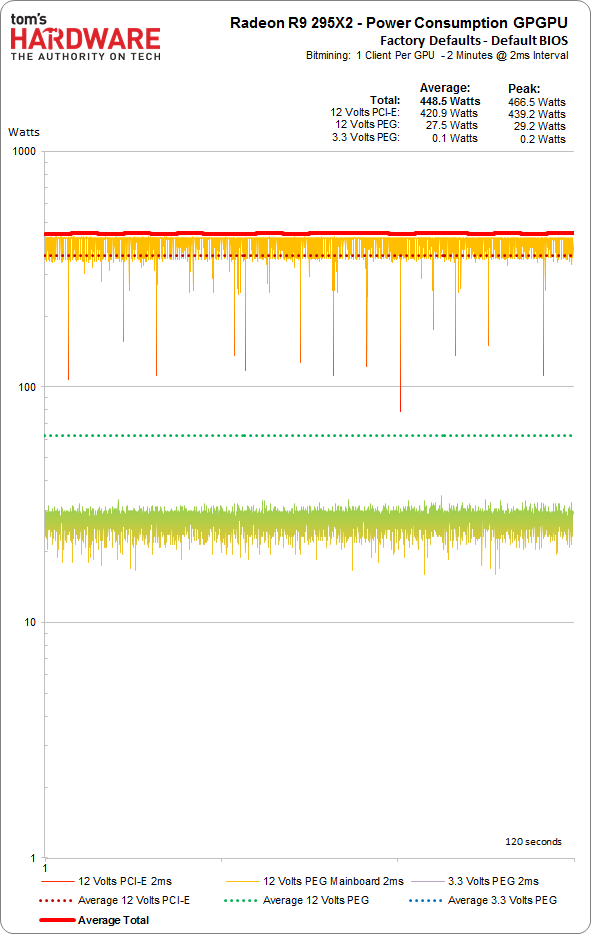

Stress Test

Again, a direct comparison shows some differences. Even though the Devil 13 manages to sustain its clock rate in every scenario, some kind of measurable voltage clipping limits the maximum power draw to an average of 409 W (power target), which is barely higher than our gaming benchmark.

Lower compute-based stress test results confirm our findings. The power-to-performance ratio holds steady, which exposes the tricks used to avoid pushing this three-slot air cooler past its limits. Even though AMD sets a power target of 208 W per GPU (and sticks to it exactly with the Radeon R9 295X2), PowerColor's Devil 13 stays below that number.
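The comparison against AMD's power target can be checked with simple arithmetic. The Python sketch below uses only the two figures quoted above (208 W per GPU and a 409 W average). It assumes, as a rough upper bound, that the entire board draw is split evenly between the two GPUs; in practice memory, fans, and voltage converters take a share, so the real per-GPU figure is lower still. The function names are illustrative.

```python
# Figures quoted in the text above.
AMD_POWER_TARGET_PER_GPU_W = 208.0
DEVIL13_AVG_BOARD_POWER_W = 409.0
GPU_COUNT = 2


def per_gpu_upper_bound(board_power_w, gpu_count):
    """Upper bound on per-GPU draw, assuming the GPUs consume all board power."""
    return board_power_w / gpu_count


def headroom_to_target(board_power_w, gpu_count, target_per_gpu_w):
    """Watts per GPU remaining below the target (positive means below target)."""
    return target_per_gpu_w - per_gpu_upper_bound(board_power_w, gpu_count)


if __name__ == "__main__":
    bound = per_gpu_upper_bound(DEVIL13_AVG_BOARD_POWER_W, GPU_COUNT)
    gap = headroom_to_target(
        DEVIL13_AVG_BOARD_POWER_W, GPU_COUNT, AMD_POWER_TARGET_PER_GPU_W
    )
    print(f"per-GPU upper bound: {bound} W, headroom to target: {gap} W")
```

Even under this generous assumption, the per-GPU figure stays under 208 W, which is consistent with the statement that the Devil 13 stays below AMD's power target.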

Looking at the 12 V power supply through the four eight-pin PCIe connectors, the Devil 13's smoother curve is noticeable yet again. This consistent power draw is different from AMD’s reference design, which has been known to push PSUs beyond their limits. Certain supplies that weren't able to properly support AMD's Radeon R9 295X2 due to its peak power draw have no problem with PowerColor's board.

As for the 3.3 V rail through the motherboard slot, it's the same here as it was during our gaming workload. The wheel doesn't need to be reinvented.




Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

-

jlwtech Was this card staying at 1000 MHz during those benchmark runs?

The FPS difference at 1080p is ~7%, yet the clock difference is less than 2%.

Also,

Did you find the Devil's maximum stable overclock? With all that power available, I'd imagine this thing could achieve better overclocks than the 295X2.

-

FormatC OC makes no sense, because the card will be really loud. And please read the review attentively! The performance difference between both cards reflects the difference in power consumption nearly 1:1! To handle this cooling by air, the PowerColor card uses a lower power target. Since AMD's PowerTune and Nvidia's Boost, the pure core clock rate says nothing about the final performance! In my eyes this is also a good study about the limits of an air cooler.

Take a look at the page with the HiRes power draw. This card isn't a perpetuum mobile. Less power consumption = less gaming performance. OC brings really nothing. Ok, you can destroy your ears... (or the card). We had to handle this rare card very carefully, so I was not able to break the voltage barrier.

-

jlwtech 13766047 said: OC makes no sense, because the card will be really loud. And please read the review carefully! The performance difference between both cards reflects the difference in power consumption! To handle this cooling by air, the power color card uses a lower power target. Since Power Tune and Boost the pure core clock says nothing about the final performance!

Take a look at the page with the HiRes power draw. This card isn't a perpetuum mobile. Less power consumption = less gaming performance. OC brings really nothing. Ok, you can destroy your ears...

"Destroy my ears"?

"OC makes no sense because the card will be really loud"? Are you serious??????

Since when has that stopped anyone?

This thing is quiet compared to high end cards from 5+ years ago....

Have we become so spoiled by the advances in technology, which have enabled higher performance at lower noise levels, that we will not push the limits for fear of a little noise!?

It's an ultra high end GFX card made for the kind of people who like to push the limits. It should absolutely be overclocked and benchmarked. With a big fat mind-blowing power usage chart with figures higher than any card has ever pushed!

Also, clock rates still directly correlate to performance. Lowering the power tune limit will limit clock rates, and vice versa. Lower clock rates equals lower performance, but lower power does not always equal lower performance.

-

jlwtech I just got done reading 3 other reviews for this card, and each of those reviews had this card slightly above the 295X2 in their gaming benchmarks (despite the Devil's boost clock being slightly lower).

That seems a little odd.

The games, game settings, drivers, average clock rates, and BIOS mode used for the benchmark figures/comparison are not listed in this review (unusual for Tom's). That information would be very helpful.

I suspect that the 295X2 was maintaining a higher average clock rate in this comparison (higher than 2%, anyways).

PowerColor almost certainly made sure that this card meets or beats the performance of the 295X2 before sending it off for review.

-

Menigmand It's interesting to see this kind of enormous, powerful, noisy card being developed in a market where most games are designed to fit the limits of console hardware.

-

mapesdhs Why have they called this a 290X? Rather confusing, it should be called a 295X.

Having it listed in the 290X section on seller sites is dumb. Also, it's not an 8GB card, it's a 2x4GB card. I really wish tech sites would stop GPU vendors from getting away with this inaccurate product spec PR. Call it for what it is, 2x4GB, and if vendors don't like it, say tough cookies. The user will never see '8GB' so the phrase should not be used as if they could (though PowerColor seems happy to have such misleading info on its product page). I'm assuming you agree with this Igor, because the table on pp. 11 does refer to the Devil 13 as a 2x4GB... ;)

Btw, checking a typical seller site here (UK), the cheapest 290X is 1040MHz core, so given the Devil 13 uses 3 slots anyway, IMO two factory oc'd 290Xs make more sense, and would save more than 300 UKP.

Ian.

PS. The typo Mac266 mentioned is still present.

-

jlwtech Why did the person who wrote this article focus primarily on power consumption and efficiency?

This review has 4 pages of power consumption/efficiency data, with some impressively detailed information. But, it only has 2 pages of actual performance data, with almost no details at all.

Who wouldn't want to see this card overclocked to a ridiculous extent, with plumes of smoke coming off of it, and the only power consumption figures showing that it's consuming more power than any other card ever made?

(I had to edit this comment. That first revision was a little crazy.)

-

bemused_fred 13766675 said: The primary focus of this review is absurd!

Why did the person who wrote this article focus primarily on power consumption and efficiency?

This review has 4 pages of power consumption/efficiency data, with some impressively detailed information. But, it only has 2 pages of actual performance data, with almost no details at all.

The performance is so close to the performance of the liquid-cooled R9-295X that it would basically be a repeat of that review. If you want an idea of its performance, just re-read that review and maybe reduce each frame rate by 3%-ish.

OT: 60 dB? Into the trash it goes. I don't care how expensive a card is, if it's so loud that I can't have a goddamn normal conversation near my computer, it's going in the skip. I don't want to surround myself in an anti-social bubble of noise-induced hearing loss every time I want to game.