ARM Cortex-A72 Architecture Deep Dive

ARM's Cortex-A72 CPU adds power and performance optimizations to the previous A57 design. Here's an in-depth look at the changes to each stage in the pipeline, from better branch prediction to next-gen execution units.

Introduction

ARM announced the Cortex-A72, the high-end successor to the Cortex-A57, near the beginning of 2015. For more than a year now, SoC vendors have been working on integrating the new CPU core into their products. Now that mobile devices using the A72 are imminent, it’s a good time to discuss what makes ARM’s flagship CPU tick.

With the A57, ARM looked to expand the market for its CPUs beyond mobile devices and into the low-power server market. Using a single CPU architecture for both smartphones and servers sounds unreasonable, but according to ARM’s Mike Filippo, lead architect for the A72, high-end mobile workloads put a lot of pressure on caches, branch prediction, and the translation lookaside buffer (TLB), all of which also matter for server workloads. Judging by its power consumption, the A57 seemed skewed toward server applications; the A72 takes a more balanced approach and looks to be a better fit for mobile.

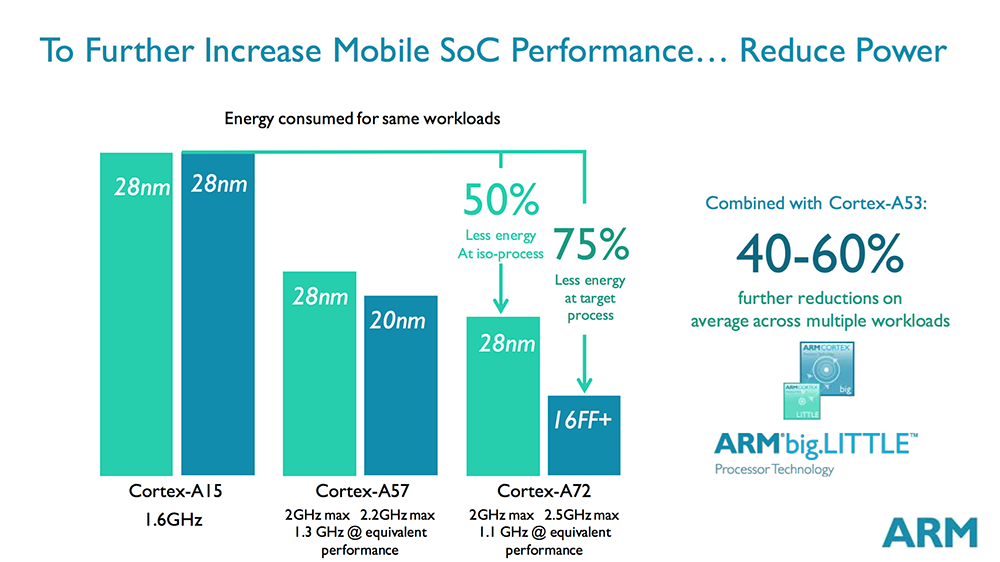

The Cortex-A72 is an evolution of the Cortex-A57, and the baseline architecture is very similar. However, ARM tweaked the entire pipeline for better power and performance. Perhaps the A57’s biggest weakness was its relatively high power consumption, especially on the 20nm node. This severely limited its sustained performance in mobile devices, relegating it to short, bursty workloads and forcing SoCs to fall back on the lower-performing Cortex-A53 cores for extended use.

ARM looks to correct this issue with the A72, going back and optimizing nearly every one of the A57’s logic blocks to reduce power consumption. For example, ARM realized a 35-40% reduction in dynamic power for the decoder stage, and by using an early IC tag lookup, the A72’s 3-way L1 instruction and 2-way L1 data caches also use less power, close to what direct-mapped caches would use. According to ARM, all of the changes made to the A72 together cut energy use by about 15% compared to the A57 when both cores run the same workload at the same frequency on the same 28nm process. The reduction is even larger on a modern FinFET process such as TSMC’s 16nm FinFET+, where, according to ARM, an A72 core stays within a 750mW power envelope at 2.5GHz.
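ARM has not published how its early tag lookup works internally, so the following is only a toy Python model of the general idea, built on stated assumptions: a 48KB, 3-way cache with 64-byte lines (loosely resembling the A72’s L1 instruction cache), LRU replacement, and "data-array reads per access" as a crude stand-in for dynamic power. A conventional set-associative cache reads every way’s data array in parallel with the tag compare and discards the wrong ways; checking the tags first means at most one data way is read, which is why the energy can approach that of a direct-mapped cache. All class and function names here are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCache:
    """Toy set-associative cache that counts data-array reads.

    Hypothetical illustration only: the geometry, the LRU policy and
    "data-array reads" as a power proxy are assumptions, not ARM's design.
    Refills and tag-array energy are not counted.
    """
    size_bytes: int
    ways: int
    line_bytes: int = 64
    early_tag_lookup: bool = False
    accesses: int = field(default=0, init=False)
    data_reads: int = field(default=0, init=False)

    def __post_init__(self) -> None:
        self.num_sets = self.size_bytes // (self.line_bytes * self.ways)
        # One list of resident tags per set, least recently used first.
        self.sets = [[] for _ in range(self.num_sets)]

    def access(self, addr: int) -> bool:
        line = addr // self.line_bytes
        index = line % self.num_sets
        tag = line // self.num_sets
        resident = self.sets[index]
        hit = tag in resident
        self.accesses += 1

        if self.early_tag_lookup:
            # Tags are checked first, so at most one data way is read (none on a miss).
            self.data_reads += 1 if hit else 0
        else:
            # Parallel lookup: every way's data array is read while tags are compared.
            self.data_reads += self.ways

        # Update LRU order: move a hit to the MRU end, or insert on a miss.
        if hit:
            resident.remove(tag)
        elif len(resident) == self.ways:
            resident.pop(0)  # evict the least recently used tag
        resident.append(tag)
        return hit


def fetch_stream(code_bytes: int, fetch_bytes: int, passes: int):
    """Sequential instruction fetches over a loop body, repeated `passes` times."""
    for _ in range(passes):
        yield from range(0, code_bytes, fetch_bytes)


def reads_per_access(cache: ToyCache) -> float:
    # Hypothetical workload: a 32KB loop body fetched 16 bytes at a time, 4 times.
    for addr in fetch_stream(code_bytes=32 * 1024, fetch_bytes=16, passes=4):
        cache.access(addr)
    return cache.data_reads / cache.accesses


parallel = reads_per_access(ToyCache(48 * 1024, ways=3))
early = reads_per_access(ToyCache(48 * 1024, ways=3, early_tag_lookup=True))
direct = reads_per_access(ToyCache(48 * 1024, ways=1))
print(f"3-way, parallel lookup : {parallel:.4f} data reads/access")
print(f"3-way, early tag lookup: {early:.4f} data reads/access")
print(f"direct-mapped          : {direct:.4f} data reads/access")
```

On this looping fetch stream the parallel 3-way lookup performs 3 data reads per fetch, the direct-mapped cache 1, and the tag-first 3-way cache 0.9375, because it skips the data read entirely on the 512 cold misses. The price of the serial arrangement is that the data read must wait for the tag compare, so a real design has to start the tag check early enough to hide that latency; this is a general property of the technique, not a documented A72 detail.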

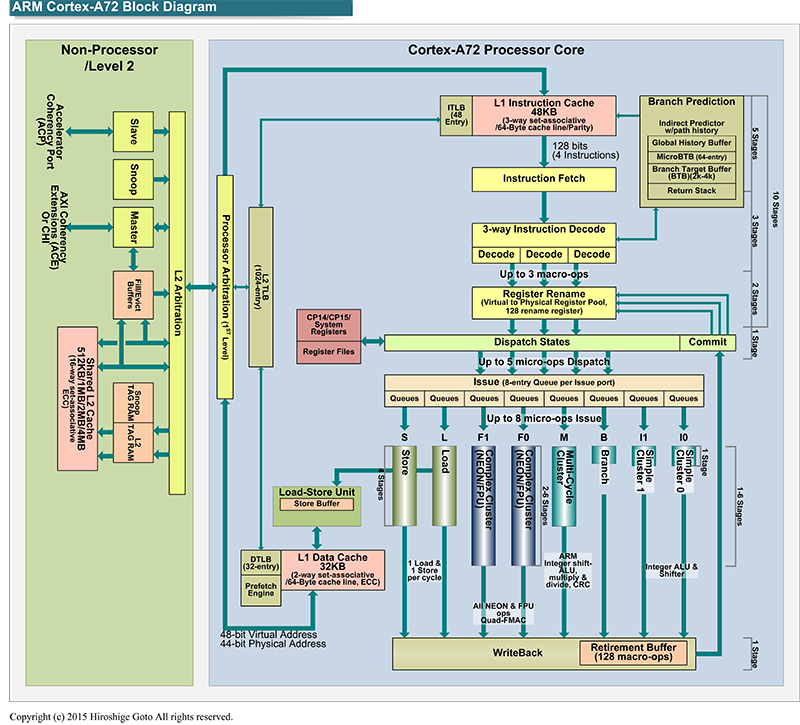

[Image Source: Hiroshige Goto PC Watch]

Comments

Aspiring techie: I wonder what would happen if these guys dipped their toe into the desktop CPU market.

utroz, quoting Aspiring techie: "I wonder what would happen if these guys dipped their toe into the desktop CPU market."

Well, there's no current Windows support, so you would need to run Linux or another ARMv8-compatible OS. For people who just watch Netflix, check Facebook and other web pages, and type up a few papers for school or work, an ARM CPU would have plenty of performance.

InvalidError, quoting Aspiring techie: "I wonder what would happen if these guys dipped their toe into the desktop CPU market."

Not much.

Changing instruction set does not magically free the architecture from process limitations nor remove bottlenecks from software architecture. If ARM designed a CPU core specifically for desktop, it would hit most of the same performance scaling bottlenecks x86 has. You would likely end up with a 50W ARM chip being roughly even with a 50W Intel chip, the main difference between the two (aside from the ISA) being that the ARM chip is $100 while the Intel chip is $400.

somebodyspecial: And I think that's his point... the PRICE, and how much of the public at large such a machine could reach. Myself, I can't wait until they put out a full desktop chip with a heatsink/fan, a big PSU, an HDD or SSD, 16-32GB of memory, etc. (hopefully with an optional slot for a discrete GPU when desired). As games amp up on ARM, you'd most likely only miss WINDOWS/x86 if you use pro apps. Unreal 4, Unity 5, etc. will provide nice graphics for games on the ARM side (most engines port easily today and will get even better with Vulkan coming), so only pro apps would be left out for years, and some of the big ones (Adobe, etc.) might put out full apps soon anyway. Take off $200-300 for the CPU and $100 for Windows, and I'm guessing an ARM desktop could do quite a bit of damage to WINTEL.

We see people already opting for Chromebooks, tablets, etc. as PCs. Knock a chunk off desktop prices and you'll gain some users and push devs past mobile on ARM. I'm hoping NV builds such a box at some point (just a much bigger Shield TV box, really), but with multiple OSes (SteamOS, Linux, and Android) or at least a way to do it yourself. That would be a pretty versatile box ;) It isn't so much about whether they BEAT Intel as it is about dropping the price of PCs everywhere. If ARM's side wants to grow much more they have to go to desktops. Surely everyone wants a chunk of Intel's ~13B a year.

viewtyjoe, quoting somebodyspecial: "If ARM's side wants to grow much more they have to go to desktops. Surely everyone wants a chunk of Intel's ~13B a year."

ARM makes their money by licensing out their designs to other companies, which manufacture the actual chips. The only company I'm aware of with the resources and ARM license to theoretically make something like this happen is AMD, and their use of ARM is directed more toward the server sector.

TechyInAZ, quoting Aspiring techie: "I wonder what would happen if these guys dipped their toe into the desktop CPU market."

I doubt that would happen; having both ARM and x86 on the desktop platform is just going to cause frustration.

pug_s, quoting viewtyjoe and somebodyspecial: "If ARM's side wants to grow much more they have to go to desktops. Surely everyone wants a chunk of Intel's ~13B a year."

It is possible to make a hardware ARM chip that rivals Intel. The only problem is software as there is no software that would take advantage of it. Maybe in the distant future Android and its apps will morph into a desktop OS that rivals Microsoft's, but not yet. Microsoft's closest adaptation to ARM SoCs is the Windows 10 developer edition running on the Raspberry Pi 2. All software and games have to be made ARM-compatible. Even so, Intel's main focus is now on low-power and mobile chips that compete with ARM itself. Who knows, maybe by then AMD will get its act together and compete with Intel in the low-power space once it has access to 14-16nm technologies.

MichaelWest: Regarding the discussion of when someone is going to provide ARM-type CPUs for desktops, for ARM gaming etc., we already pretty much have the beginning of this with the many media-streaming TV boxes on the market. They mainly run Android, which is not ideal for desktop applications yet, and it could take many years for this to improve. Hardware-wise, they are more than fast enough for all the current ARM Android games, and there is room for them to get even faster. They don't face the same power-usage limitations mobile devices face. They may be targeted at TVs with remotes, but they work just as well with HDMI monitors, keyboards, mice, and game controllers. If all you want is a simple, cheap desktop for email/internet and ARM gaming, they already fit the bill.

cbxbiker61, quoting pug_s: "It is possible to make a hardware ARM chip that rivals Intel. The only problem is software as there is no software that would take advantage of it."

That is only true when you define "software" being "Windows binaries".

More and more people every day are waking up to the fact that "software" is really source code, which can be compiled on any architecture for which there is a compiler. You just have to use an open platform with an open compiler, i.e. Linux/BSD.

bit_user, quoting InvalidError: "Changing instruction set does not magically free the architecture from process limitations nor remove bottlenecks from software architecture. If ARM designed a CPU core specifically for desktop, it would hit most of the same performance scaling bottlenecks x86 has. You would likely end up with a 50W ARM chip being roughly even with a 50W Intel chip..."

If that were true, Intel could've made an Atom that's at least as efficient as competing ARM cores. But ISA does actually count for something. x86 is significantly harder to decode, and occupies more space in I-caches.

It would be interesting if someone designed an ARMv8 core for optimal single-thread performance. I'm pretty sure it could provide superior performance at the same power, and use less power at the same performance, compared with Skylake (assuming similar design resources and process node as Intel). But this is a tall order, and there's not yet a big enough market. Maybe in 5 years, once ARM has grabbed a significant chunk of server market share, there'll be enough interest in building workstation-oriented ARM cores.
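bit_user's point about decode can be illustrated with a toy Python sketch. AArch64 instructions are a fixed 4 bytes, so every instruction's start offset is known up front; on a variable-length ISA such as x86 (1 to 15 bytes per instruction), the start of the next instruction is only known once the current one's length has been worked out. The length rule in `toy_length` is a hypothetical stand-in, not real x86 encoding (which depends on prefixes, opcode, ModRM and other fields), and real x86 front ends use techniques such as predecoding to soften the problem.

```python
def fixed_width_boundaries(code: bytes, width: int = 4) -> list[int]:
    # Fixed-width ISA (e.g. AArch64, 4 bytes): every start offset is known
    # up front, so separate decoders could each start on their own slot.
    return list(range(0, len(code) - width + 1, width))


def toy_length(first_byte: int) -> int:
    # Hypothetical variable-length rule (NOT real x86): the low nibble of
    # the first byte gives the instruction length, with a minimum of 1 byte.
    return max(1, first_byte & 0x0F)


def variable_width_boundaries(code: bytes) -> list[int]:
    # The start of instruction N+1 is only known after instruction N's
    # length has been decoded, so this walk is inherently sequential.
    starts, pos = [], 0
    while pos < len(code):
        starts.append(pos)
        pos += toy_length(code[pos])
    return starts


stream = bytes([0x03, 0x00, 0x00, 0x02, 0x00, 0x01, 0x04, 0x00, 0x00, 0x00])
print(fixed_width_boundaries(bytes(16)))  # [0, 4, 8, 12]
print(variable_width_boundaries(stream))  # [0, 3, 5, 6]
```

The fixed-width version is a simple stride that parallel decoders could each index into, while the variable-width version is a loop in which every step depends on the previous one; that serial dependency is one reason wide x86 decode is considered harder to build.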