ATI Enters The X1000 Promised Land

Memory Mastery

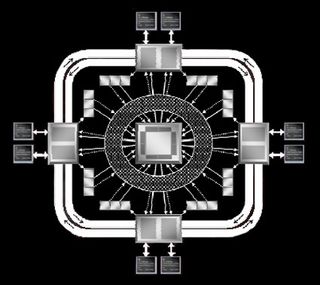

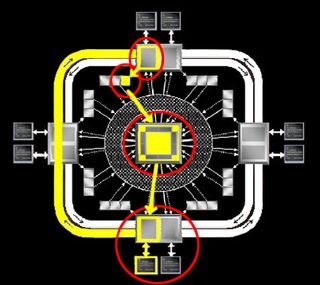

The other major overhaul is the memory architecture. As if support for up to 512 simultaneous threads weren't enough, ATI moved the memory transportation hub from the center of the chip out to its edges. This new superhighway, which ATI calls a "ring bus," keeps traffic from congesting "downtown," where all of the work is going on. Why add more clutter to an already sophisticated and complex infrastructure?

Savvy microprocessor architects will point out that a memory read now takes longer to complete than it did before. This is true, and ATI does not deny that it has effectively added latency to the memory access cycle.

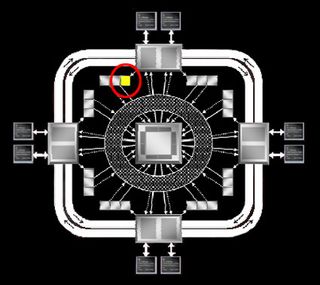

Here is how a read access proceeds. First, a client sends a request for data stored in memory.

The request is received by the memory controller.

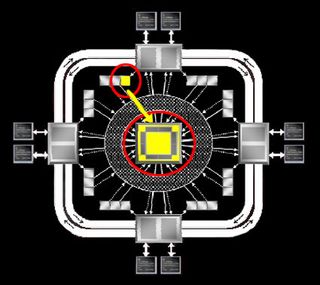

The memory controller then sends a command to the correct memory module.

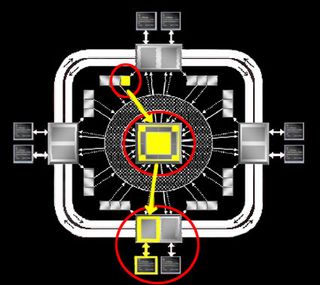

Finally, the read is performed and the data is sent to the local (closest) ring stop. The data then travels along the ring to the stop closest to the requesting client, where the bus drops off its passenger and the cycle is complete.
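The read path above can be modeled in a few lines, which also makes the added latency visible as ring hops. This is a minimal sketch, not ATI's design: the number of ring stops, the interleave granularity, the assignment of two channels per stop, and the names `ReadRequest`, `ring_hops` and `service_read` are all hypothetical, and data is assumed to be able to travel in either direction around the ring.

```python
from dataclasses import dataclass

# Hypothetical ring layout: these numbers are illustrative, not ATI's specifications.
NUM_STOPS = 4          # ring stops numbered 0..NUM_STOPS-1
NUM_CHANNELS = 8       # memory channels
INTERLEAVE_BYTES = 32  # assumed address interleave granularity

# Assumed attachment: two memory channels hang off each ring stop.
CHANNEL_STOP = {ch: ch // 2 for ch in range(NUM_CHANNELS)}


@dataclass
class ReadRequest:
    client: str       # name of the requesting unit
    client_stop: int  # ring stop closest to that client
    address: int      # byte address to read


def ring_hops(src: int, dst: int, num_stops: int = NUM_STOPS) -> int:
    """Shortest hop count between two stops, assuming data may travel in either direction."""
    forward = (dst - src) % num_stops
    return min(forward, num_stops - forward)


def service_read(req: ReadRequest) -> tuple[list[str], int]:
    """Walk one read through the request, command and delivery steps; return (trace, ring hops)."""
    trace = []
    # 1. The client sends the request to the memory controller.
    trace.append(f"{req.client} -> memory controller: read {req.address:#x}")
    # 2. The controller picks the channel that owns the address and issues the command.
    channel = (req.address // INTERLEAVE_BYTES) % NUM_CHANNELS
    trace.append(f"memory controller -> channel {channel}")
    # 3. The data goes onto the channel's local ring stop and rides the ring to the client's stop.
    start = CHANNEL_STOP[channel]
    hops = ring_hops(start, req.client_stop)
    trace.append(f"stop {start} -> stop {req.client_stop} ({hops} hops) -> {req.client}")
    return trace, hops


trace, hops = service_read(ReadRequest("texture unit", client_stop=3, address=0x40))
for step in trace:
    print(step)
```

Each hop along the ring is time a centralized crossbar would not spend, which is the latency cost described earlier; the payoff is that the wiring stays at the edge of the chip instead of crowding the core.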

A traditional architecture would suffer from this added latency, but ultra-threading effectively eliminates the penalty within only four threads. When a thread issues a memory access, it is put on hold while the processor handles four other threads; by the time it has finished with those, the requested data has arrived. The key to this structure is the coordination among the pixel dispatch processor, the memory controller and all of the other linked components. Ultra-threading coupled with the ring bus is a significant advance for memory access.
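The latency-hiding argument can be checked with a toy scheduler. This is a minimal sketch, not ATI's dispatch logic: each hypothetical thread runs a fixed burst of ALU work, then issues a memory read and is parked until the data returns, while a round-robin scheduler runs whichever thread is ready. The function name `alu_utilization`, its parameters and all cycle counts are illustrative assumptions.

```python
def alu_utilization(num_threads: int, compute_cycles: int, mem_latency: int,
                    total_cycles: int = 10_000) -> float:
    """Fraction of cycles the ALU is busy when threads waiting on memory are parked.

    Assumed model: each thread runs `compute_cycles` of ALU work, then issues a read
    that completes `mem_latency` cycles later; a round-robin scheduler picks the next
    ready thread, and the ALU stalls for a cycle when no thread is ready.
    """
    if num_threads < 1 or compute_cycles < 1:
        raise ValueError("need at least one thread and one compute cycle per burst")
    ready_at = [0] * num_threads  # cycle at which each thread may run again
    cycle = busy = 0
    nxt = 0  # round-robin pointer
    while cycle < total_cycles:
        for i in range(num_threads):
            t = (nxt + i) % num_threads
            if ready_at[t] <= cycle:
                break
        else:
            cycle += 1  # every thread is waiting on memory: the ALU stalls
            continue
        run = min(compute_cycles, total_cycles - cycle)
        cycle += run
        busy += run
        ready_at[t] = cycle + mem_latency  # the thread issues its read and is parked
        nxt = (t + 1) % num_threads
    return busy / total_cycles


# One thread plus four others, with a read latency four times the compute burst.
print(alu_utilization(5, compute_cycles=4, mem_latency=16))
# A single thread has nothing to switch to while it waits.
print(alu_utilization(1, compute_cycles=4, mem_latency=16))
```

In this model the latency is fully hidden whenever the four other threads supply enough work to cover it, that is, when 4 × `compute_cycles` ≥ `mem_latency`; with a single thread the ALU spends most of its time idle.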


There are two other advances in the memory architecture. The first is the channel design. The X850 series used four 64-bit channels; the X1000 uses eight 32-bit channels. The total width is the same 256 bits, but the finer split allows more independent data transfers at once and gives the memory controller much better control over the channels.
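A simple counting model shows why narrower channels help with many small, independent requests. This is a minimal sketch under stated assumptions: each request is small enough that one 32-bit channel can serve it in a single round (so a 64-bit channel's extra width goes unused), addresses are interleaved across channels at a hypothetical 32-byte granularity, and `rounds_needed` is an illustrative name rather than anything from ATI.

```python
from collections import Counter

# Hypothetical interleave granularity; the real X1000 address mapping is not described here.
INTERLEAVE_BYTES = 32


def rounds_needed(addresses, num_channels, interleave_bytes=INTERLEAVE_BYTES):
    """Rounds needed to serve a batch of independent reads, assuming each channel
    serves one request per round and different channels work in parallel."""
    per_channel = Counter((addr // interleave_bytes) % num_channels for addr in addresses)
    return max(per_channel.values(), default=0)


# Both layouts have the same total width: 4 x 64 = 8 x 32 = 256 bits.
assert 4 * 64 == 8 * 32 == 256

# Eight small reads scattered across consecutive 32-byte blocks.
batch = list(range(0, 256, 32))
x850_rounds = rounds_needed(batch, num_channels=4)   # four 64-bit channels
x1000_rounds = rounds_needed(batch, num_channels=8)  # eight 32-bit channels
print(x850_rounds, x1000_rounds)  # 2 1
```

Under these assumptions the eight-channel layout serves the batch in one round instead of two; for large sequential transfers the two layouts would move the same amount of data per cycle.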

The second is the cache design. Previous caches were direct mapped: each memory address could be stored in only one cache line. This made lookups simple, but under heavy traffic, addresses competing for the same line kept evicting each other and accesses stalled. ATI now uses a fully associative cache, in which any line can hold any address. This requires more management logic, but more accesses can be served from the cache, and bandwidth requirements decrease.
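The difference between the two designs shows up clearly on an access pattern that makes two blocks compete for the same direct-mapped line. This is a minimal sketch, not ATI's cache: block numbers are assumed to be addresses already divided by the line size, the fully associative version uses least-recently-used (LRU) replacement as an assumption since ATI's policy isn't described, and the function names are illustrative.

```python
from collections import OrderedDict


def direct_mapped_misses(blocks, num_lines):
    """Count misses when each block may live in exactly one line (block % num_lines)."""
    lines = [None] * num_lines
    misses = 0
    for b in blocks:
        idx = b % num_lines
        if lines[idx] != b:
            misses += 1
            lines[idx] = b  # evict whatever occupied this line
    return misses


def fully_associative_misses(blocks, num_lines):
    """Count misses when any block may occupy any line, evicting the LRU block when full."""
    cache = OrderedDict()  # block numbers ordered from least to most recently used
    misses = 0
    for b in blocks:
        if b in cache:
            cache.move_to_end(b)  # hit: mark as most recently used
        else:
            misses += 1
            if len(cache) >= num_lines:
                cache.popitem(last=False)  # evict the least recently used block
            cache[b] = True
    return misses


pattern = [0, 4] * 8  # blocks 0 and 4 both map to line 0 of a 4-line direct-mapped cache
print(direct_mapped_misses(pattern, 4), fully_associative_misses(pattern, 4))  # 16 2
```

The direct-mapped cache misses on every access even though three of its four lines sit empty, while the fully associative cache misses only on the first touch of each block. The cost is the "managerial oversight": in hardware, a fully associative lookup must compare the requested address against every line's tag rather than just one.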
