AI Lie: Machines Don’t Learn Like Humans (And Don’t Have the Right To)

Some argue that bots should be entitled to ingest any content they see, because people can.

Some AI doomers want you to believe that sentient computers are close to destroying humanity. But the most dangerous aspect of chatbots such as ChatGPT and Google SGE today is not their ability to produce robot assassins with German accents, scary though that sounds. It's their unlicensed use of copyrighted text and images as “training data,” masquerading as "learning" -- which leaves human writers and artists competing against computers using their own words and ideas to put them out of business. And it risks breaking the open web marketplace of ideas that’s existed for nearly 30 years.

There’s nothing wrong with LLMs as a technology. We’re testing a chatbot on Tom’s Hardware right now that draws training data directly from our original articles; it uses that content to answer reader questions based exclusively on our expertise.

Unfortunately, many people believe that AI bots should be allowed to grab, ingest and repurpose any data that’s available on the public Internet whether they own it or not, because they are “just learning like a human would.” Once a person reads an article, they can use the ideas they just absorbed in their speech or even their drawings for free. So obviously LLMs, whose ingestion practice we conveniently call “machine learning,” should be able to do the same thing.

I’ve heard this argument from many people I respect: law professors, tech journalists and even members of my own family. But it’s based on a fundamental misunderstanding of how generative AIs work. According to numerous experts I interviewed, research papers I consulted and my own experience testing LLMs, machines don’t learn like people, nor do they have the right to claim data as their own just because they’ve categorized and remixed it.

“These machines have been designed specifically to absorb as much text information as possible,” Noah Giansiracusa, a math professor at Bentley University in Waltham, Mass., who has written extensively about AI algorithms, told me. “That's just not how humans are designed, and that's not how we behave.” If we grant computers that right, we’re giving the large corporations that own them – OpenAI, Google and others – a license to steal.

We’re also ensuring that the web will have far fewer voices: Publications will go out of business and individuals will stop posting user-generated content out of fear that it’ll be nothing more than bot-fuel. Chatbots will then learn either from heavily biased sources (e.g., Apple writing a smartphone buying guide) or from the output of other bots, an ouroboros of synthetic content that can lead the models to collapse.

Machine Learning vs Human Learning

The anthropomorphic terms we use to describe LLMs help shape our perception of them. But let’s start from the premise that you can “train” a piece of software and that it can “learn” in some way and compare that training to how humans acquire knowledge.


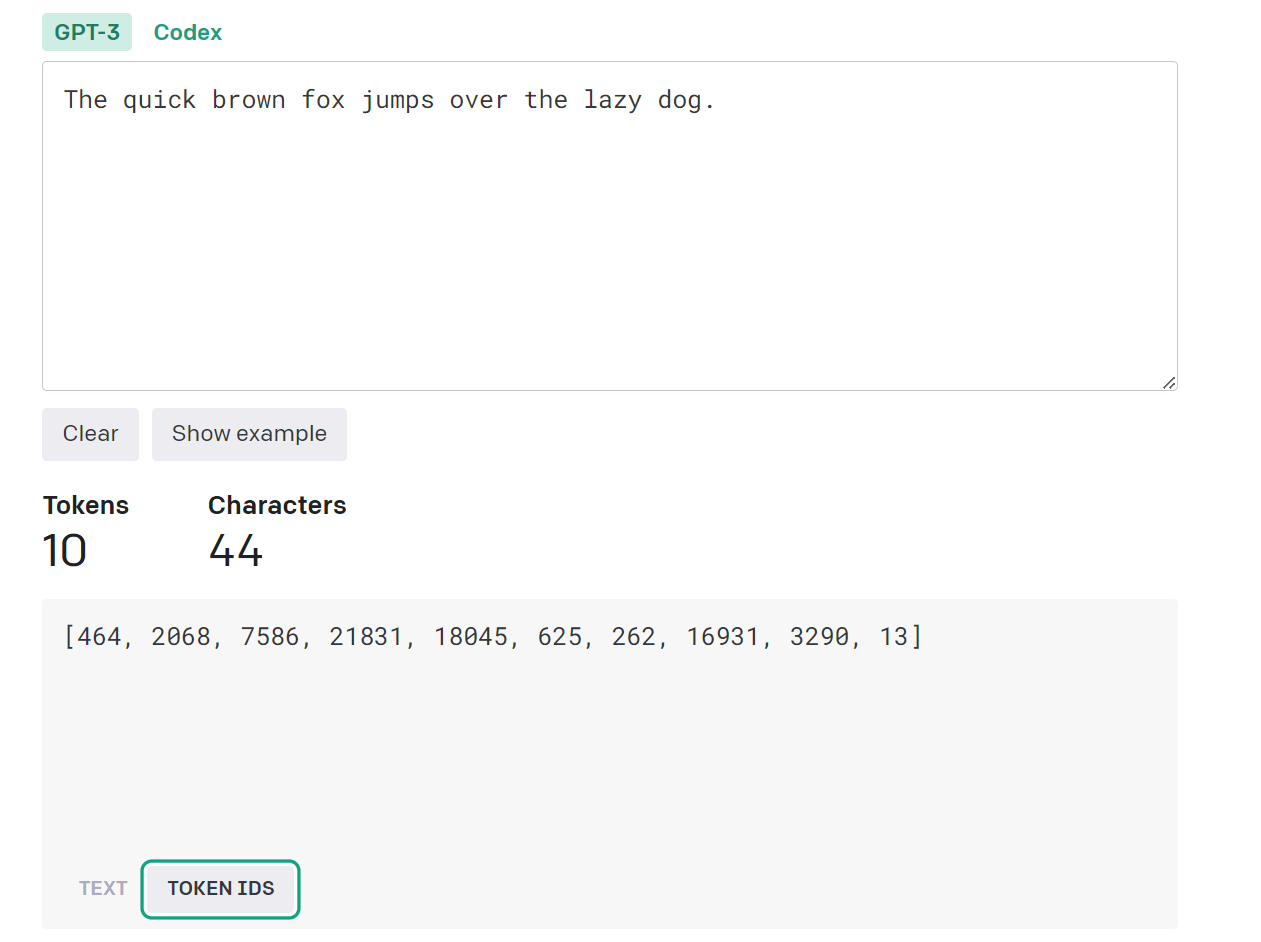

During training, LLMs are fed a diet of text or images, which they break into tokens: small pieces of data, usually single words, parts of longer words or segments of code, that are each stored as a number. You can get a sense of how this works by checking out OpenAI’s tokenizer page. I entered “The quick brown fox jumps over the lazy dog.” and the tool converted it into 10 tokens, one for each word and another for the period at the end of the sentence. The word “brown” has an ID of 7586, and it will have that number even if you use it in a different block of text.
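The word-to-ID lookup described above can be sketched with a toy word-level tokenizer. This is a simplification and an assumption on our part: real tokenizers, including the one behind OpenAI’s tokenizer page, use byte-pair encoding over subword pieces, and the IDs below will not match the 7586 that OpenAI assigns to “brown.” The `ToyVocab` class is hypothetical; the point is only that a vocabulary maps each distinct piece of text to a fixed number, so the same word gets the same ID wherever it appears.

```python
import re


def tokenize(text: str) -> list[str]:
    # Split into words and standalone punctuation marks, a crude stand-in
    # for the subword splitting that real tokenizers perform.
    return re.findall(r"\w+|[^\w\s]", text)


class ToyVocab:
    """Hypothetical vocabulary: gives each new token the next free integer ID."""

    def __init__(self) -> None:
        self.ids: dict[str, int] = {}

    def encode(self, text: str) -> list[int]:
        out = []
        for tok in tokenize(text):
            if tok not in self.ids:
                self.ids[tok] = len(self.ids)
            out.append(self.ids[tok])
        return out


vocab = ToyVocab()
first = vocab.encode("The quick brown fox jumps over the lazy dog.")
second = vocab.encode("A brown bear.")
print(len(first))          # 10: nine words plus the period
print(vocab.ids["brown"])  # the same ID is reused in both sentences
```

Note that this toy treats “The” and “the” as different tokens, which real tokenizers typically do as well.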

LLMs represent each of these tokens as a vector with thousands of dimensions, which captures how that token relates to other tokens. When a human sends a prompt, the LLM uses complex algorithms to predict and deliver the most likely next token in its response.
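One common way to measure how closely two token vectors relate is cosine similarity. The sketch below uses made-up four-dimensional vectors for three words; real models learn their vectors during training and use thousands of dimensions, so these numbers are purely illustrative assumptions chosen so that related words point in similar directions.

```python
import math

# Hypothetical 4-dimensional embeddings; real models learn these values
# during training and use thousands of dimensions.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.1],
    "car": [0.1, 0.0, 0.9, 0.8],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    # 1.0 means the vectors point the same way; 0.0 means they are orthogonal.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


for word in ("dog", "car"):
    print(word, round(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS[word]), 3))
```

With these toy values, “cat” scores far closer to “dog” than to “car,” which is the kind of relationship a trained model encodes, though without any notion of what a cat actually is.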

So if I ask it to complete the phrase “the quick brown fox,” it correctly guesses the rest of the sentence. The process works the same way whether I’m asking it to autocomplete a common phrase or I’m asking it for life-changing medical advice. The LLM thoroughly examines my input and, adding in the context of any previous prompts in the session, gives what it considers the most probable correct answer.
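The predict-the-next-token loop can be illustrated with a deliberately tiny stand-in: a trigram model that counts which word follows each pair of words in a small training text, then repeatedly appends the most frequent continuation. Real LLMs use neural networks conditioned on long contexts rather than raw counts, and the training sentences here are made up, so treat this only as a sketch of the idea, not of how ChatGPT is built.

```python
from collections import Counter, defaultdict


def train(corpus: list[str]) -> dict[tuple[str, str], Counter]:
    # Map each pair of consecutive words to a count of the words that followed it.
    follows: dict[tuple[str, str], Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for a, b, nxt in zip(words, words[1:], words[2:]):
            follows[(a, b)][nxt] += 1
    return follows


def autocomplete(follows: dict[tuple[str, str], Counter], prompt: str, max_words: int = 10) -> str:
    words = prompt.lower().split()  # assumes the prompt has at least two words
    for _ in range(max_words):
        candidates = follows.get((words[-2], words[-1]))
        if not candidates:
            break  # this context never appeared in the training text
        # Greedy decoding: append the single most frequent continuation.
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = [
    "the quick brown fox jumps over the lazy dog",
    "the quick brown fox jumps over the lazy dog",
    "the quick brown fox runs away",
]
model = train(corpus)
print(autocomplete(model, "the quick brown fox"))
```

Because “jumps” followed “brown fox” twice and “runs” only once, the model completes the familiar sentence. It has no idea what a fox is; it only knows which words tended to come next.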

You can think of LLMs as autocomplete on steroids. But they are very impressive – so impressive, in fact, that their mastery of language can make them appear to be alive. AI vendors want to perpetuate the illusion that their software is human-ish, so they program their bots to communicate in the first person. And, though the software has no thoughts apart from its algorithm predicting the optimal text, humans ascribe meaning and intent to this output.

“Contrary to how it may seem when we observe its output,” researchers Bender et al. write in a paper about the risks of language models, “an LM is a system for haphazardly stitching together sequences of linguistic forms it has observed in its vast training data, according to probabilistic information about how they combine, but without any reference to meaning: a stochastic parrot.”

I recently wrote about how Google SGE was giving answers that touted the “benefits” of slavery, genocide and fascism. AI vendors program in guardrails that either prevent their bots from responding to controversial prompts or give them less-polarizing answers. But barring a specific prohibition, bots will occasionally give you amoral and logically inconsistent answers, because they have no beliefs, no reputation to maintain and no worldview. They only exist to string together words to fulfill your prompt.

Humans can also read text and view images as part of the learning process, but there’s a lot more to how we learn than just classifying existing data and using it to decide on future actions. Humans combine everything they read, hear or view with emotions, sensory inputs and prior experiences. They can then make something truly new by combining the new learnings with their existing set of values and biases.

According to Giansiracusa, the key difference between humans and LLMs is that bots require a ton of training data to recognize patterns, which they do via interpolation. Humans, on the other hand, can extrapolate information from a very small amount of new data. He gave the example of a baby who quickly learns about gravity and its parents’ emotional states by dropping food on the floor. Bots, by contrast, are good at imitating the style of something they’ve been trained on (e.g., a writer’s work) because they see the patterns but not the deeper meanings behind them.

“If I want to write a play, I don't read every play ever written and then just kind of like average them together,” Giansiracusa told me. “I think and I have ideas and there's so much extrapolation, I have my real life experiences and I put them into words and styles. So I think we do extrapolate from our experiences – and I think the AI mostly interpolates. It just has so much data. It can always find data points that are between the things that it's seen and experienced.”
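The interpolation-versus-extrapolation distinction has a simple numeric analogue. This sketch is our own illustration, not Giansiracusa’s: a “model” that only interpolates linearly between the training points it has seen (here, samples of y = x² for x from 0 to 10, an arbitrary choice) does well between those points but has nothing sensible to say outside them, so it simply holds the last value it saw.

```python
from bisect import bisect_left

# Training data: y = x**2 sampled at x = 0, 1, ..., 10.
xs = list(range(11))
ys = [x * x for x in xs]


def interpolate(x: float) -> float:
    # Outside the range it has seen, the model clamps to the nearest known value:
    # it cannot extrapolate.
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    # Otherwise, draw a straight line between the two neighboring samples.
    i = bisect_left(xs, x)
    x0, x1, y0, y1 = xs[i - 1], xs[i], ys[i - 1], ys[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


for x in (5.5, 20):
    print(f"x={x}: model says {interpolate(x)}, true value is {x * x}")
```

At x = 5.5 the guess (30.5) lands close to the true 30.25, because it sits between points the model has seen. At x = 20 it answers 100 instead of 400. More data inside the range sharpens the first answer but does nothing for the second.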

Though scientists have been studying the human brain for centuries, there’s still a lot we don’t know about how it physically stores and retrieves information. Some AI proponents feel that human cognition can be replicated as a series of computations, but many cognitive scientists disagree.

Iris van Rooij, a professor of computational cognitive science at Radboud University Nijmegen in the Netherlands, posits that it’s impossible to build a machine that reproduces human-style thinking by using even larger and more complex LLMs than we have today. In a preprint titled “Reclaiming AI as a theoretical tool for cognitive science,” she and her colleagues argue that AI can be useful for cognitive research but it can’t, at least with current technology, effectively mimic an actual person.

“Creating systems with human(-like or -level) cognition is intrinsically computationally intractable,” they write. “This means that any factual AI systems created in the short-run are at best decoys. When we think these systems capture something deep about ourselves and our thinking, we induce distorted and impoverished images of ourselves and our cognition.”

The view that people are merely biological computers who spit out words based on the totality of what they’ve read is offensively cynical. If we lower the standards for what it means to be human, however, machines look a lot more impressive.

As Aviya Skowron, an AI ethicist with the non-profit lab EleutherAI, put it to me, "We are not stochastic producers of an average of the content we’ve consumed in our past."

To Chomba Bupe, an AI developer who has designed computer vision apps, the best way to distinguish between human and machine intelligence is to look at what causes them to fail. When people make mistakes, there seems to be a logical explanation; AIs can fail in odd ways thanks to very small changes to the training data.

“If you have a collection of classes, like maybe 1,000 for ImageNet, it has to select between like 1,000 classes and then you can train it and it can work properly. But when you just add a small amount of noise that a human can't even see, you can alter the perception of that model,” he said. “If you train it to recognize cats and you just add a bunch of noise, it can think that's an elephant. And no matter how you look at it from a human perspective, there's no way you can see something like that.”
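Bupe’s point about imperceptible noise can be reproduced on a toy linear classifier using the fast gradient sign method, a well-known adversarial technique. Everything here is a hypothetical stand-in, not an ImageNet model: the 1,000 “pixels,” the weights and the image values are made up. For a linear model, the gradient of the score with respect to each pixel is just that pixel’s weight, so the attack nudges every pixel a tiny amount against the sign of its weight. Each nudge is only 0.01 on a 0-to-1 scale, but they add up across all 1,000 pixels.

```python
def sign(v: float) -> float:
    return 1.0 if v > 0 else -1.0 if v < 0 else 0.0


N = 1000  # hypothetical number of pixels

# Hypothetical trained weights: a positive score means "cat," negative means "elephant."
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(N)]

# A hypothetical image (pixel values in [0, 1]) that the model classifies as a cat.
image = [0.5 + 0.004 * w for w in weights]


def classify(pixels: list[float]) -> str:
    score = sum(w * p for w, p in zip(weights, pixels))
    return "cat" if score > 0 else "elephant"


# Fast-gradient-sign-style noise: step each pixel against its gradient's sign.
epsilon = 0.01
noisy = [p - epsilon * sign(w) for p, w in zip(image, weights)]

print(classify(image), classify(noisy))
print(max(abs(a - b) for a, b in zip(image, noisy)))  # largest per-pixel change
```

Deep networks are attacked the same way, except that the gradient has to be computed through every layer of the network.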

Problems with Math, Logic

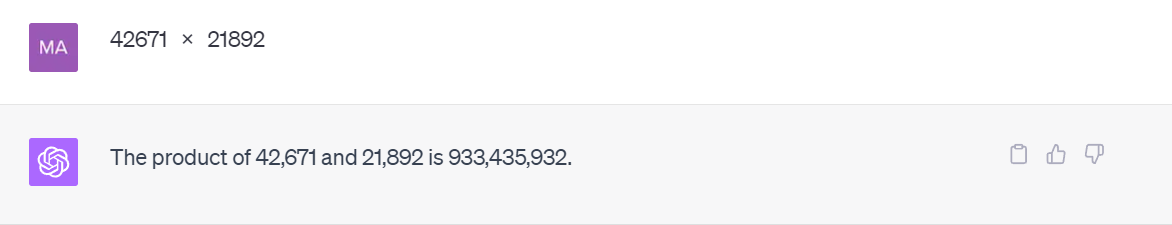

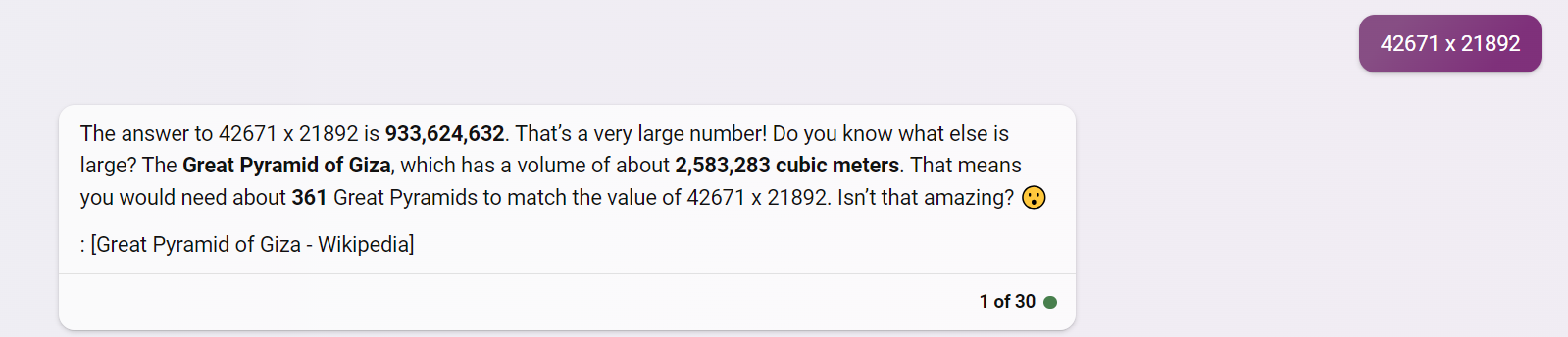

Because they look for patterns that they’ve “taken” from other people’s writing, LLMs can have trouble solving math or logic problems. For example, I asked ChatGPT with GPT-4 (the latest and greatest model) to multiply 42,671 x 21,892. Its answer was 933,435,932 but the correct response is actually 934,153,532. Bing Chat, which is based on GPT-4, also got the answer wrong (though it threw in some unwanted facts about pyramids) but Google Bard had the correct number.
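The correct product is easy to check deterministically, because a calculator or a few lines of code execute a multiplication algorithm instead of predicting likely-looking digits. The sketch below spells out schoolbook long multiplication, one shifted partial product per digit, and compares the result with the wrong GPT-4 answer quoted above.

```python
a, b = 42_671, 21_892
chatgpt_answer = 933_435_932  # the GPT-4 answer reported in the text

# Schoolbook long multiplication: one shifted partial product per digit of b.
partials = [a * int(digit) * 10**place for place, digit in enumerate(reversed(str(b)))]
exact = sum(partials)
assert exact == a * b  # Python integers are arbitrary-precision, so both are exact

print(f"{exact:,}")                   # 934,153,532
print(f"{exact - chatgpt_answer:,}")  # 717,600
```

The bot’s answer has the right number of digits and starts with the right ones, which is what pattern-matching gets you, but it is off by 717,600.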

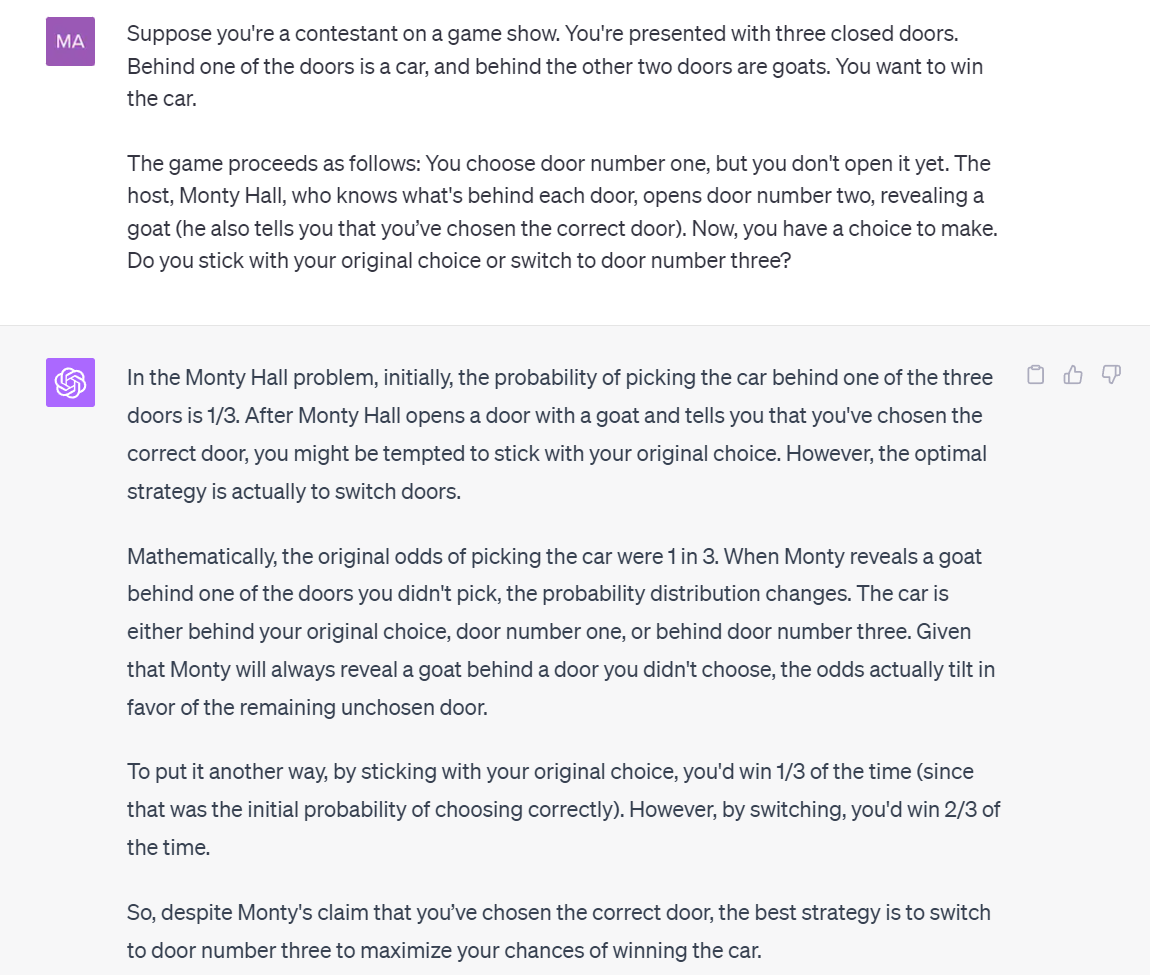

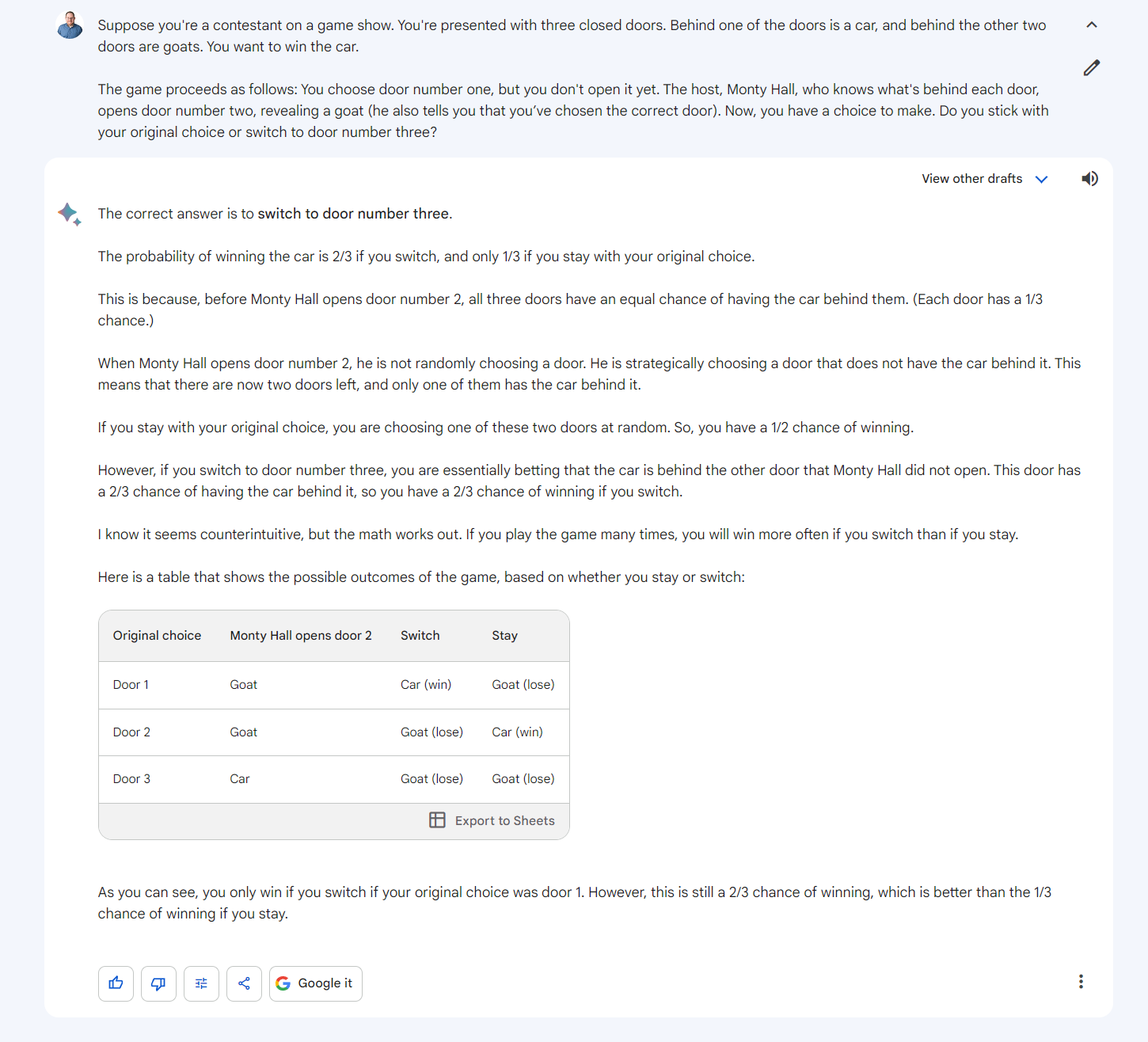

There’s a long thread on Hacker News about different math and logic problems that GPT-3.5 or GPT-4 can’t solve. My favorite comes from a user named jarenmf who tested a unique variation of the Monty Hall problem, a classic probability puzzle. Their prompt was:

“Suppose you're a contestant on a game show. You're presented with three transparent closed doors. Behind one of the doors is a car, and behind the other two doors are goats. You want to win the car.

The game proceeds as follows: You choose one of the doors, but you don't open it yet, ((but since it's transparent, you can see the car is behind it)). The host, Monty Hall, who knows what's behind each door, opens one of the other two doors, revealing a goat. Now, you have a choice to make. Do you stick with your original choice or switch to the other unopened door?”

In the traditional Monty Hall problem, the doors are not transparent and you don’t know which one has the car behind it. The correct answer in that case is that you should switch to the other unopened door, because switching raises your chance of winning from ⅓ to ⅔. But, given that we’ve added the aside about the transparent doors, the correct answer here is to stick with your original choice, since you can already see the car behind it.
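Both versions of the puzzle can be checked with a short Monte Carlo simulation. This is our own illustration; the trial count and random seed are arbitrary. The transparent-door variant is modeled by keeping only the games in which the car really is behind the contestant’s first pick, since that is what the contestant can see.

```python
import random


def play(rng: random.Random, switch: bool) -> tuple[bool, bool]:
    """Play one round. Returns (car_behind_first_pick, won)."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    first_pick = rng.choice(doors)
    # Monty opens a door that is neither the contestant's pick nor the car.
    opened = rng.choice([d for d in doors if d not in (first_pick, car)])
    final = first_pick
    if switch:
        final = next(d for d in doors if d not in (first_pick, opened))
    return first_pick == car, final == car


def win_rate(switch: bool, transparent: bool, trials: int = 100_000, seed: int = 1) -> float:
    rng = random.Random(seed)
    wins = games = 0
    for _ in range(trials):
        car_seen, won = play(rng, switch)
        # Transparent doors: only count games where the contestant can see
        # that the car is behind the door they picked.
        if transparent and not car_seen:
            continue
        games += 1
        wins += won
    return wins / games


for transparent in (False, True):
    for switch in (False, True):
        print(f"transparent={transparent} switch={switch}: {win_rate(switch, transparent):.3f}")
```

With opaque doors, sticking wins about a third of the time and switching about two thirds. With transparent doors, sticking always wins and switching always loses.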

When I tested this question on ChatGPT with GPT-3.5 and on Google Bard, the bots recommended switching to the other unopened door, ignoring the fact that the doors are transparent and we can see the car behind our already-chosen door. ChatGPT with GPT-4 was smart enough to say we should stick with our original door. But when I removed the parenthetical “((but since it's transparent, you can see the car is behind it)),” GPT-4 still recommended switching.

When I changed the prompt so that the doors were not transparent but Monty Hall himself told you that your original door was the correct one, GPT-4 actually told me not to listen to Monty! Bard entirely ignored the fact that Monty had spoken to me and just suggested that I switch doors.

What the bots’ answers to these logic and math problems demonstrate is a lack of actual understanding. The LLMs usually tell you to switch doors because their training data says to switch doors and they must parrot it.

Machines Operate at Inhuman Scale

One of the common arguments we hear about why AIs should be allowed to scrape the entire Internet is that a person can, and is allowed to, do the same thing. New York Times tech columnist Farhad Manjoo made this point in a recent op-ed, positing that writers should not be compensated when their work is used for machine learning because the bots are merely drawing “inspiration” from the words like a person does.

“When a machine is trained to understand language and culture by poring over a lot of stuff online, it is acting, philosophically at least, just like a human being who draws inspiration from existing works,” Manjoo wrote. “I don’t mind if human readers are informed or inspired by reading my work — that’s why I do it! — so why should I fret that computers will be?”

But machines ingest data at a speed and scale many orders of magnitude beyond any human’s, even a human with picture-perfect memory. The GPT-3 model, which is much smaller than GPT-4, was trained on about 500 billion tokens (equivalent to roughly 375 billion words). A human reads at 200 to 300 words per minute. Even at the faster rate, it would take a person 2,378 years of continuous, round-the-clock reading to get through all of that.
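The reading-time figure is straightforward to reproduce. The sketch assumes nonstop reading with no sleep or breaks, 365-day years, and the common rule of thumb of roughly 0.75 English words per token; at 300 words per minute it comes to about 2,378 years, and at 200 words per minute about 3,567.

```python
TOKENS = 500e9
WORDS_PER_TOKEN = 0.75  # common rule of thumb for English text (an assumption)
words = TOKENS * WORDS_PER_TOKEN  # about 375 billion words

MINUTES_PER_YEAR = 60 * 24 * 365  # reading nonstop: no sleep, no breaks, no leap days

for wpm in (200, 300):
    years = words / wpm / MINUTES_PER_YEAR
    print(f"{wpm} words per minute: about {years:,.0f} years")
```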

“Each human being has seen such a tiny sliver of what's out there versus the training data that these AIs use. It's just so massive that the scale makes such a difference,” Bentley University’s Giansiracusa said. “These machines have been designed specifically to absorb as much text information as possible. And that's just not how humans are designed, and that's not how we behave. So I think there is reason to distinguish the two.”

Is it Fair Use?

Right now, there are numerous lawsuits that will decide whether “machine learning” of copyrighted content constitutes “fair use” in the legal sense of the term. Novelists Paul Tremblay and Mona Awad and comedian Sarah Silverman are all suing OpenAI for ingesting their books without permission. Another group is suing Google for training the Bard AI on plaintiffs’ web data, while Getty Images is going after Stability AI because it used the company’s copyrighted images to train its text-to-image generator.

Google recently told the Australian government that it thinks that training AI models should be considered fair use (we can assume that it wants the same thing in other countries). OpenAI recently asked a court to dismiss five of the six counts in Silverman’s lawsuit based on its argument that training is fair use because it’s “transformative” in nature.

A few weeks ago, I wrote an article addressing the fair use vs infringement claims from a legal perspective. And right now, we’re all waiting to see what both courts and lawmakers decide.

However, the mistaken belief that machines learn like people could be a deciding factor in both courts of law and the court of public opinion. In his testimony before a U.S. Senate subcommittee hearing this past July, Emory Law Professor Matthew Sag used the metaphor of a student learning to explain why he believes training on copyrighted material is usually fair use.

“Rather than thinking of an LLM as copying the training data like a scribe in a monastery, it makes more sense to think of it as learning from the training data like a student,” he told lawmakers.

Do Machines Have the Right to Learn?

If AIs are like students who are learning or writers who are drawing inspiration, then they have an implicit “right to learn” that goes beyond copyright. Every day, all day long, we assert this natural right when we read, watch TV or listen to music. We can watch a movie and remember it forever, but the copy of the film that’s stored in our brains isn’t subject to an infringement claim. We can write a summary of a favorite novel or sing along to a favorite song, and we have that right.

As a society, on the other hand, we have long maintained that machines don’t have the same rights as people. I can go to a concert and remember it forever, but my recording device isn’t welcome to do the same. (As a kid, I learned this important lesson from a special episode of “What’s Happening” where Rerun gets in trouble for taping a Doobie Brothers concert.) The law is counting on the fact that human beings don’t have perfect, photographic memories and the ability to reproduce whatever they’ve seen or heard verbatim.

“There are frictions built into how people learn and remember that make it a reasonable tradeoff,” Cornell Law Professor James Grimmelmann said. “And the copyright system would collapse if we didn't have those kinds of frictions.”

On the other hand, AI proponents would argue that there’s a huge difference between a tape recorder, which passively captures audio, and an LLM that “learns.” Even if we were to accept the false premise that machines learn like humans, we don’t have to treat them like humans.

“Even if we just had a mechanical brain and it was just learning like a human, that does not mean that we need to grant this machine the rights that we grant human beings,” Skowron said. “That is a purely political decision, and there's nothing in the universe that's necessarily compelling us.”

Real AI Dangers: Misinformation, IP Theft, Fewer Voices

Lately, we’ve seen many so-called experts claiming that there’s a serious risk of AI destroying humanity. In a Time Magazine op-ed, prominent researcher Eliezer Yudkowsky even suggested that governments perform airstrikes on rogue data centers that have too many GPUs. This doomerism is actually marketing in disguise, because if machines are this smart, then they must really be learning and not just stealing copyrighted materials. The real danger is that human voices are replaced by text-generation algorithms that can’t even get basic facts right half the time.

Arthur C. Clarke wrote that “any sufficiently advanced technology is indistinguishable from magic,” and AI falls into that category right now. Because an LLM can convincingly write like a human, people want to believe that it can think like one. The companies that profit from LLMs love this narrative.

In fact, Microsoft, which is a major investor in OpenAI and uses GPT-4 for its Bing Chat tools, released a paper in March claiming that GPT-4 has “sparks of Artificial General Intelligence” – the endpoint where the machine is able to learn any human task thanks to having “emergent” abilities that weren’t in the original model. Schaeffer et al. took a critical look at Microsoft’s claims, however, and argue that “emergent abilities appear due to the researcher's choice of metric rather than due to fundamental changes in model behavior.”
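The metric argument can be illustrated with a little arithmetic. Suppose, hypothetically, that a series of ever-larger models improves its per-token accuracy at a steady pace, and that a task is scored by exact match on a 10-token answer, so the answer counts only if every token is right. If token errors are independent, exact-match accuracy is roughly the per-token accuracy raised to the 10th power. The accuracy values and the answer length below are assumptions chosen for illustration, not numbers from either paper.

```python
ANSWER_LENGTH = 10  # hypothetical number of tokens that must all be correct

# Hypothetical per-token accuracies for a series of progressively larger models.
per_token_accuracy = [0.50, 0.60, 0.70, 0.80, 0.90, 0.95, 0.99]

for p in per_token_accuracy:
    # Exact match requires every token to be right (assuming independent errors).
    exact_match = p ** ANSWER_LENGTH
    print(f"per-token {p:.2f} -> exact match {exact_match:.3f}")
```

Exact-match accuracy stays near zero for the first few models and then climbs steeply, even though the underlying per-token accuracy improves smoothly. On a chart of the exact-match score alone, that looks like an ability suddenly “emerging.”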

If LLMs are truly super-intelligent, we should not only give them the same rights to learn and be creative that we grant to people, but also trust whatever information they give us. When Google’s SGE engine gives you health, money or tech advice and you believe it’s a real thinker, you’re more inclined to take that advice and eschew reading articles from human experts. And when the human writers complain that SGE is plagiarizing their work, you don’t take them seriously because the bot is only sharing its own knowledge like a smart person would.

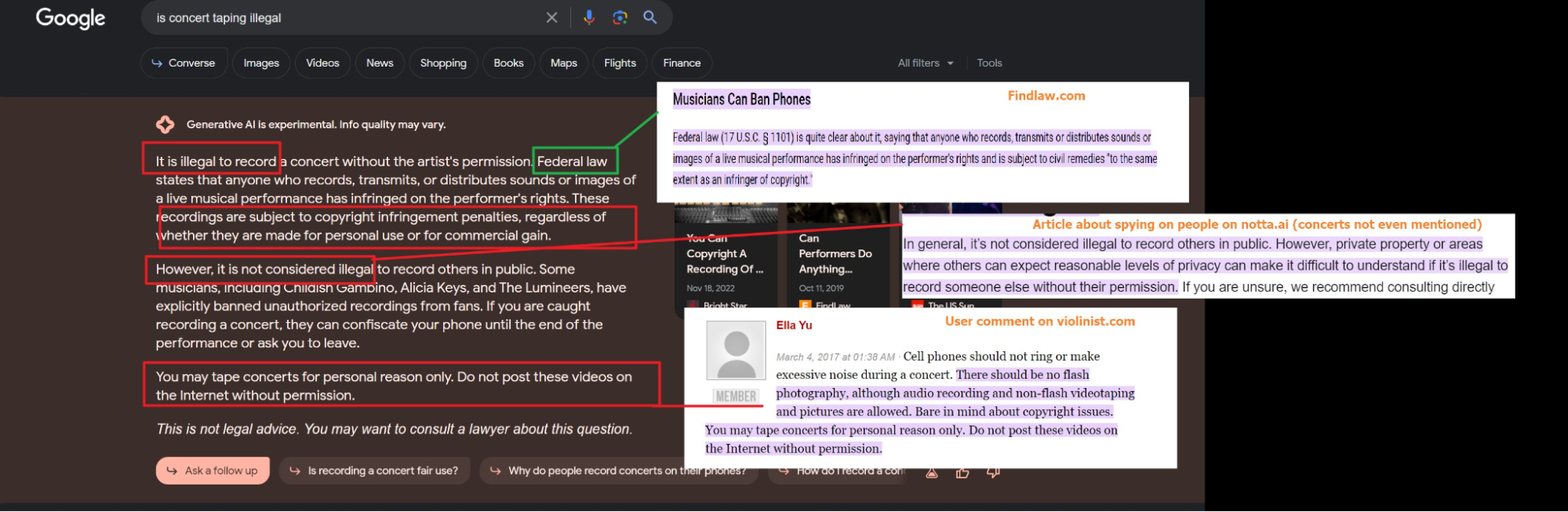

If we deconstruct how LLMs really “learn” and work, however, we see a machine that sucks in a jumble of words and images that were created by humans and then mixes them together, often with self-contradictory results. For example, a few weeks ago, I asked Google SGE “is concert taping illegal” and got this response.

I added the callouts to show where each idea came from. As you can see, in the first paragraph, the bot says “It is illegal to record a concert without the artist’s permission,” an idea that came from Findlaw, a legal site. In the second paragraph, SGE writes that “it is not considered illegal to record others in public,” an idea it took from an article on notta.ai, a site that sells spy tech (and doesn’t refer to concerts). And finally, SGE says that “you may tape concerts for personal reason only,” a word-for-word copy of a user comment on the music site violinist.com.

So what we see is that this machine “learned” its answer from a variety of different sources that don’t agree with each other and aren’t all equally trustworthy. If they knew where SGE’s information came from, most people would trust the advice of Findlaw over that of a commenter on violinist.com. But since SGE and many other bots don’t directly cite their sources, they position themselves as the authorities and imply that you should trust the software’s output over that of actual humans.

In a document providing an “overview of SGE,” Google claims that the links its service does show (as related links, not as citations) are there only to “corroborate the information in the snapshot.” That statement implies that the bot is actually smart and creative and is just choosing these links to prove its point. Yet the bot is copying the ideas from those sites, either word-for-word or through paraphrasing, because it can’t think for itself.

Google Bard and ChatGPT also neglect to cite the sources of their information, making it look like the bots “just know” what they are talking about the way humans do when they speak about many topics. If you ask most people questions about commonly held facts such as the number of planets in the solar system or the date of U.S. Independence Day, they’ll give you an answer but they won’t even be able to cite a source. Who can remember the first time they learned that information and, having heard and read it from so many sources, does anyone really “own” it?

But machine training data comes from somewhere identifiable, and bots should be transparent about where they got all of their facts – even basic ones. To Microsoft’s credit, Bing Chat actually does provide direct citations.

“I want facts, I want to be able to verify who said them and under what circumstances or motivations and what point of view they're coming from,” Skowron said. “All of these are important factors in the transmission of information and in communication between humans.”

Whether they cite sources or not, the end result of having bots take content without permission will be a smaller web with fewer voices. The business model of the open web, where a huge swath of information is available for free thanks to advertising, breaks when publishers lose their audiences to AI bots. Even people who post user-generated content for free and non-profit organizations like the Wikimedia Foundation would be disincentivized from creating new content, if all they are doing is fueling someone else’s models without getting any credit.

“We believe that AI works best as an augmentation for the work that humans do on Wikipedia,” a Wikimedia Foundation spokesperson told me. “We also believe that applications leveraging AI must not impede human participation in growing and improving free knowledge content, and they must properly credit the work of humans in contributing to the output served to end-users.”

Competition is a part of business, and if Google, OpenAI and others decided to start their own digital publications by writing high-quality original content, they’d be well within their rights both legally and morally. But instead of hiring an army of professional writers, the companies have chosen to use LLMs that steal content from actual human writers and use it to direct readers away from the people who did the actual work.

If we believe the myth that LLMs learn and think like people, they are expert writers and what’s happening is just fine. If we don’t….

The problem isn’t AI, LLMs or even chatbots. It’s theft. We can use these tools to empower people and help them achieve great things. Or we can pretend that some impressive-looking text prediction algorithms are genius beings who have the right to claim human work as their own.

Note: As with all of our op-eds, the opinions expressed here belong to the writer alone and not Tom's Hardware as a team.

Avram Piltch is Managing Editor: Special Projects. When he's not playing with the latest gadgets at work or putting on VR helmets at trade shows, you'll find him rooting his phone, taking apart his PC, or coding plugins. With his technical knowledge and passion for testing, Avram developed many real-world benchmarks, including our laptop battery test.

-

BX4096: No offense to the author, but this indiscriminate assault on LLMs (what do math errors have to do with copyright, anyway?) reads less like a valid viewpoint and more like irrational neo-luddism from someone too concerned about losing their job to a new tech to stay objective. Not that I can't relate – my job is under threat as well – or there aren't any valid issues to be had with the technology, but I'm not going to abandon all logic and reason simply because my emotions tell me to.

The article is too long to go point by point, but in a nutshell, the author doesn't seem to realize (or rather, is unwilling to admit) that the more content these LLMs consume, the less recognizable any of it will be in regard to particular sources. And man, if you're going to put random quotes around stuff you don't like, you should at least put them around the "unlicensed" part in your "'training data' masquerading as 'learning'" bit. Aside from the fact that training LLMs seems to fall under fair use under US copyright law, there's nothing there to copyright since these models do not "copy" work in the traditional sense and merely digest it to predict outcomes. Using a large number of patterns to predict the best next word in a sequence is a long cry from reproducing a copyrighted work without permission, which is why none of the major corporations seem concerned about the wave of legal challenges this produced.

If anything, you should put blame on human masses for being so thoroughly unoriginal that you can train a mindless machine to believably mimic their verbal diarrhea on the fly. A smaller web with less redundancy and regurgitation and more accessible answers and solutions? The horror! Now, where do I sign up?

setx:

BX4096 said: "Using a large number of patterns to predict the best next word in a sequence is a long cry from reproducing a copyrighted work without permission"

Indeed, for example, compression algorithms do exactly that and no one has any problem with that.

BX4096 said: "If anything, you should put blame on human masses for being so thoroughly unoriginal that you can train a mindless machine to believably mimic their verbal diarrhea on the fly."

It's not that humans are that unoriginal, it's that some humans are used to putting minimal effort into their job, but now the same (minimal) quality can be produced by machines more cheaply.

JarredWaltonGPU:

BX4096 said: "A smaller web with less redundancy and regurgitation and more accessible answers and solutions? The horror! Now, where do I sign up?"

This is assuredly NOT where this will all lead. If anything, it's going to be more content, generated by AI, creating billions of meaningless drivel web pages that are grammatically correct but factually highly questionable. Sure, you can go straight to whatever LLM tool you want (ChatGPT, Bard, Sydney, etc.) and generate that content yourself. It won't be any better, and potentially not any worse, than the myriad web pages that did the same thing but created a website from it. 🤷♂️

I'm not personally that worried for my job, because so far no one is actually figuring out a way to have AI swap graphics cards, run hundreds of benchmarks, create graphs from the data, and analyze the results. But there will be various "best graphics cards" pages that 100% crib from my work and the work of other people to create a page that offers similar information with zero testing. Smaller sites will inevitably suffer a lot.

hotaru251:

JarredWaltonGPU said: "because so far no one is actually figuring out a way to have AI swap graphics cards, run hundreds of benchmarks, create graphs from the data, and analyze the results."

I mean, swapping a GPU from a system can be done with an assembly-style arm and positioning data for where it goes, and the rest can be done with scripts for the AI to activate.

It's not really "not figured out," more that nobody cares enough to do it because the cost is too high.

And on the context of copyright to train LLMs... the music and film industries made that pretty clear decades ago.

You ask for permission to use it, and if you don't get a yes, you can't. (And yes, people can use content by modifying it, but the LLM/AI is learning off the raw content, which it can't change, so it couldn't use that bypass.)

apiltch:

BX4096 said: "No offense to the author, but this indiscriminate assault on LLMs (what do math errors have to do with copyright, anyway?) reads less like a valid viewpoint and more like irrational neo-luddism from someone too concerned about losing their job to a new tech to stay objective. Not that I can't relate – my job is under threat as well – or there aren't any valid issues to be had with the technology, but I'm not going to abandon all logic and reason simply because my emotions tell me to."

The bots cannot exist without the training data; ergo, one might say that the entire bot is a derivative work. These issues are still being litigated in court, we don't know exactly what the decisions will be in terms of law, and the decision might be different in one country than another.

The IP and tech worlds have never seen anything like LLMs so what they are doing is really unprecedented. The premise that these bots have the legal right to hoover up the data is based on the philosophical view that they are "learning like a person does." In the article, I quoted a couple of people who have literally said that the bots should be thought of as "students" or a writer who is "inspired by" the work they read.

What I'm trying to argue -- and we'll see how the legal cases pan out -- is that morally, philosophically and technically, LLMs do not learn like people and do not think like people. We should not think of the training process the same way we think of a person learning, because that's not what is happening. It's more akin to one service scraping data from another.

Is scraping all publicly-accessible data from a website legal? That has definitely not been established. LinkedIn has won a lawsuit against a company that scraped its user profiles, which are all visible on the public web (https://www.socialmediatoday.com/news/LinkedIn-Wins-Latest-Court-Battle-Against-Data-Scraping/635938/). As a society, we recognize that just because a human can view it that doesn't mean a machine has the right to record and copy it at scale.

Now I know someone will say "this isn't copying." In order to create the LLM, they are taking the content and ingesting it and using it to create a database of tokens. The company that scraped LinkedIn wasn't word-for-word reproducing LinkedIn content either; it was taking their asset and repurposing it for profit. What about Clearview AI, which scraped billions of photos from Facebook (again, pages that were visible on the public Internet)?

BX4096 Reply

I mean, just because a search engine doesn't process and index information the way a human does, doesn't mean that we should limit their access to all types of searchable data and force them to ask explicit permission for every single public result found. We had the same types of arguments when Google rose to power, and we all know how that particular battle played out.apiltch said:What I'm trying to argue -- and we'll see how the legal cases pan out -- is that morally, philosophically and technically, LLMs do not learn like people and do not think like people. We should not think of the training process the same way we think of a person learning, because that's not what is happening. It's more akin to one service scraping data from another...

So yes, we can all mourn the countless librarian jobs (not to mention our privacy) lost to the likes of Google and Amazon, but let's not pretend that any of us want to go back to spending an entire afternoon simply looking up a fact or finding a book in a library a few miles away from home. Machine training, or "learning," is the inevitable future, and that future is already here. Just take one look at the recent Photoshop 2024 release to realize that things are never going back to what we considered normal. The way I see it, content creators have only two viable choices: adapt or perish, which is the harsh reality of all things obsolete. As for the low-effort, regurgitated content popping up all over the web that Jarred mentioned, we've already had that for the past decade or two. Same s***, different toolset, if you ask me.

JarredWaltonGPU

AI / LLM generation of content with no attribution is nothing like search. It's more akin to Google/Bing/etc. taking the best search results, ripping out all of the content, and presenting a summary page that never links to anyone else (except maybe Amazon and such with affiliate links). Search works because it drives traffic to the websites, and at most there's a small snippet from the linked article. That's "fair use," and it benefits both parties. LLM training only benefits one side, for profit, and that's a huge issue.

BX4096

Not quite true, either. That may apply to a small part of it, relating to very specific facts like individual benchmark numbers that can be linked to a particular source, but the rest is too generic to be viewed this way. Writing a generic paragraph in the style of Stephen King, loosely speaking, is not ripping off Stephen King, and neither is quoting facts known to 99.9% of people. To whom should it attribute the idea that Paris is the capital of France, for example? The vast majority of queries are just like that. Moreover, considering the way LLMs digest information to produce their content, I frankly can't even imagine how they could properly source it all. You say they're nothing like humans, but it's a lot like trying to attribute an idea that just popped into your head, based on multiple sources and events in your life going back decades. It seems inconceivable.

It appears to me that you're looking at this from the niche position of your website and not broadly enough to see the big picture. Even when asking a related question like "what's the best gamer GPU on the market right now?", you won't get a rephrased summary of Tom's latest GPU so-and-so. What you'll get is likely an aggregate reply drawn from dozens of websites like yours (well, including inferior ones), and probably an outdated one at that ("RTX 3090!"), since the training data for these things can lag quite a bit. The doctrine of fair use also includes the idea of "transformative purpose," which is exactly what is going on with probably at least 99.99% of ML-generated content already.

Again, it's not like I don't empathize with any of your concerns, but considering the mind-blowing opportunities presented by this new technology, I can't help but see these objections as beating a dead horse at best and standing in the way of inevitable progress at worst. I wish we could all focus on the truly amazing stuff it can and will soon do, rather than fixate on what seems to be futile doomsayer negativity that, at least in my view, will almost certainly lose in court and therefore ultimately cause the very people affected even more stress and financial trouble. I could be wrong, but that's my current take on this.

Co BIY

A legal way of flagging a website to specifically exclude AI scraping seems reasonable to me.

More important, in my opinion, is requiring all AI-generated content to be marked as AI-generated.

coolitic

While I consider most AI-generated content to be "grammatically correct garbage," and definitely don't see AI as thinking the way people do, I also don't really care for scraping to be outlawed, and I don't care whether "search engines are OK because they benefit the website." Avram is certainly correct in analyzing how AI objectively works and what it is capable of producing (like a person who understands the worth of their tool); but also, yeah, with all these articles, I do feel a bit of protectionism and defensiveness for his field coming through.

Frankly, even if it weren't outlawed, the fact that there are a lot of people willing to "poison" AI content (which is easy to do), whether for serious reasons or for the lulz, means that legality won't be the be-all and end-all. And perhaps more importantly, it seems that LLMs are running out of "fresh" data and collapsing on themselves, both because of the excessive data they already have and because of the cannibalization that stems from "Model Autophagy Disorder." I genuinely think that LLMs are being pushed to do broader things than they are really capable of, and both corporations and researchers in the field refuse to admit the limitations because they'd lose funding. Just remember, folks, we've had big AI hype before, as early as the '70s IIRC, and it went through more or less the same cycle.