AMD CDNA 3 Roadmap: MI300 APU With 5X Performance/Watt Uplift

Probably lots of INT8 matrix operations

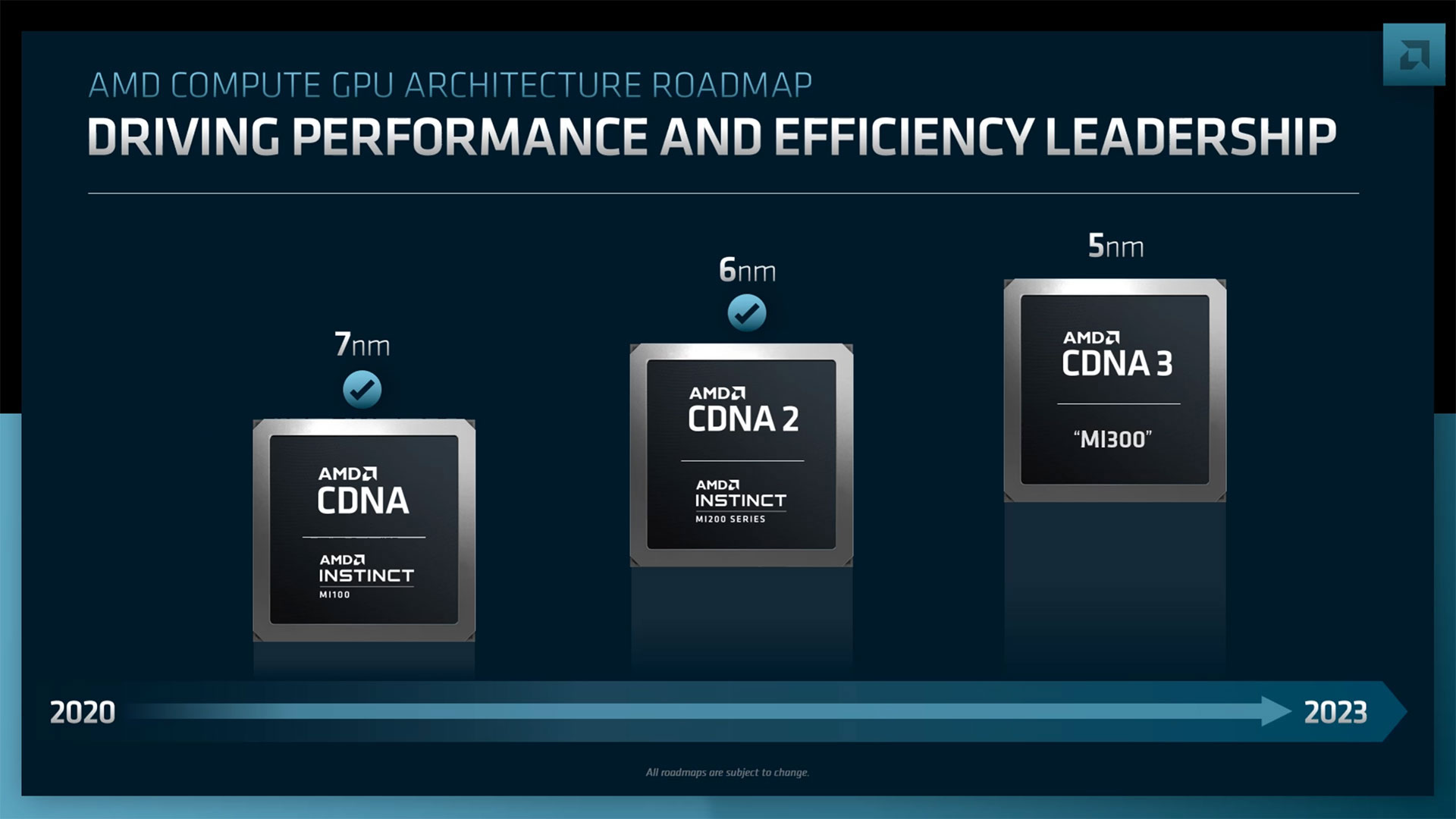

AMD talked about its latest CDNA roadmap at its financial analysts day, and the big news for data center graphics is the upcoming CDNA 3 architecture and the MI300 APU. Yes, you saw that right: AMD will be making a full-blown APU that combines CPU and GPU chips in a single product.

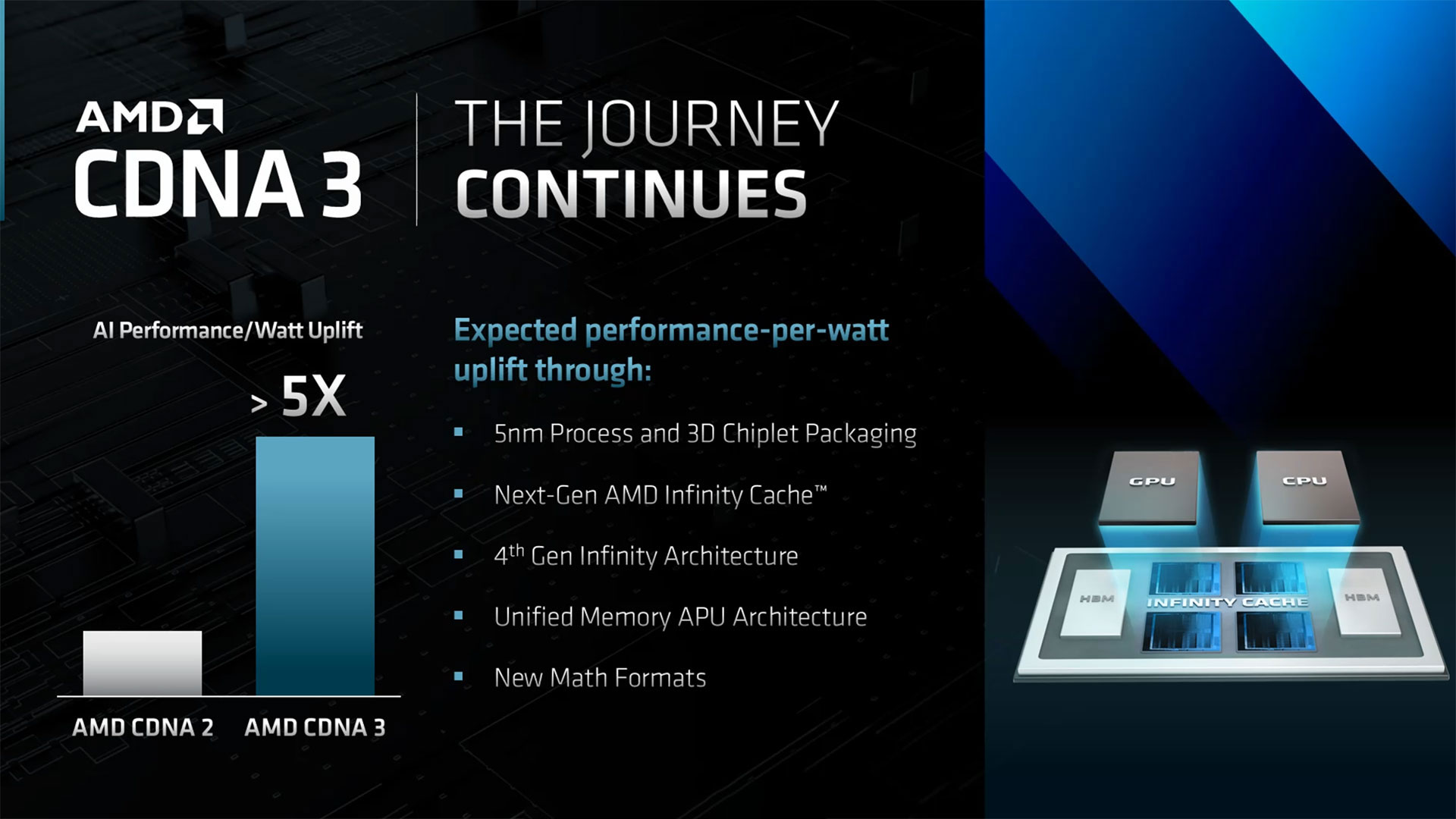

Starting with CDNA 3, AMD claims it will deliver more than 5X the performance per watt (perf/watt) of the existing CDNA 2 products. That might seem like an incredible feat, but digging a bit deeper suggests an easy way AMD could get to that figure. Unlike its consumer graphics products, CDNA contains matrix cores (similar to Nvidia's tensor cores). The Instinct MI250X currently delivers up to 95.7 teraflops of peak FP64 matrix operations, and four times that figure, 383 teraflops, for peak FP16 and bfloat16. The catch is that MI250X tops out at the same 383 teraops for INT8 and INT4.

It's a safe bet that AMD's claims of 5X the perf/watt aren't a universal increase in efficiency for FP64, FP32, and other formats. More likely, AMD will boost INT8 throughput to double the FP16/bfloat16 rate, and INT4 could double that again. That would provide a 4X improvement, and architectural improvements of 25% would get that to 5X. AMD also mentions new math formats, which would go along with that boost in performance. Of course, perf/watt is always a nebulous metric anyway, so file this away as an "up to 5X or more" improvement and we'll wait to see what the actual performance looks like.
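The arithmetic behind that guess can be sketched in a few lines of Python. This is only an illustration of the speculation above, not an AMD figure: the two doubling factors and the 25% architectural gain are assumptions taken from the paragraph, power is assumed to stay constant, and the names are hypothetical.

```python
# Peak MI250X INT4 matrix throughput quoted in the article (tera-ops per second).
MI250X_INT4_TOPS = 383.0

# Assumptions: speculation from the article, not AMD-confirmed numbers.
INT8_RATE_VS_FP16 = 2.0  # INT8 runs at double the FP16/bfloat16 rate
INT4_RATE_VS_INT8 = 2.0  # INT4 doubles that again
ARCH_GAIN = 1.25         # 25% from other architectural improvements


def speculative_int4_uplift(int8_vs_fp16, int4_vs_int8, arch_gain):
    """Combined INT4 throughput multiplier, assuming power stays constant."""
    return int8_vs_fp16 * int4_vs_int8 * arch_gain


uplift = speculative_int4_uplift(INT8_RATE_VS_FP16, INT4_RATE_VS_INT8, ARCH_GAIN)
print(f"Speculative INT4 uplift at equal power: {uplift:.2f}x")
print(f"Implied peak INT4 throughput: {MI250X_INT4_TOPS * uplift:.0f} TOPS")
```

Under those assumptions the multiplier is 2 × 2 × 1.25 = 5, which only applies to INT4 matrix math; FP64 and FP32 would see just the architectural portion.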

Besides improvements in performance and efficiency, CDNA 3 will contain a 4th generation Infinity Fabric and a next generation Infinity Cache. As expected, CDNA 3 will use a 5nm process technology, likely TSMC N5 or N5P. That should help with reaching the other goals for the design.

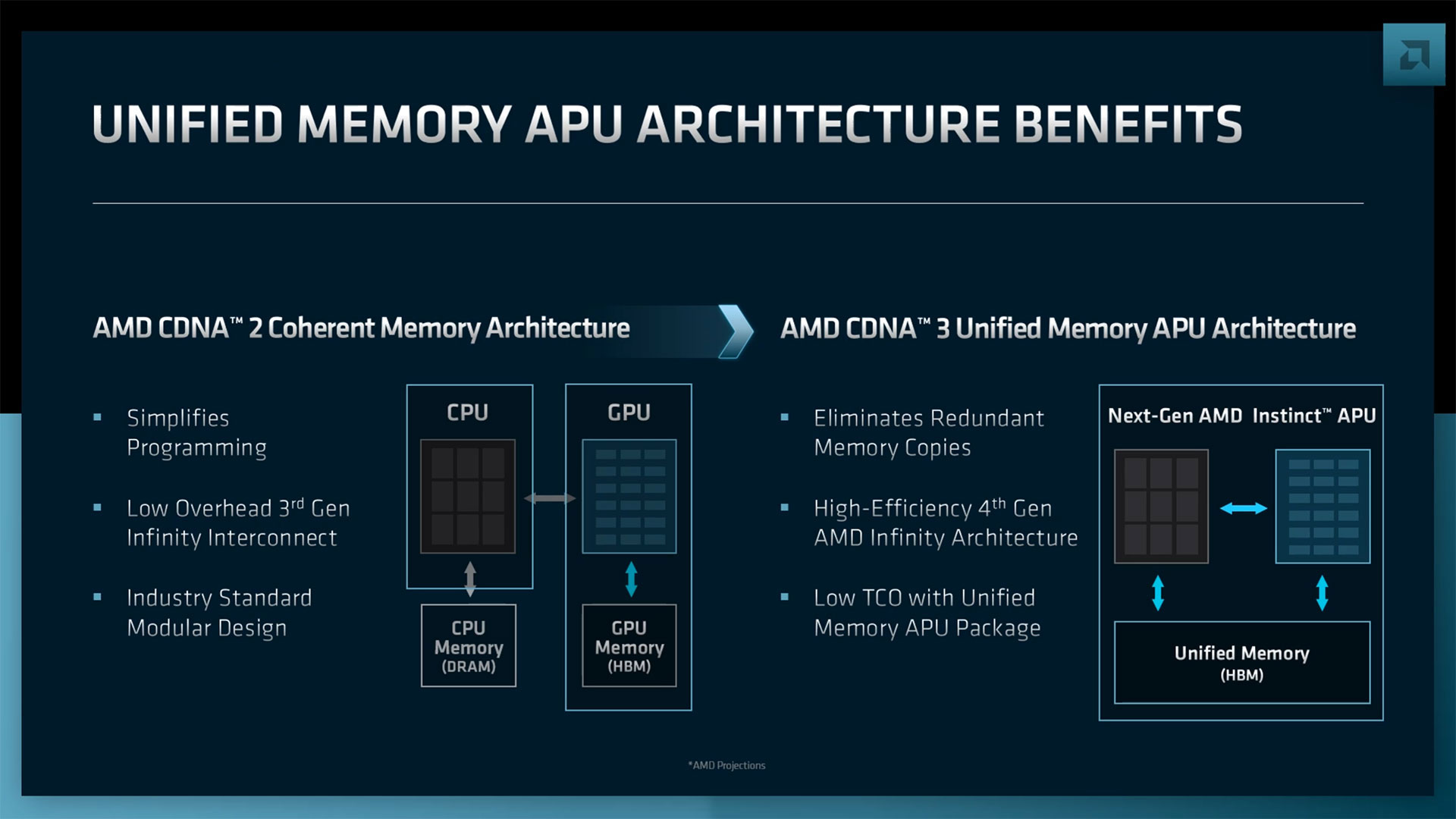

CDNA 3 will also move from the coherent memory architecture used in CDNA 2 to a unified memory architecture. This is a critical improvement, as a lot of the power used in data center workloads goes to moving data around. Reducing the need for redundant copies can greatly increase overall efficiency, which brings up the next point.

AMD's Instinct MI300 solution will feature both CPU and GPU chiplets in a single package. AMD calls this the first data center APU (accelerated processing unit). That's interesting, as AMD hasn't used the term APU as much in recent years with its Ryzen solutions that have integrated graphics. Perhaps there will be a resurgence of APU branding, but either way the combination of Zen 4 CPU cores and CDNA 3 GPU cores should prove incredibly potent.

MI300 will feature advanced packaging that puts CPUs, GPUs, cache, and HBM together on a single package. The earlier slide shows a package with what appear to be four CPU/GPU chiplets paired with HBM. Given the focus on computational throughput, if that rendering is reasonably accurate, we suspect AMD will use three GPU chiplets with a single CPU chiplet, but AMD hasn't said one way or another.

This has some interesting implications for AMD's upcoming supercomputer endeavors, as MI300 will likely be the main engine behind the El Capitan system. Where Frontier uses Zen 3 EPYC "Trento" processors, and links each 64-core CPU with four MI250X GPUs, El Capitan may end up being quite different. Using an external Zen 4 CPU and then combining that with four MI300 APUs that each contain a CPU seems unnecessary. Instead, El Capitan could simply have a 1U blade that packs in as many MI300 APUs as will fit.

AMD says the resulting MI300 will deliver an 8X increase in AI training performance versus MI250X, and again that likely goes back to improvements in INT8 and INT4 throughput, combined with more GPU cores in general. MI250X contains a pair of graphics compute dies (GCD) in a package, and MI300 looks like it may have three CDNA 3 GCDs alongside a Zen 4 CPU die. That's 50% more graphics potential, plus the architectural enhancements.
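A quick back-of-the-envelope check shows how much of the 8X claim the extra die could explain on its own. The three-GCD count is the guess from the rendering, not something AMD has confirmed, and the variable names here are hypothetical.

```python
MI250X_GCDS = 2                # GCDs per MI250X package, per the article
MI300_GCDS_GUESS = 3           # assumption: guessed from the rendering, unconfirmed
CLAIMED_TRAINING_UPLIFT = 8.0  # AMD's claimed AI training gain over MI250X

# Gain from die count alone, assuming performance scales linearly with GCDs.
die_count_gain = MI300_GCDS_GUESS / MI250X_GCDS

# Remaining gain each GCD would have to deliver to reach the claimed figure.
per_gcd_gain_needed = CLAIMED_TRAINING_UPLIFT / die_count_gain

print(f"Gain from extra GCDs: {die_count_gain:.2f}x")
print(f"Per-GCD gain still required: {per_gcd_gain_needed:.2f}x")
```

If the guess holds, each CDNA 3 GCD would need to be a bit over 5X faster than a CDNA 2 GCD in whatever workload AMD measured, which is why format changes rather than raw core counts look like the main lever.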

AMD didn't reveal anything beyond CDNA 3 in its roadmap, though CDNA 4 will almost certainly follow. The GPU roadmap did mention RDNA 4, with a 2024 launch time frame, and CDNA 4 would likely follow a year later. We'll let AMD get CDNA 3 out the door first, and look forward to hearing additional details on the design and architecture in the coming months.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Bikki

If they claim 8x AI training, that is float matrix multiplication performance, something like bfloat16 and not int8.

JarredWaltonGPU

> Bikki said: If they claim 8x AI training, that is float matrix multiplication performance, something like bfloat16 and not int8.

AI training has been looking into lower precision alternatives for years. Google's TPUs focus mostly on INT8 from what we can tell. Nvidia has talked teraops (INT8) for a few years, and I think Intel or Nvidia even talked about INT2 for certain AI applications as having a benefit. Given MI250X has the same teraflops for bfloat16, fp16, int8, and int4, that means there's no speedup right now. But if AMD reworks things so that two int8 or four int4 can execute in the same time as a single 16-bit operation, they get a 2x or 4x speedup.

bit_user

> JarredWaltonGPU said: AI training has been looking into lower precision alternatives for years. Google's TPUs focus mostly on INT8 from what we can tell. Nvidia has talked teraops (INT8) for a few years, and I think Intel or Nvidia even talked about INT2 for certain AI applications as having a benefit.

Jarred, you're missing a key point: training vs. inference. @Bikki was pointing out that int8 isn't useful for training, which traditionally requires more range & precision, like BF16. It's really inference that uses the lower-precision data types you mentioned.

JarredWaltonGPU

> bit_user said: Jarred, you're missing a key point: training vs. inference. @Bikki was pointing out that int8 isn't useful for training, which traditionally requires more range & precision, like BF16. It's really inference that uses the lower-precision data types you mentioned.

AFAIK, Nvidia and others are actively researching training as well as inference using lower precision formats. Some things do fine, others need the higher precision of BF16. If some specific algorithms can work with INT8 or FP8 instead of BF16/FP16, that portion of the algorithm can effectively run twice as fast. Nvidia's transformer engine is supposed to help with switching formats based on what is needed. https://blogs.nvidia.com/blog/2022/03/22/h100-transformer-engine/

bit_user

> JarredWaltonGPU said: AFAIK, Nvidia and others are actively researching training as well as inference using lower precision formats. Some things do fine, others need the higher precision of BF16. If some specific algorithms can work with INT8 or FP8 instead of BF16/FP16, that portion of the algorithm can effectively run twice as fast. Nvidia's transformer engine is supposed to help with switching formats based on what is needed. https://blogs.nvidia.com/blog/2022/03/22/h100-transformer-engine/

That link only mentions int8 in passing, but actually talks about using fp8 for training.

The cute thing about fp8 is that it's so small you can exhaustively enumerate all possible values in a reasonably-sized table. The Wikipedia page has one that's 32 rows and 8 columns:

https://en.wikipedia.org/wiki/Minifloat#Table_of_values
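
To make the "exhaustively enumerate" point concrete, here is a minimal Python sketch that decodes all 256 bit patterns of an 8-bit float and prints them as 32 rows of 8 columns. The layout is an assumption: 1 sign bit, 4 exponent bits, 3 mantissa bits, bias 7, with IEEE-style subnormals, infinities, and NaNs. It may not match the exact format in the linked table, and hardware FP8 variants differ (for example, the E4M3 variant used for AI drops infinities to extend its range).

```python
import math

# Assumed layout: 1 sign bit, 4 exponent bits, 3 mantissa bits, IEEE-style rules.
EXP_BITS = 4
MAN_BITS = 3
BIAS = 7  # assumption: 2**(EXP_BITS - 1) - 1


def decode_fp8(byte):
    """Decode one 8-bit pattern into a Python float under the assumed layout."""
    sign = -1.0 if (byte >> 7) & 1 else 1.0
    exponent = (byte >> MAN_BITS) & ((1 << EXP_BITS) - 1)
    mantissa = byte & ((1 << MAN_BITS) - 1)
    if exponent == (1 << EXP_BITS) - 1:
        # All-ones exponent: infinity when the mantissa is zero, otherwise NaN.
        return sign * math.inf if mantissa == 0 else math.nan
    if exponent == 0:
        # Subnormal numbers (and signed zero): no implicit leading 1.
        return sign * mantissa / (1 << MAN_BITS) * 2.0 ** (1 - BIAS)
    # Normal numbers: implicit leading 1 plus the fractional mantissa.
    return sign * (1 + mantissa / (1 << MAN_BITS)) * 2.0 ** (exponent - BIAS)


# Every possible value fits in one small table.
table = [decode_fp8(b) for b in range(256)]

# Print as 32 rows of 8 columns.
for row in range(32):
    print(" ".join(f"{table[row * 8 + col]:>12.6g}" for col in range(8)))
```

Under this assumed layout the largest finite value is 240 and the smallest positive subnormal is 2^-9, which illustrates how little range and precision an 8-bit float has to work with.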