Asus Resurrects GeForce GT 710 GPU With Four HDMI Ports

Apparently, having six different variants of the GeForce GT 710 simply isn't enough for Asus. As spotted by Japanese publication Hermitage Akihabara, Asus has quietly added a new seventh variant to the mix.

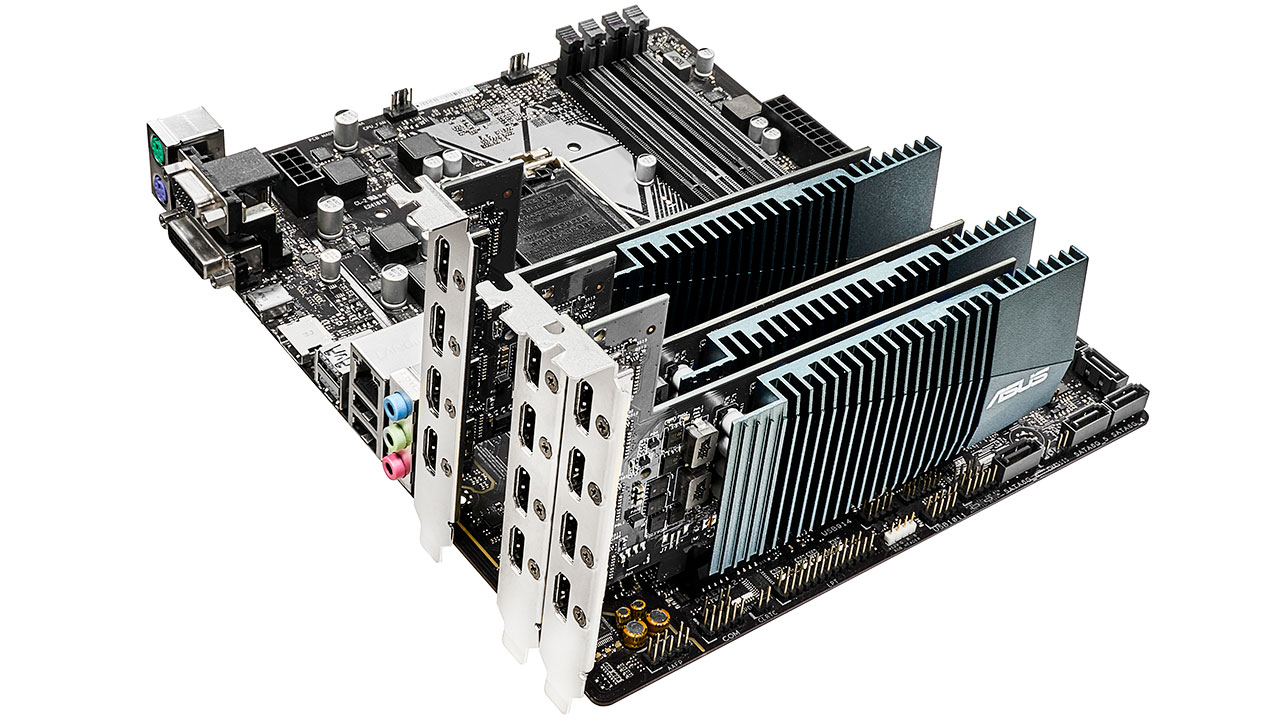

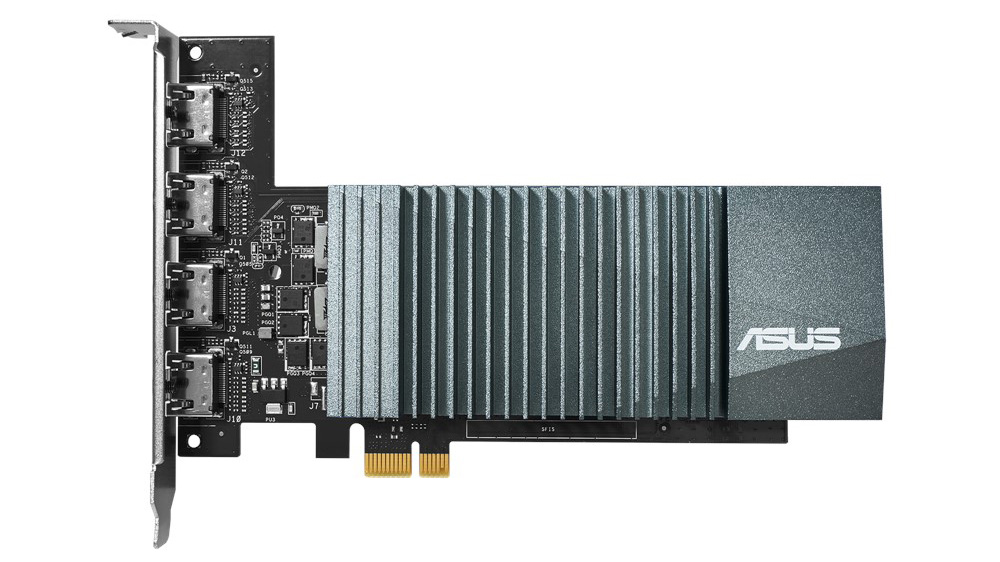

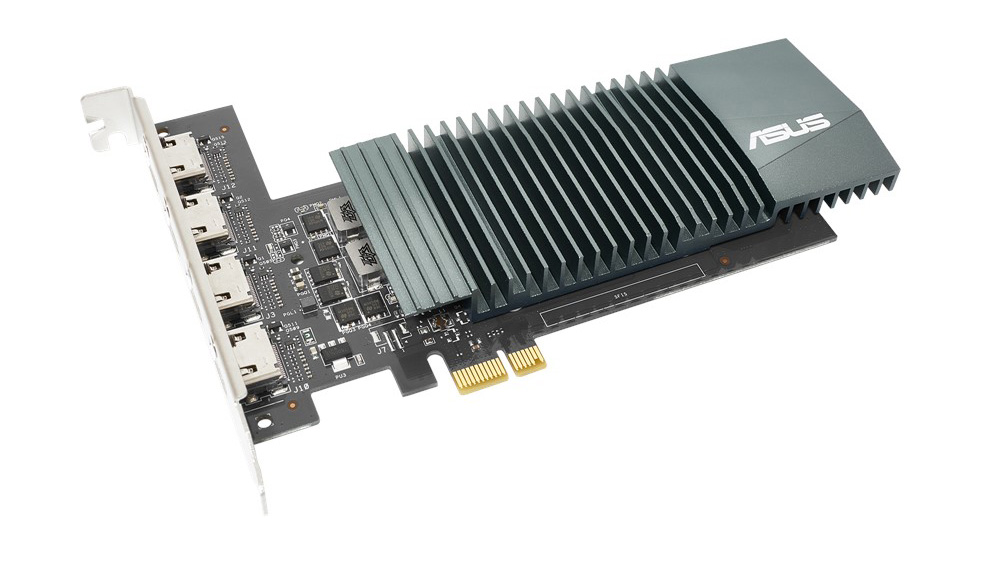

The new Asus GeForce GT 710, which goes under the GT710-4H-SL-2GD5 part number, features a friendly, single-slot design with a passive cooling solution and dimensions of 6.57 x 4.13 x 0.74 inches (16.7 x 10.5 x 1.9cm). The GeForce GT 710 doesn't even require a PCIe 3.0 slot; it's perfectly happy on a PCIe 2.0 x1 interface, presenting the opportunity to use the graphics card on older motherboards that lack a PCIe 3.0 slot.

Asus' latest Kepler-powered graphics card is based on the GK208 silicon that comes sporting 192 CUDA cores that clock up to 954 MHz. There's also 2GB of GDDR5 memory running at 5,012 MHz across a 64-bit memory bus. The GeForce GT 710 isn't the type of graphics card that you would use to game on. Instead, it's an affordable option for users who are looking for an upgrade over integrated graphics or want to use multiple monitors simultaneously.

The design of the display outputs is what separates this specific GeForce GT 710 from Asus' previous models. The GT710-4H-SL-2GD5 comes with four HDMI ports so it can accommodate up to four 4K monitors.

Originally, Kepler didn't natively support the HDMI 2.0 standard, which is required to push 4K at 60 Hz over an HDMI port. Through a technique called chroma subsampling, Nvidia was able to let the Kepler-based graphics card output an image at the aforementioned resolution and refresh rate. There are still some caveats though.

The GT710-4H-SL-2GD5 can only do 60 Hz if paired with a single monitor. When two or more monitors are used at the same time, the refresh rate drops down to 30 Hz.
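To make the bandwidth argument concrete, here is a rough sketch of why 4K at 60 Hz does not fit an HDMI 1.4-class link without chroma subsampling. It is an illustration, not Nvidia's implementation: it assumes 8 bits per sample, counts active pixels only (blanking is ignored), takes roughly 8.16 Gbit/s as the usable HDMI 1.4 video bandwidth, and assumes 4:2:0 as the subsampling scheme. The function and constant names are made up for this example.

```python
# Rough check: 4K video data rate vs. an HDMI 1.4-class link.
# Assumptions (not from the article): 8 bits per sample, active pixels only,
# and about 8.16 Gbit/s of usable video bandwidth (10.2 Gbit/s raw TMDS
# minus 8b/10b encoding overhead).

HDMI_1_4_DATA_GBPS = 8.16

# Average number of samples carried per pixel for each chroma format.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def video_gbps(width, height, hz, chroma, bits_per_sample=8):
    """Return the active-pixel data rate in Gbit/s."""
    bits_per_pixel = SAMPLES_PER_PIXEL[chroma] * bits_per_sample
    return width * height * hz * bits_per_pixel / 1e9


def fits(rate_gbps, limit_gbps=HDMI_1_4_DATA_GBPS):
    """True if the data rate is within the assumed link budget."""
    return rate_gbps <= limit_gbps


for hz, chroma in ((60, "4:4:4"), (60, "4:2:0"), (30, "4:4:4")):
    rate = video_gbps(3840, 2160, hz, chroma)
    status = "fits" if fits(rate) else "does not fit"
    print(f"4K @ {hz} Hz, {chroma}: {rate:.2f} Gbit/s -> {status}")
```

Under these assumptions, full-color 4K at 60 Hz exceeds the budget, while 4:2:0 at 60 Hz and full color at 30 Hz both fit.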

Although not many are left, the GeForce GT 710, which came out in January 2016, is still in circulation. Online retailers typically sell the graphics cards for $50-$100, depending on the brand.


Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

-

derekullo

"Instead, it's an affordable option for users that are looking for an upgrade above integrated graphics or want to use multiple monitors simultaneously"

This is useful if you need 4 HDMI ports, but as for gaming, current-generation integrated graphics from AMD and Intel are better.

https://www.videocardbenchmark.net/compare/Radeon-RX-Vega-11-vs-GeForce-GT-710-vs-Intel-HD-P630/3893vs2910vs3682

This compares the GeForce GT 710 with the integrated graphics in the Ryzen 5 3400G and the integrated graphics in an Intel i5-9500.

| GPU | Score |
| --- | --- |
| Radeon RX Vega 11 | 2102 |
| GeForce GT 710 | 607 |
| Intel HD P630 | 1727 |

Obviously, if you had a Pentium 4 with Potato HD graphics the GeForce GT 710 would be an upgrade, assuming you found a motherboard with PCI-E 2.0 that was the same socket as the Pentium 4, but you have much bigger problems at this point.

BaRoMeTrIc

derekullo said: "Obviously if you had a Pentium 4 with Potato HD graphics the Geforce GT 710 would be an upgrade, assuming you found a motherboard with PCI-E 2.0 that was the same socket as the Pentium 4, but you have much bigger problems at this point."

Why does it have to be PCIe 2.0? It's a PCIe 3.0 device, but it will run just fine on PCIe 2.0 because of the low memory bandwidth. Even if it were an 8GB GDDR5 256-bit device it would run on PCIe 2.0; you would just reach the bandwidth threshold and bottleneck. It will also run fine on PCIe 4.0 because PCIe is backward compatible.

InvalidError

I'd imagine the primary market for those would be digital signage - running advertisements and other information across multiple large-format public displays.

mchldpy

at invaliderror,

Why would you imagine "the primary market would be ... across multiple large-format public displays"?

It says with 1 monitor you get 60hz, with 2 you get 30hz, do you get 15hz with 4?

So you think this thing will push multiple large-format public displays?

No fan, no intestinal fortitude.

derekullo

BaRoMeTrIc said: "Why does it have to be PCIe 2.0? It's a PCIe 3.0 device, but it will run just fine on PCIe 2.0 because of the low memory bandwidth. Even if it were an 8GB GDDR5 256-bit device it would run on PCIe 2.0; you would just reach the bandwidth threshold and bottleneck. It will also run fine on PCIe 4.0 because PCIe is backward compatible."

It needs to be a minimum of PCIe 2.0 because Nvidia and math say so. (Math is at the end.)

https://www.evga.com/products/specs/gpu.aspx?pn=f345c5ec-d00d-4818-9b43-79885e8a161f

I am not sure where you are getting the PCIe 3.0 information.

The point I was trying to make was that if the motherboard doesn't have PCIe and instead has AGP, you are out of luck, but at that point you have bigger problems ... dead end architecture.

Extending your argument that the PCIe level doesn't matter, let's compute the numbers for four 4K video feeds at 30 hertz, all through a graphics card on PCIe 1.0.

(You may have meant the version doesn't matter as long as you go higher / more bandwidth but I felt like doing the math anyway ... FOR SCIENCE!)

I'm assuming that if they had to use chroma subsampling on PCIe 2.0 then there won't be enough bandwidth at PCIe 1.0, but let's let the math tell us!

3840 x 2160 = 8,294,400 pixels on a single 4k screen

8,294,400 x 4 screens = 33,177,600 pixels on 4 - 4k screens

The screen is refreshed 30 times a second so

33,177,600 x 30 hertz = 995,328,000 pixels per second

But this is only for one color.

995,328,000 x 3 primary colors = 2,985,984,000

Then you divide by 100 million (the same as assuming 10 bits per color value and converting bits to gigabits) and you get

2,985,984,000 / 100,000,000 = 29.86 Gigabits per second or 3.73 Gigabytes per second of bandwidth to power all 4 screens.

Now let's compare this to the PCIe chart

https://en.wikipedia.org/wiki/PCI_Express#History_and_revisions

PCIe 1.0 x8 link

2 gigabytes a second

Oh darn, it isn't quite there.

A PCIe 1.0 x16 link would do it, but unfortunately we can't just glue on 8 more lanes.

PCIe 2.0 x8 link

4 gigabytes a second

We have a winner!

Mathematically, it is not possible to run four 4K monitors at 30 hertz on anything short of a PCIe 1.0 x16 or PCIe 2.0 x8 link, although a single 1280x720 monitor will work just fine.

I hope this sufficiently answers "Why does it have to be pcie 2.0"

Edit: Apparently the chroma subsampling mentioned earlier was only to cram the information over HDMI 1.4 and had nothing to do with the PCIe bandwidth.
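The back-of-the-envelope numbers in the post above can be re-run with a short script. This is only a sketch of that arithmetic, not a model of how the card actually moves display data: it assumes 10 bits per color value (which is what dividing by 100 million works out to; pass `bits_per_color=8` for ordinary 24-bit color) and approximate per-lane figures of 0.25 GB/s for PCIe 1.0 and 0.5 GB/s for PCIe 2.0. The function names are made up for this example.

```python
# Re-run of the raw pixel-throughput arithmetic from the post above.
# Assumption: 10 bits per color value, implied by the "divide by 100 million"
# step. Per-lane PCIe figures are approximate usable bandwidth in GB/s.

PCIE_GB_PER_LANE = {"1.0": 0.25, "2.0": 0.5}


def display_gbytes_per_s(width, height, screens, hz, bits_per_color=10, colors=3):
    """Raw pixel data rate for all screens combined, in GB/s."""
    bits = width * height * screens * hz * colors * bits_per_color
    return bits / 8 / 1e9


def link_gbytes_per_s(version, lanes):
    """Approximate bandwidth of a PCIe link, in GB/s."""
    return PCIE_GB_PER_LANE[version] * lanes


need = display_gbytes_per_s(3840, 2160, screens=4, hz=30)
for version, lanes in (("1.0", 8), ("1.0", 16), ("2.0", 8)):
    have = link_gbytes_per_s(version, lanes)
    verdict = "enough" if have >= need else "not enough"
    print(f"PCIe {version} x{lanes}: {have:.2f} GB/s vs {need:.2f} GB/s needed -> {verdict}")
```

With 8 bits per color value the requirement drops to about 2.99 GB/s, which still exceeds a PCIe 1.0 x8 link, so the comparison in the post comes out the same way.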

InvalidError

mchldpy said: "So you think this thing will push multiple large-format public displays?"

Yes, a GT710 is perfectly fine for driving 1080p digital signage across multiple TVs. You don't need super-powerful GPUs to push some video and static images with transitions.

logainofhades

derekullo said: "The point I was trying to make was if the motherboard doesn't have PCIe and instead has AGP you are out of luck but at that point you have bigger problems ... dead end architecture."

LGA 775 had PCI-E, even on P4-compatible boards. While I didn't have a P4, I did have one of those boards. I ran mine with a Xeon X3210.

https://www.gigabyte.com/Motherboard/GA-EP35-DS3L-rev-1x/support#support-cpu

An IGP model

https://www.gigabyte.com/Motherboard/GA-G31M-S2L-rev-11-20/support#support-cpu

spongiemaster

mchldpy said: "It says with 1 monitor you get 60hz, with 2 you get 30hz, do you get 15hz with 4? So you think this thing will push multiple large-format public displays? No fan, no intestinal fortitude."

The card will do 30Hz using 2 or more screens at 2160p. At 1080p it should be able to do 60Hz on 4 screens. InvalidError is right; this is perfect for marketing. You don't need RTX Titans to drive the digital menu boards at McDonald's.

InvalidError

spongiemaster said: "You don't need RTX Titans to drive the digital menu boards at McDonalds."

One company I worked for over 10 years ago did digital signage software and was running dual displays on hardware a fraction as powerful as modern entry-level stuff. On many chipsets, hardware acceleration had to be disabled due to driver or hardware bugs when handling multiple video overlays. Modern entry-level IGPs would have been worth their weight in gold back then if they had more outputs.