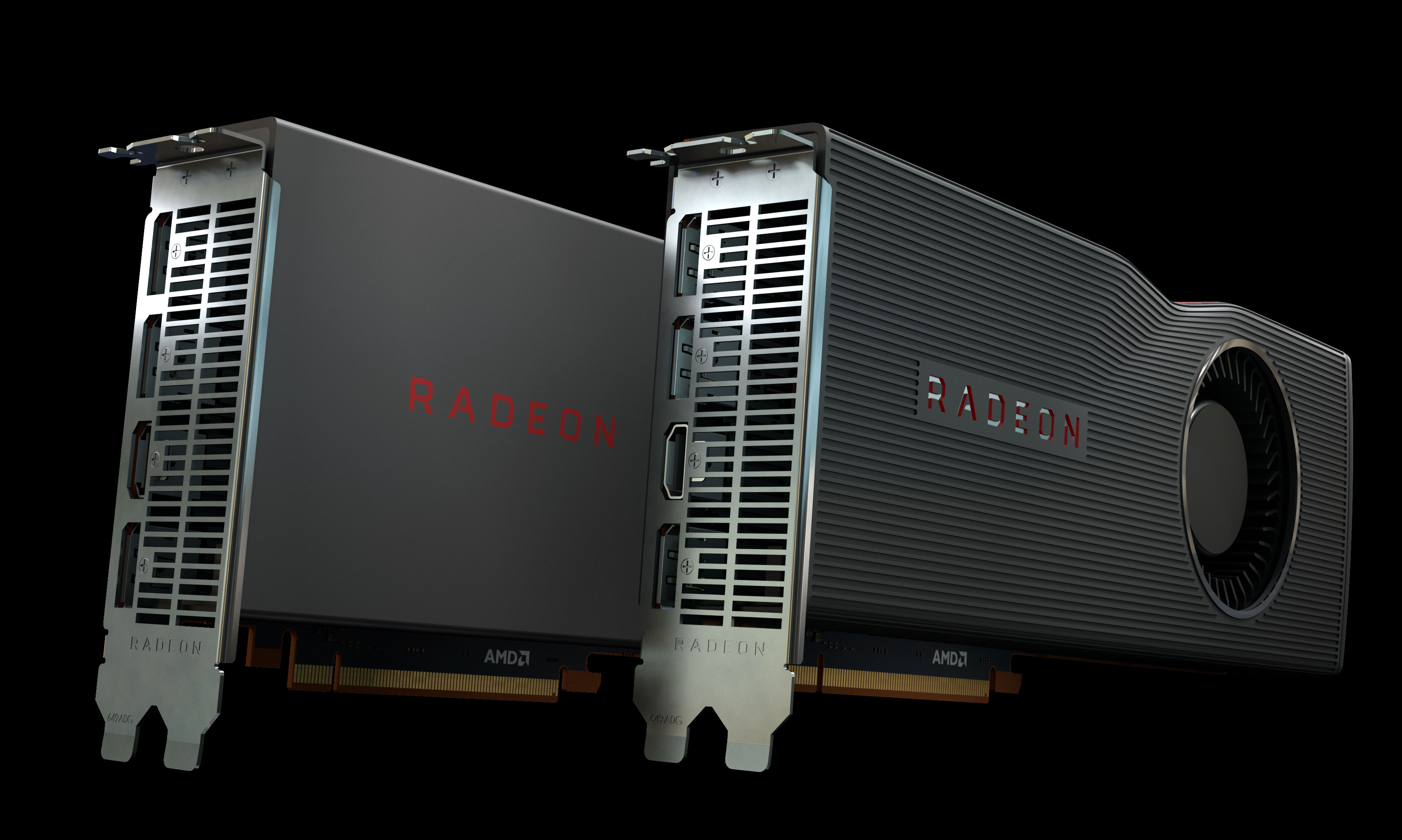

Franken-CrossFire: Radeon RX 5600 XT Joins Radeon RX 5700

AMD completely abandoned the idea of CrossFire support when it introduced its Navi lineup, but if you ever wondered how two Navi graphics cards would perform together, Uniko's Hardware has the answer for you. For the sake of science, the publication paired a Radeon RX 5600 XT together with the Radeon RX 5700.

In the old days, it was pretty common to run a multi-GPU setup. On some occasions, a single graphics card wasn't powerful enough to deliver an adequate gaming experience, and on other occasions, it was just more cost-effective to buy two cheaper graphics cards. Before AMD launched Navi last year, the chipmaker estimated that fewer than 1% of gamers still used a multi-GPU configuration. Therefore, the company decided to axe CrossFire support with Navi. It was a sound decision, as it frees up resources that AMD can spend elsewhere.

You can't run two Navi graphics cards in a CrossFire configuration. However, you can still achieve a similar effect using the explicit multi-GPU (mGPU) functionality, which is present in the DirectX 12 and Vulkan APIs. It's a flexible technology as you can mix and match different graphics cards from both AMD and Nvidia to your heart's desire.

Plenty of modern games leverage the DirectX 12 and Vulkan APIs. Nevertheless, it's up to each developer to decide whether to support mGPU. Uniko's Hardware pointed out that Rise of the Tomb Raider and Strange Brigade accepted the mGPU setup without problems. However, Strange Brigade only worked in DirectX 12 mode, as the game crashed in Vulkan mode. Interestingly, Shadow of the Tomb Raider crashed as soon as it launched. Triple-A titles, including Gears 5, Borderlands 3, Tom Clancy's Rainbow Six Siege and The Division 2, don't support mGPU.

Radeon RX 5600 XT and Radeon RX 5700 mGPU Benchmarks

The test platform consisted of a Ryzen 7 3700X processor, an ASRock X570 Taichi motherboard and a G.Skill Flare X DDR4-3200 16GB (2x8GB) memory kit overclocked to 3,600 MHz with a CAS latency of 16. The graphics cards in question are the Asus Dual Radeon RX 5700 EVO OC Edition and Asus TUF Gaming X3 Radeon RX 5600 XT EVO.

On the software side, Uniko's Hardware used Windows 10 with the November 2019 update (version 1909) and Radeon Software Adrenalin 2020 Edition 20.2.1. The publication ran the tests at 1920 x 1080 with Ultra settings in Strange Brigade and Very High settings in Rise of the Tomb Raider.

| Test | Radeon RX 5700 | Radeon RX 5700 + Radeon RX 5600 XT |
|---|---|---|
| 3DMark Time Spy | 8,508 points | 13,342 points |
| 3DMark Time Spy Graphics Score | 8,271 points | 14,156 points |
| Strange Brigade Average | 138.6 FPS | 228.8 FPS |
| Strange Brigade 1% Low | 98 FPS | 151.2 FPS |
| Rise of the Tomb Raider Average | 116.9 FPS | 191.3 FPS |
| Rise of the Tomb Raider 1% Low | 87.4 FPS | 111.5 FPS |
| Power Consumption | 259.6W | 446.4W |

After enabling mGPU, the 3DMark Time Spy score jumped up by 56.8%. The real-world gaming results show performance improvements up to 65.1% and 63.6% in Strange Brigade and Rise of the Tomb Raider, respectively.
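For readers who want to check the scaling figures, here is a minimal sketch that recomputes the uplift from the numbers in the benchmark table. The dictionary layout and the `uplift_percent` helper are illustrative choices, not something from Uniko's Hardware.

```python
# Figures copied from the benchmark table:
# (single Radeon RX 5700, Radeon RX 5700 + Radeon RX 5600 XT).
results = {
    "3DMark Time Spy": (8508, 13342),
    "3DMark Time Spy Graphics Score": (8271, 14156),
    "Strange Brigade Average": (138.6, 228.8),
    "Strange Brigade 1% Low": (98.0, 151.2),
    "Rise of the Tomb Raider Average": (116.9, 191.3),
    "Rise of the Tomb Raider 1% Low": (87.4, 111.5),
}


def uplift_percent(single, dual):
    """Relative gain of the dual-card result over the single-card result."""
    return (dual / single - 1.0) * 100.0


uplifts = {name: uplift_percent(s, d) for name, (s, d) in results.items()}

for name, pct in uplifts.items():
    print(f"{name}: +{pct:.1f}%")
```

The averages reproduce the 56.8%, 65.1% and 63.6% quoted above. The same calculation on the 1% lows gives smaller gains, roughly 54% in Strange Brigade and 28% in Rise of the Tomb Raider.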


When it comes to power consumption, the sole Radeon RX 5700 draws up to 259.6W while the mGPU configuration pulls around 446.4W. That's a very significant 72% increase in power draw.
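To put the power figure next to the performance figures, the sketch below computes the power increase and a rough frames-per-watt value for each configuration. It assumes the power readings and the average frame rates were taken under comparable load, which the source does not state, so treat the efficiency numbers as indicative only. The helper names are illustrative.

```python
# Figures copied from the benchmark table.
single = {"power_w": 259.6, "strange_brigade_fps": 138.6, "tomb_raider_fps": 116.9}
dual = {"power_w": 446.4, "strange_brigade_fps": 228.8, "tomb_raider_fps": 191.3}


def percent_change(before, after):
    """Relative change from before to after, in percent."""
    return (after / before - 1.0) * 100.0


def fps_per_watt(config, game_key):
    """Average FPS divided by the reported power draw (assumed comparable load)."""
    return config[game_key] / config["power_w"]


power_increase = percent_change(single["power_w"], dual["power_w"])
print(f"Power draw: +{power_increase:.1f}%")

for game in ("strange_brigade_fps", "tomb_raider_fps"):
    before = fps_per_watt(single, game)
    after = fps_per_watt(dual, game)
    change = percent_change(before, after)
    print(f"{game}: {before:.3f} -> {after:.3f} FPS/W ({change:+.1f}%)")
```

Because power rises by about 72% while average frame rates rise by about 64% to 65%, frames per watt comes out a few percent lower for the two-card setup under these assumptions.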

The performance uplift is there, but you won't be enjoying it as much since mGPU support is limited to a handful of games. And then there is also the money issue. The cheapest RX 5600 XT and RX 5700 sell for $275 and $295, respectively. In total, you're looking at a $570 investment. You can pick up a custom Nvidia GeForce RTX 2070 Super for $500 and likely get the same amount of performance at around half the power consumption.

Unless you've recently upgraded to a new graphics card and have an old one lying around, it's pointless to run a multi-GPU setup in this day and age. Even so, you'll still have to find a game that supports it, and that's not to mention having to put up with micro-stuttering.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.


techgeek: Being that this is supported in DX12, one would assume this could be done on nVidia hardware. I wonder if they'll try to benchmark this on nVidia hardware. nVidia still technically "supports" SLI, but not many games support it, especially new titles. I just wonder how the scaling compares between SLI and mGPU?

As for the article, scaling isn't terrible. I like the uplift in the .1% in Rise of the Tomb Raider, because this is where gains are the most important.

I wonder if they were looking for micro-stuttering? As this seemed to plague multi-GPU systems, especially CrossFire, that's why we got FCAT from nVidia which exposed this.

cusbrar2, quoting techgeek: I wonder if they were looking for micro-stuttering? As this seemed to plague multi-GPU systems, especially CrossFire, that's why we got FCAT from nVidia which exposed this.

The stuttering shows up on Navi hardware even with 1 GPU, so I don't think that is part of it. Once it is fixed for single GPUs, it should also go away for mGPU setups... Note that SLI almost always fails to improve 1% lows or min frametimes, while a very good increase is seen here. You'll see games in SLI configurations with 40FPS 1% lows pushing 160FPS etc...

dehdstar: eMGPU was such a letdown. This is because software companies are too busy pointing the finger at each other as to how it's actually going to be implemented. The result is a standoff.

If you ask me Microsoft should have some sort of system built in so that any dedicated GPU can intuitively begin processing, so long as they are like API cards. It shouldn't be up to the devs, as this just results in some games having the feature and others not. Which means we have wasted silicon sitting in our systems most of the time and inconsistent performances that range from nearly twice the performance to single GPU performance. A clever API should even be able to take an old game and toss extra GPUs at it, as if it were seen as just one (bigger) GPU. It should just be an OS function, in other words. Microsoft can do it, I mean virtual OS's and machines already essentially do this. You can run a server loaded with massive Quadros or Teslas and tell the software how much GPU to dedicate to each workstation. That's got to be harder than an OS just auto-delegating the task to apps, as it would a CPU.

dehdstar, quoting techgeek: Being that this is supported in DX12, one would assume this could be done on nVidia hardware. I wonder if they'll try to benchmark this on nVidia hardware. nVidia still technically "supports" SLI, but not many games support it, especially new titles. I just wonder how the scaling compares between SLI and mGPU?

As for the article, scaling isn't terrible. I like the uplift in the .1% in Rise of the Tomb Raider, because this is where gains are the most important.

I wonder if they were looking for micro-stuttering? As this seemed to plague multi-GPU systems, especially CrossFire, that's why we got FCAT from nVidia which exposed this.

Depends on how eMGPU is leveraged. Since it's evidently up to the devs to implement, many of them are just going to gravitate to AFR methods, as used by SLI/CrossFire, because it's easier. But it's the poorest use for two GPUs. All it's doing is making one GPU render frame 1, while the other renders frame 2. This means you're paying an entire GPU price for, at best, 10-20% more real-world performance.

The end goal with eMGPU was a literal compute pool. Something like the old Voodoo SLI, or even SFR. In the old days, especially going back to Voodoo SLI, the goal was to create performance that was nearly 2x that of a single GPU. One Voodoo GPU would render the top half and the other the bottom half, and there was, I think, a VSYNC-like feature called Z-sync, or Z-buffer, to sync the two GPUs. Around 2008(?) SFR was born, kudos to Nvidia. It attempted to bring back that concept, but was scrapped due to "syncing" issues, allegedly. Honestly? I think that GPU vendors just didn't like people buying multiple cards and not having to upgrade for another 5 years lol.
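As a rough illustration of the two schemes described in this comment, the toy sketch below shows how work would be divided under alternate frame rendering (AFR) versus split frame rendering (SFR). The function names are hypothetical, and the sketch only covers work assignment; real implementations also have to handle synchronization, memory transfers and uneven scene complexity.

```python
def afr_gpu_for_frame(frame_index, num_gpus=2):
    """Alternate frame rendering: whole frames are dealt out round-robin."""
    return frame_index % num_gpus


def sfr_row_ranges(frame_height, num_gpus=2):
    """Split frame rendering: each GPU gets a horizontal band of every frame."""
    band = frame_height // num_gpus
    ranges = []
    for gpu in range(num_gpus):
        start = gpu * band
        # The last GPU absorbs leftover rows when the height does not divide evenly.
        end = frame_height if gpu == num_gpus - 1 else start + band
        ranges.append((start, end))
    return ranges


# Which GPU renders each of the first four frames under AFR.
print([afr_gpu_for_frame(i) for i in range(4)])
# Which rows of a 1080-row frame each GPU renders under SFR.
print(sfr_row_ranges(1080))
```

With two GPUs, AFR hands frames 0 and 2 to the first card and frames 1 and 3 to the second, while SFR gives the first card rows 0 to 539 and the second card rows 540 to 1079 of every frame.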