Nvidia Reveals Ada Lovelace GPU Secrets: Extreme Transistor Counts at High Clocks

Nvidia's Ada GPUs are extremely complex and fast

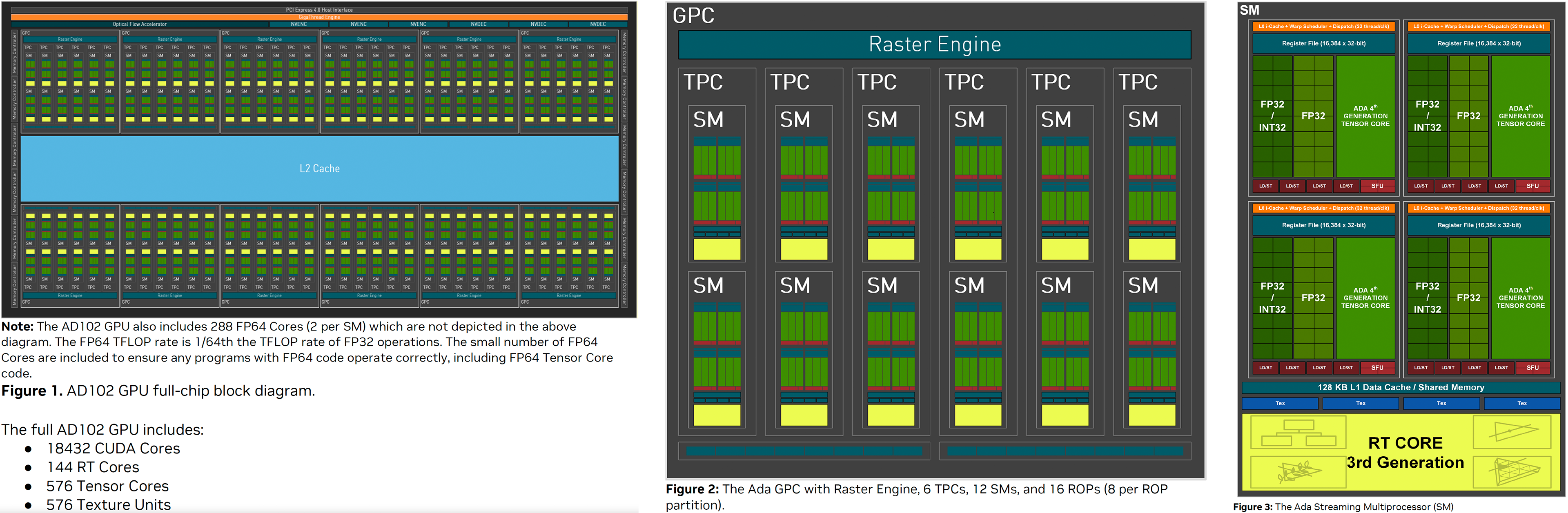

When Nvidia introduced its Ada Lovelace family of graphics processing units earlier this week, it focused mainly on its top-of-the-range AD102 GPU and its flagship GeForce RTX 4090 graphics card, and it didn't release many details about its AD103 and AD104 graphics chips. Fortunately, Nvidia published its Ada Lovelace whitepaper today, and it contains loads of data about the new GPUs that fills in many gaps. We've updated our RTX 40-series GPUs: Everything We Know hub with the new details, but here's an overview of the new and interesting information.

Big GPUs for Big Gaming

We already know that Nvidia's range-topping AD102 is a 608 mm^2 GPU containing 76.3 billion transistors, 18,432 CUDA cores, and 96MB of L2 cache. We now also know that AD103 is a 378.6 mm^2 graphics processor featuring 45.9 billion transistors, 10,240 CUDA cores, and 64MB of L2 cache. As for AD104, it has a die size of 294.5 mm^2, 35.8 billion transistors, 7680 CUDA cores, and 48MB of L2.

| GPU/Graphics Card | Full AD102 | RTX 4090 | RTX 4080 16GB | RTX 4080 12GB | RTX 3090 Ti |
|---|---|---|---|---|---|
| GPU | AD102 | AD102 | AD103 | AD104 | GA102 |
| Process Technology | TSMC 4N | TSMC 4N | TSMC 4N | TSMC 4N | Samsung 8LPP |
| Transistors (Billion) | 76.3 | 76.3 | 45.9 | 35.8 | 28.3 |
| Die size (mm^2) | 608 | 608 | 378.6 | 294.5 | 628.4 |
| Streaming Multiprocessors | 144 | 128 | 76 | 60 | 84 |
| GPU Cores (Shaders) | 18432 | 16384 | 9728 | 7680 | 10752 |
| Tensor Cores | 576 | 512 | 304 | 240 | 336 |
| Ray Tracing Cores | 144 | 128 | 76 | 60 | 84 |
| TMUs | 576 | 512 | 304 | 240 | 336 |
| ROPs | 192 | 176 | 112 | 80 | 112 |
| L2 Cache (MB) | 96 | 72 | 64 | 48 | 6 |
| Boost Clock (MHz) | ? | 2520 | 2505 | 2610 | 1860 |
| TFLOPS FP32 (Boost) | ? | 82.6 | 48.7 | 40.1 | 40.0 |
| Tensor TFLOPS FP16 (FP8), with sparsity | ? | 661 (1321) | 390 (780) | 321 (641) | 320 (N/A) |
| TFLOPS Ray Tracing | ? | 191 | 113 | 82 | 78.1 |
| Memory Interface (bit) | 384 | 384 | 256 | 192 | 384 |
| Memory Speed (GT/s) | ? | 21 | 22.4 | 21 | 21 |
| Bandwidth (GBps) | ? | 1008 | 717 | 504 | 1008 |
| TDP (watts) | ? | 450 | 320 | 285 | 450 |
| Launch Date | ? | Oct 12, 2022 | Nov 2022? | Nov 2022? | Mar 2022 |
| Launch Price | ? | $1,599 | $1,199 | $899 | $1,999 |
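The bandwidth row is not an independent spec. It follows from the memory interface width and the per-pin data rate. The following minimal Python sketch reproduces that arithmetic. `peak_bandwidth_gbs` is an illustrative helper of ours, not an Nvidia tool.

```python
# Peak memory bandwidth = bus width (bits) x per-pin data rate (Gbps) / 8 bits per byte.
# Inputs are the "Memory Interface" and "Memory Speed" rows of the spec table.
CARDS = {
    "RTX 4090": (384, 21.0),
    "RTX 4080 16GB": (256, 22.4),
    "RTX 4080 12GB": (192, 21.0),
    "RTX 3090 Ti": (384, 21.0),
}


def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Return theoretical peak memory bandwidth in GB/s."""
    return bus_width_bits * data_rate_gbps / 8


for name, (bus, rate) in CARDS.items():
    print(f"{name}: {peak_bandwidth_gbs(bus, rate):.1f} GB/s")
```

For the RTX 4080 16GB, this works out to 256 × 22.4 / 8 = 716.8 GB/s.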

One of the interesting things Nvidia reveals in its whitepaper is that Ada Lovelace GPUs use high-speed transistors in critical paths to boost maximum clock speeds. As a result, the fully enabled AD102 GPU with 18,432 CUDA cores is “capable of running at clocks over 2.5 GHz, while maintaining the same 450W TGP.” With this in mind, we're not surprised that the company is talking about the GeForce RTX 4090 (with 16,384 CUDA cores) reaching 3.0 GHz in its labs. At 3.0 GHz, the GeForce RTX 4090 will absolutely headline our list of the best graphics cards around.

In addition to high clocks, Nvidia's Ada Lovelace GPUs also boast massive L2 caches that improve performance in compute-intensive workloads (e.g., ray tracing, path tracing, simulations, etc.) and reduce memory bandwidth requirements. Essentially, Nvidia's Ada GPUs take a page from AMD's RDNA 2 Infinity Cache playbook here, although we believe the general targets for the new architecture were set well before AMD's Radeon RX 6000-series products debuted in 2020.
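One way to see why a big L2 reduces memory bandwidth requirements is a deliberately simple model in which only L2 misses reach DRAM. The Python sketch below assumes that model and uses made-up hit rates. It is not Nvidia's (or AMD's) methodology, and real hit rates depend heavily on the workload and resolution.

```python
def effective_bandwidth_gbs(dram_bw_gbs: float, l2_hit_rate: float) -> float:
    """Request bandwidth a GPU could sustain before DRAM saturates.

    Toy model (an assumption): every request that hits in L2 never touches
    DRAM, so DRAM only serves the (1 - hit rate) fraction. L2 bandwidth,
    latency and write-back traffic are ignored.
    """
    if not 0.0 <= l2_hit_rate < 1.0:
        raise ValueError("l2_hit_rate must be in [0, 1)")
    return dram_bw_gbs / (1.0 - l2_hit_rate)


# 504 GB/s is the RTX 4080 12GB's DRAM bandwidth; the hit rates are hypothetical.
for hit_rate in (0.0, 0.25, 0.5):
    eff = effective_bandwidth_gbs(504.0, hit_rate)
    print(f"L2 hit rate {hit_rate:.0%}: ~{eff:.0f} GB/s effective")
```

Under this model, a 50% hit rate would let a 192-bit card serve requests as if it had 1008 GB/s of DRAM bandwidth. That is the basic trade a large on-die cache makes.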

Speaking of workloads like simulations, we must note that in the supercomputer world they are performed with double-precision floating-point numbers (FP64) to improve the accuracy of the results. FP64 is more costly than FP32 both in terms of performance and hardware complexity, which is why computer graphics use FP32 formats and many simulations of non-critical assets are also done with FP32 precision. Meanwhile, the AD102 GPU features just 288 FP64 cores (two per streaming multiprocessor), included to ensure that any programs with FP64 code operate correctly, including FP64 Tensor Core code.
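A quick way to see the accuracy gap is to add the same small number many times. Python's own floats are FP64, so the sketch below emulates FP32 by rounding through the standard `struct` module after each addition. It illustrates generic rounding-error growth only. It does not model how any particular simulation or GPU behaves.

```python
import struct


def to_fp32(x: float) -> float:
    """Round a Python float (IEEE 754 binary64) to the nearest binary32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def accumulate(value: float, n: int, fp32: bool) -> float:
    """Add `value` to a running total n times.

    With fp32=True, the sum of two FP32 values is computed in FP64 and rounded
    once to FP32, which reproduces correctly rounded IEEE FP32 addition.
    """
    step = to_fp32(value) if fp32 else value
    total = 0.0
    for _ in range(n):
        total = to_fp32(total + step) if fp32 else total + step
    return total


N = 100_000
err64 = abs(accumulate(0.1, N, fp32=False) - 10_000)
err32 = abs(accumulate(0.1, N, fp32=True) - 10_000)
print(f"FP64 error: {err64:.2e}  FP32 error: {err32:.2e}")
```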

With just two FP64 cores per SM, AD102's FP64 rate is 1/64th of its FP32 rate, the same ratio as Nvidia's consumer Ampere GPUs. Nvidia does not depict its FP64 cores in diagrams of its streaming multiprocessors (SMs) and does not disclose the number of such cores in the AD103 and AD104 GPUs. The poor FP64 rate of Ada graphics processors emphasizes that these parts are aimed primarily at gaming.
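The 1/64 figure falls straight out of the unit counts, assuming each FP32 and FP64 unit retires one fused multiply-add per clock. The following small Python sketch shows the calculation. The RTX 4090 estimate is our own assumption, not a figure from Nvidia: it supposes that the cut-down card keeps two FP64 units per enabled SM.

```python
# Full AD102 unit counts from Nvidia's whitepaper figures quoted in the article.
SMS = 144
FP32_CORES = 18_432
FP64_CORES = 288

assert FP64_CORES == 2 * SMS  # sanity check: two FP64 units per SM
ratio = FP32_CORES // FP64_CORES
print(f"AD102 FP64:FP32 rate = 1:{ratio}")

# Assumption: the RTX 4090 keeps the same per-SM FP64 allocation as full AD102.
rtx4090_fp32_tflops = 82.6
rtx4090_fp64_tflops = rtx4090_fp32_tflops / ratio
print(f"Estimated RTX 4090 FP64 peak: ~{rtx4090_fp64_tflops:.2f} TFLOPS")
```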

More Transistors = More Performance

The complexity and die sizes of Nvidia's Ada Lovelace graphics processors compared to the company's Ampere GPUs should not come as a surprise. The new Ada GPUs are made using TSMC's 4N (5nm-class) fabrication technology, whereas Ampere was fabbed on Samsung Foundry's 8LPP process (a 10nm-class node with a 10% optical shrink). That added complexity (transistor count) is what enables impressive performance gains in things like ray tracing, as well as quality gains with DLSS 3.

Another thing to note is that Nvidia's AD102 GPU has a higher transistor density than its lesser siblings. On the one hand, that roughly 3% to 3.5% higher density helps Nvidia pack more execution units into AD102's die area. On the other hand, the relaxed transistor density of AD103 and AD104 in many cases enables better yields (assuming that the node's defect density is not high in general) and higher clocks.
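The density comparison can be reproduced from the spec table's transistor counts and die areas. `density` and `ad102_lead_pct` are illustrative helpers of ours. The published areas are rounded, so treat the percentages as approximate. GA102 is included to show the size of the jump from Samsung 8LPP to TSMC 4N.

```python
# Transistor counts (billions) and die areas (mm^2) from the spec table.
DIES = {
    "AD102": (76.3, 608.0),
    "AD103": (45.9, 378.6),
    "AD104": (35.8, 294.5),
    "GA102": (28.3, 628.4),
}


def density(name: str) -> float:
    """Transistor density in millions of transistors per mm^2."""
    transistors_billion, area_mm2 = DIES[name]
    return transistors_billion * 1000 / area_mm2


def ad102_lead_pct(name: str) -> float:
    """How much denser AD102 is than the named die, in percent."""
    return (density("AD102") / density(name) - 1) * 100


for name in DIES:
    print(f"{name}: {density(name):.1f} MTr/mm^2, AD102 lead {ad102_lead_pct(name):.1f}%")
```

By these figures, AD102 is about 3.5% denser than AD103 and about 3.2% denser than AD104, and roughly 2.8 times as dense as GA102.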

It is hard to make predictions about the frequency potential of AD103 and AD104 without access to actual hardware and/or knowledge of their actual yield rates. However, if AD102 can run at 2.5 GHz to 3.0 GHz, then it is reasonable to expect that AD103 and AD104 have even higher potential. We also know that the RTX 4080 12GB uses a fully enabled AD104 chip running at 2610 MHz, while the RTX 4080 16GB uses 95% of an AD103 chip (76 of 80 SMs) running at 2505 MHz. The RTX 4090 uses only 89% of AD102 (128 of 144 SMs) running at 2520 MHz, and it also has 25% of its L2 cache disabled.
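The percentages above are simple ratios of enabled units to total units. The following short Python sketch reproduces them. The 60-SM count for a full AD104 comes from the spec table. The 72MB L2 figure for the RTX 4090 is our own derivation from the 25% cut on a 96MB AD102.

```python
# (enabled, full) counts for each product/resource pair.
CONFIGS = {
    "RTX 4080 12GB, AD104 SMs": (60, 60),
    "RTX 4080 16GB, AD103 SMs": (76, 80),
    "RTX 4090, AD102 SMs": (128, 144),
    "RTX 4090, AD102 L2 (MB)": (72, 96),
}


def enabled_pct(enabled: int, full: int) -> float:
    """Share of a chip's resources that a product actually enables, in percent."""
    return 100 * enabled / full


for label, (on, total) in CONFIGS.items():
    print(f"{label}: {on}/{total} = {enabled_pct(on, total):.1f}% enabled")
```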

An extreme number of execution units, enabled by high complexity and coupled with high clocks, should deliver remarkable performance gains. Nvidia's GeForce RTX 4090 has more than twice the peak theoretical FP32 compute rate (~82.6 TFLOPS) of the GeForce RTX 3090 Ti (~40 TFLOPS).
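Peak FP32 throughput is simply CUDA cores × 2 FLOPs (one fused multiply-add) × clock. The following minimal Python sketch reproduces the spec table's figures and adds the 3.0 GHz lab clock Nvidia mentioned. These are theoretical peaks. Real game performance will not scale linearly with them.

```python
def fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 TFLOPS, counting one FMA (2 FLOPs) per core per clock."""
    return cuda_cores * 2 * clock_mhz / 1e6


rtx4090 = fp32_tflops(16_384, 2520)       # boost clock
rtx3090ti = fp32_tflops(10_752, 1860)     # boost clock
rtx4090_3ghz = fp32_tflops(16_384, 3000)  # clock Nvidia says it reached in its labs

print(f"RTX 4090: {rtx4090:.1f} TFLOPS vs RTX 3090 Ti: {rtx3090ti:.1f} TFLOPS")
print(f"Ratio: {rtx4090 / rtx3090ti:.2f}x")
print(f"RTX 4090 at 3.0 GHz: {rtx4090_3ghz:.1f} TFLOPS")
```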

Meanwhile, the current lineup of Nvidia's Ada GPUs for demanding gamers shows that the company is back on track with its three-chip approach to the high-end gaming market. Normally, Nvidia releases its flagship gaming GPU, follows it up with a chip that has roughly 66% to 75% of the flagship's resources (e.g., CUDA cores), and then unveils a graphics processor with about 50% of the flagship's units. With the Ampere family, that strategy was somewhat adjusted, as Nvidia's GA103 chip was mainly designed with laptops in mind and barely made it to desktops (it was late to the party, too). With the Ada generation, Nvidia has returned to its usual three-chip lineup.

More SKUs Incoming

One interesting takeaway is the disparity between the maximum configuration offered by the AD102 GPU and the GeForce RTX 4090 graphics card. AD102 packs 18,432 CUDA cores, whereas the GeForce RTX 4090 comes with 16,384 CUDA cores enabled. This approach gives Nvidia additional flexibility regarding yields and future graphics cards, so there's plenty of room for an RTX 4090 Ti, an RTX 4080 Ti, RTX 5500/5000 Ada Generation cards for ProViz markets, and so on.

Meanwhile, the GeForce RTX 4080 16GB and RTX 4080 12GB use nearly complete AD103 and fully-fledged AD104 GPUs, respectively. We do not know what the future brings, but we anticipate we'll eventually see cut-down versions of AD103 and AD104 GPUs. We can speculate about GeForce RTX 4070 Ti and/or RTX 4070 based on cut-down bins of the AD104 chip, as well as the potential for ultra-high-end graphics solutions for laptops powered by the AD103 graphics processor, but we can only guess about the specifications of these parts.

Some Thoughts

Nvidia's Ada Lovelace architecture is both a qualitative and quantitative leap over the Ampere architecture. Nvidia not only seriously enhanced the performance of its ray tracing cores, tensor cores, and some other units at the architectural level, but it also increased their number and boosted their clocks. Another major enhancement is the massively larger L2 cache of Ada GPUs compared to Ampere GPUs.

To a large degree, these leaps were enabled by TSMC's 4N process technology, which is optimized for Nvidia's GPUs. Furthermore, the company used high-speed transistors to increase the frequencies of its new graphics processors, which provided additional performance gains.

But a leading-edge production node and the large die sizes of Nvidia's new GPUs also make the parts significantly more expensive to build. This is why GeForce RTX 4080 and 4090 graphics cards carry considerably higher price tags than their direct predecessors.

Nvidia has introduced only five Ada Lovelace-based products so far. For desktops, there are the GeForce RTX 4080 12GB, RTX 4080 16GB, and RTX 4090 graphics cards. Alongside them are the RTX 6000 Ada Generation for workstations and the L40 (Lovelace 40) boards for datacenters, including virtualized workstation environments.

Considering that the company can offer full-fat AD102 and cut-down versions of AD102, AD103, and AD104 GPUs, we can envision a great number of new GeForce RTX 40-series cards for client machines and Ada RTX-series solutions for datacenters. Meanwhile, Nvidia is probably prepping some smaller GPUs (AD106, AD107), so it looks like the Ada Lovelace family of products will be at least as broad as the Ampere lineup.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


NeatOman: Nvidia; 🙈 🤑 🙈

Long->Short; guess we're going to have to take them apart and clean them with an ultrasonic machine before using them 😡

Nvidia has some pretty aggressive and creative ways to keep their prices up. My (total) guess is there's some type of backroom benefit to flooding a small part of the market and having tons of initial bad reviews/articles about how used mining GPUs are not worth buying.

Personally, I got a used HD 7950 after the O.G. crypto crash. The guy was totally transparent and told me he ran it with a custom BIOS to maximize its hash rate for about a year and a half. I got that card to clock 1650MHz!! with a BIOS volt mod and liquid metal (when it was some weird new thing; I had to clean up and reapply it every 6 months to a year). Anywho!!! I let it rest at 1450MHz and it ran perfectly for YEARS.

COLGeek: Did you mean to reply to this article, vice the one in this thread?

https://forums.tomshardware.com/threads/video-allegedly-shows-crypto-miners-jet-washing-nvidia-rtx-gpus.3778881/

hotaru251: I kind of hope that in the near future Nvidia can't sell its GPU lineup at their prices due to fighting Moore's law.

And then AMD, who is adapting (like they did on CPUs), gets similar performance at much lower power & cost.

I'd LOVE to see Nvidia get shafted on an entire generation (as hurting the profit is the only way they will change).

hannibal: The point is that these are gonna sell very well... a pity, but true.

And Nvidia will maintain its roughly 80% market share, which means the next-gen 5000 series can be even more expensive!

btmedic04: I for one am looking forward to the 4080 8GB :ROFLMAO: (satire... hopefully)

https://imgur.com/a/nS2dQ6L