Nvidia Announces RTX 4090 Coming October 12, RTX 4080 Later

Say hello to Ada Lovelace

Since the Nvidia hack back in February, we've had a decent idea of what we might expect from Nvidia's RTX 40-series Ada Lovelace GPUs. Early figures put the maximum number of Streaming Multiprocessors (SMs) at 144 for AD102, though we wouldn't expect Nvidia to launch with a fully-enabled GPU right off the bat.

Today, during the GTC 2022 keynote (which you can view in its entirety on YouTube, though the "good stuff" starts at the 6:03 mark and runs until about 24:32), Nvidia CEO Jensen Huang revealed the specifications for the RTX 4090 and RTX 4080, along with details of the Ada Lovelace architecture. Most of the recent leaks appear to have been reasonably accurate.

| Graphics Card | RTX 4090 | RTX 4080 16GB | RTX 4080 12GB | RTX 3090 Ti |
|---|---|---|---|---|
| GPU | AD102 | AD103 | AD104 | GA102 |
| Process Technology | TSMC 4N | TSMC 4N | TSMC 4N | Samsung 8N |
| Transistors (Billion) | 76.3 | 45.9 | 35.8 | 28.3 |
| Die Size (mm²) | 608.4 | 378.6 | 294.5 | 628.4 |
| Streaming Multiprocessors | 128 | 76 | 60 | 84 |
| GPU Cores (Shaders) | 16384 | 9728 | 7680 | 10752 |
| Tensor Cores | 512 | 304 | 240 | 336 |
| Ray Tracing "Cores" | 128 | 76 | 60 | 84 |
| Boost Clock (MHz) | 2520 | 2505 | 2610 | 1860 |
| VRAM Speed (Gbps) | 21 | 22.4 | 21 | 21 |
| VRAM (GB) | 24 | 16 | 12 | 24 |
| VRAM Bus Width (bits) | 384 | 256 | 192 | 384 |
| L2 Cache (MB) | 72 | 64 | 48 | 6 |
| ROPs | 176 | 112 | 80 | 112 |
| TMUs | 512 | 304 | 240 | 336 |
| TFLOPS FP32 (Boost) | 82.6 | 48.7 | 40.1 | 40.0 |
| Tensor TFLOPS FP16 (FP8), with sparsity | 661 (1321) | 390 (780) | 321 (641) | 320 (N/A) |
| Bandwidth (GB/s) | 1008 | 717 | 504 | 1008 |
| TDP (watts) | 450 | 320 | 285 | 450 |
| Launch Date | Oct 12, 2022 | Nov 2022 | Nov 2022 | Mar 2022 |
| Launch Price | $1,599 | $1,199 | $899 | $1,999 |

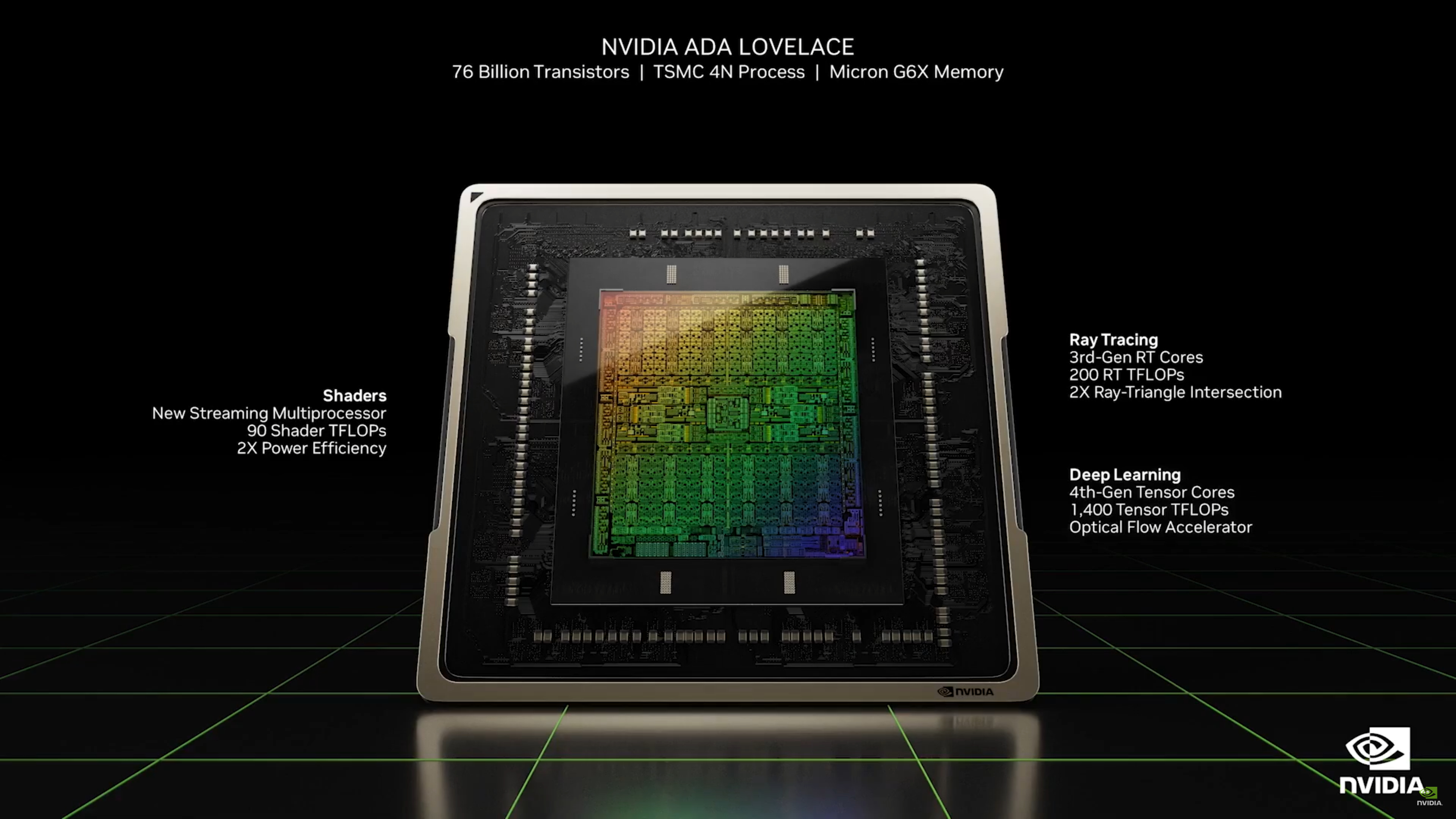

Core counts and clock speeds are all known at this point. The RTX 4090 will have 128 SMs with a 2,520 MHz boost clock, coupled with 24GB of GDDR6X memory running at 21 Gbps on a 384-bit interface. On the surface, the memory configuration looks unchanged from the RTX 3090 Ti, and that's basically correct. However, much like AMD did with RDNA 2’s Infinity Cache, Nvidia will apparently pack up to 96MB of L2 cache into AD102, compared to just 6MB of L2 cache in GA102. The RTX 4090 disables 24MB of that L2 cache, leaving 72MB.
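The bandwidth row in the table follows directly from the per-pin data rate and the bus width: each pin moves the quoted number of gigabits per second, and dividing by 8 converts bits to bytes. A minimal sketch of that arithmetic (the helper name is ours, purely for illustration):

```python
def peak_bandwidth_gbps(data_rate_gbps, bus_width_bits):
    """Peak memory bandwidth in GB/s: per-pin rate (Gbps) x pins / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8

# (VRAM speed in Gbps, bus width in bits), taken from the spec table
cards = {
    "RTX 4090": (21, 384),
    "RTX 4080 16GB": (22.4, 256),
    "RTX 4080 12GB": (21, 192),
    "RTX 3090 Ti": (21, 384),
}

for name, (rate, width) in cards.items():
    print(f"{name}: {peak_bandwidth_gbps(rate, width):.1f} GB/s")
```

Since the RTX 4090 and RTX 3090 Ti share the same data rate and bus width, they land on the same 1,008 GB/s, which is why the larger L2 cache matters so much for Ada.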

Core counts receive a greater than 50% boost over Ampere, with 128 SMs instead of only 84 SMs maximum — and there’s still room for a 140–144 SM model in the future, perhaps a new Titan RTX, or at least a future RTX 4090 Ti. Core counts alone would provide a big jump in performance, but Nvidia has also tuned Ada to reach higher clocks, again similar to what AMD did with RDNA 2, and the result is the expected 2.5–2.6 GHz boost clocks on the announced models. That’s nearly 50% more than the RTX 3090’s 1,695 MHz boost clock and 35% higher than the RTX 3090 Ti’s 1,860 MHz — and Jensen says that Nvidia has hit clock speeds in excess of 3.0 GHz with overclocking in its labs. (Hello, 800W custom RTX 4090 cards?)
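The percentages above are simple ratios of the quoted specs. A quick check in Python (the helper name is our own):

```python
def pct_increase(new, old):
    """Percentage by which new exceeds old."""
    return (new / old - 1) * 100

print(f"SMs, 128 vs 84: +{pct_increase(128, 84):.0f}%")                          # +52%
print(f"Boost clock vs RTX 3090 (1,695 MHz): +{pct_increase(2520, 1695):.0f}%")  # +49%
print(f"Boost clock vs RTX 3090 Ti (1,860 MHz): +{pct_increase(2520, 1860):.0f}%")  # +35%
```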

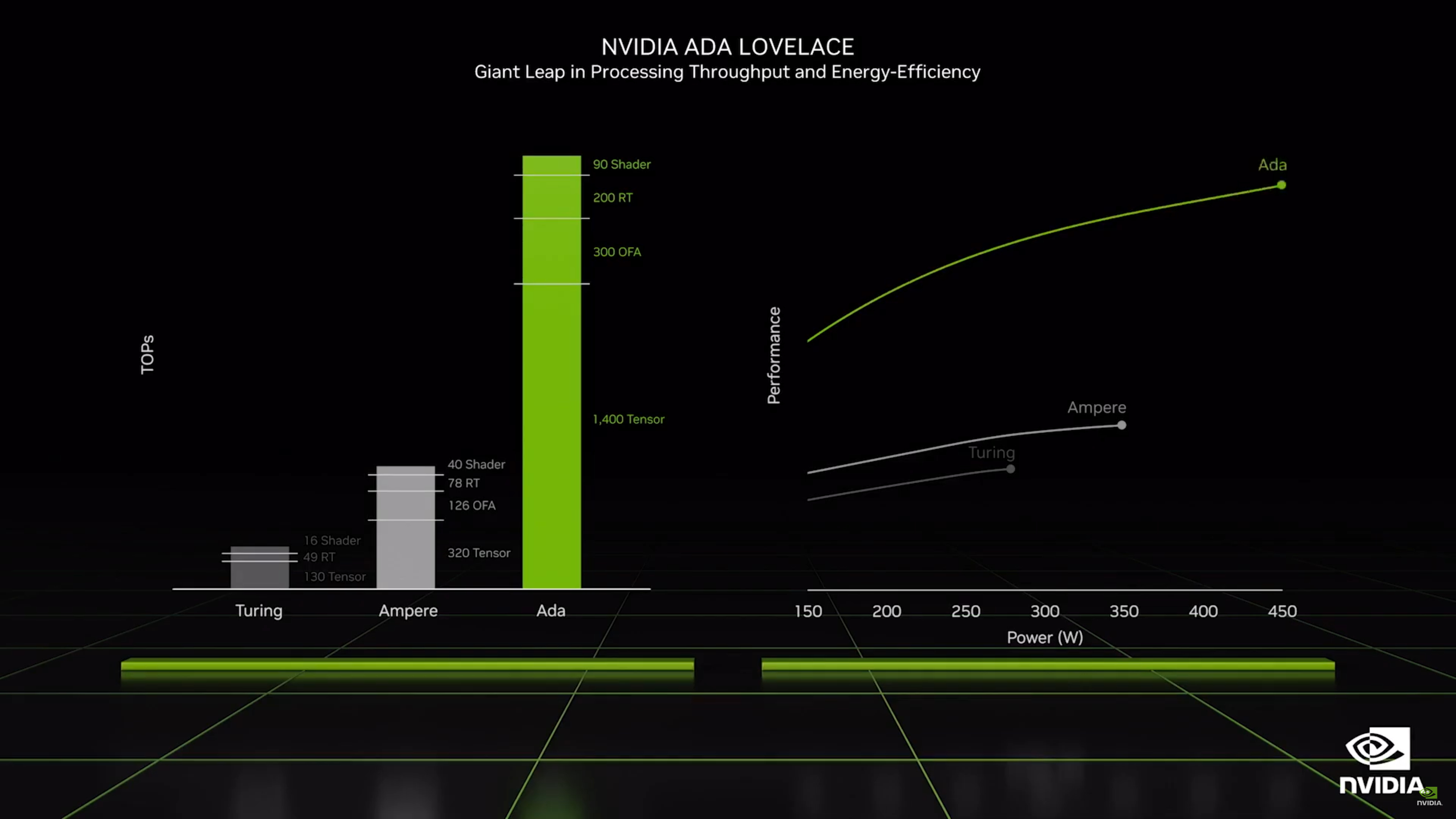

Combined, the GPU shader counts and clock speeds yield the theoretical maximum performance figure. RTX 3090 was rated at 35.6 teraflops, RTX 3090 Ti bumped that up to 40 teraflops, and now the RTX 4090 pushes the needle up to 82.6 teraflops — more than double the compute, in other words. While teraflops alone can be a somewhat meaningless figure, it’s still useful within similar architectures, and we’re looking at perhaps the largest generational jump in performance that we’ve seen from Nvidia since the GeForce brand first came into being.
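The FP32 teraflops figures come from the usual formula: shader count × 2 FLOPs per clock (one fused multiply-add counts as two operations) × boost clock. A minimal sketch; the RTX 3090's 10,496 shaders aren't listed in the table above but are that card's official spec:

```python
def fp32_tflops(shaders, boost_mhz):
    """Peak FP32 TFLOPS, counting each fused multiply-add (FMA) as two FLOPs."""
    return shaders * 2 * boost_mhz * 1e6 / 1e12

print(f"RTX 4090:    {fp32_tflops(16384, 2520):.1f}")  # 82.6
print(f"RTX 3090 Ti: {fp32_tflops(10752, 1860):.1f}")  # 40.0
print(f"RTX 3090:    {fp32_tflops(10496, 1695):.1f}")  # 35.6
```

This is a theoretical ceiling; it assumes every shader issues an FMA every clock, which real games never sustain.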

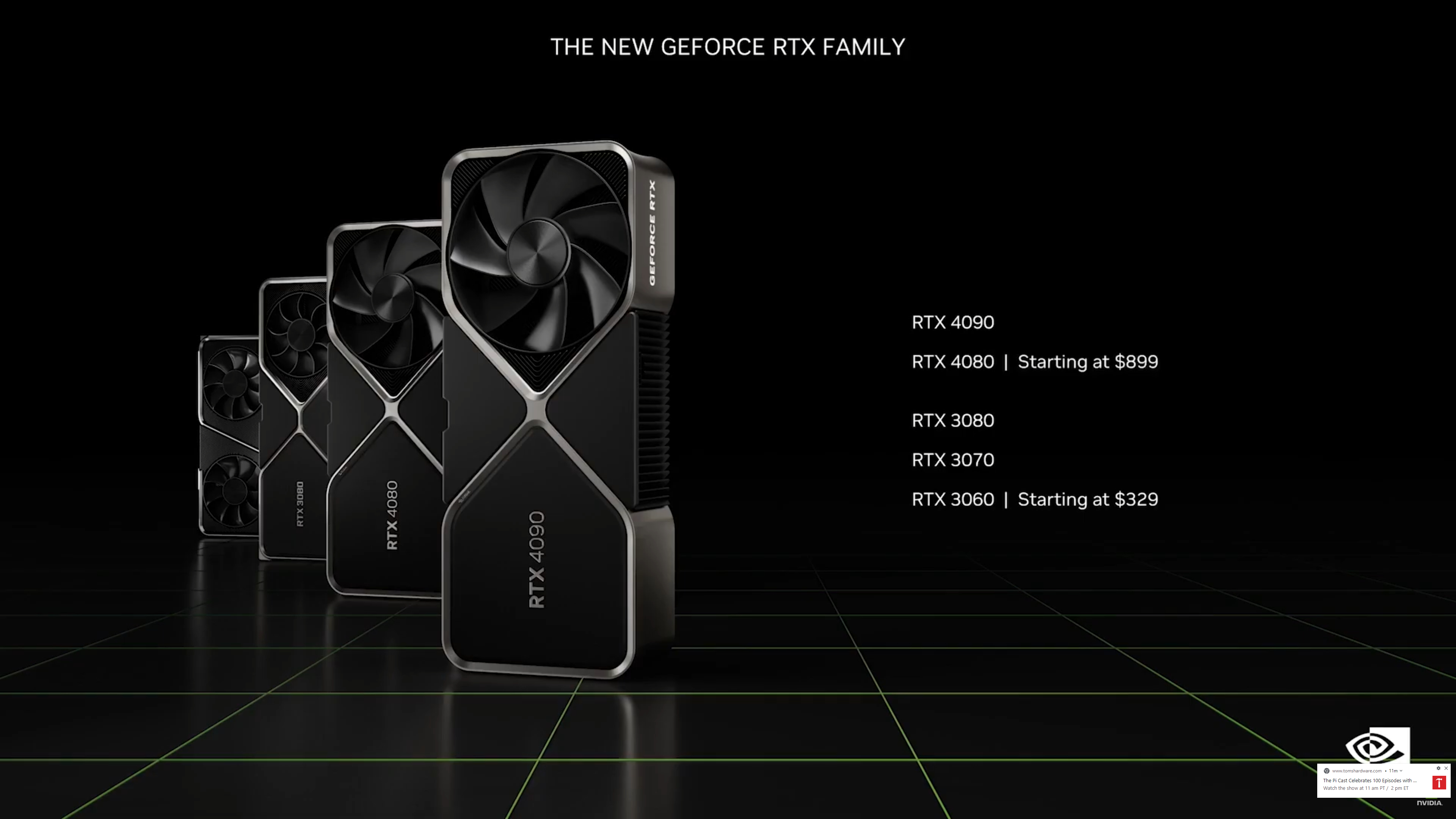

It's not just RTX 4090, either, though some will undoubtedly be unhappy with the launch prices for the RTX 4080 16GB and RTX 4080 12GB models. Yes, much to my chagrin, Nvidia will have two different 4080 SKUs separated by memory capacity. Based on the specs alone, these will deliver wildly differing performance levels, probably larger than the gap between the RTX 3080 Ti and the RTX 3080 10GB. Of course, the price difference should make it immediately clear which model you’re buying, with the 16GB card starting at $1,199 and the 12GB model starting at $899. On paper, it looks as though the 16GB card will deliver about 20% more performance, give or take.
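Using only the table's paper specs, the gap between the two RTX 4080 models works out as follows. This is plain ratio arithmetic, and real games won't scale perfectly with either compute or bandwidth:

```python
# Paper specs from the table: FP32 TFLOPS, peak bandwidth (GB/s), launch price (USD)
rtx_4080_16gb = {"tflops": 48.7, "bandwidth": 717, "price": 1199}
rtx_4080_12gb = {"tflops": 40.1, "bandwidth": 504, "price": 899}

def advantage_pct(metric):
    """How much higher the 16GB card's figure is than the 12GB card's, in percent."""
    return (rtx_4080_16gb[metric] / rtx_4080_12gb[metric] - 1) * 100

for metric in ("tflops", "bandwidth", "price"):
    print(f"{metric}: +{advantage_pct(metric):.0f}%")
# tflops: +21%, bandwidth: +42%, price: +33%
```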

We're also looking at three separate chips: AD102, AD103, and AD104. Note that Nvidia hasn’t specified an exact launch date for the RTX 4080 cards other than "November." Perhaps that will be early November, given AMD now plans to announce RDNA 3 GPUs on November 3, which sets a pretty firm time limit. We’ll probably see RTX 4080 GPUs arrive right before whenever AMD’s RX 7900 XT retail launch occurs.

The bigger question will be real-world gains, of course, and the lack of substantial gains in memory bandwidth does raise some flags. However, keep in mind that when AMD basically slapped a bunch of L3 cache onto its RDNA design and then boosted clock speeds, cards like the RX 6600 XT were able to stay ahead of the previous generation RX 5700 XT, which had nearly twice the memory bandwidth, and that was with only 32MB of cache on Navi 23. Up to 72MB of L2 cache should give Nvidia cache hit rates of 50% or more, which would effectively double the usable memory bandwidth.
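Why a 50% hit rate means "double": if a fraction h of memory requests is served from L2, only (1 − h) of the traffic reaches GDDR6X, so the same DRAM bandwidth can sustain 1 / (1 − h) times as much total traffic. This is a deliberately simplified model that ignores L2 bandwidth limits, latency, and write-back traffic; it is not a figure Nvidia has published:

```python
def effective_bandwidth(dram_gbps, l2_hit_rate):
    """Total request bandwidth sustainable when only L2 misses go to DRAM (simplified)."""
    if not 0 <= l2_hit_rate < 1:
        raise ValueError("hit rate must be in [0, 1)")
    return dram_gbps / (1 - l2_hit_rate)

# RTX 4090's 1,008 GB/s of GDDR6X bandwidth at a few hypothetical hit rates
for hit_rate in (0.0, 0.25, 0.5, 0.6):
    print(f"hit rate {hit_rate:.0%}: {effective_bandwidth(1008, hit_rate):.0f} GB/s")
```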

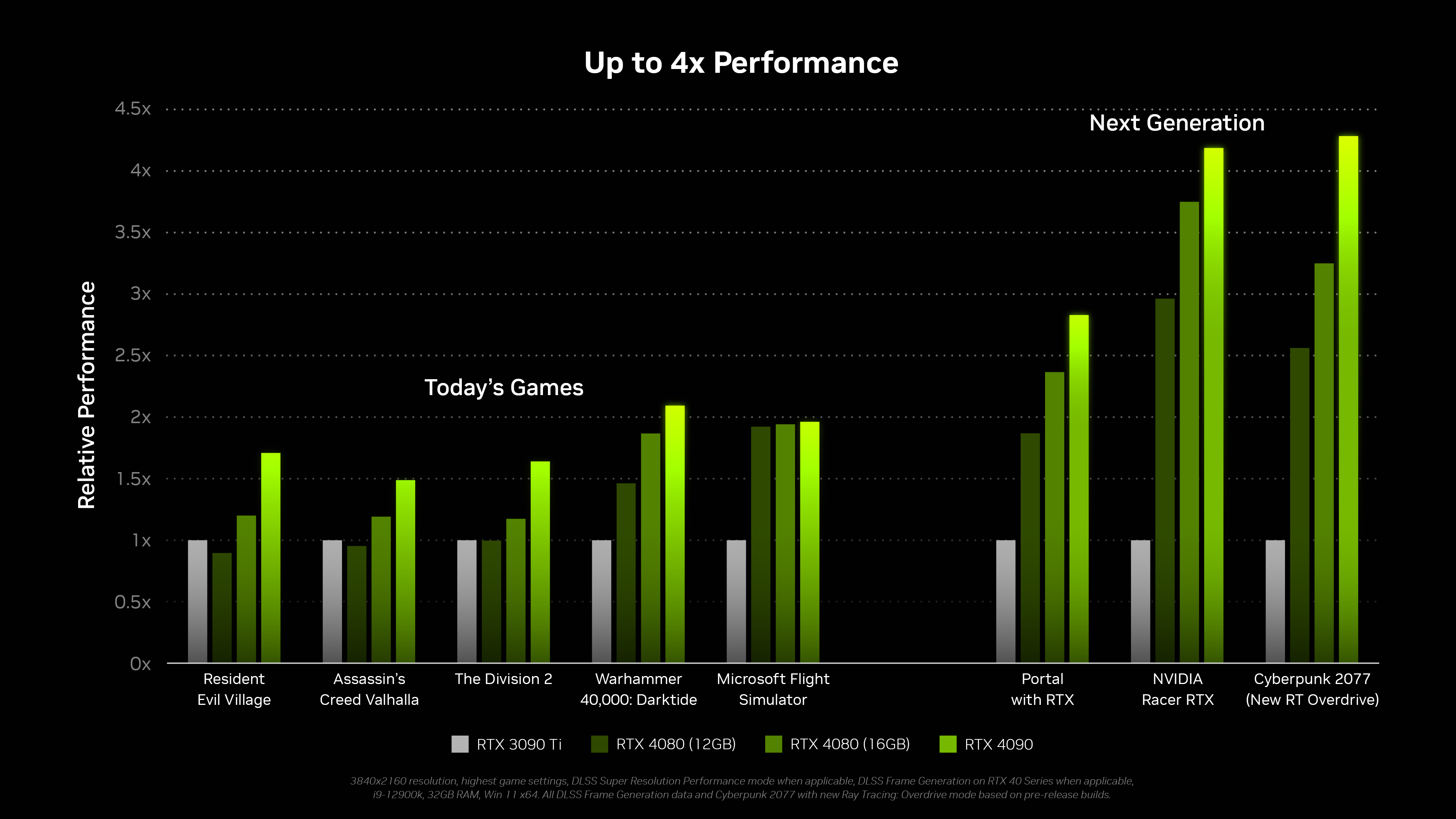

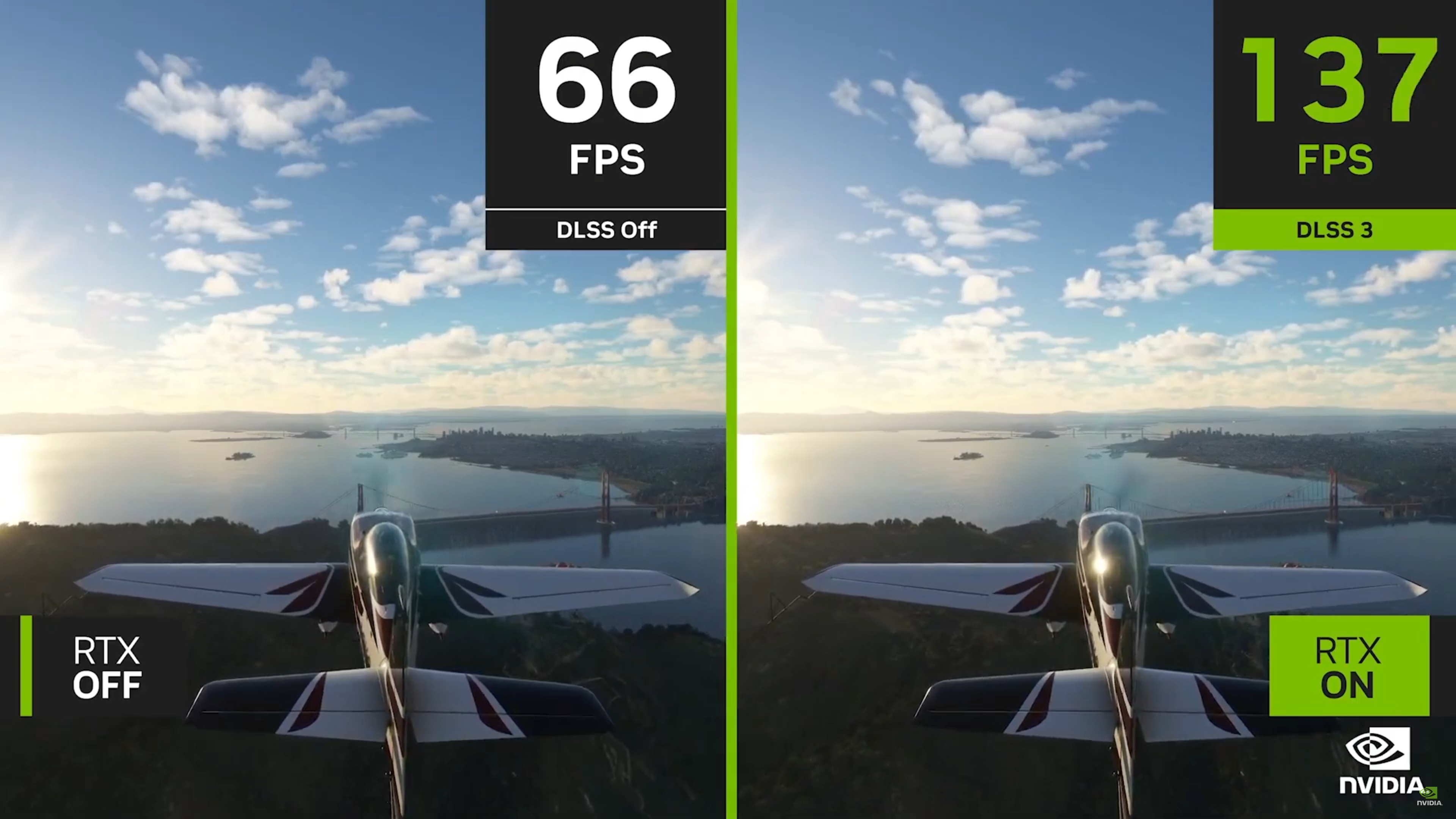

Theoretical performance looks exceptionally strong, but what about the rest of the package? Nvidia provided the above benchmark results, comparing the three new GPUs against the existing RTX 3090 Ti. In traditional games, on the left, the RTX 4080 12GB ranges from slightly slower than the 3090 Ti to quite a bit faster. Some of the above testing was done with DLSS 3 enabled, which is only available on RTX 40-series cards and gives them a sizeable performance advantage.

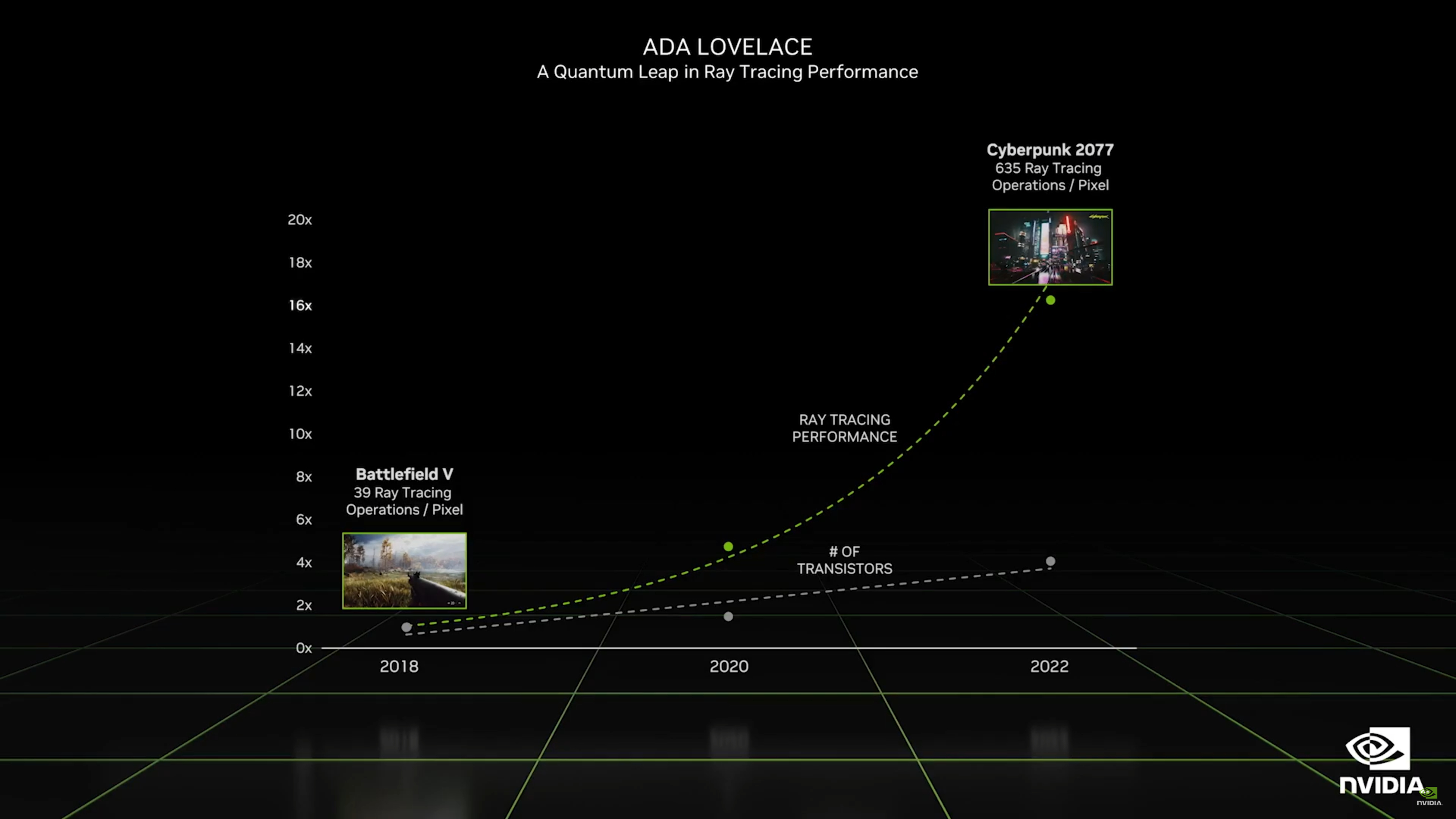

On the right, that's certainly the case. Racer RTX, Portal RTX, and Cyberpunk 2077 "RT Overdrive" all crank up the ray tracing effects to new extremes. We don't have baseline fps figures, but the RTX 4080 12GB is over twice as fast as the 3090 Ti in some cases, while the RTX 4090 is up to four times as fast. The RTX 3090 Ti was still allowed to use DLSS 2, but the comparison is a bit apples and oranges.

Let's get into the architectural updates briefly for some additional background.

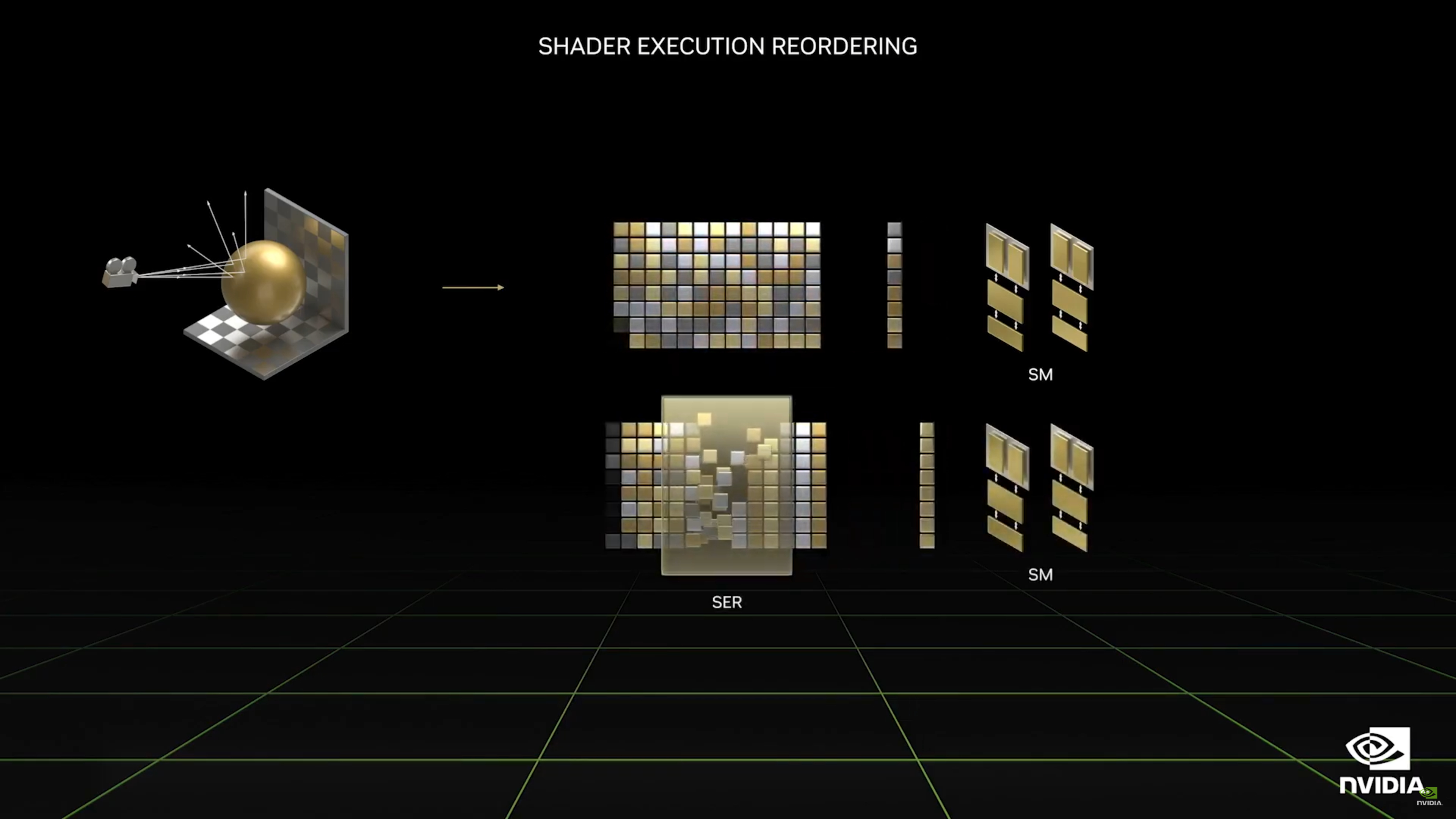

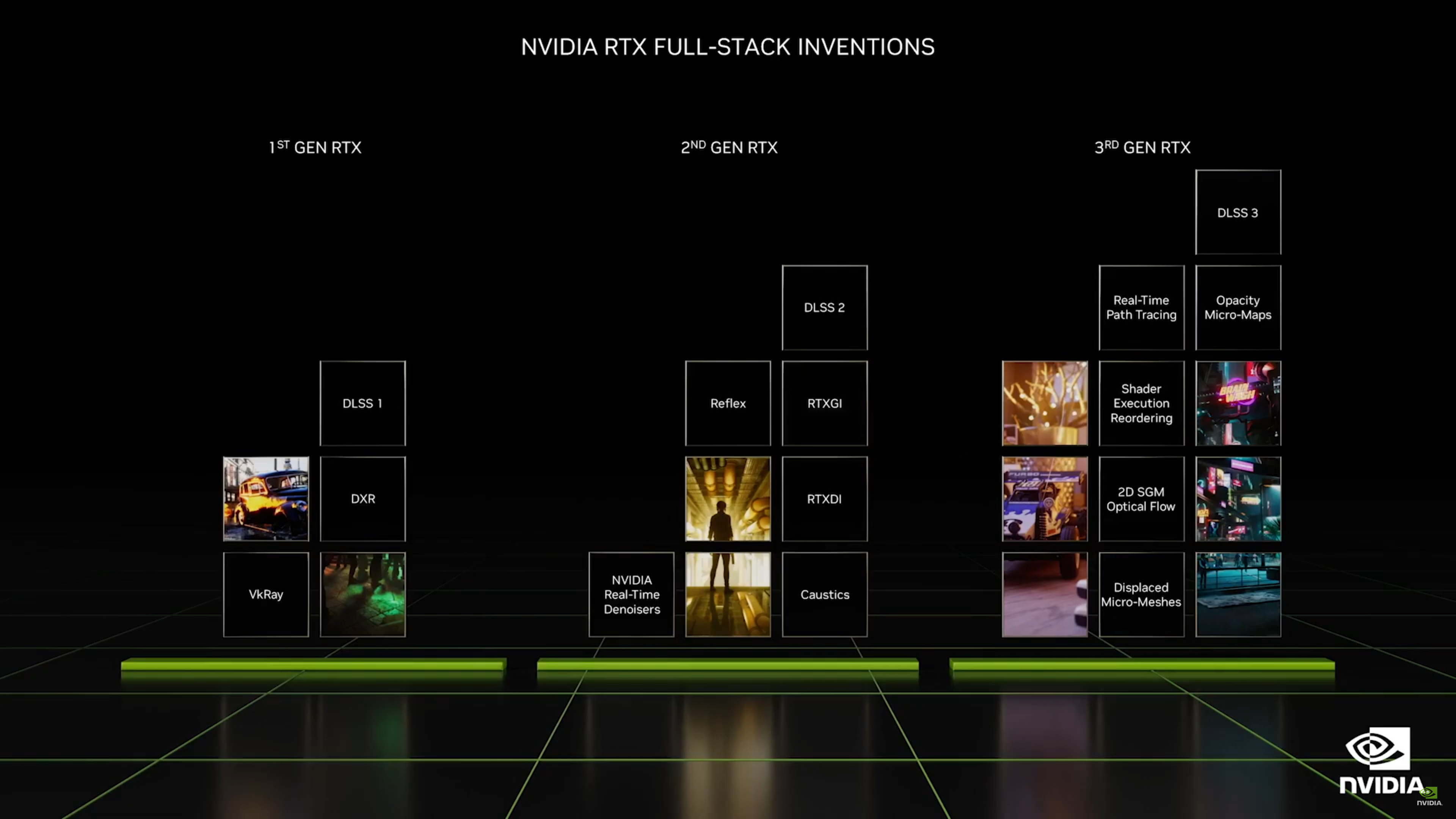

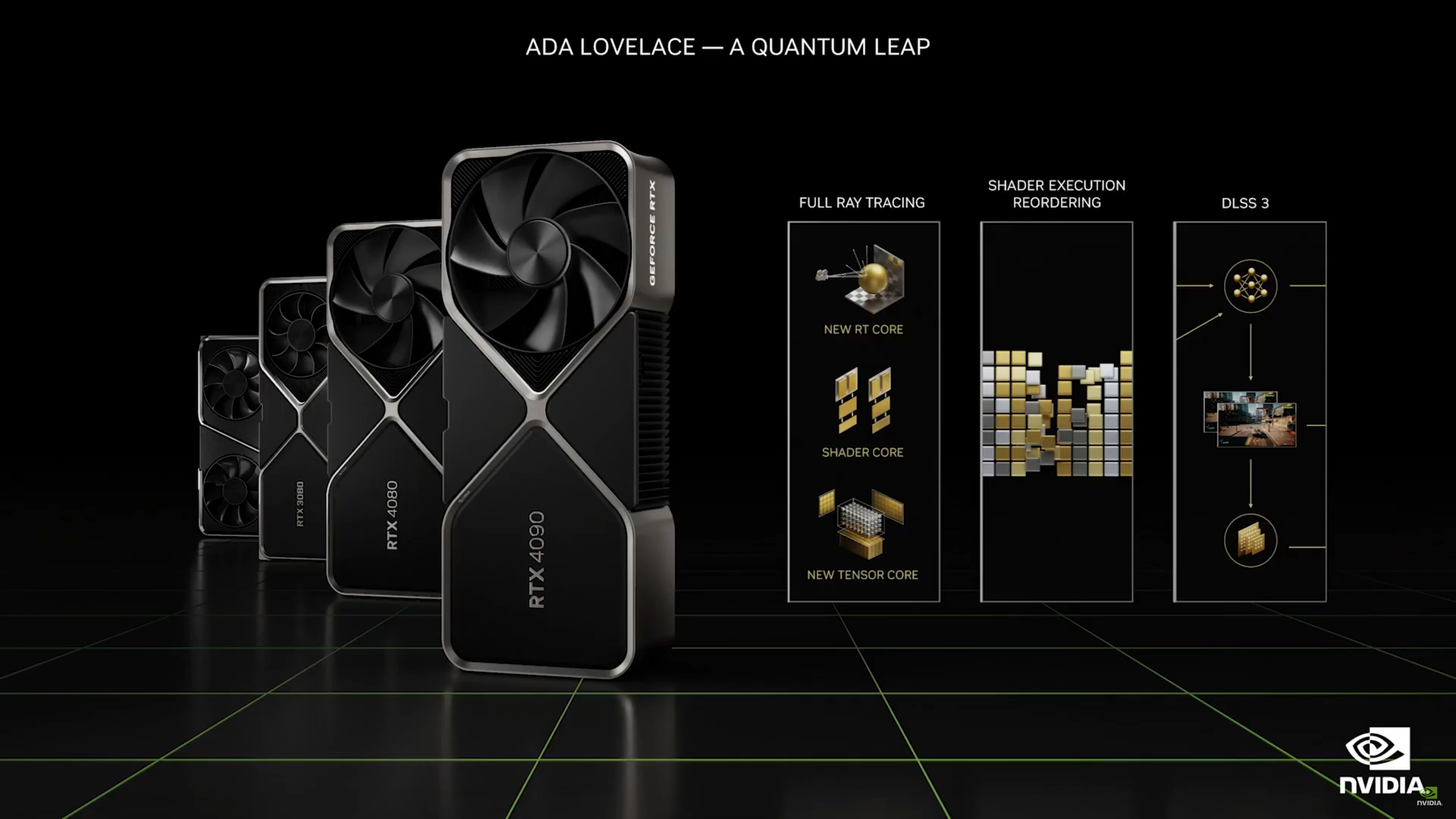

Core counts and clock speeds have improved, but more importantly, there are architectural updates that can further boost performance. On the GPU shaders, Nvidia says Ada cores offer up to twice the power efficiency. The shaders also support a new feature called SER (Shader Execution Reordering), which appears to mostly help with ray tracing performance but may also be useful in traditional rendering modes.
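Nvidia hasn't detailed how SER works internally, but the general motivation for reordering is coherence: GPU threads execute in groups (warps), and a warp whose threads need different shaders has to run those shaders one after another. A toy model of that effect, assuming a hypothetical 4-thread warp and made-up shader IDs (this is an illustration of the idea, not Nvidia's scheduler):

```python
def warp_passes(shader_ids, warp_size):
    """Count shader passes: a warp runs once for each distinct shader its threads need."""
    passes = 0
    for start in range(0, len(shader_ids), warp_size):
        warp = shader_ids[start:start + warp_size]
        passes += len(set(warp))
    return passes

WARP_SIZE = 4  # toy value; Nvidia GPUs actually use 32-thread warps

# Hypothetical hit-shader IDs for 16 rays that struck four different materials.
hits = [0, 1, 2, 3] * 4

print("Original order:", warp_passes(hits, WARP_SIZE))               # 16
print("Reordered by shader:", warp_passes(sorted(hits), WARP_SIZE))  # 4
```

Sorting is the crudest possible reordering; the point is only that grouping threads by the work they need cuts the number of serialized passes, which is why incoherent ray tracing workloads stand to gain the most.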

Moving on to the RT cores themselves, Nvidia has added more ray/triangle intersection hardware, allowing for up to twice the throughput in that area. A new opacity micro-map engine also speeds up ray tracing for transparent textures. Similarly, the displacement micro-mesh engine apparently can add geometry "richness" without the BVH build and storage cost, meaning fewer triangles in the BVH but more in the final render. Nvidia says that the 3rd gen RT cores can build the BVH structure 10 times faster than the 2nd gen cores while using one-twentieth of the memory (5% of the VRAM requirement).

Note that SER, OMM, and DMM all require developers to use NVAPI extensions to access them. That's unfortunate, as it means they're proprietary (for now) and won't benefit existing games.

Finally, the Tensor cores have been upgraded with support for the FP8 data types introduced with Hopper. That effectively doubles the compute throughput, assuming the workload can get by with the reduced precision. Note that the number of Tensor cores per SM appears unchanged, and throughput per Tensor core in FP16 operations remains the same. But the new Tensor cores are apparently part of the requirement for DLSS 3.
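To get a feel for what "reduced precision" means, here is a sketch that rounds values to the nearest number representable in FP8 E4M3 (4 exponent bits, 3 mantissa bits), one of the two FP8 formats Hopper introduced. It assumes round-half-to-even and saturation at the format's largest finite value of 448; it's an illustration, not how the hardware actually converts values:

```python
import math

E4M3_MAX = 448.0     # largest finite E4M3 value
E4M3_MIN_EXP = -6    # exponent of the smallest normal E4M3 number
MANTISSA_BITS = 3

def to_fp8_e4m3(x):
    """Round x to the nearest FP8 E4M3 value (round half to even, saturating)."""
    if x == 0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    mag = abs(x)
    if mag >= E4M3_MAX:
        return sign * E4M3_MAX
    # Exponent of mag, floored at the normal minimum so tiny values become subnormals.
    exp = max(math.floor(math.log2(mag)), E4M3_MIN_EXP)
    step = 2.0 ** (exp - MANTISSA_BITS)  # spacing between neighboring representable values
    q = round(mag / step) * step         # Python's round() rounds half to even
    return sign * min(q, E4M3_MAX)

for v in (1.0, 0.1, 3.14159, 1000.0):
    print(v, "->", to_fp8_e4m3(v))
```

With only three mantissa bits, 3.14159 becomes 3.25 and 0.1 becomes 0.1015625, which is fine for many neural network workloads but far too coarse for general-purpose math.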

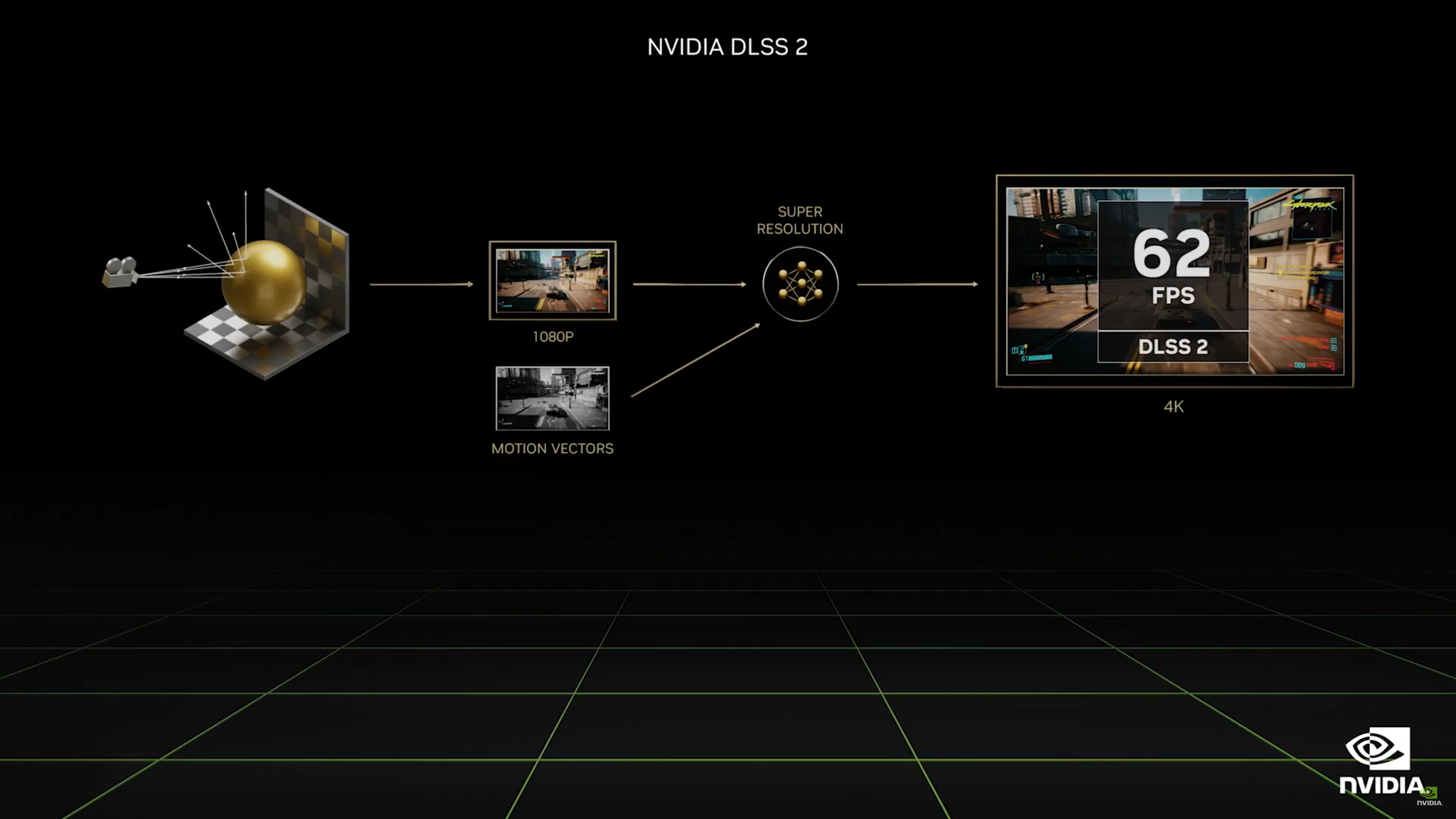

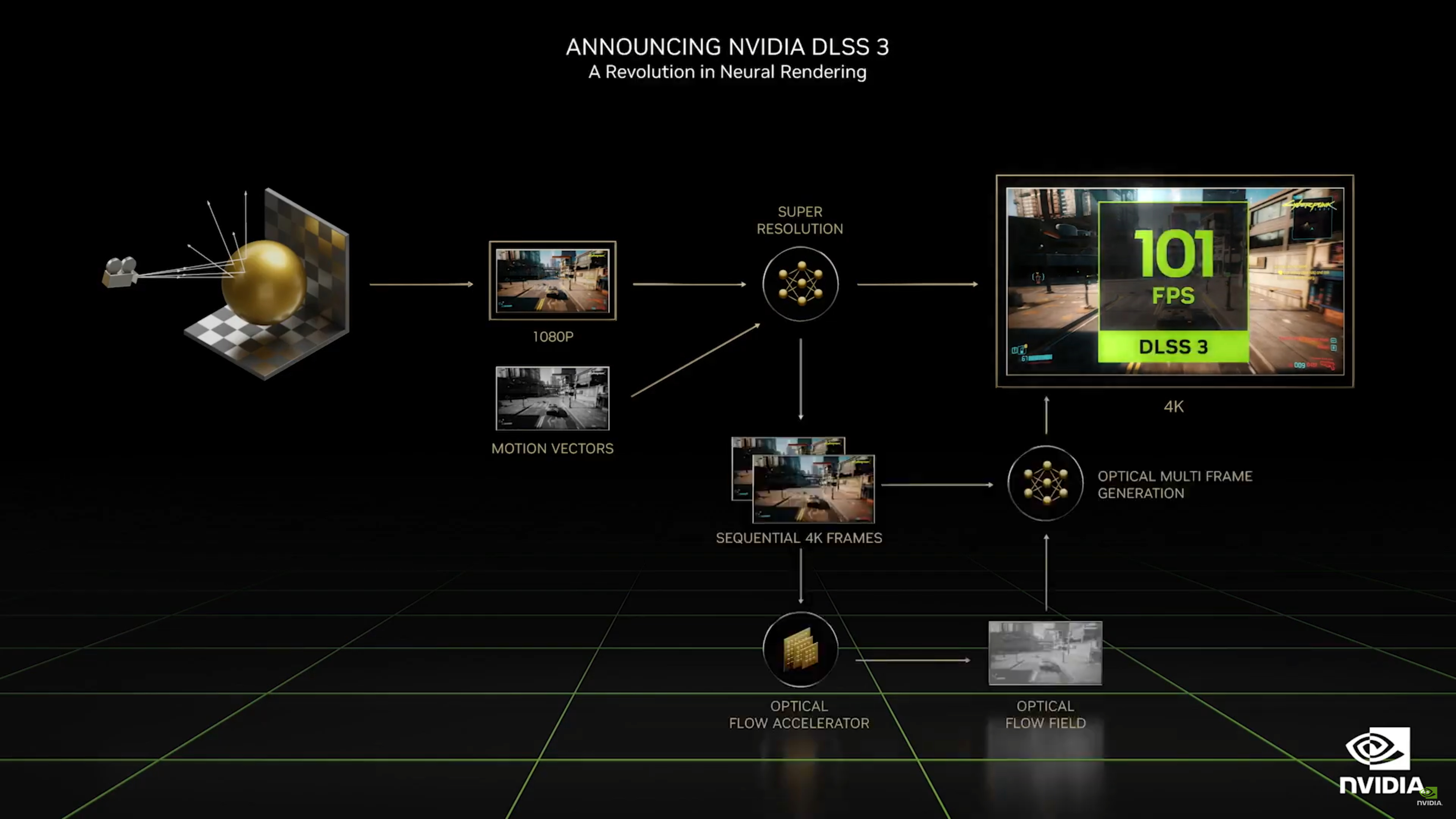

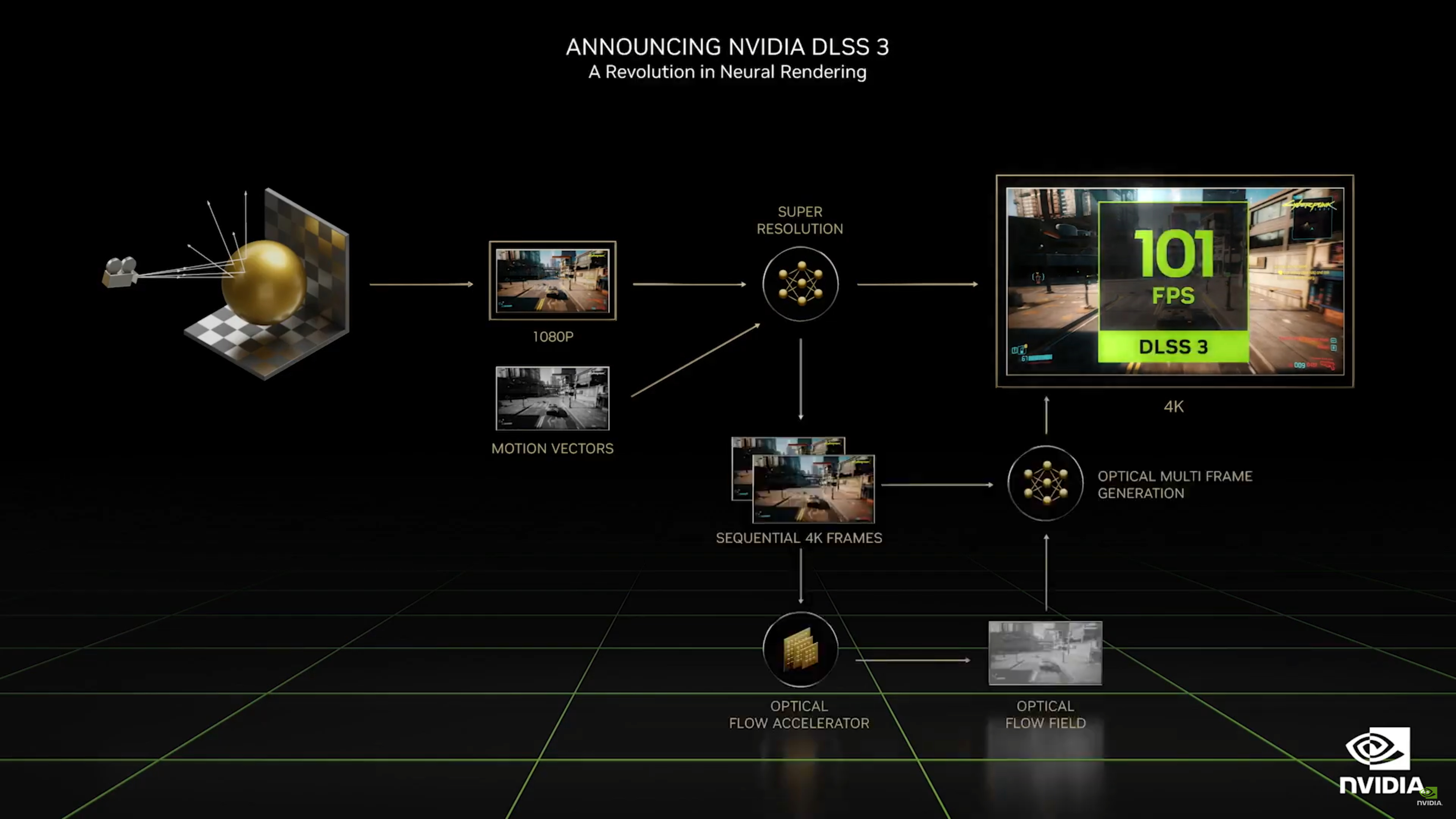

While the architectural updates are great, Nvidia has also been hard at work on software updates. DLSS 3 is now official, with support coming in several of the games shown during the keynote and more than 35 others on the way. Nvidia showed DLSS 3 delivering a 63% performance boost over DLSS 2 in Cyberpunk 2077, presumably with similar visual fidelity in the final output.

We haven't been able to test DLSS 3, obviously, so we'll have to wait and see how it fares, but DLSS 2 has already set a high bar for overall upscaling quality. DLSS 3 takes the existing inputs (frame data, motion vectors, depth buffer, and the previous frame or frames) and adds the output of a new Optical Flow Accelerator. (It's actually not entirely new, as Ampere GPUs had a slower OFA, but apparently it's not sufficient for DLSS 3, at least not yet.)

DLSS 3 and the OFA can output two frames from a single rendered source image by looking at the previous data. So in theory, it could double the framerate, and in motion it will probably make games look smoother, though we do wonder how individual frame comparisons will stand up. In some ways, it sounds a bit like asynchronous space warp (ASW) from VR, given some AI enhancement and applied alongside upscaling, which actually sounds quite clever if you're looking to boost framerates.

The OFA is a piece of fixed-function hardware dedicated to generating the optical flow field, which is sort of like a motion vector map on steroids. The Ada OFA has a rated performance of 305 teraops, whereas Ampere GPUs had an OFA rated for 126 teraops (integer operations of some form). Again, that suggests a future update could enable DLSS 3 on Ampere GPUs, though perhaps with more of a quality loss.
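Nvidia hasn't published how DLSS 3 uses the flow field, but the basic idea of flow-based frame generation can be shown with a toy 1D example: move each pixel part of the way along its flow vector to estimate an in-between frame. The function and data below are hypothetical, and the toy ignores almost everything that makes the real problem hard, apart from showing the holes that appear where a moving object used to be:

```python
def warp_halfway(frame, flow, t=0.5):
    """Forward-warp a 1D frame by t * flow to estimate an intermediate frame.

    frame: pixel values of the earlier rendered frame (a toy 1D "image").
    flow:  per-pixel displacement, in pixels, toward the next rendered frame.
    Returns a list where uncovered positions are None (disocclusion holes).
    """
    out = [None] * len(frame)
    # Write static pixels first and moving pixels last: a crude stand-in for
    # deciding which surface ends up in front when two pixels land on one spot.
    for x in sorted(range(len(frame)), key=lambda i: abs(flow[i])):
        target = x + round(t * flow[x])
        if 0 <= target < len(out):
            out[target] = frame[x]
    return out

# A bright pixel (9) at x=1 moves 4 pixels right between two rendered frames.
frame = [0, 9, 0, 0, 0, 0, 0, 0]
flow = [0, 4, 0, 0, 0, 0, 0, 0]
print(warp_halfway(frame, flow))  # [0, None, 0, 9, 0, 0, 0, 0]
```

The `None` at x=1 is exactly the kind of gap a real frame generator has to fill convincingly, which is where the extra image data and the AI model come in.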

For now, DLSS 3 will only work with RTX 40-series (and later) GPUs, which is certainly a concern. Game developers that support DLSS 3 will also get DLSS 2 "super resolution" support by default, and there will probably be a "Frame Generation" tickbox to enable the extra performance that DLSS 3 offers. Hopefully, if they want to cater to a wider set of gamers, developers will also see fit to add FSR 2.0 and XeSS support. That probably won't happen, but we can dream.

It's worth noting that up until now, all versions of DLSS have worked on every RTX card, from the lowly RTX 2060 and RTX 3050 all the way up to the RTX 3090 Ti. There's a huge discrepancy in potential Tensor core compute on those GPUs, however, with the RTX 2060 only providing about 52 teraflops of FP16 while the 3090 Ti (with sparsity) has up to 320 teraflops. Now, with FP8 on RTX 40-series, even a hypothetical 20 SM RTX 4050 would provide around 200 teraflops of compute, while the RTX 4090 has up to 1.3 petaflops of throughput.
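The hypothetical RTX 4050 estimate is back-of-the-envelope scaling from the RTX 4090's rated FP8 throughput (with sparsity) in the spec table: divide by SM count and boost clock to get a per-SM, per-clock rate, then multiply back out. The 20 SM configuration and its 2.5 GHz clock are assumptions, not announced specs:

```python
# RTX 4090 FP8 Tensor throughput with sparsity, from the spec table.
RTX_4090_FP8_TFLOPS = 1321
RTX_4090_SMS = 128
RTX_4090_BOOST_GHZ = 2.52

# Operations per SM per clock cycle implied by the rated figure.
ops_per_sm_clock = RTX_4090_FP8_TFLOPS * 1e12 / (RTX_4090_SMS * RTX_4090_BOOST_GHZ * 1e9)

def fp8_tflops(sms, boost_ghz):
    """Scale the RTX 4090's per-SM FP8 rate to another SM count and clock."""
    return ops_per_sm_clock * sms * boost_ghz * 1e9 / 1e12

print(f"Per SM per clock: {ops_per_sm_clock:.0f}")
print(f"Hypothetical 20 SM card at 2.5 GHz: {fp8_tflops(20, 2.5):.0f} TFLOPS")
```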

Pricing isn’t going to win any fans for Nvidia, as it’s bumping up launch prices by $100 to $500 compared to the RTX 3090 ($1,499) and RTX 3080 ($699) back in 2020. That’s not as bad as it could have been, and clearly Nvidia is trying to protect sales of the existing RTX 30-series GPUs for the time being.

At least it’s not the $1,999 some had anticipated. That was the RTX 3090 Ti's launch price, and it later proved unsustainable after crypto mining profitability collapsed, ultimately leading to price cuts and unhappy partners. EVGA announced last week that it would exit the graphics card business largely due to Nvidia’s tactics. We can’t help but think the RTX 3080 Ti and 3090 Ti pricing shenanigans of the past year played a big role.

Availability of the RTX 4090 is scheduled for October 12, 2022. That’s about a week ahead of when Intel’s Raptor Lake CPUs are expected to launch, and of course, AMD Ryzen 7000-series Zen 4 CPUs will be available next week. That means anyone looking to upgrade to a completely new PC will have plenty of options soon.

Will there actually be a sufficient supply of RTX 4090 and 4080 cards to meet demand, though? That remains to be seen, but even without miners trying to scoop up cards, we expect the RTX 4090 to sell out for at least the first few weeks. Let's hope scalpers don't get in on the action and ruin it for everyone. As for the RTX 4080, it will arrive the following month, and retail availability will again be important for potential customers.


What about lower-spec RTX 40-series cards, the stuff that won't cost $900 or more? Unfortunately, the cards most people are likely waiting for haven't been revealed. We've heard rumors of RTX 4070 and RTX 4060, but so far we've only seen AIB images for the RTX 4090 series, not the 4080, and nothing lower down the pecking order. We've also provided some insight into why the RTX 4090 and 4080 cost so much, not to excuse Nvidia but to explain more of what's going on.

Given that Nvidia has stated it expects to have excess GeForce gaming card inventory until perhaps April 2023 (you can hear this in the Q2 FY23 Earnings Report), there are a lot of RTX 30-series cards still waiting to be sold. And that "April 2023" estimate is probably a lot better than what will actually happen, which means Nvidia could be facing an oversupply of RTX 30-series GPUs for almost another year!

Since mining pushed Nvidia to prioritize the larger, faster chips like GA102 over smaller chips like GA104, a lot of those cards are probably RTX 3080 and 3090 variants. Nvidia doesn't want to kill sales of those cards by releasing a newer, faster, and cheaper card, which explains why we're only hearing about RTX 4090 and 4080 right now, and why prices are generally creeping up.

But Nvidia has a big problem, namely AMD. AMD might be coming to market with RDNA 3 and the RX 7900 XT a bit later than Nvidia is with the RTX 4090. Still, with a GPU market share roughly one-quarter the size of Nvidia's, plus CPU and console product lines that can absorb its wafer allocation and keep it out of a massive GPU oversupply situation, AMD is in a far better position to react. AMD has long said that its RX 7000-series RDNA 3 GPUs would come to market this year, and it's sticking to that.

We don't know if AMD will deliver better performance than Nvidia, but the chiplet design of RDNA 3 should mean it has far more ability to undercut Nvidia on prices. Who knows, we could end up with the reverse of the RX 580/570 situation in 2018, where you could pick up those AMD GPUs for a song. RTX 3050 for under $200 and RTX 3060 for under $250? That would be a nice change of pace. Black Friday could actually be quite exciting this year for PC gamers!

With the official reveal now out of the way, we're looking forward to testing all of the new graphics cards slated to launch in the coming months. Again, given the oversupply currently happening on existing GPU lines, the new parts will hopefully be readily available at retail — a stark contrast to the past two years. Still, even with the high prices, don't be shocked when all of the RTX 4090 and RTX 4080 GPUs are sold out at launch. It happens every time there's a new Nvidia architecture.

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


cknobman Those prices can suck it.

Crypto mining is kaput and Nvidia is going to have a hard time selling this stuff at such astronomical pricing.

-Fran- The 3070 replacement was called 4080 12GB and priced $900.

Welp, nVidia not impressing me with anything here today... Maybe DLSS 3 sounds cool, but not being backwards compatible (acceleration-wise) takes brownie points. I wonder why they touched so little on raster and perf/watt, lol.

Regards.

bigdragon The high prices of the 40-series make the 30-series look way more attractive until you consider the best 30-series cards are still double or more the price of a console. Best play is to sit and wait Nvidia out. Gaming hardware shouldn't be a luxury that costs more than a weeklong trip to the Caribbean. Those 40-series prices will come down, and the 30-series prices will continue to fall too. Just wait.

I do wonder what the real-world performance of the 4080 16GB is compared to the 3090. Specifically, Blender performance and a few games. Synthetic and vendor-provided benchmarks don't give a clear indication of the performance the actual user sees.

Giroro I'm going to wait for Nvidia to flesh out the rest of their lineup. I hear they are going to have a midrange RTX 4080 8GB at $799 and the entry level RTX 4080 6GB at $699.

But personally, I need a low end rig to run OBS, so I'm super excited for their lowest end RTX 40xx card, the budget gamer RTX 4080 4GB. It's going to be a total steal at $599. That's $100 cheaper than the launch price of the RTX 3080!

Geef It's too bad they can't use the extra space they have for more Ray Tracing cores. Instead of giving GPU Shader Cores a boost, they decide to throw an extra 5k Ray Tracing cores in there. I am sure they are totally different so they could never do that but whatever.

SkyBill40

> cknobman said: Those prices can suck it.
>
> Crypto mining is kaput and Nvidia is going to have a hard time selling this stuff at such astronomical pricing.

Actually, no, they won't have ANY trouble. Book it.

jkflipflop98 I guess they're hoping crypto will make a comeback. . . because there's no way we're spending $1700 on a graphics card.

I greatly look forward to watching their little pricing scheme completely collapse over the coming months.

JarredWaltonGPU

> SkyBill40 said: Actually, no, they won't have ANY trouble. Book it.

Short-term, I absolutely agree. I know a lot of gamers who will queue up for the new uber-GPU. The same thing happened with the RTX 3090 at $1499 — except they were all sold out, and part of that was scalpers and part of it was prospective miners. Hopefully, with the glut of 30-series GPUs hitting the market, we see less success from the scalping zone. Basically, a scalper needs to be able to sell a card for 25% more than they pay for it to come out ahead. Which, save us Lord Gaben if there are a bunch of people paying $2,000+ for RTX 4090 cards. But there probably will be, especially if the supply is in the tens of thousands at most.

domih On videocardz, the thread EVGA ends relationship with NVIDIA reached 757 comments after a few days. The thread NVIDIA debuts “Ada” GeForce RTX 40 series is already at 785 comments and the day has not even ended yet.

I guess the NVIDIA astroturfers are going to be extremely busy for weeks :ROFLMAO: