Nvidia’s GeForce RTX 3060 Ti Rumored to Launch Next Month

Nvidia reportedly preps GeForce RTX 3060 Ti to steal RDNA 2's thunder

If the latest rumor (via VideoCardz) is to be believed, Nvidia may launch a mid-range GeForce RTX 3060 Ti graphics card as soon as next month, which is a lot earlier than expected. The card might offer around 80% of the GeForce RTX 3070’s performance, which would stack up pretty nicely on the GPU hierarchy, though its price remains to be seen.

With the launch of its GeForce RTX 30-series ‘Ampere’ graphics cards, Nvidia significantly changed its rules of engagement. Firstly, the company decided not to use the ‘Ti’ moniker for its top-of-the-range gaming offering and called it the GeForce RTX 3090 instead. Secondly, the highest-end GeForce now carries a record $1,499 MSRP. Thirdly, Nvidia decided not to enable NVLink-powered multi-GPU support on anything but the top-of-the-line board. Apparently, there are more changes incoming.

Instead of launching a mid-range ‘Ampere’ offering several months after the GeForce RTX 3090/3080/3070 debuts in mid-September and October, the company intends to release its GeForce RTX 3060 Ti as early as the second half of October, according to an unconfirmed report from VideoCardz.

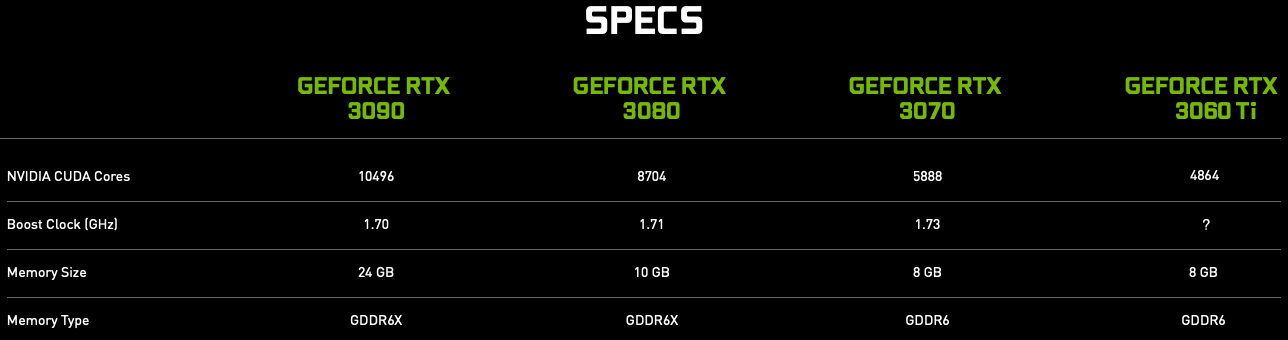

Nvidia’s GeForce RTX 3060 Ti is said to be based on the GA104-200 GPU featuring 4864 CUDA cores, 152 Tensor cores, and 38 RT cores. The card will reportedly retain an 8GB 256-bit GDDR6 memory subsystem running at 14 Gbps and offering 448 GB/s of bandwidth. The RTX 3060 Ti’s actual clocks are yet to be determined, but if it runs at the same frequencies as the RTX 3070, it will offer around 82% of the latter’s performance. If its clocks are higher, the gap between the RTX 3060 Ti and the RTX 3070 may shrink further.
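The rumored figures are internally consistent, which a little arithmetic confirms. The Python sketch below assumes Ampere’s GA104 layout of 128 CUDA cores, four Tensor cores and one RT core per SM, and the RTX 3070’s 5888 CUDA cores; none of these numbers come from the report itself, and the core-count ratio is only a clock-for-clock estimate that ignores memory bandwidth and power limits.

```python
# Back-of-the-envelope check of the rumored RTX 3060 Ti specifications.
# Assumptions (not from the rumor): GA104 has 128 CUDA cores, 4 Tensor cores
# and 1 RT core per SM; the RTX 3070 has 5888 CUDA cores.

CUDA_PER_SM = 128
TENSOR_PER_SM = 4
RT_PER_SM = 1


def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


def unit_counts(cuda_cores: int) -> dict:
    """Derive SM, Tensor core and RT core counts from the CUDA core count."""
    sms = cuda_cores // CUDA_PER_SM
    return {"sms": sms, "tensor": sms * TENSOR_PER_SM, "rt": sms * RT_PER_SM}


def clock_for_clock_ratio(cores_a: int, cores_b: int) -> float:
    """Relative shader throughput at equal clocks (ignores memory and power)."""
    return cores_a / cores_b


if __name__ == "__main__":
    print(memory_bandwidth_gbs(256, 14))                      # 448.0
    print(unit_counts(4864))                                  # {'sms': 38, 'tensor': 152, 'rt': 38}
    print(round(clock_for_clock_ratio(4864, 5888) * 100, 1))  # 82.6
```

The 82.6% result matches the article’s “around 82%” only under the equal-clock assumption; as the article notes, higher clocks on the RTX 3060 Ti would narrow the gap.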

Nvidia has yet to disclose the actual launch date of its GeForce RTX 3070, so speculating on the launch date of the GeForce RTX 3060 Ti is premature. Meanwhile, since AMD is set to unveil its RDNA 2-powered GPUs on October 28, it seems likely that Nvidia will try to steal AMD’s thunder and launch its new product around the same time.

As for price, the GeForce RTX 3060 Ti will clearly cost less than $499 since it sits below the RTX 3070, but there are no concrete details right now. It is also unclear whether the RTX 3060 Ti will be joined by a GeForce RTX 3060 any time soon, or will remain the lowest-priced ‘Ampere’ card for a while.

Nvidia traditionally does not comment on rumors and speculation, so it is impossible to get a confirmation or denial from the GPU developer itself. Moreover, the news comes from only one source and could not be verified.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers, and from modern process technologies and the latest fab tools to high-tech industry trends.

-

fevanson 8GB VRAM? My RTX 2060 Super, OC'd to base RTX 2070+ performance, already has 8GB of VRAM, so I guess I will have to hold out until Nvidia Hopper drops in 2021-2022. I've never upgraded my video card without an increase in VRAM, as VRAM bottlenecks have always been what made me buy a new card the whole time I've been building PCs, going back to the Radeon 9100 128MB AGP and the Nvidia 7300 GT 512MB AGP. -

TechyInAZ, replying to fevanson:

Things are different this time around, though. Game engines don't consume as much VRAM as we thought; some of the VRAM is used for non-essential stuff, like a buffer zone, and that data doesn't actually need to be in VRAM.

Plus, Nvidia keeps improving its memory compression, so 8GB of VRAM on Ampere should be able to hold at least a few GB more than on Turing, and especially Pascal. -

Chung Leong I don't think it's going to be the 3060 Ti, as Nvidia seems to be moving towards using the "Ti" moniker for only one stand-out card in a series. Titanium is an element with fixed properties, so using the name to denote relative position on the performance chart is kinda stupid. "Super" is much clearer. There will be either a 3080 Ti or a 3090 Ti and that's it. -

Au_equus 1st paragraph:

"The product might offer ~80% of GeForce RTX 2070’s performance"

4th paragraph:

"Actual frequencies of the RTX 3060 Ti chip are yet to be determined, but if it runs at the same clocks as the RTX 3070, it will offer around 82% of the latter’s performance."

I would assume the latter is correct given that the former would be a very steep decline in performance metrics. -

Chung Leong, replying to Au_equus:

The 3060 will have more thermal legroom. Boost clock will likely be a little higher. -

mac_angel At first I was excited by the "Ti". It more or less confirms they will be releasing "Ti" versions of all their cards (the 3090 was meant to replace the Titan, not the 1080 Ti; not sure why that was in there when it's already been covered in so many other articles on here).

But then reading that they are putting NVLink on the 3090 alone, and on none of the others, was heartbreaking.


And I can already imagine the comments from people who believe SLI is dead, not needed, etc., etc. That's fine if you believe that; it doesn't mean you get to dictate what everyone else would like. It is rare for a single 1080 Ti or 2080 Ti to push a 4K display at max settings and stay consistently above 60fps. Now we are also talking about higher-refresh-rate displays, VRR or G-Sync, and even higher resolutions, all of which are becoming more and more popular. Can a single 3080 play Cyberpunk 2077 with ray tracing and everything else maxed on a 4K display and stay above 60fps? We'll have to wait and see, but I'm willing to bet the answer is no. And what about every other game that comes out in the next 2 years? -

King_V, replying to mac_angel:

Game developers had to do extra work to support it, and they don't do that anymore. Are you going to blame them for not throwing money and time at adding SLI or Crossfire support for the tiny percentage of potential customers who will pay for an SLI setup? Why should they take the financial hit?

DX12, and I believe Vulkan as well, handles it through the API; if I'm not mistaken, they can even share the load among cards of different performance/capability.

There's no need for Crossfire/SLI anymore in the way that it used to be done. -
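The idea of sharing load across unequal cards is easiest to picture with split-frame rendering. Under DX12’s explicit multi-adapter model, the application rather than the driver decides how to divide the work, and one simple policy is to give each card a slice of the frame proportional to its speed. The Python sketch below illustrates only that policy: `split_rows` is a hypothetical helper, the relative throughputs are assumed inputs rather than measurements, and a real engine would also have to synchronize the GPUs and copy results between them.

```python
# Hypothetical sketch: dividing a frame's rows between GPUs in proportion to
# their relative throughput. Throughput values are illustrative inputs only.


def split_rows(total_rows: int, throughputs: list[float]) -> list[int]:
    """Return how many rows each GPU renders, proportional to its throughput."""
    total = sum(throughputs)
    # Round each share down so no GPU is over-assigned.
    shares = [int(total_rows * t / total) for t in throughputs]
    # Hand any rows lost to rounding to the fastest GPU (first one on ties).
    fastest = max(range(len(throughputs)), key=lambda i: throughputs[i])
    shares[fastest] += total_rows - sum(shares)
    return shares


if __name__ == "__main__":
    # A 2160-row (4K) frame, with one card assumed twice as fast as the other.
    print(split_rows(2160, [2.0, 1.0]))  # [1440, 720]
```

A static split like this is the simplest option; an engine could instead adjust the shares each frame based on how long each GPU actually took.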

mac_angel, replying to King_V:

For SLI support from game developers, yes and no. First, it depends on the engine used. Many games run on a specific engine that either has SLI support built in or doesn't. And even when it doesn't, 99% of the time you can still turn SLI on through Nvidia Inspector. I honestly can't remember the last time I was NOT able to get SLI to work. Even with Unigine's Heaven and Superposition, where SLI isn't natively supported, you can find SLI profiles online to get it to work. And yes, it does work, and it does give a large speed increase. I can't remember the exact number, but it was something like 80%+, and that was on my older system, which had a Core i7-6850K. Newer CPUs handle the APIs much better now.

As for DX12, it's not nearly as great as it was meant to be. It brings in new eye candy, but I can't think of any games off the top of my head that scale well across multiple GPUs in non-SLI configurations.

Personally, I think Nvidia is a little foolish with some of their ideas, and it's only a matter of time before their hubris really bites them in the ass. They screwed themselves over with the TSMC deal, thinking they were high and mighty and trying to strong-arm TSMC. And they are pushing proprietary game tech (proprietary stuff like that just hurts the consumer in the long run), yet they abandoned the other proprietary tech they have that could easily have worked hand in hand with it AND sold more GPUs overall.

They have PhysX, but aren't doing much with it anymore. Meanwhile, HairWorks, Turf Effects, WaveWorks, FleX, Flow, PhysX, etc. could all easily be programmed to offload onto a dedicated PhysX card. These effects are already written into the games; it isn't that hard to add a couple of lines of code to direct them to another card's CUDA cores. Maybe not very many people can afford an SLI setup, but it's easy to see the big difference in benchmarks when you turn HairWorks on and off. If a $120 card for PhysX (or an older card repurposed when you upgrade) let you turn on all of these extra effects, I think a lot of people would buy one, especially with the right marketing. Not to mention all the mid-level GPUs Nvidia had returned after the crypto bubble popped: they could easily have spun that around, pushed PhysX, slapped a sticker on the box and put up some marketing hype. -

King_V, replying to mac_angel:

This sounds completely wrong, and that percentage of speed increase sounds far more like fantasy than reality.

Care to cite sources on this? -
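An “80%+” scaling figure can only be checked against single- and dual-GPU frame rates measured in the same benchmark, at the same settings, on the same system. The Python sketch below shows that arithmetic; the function names are illustrative, and the 50 fps and 90 fps values in the example are hypothetical placeholders, not results from any real SLI setup.

```python
# Minimal sketch: multi-GPU scaling from two measured frame rates.
# The example inputs are hypothetical placeholders, not benchmark results.


def scaling_percent(fps_single: float, fps_multi: float) -> float:
    """Extra performance from adding GPUs, as a percentage of single-GPU fps."""
    if fps_single <= 0:
        raise ValueError("single-GPU fps must be positive")
    return (fps_multi / fps_single - 1.0) * 100.0


def efficiency_percent(fps_single: float, fps_multi: float, gpus: int = 2) -> float:
    """Share of ideal linear scaling achieved (100% means gpus x single-GPU fps)."""
    if fps_single <= 0 or gpus < 1:
        raise ValueError("fps must be positive and gpus at least 1")
    return fps_multi / (fps_single * gpus) * 100.0


if __name__ == "__main__":
    print(round(scaling_percent(50.0, 90.0), 1))     # 80.0
    print(round(efficiency_percent(50.0, 90.0), 1))  # 90.0
```

Average fps alone can hide multi-GPU frame-pacing problems, so frame-time data from the same runs would make such a comparison more convincing.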

nofanneeded, quoting the article: "The product might offer ~80% of GeForce RTX 2070’s performance,"

Correct this please: the RTX 3060 Ti should be 80% of the RTX 3070...

Again, errors from Tom's Hardware and no editor-in-chief to check for them... so far, 4 errors in 4 articles in just one month.