When Will AMD Launch the RX 7700 and RX 7800?

Navi 32 is MIA and the previous gen holdovers are getting stale.

AMD published a blog post yesterday highlighting the importance of having sufficient VRAM for 1440p gaming. The short summary is that you need 12GB or 16GB to get the most out of 1440p gaming. It's an interesting take, because we still don't have AMD RDNA 3 architecture GPUs with 12GB or 16GB of VRAM. We're specifically talking about the Navi 32-based parts, the RX 7800 (XT) and RX 7700 (XT), which at this point are long overdue, leaving the previous generation parts to vie for a place among the best graphics cards.

There are reasons for AMD to not launch another series of GPUs yet, of course. Like the absolute bloodbath that's taking place in sales of dedicated graphics cards right now — the last quarter saw the lowest number of units sold in decades. Ouch.

Previous generation AMD RX 6000-series GPUs are still sitting in warehouses and on retail shelves, even at greatly discounted prices. It's all reminiscent of the RX 500-series parts sitting around at rock bottom prices back in 2018–2019, and in fact that surplus wasn't resolved until the 2020 GPU shortages finally wiped out stock of every GPU.

At the same time, AMD would have ordered wafers and planned for the launch of new Navi 32 GPUs well over a year ago. There are probably chips sitting around, just waiting for a perceived good time to launch. AMD can try to play the waiting game, but, at some point, it's going to need to push out the new generation Navi 32 GPUs.

Currently, AMD has the RX 7900 XTX and RX 7900 XT, originally priced at $999 and $899 but now selling for around $950 and $780. The next step down for the RX 7000-series is a massive plunge all the way down to the RX 7600 and its $269 launch price. Everything in between and even below the RX 7600 is still being serviced by previous generation parts — one of the biggest complaints about the RX 7600 was that it only matched the existing RX 6650 XT in performance while costing $40 extra.

AMD surely knew this was a problem, but what else could it do? Price the RX 7600 any lower and sales of RX 6650 XT and below would either falter, or those GPUs would need to drop even lower in pricing. Then potential buyers would be right back to the same dilemma: Pay more for the latest generation architecture and features with often similar performance, or pay less for a previous generation card?

If you really need/want AV1 support, that's the biggest draw right now for AMD's RX 7600. Except, our video encoding testing shows that AMD's quality still trails Nvidia and Intel. Maybe the additional compute offered by RDNA 3 will start to be a factor in more games and applications going forward, but so far the GPUs seem to be landing well short of their theoretical potential. But let's get back to Navi 32.

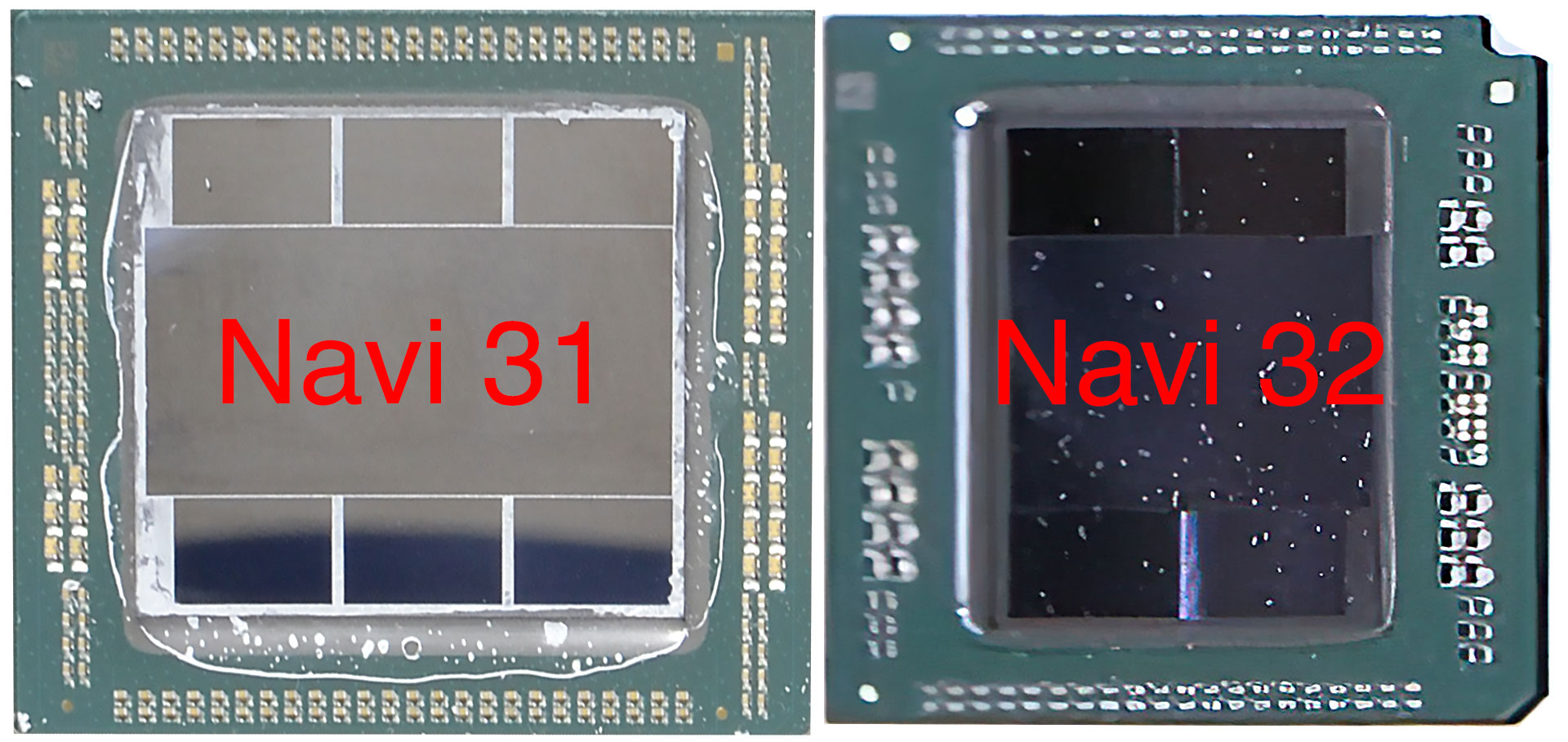

What we've heard about Navi 32 and the upcoming RX 7800- and 7700-class GPUs is pretty straightforward. They'll use chiplets, just like Navi 31 and the RX 7900-class GPUs. The MCDs (Memory Controller Dies) will be the exact same as on the 7900 XTX/XT, but the GCD (Graphics Compute Die) will be different. Instead of up to 96 Compute Units (CUs) and support for six MCDs, Navi 32's GCD will top out at 60 to 64 CUs and four MCDs. It will also be around 200 mm^2 in size, compared to the 300 mm^2 Navi 31 GCD.

AMD will then launch at least two variants. The top solution will be the complete GCD with four MCDs, giving the RX 7800 (or RX 7800 XT) support for 16GB of GDDR6 memory with a 64MB Infinity Cache. A trimmed down GCD will drop one of the MCDs and come with 12GB of GDDR6 and 48MB of Infinity Cache as an RX 7700 (possibly XT).

One interesting tidbit is that RDNA 3 likely won't ever offer a configuration with 10GB of memory and a 160-bit interface. That's what the vanilla RX 6700 10GB uses, and it would require one of the MCDs to only connect to a single GDDR6 chip. Perhaps it's not impossible, but the RX 6700 always felt like a bit of an afterthought, and in a crowded GPU market where sales are faltering, having fewer SKUs would be better than pushing out in between products — save those for a brighter future when PC and component sales start picking back up.
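
The memory configurations described above follow from simple per-MCD arithmetic. Here's a minimal sketch, assuming each MCD provides a 64-bit interface and 16MB of Infinity Cache (consistent with the six-MCD RX 7900 XTX at 384-bit, 96MB, and 24GB) and drives two 32-bit, 2GB GDDR6 chips. The function and constant names are purely illustrative.

```python
# Per-MCD figures are assumptions derived from Navi 31
# (six MCDs -> 384-bit bus, 96MB Infinity Cache, 24GB GDDR6).
MCD_BUS_BITS = 64      # memory interface width per MCD
MCD_CACHE_MB = 16      # Infinity Cache per MCD
CHIP_BUS_BITS = 32     # interface width of one GDDR6 chip
CHIP_CAPACITY_GB = 2   # capacity of one GDDR6 chip


def memory_config(mcd_count):
    """Bus width, VRAM, and Infinity Cache for a GPU with fully populated MCDs."""
    chips = mcd_count * MCD_BUS_BITS // CHIP_BUS_BITS
    return {
        "bus_bits": mcd_count * MCD_BUS_BITS,
        "vram_gb": chips * CHIP_CAPACITY_GB,
        "cache_mb": mcd_count * MCD_CACHE_MB,
    }


def mcds_needed(bus_bits):
    """Return (fully populated MCDs, leftover GDDR6 chips) for a bus width."""
    full, leftover_bits = divmod(bus_bits, MCD_BUS_BITS)
    return full, leftover_bits // CHIP_BUS_BITS


for name, mcds in [("RX 7800 (rumored)", 4), ("RX 7700 (rumored)", 3)]:
    print(name, memory_config(mcds))

# A 160-bit bus works out to two full MCDs plus one leftover chip,
# i.e. a third MCD that connects to only a single GDDR6 chip.
print(mcds_needed(160))
```

Under these assumptions, four MCDs give 256-bit/16GB/64MB and three give 192-bit/12GB/48MB, while a 160-bit, 10GB card would leave one MCD half populated.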

When will AMD finally launch these Navi 32-based GPUs? There are hints it could happen in the July–August timeframe, just in time for the back-to-school season. AMD and its partners would also like to clear out as much of the RX 6800/6700/6600-series inventory as possible in the meantime. Articles highlighting the importance of 12GB VRAM for 1440p gaming may help in that endeavor, but for cards that are now past the two-year mark, it's becoming an increasingly tough sell. Maybe we'll get some great Prime Day deals on the RX 6800/6700 series to help move things along.

The current prices on RX 6000-series GPUs are good, but they've gone back up over the past month or so (since the launch of the RX 7600, RTX 4070 and RTX 4060 Ti). That said, Father's Day deals are also a thing, and right now the RX 6950 XT starts at $579, the RX 6800 XT starts at $469, and the RX 6800 starts at $459. (The RX 6800 XT is the best value out of those, if you're wondering.)
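
One way to sanity-check that value claim is to divide average 1440p performance by price. The sketch below pairs the three deal prices above with the 1440p FPS figures from the efficiency table later in this article. The "FPS per $100" metric and the helper name are just an illustrative choice, not necessarily how the value call was made.

```python
# (price in USD from the deals above, average 1440p FPS from the efficiency table)
cards = {
    "RX 6950 XT": (579, 74.5),
    "RX 6800 XT": (469, 65.4),
    "RX 6800": (459, 56.3),
}


def fps_per_100_dollars(price, fps):
    """Average 1440p frames per second bought by each $100 spent."""
    return fps / price * 100


# Rank the cards from best to worst value.
ranked = sorted(cards, key=lambda name: fps_per_100_dollars(*cards[name]), reverse=True)
for name in ranked:
    price, fps = cards[name]
    print(f"{name}: {fps_per_100_dollars(price, fps):.1f} FPS per $100")
```

By this measure the RX 6800 XT comes out on top, followed by the RX 6950 XT and then the RX 6800.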

Meanwhile, the RX 6750 XT starts at $359, the fractionally slower RX 6700 XT goes for $309, and the RX 6700 10GB costs $279. If you want an AMD card with 10GB or more VRAM, those are your only options outside of the significantly more expensive (and faster) $779 RX 7900 XT and $959 RX 7900 XTX.

You might be wondering: If the RX 6000-series parts already generally fill in the mainstream to high-end price bracket, why does AMD even need RDNA 3 alternatives? The answer is efficiency as well as a desire for new product names. OEMs and system integrators love having something new to sell, rather than a two-year-old RX 6800 XT as an example. But let's quickly talk efficiency.

Nvidia paid the TSMC piper and has delivered exceptional efficiency from its RTX 40-series GPUs and the 4nm TSMC 4N node. AMD's RDNA 3 GPUs are on a newer process node from TSMC as well, at least for the GCDs: those use TSMC N5, while the MCDs stick with N6 for cost-saving reasons. Here's a look at the current and previous generation GPUs from AMD and Nvidia, sorted by performance per watt at 1440p:

| Graphics Card | FPS/W | 1440p FPS | Power |
|---|---|---|---|
| GeForce RTX 4080 | 0.412 | 108.3 | 263W |
| GeForce RTX 4090 | 0.397 | 133.2 | 335W |
| GeForce RTX 4070 | 0.386 | 73.2 | 190W |
| GeForce RTX 4060 Ti | 0.382 | 54.9 | 144W |
| GeForce RTX 4070 Ti | 0.367 | 90.5 | 246W |
| Radeon RX 7900 XT | 0.281 | 86.2 | 307W |
| Radeon RX 7900 XTX | 0.278 | 96.6 | 347W |
| GeForce RTX 3070 | 0.255 | 55.5 | 217W |
| Radeon RX 6800 | 0.247 | 56.3 | 228W |
| GeForce RTX 3060 | 0.230 | 37.2 | 162W |
| Radeon RX 6950 XT | 0.230 | 74.5 | 324W |
| GeForce RTX 3080 | 0.228 | 73.3 | 321W |
| Radeon RX 7600 | 0.227 | 34.3 | 151W |
| Radeon RX 6800 XT | 0.222 | 65.4 | 294W |
| Radeon RX 6600 XT | 0.212 | 32.2 | 152W |
| GeForce RTX 3090 Ti | 0.208 | 90.6 | 435W |
| Radeon RX 6700 XT | 0.207 | 44.3 | 214W |
| Radeon RX 6600 | 0.199 | 26.8 | 134W |
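
The FPS/W column is simply average 1440p FPS divided by average power draw. As a minimal sketch, the snippet below recomputes it for a handful of rows copied from the table. Because the published FPS and power figures are already rounded, recomputed values for some other rows can differ from the table in the third decimal place.

```python
# (1440p FPS, power in watts) copied from a few rows of the table above
results = {
    "GeForce RTX 4080": (108.3, 263),
    "Radeon RX 7900 XT": (86.2, 307),
    "Radeon RX 6800": (56.3, 228),
    "Radeon RX 7600": (34.3, 151),
    "Radeon RX 6800 XT": (65.4, 294),
}


def fps_per_watt(fps, watts):
    """Performance per watt: frames per second for each watt drawn."""
    return fps / watts


efficiency = {name: fps_per_watt(*vals) for name, vals in results.items()}

# Print from most to least efficient, matching the table's sort order.
for name, value in sorted(efficiency.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {value:.3f} FPS/W")
```

For these rows, the RTX 4080 works out to roughly 47% more frames per watt than the RX 7900 XT.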

Nvidia takes the top five spots in efficiency with its five new Ada Lovelace RTX 40-series GPUs. It's a safe bet that the RTX 4060 will join its siblings near the end of this month, if rumors about its release date are correct. Meanwhile, AMD's RX 7900 XTX/XT are a clear step ahead of the previous generation RTX 30-series and RX 6000-series parts, with the RX 7600 in the middle of that mix as well.

In short, Nvidia already has a big lead in market share, it now has a significantly more efficient architecture, and it also has extra features like DLSS, Frame Generation, and AI computational power at its disposal. AMD needs to close the gap, and RDNA 3 mainstream to high-end GPUs would at least be more competitive than the previous-generation RDNA 2 offerings.

Navi32 die shot (?!) pic.twitter.com/rVGYGeIpUE (June 13, 2023)

And there's good news, in that Navi 32 has apparently been pictured. Twitter user Hoang Anh Phu posted the above image today, with some additional AI upscaled variants. Some wondered if that was AMD Instinct MI300, but we did some Photoshopping and scaling to match the size of the MCDs to AMD's existing Navi 31 (as much as possible). The results are pretty conclusive in that the Navi 32 GCD looks to be around 210 mm^2, compared to 300 mm^2 on the Navi 31 GCD — that's in line with previous speculation of an approximately 200 mm^2 die size.

Obviously, the scaling and skewing of the Navi 32 photo isn't as nice as the high resolution photograph we took of a Navi 31 die. The MCD sizes in the above photo aren't exactly the same, so we could easily be off on the Navi 32 GCD size by 5% or so. But the rest of the photo does match what we'd expect from the future Navi 32 GPU.
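
To put that margin of error in context, here's a small sketch that treats the "5% or so" as a symmetric plus-or-minus 5% band on the estimated GCD area. That interpretation is an assumption, and the variable names are illustrative.

```python
NAVI31_GCD_MM2 = 300.0      # Navi 31 GCD size quoted above
NAVI32_GCD_EST_MM2 = 210.0  # estimate from the scaled photo
REL_ERROR = 0.05            # "5% or so", assumed symmetric


def area_bounds(estimate_mm2, rel_error):
    """Lower and upper bounds on the area for a given relative error."""
    return estimate_mm2 * (1 - rel_error), estimate_mm2 * (1 + rel_error)


low, high = area_bounds(NAVI32_GCD_EST_MM2, REL_ERROR)
ratio = NAVI32_GCD_EST_MM2 / NAVI31_GCD_MM2

print(f"Navi 32 GCD estimate: {low:.1f} to {high:.1f} mm^2")
print(f"Relative to the Navi 31 GCD: {ratio:.0%}")
print("Rumored 200 mm^2 within bounds:", low <= 200.0 <= high)
```

Under that assumption, the earlier 200 mm^2 speculation sits just inside the estimated range, and the Navi 32 GCD would be about 70% the size of the Navi 31 GCD.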

Given that photos of Navi 32 are now leaking, it's a safe bet that we'll see GPUs based on the chip sooner rather than later. We don't have exact details on model names or the release date, but we've previously heard AMD CEO Lisa Su say that "mainstream" RDNA 3 chips would be here before July. Was the RX 7600 the only GPU she was referring to? Perhaps, but a July or August launch of 7700-class and 7800-class parts would still make a lot of sense, timing-wise.

Will the new GPUs be substantially better than the existing RX 6000-series Navi 21/22 parts? Based on what we've seen with other RDNA 3 GPUs, we'd anticipate a 60 CU RX 7800 part to land at roughly the same performance as the existing RX 6800 XT, while a 48 CU RX 7700 variant would end up closer to the RX 6800 than the RX 6750 XT. Even if the new parts are slightly faster than that projection, they'll need equally attractive pricing if they're going to attract buyers.

We should find out more within the next month or two, if all goes as expected.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


Alvar "Miles" Udell

Have to disagree with that, I want to see the RX 7800 16GB at $500-$599, and judging by the cliff GPU sales fell off of, I'm not the only one tired of these prices.

-Fran-

I'll offer another theory: they could be stocking up the mid-range chips for FirePro cards with a lot of VRAM in order to "promote" AMD with the AI crowd. Seeing how their latest drivers specifically called out AI workloads improvements in SDiff and such, I'd imagine it's not a far fetched theory. This also includes the big strides with ROCm.

They would still sell those for more money and, probably, sell everything to the Prosumer and Professional markets before their CDNA cards are ready for workstations (IIRC).

Do I like this? Generally speaking, not really. I still have my Vega64 plowing through games just fine and the 6900XT for VR, so I'm not really bothered by current and, possibly, next gen, but I do mind "the trends" I guess.

Anyway, we'll see how this one pans out rather soon!

Regards.

abufrejoval

I'd have said "CUDA pays the rent, gaming is a welcome windfall" until very recently.

Now I'd have to slightly modify that to PyTorch instead of CUDA.

But it doesn't actually change the results, because PyTorch on AMD isn't yet where it needs to be to be attractive.

And I really need 2-4x the VRAM on team red for the equivalent Nvidia product to switch.

But market segmentation in AI is much more profound on AMD than it is currently with team green so as ready as I am to change horses, there is nothing attractive around.

MI300 sounds nice but there is no affordable way to fit that into one of my workstations.

Reply

"The MCD sizes aren't exactly the same either, so we could easily be off on the Navi 32 GCD size by 5% or so"

IMO, with the Navi 32 GCD in the center measuring around 200 mm^2 and each of the four MCDs measuring around 37.5 mm^2, the whole package should come to around 350 mm^2.

For comparison, the Navi 31 GCD measures 304.35 mm^2, and the whole chip with MCDs included has an area of 529.34 mm^2. So we are looking at a roughly 34% smaller die size for the Navi 32 GPU.

Also, Navi 32 should feature up to 60 Compute Units, or 3840 Stream Processors, on a 256-bit memory bus interface.

jackt

I knew that! They released the mid level cards so late that now the next gen is close! And weeks before the ram price drop!

MooseMuffin

My baseless guess is the additional complexity involved in the chiplet design means they can't hit the prices they need to hit to be competitive.

salgado18

I get your point, Jarred. But RDNA3 has the same efficiency as RDNA2 (look at 7600 vs 6600 XT), with very few new features, same ray tracing. RTX 4000 improved on every feature, brought new ones, and even almost doubled efficiency. To me, Nvidia won this round. If the Radeon cards are the same, we might as well buy what we have today (be it 6- or 7-series), or wait for the next gen. The mid-range Radeons just don't matter much anymore.

JarredWaltonGPU

> Alvar Miles Udell said: Have to disagree with that, I want to see the RX 7800 16GB at $500-$599, and judging by the cliff GPU sales fell off of, I'm not the only one tired of these prices.

Disagree with what? Sounds like you just agreed with wanting to see “RDNA 3 upgrades in the $400–$600 range.”

Which we probably will, given 7900 XT is selling at $780. Knock off 4GB VRAM and use a smaller GCD and 7800 for $600 (maybe less) is certainly viable. 7700 with 12GB will hopefully land at $400.

sherhi

> -Fran- said: I'll offer another theory: they could be stocking up the mid-range chips for FirePro cards with a lot of VRAM in order to "promote" AMD with the AI crowd. Seeing how their latest drivers specifically called out AI workloads improvements in SDiff and such, I'd imagine it's not a far fetched theory. This also includes the big strides with ROCm.
>
> They would still sell those for more money and, probably, sell everything to the Prosumer and Professional markets before their CDNA cards are ready for workstations (IIRC).
>
> Do I like this? Generally speaking, not really. I still have my Vega64 plowing through games just fine and the 6900XT for VR, so I'm not really bothered by current and, possibly, next gen, but I do mind "the trends" I guess.
>
> Anyway, we'll see how this one pans out rather soon!
>
> Regards.

Could be the case, but how many times did we expect them to make some serious leap ahead of competition only to fail miserably? Ryzen 7000 was praised to oblivion, with headlines and clickbait titles on YouTube screaming "Intel is in trouble", same thing happened to 7000 GPU lineup "Nvidia is in trouble"... Maybe they are waiting with full stock and based on final performance and market reaction they will go one way or the other and most likely, sadly, fail no matter which way they go (if you don't know where you are sailing no wind is favourable).

> jackt said: I knew that! They released the mid level cards so late that now the next gen is close ! And weeks before the ram price drop!

In my country those cards offered interesting value, like 6700 was often cheaper than 6600XT or even 6650XT, which was sometimes cheaper than 6600XT... but does anyone know why they are doing a midgen refresh while still producing regular models? Or has there been a mountain of regular models in stock up to this day? If not, how is it possible to have a refreshed model (for example 6650XT from May 2022) still mixed up with a regular model (6600XT from August 2021) even 1 year after its release?

> MooseMuffin said: My baseless guess is the additional complexity involved in the chiplet design means they can't hit the prices they need to hit to be competitive.

Maybe it has nothing to do with complexity but rather finding out their architecture is not really competitive in this generation, combined with plenty of old cards available almost everywhere and Nvidia doing Nvidia things, they just play a waiting game, keeping old cards higher and earning what's left to earn. Why would they push new cards out, cannibalise their own products and god forbid maybe start a price war in duopoly? Nobody (out of those 2 relevant players) wants that.

> JarredWaltonGPU said: Disagree with what? Sounds like you just agreed with wanting to see “RDNA 3 upgrades in the $400–$600 range.”
>
> Which we probably will, given 7900 XT is selling at $780. Knock off 4GB VRAM and use a smaller GCD and 7800 for $600 (maybe less) is certainly viable. 7700 with 12GB will hopefully land at $400.

I'll offer a different perspective maybe 🤔 Catcher in the Rye was replaced by Harry Potter when it comes to most common book(s) children read (ask any literature teacher) and finally a somewhat solid Harry Potter game is released and whoa oh my oh my, it eats even more than 12 gigs of vram, sometimes even on 1080p. Do you want a 450-500€ GPU, replace the old one, maybe it fits, maybe it doesn't, maybe you have time to research all those cards I don't know... or do you prefer a 500€ console to plug and play with no issues whatsoever? This Nvidia's push towards average GPU price equal to console price is a trap. 600usd (720€) for 1440p 16gb GPU? Is that supposed to be a good deal or something? :D