New Zen 5 128-core EPYC CPU wields 512MB of L3 cache

The highest capacity to date for non-3D V-Cache parts.

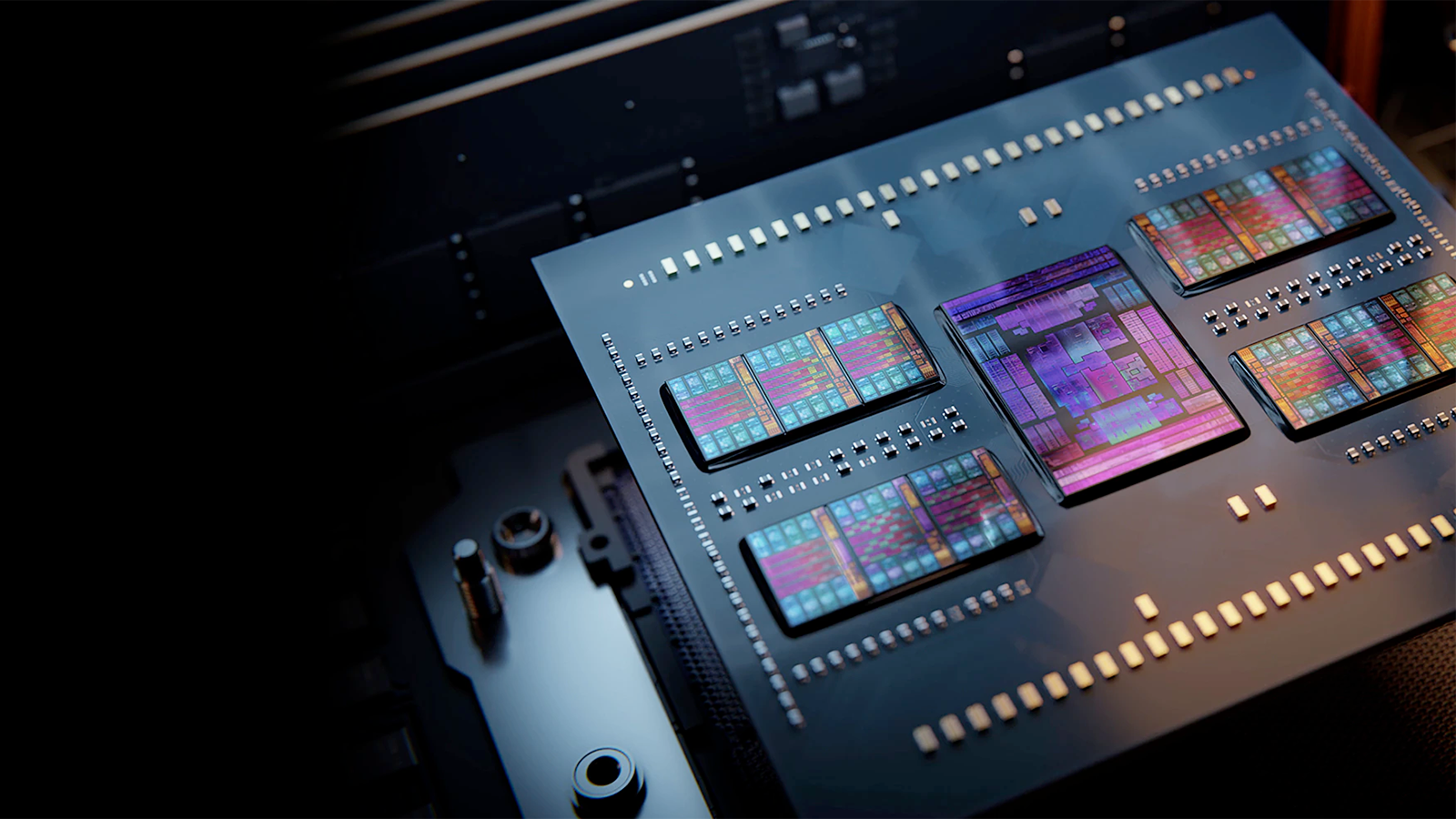

AMD is gearing up to launch one of the most cache-dense EPYC processors to date. HXL has discovered a new EPYC 9005 series Zen 5 part that boasts 512MB of L3 cache without using AMD's 3D V-Cache technology.

The EPYC 9755 sports 128 cores, 256 threads, and a peak turbo clock of 4.1GHz. The most interesting detail is that the chip carries half a gigabyte of L3 cache yet lacks the "X" suffix that AMD uses on EPYC model names to denote 3D V-Cache, much like the X3D branding on Ryzen 7000 and Ryzen 5000 models.

AMD EPYC 9755, 128C/256T, 2.7GHz-4.1GHz. Source: QQ (pic.twitter.com/TbKKtJPZcn), July 23, 2024

If these new specs are not fabricated, they suggest that AMD will increase L3 cache sizes for its Zen 5-based EPYC processors. Compared to its predecessor, the EPYC 9755 has 33% more L3 cache than the 96-core EPYC 9654, which has 384MB of L3 cache spread across 12 CCDs. Compared to 3D V-Cache-enabled parts, the EPYC 9755 trails the 96-core EPYC 9684X by 640MB; that chip reaches 1,152MB thanks to an additional slab of L3 cache installed above its 12 CCDs.

| CPU | Cores / Threads | L3 Cache |
|---|---|---|
| EPYC 9755 (Turin) | 128 / 256 (Zen 5) | 512MB |
| EPYC 9754 (Bergamo) | 128 / 256 (Zen 4c) | 256MB |
| EPYC 9654 (Genoa) | 96 / 192 (Zen 4) | 384MB |
| EPYC 9384X (Genoa-X) | 32 / 64 (Zen 4) | 768MB |
| EPYC 9684X (Genoa-X) | 96 / 192 (Zen 4) | 1,152MB (1.2GB) |
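To make the table easier to compare, the L3 capacity can be divided by the core count. The following is a minimal sketch that uses only the figures listed above; the variable and function names are illustrative.

```python
# (model, cores, total L3 in MB), copied from the table above.
parts = [
    ("EPYC 9755 (Turin)", 128, 512),
    ("EPYC 9754 (Bergamo)", 128, 256),
    ("EPYC 9654 (Genoa)", 96, 384),
    ("EPYC 9384X (Genoa-X)", 32, 768),
    ("EPYC 9684X (Genoa-X)", 96, 1152),
]


def l3_per_core(cores, l3_mb):
    """Return the L3 capacity per core in MB."""
    return l3_mb / cores


per_core = {name: l3_per_core(cores, l3_mb) for name, cores, l3_mb in parts}

for name, value in per_core.items():
    print(f"{name}: {value:g}MB of L3 per core")
```

On these numbers, the rumored EPYC 9755 and the EPYC 9654 land on the same per-core figure, while the Zen 4c and 3D V-Cache parts sit below and above it, respectively.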

AMD is also purportedly increasing core counts for Zen 5 EPYC. With 128 cores and 256 threads, the EPYC 9755 matches the core count of AMD's Bergamo flagship, the EPYC 9754 with its 128 Zen 4c cores, and has 33% more cores than its more direct predecessor, the 96-core EPYC 9654.

This core count has several implications. It suggests that AMD is finally upgrading EPYC from 8-core CCDs to 16-core CCDs for its high-performance Zen cores. Bergamo had the same 16-core CCD configuration, but in the Zen 4 generation AMD could only manage that by using its more compact Zen 4c cores.

The higher core count per CCD means AMD can cram more cores into EPYC with fewer CCDs (or run more cores with the same number of CCDs as Genoa). Assuming a 16-core CCD configuration, the EPYC 9755 will only come with 8 CCDs, four fewer than the 96-core Genoa flagships.

Based on these early specifications, Zen 5 EPYC looks very impressive. Not only is AMD boosting core counts, but it also appears to be substantially increasing L3 cache capacity per CCD. With just 8 CCDs, AMD would be cramming 64MB of cache into each individual CCD, twice the 32MB of L3 found on each CCD in AMD's Ryzen 9000 and Ryzen 7000 processors.
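The CCD math behind that estimate is short enough to check by hand. Here is a minimal sketch, assuming the 16-core CCD layout the article speculates about (it is not confirmed by AMD); the constant names are illustrative.

```python
CORES = 128               # rumored EPYC 9755 core count
TOTAL_L3_MB = 512         # rumored total L3 capacity
CORES_PER_CCD = 16        # assumption from the article, not confirmed
RYZEN_L3_PER_CCD_MB = 32  # L3 per CCD on Ryzen 7000 / Ryzen 9000

# Number of CCDs needed to reach the core count under this assumption.
ccd_count = CORES // CORES_PER_CCD

# L3 capacity each CCD would have to carry.
l3_per_ccd_mb = TOTAL_L3_MB / ccd_count

# How that compares with a desktop Ryzen CCD.
ratio_vs_ryzen = l3_per_ccd_mb / RYZEN_L3_PER_CCD_MB

print(f"{ccd_count} CCDs, {l3_per_ccd_mb:g}MB of L3 each "
      f"({ratio_vs_ryzen:g}x a Ryzen CCD)")
```

The result depends entirely on `CORES_PER_CCD`; with a different CCD size, the per-CCD figure changes accordingly.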


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


usertests

Didn't they just say that going from L3 to L3 on different chiplets takes about as long as going to RAM?

For that reason, "32 MB per CCX" remains the important number (96 MB for 3D cache models). 512 MB, or 640 MB with L2 cache, are just marketing numbers or trivia.

jeremyj_83

> "If these new specs are not fabricated, it suggests that AMD will increase L3 cache sizes for its Zen 5-based EPYC processors."

512MB of cache on 128 cores is the same per-CCD amount as 384MB on 96 cores. This assumes that AMD is keeping 8 big cores per CCD, as it has done on the desktop.
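The comment's point can be checked with the same kind of arithmetic. This is a minimal sketch under the commenter's assumption of 8 cores per CCD for both chips; the function name is illustrative.

```python
CORES_PER_CCD = 8  # commenter's assumption: 8 big cores per CCD


def l3_per_ccd(cores, total_l3_mb, cores_per_ccd=CORES_PER_CCD):
    """Return (CCD count, L3 in MB per CCD) for a given core and cache total."""
    ccds = cores // cores_per_ccd
    return ccds, total_l3_mb / ccds


turin_ccds, turin_l3 = l3_per_ccd(128, 512)  # rumored EPYC 9755
genoa_ccds, genoa_l3 = l3_per_ccd(96, 384)   # EPYC 9654

print(f"EPYC 9755: {turin_ccds} CCDs x {turin_l3:g}MB")
print(f"EPYC 9654: {genoa_ccds} CCDs x {genoa_l3:g}MB")
```

Under this layout the two chips carry the same L3 per CCD, so the larger total would come from more CCDs rather than bigger caches.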

bit_user

> usertests said: Didn't they just say that going from L3 to L3 on different chiplets takes about as long as going to RAM?

No, they just said it's still faster than going to DRAM!

> usertests said: For that reason, "32 MB per CCX" remains the important number (96 MB for 3D cache models). 512 MB, or 640 MB with L2 cache are just marketing numbers or trivia.

Yes, AMD's L3 cache is segmented, unlike Intel's. So, what really matters is the L3 cache per CCD, but it's easier to compare if you look at it in terms of L3 cache per core.

For me, this article is a nothing burger. All it does is confirm that they're keeping the same base configuration of 4 MiB of L3 per Zen 5 core that AMD has used since Zen 2! I mean, there's absolutely no way they'd realistically regress on that front (leaving C-cores aside).

usertests

> bit_user said: All it does is confirm that they're keeping the same base configuration of 4 MiB of L3 per Zen 5 core that AMD has used since Zen 2!

I don't like that number either, because a core can use all of the L3 cache available to it in the CCX.

The big change between Zen 2 desktop and Zen 3 desktop was unifying the CCX and giving 32 MiB to any of the cores on the chiplet instead of 16 MiB.

Renoir to Cezanne was even more dramatic: It went from 4 MiB available to a whopping 16 MiB, a quadrupling.

The new Strix Point effectively has no improvement for big/fast cores: 16 MiB max, same as the previous generations since Cezanne. Then 8 MiB serving the C cores. So when someone inevitably highlights that it's 24 MiB now (!), there's nothing to be impressed by.

I look forward to 3D cache making it onto the mainstream APUs in the future.

bit_user

> usertests said: I don't like that number either, because a core can use all of the L3 cache available to it in the CCX.
>
> The big change between Zen 2 desktop and Zen 3 desktop was unifying the CCX and giving 32 MiB to any of the cores on the chiplet instead of 16 MiB.

That was an architectural optimization, but the amount of L3 per core is what costs money and impacts scaling. That's why I choose to focus on the amount per core.

> usertests said: Renoir to Cezanne was even more dramatic: It went from 4 MiB available to a whopping 16 MiB, a quadrupling.

Yes, I should've qualified that I'm talking just about server CPUs, here. The article was about server CPUs, so I took it as given, but it's fair to point out the ratio didn't hold for APUs.

> usertests said: The new Strix Point effectively has no improvement for big/fast cores: 16 MiB max,

See, that's where we disagree. It increases the number per Big core to 4 MiB, like the chiplet CCDs have, which I think is a material change that should be reflected in its performance.

Avro Arrow

As much as that seems like a lot, this is a server CPU, so even 512MB of L3 cache would get used, and it would have easily measurable benefits in the data centre space.

On the other hand, that much cache in a desktop environment would be mostly wasted because there's nothing I can think of that could make use of that kind of cache.

Avro Arrow

> usertests said: Didn't they just say that going from L3 to L3 on different chiplets takes about as long as going to RAM?

Who said that? That seems impossible because even cache on a different CCX would be physically closer (and therefore faster) than even the fastest RAM. Compared to all levels of cache, RAM is painfully slow. I really think that you read something wrong because saying that cache is no faster than RAM is like saying that VRAM is no faster than RAM.

It just doesn't seem possible.

bit_user

> Avro Arrow said: Who said that? That seems impossible because even cache on a different CCX would be physically closer (and therefore faster) than even the fastest RAM.

Yes, it takes time for electric signals to propagate, but consider that they do so on the order of 150 mm per nanosecond. DRAM latencies are on the order of 100 nanoseconds. Electric signals can propagate about 15 meters in that amount of time. So, the reason DRAM latencies are so long is not because of physical distances.

Where I see this flawed assumption arise most often is when people assume that on-package memory is lower latency because it's physically closer. Do the math, people. In fact, HBM traditionally has longer best-case latencies than DIMMs.
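For anyone who wants to do that math, here is a minimal sketch using the two order-of-magnitude figures from the comment above. The 100 mm trace length is a purely hypothetical value chosen for illustration, not a measurement of any real board.

```python
SIGNAL_SPEED_MM_PER_NS = 150  # propagation speed quoted in the comment
DRAM_LATENCY_NS = 100         # DRAM latency quoted in the comment

# Distance a signal could cover during one full DRAM access.
distance_m = SIGNAL_SPEED_MM_PER_NS * DRAM_LATENCY_NS / 1000

# Hypothetical CPU-to-DIMM trace length (assumption, for illustration only).
TRACE_LENGTH_MM = 100
one_way_delay_ns = TRACE_LENGTH_MM / SIGNAL_SPEED_MM_PER_NS
share_of_latency = one_way_delay_ns / DRAM_LATENCY_NS

print(f"Signal travel in {DRAM_LATENCY_NS} ns: {distance_m:g} m")
print(f"One-way delay over {TRACE_LENGTH_MM} mm: {one_way_delay_ns:.2f} ns "
      f"({share_of_latency:.1%} of the DRAM latency)")
```

Even with a generous trace length, wire delay is a small slice of the total, which is the comment's point: distance alone does not explain DRAM latency.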

Rob1C

Not sure why a few thought that L3 would be slow or have higher latency.

Check out:

https://www.nextplatform.com/2023/06/12/intel-pits-its-sapphire-rapids-xeon-sp-against-amd-genoa-epycs/

https://hothardware.com/news/cpu-cache-explained

https://www.phoronix.com/review/xeon-max-9468-9480-hbm2e/7

L3 latency is always lower than RAM latency, and a large cache is sometimes better and sometimes not, compared with a smaller cache. It all depends on the workload. Fortunately for some, gaming is frequently better off with a larger cache, but this is a thread about servers.

Where I would spend my money is on clock frequency. Having purchased a CPU with a reasonably sized cache (and not paying for -X), a faster clock helps you all the time, while an extremely large cache only helps you to a lesser extent.

Still, it's great that they are making these enormous caches, as someday the price will come down. Till then I'll spend my cache on clocks.

Avro Arrow

> Rob1C said: Not sure why a few thought that L3 would be slow or have higher latency.

I don't get it either. It's like saying that an HDD is faster than an SSD. It's so fundamentally incorrect that I didn't even know how to respond at first. It's like someone saying that the sky is red, you're stunned for a moment that anyone would un-ironically say that. :ROFLMAO: