Arc A750 vs RX 6600 GPU faceoff: Intel Alchemist takes on AMD RDNA 2 in the budget sector

Intel's drivers have improved, so let's see how the Arc A750 stacks up against AMD's RX 6600 at the $200 price point.

The $200 price bracket is packed with competitive GPUs. Arguably, two of the strongest contenders right now are the Radeon RX 6600 and the Arc A750, the latter now benefiting from two years of Intel driver optimizations. Both cards have ranked among the best graphics cards at various points. For related reading, check out our RTX 3050 vs Arc A750 and RTX 3050 vs RX 6600 GPU faceoffs. Here, we'll declare once and for all (until the next-gen GPUs arrive) which card reigns at the $200 price point.

The Intel Arc A750 debuted two years ago as part of Intel's first batch of dedicated gaming GPUs to hit the market in 24 years. Despite good specs on paper, the GPU didn't perform well in our original review, with driver issues cropping up in some of the games we tested. The Arc A750 now has two years' worth of Intel optimizations and driver updates behind it, which could make a difference in our latest benchmarks, so it will be interesting to see how the card has matured since our original review.

The AMD RX 6600 debuted a year before the A750, in October 2021. AMD initially set its MSRP at $329 and positioned it against the RTX 3060. That felt like a cynical move, as AMD dramatically increased MSRPs on some of its RDNA 2 GPUs during the cryptomining-induced shortages, though Nvidia's pricing was equally ridiculous, and cards from both companies were nearly impossible to find at anything close to official pricing until mid-2022. Three years on, AMD still has Navi 23 cards floating around, and a massive $129 price drop has turned the RX 6600 into a near entry-level competitor.

How do the RX 6600 and Arc A750 stack up in today's market? We declared both to be generally superior to the RTX 3050, at least in terms of performance, but now it's time to pit them against each other. We'll discuss their strengths and weaknesses in performance, pricing, features, technology, software, and power efficiency, listed in generally decreasing order of importance.

Arc A750 vs RX 6600: Performance

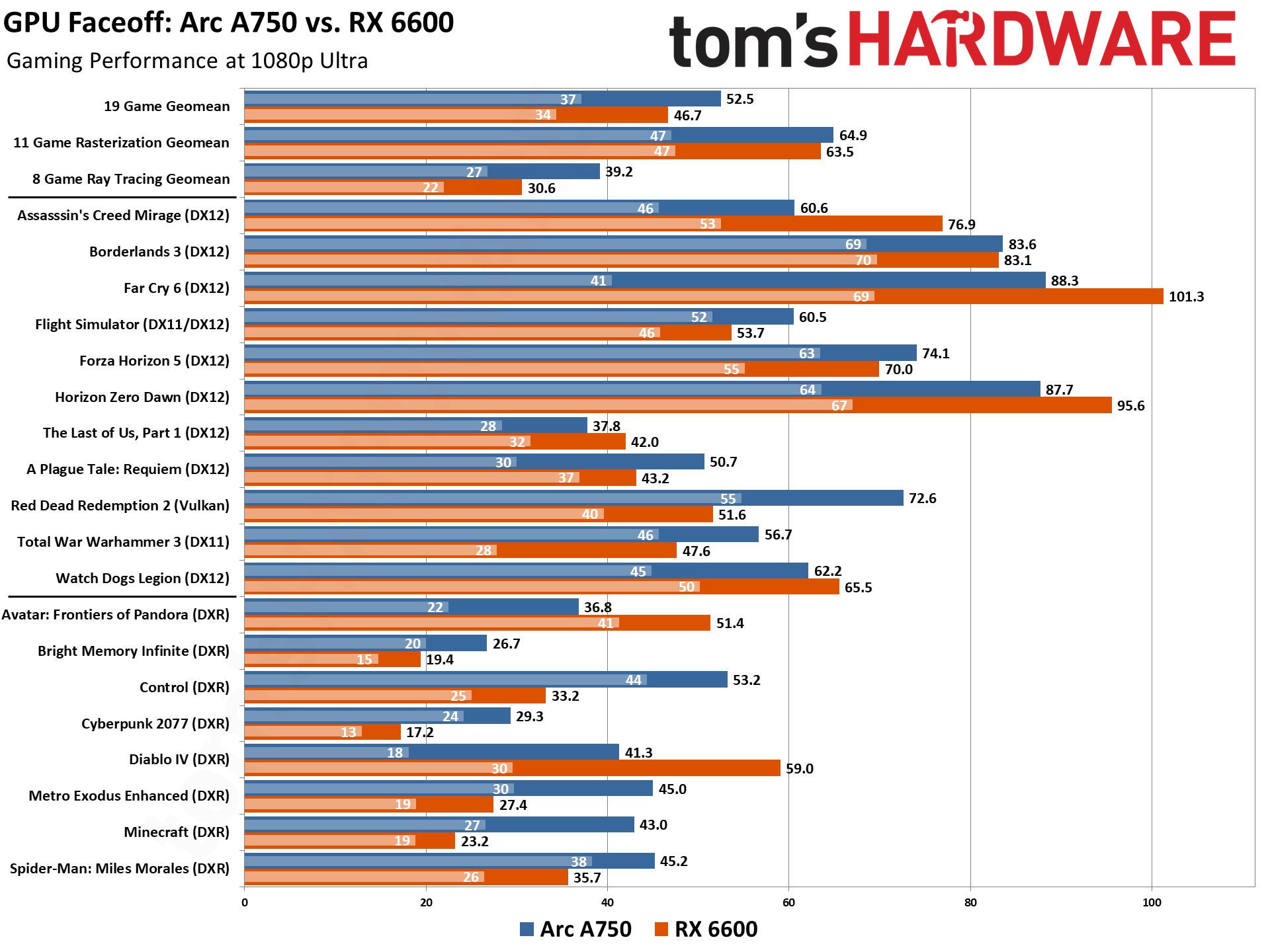

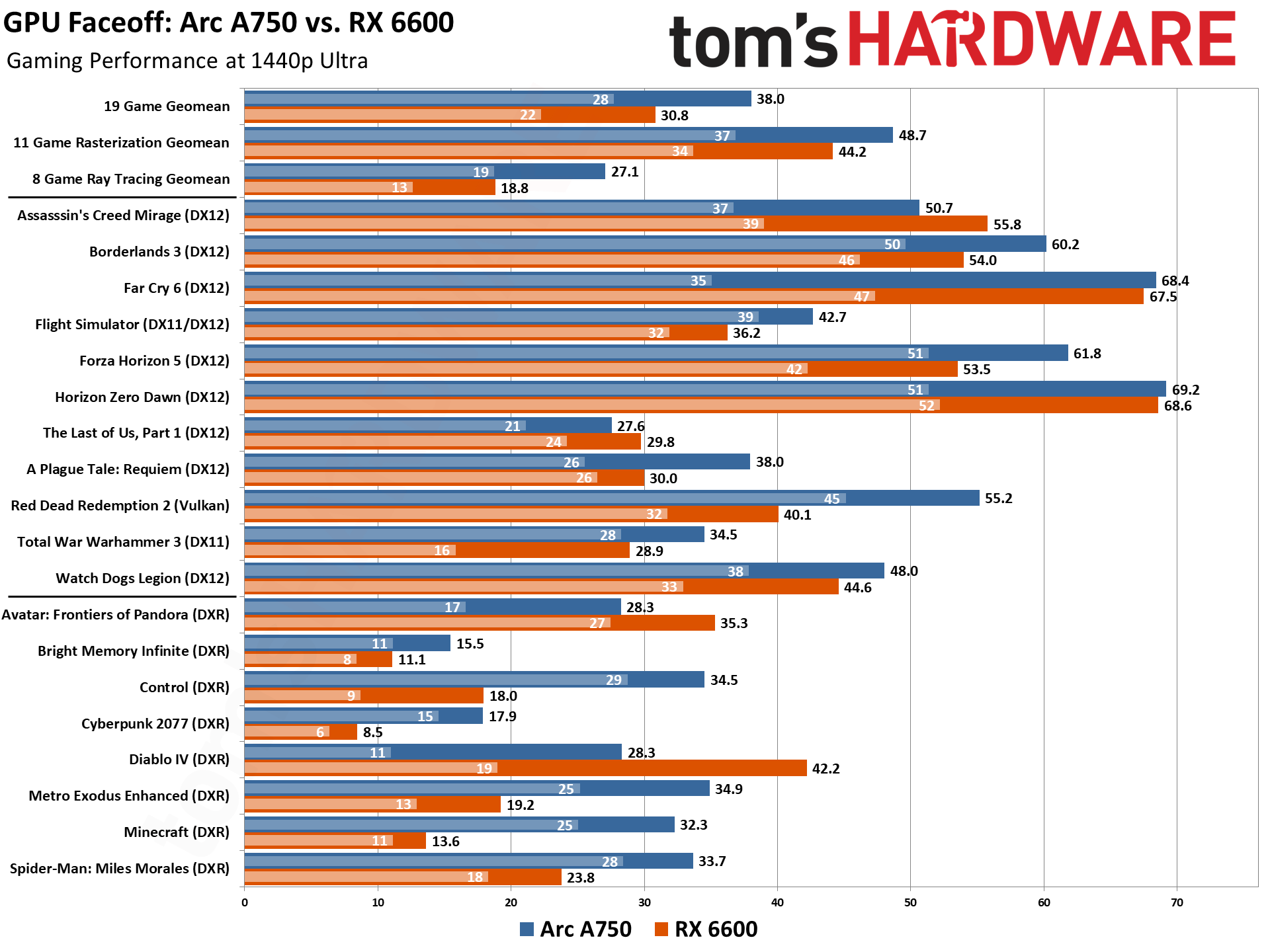

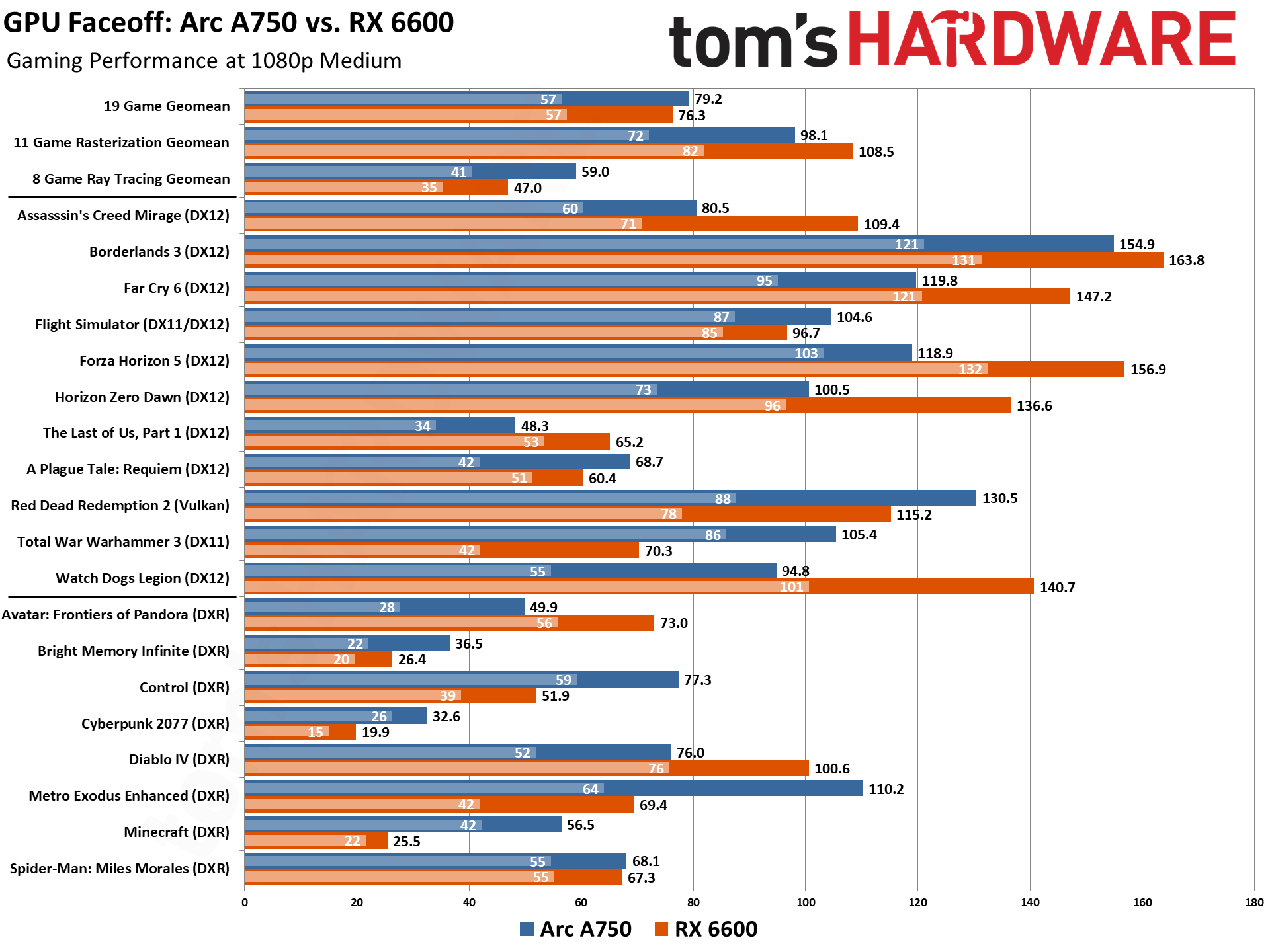

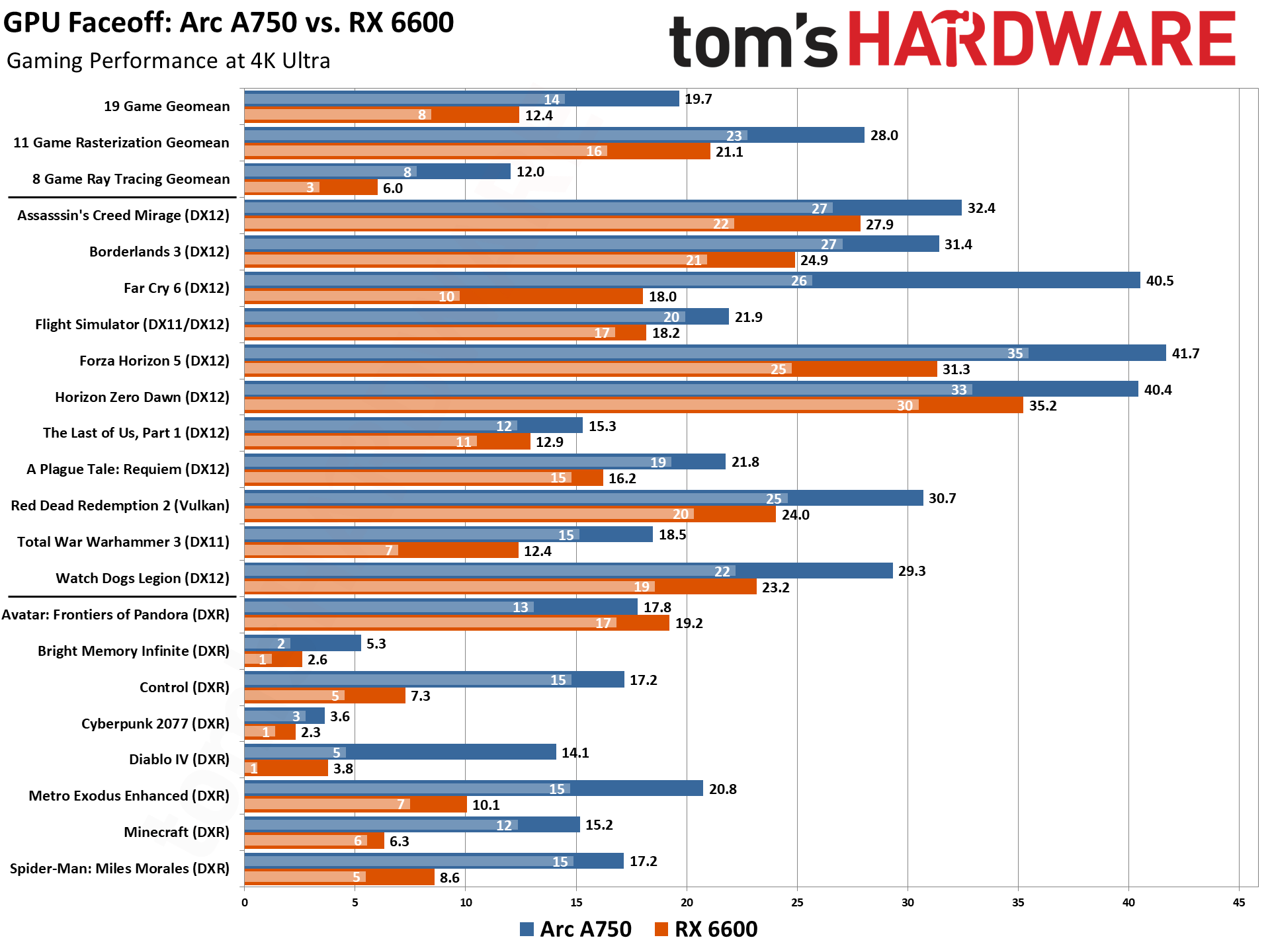

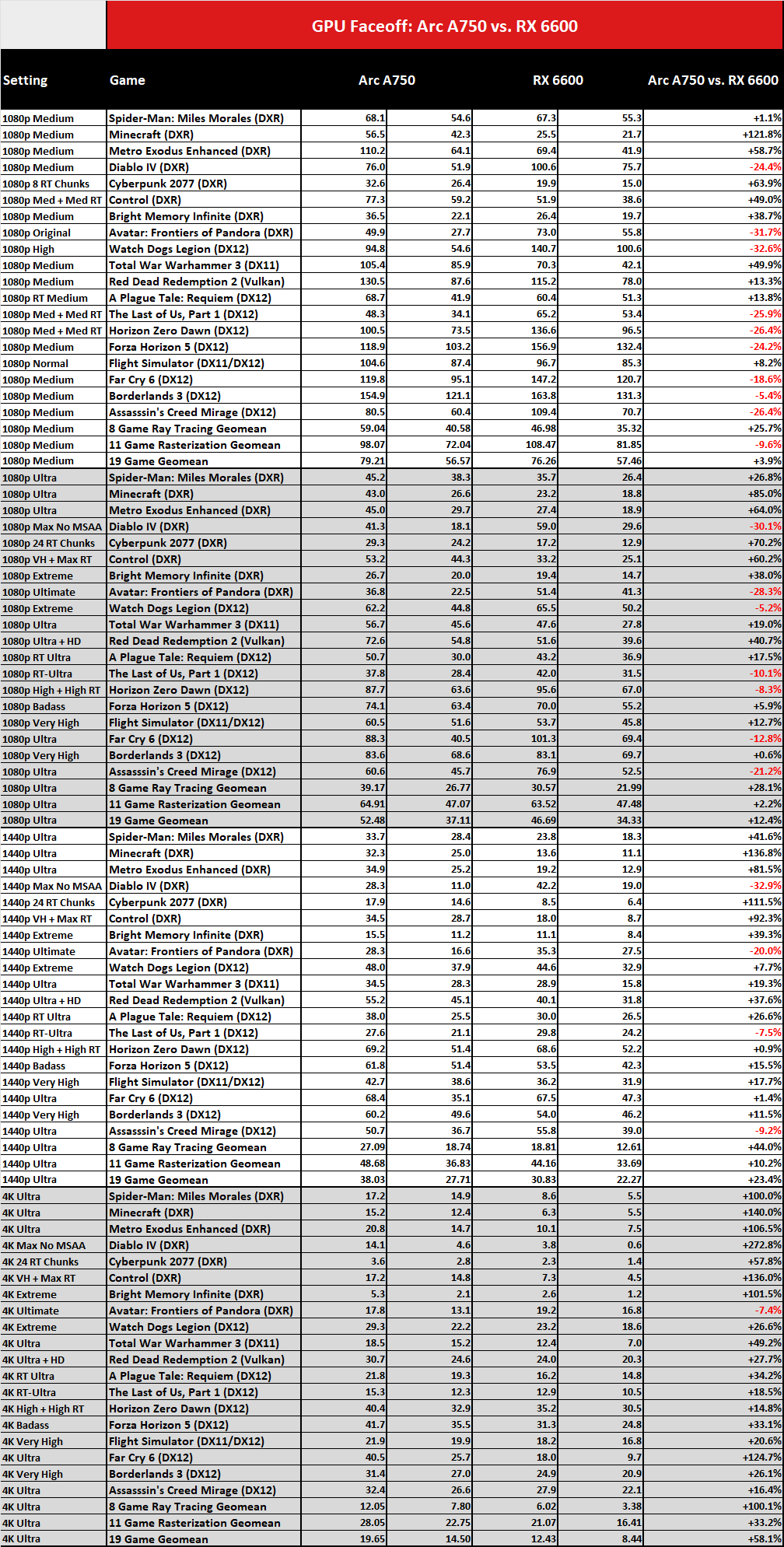

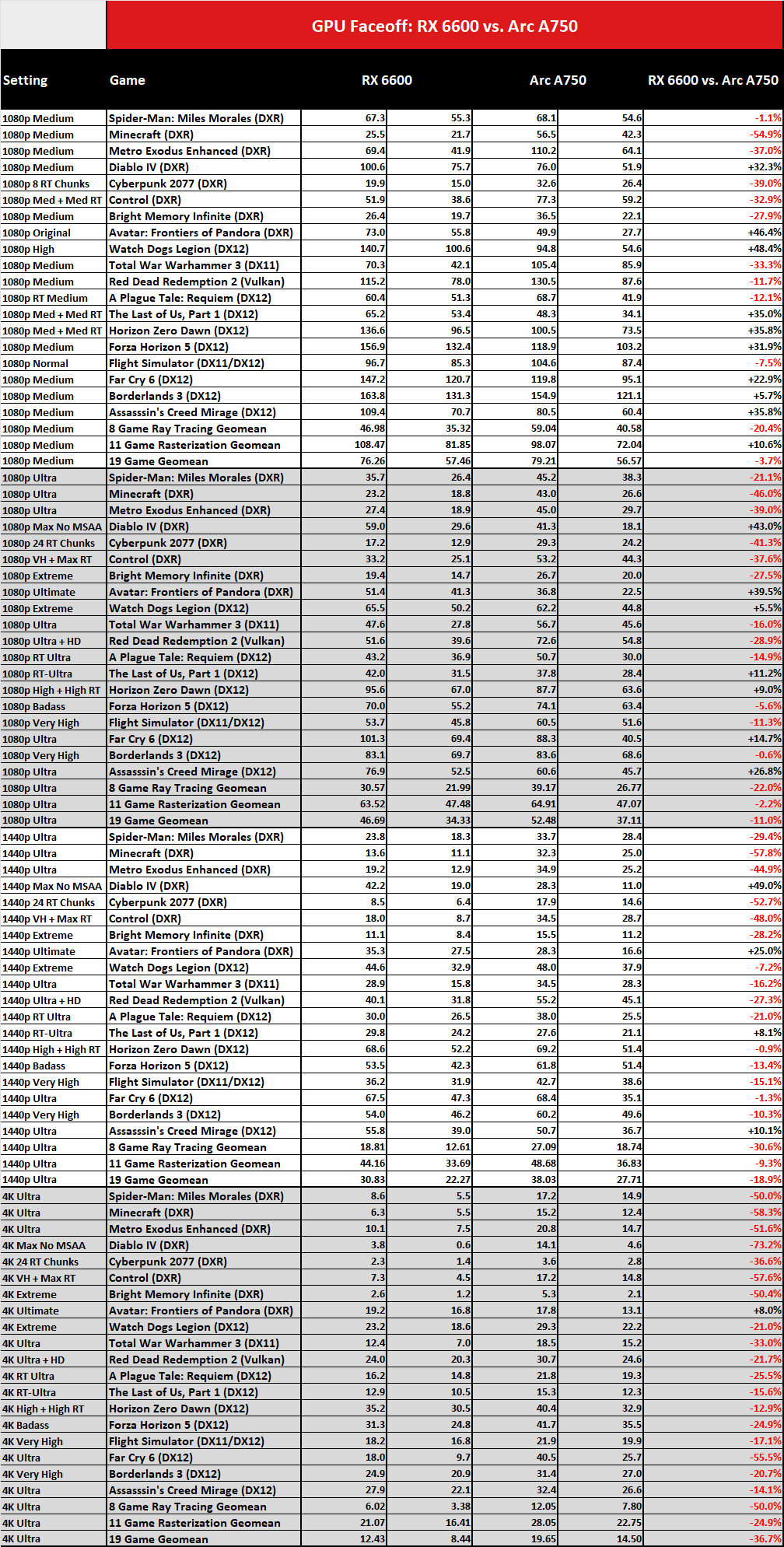

The Arc A750 puts up an excellent fight against the RX 6600, outperforming the AMD GPU at every resolution in our 19-game geomean. Even with ray tracing taken out of the equation, the Arc A750 beats the RX 6600 in three of our four 11-game rasterization geomeans: 1080p ultra, 1440p ultra, and 4K ultra. AMD's card comes out on top only in the 1080p medium rasterization geomean.

Looking closer at the rasterized results, the Arc A750 outperforms the RX 6600 by just 2% at 1080p ultra. The gap widens significantly at 1440p, where the Arc A750 is 10% faster. At 4K, it's not even a contest, with the Intel GPU leading by 32%. Only at 1080p medium does the RX 6600 come out on top, with a 10% lead over the Intel GPU.

Again, those are only the rasterization results. In our 19-game geomean, which includes the ray tracing games, the Arc A750 outperforms the RX 6600 by 12% at 1080p ultra, 23% at 1440p ultra, and a whopping 59% at 4K ultra. Even at 1080p medium, the Arc card comes out on top, barely, with a 3% lead.
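For readers unfamiliar with how a geomean summary works, here's a minimal sketch of computing a geometric mean across per-game frame rates and turning two of them into a percentage lead. The frame rates are hypothetical placeholders, not our measured results, and the function names are illustrative.

```python
import math

def geomean(values):
    """Geometric mean: the n-th root of the product, computed via logs to avoid overflow."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geomean needs a non-empty list of positive numbers")
    return math.exp(sum(math.log(v) for v in values) / len(values))

def percent_lead(a, b):
    """How much faster result a is than result b, in percent."""
    return (a / b - 1.0) * 100.0

# Hypothetical per-game fps (NOT measured data), same game order for both cards.
card_a_fps = [62.0, 48.0, 95.0, 30.0]
card_b_fps = [55.0, 52.0, 80.0, 21.0]

gm_a = geomean(card_a_fps)
gm_b = geomean(card_b_fps)
print(f"Card A geomean: {gm_a:.1f} fps")
print(f"Card B geomean: {gm_b:.1f} fps")
print(f"Card A lead: {percent_lead(gm_a, gm_b):.1f}%")
```

A geometric mean is used instead of an arithmetic mean so that a given percentage change in any single game shifts the summary by the same amount, whether that game runs at 30 fps or 300 fps.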

Diving into the per-game benchmarks, the Arc A750 doesn't win as consistently as the overall results might suggest. Out of the 11 rasterization games, five perform better on the Radeon card at 1080p ultra. Some of the best performers for AMD include Assassin's Creed Mirage, boasting a 26.8% advantage for the RX 6600, and Far Cry 6 (an AMD-sponsored title) where the RX 6600 comes out 14.7% ahead of the Intel GPU.

Things change at 1440p, where very few titles run better on the RX 6600 compared to the Arc A750. The Last of Us Part 1 and Assassin's Creed Mirage are the only titles that perform better on the AMD GPU. And at 4K, no rasterization games from our test suite perform better on the RX 6600.

So what's going on? A big part of this ties into the two GPUs' architectures. Both cards have 8GB of VRAM, but AMD uses a 128-bit memory interface while Intel uses a 256-bit interface, which gives Intel far more raw memory bandwidth. AMD counters with a largish 32MB L3 cache that improves its effective bandwidth, and that cache inherently has higher hit rates at lower resolutions and settings. Plus, AMD's drivers tend to be better optimized, especially at settings where the CPU can be more of a bottleneck, which means 1080p medium.
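The cache argument can be illustrated with a deliberately simplified toy model, which is our own assumption and not AMD's published methodology: if a fraction h of memory requests hit in the on-die cache, only (1 - h) of the traffic reaches GDDR6, so the same DRAM bandwidth can sustain roughly 1 / (1 - h) times as much total traffic. The model ignores cache bandwidth limits and latency, and the hit rates below are hypothetical.

```python
def effective_bandwidth(dram_gbs: float, hit_rate: float) -> float:
    """Toy model: total traffic (GB/s) the DRAM can sustain when hit_rate of requests
    are served by the cache. Assumes the cache itself is never the bottleneck."""
    if not 0.0 <= hit_rate < 1.0:
        raise ValueError("hit_rate must be in [0, 1)")
    return dram_gbs / (1.0 - hit_rate)

RX6600_DRAM = 224.0  # GB/s: 128-bit bus x 14 Gbps / 8
A750_DRAM = 512.0    # GB/s: 256-bit bus x 16 Gbps / 8 (its 16MB L2 is ignored here for simplicity)

# Hypothetical hit rates that fall as resolution rises (illustrative, not measured).
for label, hit in [("lower res", 0.6), ("mid res", 0.5), ("4K", 0.3)]:
    eff = effective_bandwidth(RX6600_DRAM, hit)
    print(f"{label:>9}: RX 6600 ~{eff:.0f} GB/s effective vs A750 {A750_DRAM:.0f} GB/s raw")
```

Under these made-up hit rates, the RX 6600's effective bandwidth drops from roughly 560 GB/s to about 320 GB/s as the cache hit rate falls, which mirrors the pattern of the A750's lead growing with resolution.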

Moving on to ray tracing titles, it's clear that AMD has the weakest ray tracing architecture, not just compared to Nvidia but compared to Intel as well. Intel shows very strong RT performance, relative to AMD at least, with the Arc A750 running over twice as fast as the RX 6600 in some RT titles. In our eight-game ray tracing geomean, the Arc A750 holds an impressive 28% lead over the RX 6600 at 1080p ultra and a 25% lead at 1080p medium, and that grows to 44% at 1440p ultra and 100% at 4K ultra.

The only two titles that favor AMD's GPU are Avatar: Frontiers of Pandora (another AMD-sponsored game with relatively limited use of RT) and Diablo IV (which appears to have received quite a bit of input from Nvidia). The RX 6600 leads by a surprising 40% in the former and an even greater 43% in the latter. When Intel loses, it's by a large margin, and we'd venture to guess this is largely because Intel hasn't spent time optimizing its drivers for these two particular games.

The opposite is also true: in the six titles that favor the A750, the Arc card wins by a massive margin. Control, Cyberpunk 2077, Metro Exodus, and Minecraft all show a significant performance advantage for Intel, often 60% or more. In several games, it's the difference between playable and unplayable results.

At 1440p and above, the Arc A750 demolishes the RX 6600 in our RT suite, though at least three of the games aren't even playable. And while the A750 claims an even larger victory at 4K ultra, not a single RT game was even remotely playable there, which makes it a less meaningful win. The Arc A750 can have a huge performance advantage at these resolutions, but the reality is that most people won't use either card for RT gaming at 1440p or 4K, at least not without XeSS or FSR upscaling.

Overall Performance Winner: Intel Arc A750

Looking at pure performance, Intel's Arc A750 comes out on top. Not only is it faster in ray tracing titles, it also performs better in most rasterized games, and its lead grows considerably at higher resolutions. If you're looking to game at 1440p with one of these GPUs, the Intel card does much better, and it also leads at 1080p ultra in the majority of our tested games.

Arc A750 vs RX 6600: Price

Pricing is very similar between the Arc A750 and the RX 6600. Both currently sell for right around $200, often with $10 discounts on select models, which is what we're seeing at the time of writing. We've seen the Arc A750 go for even less (it was $179 for Prime Day), but such deals don't tend to stick around for long.

The Arc A750 has two AIB partner cards selling for $200 or less right now: the dual-fan ASRock Challenger D at $189 and the dual-fan Sparkle ORC OC at $199. Premium models start at $209 and reach up to $239, with Intel's Limited Edition being the most expensive A750 variant you can buy. Intel no longer makes A750 LE cards, but there's still some unsold inventory.

There are far more RX 6600 models to choose from, thanks to AMD's larger group of AIB partners. Several models sell for $200 or less, including the Sapphire Pulse and ASRock Challenger. Surprisingly, Gigabyte even has a triple-fan Eagle variant of the RX 6600 going for just $189 at the time of writing, and that card has been sitting at this price for quite a while. Premium options range from $229 to $249, but most of them are dual-fan designs, so you aren't getting a noticeably better card than the baseline SKUs.

Price Winner: Tie

Whether we're looking at the typical $199 price or the current $189 price, both GPUs have models that fit the bill. Intel comes out ahead as the better value, in terms of performance per dollar, but raw pricing ends up as a tie.

Arc A750 vs RX 6600: Features, Technology, and Software

| Graphics Card | Arc A750 | RX 6600 |
|---|---|---|
| Architecture | ACM-G10 | Navi 23 |
| Process Technology | TSMC N6 | TSMC N7 |
| Transistors (Billion) | 21.7 | 11.1 |
| Die size (mm^2) | 406 | 237 |
| CUs / Xe-Cores | 28 | 28 |
| GPU Cores (Shaders) | 3584 | 1792 |
| Tensor / AI Cores | 448 | N/A |
| Ray Tracing Cores | 28 | 28 |
| Boost Clock (MHz) | 2400 | 2491 |
| VRAM Speed (Gbps) | 16 | 14 |
| VRAM (GB) | 8 | 8 |
| VRAM Bus Width (bits) | 256 | 128 |
| L2 / Infinity Cache (MB) | 16 | 32 |
| Render Output Units | 128 | 64 |
| Texture Mapping Units | 224 | 112 |
| TFLOPS FP32 (Boost) | 17.2 | 8.9 |
| TFLOPS FP16 (FP8) | 138 | 17.8 |
| Bandwidth (GBps) | 512 | 224 |
| TDP (watts) | 225 | 132 |
| Launch Date | Sep 2022 | Oct 2021 |
| MSRP | $289, now $249 | $329 |
| Online Price | $190 | $190 |

Diving into the specifications, the Arc A750 and RX 6600 couldn't be more different. Beyond the obvious difference in GPU architectures, the two cards rely on completely different memory subsystems despite being in the same performance class. The only thing their memory systems have in common is capacity: both GPUs have 8GB.

The RX 6600 uses a 128-bit interface, which is more typical of modern GPUs in this price class, while the Arc A750 uses a much more potent 256-bit interface. The Intel card also has faster 16 Gbps GDDR6 modules compared to the RX 6600's 14 Gbps chips.

This works out to an impressive 512 GB/s of raw memory bandwidth for the A750, compared to just 224 GB/s for the RX 6600: less than half the bandwidth, for the math impaired. Again, this helps explain why the Arc A750 performs so much better at higher resolutions. Even though both GPUs run into memory capacity limits above 1080p, the Arc A750 has substantially more bandwidth to help deal with the problem.
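The bandwidth figures follow from a simple formula: bus width in bits times the per-pin data rate in Gbps, divided by eight bits per byte. A quick sketch (the function name is ours, purely illustrative):

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8

a750 = memory_bandwidth_gbs(256, 16)    # 512.0 GB/s
rx6600 = memory_bandwidth_gbs(128, 14)  # 224.0 GB/s
print(f"A750: {a750:.0f} GB/s, RX 6600: {rx6600:.0f} GB/s")
print(f"RX 6600 has {rx6600 / a750:.0%} of the A750's raw bandwidth")
```

The ratio comes out to about 44%, which is where "less than half" comes from.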

This diversity extends to the underlying GPU architectures as well. The RX 6600 uses AMD's RDNA 2 architecture, while the A750 uses Alchemist, Intel's first modern discrete gaming GPU architecture. AMD has far more experience developing discrete GPU architectures, and it shows in the specs. Take transistor count: the Arc A750's ACM-G10 die packs 21.7 billion transistors, compared to just 11.1 billion for the RX 6600's Navi 23 die. That's nearly twice as many transistors, but nowhere near twice the performance.
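To put the transistor comparison in numbers, here's a rough sketch using the spec table's transistor counts and die sizes, plus the A750's 12% lead at 1080p ultra from our 19-game geomean. Dividing the performance ratio by the transistor ratio is our own back-of-envelope metric, not a standard one.

```python
def density_mtr_per_mm2(transistors_billion: float, die_mm2: float) -> float:
    """Transistor density in millions of transistors per square millimeter."""
    return transistors_billion * 1000 / die_mm2

# Spec-table figures: transistors in billions, die size in mm^2.
a750_tr, a750_die = 21.7, 406      # ACM-G10, TSMC N6
rx6600_tr, rx6600_die = 11.1, 237  # Navi 23, TSMC N7

transistor_ratio = a750_tr / rx6600_tr
perf_ratio_1080p_ultra = 1.12  # A750's 19-game geomean lead at 1080p ultra (12%)

print(f"A750 density:    {density_mtr_per_mm2(a750_tr, a750_die):.1f} MTr/mm^2")
print(f"RX 6600 density: {density_mtr_per_mm2(rx6600_tr, rx6600_die):.1f} MTr/mm^2")
print(f"Transistor ratio (A750 / RX 6600): {transistor_ratio:.2f}x")
print(f"A750 performance per transistor vs RX 6600: {perf_ratio_1080p_ultra / transistor_ratio:.2f}x")
```

With these inputs the densities land around 53.4 and 46.8 million transistors per mm^2, the A750 has about 1.95x the transistors, and it delivers roughly 0.57x the RX 6600's performance per transistor at 1080p ultra.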

The Arc GPU has more ROPs (render output units), shader cores, and TMUs (texture mapping units), which helps explain the higher transistor count, plus dedicated AI processing units called XMX cores. The A750 features 3,584 shaders, 448 XMX cores, 28 ray tracing units, 128 ROPs, and 224 TMUs. The RX 6600 features 1,792 shaders, 28 ray accelerators, 64 ROPs, and 112 TMUs. On paper, you'd expect the A750's lead over the RX 6600 to be even larger than our benchmarks show, but this is basically Intel's first dedicated GPU rodeo in over two decades (since the ancient i740 GPU).

But it's not just about games. All the GPU companies are talking about AI these days, but for consumer GPUs only Nvidia and Intel offer dedicated matrix cores. (AMD's newer RDNA 3 architecture straddles the line with some pseudo-matrix WMMA instructions that make better use of the FP16 hardware, but the RX 6600 isn't an RDNA 3 chip.) Intel uses the XMX cores for AI-powered XeSS upscaling, which tends to deliver better image fidelity than AMD's FSR 2/3 upscaling. AI workloads that properly utilize the XMX cores also perform quite well.

Xe-Cores are a very rough equivalent to Nvidia's Streaming Multiprocessors (SMs) and AMD's Compute Units (CUs). They handle all the compute work the GPU needs, and each includes load/store units, an L1 cache, and a BVH cache for ray tracing. The main building block inside each Xe-Core is the Xe Vector Engine (XVE), which is basically a shader core. Each XVE can compute eight FP32 operations per cycle and also supports integer and extended math operations.
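The A750's shader and XMX counts fall straight out of its Xe-Core count. This sketch assumes Intel's published Alchemist layout of 16 XVEs and 16 XMX engines per Xe-Core, with each XVE handling eight FP32 operations per clock as described above.

```python
XE_CORES = 28           # Arc A750
XVE_PER_XE_CORE = 16    # Xe Vector Engines per Xe-Core (Alchemist)
XMX_PER_XE_CORE = 16    # XMX matrix engines per Xe-Core (Alchemist)
FP32_LANES_PER_XVE = 8  # eight FP32 operations per clock per XVE

shaders = XE_CORES * XVE_PER_XE_CORE * FP32_LANES_PER_XVE
xmx_units = XE_CORES * XMX_PER_XE_CORE
print(f"Shaders: {shaders}, XMX cores: {xmx_units}")
```

Both results match the 3,584 shaders and 448 XMX cores listed in the spec table.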

While Intel might not use its hardware as efficiently as AMD in gaming, Intel's bigger specs aren't for nothing. The A750 delivers 14.7 TFLOPS of FP32 compute at its 2,050 MHz graphics clock (17.2 TFLOPS at the 2,400 MHz boost clock listed in the table) and 116 TFLOPS of FP16 via its XMX cores (138 TFLOPS at boost). The RX 6600 offers 8.9 TFLOPS of FP32 and 17.8 TFLOPS of FP16. The huge FP16 deficit comes from the AMD GPU's lack of hardware-accelerated AI silicon.
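Peak FP32 throughput is shader count times clock speed times two, since a fused multiply-add counts as two floating-point operations. This sketch reproduces the FP32 figures above; the 2,050 MHz input is the A750's rated graphics clock, and the helper name is illustrative.

```python
def fp32_tflops(shaders: int, clock_mhz: float) -> float:
    """Peak FP32 TFLOPS, counting each fused multiply-add as two operations."""
    return shaders * 2 * clock_mhz / 1e6

print(f"A750 @ 2050 MHz:    {fp32_tflops(3584, 2050):.1f} TFLOPS")  # graphics clock
print(f"A750 @ 2400 MHz:    {fp32_tflops(3584, 2400):.1f} TFLOPS")  # boost clock in the table
print(f"RX 6600 @ 2491 MHz: {fp32_tflops(1792, 2491):.1f} TFLOPS")
```

RDNA 2 runs packed FP16 math at twice its FP32 rate on the same shaders, which is how the RX 6600 reaches roughly 17.8 TFLOPS of FP16 without any matrix hardware.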

It's also worth pointing out that Intel Arc GPUs really do need a reasonably modern platform to reach their performance potential. We tested on a high-end Core i9-13900K PC, and if you have an older system that doesn't support Resizable BAR (Base Address Register), the A750 can lose 10 to 20 percent of its performance. That said, most Windows 11-compatible PCs should meet this requirement.

RDNA 2 is clearly more optimized for traditional gaming workloads. It has no dedicated AI acceleration hardware, so AMD relies on conventional rendering techniques for tasks such as image upscaling. RDNA 2 does include ray tracing hardware, but it was AMD's first attempt at ray tracing, and as our benchmarks show, it's not very good: RDNA 2 GPUs often fail to match even Nvidia's first-generation RTX 20-series Turing GPUs.

But what RDNA 2 does well is rasterized gaming, and it's very efficient at it. AMD's caching architecture helps a lot: not even counting its L2 cache, the RX 6600 has a 32MB L3 cache that AMD dubs Infinity Cache, while the Arc A750 has just 16MB of L2 cache and no L3 cache. It's not enough for AMD to consistently outperform Intel in raw gaming performance, but there's no denying AMD has a much more optimized GPU architecture, at least for gaming.

One thing that has plagued Intel's Arc GPUs since launch is Intel's sketchy drivers. Unfortunately, two years on, this is still somewhat of a problem, even though Intel has made great strides in improving its drivers. Much of that progress comes from Intel working smarter instead of harder, using compatibility layers that convert calls from older APIs (DX9, DX10, and DX11) into DX12 calls. Intel designed Arc Alchemist primarily around DX12, so it makes sense for Intel to lean as much as possible on its DX12 driver optimizations.

If you're a gamer who mostly plays older titles, Intel's drivers are pretty solid now, but Intel still has problems when brand-new games come out. Performance in new releases is often wildly inconsistent, and it sometimes takes a while for games to receive decent optimizations. Look at Avatar and Diablo IV with ray tracing enabled (Diablo runs fine if you only use rasterization): both have been out for several months, yet Intel still hasn't released what we would call a performant driver for these games.

AMD's drivers are unsurprisingly much more mature than Intel's. AMD has been in the GPU game far longer, and as a result it has a very solid driver stack that performs well in most conditions. AMD also tends to be better about having game-ready drivers for new releases. It's not always perfect, but overall it's far more consistent than Intel.

Outside of game support, there are other software aspects to consider. AMD has been releasing various GPU software libraries via its GPUOpen platform for several years now, including FSR 1/2/3, frame generation, and more. AMD's driver interface as a whole also feels far more refined, whereas Intel's feels pretty barebones. Things have improved a lot in the past two years, but there's more work to be done.

Features, Technology, and Software Winner: AMD

Ultimately, we're giving this category to AMD. Intel can be a more robust option if you're looking at non-gaming tasks, in particular AI, but even there drivers and software support aren't always as easy as we'd like. For gaming, however, which is still the most important aspect for the majority of consumers, there are far more performance oddities from Intel. AMD also gets a lot more done with less hardware in this case. AMD wins as the superior option, letting you get to gaming and other tasks without the teething pains.

Arc A750 vs RX 6600: Power Efficiency

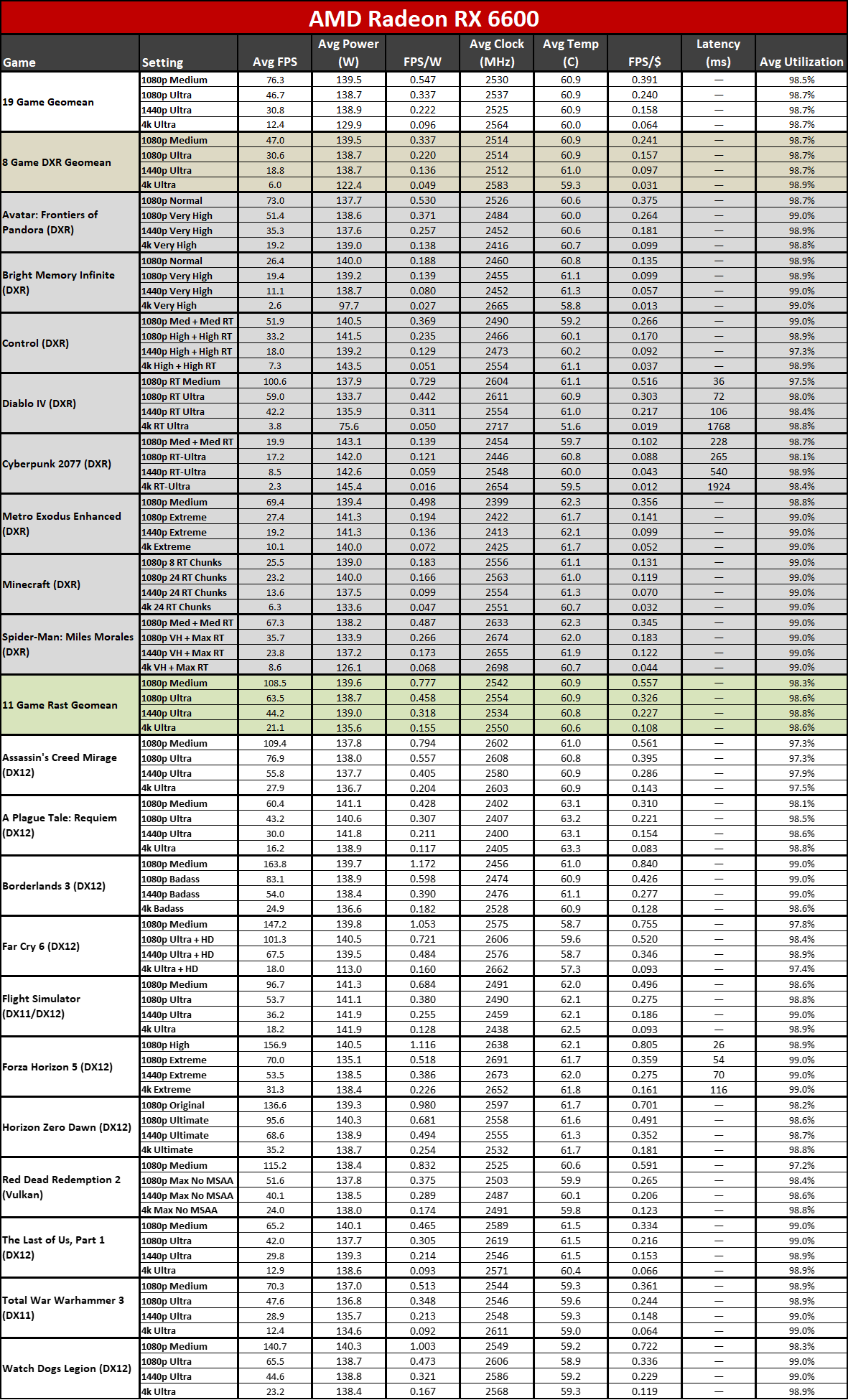

If the architecture discussion above wasn't a big enough hint, the RX 6600 wins big in power efficiency. With substantially fewer transistors on similar 7nm-class process nodes (TSMC's N6 isn't that different from N7), AMD ends up using roughly 30% less power than the Arc A750.

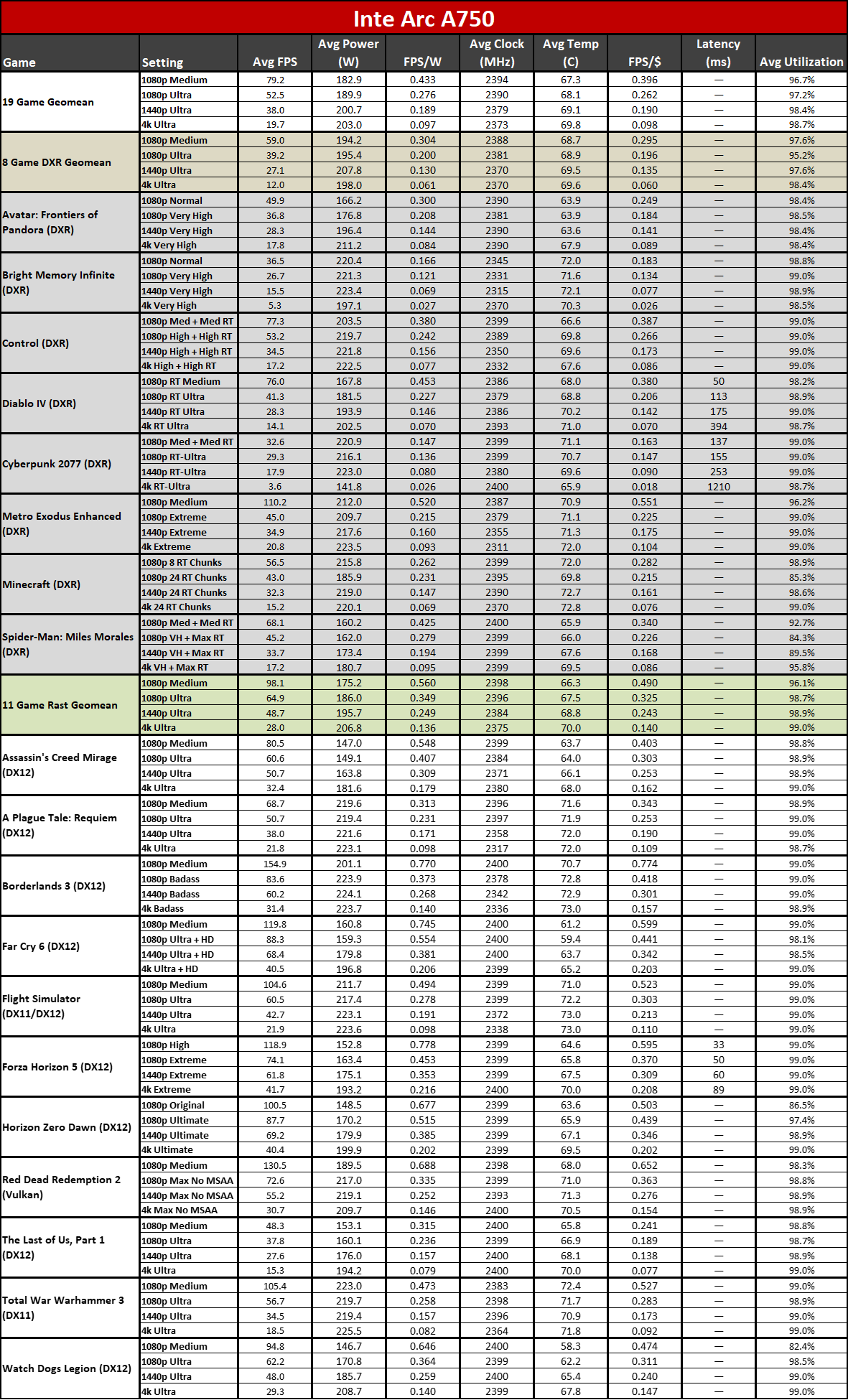

In our testing, the RX 6600 averaged around 139 watts at 1080p and 1440p, and a slightly lower 130 watts at 4K, largely because it runs out of VRAM and struggles to keep the GPU cores fed with data. Interestingly, the highest average power consumption in our testing was 139.5 watts at 1080p medium, which is also where the GPU performed best relative to the competition.

The Arc A750's power consumption looks more like a midrange GPU, matching Nvidia's RTX 3060 Ti and landing between AMD's more performant RX 6700 10GB and 6700 XT. The A750 used 190W at 1080p ultra, and that dropped to 183W at 1080p medium. 1440p ultra power use was a bit higher at 201W, and increased yet again to 203W on average at 4K.

200W isn't a ton of power for a GPU these days, but the gap between the RX 6600 and Arc A750 is large enough to make Intel's power consumption a negative. You might need a PSU upgrade for a budget PC to handle the A750, and you'll need 8-pin plus 6-pin (or dual 8-pin, depending on the card) power connectors to run it. RX 6600 cards typically use just a single 8-pin connector.
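For context on those connector requirements, here's a small sketch of the in-spec board power ceiling each layout allows, using the commonly cited PCI Express ratings of 75W from the x16 slot, 75W per 6-pin connector, and 150W per 8-pin connector. The dictionary and helper name are illustrative, not from any library.

```python
# Commonly cited PCI Express power ratings, in watts.
POWER_LIMIT_W = {"slot": 75, "6-pin": 75, "8-pin": 150}

def board_power_budget(connectors):
    """In-spec board power ceiling: x16 slot power plus each auxiliary connector."""
    return POWER_LIMIT_W["slot"] + sum(POWER_LIMIT_W[c] for c in connectors)

print("Arc A750 (8-pin + 6-pin):", board_power_budget(["8-pin", "6-pin"]), "W")
print("Arc A750 (dual 8-pin):", board_power_budget(["8-pin", "8-pin"]), "W")
print("RX 6600 (single 8-pin):", board_power_budget(["8-pin"]), "W")
```

That works out to 300W, 375W, and 225W respectively. The A750's measured 183W to 203W averages and the RX 6600's roughly 130W to 140W both sit well inside these ceilings.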

Power Efficiency Winner: AMD

The RX 6600 wins this part of the faceoff. The power consumption differences between the two aren't massive, but Intel uses anywhere from 43W to 73W more power on average, depending on the resolution and settings. In performance per watt, that makes the RX 6600 26% more efficient at 1080p medium, 22% more efficient at 1080p ultra, and 17% more efficient at 1440p ultra, with 4K ultra ending in a tie (and 4K is a resolution neither GPU really handles well).
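The efficiency comparison boils down to dividing each card's performance by its power draw. Here's a back-of-envelope sketch using the rounded figures quoted in this article (the A750's 19-game geomean lead and the average power numbers above); because the inputs are rounded, the results land close to, but not necessarily exactly on, the percentages in our chart.

```python
def relative_efficiency(perf_ratio_a_over_b: float, watts_a: float, watts_b: float) -> float:
    """Performance per watt of card B relative to card A.

    perf_ratio_a_over_b: card A's performance divided by card B's (1.12 means A is 12% faster).
    Returns a value above 1.0 when card B is the more efficient card.
    """
    return (1.0 / watts_b) / (perf_ratio_a_over_b / watts_a)

# (A750 lead over RX 6600, A750 average watts, RX 6600 average watts), rounded article figures.
cases = {
    "1080p ultra": (1.12, 190, 139),
    "1440p ultra": (1.23, 201, 139),
    "4K ultra": (1.59, 203, 130),
}
for label, (lead, a750_w, rx6600_w) in cases.items():
    eff = relative_efficiency(lead, a750_w, rx6600_w)
    print(f"{label}: RX 6600 perf/W = {eff:.2f}x the A750")
```

This prints roughly 1.22x, 1.18x, and 0.98x, consistent with the 22% and 17% advantages at 1080p ultra and 1440p ultra and the effective tie at 4K ultra.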

Arc A750 vs RX 6600: Verdict

| Category | Arc A750 | RX 6600 |
|---|---|---|
| Performance | X | |
| Price | X | X |
| Features, Technology, Software | | X |
| Power and Efficiency | | X |
| Total | 2 | 3 |

This faceoff ends up being quite close, which makes sense as GPU prices often trend toward giving similar overall value, particularly in the competitive budget and mainstream markets. Intel's Arc A750 and AMD's RX 6600 are both good options for the sub-$200 crowd, and as we discussed with the RTX 3050 versus Arc A750, the two cards behave very differently.

When we look at the full picture, the Radeon RX 6600 comes out on top as the more well-rounded solution. It's not the fastest GPU, though it's at least reasonably close a lot of the time at 1080p. What it does best is to provide a consistent experience, with better drivers and power efficiency. That makes it a safer bet for most gamers.

Intel mostly gives you higher performance, but it can win big or lose big, depending on the game and settings being used. The Arc A750 has clearly superior ray tracing capabilities, but even then, performance at native 1080p can struggle and so it's less of a factor. While Intel wins in overall performance, for rasterization games — which will be the most important criterion at this price — the gap is small enough that we're only awarding the A750 a single point.

Drivers continue to be the Achilles' heel of Intel's GPUs and are the main reason the RX 6600 comes out on top. When customers buy a GPU, they expect it to work well in any game they might want to play. At launch, Intel Arc felt at times like Dr. Jekyll and Mr. Hyde: some games would run great, while others would fail to run at all or exhibit severe rendering errors. Things have undoubtedly improved, but even today there are games and settings that should work fine on the A750 but simply don't work as expected. Drivers/software and power efficiency go to AMD, with pricing ending in a tie.

For most people, that makes the RX 6600 the better choice. If you like to tinker, or maybe you want to support the first real competitor to the AMD/Nvidia GPU duopoly in decades, Intel has some interesting hardware on offer. For under $200, you get some serious computational potential for AI and other tasks. Intel also has better hardware video encoding, if that's important to you. Hardware enthusiasts might even like having something new and different to poke around at, while more casual gamers could end up yanking their hair out in frustration.

Ultimately, at the $200 price point, most people are buying a GPU for gaming, not for professional or AI applications. And games new and old will benefit from AMD's experience in building graphics drivers. Still, we're happy to have Intel Arc join the GPU party, and we're looking forward to seeing what round two of Intel's dedicated GPUs brings when the Battlemage chips ship, presumably sometime in the coming months.


Aaron Klotz is a contributing writer for Tom's Hardware, covering news related to computer hardware such as CPUs and graphics cards.


kano1337 Why does the A750 have so much bigger a die than the RX 6600?

I mean 406mm^2 vs 237mm^2, while the A750 even has the node advantage, N6 vs N7.

The performance is largely about the same, the clocks are largely the same, but the transistor and shader counts are much higher on the A750.

I think it is a nice improvement on the driver side, especially as the 1% lows are in line with the average fps, so they are not bad vs the better-known competitor. I think raster performance is what really matters here; not many will use cards of this tier for any kind of ray tracing, and in raster performance they are on par.

But for this performance level, I would never buy a card for regular use that consumes 200-250 watts. It is a bit like an older period of gaming, when they tried to sell games with 6 CDs of video content and rudimentary game logic, because the latter worked for many with 1-2 CDs of multimedia content. Perception and values have shifted for most. No, do not buy low and mid-low tier cards with a wattage comparable to a deep freezer.

rluker5 Since both of these cards will likely use upscaling, it should be mentioned that the A750 has significantly better upscaling.

TheSecondPower There are a few advantages the A750 has that require more transistors (although AMD's Infinity Cache probably uses more transistors than Intel's bigger memory bus):

| Feature | RX 6600 | A750 | Result for Arc |
|---|---|---|---|
| memory width | 128-bit | 256-bit | less performance loss at 1440p+ |
| PCIe lanes | 8 | 16 | no performance hit on PCIe 3.0 computers |
| ray tracing performance | 30.6fps | 39.2fps | it ray traces good |
| tensor cores | 0 | 448 | XeSS > FSR |

Clearly Battlemage is going to need to get more out of its transistors if this is going to be a successful business for Intel. But the A750 does have good reason to have at least a few more transistors than the RX 6600.

maestro0428 Intel has come a long way. I used an Intel Arc A750 for a while in my editing machine. It worked great after I got Adobe's apps to see it. Hoping that the next round is even better.

King_V

Exactly how much of a hit is there with 8 vs 16 PCIe lanes? As I recall, nothing's really saturating it much to the point where 8 lanes is a problem.

TheSecondPower said: PCIe lanes: RX 6600 8, A750 16; result for Arc: no performance hit on PCIe 3.0 computers

Why do you say that?

rluker5 said: Since both of these cards will likely use upscaling

NinoPino In this faceoff, performance and price metrics are way more important than software and power usage. This should be taken into account.

Reality_checker I'm not surprised that the A750 demolished the RX 6600.

AMD makes weak products, especially in the GPU sector these days.

Reality_checker

Pierce2623 said: Double the transistor budget for equal performance is pathetic. It really is.

Losing to another company's first discrete GPU product is a really bad look for AMD.

Almost as if Radeon went downhill since AMD bought ATI.

usertests

Reality_checker said: I'm not surprised that the A750 demolished the RX 6600.

AMD makes weak products, especially in the GPU sector these days.

They're not in the same tier. AMD's gimped leftover (not even the 6600 XT) is a little worse than Intel's GPU with nearly double the die size and double the memory bus. The price is the same because Intel GPUs have to be practically given away.

Reality_checker said: Losing to another company's first discrete GPU product is a really bad look for AMD.

Almost as if Radeon went downhill since AMD bought ATI.